Uprise: Universal Prompt Retrieval for Improving Zero-Shot Evaluation

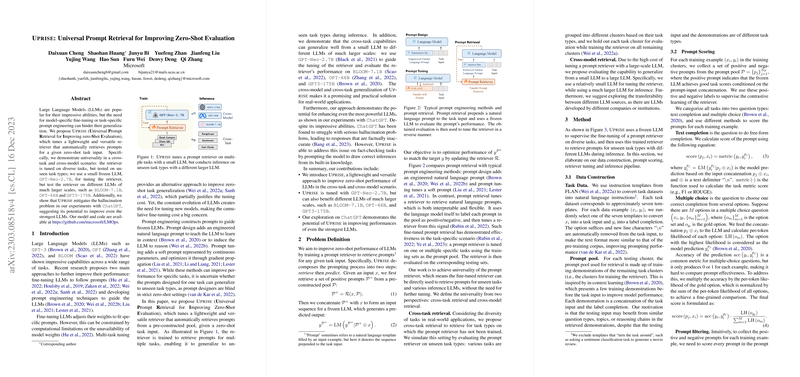

The paper "Uprise: Universal Prompt Retrieval for Improving Zero-Shot Evaluation" introduces an approach for improving the zero-shot performance of LLMs by automatically retrieving prompts for each task input. The method aims to avoid the limitations of model-specific fine-tuning and task-specific prompt engineering, which must be redone for each new model or task.

Key Contributions

- Universal Retriever: The paper proposes Uprise, a lightweight, versatile retriever that automatically selects prompts from a pre-constructed pool for a given zero-shot task input. The retriever is tuned using a small frozen LLM, GPT-Neo-2.7B, and remains effective when paired with much larger models such as BLOOM-7.1B, OPT-66B, and GPT3-175B.

- Cross-Task and Cross-Model Generalization: Uprise is evaluated on task types it was not trained on and on LLMs other than the one used to train it. Transfer from a small model to larger ones matters in practice: the retriever can be trained once with an inexpensive model and then reused with models that would be costly to fine-tune.

- Hallucination Mitigation: The authors also apply Uprise to ChatGPT and report improved accuracy on fact-checking tasks, suggesting that prompt retrieval can help mitigate hallucination even in the strongest LLMs.

- Comprehensive Evaluation: The evaluation spans task types including Reading Comprehension, Closed-book QA, Paraphrase Detection, Natural Language Inference, and Sentiment Analysis, and reports significant gains over standard zero-shot prompting.

- Training Data Diversity: The paper examines how the diversity of the training tasks affects universal prompt retrieval, finding that training on task types with diverse question and answer formats yields a retriever that generalizes better.
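The retrieval step described in the first bullet can be illustrated with a small sketch. This summary does not describe the retriever's architecture, so the sketch assumes dense retrieval by inner-product similarity and swaps in a toy bag-of-words encoder (the hypothetical `encode`) for Uprise's trained encoder; `retrieve_prompts` and `build_llm_input` are likewise illustrative names, not the paper's code.

```python
import math
import re
from collections import Counter


# Hypothetical stand-in for Uprise's trained prompt encoder: a bag-of-words
# vector, L2-normalised so that the inner product equals cosine similarity.
def encode(text: str) -> dict[str, float]:
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {tok: c / norm for tok, c in counts.items()}


def inner_product(a: dict[str, float], b: dict[str, float]) -> float:
    # Sparse dot product over the tokens the two vectors share.
    return sum(w * b.get(tok, 0.0) for tok, w in a.items())


def retrieve_prompts(task_input: str, pool: list[str], k: int = 2) -> list[str]:
    """Return the k pool prompts with the highest inner product to the input."""
    query_vec = encode(task_input)
    ranked = sorted(pool, key=lambda p: inner_product(query_vec, encode(p)), reverse=True)
    return ranked[:k]


def build_llm_input(task_input: str, prompts: list[str]) -> str:
    # Retrieved prompts are prepended to the task input; the LLM itself is not updated.
    return "\n\n".join(prompts + [task_input])


# Usage: a toy pool with two QA prompts and one sentiment prompt.
pool = [
    "Question: Who wrote Hamlet? Answer: William Shakespeare",
    "Review: The movie was wonderful. Sentiment: positive",
    "Question: What is the capital of France? Answer: Paris",
]
query = "Question: Who painted the Mona Lisa? Answer:"
# The two QA prompts are selected; the sentiment prompt is not.
print(build_llm_input(query, retrieve_prompts(query, pool, k=2)))
```

Because only the retriever carries learned parameters in this setup, the same retrieval step can be placed in front of any LLM, which is the property the cross-model results rely on.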
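The first bullet notes that the retriever is tuned using GPT-Neo-2.7B. This summary does not spell out the training objective, so the following is a hedged sketch of one common way to train a retriever from a frozen LLM's feedback, assumed here rather than taken from the paper: candidate prompts are ranked by how well the scoring LLM does when each one is prepended to the task input, the top-ranked prompt becomes the positive, the lowest-ranked ones become hard negatives, and the retriever is optimised with an InfoNCE-style contrastive loss. The LLM scores are passed in as plain numbers, and `split_by_llm_score` and `contrastive_loss` are hypothetical names.

```python
import math


def split_by_llm_score(candidates: list[str], scores: list[float], num_negatives: int = 2):
    """Pick the highest-scored prompt as the positive and the lowest-scored ones as hard negatives.

    `scores[i]` stands in for how well a frozen scoring LLM performs on the task
    input when `candidates[i]` is prepended (an assumption of this sketch).
    """
    if num_negatives >= len(candidates):
        raise ValueError("need more candidates than negatives")
    ranked = [c for _, c in sorted(zip(scores, candidates), key=lambda pair: pair[0], reverse=True)]
    return ranked[0], ranked[-num_negatives:]


def contrastive_loss(query_vec: list[float], pos_vec: list[float], neg_vecs: list[list[float]]) -> float:
    """InfoNCE-style loss: negative log-softmax of the positive's similarity."""
    def dot(a: list[float], b: list[float]) -> float:
        return sum(x * y for x, y in zip(a, b))

    logits = [dot(query_vec, pos_vec)] + [dot(query_vec, n) for n in neg_vecs]
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


# Usage with made-up scores and 2-D embeddings, for illustration only.
positive, negatives = split_by_llm_score(["A", "B", "C", "D"], [0.1, 0.9, 0.5, -0.3])
print(positive, negatives)  # B ['A', 'D']
print(contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]]))  # log(1 + e^-1), about 0.313
```

Minimising this loss pulls the input's embedding toward the prompt the scoring LLM found most helpful and away from the least helpful ones, so at inference time the retriever can rank prompts without calling the scoring LLM at all.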

Implications and Future Research

Uprise has several implications for work on AI and LLMs:

- Scalability and Efficiency: Because a single retriever is trained once and reused across tasks and models, without updating the LLM's weights, universal prompt retrieval reduces the need for repeated model-specific fine-tuning and is therefore more scalable and resource-efficient.

- Adaptive AI Systems: Uprise lays groundwork for adaptive AI systems that can handle a wide range of tasks without task-specific adjustments.

- Research Directions: Future work could extend universal prompt retrieval to more complex systems that involve multimodal inputs or external knowledge bases. Studying how prompt retrieval interacts with few-shot learning is another promising direction.

Conclusion

This paper examines universal prompt retrieval as a means of improving zero-shot performance. By targeting cross-task and cross-model generalization, Uprise offers a promising way to make LLMs more flexible and efficient across diverse applications.