Overview of "Multimodal Learning with Transformers: A Survey"

This survey by Xu, Zhu, and Clifton examines the state of multimodal learning with Transformers, reflecting growing interest in using a single architecture family to handle diverse data modalities. As large-scale data and multimodal applications have proliferated, Transformer models have become a central technology in AI research.

The paper provides a structured examination of Transformer-based multimodal learning techniques, first surveying the landscape from a high-level perspective before diving into technical challenges and presenting potential future research directions.

Key Components of the Survey

The survey organizes its findings into several major sections:

- Background and Context: The authors begin by setting the stage for understanding the role of multimodal learning in AI, emphasizing how human cognition integrates multiple sensory modalities. Multimodal learning aims to emulate this human ability, seeking to advance AI systems that can seamlessly interpret and utilize diverse data types.

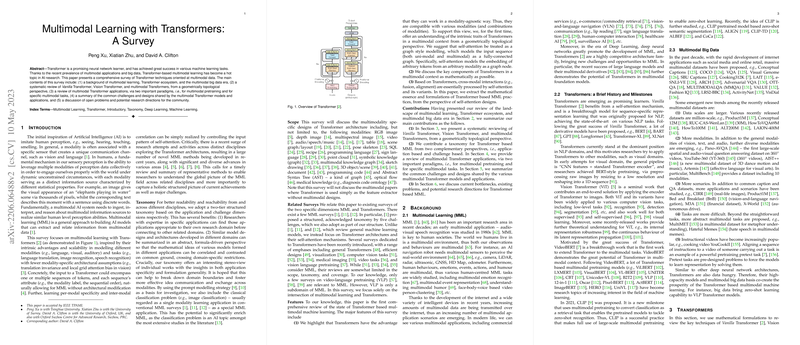

- Transformer Architectures: Transformers are highlighted for their versatility across modalities. Their self-attention mechanism and modular design allow them to be applied to different modalities with little or no architectural modification, provided the input is first converted into a sequence of tokens. The survey groups Transformer architectures into Vanilla Transformers, Vision Transformers, and multimodal variants, and examines the characteristics and applicability of each.

- Taxonomy and Methodology: Emphasizing a taxonomic approach, the authors propose a matrix that categorizes multimodal learning into applications and design challenges. This matrix serves as a navigational tool for researchers, helping to identify commonalities and differences across disciplines.

- Challenges and Solutions: The paper discusses technical challenges inherent to Transformer-based multimodal learning, including fusion of modalities, cross-modal alignment, model transferability, efficiency, and interpretability. Each challenge is accompanied by a discussion of existing methods and techniques adopted to overcome these hurdles.
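To make the self-attention and fusion ideas above concrete, the following is a minimal sketch of scaled dot-product self-attention applied to image and text tokens that have simply been concatenated into one sequence. It is an illustration under stated assumptions, not the survey's own implementation: the toy embeddings, the function names, and the use of identity projections (no learned query, key, or value weights) are all hypothetical simplifications.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens.

    Assumption: real Transformers apply learned projections to obtain
    Q, K, and V; they are omitted here to keep the sketch short.
    Returns (outputs, attention_weights).
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all tokens.
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, tokens)) for i in range(dim)]
        )
    return outputs, weights


# Hypothetical toy embeddings: 2 image patch tokens and 3 text tokens.
image_tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
text_tokens = [[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

# Fusion by concatenation: one sequence, so every token can attend to
# tokens from both modalities.
fused_input = image_tokens + text_tokens
fused_output, attn = self_attention(fused_input)
```

Because the two modalities share one sequence, the attention weights in `attn` mix image and text positions freely. In this toy input, the first image token gives more weight to the first text token (which has a similar embedding) than to the other text tokens.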

Notable Contributions

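- Geometrically Topological Perspective: The survey reviews the key components of multimodal Transformers from what it calls a geometrically topological perspective. In this view, self-attention treats its input as a fully connected graph of tokens, which helps explain why Transformers can accommodate different modalities once each is tokenized, without modality-specific architectural assumptions.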

- Self-Attention and Cross-Modal Interactions: By relating self-attention directly to cross-modal interactions, the paper offers insight into how Transformer models combine and align information from multiple sources.

- Multimodal Pretraining: The survey extensively reviews multimodal pretraining methodologies, discussing how large datasets have enabled breakthroughs in zero-shot learning and model generalization. It reflects on task-specific versus agnostic pretraining objectives and contrasts various strategies.
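One common way to realize the cross-modal interaction described above is cross-attention, where queries come from one modality and keys and values come from another. The sketch below is illustrative only: the function names and toy embeddings are assumptions, learned projections are omitted, and it is not presented as the specific formulation used in the survey.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention with queries from one modality
    and keys/values from another.

    Assumption: queries and keys share the same dimension, and learned
    projection matrices are left out for brevity.
    Returns (outputs, attention_weights).
    """
    dim = len(queries[0])
    value_dim = len(values[0])
    outputs, weights = [], []
    for query in queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys
        ]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, values)) for i in range(value_dim)]
        )
    return outputs, weights


# Hypothetical toy embeddings: 2 text tokens query 3 image patch tokens.
text_tokens = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
image_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Each text token gathers information from the image tokens it matches best.
text_with_image_context, cross_weights = cross_attention(
    text_tokens, image_tokens, image_tokens
)
```

The output has one row per text token, and each row is a weighted average of image tokens. Swapping the roles of the two inputs gives the image-attends-to-text direction, and applying both directions is one way to build a two-stream interaction.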

Implications and Future Directions

The paper suggests that while substantial progress has been made, the diversity in application contexts and the complexity of multimodal integration pose ongoing challenges. The authors point to areas needing further research, such as self-supervised learning with unaligned data, leveraging weak supervision, and developing unified architectures that can handle generative and discriminative tasks holistically.

Crucially, the authors highlight the importance of designing task-agnostic architectures that can cope with the challenges posed by large-scale multimodal input data. Future work might focus on improving efficiency and robustness and on fine-grained semantic alignment across modalities.

Conclusion

In summary, this survey captures the current understanding of and progress in multimodal learning with Transformers, and serves as a starting point for further inquiry and development. It calls for greater focus on unifying approaches and highlights significant gaps in current knowledge and practice. By mapping the landscape of multimodal learning through the lens of Transformer architectures, the survey offers a valuable resource for both established and emerging researchers in the domain.