Integrating a Manual Pipette into a Collaborative Robot Manipulator for Flexible Liquid Dispensing (2207.01214v1)

Abstract: This paper presents a system integration approach for a 6-DoF (Degree of Freedom) collaborative robot to operate a pipette for liquid dispensing. Its technical development is threefold. First, we designed an end-effector for holding and triggering manual pipettes. Second, we took advantage of a collaborative robot to recognize labware poses and planned robotic motion based on the recognized poses. Third, we developed vision-based classifiers to predict and correct positioning errors and thus precisely attached pipettes to disposable tips. Through experiments and analysis, we confirmed that the developed system, especially the planning and visual recognition methods, could help secure high-precision and flexible liquid dispensing. The developed system is suitable for low-frequency, high-repetition biochemical liquid dispensing tasks. We expect it to promote the deployment of collaborative robots for laboratory automation and thus improve the experimental efficiency without significantly customizing a laboratory environment.

Summary

- The paper introduces a robotic system that integrates a manual pipette with a 6-DoF collaborative robot to enable flexible and precise liquid dispensing.

- It employs hardware modifications, including 3D-printed gripper fingers and dual RGB cameras, along with direct teaching for effective labware pose acquisition.

- Vision transformer-based tip alignment achieves sub-millimeter prediction error, and the system performs robustly in unstructured laboratory environments.

Introduction and Motivation

The paper presents a system for integrating a standard manual pipette with a 6-DoF collaborative robot manipulator to automate flexible liquid dispensing tasks in laboratory environments. The approach addresses two limitations: conventional gantry-based liquid handling robots require significant space and environmental customization, while existing manipulator-based systems lack precision. The proposed system leverages a modified parallel gripper, direct teaching for pose acquisition, and vision-based classifiers for high-precision tip attachment, enabling robust operation in crowded, unstructured lab settings.

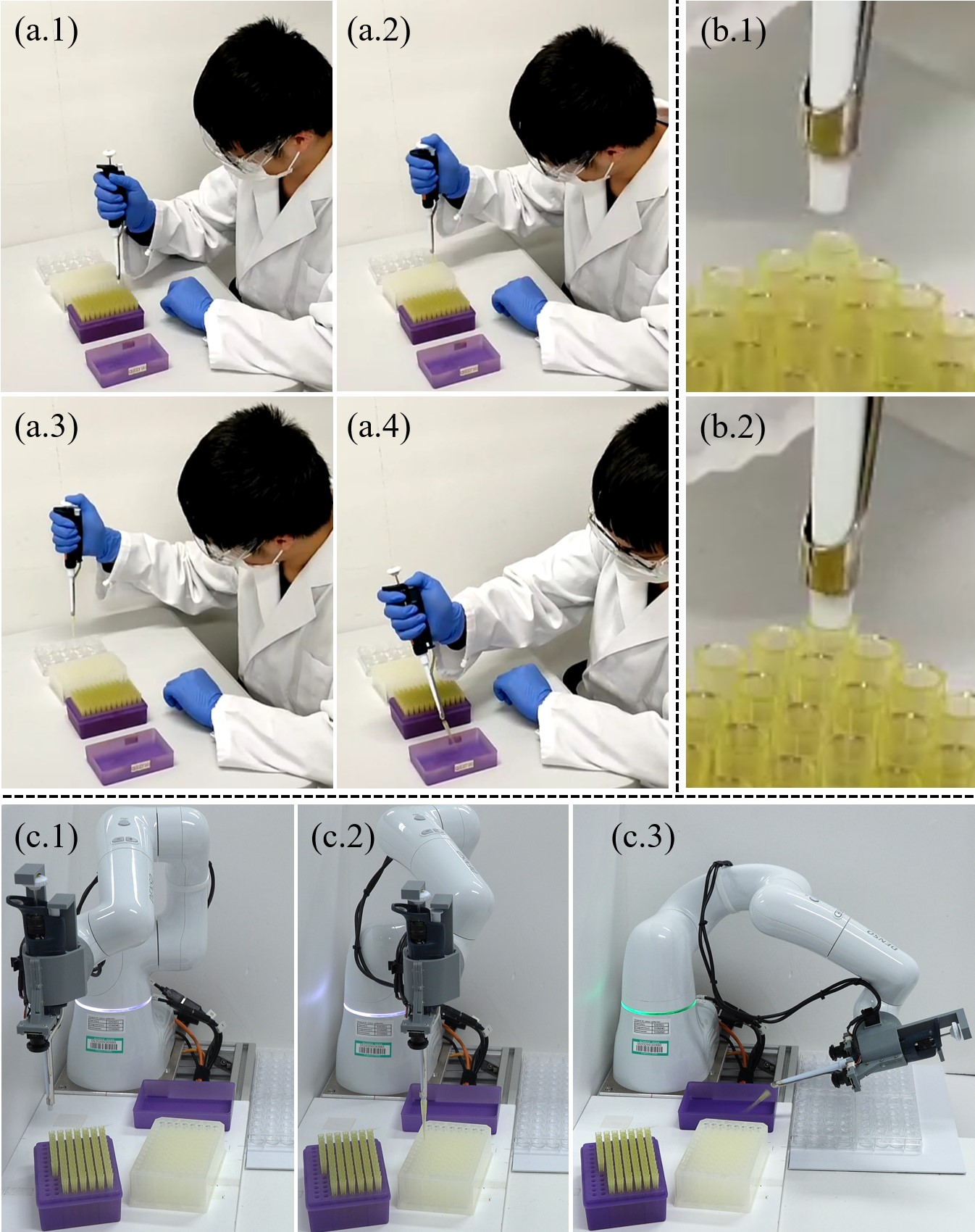

Figure 1: The system integrates a manual pipette with a collaborative robot, using custom fingertips, direct teaching for pose recognition, and a vision transformer for robust tip alignment and attachment.

System Design and Hardware Modifications

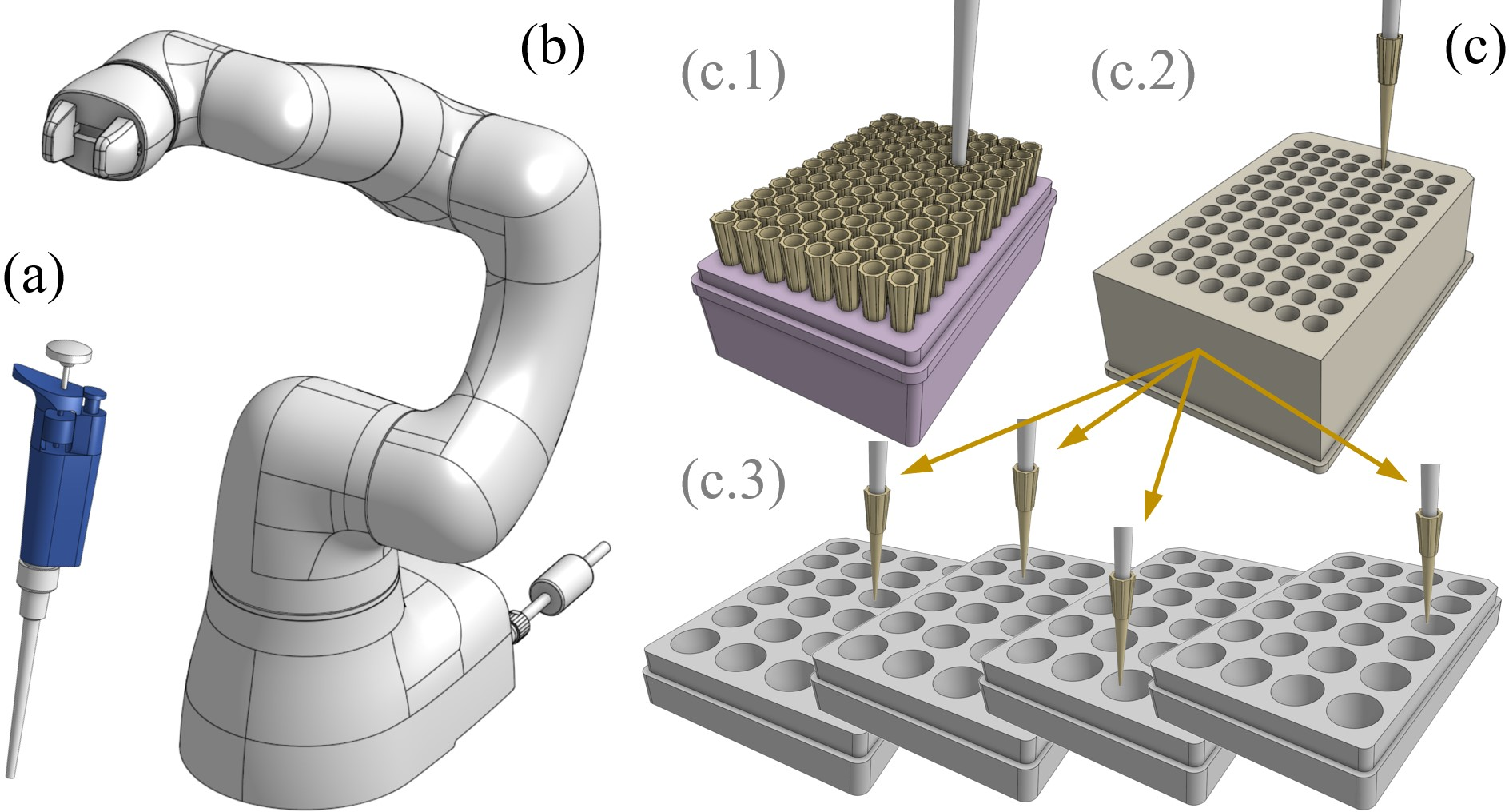

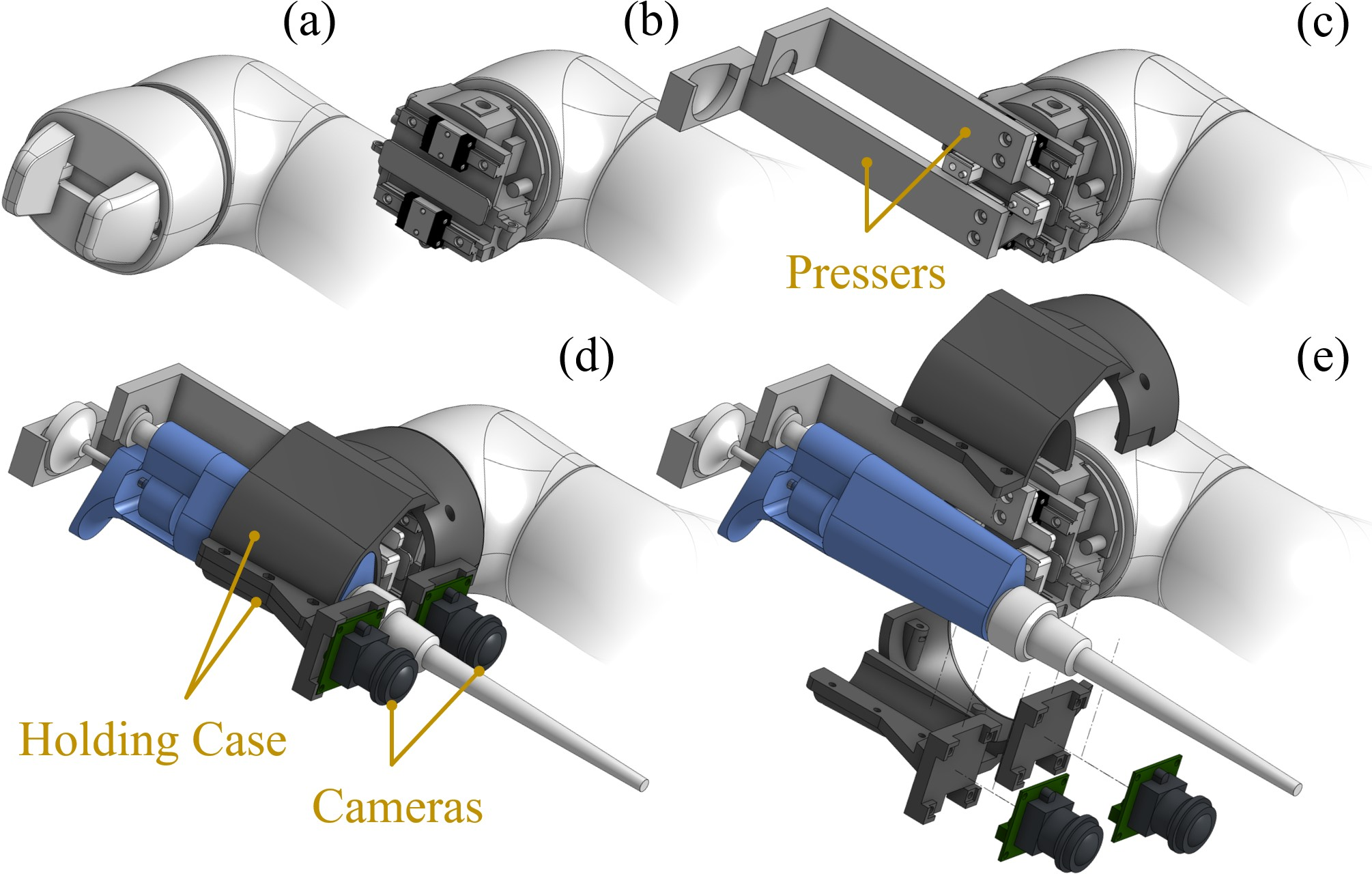

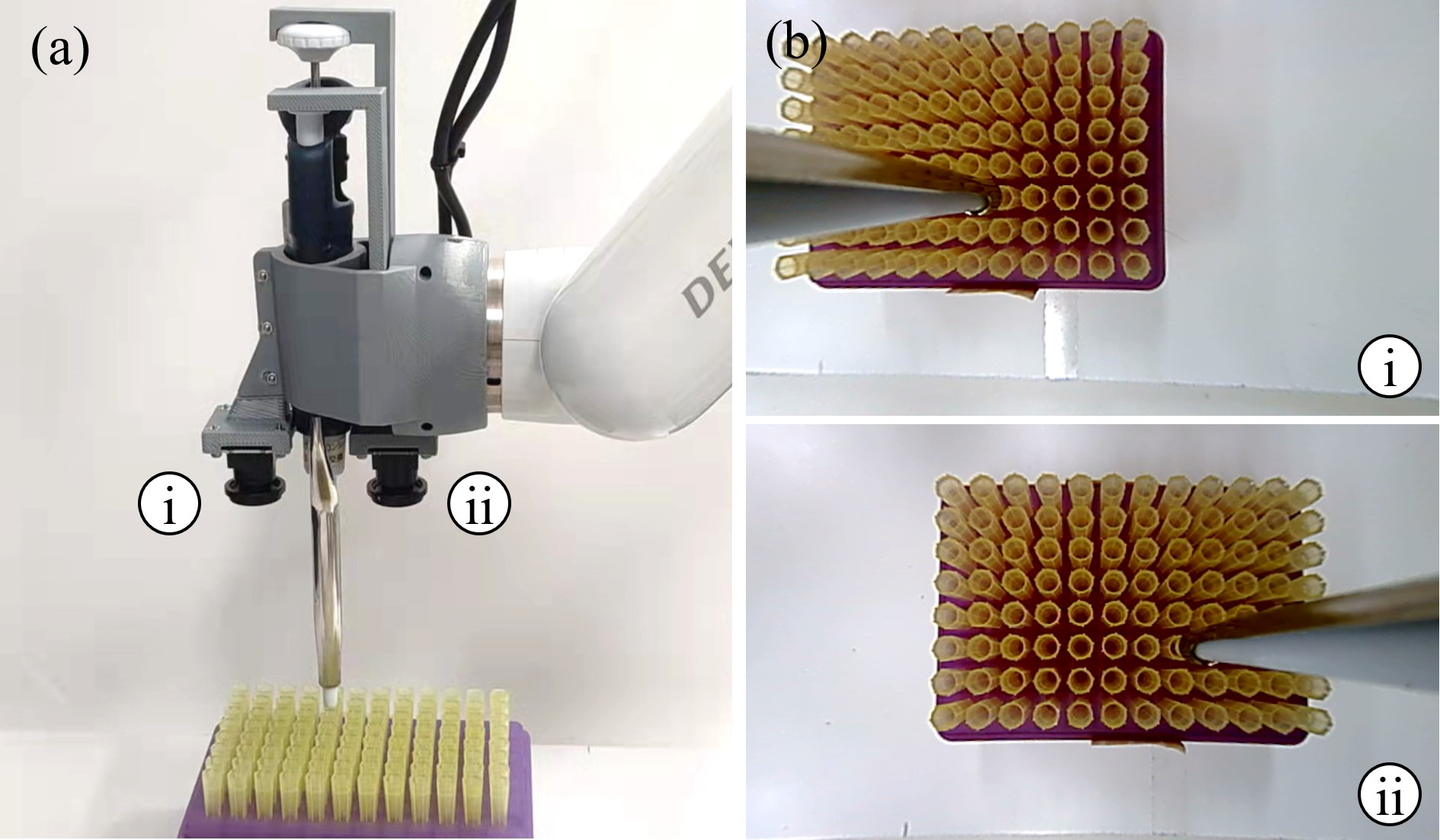

The hardware platform is based on a DENSO COBOTTA collaborative robot and a Gilson manual pipette. The commercial parallel gripper is modified with 3D-printed L-shaped fingers and a custom holding case to enable both secure grasping and actuation of the pipette's plunger and tip ejector. Two compact RGB cameras are mounted on the end-effector for visual feedback during tip alignment.

Figure 2: The system components include the manual pipette, collaborative robot, and labware such as tip racks and microplates.

Figure 3: The gripper is modified with L-shaped fingers and a holding case to enable pipette actuation and camera mounting.

Pose Acquisition and Motion Planning

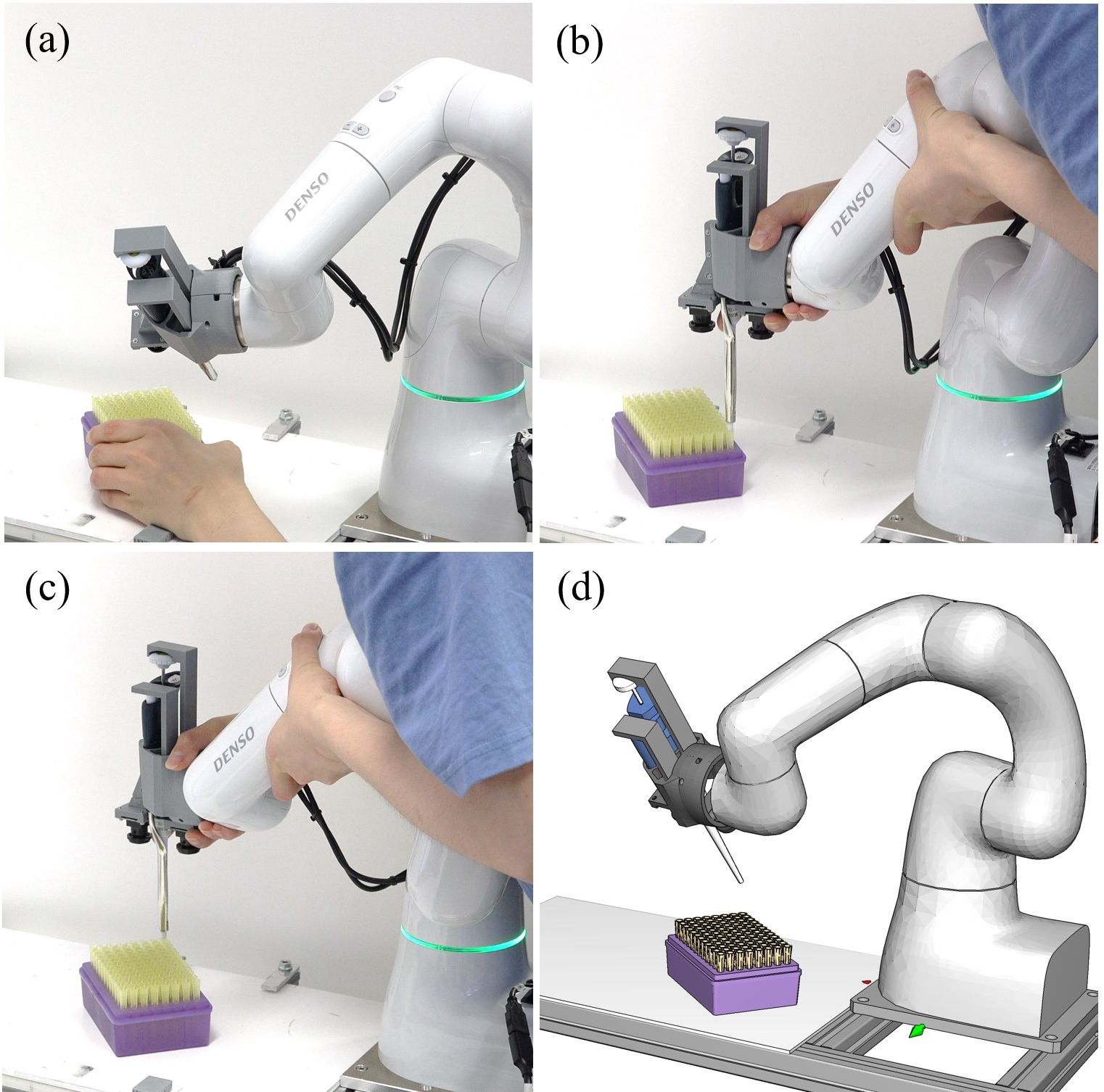

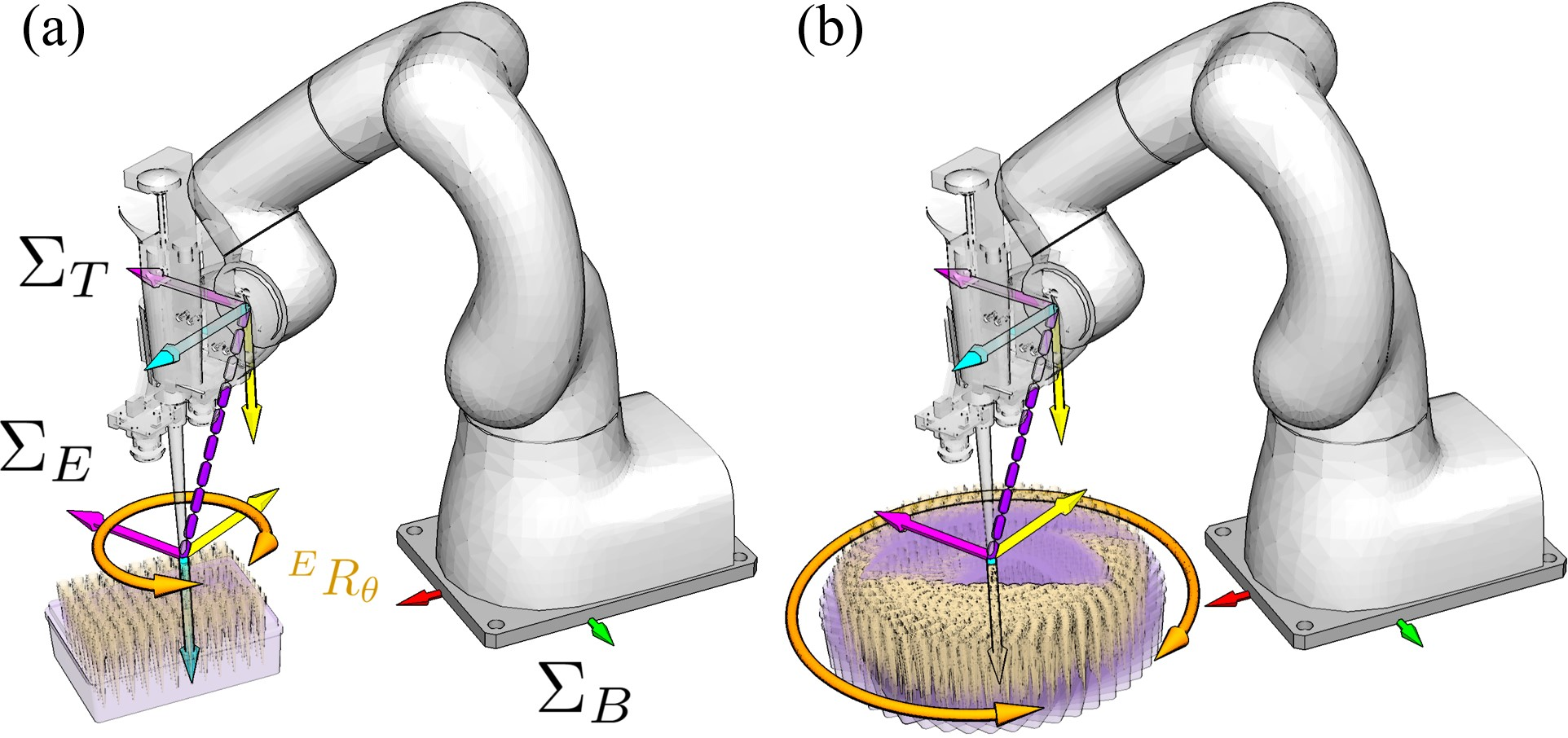

Labware pose acquisition is achieved via the robot's direct teaching mode, where an operator manually guides the end-effector to key positions on the tip rack, enabling the system to infer the rack's pose without external vision or calibration. This approach circumvents the challenges of depth sensing with translucent objects and the need for global calibration.

Figure 4: Direct teaching is used to record key positions on the tip rack, enabling pose estimation in the robot's coordinate frame.
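To make the direct-teaching step concrete, here is a minimal sketch of how a rack pose could be inferred from taught positions. It assumes the operator teaches three corner tips (A1, A12, H1) of a standard 96-format rack with a 9 mm pitch; the choice of corners, the function names, and the rack geometry are illustrative assumptions, not the authors' procedure.

```python
import math

PITCH_MM = 9.0  # assumed 96-format (SBS) tip spacing

def _sub(a, b):
    return [ai - bi for ai, bi in zip(a, b)]

def _unit(a):
    n = math.sqrt(sum(ai * ai for ai in a))
    return [ai / n for ai in a]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def rack_frame(p_a1, p_a12, p_h1):
    """Build an orthonormal rack frame from three taught tip positions
    (robot base frame, mm). Returns ((x, y, z) axes, origin at A1)."""
    x = _unit(_sub(p_a12, p_a1))               # along a row (columns 1..12)
    z = _unit(_cross(x, _sub(p_h1, p_a1)))     # rack normal
    y = _cross(z, x)                           # in-plane axis, re-orthogonalized
    return (x, y, z), list(p_a1)

def tip_position(frame, origin, row, col, pitch=PITCH_MM):
    """Nominal position of tip (row, col), zero-indexed, in the robot base frame."""
    x, y, _ = frame
    return [o + col * pitch * xi + row * pitch * yi
            for o, xi, yi in zip(origin, x, y)]
```

Re-orthogonalizing the in-plane axis makes the frame tolerant of small errors in the taught H1 point; only its direction relative to A1 matters.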

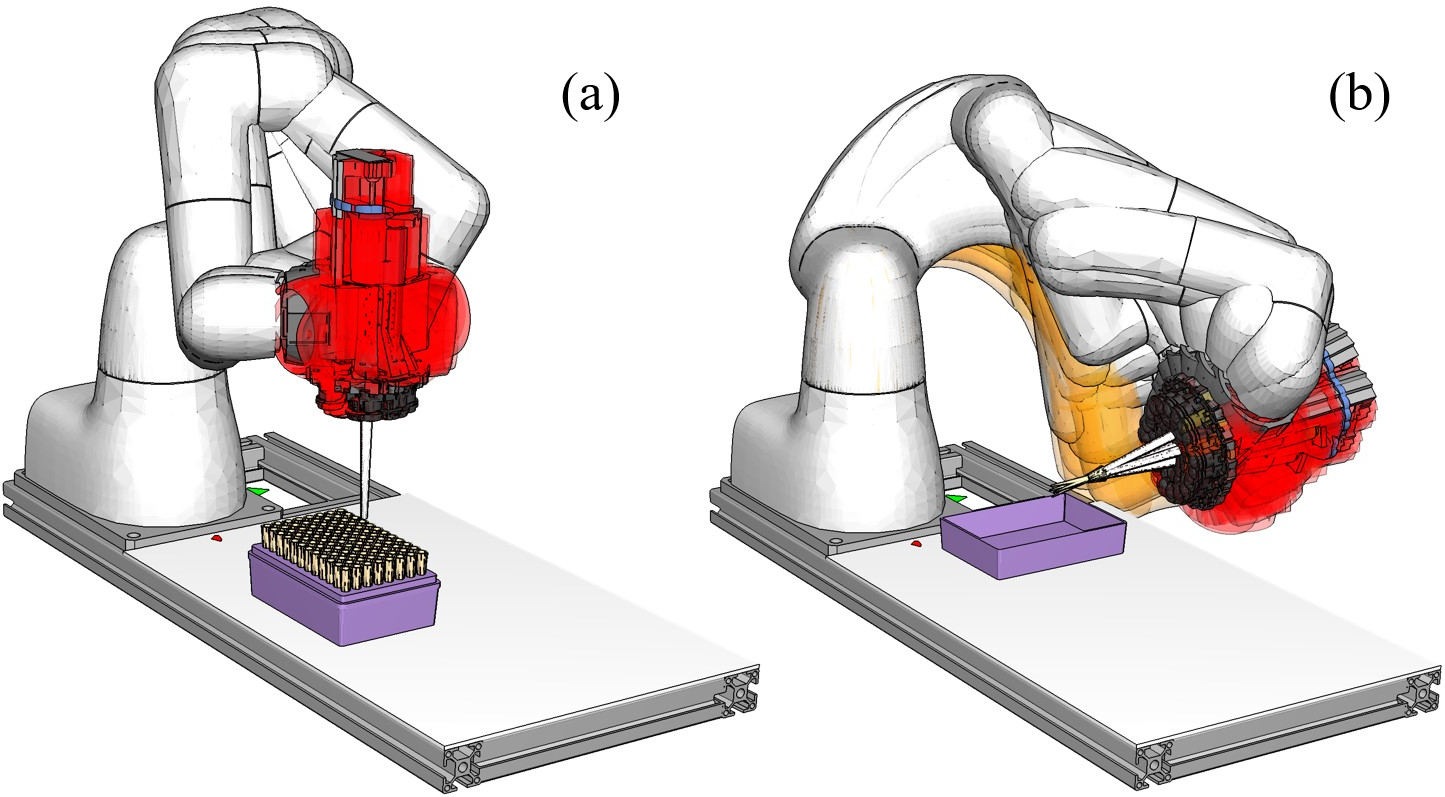

Motion planning is performed using RRT-Connect for collision-free path generation, followed by path pruning and time-optimal path parameterization (TOPP-RA) for efficient execution. The system searches for feasible end-effector poses by exploiting the rotational redundancy about the pipette shaft axis, ensuring reachability even in constrained workspaces.
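The path-pruning step can be illustrated with a common greedy shortcutting scheme. This is a sketch under assumptions: `segment_free` is a hypothetical caller-supplied collision checker for straight segments, and consecutive waypoints from RRT-Connect are assumed collision-free; the paper's exact pruning rule may differ.

```python
def prune_path(path, segment_free):
    """Greedy shortcutting of a waypoint path (e.g., the output of RRT-Connect).

    From the current waypoint, jump to the farthest later waypoint that can be
    reached by a collision-free straight segment. Consecutive waypoints are
    assumed to be connected by collision-free edges, as in an RRT tree.
    """
    if len(path) <= 2:
        return list(path)
    pruned = [path[0]]
    i = 0
    while i < len(path) - 1:
        j = len(path) - 1
        # Back off toward i + 1, which is always accepted.
        while j > i + 1 and not segment_free(path[i], path[j]):
            j -= 1
        pruned.append(path[j])
        i = j
    return pruned
```

The pruned waypoints would then be passed to the time parameterization step, which assigns velocities along the shortened path.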

Figure 5: The system searches for feasible robot configurations for tip attachment and disposal, considering kinematic and collision constraints.
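The search over the shaft rotation can be sketched as follows. The pipette shaft is assumed to coincide with the tool z-axis, and `ik_fn` and `collision_free` are hypothetical stand-ins for the robot's inverse kinematics and collision checker; ordering candidates by their distance from the nominal orientation is a design choice of this sketch.

```python
import math

def rotz(theta):
    """Rotation matrix about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def search_shaft_rotation(position, base_rot, ik_fn, collision_free, step_deg=10):
    """Try orientations that differ from base_rot only by a rotation about the
    pipette shaft (assumed tool z-axis). Return (theta, q) for the first
    feasible candidate, or None if every candidate fails."""
    # Prefer small deviations from the nominal orientation: 0, -10, +10, -20, ...
    for deg in sorted(range(-180, 180, step_deg), key=abs):
        theta = math.radians(deg)
        rot = matmul3(base_rot, rotz(theta))   # right-multiply: rotate about the tool's own z
        q = ik_fn(position, rot)               # hypothetical IK, None if unreachable
        if q is not None and collision_free(q):
            return theta, q
    return None
```

Because rotating about the shaft does not move the pipette opening, every candidate reaches the same tip position; only the arm configuration changes.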

Vision-Based Tip Alignment and Error Correction

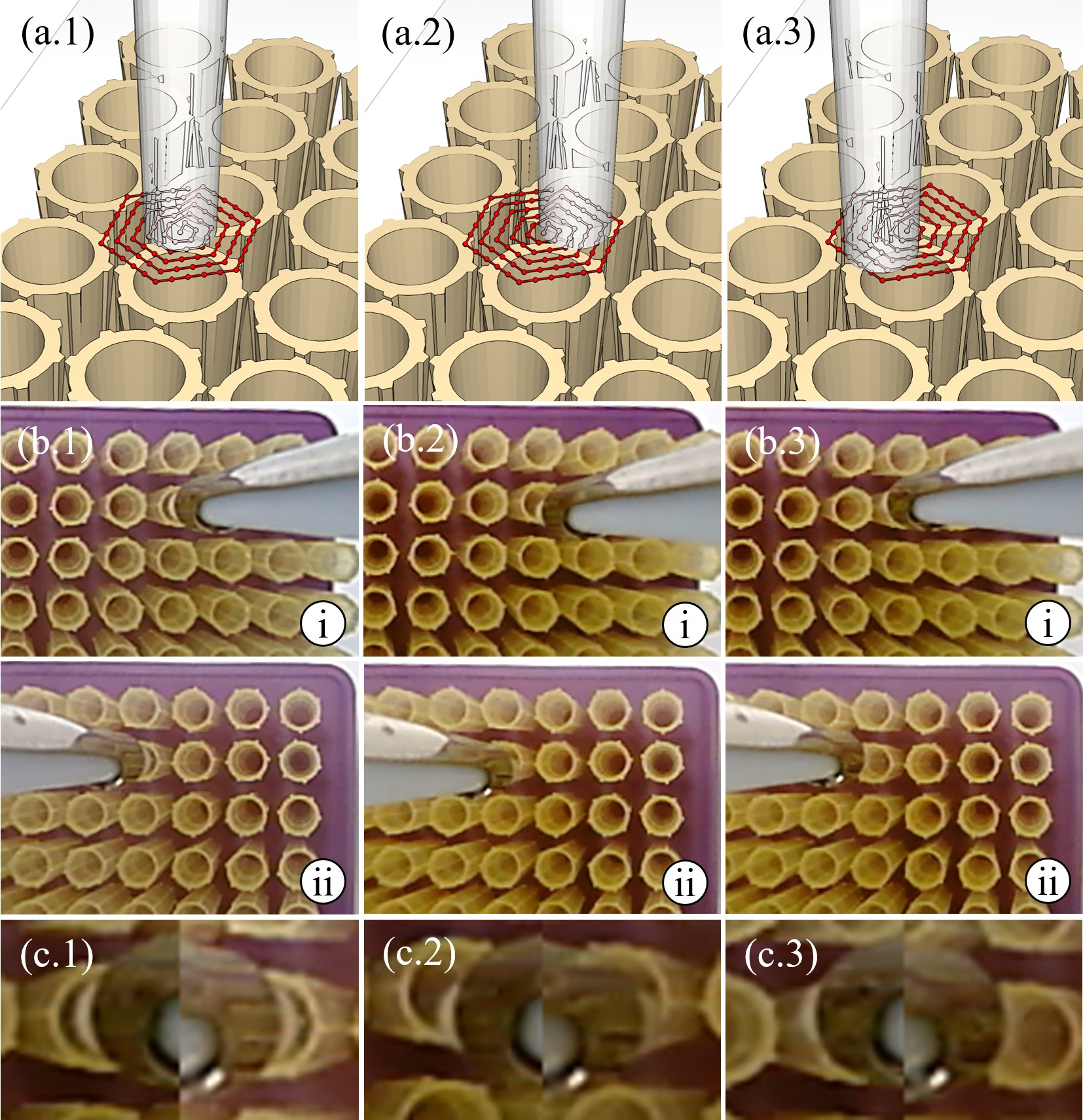

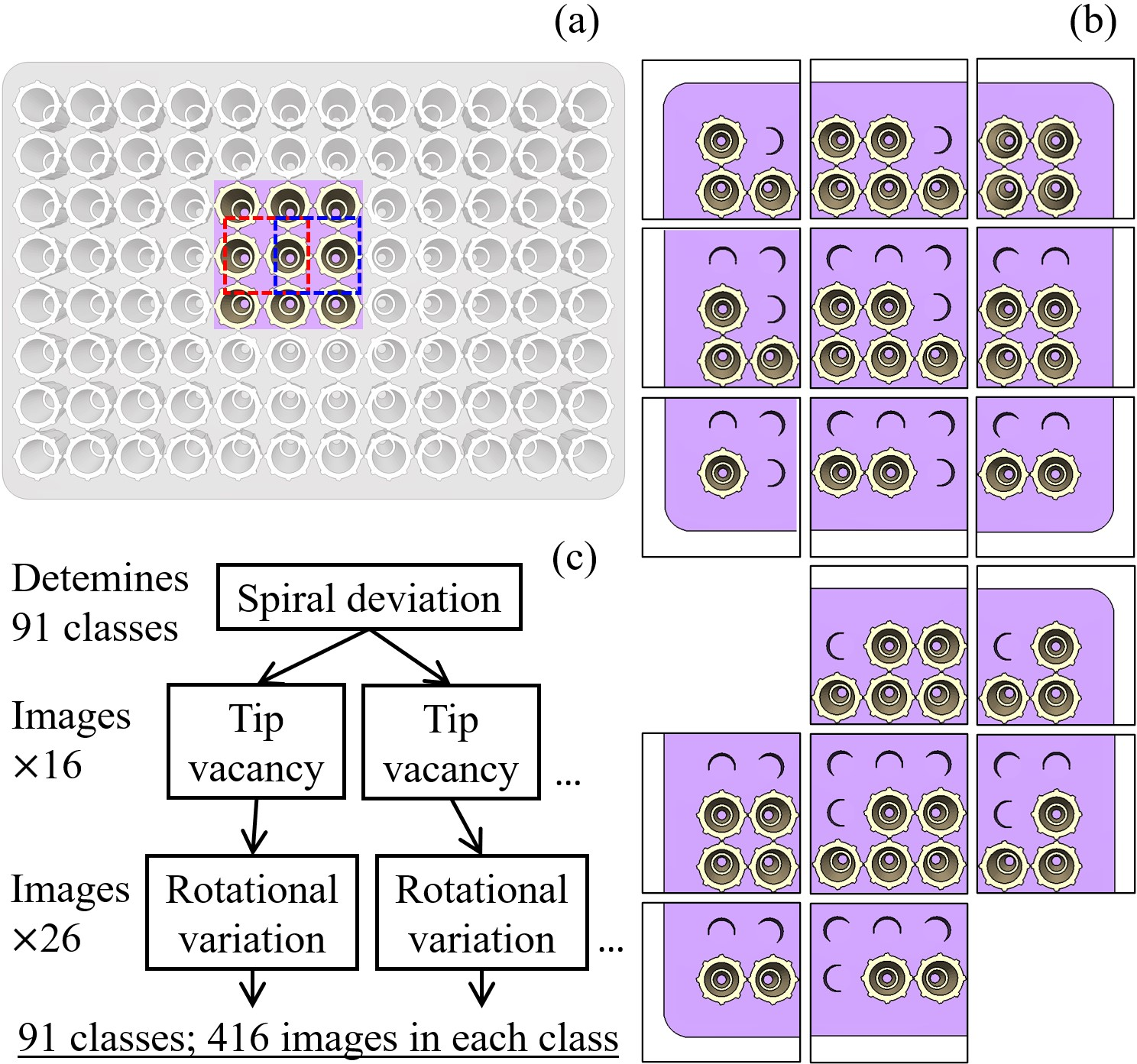

Precise tip attachment is formulated as a vision-based classification problem. The system collects training data by moving the pipette along a spiral path centered on the target tip, capturing images from both cameras at each offset. Each class corresponds to a specific deviation from the ideal alignment.

Figure 6: The pipetting end-effector prototype with dual cameras for visual feedback.

Figure 7: Training data is collected by moving the pipette along a spiral path, capturing images at each node for classifier training.

The spiral path is designed using equilateral triangulation to ensure uniform coverage and a bounded residual error after correction. The class spacing, typically 0.5 mm, is chosen so that this maximum residual error stays within what the compliance of the plastic tips can absorb.
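The triangulated spiral and its error bound can be sketched as follows. This assumes the nodes are ordered ring by ring outward from the center on an equilateral-triangle (hexagonal) lattice; the ordering and function names are illustrative, not taken from the paper. The bound follows from geometry: every point inside an equilateral triangle of side d lies within d/√3 (the circumradius) of its nearest vertex, so correcting to the nearest node leaves a residual of at most d/√3.

```python
import math

def triangular_spiral(spacing_mm, rings):
    """Nodes of an equilateral-triangle lattice with the given spacing, ordered
    ring by ring outward from the center and by angle within each ring.
    Returns (x, y) offsets in mm; node 0 is the centered class."""
    nodes = []
    for q in range(-rings, rings + 1):
        for r in range(-rings, rings + 1):
            ring = (abs(q) + abs(r) + abs(q + r)) // 2   # hex distance in axial coordinates
            if ring <= rings:
                x = spacing_mm * (q + r / 2)
                y = spacing_mm * r * math.sqrt(3) / 2
                nodes.append((ring, math.atan2(y, x), x, y))
    nodes.sort()
    return [(x, y) for _, _, x, y in nodes]

def max_residual(spacing_mm):
    """Worst-case distance to the nearest node: circumradius of a lattice triangle."""
    return spacing_mm / math.sqrt(3)
```

With the 0.5 mm spacing mentioned above, `max_residual(0.5)` is about 0.29 mm, and two rings give 1 + 6 + 12 = 19 classes.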

Rotational variations due to fabrication and installation uncertainties are addressed by rotating the tip rack during data collection, obviating the need for precise calibration of the pipette tip's local frame.

Figure 8: Rotational variations are incorporated by rotating the rack, avoiding the need for precise end-effector calibration.

Figure 9: Image collections are generated for each spiral node and rack rotation, forming the training set for the classifier.

Tip availability is also considered, with training data generated for all relevant patterns of neighboring tip presence, reflecting the sequential tip pickup routine.

Figure 10: Only the eight neighboring tips affect the merged images; tip availability and rotational variations expand the training set.
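A sketch of how the relevant neighbor patterns could be enumerated, assuming tips are picked one at a time in row-major order from an 8 × 12 (96-tip) rack. The pickup order and rack size are assumptions for illustration; under them only five distinct 8-neighbor patterns occur, because every tip in earlier rows and to the left of the target is already gone.

```python
def neighbor_pattern(row, col, rows=8, cols=12):
    """Occupancy (1 = tip present) of the 8 neighbors of tip (row, col) at the
    moment it is picked, assuming tips are taken one by one in row-major order."""
    pattern = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue                          # skip the target tip itself
            r, c = row + dr, col + dc
            inside = 0 <= r < rows and 0 <= c < cols
            still_there = (r, c) > (row, col)     # later in row-major order
            pattern.append(int(inside and still_there))
    return tuple(pattern)

def distinct_patterns(rows=8, cols=12):
    """Set of neighbor patterns that actually occur over a full rack."""
    return {neighbor_pattern(r, c, rows, cols)
            for r in range(rows) for c in range(cols)}
```

Each pattern would then be combined with every spiral node and rack rotation when assembling the training set.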

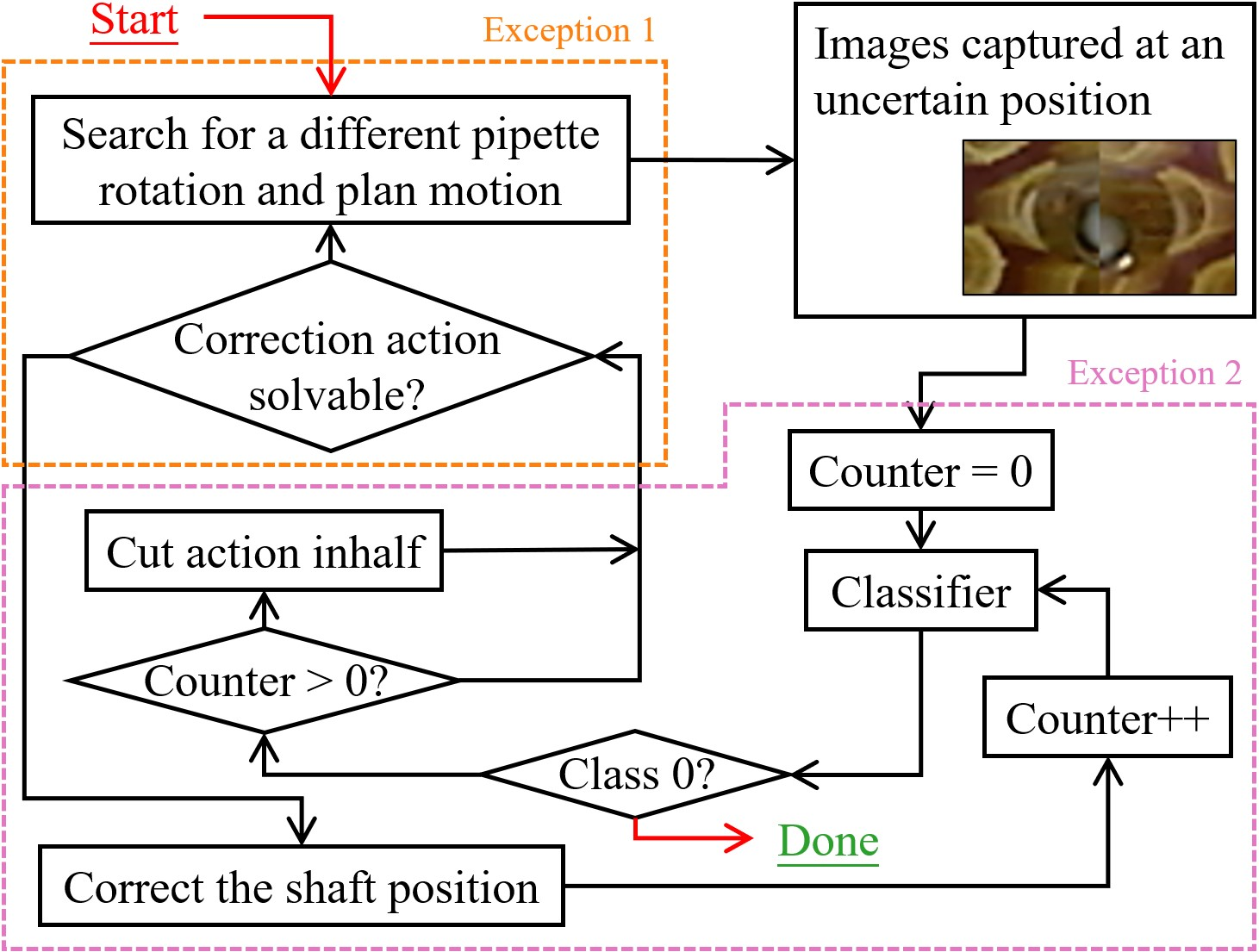

The correction workflow includes exception handling for infeasible inverse kinematics (IK) solutions and potential infinite correction loops, with strategies such as halving the correction step size to ensure convergence.

Figure 11: The workflow for using the trained classifier to predict and correct positioning errors, with exception handling.
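The correction loop with step halving might look like the sketch below. Here `predict_offset` and `move_by` are hypothetical callbacks wrapping the trained classifier and the robot motion (the latter returning False when IK fails), and treating a repeated prediction as a sign of oscillation is an assumption of this sketch rather than the paper's exact rule.

```python
def correct_until_aligned(predict_offset, move_by, max_iters=20, tol=1e-9):
    """Iteratively correct the pipette position from classifier predictions.

    predict_offset() -> (dx, dy): deviation in mm implied by the predicted class.
    move_by(dx, dy) -> bool: executes a relative move; False if IK is infeasible.
    Returns True once the centered class is predicted, False otherwise.
    """
    step = 1.0
    seen = set()
    for _ in range(max_iters):
        dx, dy = predict_offset()
        if abs(dx) < tol and abs(dy) < tol:
            return True                      # centered: proceed to tip insertion
        key = (round(dx, 6), round(dy, 6))
        if key in seen:
            step *= 0.5                      # repeated prediction: likely oscillating, shrink step
        seen.add(key)
        if not move_by(-step * dx, -step * dy):
            return False                     # infeasible IK: defer to exception handling
    return False                             # iteration budget exhausted
```

The iteration cap is a second safeguard: even if halving does not settle the loop, the routine terminates and can hand control to a fallback.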

Classifier Evaluation and Experimental Results

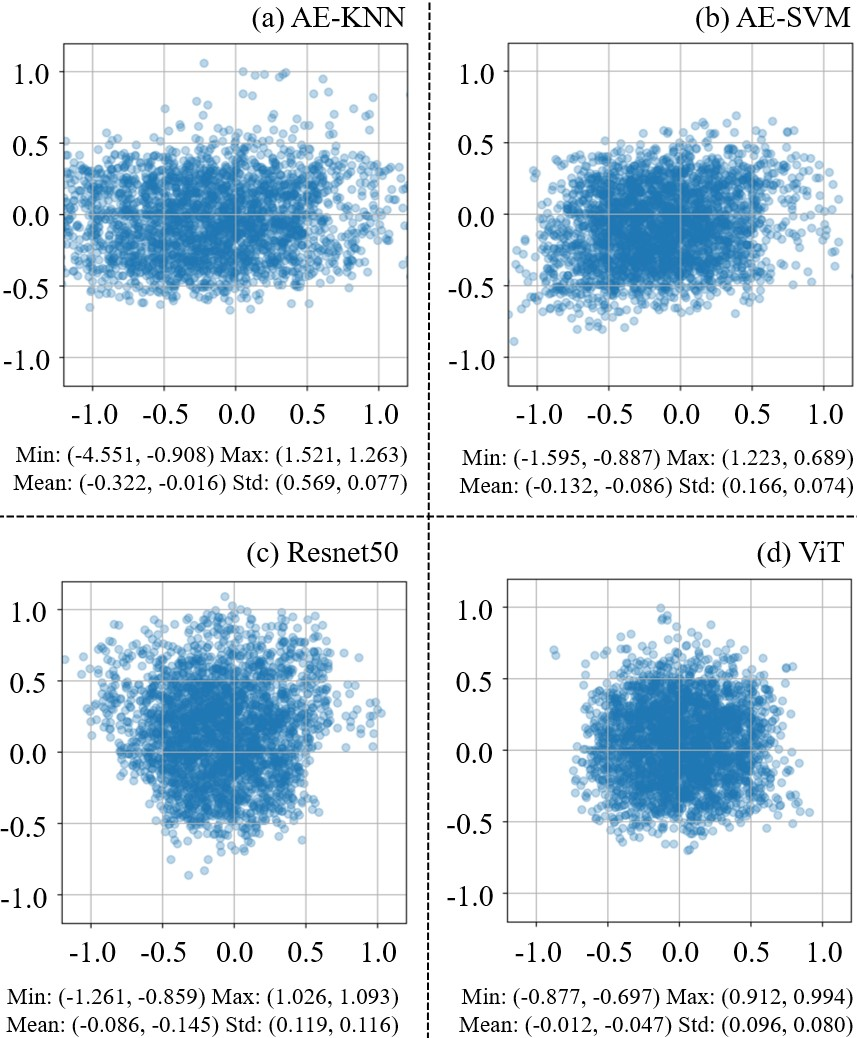

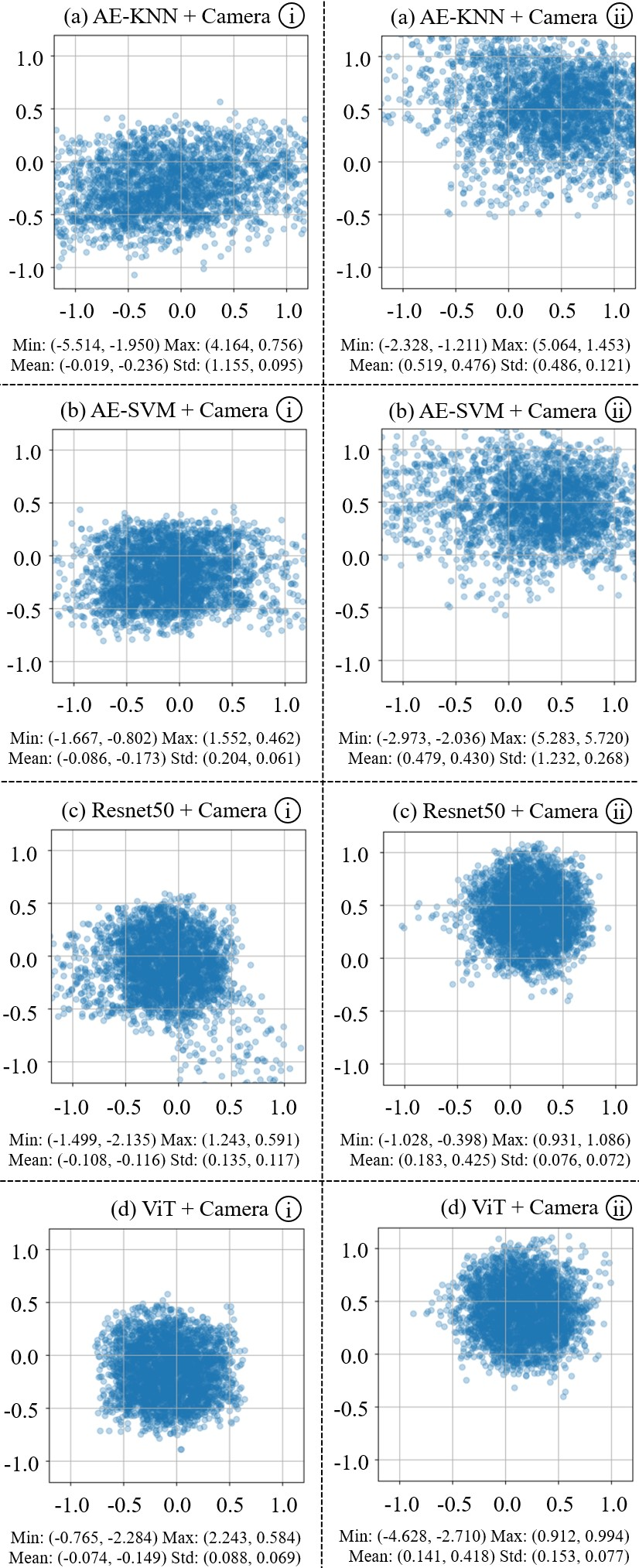

Four classifiers are evaluated: AE-KNN, AE-SVM, ResNet50, and Vision Transformer (ViT). The ViT consistently achieves the lowest prediction error (<1 mm) and, together with ResNet50, enables 100% successful tip insertions in dual-camera configurations. Single-camera setups exhibit reduced precision and require more correction steps, highlighting the value of a second viewpoint for this task.

Figure 12: ViT achieves the best prediction accuracy among the evaluated classifiers, with errors below 1 mm.

Figure 13: Single-camera configurations show increased prediction bias and reduced precision compared to dual-camera setups.
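Because the classifiers output discrete classes, an error in millimeters has to be computed by mapping class indices back to the offsets they encode. The sketch below shows one straightforward way to do that; the label layout and function names are assumptions, and the paper may aggregate errors differently.

```python
import math

def prediction_errors_mm(true_labels, pred_labels, class_offsets):
    """Per-sample error (mm) between the offsets encoded by the true and
    predicted classes; class_offsets[k] is the (dx, dy) offset of class k."""
    errors = []
    for t, p in zip(true_labels, pred_labels):
        (tx, ty), (px, py) = class_offsets[t], class_offsets[p]
        errors.append(math.hypot(px - tx, py - ty))
    return errors

def summarize(errors):
    """Mean and maximum error, the kind of figures used to compare classifiers."""
    return sum(errors) / len(errors), max(errors)
```

A misclassification into a neighboring class therefore costs exactly one class spacing, while a correct prediction contributes zero error.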

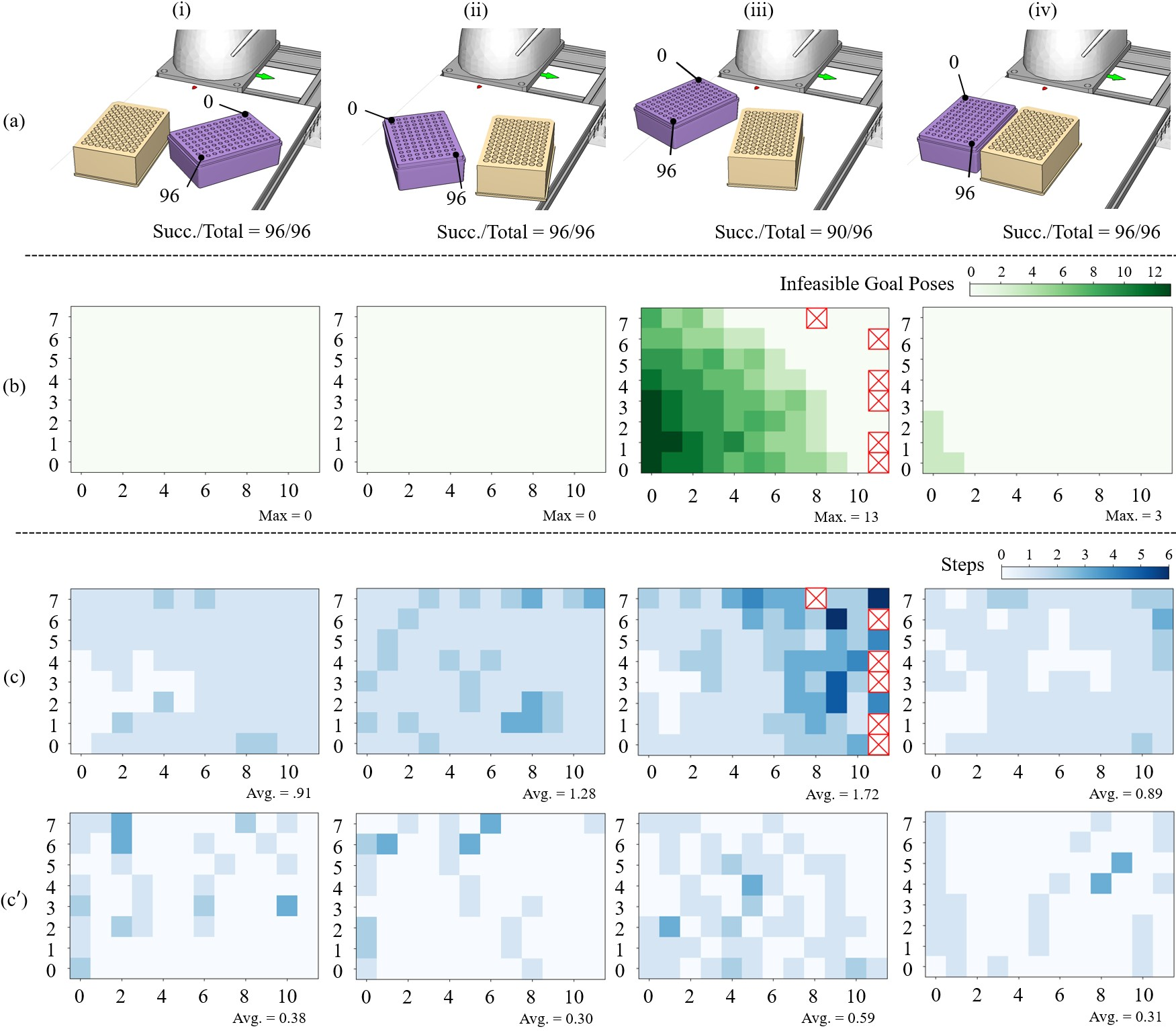

The system demonstrates robust performance in real-world liquid dispensing routines, with high success rates across random rack and plate placements. Failures are primarily attributed to large absolute positioning errors near workspace boundaries, which are mitigated by closed-loop pose updates using previously predicted deviations.

Figure 14: Real-world dispensing results show high success rates and efficient correction, with closed-loop updates further improving performance.
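The closed-loop pose update can be sketched as a running offset that is added to the taught position of each subsequent target. This is an illustration under assumptions: the class name, the smoothing factor `alpha`, and the use of the total applied correction as the observed deviation are hypothetical choices, not details confirmed by the paper.

```python
class PoseOffsetTracker:
    """Running estimate of the systematic deviation (mm, x/y) between taught
    positions and where targets were actually found by visual correction."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha          # 1.0: adopt the latest observation fully
        self.offset = (0.0, 0.0)

    def approach_target(self, nominal_xy):
        """Shift a nominal (taught) position by the current offset estimate."""
        return (nominal_xy[0] + self.offset[0], nominal_xy[1] + self.offset[1])

    def update(self, applied_correction_xy):
        """Fold in the total correction applied after approaching a target.
        The approach already included self.offset, so the observed deviation
        is offset + correction; move the estimate a fraction alpha toward it."""
        ox, oy = self.offset
        cx, cy = applied_correction_xy
        self.offset = (ox + self.alpha * cx, oy + self.alpha * cy)
```

Starting each approach closer to the true position reduces the chance that a large absolute error, such as near the workspace boundary, falls outside the range the classifier was trained on.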

The system is also successfully deployed alongside the RIKEN Integrated Phenotyping System (RIPPS), demonstrating compatibility with existing automation infrastructure.

Figure 15: The robotic system is deployed for chemical dispensing to plant pots transported by RIPPS.

Implications and Future Directions

The integration of a manual pipette with a collaborative robot using vision-based error correction enables flexible, high-precision liquid handling in unstructured laboratory environments. The approach eliminates the need for specialized labware or extensive workspace customization, facilitating rapid deployment and coexistence with human operators and other automation systems.

The use of vision transformers for fine-grained alignment tasks demonstrates the efficacy of attention-based models in robotic manipulation, particularly when combined with carefully designed data collection protocols that account for translational, rotational, and environmental variability.

Future work may focus on generalizing the end-effector design and error correction methods to accommodate a broader range of pipette types and tip geometries, as well as extending the approach to other peg-in-hole or insertion tasks in laboratory and industrial automation.

Conclusion

This work presents a comprehensive system for integrating a manual pipette into a collaborative robot manipulator, achieving flexible and robust liquid dispensing through hardware modifications, direct teaching, and vision-based error correction. The system demonstrates high precision and adaptability in real-world laboratory scenarios, with strong empirical results validating the proposed methods. The approach offers a practical pathway for enhancing laboratory automation without significant infrastructure changes, and the underlying techniques have broader applicability to other precision manipulation tasks.
