- The paper introduces the AV-ALOHA system that integrates a dedicated active vision arm to enhance imitation learning in bimanual teleoperation.

- It employs differential inverse kinematics and VR-based control to dynamically adjust camera perspectives, improving performance in both simulation and real-world tasks.

- Results show that optimal camera views substantially boost success rates in precise manipulation tasks while highlighting challenges in managing data complexity.

Active Vision for Bimanual Robotic Manipulation

This paper introduces AV-ALOHA, a bimanual teleoperation robot system that integrates active vision (AV) to enhance imitation learning for manipulation tasks. The system extends the ALOHA 2 robot by incorporating an additional 7-DoF robotic arm dedicated to controlling a stereo camera, which streams video to a VR headset worn by the operator. This allows the operator to dynamically adjust the camera's viewpoint using head and body movements, providing an immersive teleoperation experience. The authors demonstrate the effectiveness of AV-ALOHA through experiments in both simulation and real-world environments, showing significant improvements over fixed camera setups in tasks with limited visibility.

System Design and Implementation

The AV-ALOHA system builds upon the existing ALOHA 2 setup, retaining the two leader arms and two follower arms, as well as the original four Intel RealSense D405 cameras. A key innovation is the introduction of the AV arm, equipped with a ZED mini stereo camera. The AV arm is given an additional degree of freedom, for seven in total, which increases its dexterity and range of motion (Figure 1).

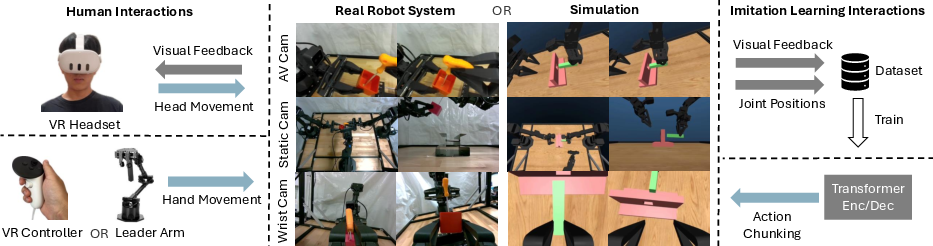

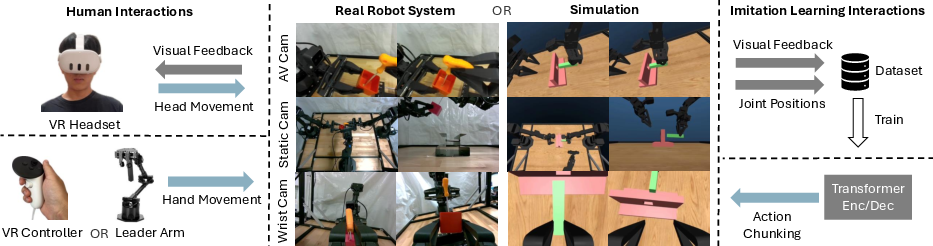

Figure 1: Illustration of AV-ALOHA, a bimanual robot system with 7-DoF AV.

The system offers two teleoperation options: one using VR controllers and the other using the original ALOHA leader arms. Both options rely on a Unity application that interfaces with the robot system via WebRTC, streaming stereo video from the AV arm to the VR headset. Differential Inverse Kinematics (IK) with Damped Least Squares maps the operator's head and hand movements to the robot's joint angles. A simulation environment that mirrors the real robot system is also developed in MuJoCo, enabling data collection and policy evaluation in a controlled setting. The data collection and imitation learning pipeline is shown in Figure 2.

Figure 2: The AV-ALOHA system enables intuitive data collection using a VR headset for AV and either VR controllers or leader arms for manipulation.
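
The differential IK step mentioned above can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a hypothetical planar 3-link arm (the link lengths, damping value, and step limit are illustrative choices, not values from the paper) and tracks only a 2D position target, whereas the real system tracks full head and hand poses. It shows the standard damped least squares update, dq = Jᵀ (J Jᵀ + λ² I)⁻¹ e, where e is the task-space error and λ is the damping factor that keeps the step bounded near singular configurations.

```python
import numpy as np

# Hypothetical planar 3-link arm (metres); not the AV-ALOHA kinematics.
LINK_LENGTHS = np.array([0.3, 0.25, 0.15])


def forward_kinematics(q):
    """End-effector (x, y) of a planar serial arm with joint angles q."""
    angles = np.cumsum(q)  # absolute angle of each link
    return np.array([
        np.sum(LINK_LENGTHS * np.cos(angles)),
        np.sum(LINK_LENGTHS * np.sin(angles)),
    ])


def jacobian(q):
    """2 x n positional Jacobian of the planar arm."""
    angles = np.cumsum(q)
    n = len(q)
    J = np.zeros((2, n))
    for i in range(n):
        # Joint i rotates every link from i onward.
        J[0, i] = -np.sum(LINK_LENGTHS[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(LINK_LENGTHS[i:] * np.cos(angles[i:]))
    return J


def dls_step(q, target, damping=0.05, max_step=0.2):
    """One damped least squares update: dq = J^T (J J^T + lambda^2 I)^-1 e."""
    error = target - forward_kinematics(q)
    J = jacobian(q)
    JJt = J @ J.T
    dq = J.T @ np.linalg.solve(JJt + damping**2 * np.eye(JJt.shape[0]), error)
    # Assumed safety limit: cap the joint-space step size per iteration.
    norm = np.linalg.norm(dq)
    if norm > max_step:
        dq *= max_step / norm
    return q + dq


def track(q, target, iterations=200):
    """Repeat the differential update until the target is (approximately) reached."""
    for _ in range(iterations):
        q = dls_step(q, target)
    return q


if __name__ == "__main__":
    q_start = np.array([0.3, 0.3, 0.3])
    goal = np.array([0.4, 0.3])
    q_goal = track(q_start, goal)
    print("joint angles:", q_goal)
    print("position error:", np.linalg.norm(forward_kinematics(q_goal) - goal))
```

In a teleoperation loop, a step like `dls_step` would be called once per control cycle with the latest headset or controller pose as the target, so the arm follows the operator incrementally rather than solving a full IK problem from scratch each time. A larger damping value trades tracking accuracy for smoother motion near singularities.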

Experimental Evaluation

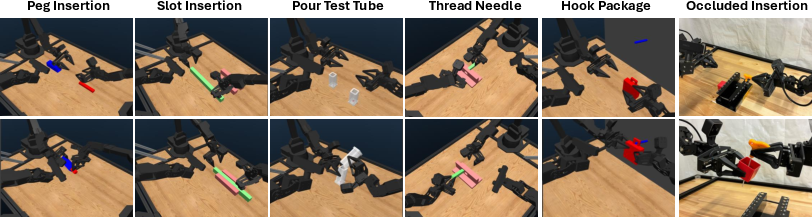

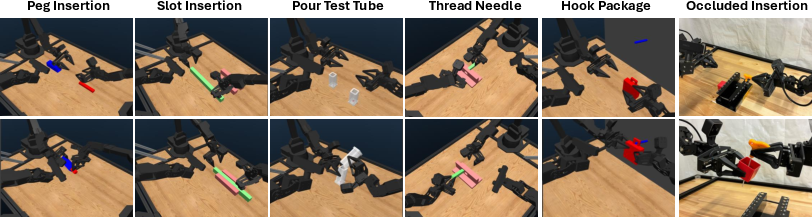

The authors evaluate the effectiveness of AV-ALOHA using the Action Chunking with Transformers (ACT) imitation learning framework. They conduct experiments on five simulation tasks and one real-world task, each designed to assess the impact of AV on bimanual manipulation. The tasks are divided into two groups: those that can be completed without AV and those that potentially benefit from improved camera perspectives. The six tasks are depicted in Figure 3.

Figure 3: The simulation and real-world tasks vary in their complexity, with some explicitly designed to encourage the robot to seek optimal perspectives for execution.

The results, summarized in Table 1 of the paper, demonstrate that AV can significantly improve performance in tasks with limited visibility or when a precise camera perspective is crucial.

Results and Discussion

The experiments reveal several key findings. For tasks where AV is not essential, non-AV setups can achieve comparable or even better performance. This suggests that the inclusion of additional camera feeds and the increased complexity of the action space can sometimes hinder learning. However, for tasks that benefit from improved camera perspectives, AV-enhanced setups consistently outperform non-AV configurations. In the real-world occluded insertion task, the AV + wrist camera combination achieves the highest success rate, indicating that AV enhances precision in tasks requiring visual feedback of small and intricate details.

Interestingly, the authors find that using all available cameras simultaneously does not lead to optimal performance. They hypothesize that adding more cameras can be detrimental if the additional views do not provide significant new information or if the control architecture is not capable of effectively managing the increased complexity. The results also indicate that a single, consistent optimal camera perspective during training data generation can improve learning by shrinking the solution space.

Conclusion

This paper presents a compelling case for the integration of AV into bimanual robotic manipulation systems. The AV-ALOHA system offers an intuitive and effective platform for collecting human demonstrations and training imitation learning policies. The experimental results demonstrate that AV can significantly improve performance in tasks where camera perspective is critical. However, the authors also acknowledge the challenges associated with AV, including the need for more sophisticated control architectures and the potential for increased data and training requirements. Because the AV-ALOHA hardware and software are open source, the system is well placed to support further research and development in this area.