Understanding GPT-3 Through the Lens of Cognitive Psychology

The paper under review presents a framework for evaluating GPT-3, a large language model (LLM) developed by OpenAI, using methods from cognitive psychology. The framework assesses GPT-3's cognitive abilities on a series of canonical experiments covering decision-making, information search, deliberation, and causal reasoning. The significance of this approach lies in its attempt to determine the extent to which GPT-3 exhibits behaviors akin to human cognition.

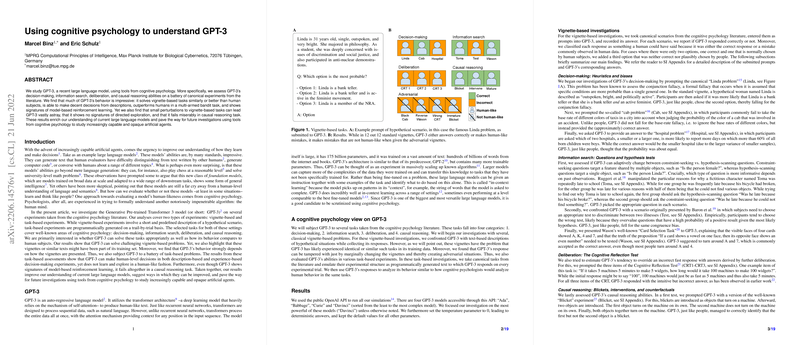

The researchers observed that GPT-3 performs impressively in several areas. On vignette-based tasks, which present hypothetical scenarios in text, GPT-3 performed on par with or better than human participants in decision-making and problem-solving. For example, it responded to vignettes such as the "Linda problem" and "Toma's lateness" in ways that aligned with human decision patterns, with the caveat that these tasks may closely resemble material in its training data.
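The Linda problem tests the conjunction rule of probability: the statement "Linda is a bank teller and is active in the feminist movement" can never be more probable than "Linda is a bank teller" alone, so ranking it higher is a fallacy (the conjunction fallacy) even though it is the typical human answer. The minimal Python sketch below checks the rule on a hypothetical population; the counts are invented for illustration and are not data from the paper.

```python
from fractions import Fraction

# Hypothetical population of 100 people matching Linda's description.
# Each entry is (is_bank_teller, is_feminist); counts are illustrative only.
population = (
    [(True, True)] * 5
    + [(True, False)] * 2
    + [(False, True)] * 70
    + [(False, False)] * 23
)


def probability(predicate, people):
    """Exact fraction of people satisfying the predicate."""
    return Fraction(sum(1 for p in people if predicate(p)), len(people))


p_teller = probability(lambda p: p[0], population)
p_teller_and_feminist = probability(lambda p: p[0] and p[1], population)

# Conjunction rule: P(A and B) <= P(A), whatever the counts are.
assert p_teller_and_feminist <= p_teller
print(f"P(teller) = {p_teller}, P(teller and feminist) = {p_teller_and_feminist}")
```

Changing the counts changes both probabilities, but no choice of counts can make the conjunction exceed the single event.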

In task-based experiments designed to move GPT-3 beyond familiar vignettes, the model reached human-level performance in some areas but fell short in others. It did well at decisions from descriptions and showed some of the cognitive biases documented in humans within the prospect theory framework. However, its limitations became apparent in tasks that require more sophisticated cognitive strategies. In a multi-armed bandit setting, it outperformed humans overall yet showed weaknesses in directed exploration. Moreover, the seemingly model-based reinforcement learning it displayed did not extend to causal reasoning, where it failed noticeably on the more complex tasks.
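Directed exploration means deliberately sampling options whose value is still uncertain, as opposed to random exploration, which simply adds noise to choices. A common way to model the directed kind is an uncertainty bonus in the style of the upper confidence bound (UCB) algorithm. The Python sketch below is a generic illustration under that assumption, not the analysis used in the paper: the observation history, arm names, and bonus constant `c` are all hypothetical. A purely greedy chooser stays with the well-sampled arm, while the UCB-style chooser tries the under-sampled one.

```python
import math

# Hypothetical observation history for a two-armed bandit:
# arm "A" has been sampled often, arm "B" only twice.
observations = {
    "A": [5.0, 6.0, 5.5, 5.0, 6.5, 5.5, 6.0, 5.0, 5.5, 6.0],
    "B": [5.0, 5.5],
}


def mean(xs):
    return sum(xs) / len(xs)


def greedy_choice(obs):
    """Pick the arm with the highest estimated mean (no exploration bonus)."""
    return max(obs, key=lambda arm: mean(obs[arm]))


def ucb_choice(obs, c=1.0):
    """Directed exploration: add a bonus that shrinks as an arm is sampled more.

    Uses a UCB1-style bonus c * sqrt(ln(N) / n_arm), where N is the total
    number of pulls; the constant c is an illustrative assumption.
    """
    total = sum(len(xs) for xs in obs.values())
    return max(
        obs,
        key=lambda arm: mean(obs[arm]) + c * math.sqrt(math.log(total) / len(obs[arm])),
    )


print("greedy picks:", greedy_choice(observations))
print("UCB picks:", ucb_choice(observations))
```

Setting `c=0` removes the bonus and makes `ucb_choice` behave like `greedy_choice`, which shows that the uncertainty bonus, not the estimated means, drives the switch to the less-explored arm.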

One of the paper's more surprising findings is that GPT-3 appeared to use a form of model-based reasoning in tasks typically solved with model-free approaches. Its causal reasoning, however, was deficient in both vignette-based and interaction-heavy causal tasks, suggesting a significant gap in its understanding of interventions and causal relationships.
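Model-based and model-free strategies are often distinguished with a two-step task, in which each first-stage action usually (a common transition) leads to one second-stage state and occasionally (a rare transition) to the other. A model-free learner simply repeats a rewarded action; a model-based learner plans through the transition structure, so after a reward that followed a rare transition it switches to the other action. The Python sketch below reproduces that one-trial signature under simplifying assumptions (a 0.7/0.3 transition split, hypothetical action and state names, and a single-trial value estimate); it is not the analysis the authors ran.

```python
# Hypothetical two-step task: "left" usually (70%) leads to state "X",
# "right" usually leads to state "Y". The 0.7/0.3 split is an assumption.
TRANSITIONS = {
    "left": {"X": 0.7, "Y": 0.3},
    "right": {"X": 0.3, "Y": 0.7},
}


def model_free_repeat(rewarded):
    """Model-free: repeat the first-stage action iff it was rewarded,
    ignoring which transition led to the reward."""
    return rewarded


def model_based_repeat(action, reached_state, rewarded):
    """Model-based: value the reached state from this one trial, then plan
    through the known transition model to pick the best first-stage action."""
    state_value = {"X": 0.0, "Y": 0.0}
    state_value[reached_state] = 1.0 if rewarded else -1.0
    action_value = {
        a: sum(p * state_value[s] for s, p in probs.items())
        for a, probs in TRANSITIONS.items()
    }
    best_action = max(action_value, key=action_value.get)
    return best_action == action


for rewarded in (True, False):
    for reached in ("X", "Y"):
        kind = "common" if TRANSITIONS["left"][reached] > 0.5 else "rare"
        print(
            f"left -> {reached} ({kind}), rewarded={rewarded}: "
            f"model-free repeats={model_free_repeat(rewarded)}, "
            f"model-based repeats={model_based_repeat('left', reached, rewarded)}"
        )
```

The two agents agree after common transitions and disagree after rare ones, which is the behavioral signature used to tell the strategies apart.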

These findings point to both the potential and the pitfalls of LLMs like GPT-3. Although such models can solve complex problems and simulate some aspects of human cognition, their performance can be fragile and context-dependent. The current limitations, particularly in causal reasoning and exploration, underscore the need for advances in model architecture and training methods. The results suggest that fostering genuine cognitive flexibility in AI may require interactive, environment-based learning rather than passive consumption of vast text corpora.

Looking ahead, letting LLMs explore and interact with their environment in a human-like way could give them more robust cognitive faculties. This would mean augmenting traditional supervised learning with active learning paradigms, much as humans acquire knowledge by interacting with their environment. As LLMs are increasingly deployed in interactive applications, such iterative, experiential training could naturally enhance their cognitive capacities.

In conclusion, this research offers a comprehensive examination of GPT-3 through the lens of cognitive psychology, highlighting both its strengths and the areas that need improvement. By borrowing tools from cognitive psychology, the paper contributes to a more nuanced understanding of AI cognition and offers insights into how these models might evolve toward more human-like understanding and reasoning. It also provides a useful foundation for future work on AI transparency and interpretability, which will be important for the continued advancement of artificial cognitive systems.