Overview of the Paper

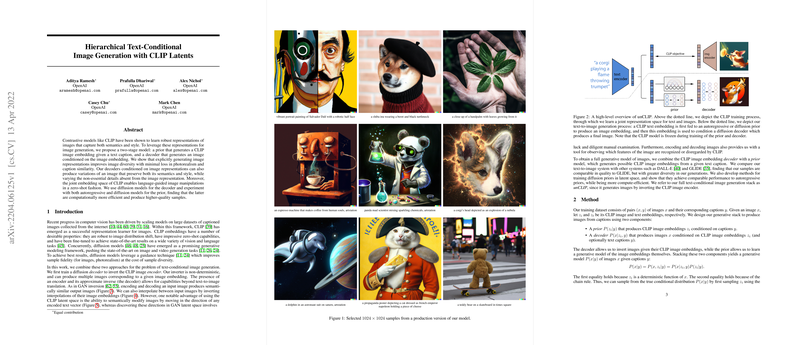

In a paper by researchers at OpenAI, a new model is presented that generates images from textual descriptions by combining CLIP embeddings with diffusion models. The authors report that explicitly generating an image representation improves image diversity with minimal loss in photorealism and caption similarity. The approach also makes it possible to produce variations of an image that preserve its core semantic content and style while varying non-essential details that the representation does not capture.

New Method Proposed

The proposed model has two stages: a prior that generates a CLIP image embedding from a text caption, and a decoder that generates the final image conditioned on that embedding. Roughly, the prior determines what to generate, and the decoder determines how to render it visually. The decoder is a diffusion model; for the prior, the authors compare an autoregressive model with a diffusion model. Because the decoder is trained to invert the CLIP image encoder and is non-deterministic, a single embedding can be decoded into multiple images that share its semantic content. Working in CLIP's joint text-image embedding space also makes it possible to interpolate between images and to manipulate images according to text prompts in a zero-shot fashion, that is, without training the model specifically for these editing tasks.
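The split between prior and decoder can be stated as a factorization of the distribution over images given a caption. Using the paper's notation as we understand it (treat the symbol choices as our labelling), x is an image, y its caption, and z_i the CLIP image embedding of x; the prior models P(z_i | y) and the decoder models P(x | z_i, y):

```latex
P(x \mid y) = P(x, z_i \mid y) = P(x \mid z_i, y)\, P(z_i \mid y)
```

The first equality holds because z_i is a deterministic function of x (the output of the CLIP image encoder), so adding it to the left-hand side introduces no extra randomness. Sampling therefore proceeds in two steps: draw z_i from the prior, then draw x from the decoder.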

Experimental Findings

Comparisons with other systems, including DALL-E and GLIDE, indicate that the full model, which the authors call unCLIP, produces images of quality comparable to GLIDE with notably greater diversity. Comparing the two prior variants, the authors find that the diffusion prior is more compute-efficient than the autoregressive prior and produces higher-quality samples.

Implications and Limitations

The paper also explores image manipulations enabled by the model, such as creating variations of a given image, interpolating between two images, and editing an image according to a change in its text description. However, the authors acknowledge limitations: the model struggles with attribute binding (associating attributes such as colors with the correct objects) and with rendering coherent text within images, both of which point to areas for future improvement.
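The manipulations described above operate on CLIP embeddings before decoding. As we understand the paper, interpolation uses spherical interpolation (slerp) between two image embeddings, and a "text diff" edit rotates an image embedding toward the normalized difference between the text embedding of a new caption and that of a caption describing the current image. The Python below is a minimal, dependency-free sketch of that vector arithmetic under our own assumptions: embeddings are plain lists of floats, the function names (`slerp`, `text_diff`, `edit_embedding`) are hypothetical, and the decoder that would turn the resulting vectors into images is not shown.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale v to unit L2 norm."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


def slerp(a: Vector, b: Vector, theta: float) -> Vector:
    """Spherical linear interpolation between the directions of a and b.

    theta = 0 returns normalize(a); theta = 1 returns normalize(b).
    """
    a, b = normalize(a), normalize(b)
    # Clamp the dot product so rounding error cannot push it outside acos's domain.
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    omega = math.acos(dot)  # angle between the two unit vectors
    if omega < 1e-6:
        return a  # directions coincide, so every interpolant is (almost) the same point
    if math.pi - omega < 1e-6:
        raise ValueError("slerp is undefined for opposite vectors")
    s = math.sin(omega)
    wa = math.sin((1.0 - theta) * omega) / s
    wb = math.sin(theta * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def text_diff(z_t_new: Vector, z_t_orig: Vector) -> Vector:
    """Unit direction from the original caption's text embedding to the new caption's."""
    return normalize([x - y for x, y in zip(z_t_new, z_t_orig)])


def edit_embedding(z_i: Vector, z_t_new: Vector, z_t_orig: Vector, theta: float) -> Vector:
    """Rotate image embedding z_i toward the text-diff direction by fraction theta."""
    return slerp(z_i, text_diff(z_t_new, z_t_orig), theta)


if __name__ == "__main__":
    # Halfway between two orthogonal unit vectors lies on the 45-degree diagonal.
    print(slerp([1.0, 0.0], [0.0, 1.0], 0.5))
```

Slerp is used rather than linear interpolation because it keeps every intermediate vector at unit norm, which matches how CLIP embeddings are compared; sweeping `theta` from 0 to 1 and decoding each result would give the kind of gradual transition the paper illustrates.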

The researchers describe the model's architecture, training procedure, and training data in detail, and they discuss the risk that it could be used to generate deceptive content. As generated images become harder to distinguish from authentic ones, such risks raise ethical and safety concerns, so assessing and deploying models of this kind requires careful evaluation, safeguards, and ongoing monitoring of societal impacts.

Overall, the research offers a text-conditional approach to image synthesis that improves the trade-off between image diversity and fidelity, opening avenues for applications in digital art, design, and beyond.