An Overview of "Improved Lossy Image Compression with Priming and Spatially Adaptive Bit Rates for Recurrent Networks"

This paper presents a lossy image compression method based on recurrent convolutional neural networks (RNNs) that outperforms existing codecs such as BPG (4:2:0), WebP, JPEG2000, and JPEG, as measured by MS-SSIM. The authors introduce three enhancements to the standard recurrent architecture: a pixel-wise perceptually weighted loss, a modified recurrent architecture that improves spatial diffusion of information, and a spatially adaptive bit allocation algorithm. Each of the three contributes to the final model's performance.

Technical Contributions

- Perceptually Weighted Loss Function: Instead of training with a traditional loss such as L1 or L2 alone, the authors use a pixel-wise loss weighted by the Structural Similarity Index (SSIM). This lets a perceptual similarity metric guide training, which better matches the goal of reconstructions that look good to human viewers.

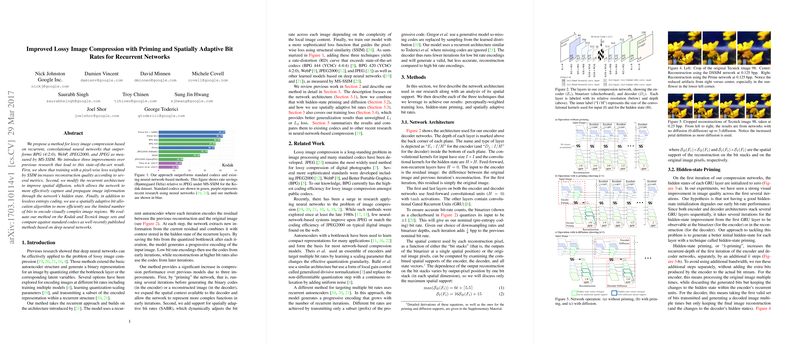

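- Improved Recurrent Architecture: The authors modify the recurrent architecture to improve spatial diffusion, so that image information propagates more effectively through the network's hidden state. The main mechanism is hidden-state priming, which runs additional recurrent steps so that each hidden-state location draws on a wider neighborhood of the image. This helps the network capture spatial relationships that extend beyond the reach of a single recurrent step.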

- Spatially Adaptive Bit Rates (SABR): Rather than spending the same number of bits on every region, the encoder allocates bits according to local image complexity: visually complex regions receive more bits and simple regions fewer. This is applied in addition to lossless entropy coding and makes better use of a limited bit budget, improving quality for a given average bit rate.
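The SSIM-weighted loss from the first bullet can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' formulation: it assumes the per-pixel weight is the local structural dissimilarity (1 - SSIM)/2 between original and reconstruction, rescaled to mean 1 and treated as a constant, and it uses a uniform 7x7 window instead of the Gaussian window of standard SSIM. The helper names (`box_mean`, `ssim_map`, `dssim_weights`, `ssim_weighted_l1`) are hypothetical.

```python
import numpy as np

def box_mean(x: np.ndarray, size: int = 7) -> np.ndarray:
    """Mean over a size x size window around each pixel (reflect padding)."""
    r = size // 2
    padded = np.pad(x, r, mode="reflect")
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for di in range(size):
        for dj in range(size):
            out += padded[di:di + h, dj:dj + w]
    return out / (size * size)

def ssim_map(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> np.ndarray:
    """Per-pixel SSIM with a uniform window (standard SSIM uses a Gaussian one)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = box_mean(x), box_mean(y)
    var_x = box_mean(x * x) - mu_x ** 2
    var_y = box_mean(y * y) - mu_y ** 2
    cov_xy = box_mean(x * y) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den

def dssim_weights(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Assumed weighting: per-pixel DSSIM = (1 - SSIM) / 2, rescaled to mean 1."""
    dssim = (1.0 - ssim_map(x, y)) / 2.0
    return dssim / (dssim.mean() + 1e-12)

def ssim_weighted_l1(original: np.ndarray, reconstruction: np.ndarray) -> float:
    """L1 error weighted per pixel; the weights are plain constants here."""
    w = dssim_weights(original, reconstruction)
    return float(np.mean(w * np.abs(original - reconstruction)))

rng = np.random.default_rng(0)
img = rng.random((16, 16))
noisy = np.clip(img + 0.1 * rng.standard_normal(img.shape), 0.0, 1.0)
print(ssim_weighted_l1(img, img))    # 0.0
print(ssim_weighted_l1(img, noisy))  # positive: errors are weighted by local DSSIM
```

In a training framework the same computation would be written with differentiable tensor operations; whether gradients should flow through the weights is a design choice this sketch does not settle.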
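The spatial-diffusion argument behind priming can be illustrated with a toy model. The sketch below is not the paper's recurrent cell: it assumes a hypothetical update in which each hidden-state cell averages its 3x3 neighborhood, standing in for a 3x3 convolution inside a recurrent unit. It shows that each extra recurrent step widens the region a single location can influence by one cell on each side, which is why running additional steps before decoding spreads information further.

```python
import numpy as np

def spread_step(h: np.ndarray) -> np.ndarray:
    """One toy recurrent update: each cell averages its 3x3 neighborhood.

    Hypothetical stand-in for a 3x3 convolution in a recurrent cell."""
    rows, cols = h.shape
    p = np.pad(h, 1)  # zero padding at the borders
    out = np.zeros_like(h)
    for di in range(3):
        for dj in range(3):
            out += p[di:di + rows, dj:dj + cols]
    return out / 9.0

def primed_state(h0: np.ndarray, k: int) -> np.ndarray:
    """Run k extra recurrent steps on the hidden state (toy 'k-priming')."""
    h = h0
    for _ in range(k):
        h = spread_step(h)
    return h

def support_width(h: np.ndarray) -> int:
    """Width of the bounding box of cells that received any information."""
    cols = np.nonzero(h.any(axis=0))[0]
    return int(cols.max() - cols.min() + 1)

h0 = np.zeros((15, 15))
h0[7, 7] = 1.0  # information initially present at a single location
for k in (0, 1, 3):
    print(k, support_width(primed_state(h0, k)))  # widths 1, 3, 7
```

In a real network the update is learned and nonlinear, but the growth of the receptive field with the number of steps follows the same pattern.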
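Spatially adaptive allocation fits naturally with an iterative codec, since each additional iteration adds bits. The sketch below assumes, for illustration only, that each tile's distortion after every iteration is already known and that every iteration costs the same number of bits per tile; it picks, per tile, the fewest iterations that meet a target distortion. The paper's actual quality criterion, tile size, and bit accounting may differ; `allocate_iterations`, the error values, and `bits_per_iteration` are hypothetical.

```python
import numpy as np

def allocate_iterations(tile_errors: np.ndarray, target: float) -> np.ndarray:
    """Pick, for each tile, the fewest iterations whose distortion meets the target.

    tile_errors[t, i] is the (hypothetical) distortion of tile t after i + 1
    iterations. Tiles that never reach the target get every available iteration.
    """
    max_iters = tile_errors.shape[1]
    meets = tile_errors <= target
    counts = np.argmax(meets, axis=1) + 1   # argmax finds the first True per row
    counts[~meets.any(axis=1)] = max_iters  # argmax returns 0 when no entry is True
    return counts

# Made-up distortions for three tiles after 1..4 iterations.
tile_errors = np.array([
    [0.020, 0.010, 0.005, 0.004],  # flat region (e.g. sky)
    [0.100, 0.060, 0.030, 0.015],  # textured region
    [0.200, 0.150, 0.110, 0.080],  # highly detailed region
])
bits_per_iteration = 128  # hypothetical fixed cost per tile per iteration

counts = allocate_iterations(tile_errors, target=0.02)
bits = counts * bits_per_iteration
print(counts)       # [1 4 4]
print(bits.mean())  # 384.0
```

Giving every tile four iterations would cost 512 bits per tile; here the flat tile stops after one iteration, lowering the average to 384 bits while the complex tiles keep the full budget.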

Evaluation and Results

The authors evaluate their method on the Kodak and Tecnick datasets, comparing it against standard codecs and contemporary neural-network-based codecs. Measured by MS-SSIM, the proposed model outperforms these codecs across a range of bit rates. According to the authors, priming and diffusion significantly improve performance without incurring prohibitive computational cost during training or inference.

The paper compares several architectures, including baseline models trained with a conventional pixel-wise loss and models trained with the DSSIM loss (structural dissimilarity, commonly defined as (1 - SSIM)/2). The DSSIM-trained models achieve a larger area under the curve (AUC) of quality versus bit rate for multiple quality metrics on both test sets. The best model, which uses 3-priming and is trained with the DSSIM loss, achieves appreciable compression-efficiency gains over the other methods, especially at low bit rates.
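An AUC comparison of rate-distortion curves can be sketched as follows. This assumes quality is interpolated linearly onto a common bit-rate grid and integrated with the trapezoidal rule over a chosen range; the range and interpolation scheme the paper uses are not given here, so `rd_auc`, its parameters, and the example curves are hypothetical. A larger area means better quality on average across the bit rates considered.

```python
import numpy as np

def rd_auc(bpp, quality, lo: float, hi: float, n: int = 201) -> float:
    """Area under a quality-vs-bit-rate curve between lo and hi bits per pixel.

    Linear interpolation onto a common grid, then the trapezoidal rule.
    np.interp holds the end values constant outside the measured range."""
    bpp = np.asarray(bpp, dtype=float)
    quality = np.asarray(quality, dtype=float)
    order = np.argsort(bpp)  # np.interp needs increasing x values
    grid = np.linspace(lo, hi, n)
    q = np.interp(grid, bpp[order], quality[order])
    return float(np.sum((q[1:] + q[:-1]) / 2.0 * np.diff(grid)))

# Made-up MS-SSIM curves for two codecs at the same bit rates.
bpp = [0.25, 0.5, 1.0, 2.0]
codec_a = [0.90, 0.95, 0.98, 0.99]
codec_b = [0.85, 0.92, 0.96, 0.98]
print(rd_auc(bpp, codec_a, 0.25, 2.0) > rd_auc(bpp, codec_b, 0.25, 2.0))  # True
```

Because codecs are measured at different bit rates, interpolating onto a shared grid lets their areas be compared over the same range.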

Implications and Future Directions

This research extends the practical capabilities of image compression, particularly for applications where both quality and efficiency matter. Together, the architectural changes, the perceptually informed training loss, and adaptive bit allocation make neural-network-based image compression a competitive alternative to traditional codecs such as JPEG and BPG.

Looking forward, this approach may prompt further examination of recurrent networks for other data compression needs, potentially inspiring hybrid models that bring together the strengths of RNNs and other neural architectures. Future developments could explore deeper integrations of adaptive mechanisms into other aspects of neural image processing, optimizing networks for low-complexity tasks or deploying more sophisticated models on devices where computational resources are limited. The methodology for aligning perceptual similarity with computational efficiency will likely see broader applications within and beyond image compression.