- The paper presents a two-phase deep learning pipeline combining semantic segmentation and Fisher Vector encoding to achieve fine-grained UAV imagery damage assessment.

- It utilizes pre-trained networks like VGG-M and DeepLab with SVM classifiers to overcome challenges such as noisy, imbalanced data and varying object scales.

- Evaluation on diverse datasets, including transfer learning on Philippines data, demonstrates the method's high precision and robustness for disaster response.

Nazr-CNN: Fine-Grained Classification of UAV Imagery for Damage Assessment

Introduction

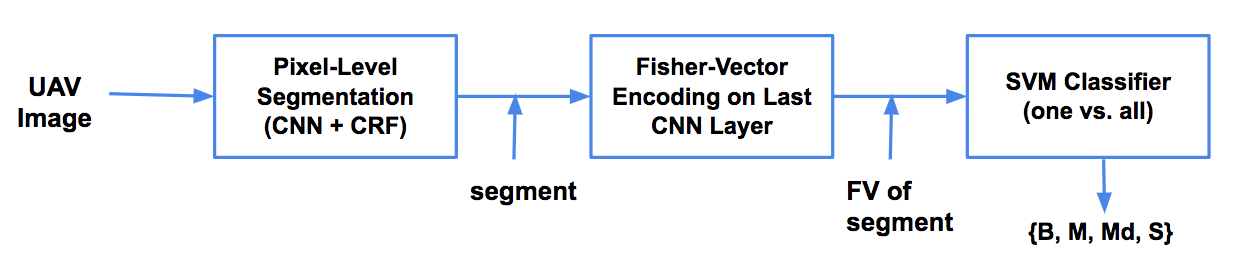

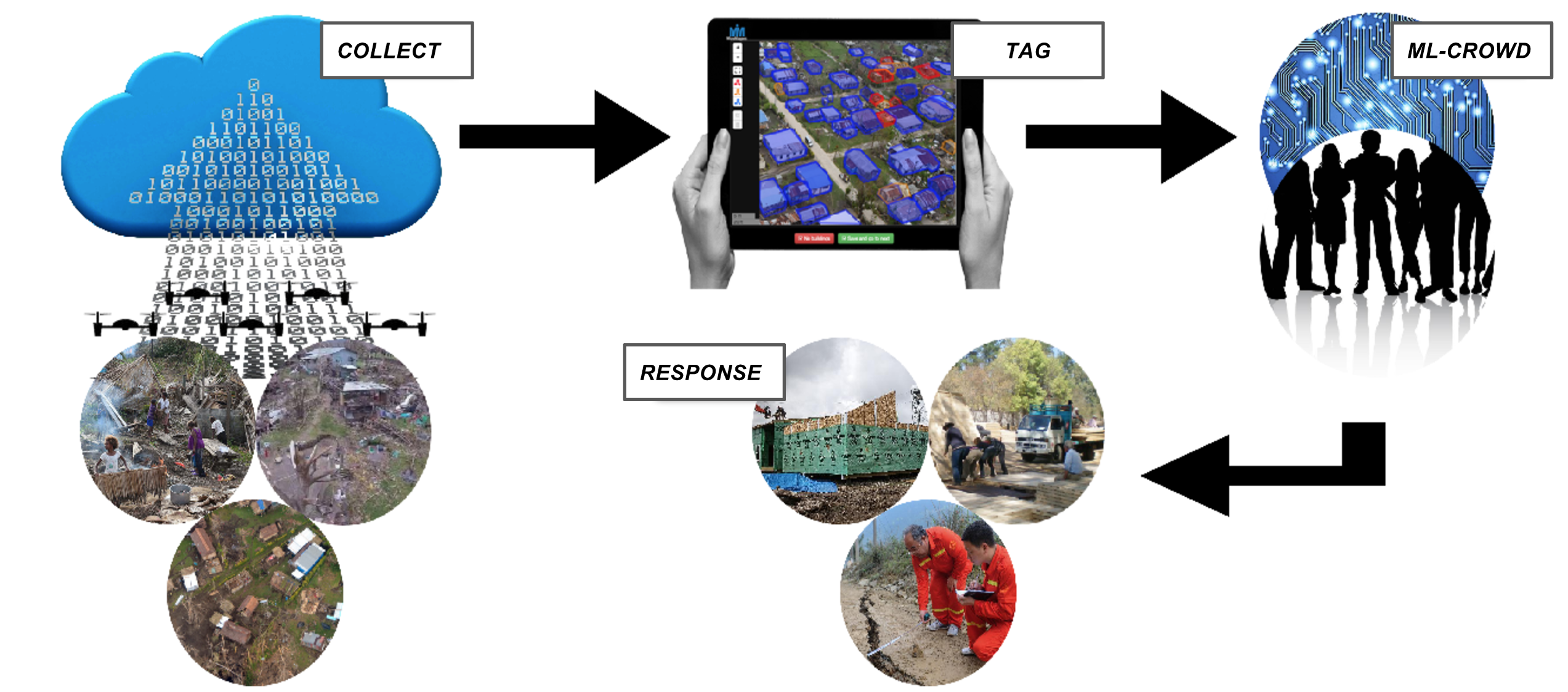

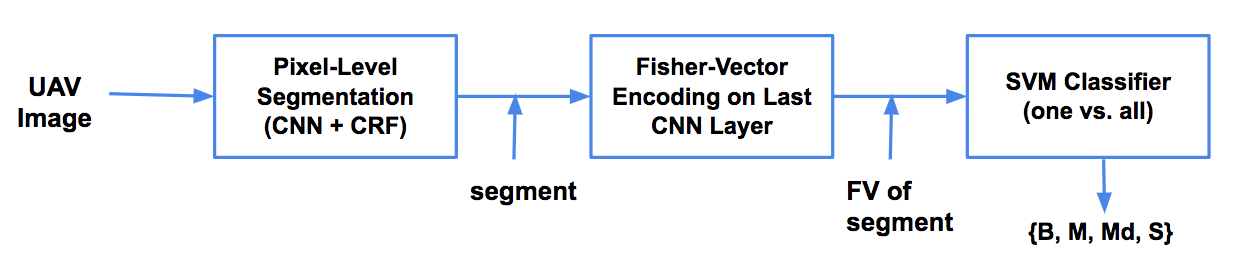

Nazr-CNN is a deep learning pipeline designed for object detection and fine-grained classification of imagery acquired from Unmanned Aerial Vehicles (UAVs) in disaster-struck regions. The pipeline has two main components: pixel-level classification for object localization, and Fisher Vector (FV) encoding of the features from a hidden layer of a Convolutional Neural Network (CNN) for texture discrimination among damage levels. This is particularly useful in post-disaster scenarios, where rapid damage assessment is critical for effective response and resource allocation.

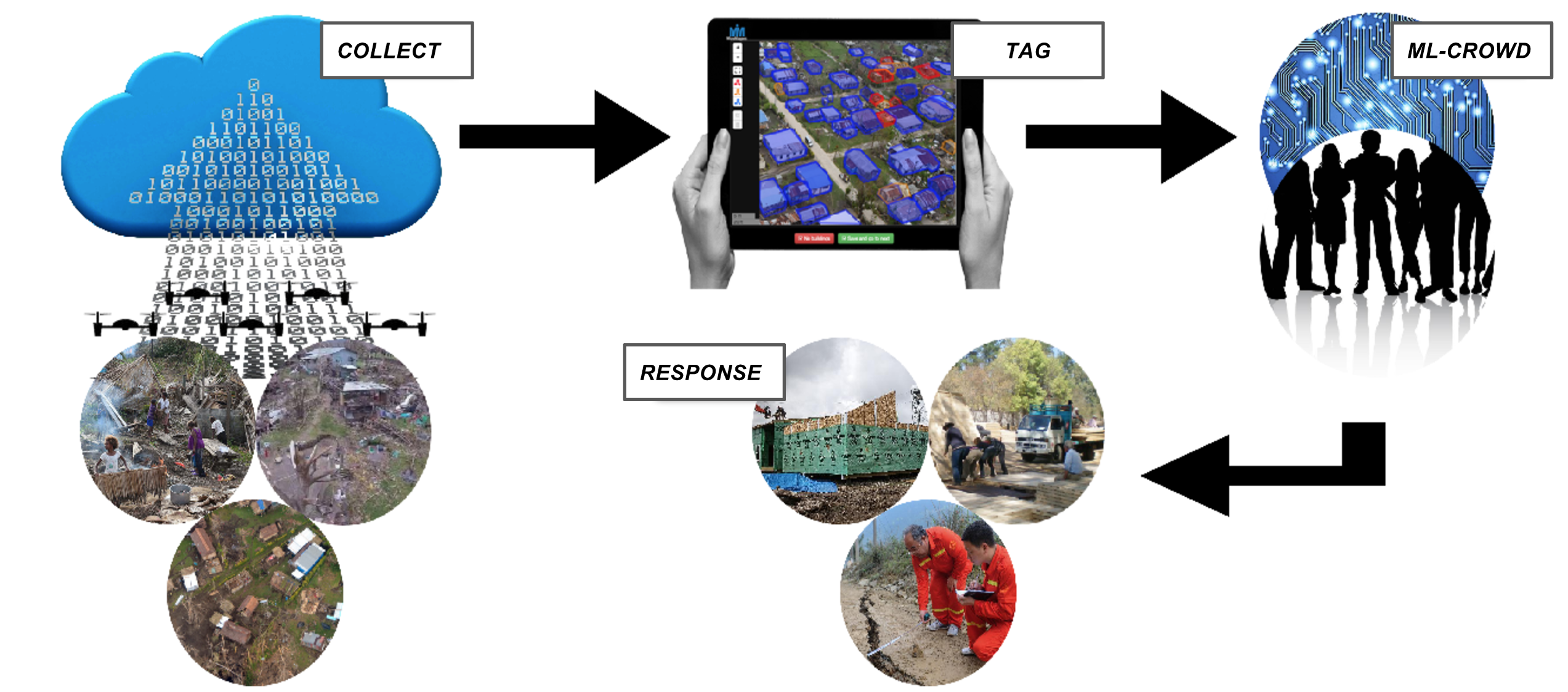

Figure 1: UAV image acquisition and annotation workflow implemented in MicroMappers for this paper, following the approach used for text classification by AIDR.

Problem Definition and Constraints

The problem addressed is the automatic classification of UAV-sourced aerial images into damage categories. The constraints are threefold: handling heterogeneous backgrounds and variable object scales caused by varying UAV altitudes, designing classifiers that are robust to noisy and limited data, and coping with annotation discrepancies. The goal is precise object detection in which each structure is classified into one of four categories: Mild, Medium, Severe, or Background.

Deep Learning Framework

Pre-Trained Networks

Because labeled data is limited, the pipeline relies on pre-trained networks such as VGG-M, using their learned weights as the initialization for feature extraction. This keeps the approach computationally efficient while reusing the strengths of established networks for aerial imagery.

Semantic Segmentation

The first phase of the pipeline uses DeepLab, which combines CNNs with Conditional Random Fields (CRFs) to improve segmentation accuracy by refining boundaries and suppressing noise. DeepLab uses a fully connected CRF to recover local shape detail rather than merely smoothing the image.

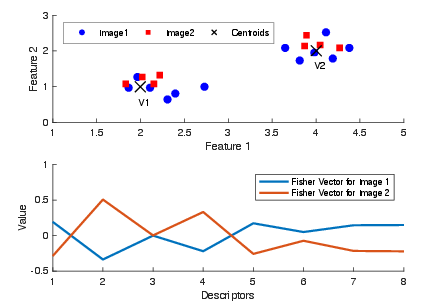

Fisher Vector Encoding

Fisher Vectors extend the traditional Bag-of-Visual-Words model by modelling CNN-extracted features with a Gaussian Mixture Model, which allows detailed texture-based classification. This encoding is what lets the pipeline discriminate between damage levels.

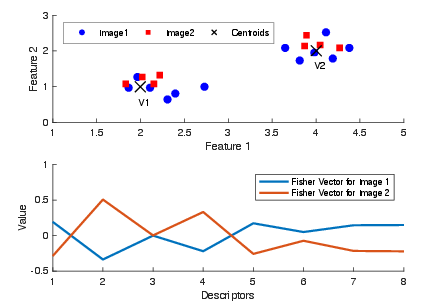

Figure 2: Bag of Visual Words (BoV) vs. Fisher Vector (FV) representation.
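
To make the encoding concrete, the following is a minimal NumPy sketch of a Fisher Vector computed from a set of local descriptors under a diagonal-covariance Gaussian Mixture Model. It is an illustration under stated assumptions, not the paper's implementation: the function name `fisher_vector`, the toy GMM parameters, and the use of power and L2 normalization (the common "improved" Fisher Vector variant) are all assumptions, and in practice the descriptors would come from a CNN layer and the GMM would be fitted on training data.

```python
import numpy as np

def fisher_vector(X, weights, means, variances):
    """Fisher Vector of descriptors X (N, D) under a diagonal GMM.

    weights: (K,), means: (K, D), variances: (K, D).
    Returns a vector of length 2 * K * D (mean and variance gradients).
    """
    N, _ = X.shape
    diff = X[:, None, :] - means[None, :, :]               # (N, K, D)

    # Log-density of every descriptor under every Gaussian component.
    log_prob = -0.5 * (np.sum(diff ** 2 / variances, axis=2)
                       + np.sum(np.log(2 * np.pi * variances), axis=1))
    log_post = np.log(weights) + log_prob                  # (N, K)

    # Soft assignments (posteriors), computed in a numerically stable way.
    log_post -= log_post.max(axis=1, keepdims=True)
    gamma = np.exp(log_post)
    gamma /= gamma.sum(axis=1, keepdims=True)

    # Gradients with respect to the means and the standard deviations.
    z = diff / np.sqrt(variances)                          # (N, K, D)
    g_mu = np.einsum("nk,nkd->kd", gamma, z)
    g_mu /= N * np.sqrt(weights)[:, None]
    g_sigma = np.einsum("nk,nkd->kd", gamma, z ** 2 - 1.0)
    g_sigma /= N * np.sqrt(2.0 * weights)[:, None]

    fv = np.concatenate([g_mu.ravel(), g_sigma.ravel()])

    # Assumed post-processing: signed square root, then L2 normalization.
    fv = np.sign(fv) * np.sqrt(np.abs(fv))
    norm = np.linalg.norm(fv)
    return fv / norm if norm > 0 else fv

# Toy usage: 50 random 4-dimensional descriptors, 2 hypothetical components.
rng = np.random.default_rng(0)
descriptors = rng.normal(size=(50, 4))
toy_weights = np.array([0.5, 0.5])
toy_means = np.array([[0.0] * 4, [1.0] * 4])
toy_variances = np.ones((2, 4))
encoding = fisher_vector(descriptors, toy_weights, toy_means, toy_variances)
```

Unlike a Bag-of-Visual-Words histogram, which would have one entry per component, the encoding here has `2 * K * D` entries because it records how the descriptors deviate from each component's mean and variance.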

Proposed Pipeline

The proposed pipeline is a two-phase process: semantic segmentation for localization, followed by FV-encoded CNN features for classification, with an SVM classifying the extracted segments. Combining the two phases lets the pipeline exploit both geometry and texture for damage assessment.

Figure 3: Nazr-CNN combines pixel-level classification with FV-CNN. A multi-class SVM is then trained on the Fisher Vectors.
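
The hand-off between the two phases can be sketched as follows. This is a hypothetical illustration, not the paper's code: it assumes the segmentation phase yields an integer label mask and that segments are taken as 4-connected regions of equal non-background label, whose bounding boxes would then be cropped, FV-encoded, and passed to the SVM. The function name `extract_segments` and the toy mask are invented for the example.

```python
from collections import deque

import numpy as np

def extract_segments(mask, background=0):
    """Return (label, (row_min, col_min, row_max, col_max)) for each
    4-connected region of equal non-background label in a 2-D mask."""
    height, width = mask.shape
    seen = np.zeros((height, width), dtype=bool)
    segments = []
    for r in range(height):
        for c in range(width):
            if seen[r, c] or mask[r, c] == background:
                continue
            label = mask[r, c]
            queue = deque([(r, c)])
            seen[r, c] = True
            r0, c0, r1, c1 = r, c, r, c
            # Breadth-first flood fill over pixels sharing the same label.
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and not seen[ny, nx] and mask[ny, nx] == label:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            segments.append((int(label), (r0, c0, r1, c1)))
    return segments

# Toy mask: 0 = Background, 1 and 2 stand in for two damage labels.
toy_mask = np.array([
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 2, 2],
])
toy_segments = extract_segments(toy_mask)
```

Each returned bounding box marks a region whose pixels would be cropped from the original image for texture classification in the second phase.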

Experiments and Evaluation

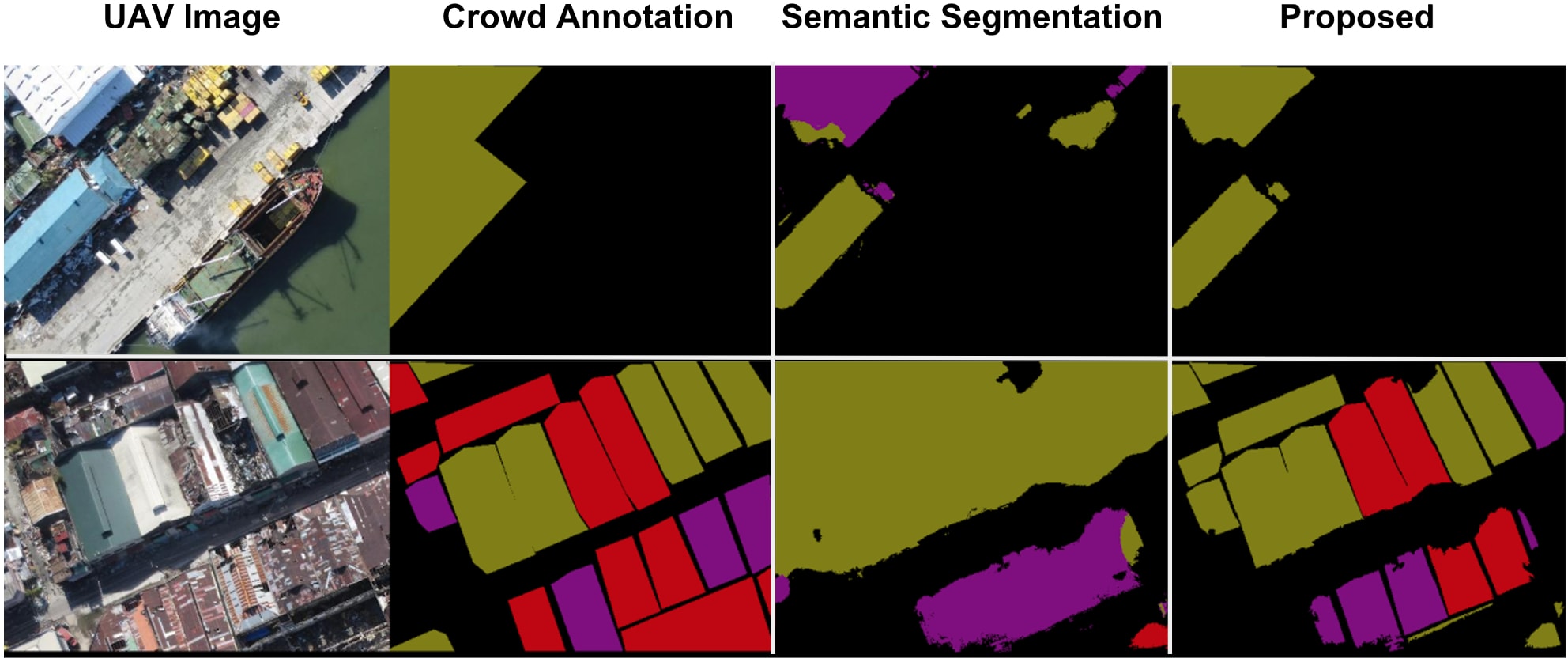

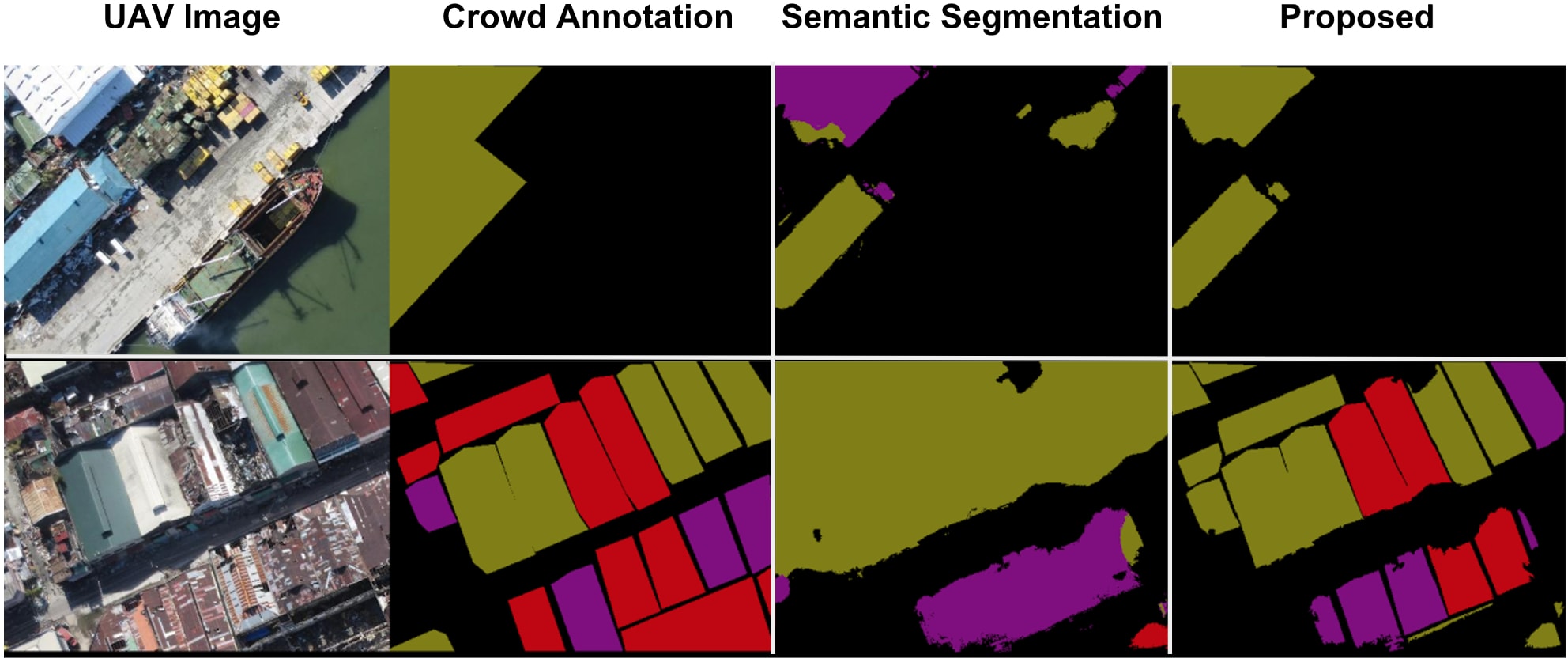

Semantic Segmentation Results

DeepLab localizes objects well but requires class weighting to remain accurate across the imbalanced damage categories. With class weighting, both object detection and damage categorization accuracy improved significantly.
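
As an illustration of class weighting, the sketch below derives inverse-frequency weights from a label array and applies them in a weighted cross-entropy loss. The source does not state which weighting scheme was used, so the inverse-frequency formula, the function names, and the toy labels are assumptions chosen only to show the idea.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by 1 / (num_classes * frequency).

    Perfectly balanced classes all receive weight 1; rare classes receive
    larger weights. Classes absent from `labels` receive weight 0.
    """
    counts = np.bincount(labels.ravel(), minlength=num_classes).astype(float)
    freq = counts / counts.sum()
    weights = np.zeros(num_classes)
    present = counts > 0
    weights[present] = 1.0 / (num_classes * freq[present])
    return weights

def weighted_cross_entropy(probs, labels, weights):
    """Mean cross-entropy in which each sample is scaled by its class weight.

    probs: (N, C) predicted class probabilities, labels: (N,) integer classes.
    """
    picked = probs[np.arange(labels.size), labels]
    sample_weights = weights[labels]
    losses = -sample_weights * np.log(picked + 1e-12)
    return float(losses.sum() / sample_weights.sum())

# Toy usage: class 0 is three times as frequent as class 1.
toy_labels = np.array([0, 0, 0, 0, 0, 0, 1, 1])
toy_class_weights = inverse_frequency_weights(toy_labels, num_classes=2)
```

With these toy labels the rarer class receives three times the weight of the frequent one, so errors on it contribute proportionally more to the loss.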

Integrated Pipeline Effectiveness

By combining semantic segmentation with FV-CNN, Nazr-CNN outperformed the baselines and proved robust to labeling errors and to the altitude variability inherent in UAV imagery. Precision and recall figures support its fine-grained classification across damage classes.
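
For reference, per-class precision and recall can be computed from a confusion matrix as in the sketch below. The labels are made-up toy values, not results from the paper, and the function names are illustrative.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns index the predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def precision_recall(cm):
    """Per-class precision and recall; undefined ratios are reported as 0."""
    true_pos = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)   # how often each class was predicted
    actual = cm.sum(axis=1)      # how often each class truly occurred
    precision = np.divide(true_pos, predicted,
                          out=np.zeros_like(true_pos), where=predicted > 0)
    recall = np.divide(true_pos, actual,
                       out=np.zeros_like(true_pos), where=actual > 0)
    return precision, recall

# Toy usage with three hypothetical classes.
toy_true = np.array([0, 0, 1, 1, 2])
toy_pred = np.array([0, 1, 1, 1, 2])
toy_cm = confusion_matrix(toy_true, toy_pred, num_classes=3)
toy_precision, toy_recall = precision_recall(toy_cm)
```

Reporting both values per class matters for imbalanced data, since a classifier can score high overall accuracy while missing most examples of a rare damage class.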

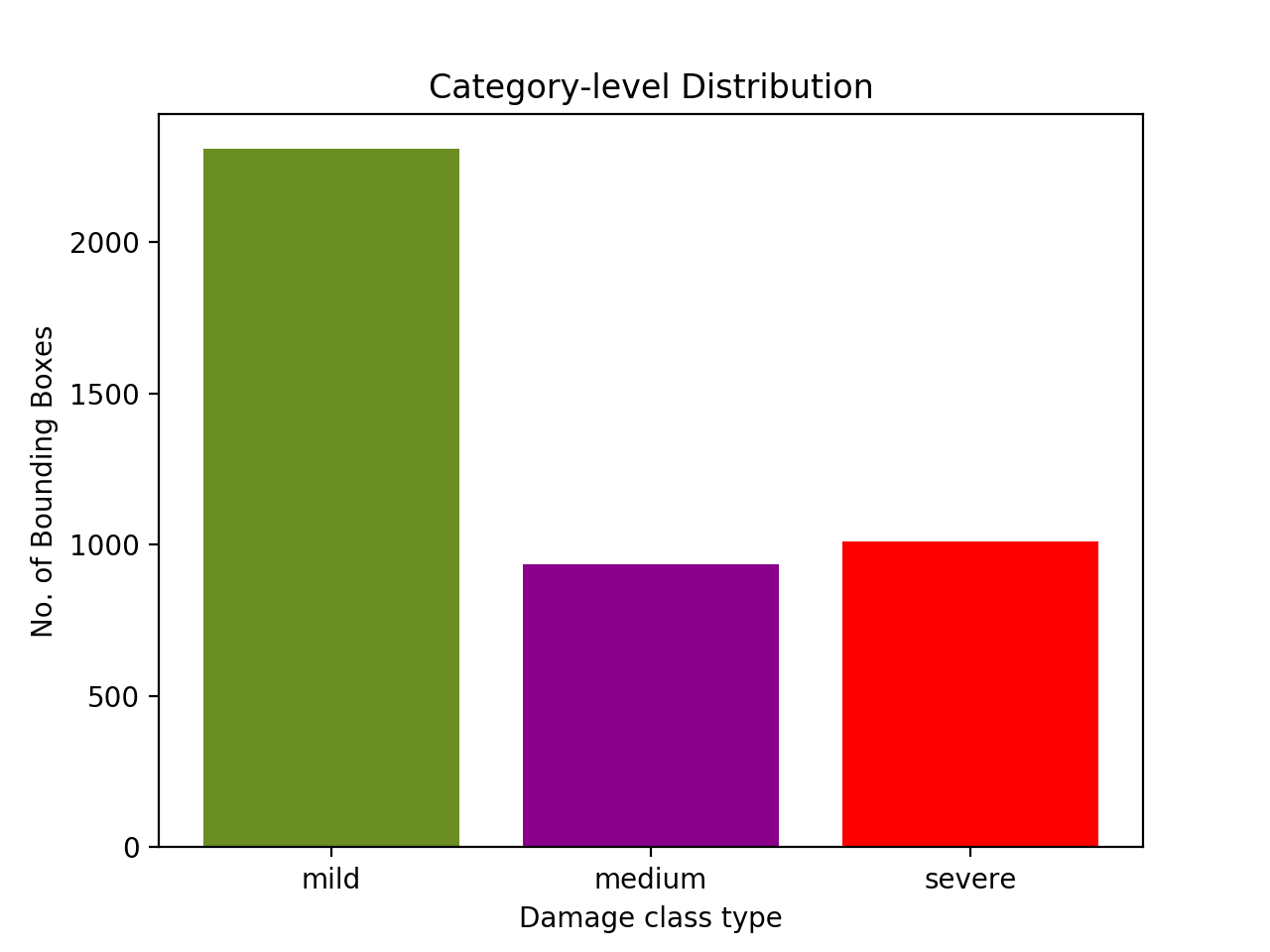

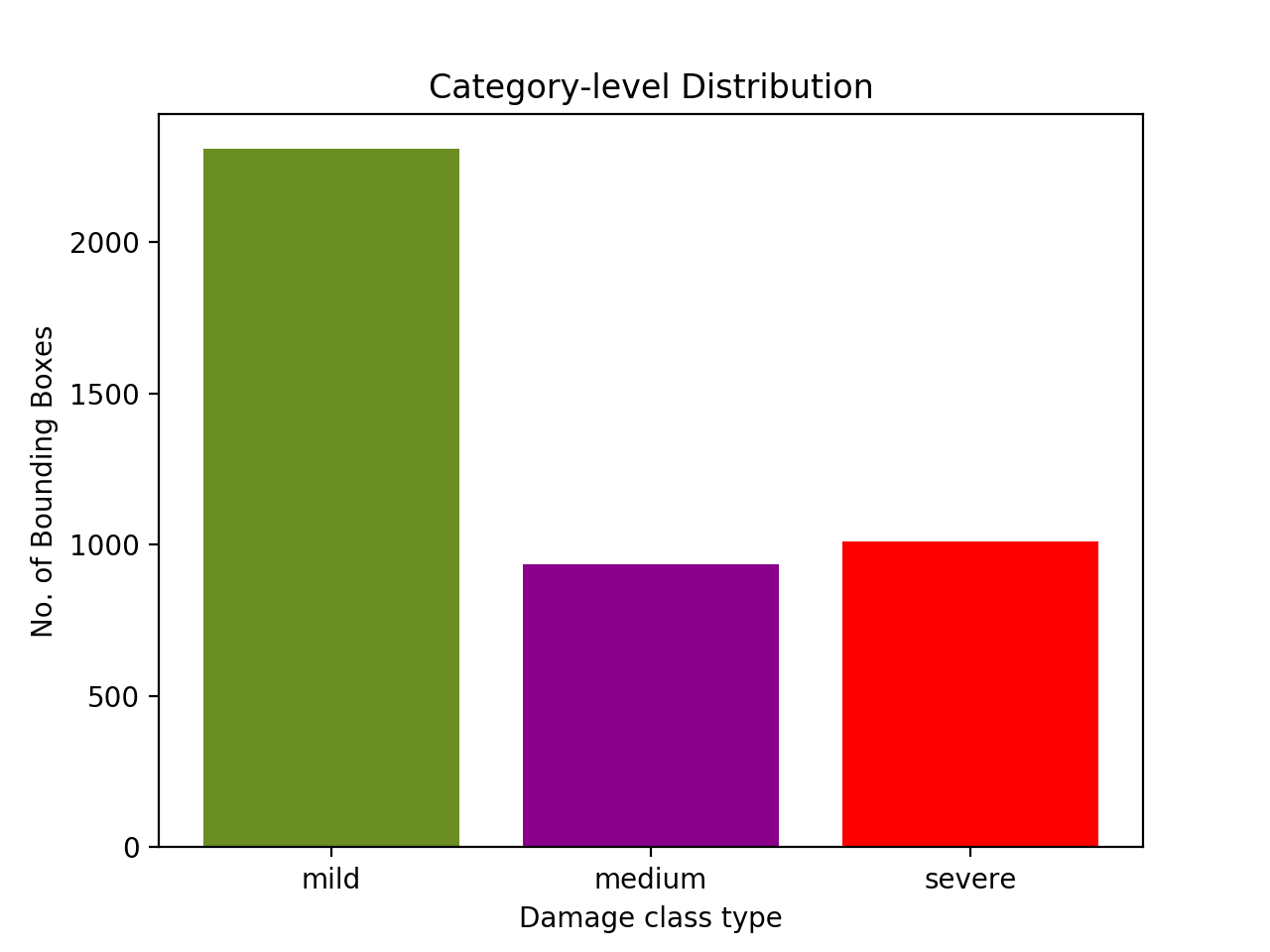

Figure 4: Distribution of damage classes among the 4253 bounding boxes in the 1085-image dataset.

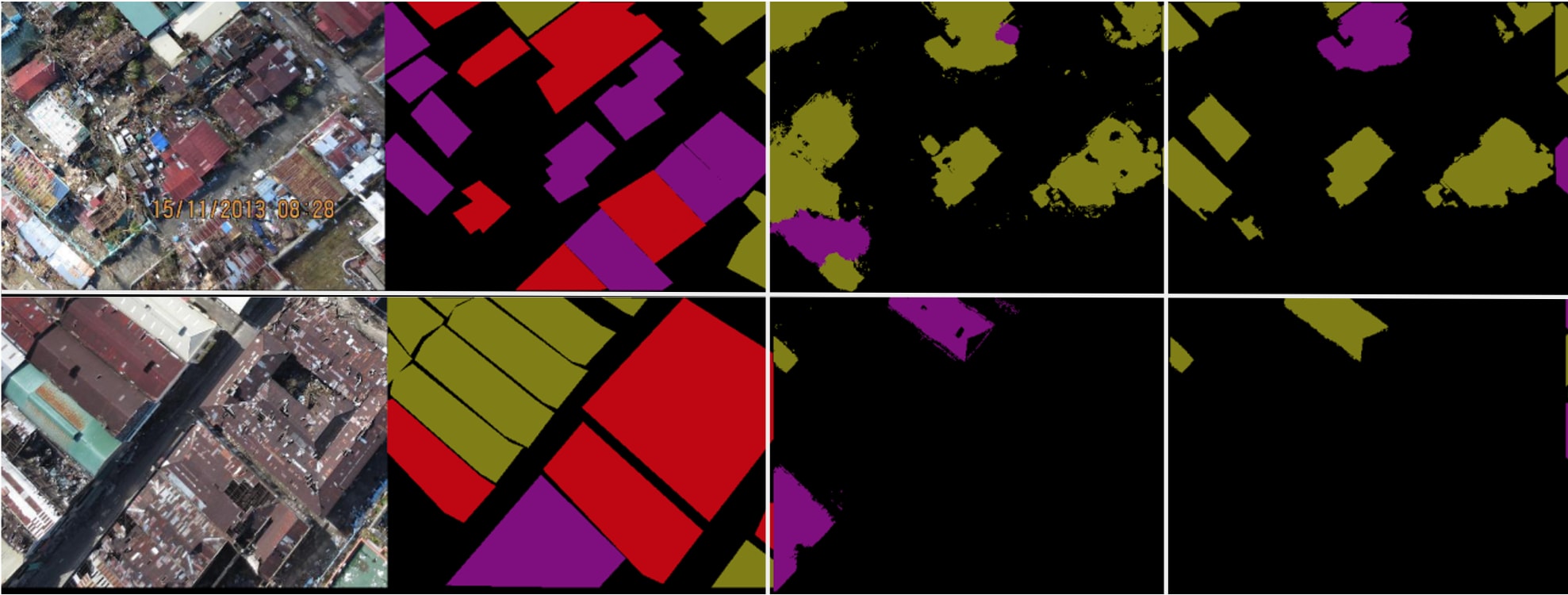

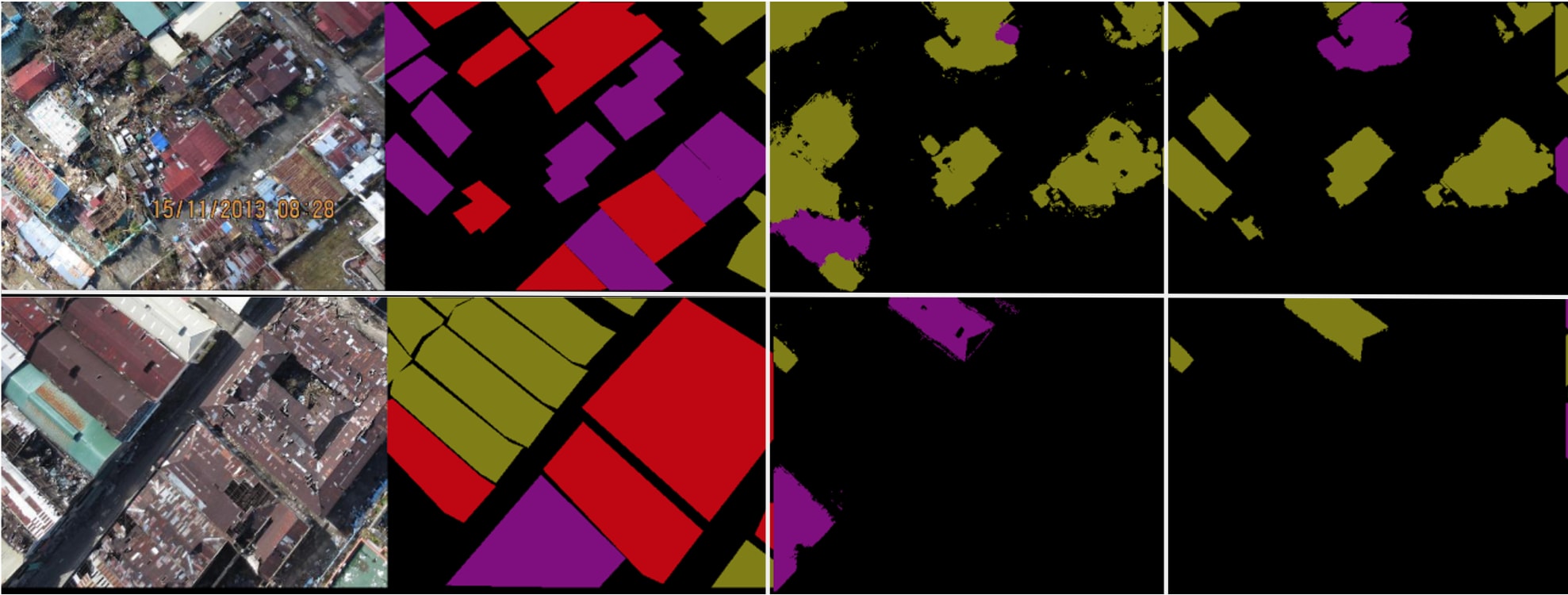

Transfer Learning and Generalization

Nazr-CNN also showed promising results when applied to a new, heterogeneous dataset from the Philippines, illustrating its ability to generalize beyond its training environment. This suggests the approach transfers to diverse disaster contexts, though fine-tuning for different textures and landscapes remains a challenge.

Figure 5: Transfer learning on Philippines data.

Conclusion

Nazr-CNN is a robust system for damage assessment from UAV imagery, applying deep learning to the challenges of disaster management. Future research directions include refining end-to-end segmentation techniques to further mitigate annotation noise and ambiguity, which should improve the system's performance and adaptability.