- The paper presents a novel framework that combines semantic depth alignment with multi-view geometry-guided supervision to improve 3D reconstruction from sparse views.

- The paper utilizes SfM-based semantic segmentation and monocular depth estimation to generate dense point clouds for accurate Gaussian splatting initialization.

- The paper demonstrates significant improvements in rendering fidelity and computational efficiency, achieving higher PSNR and consistent appearance over prior methods.

Multi-Appearance Sparse-View 3D Gaussian Splatting in the Wild: A Technical Analysis of MS-GS

Introduction

MS-GS introduces a robust framework for novel view synthesis and scene reconstruction from sparse, multi-appearance image collections using 3D Gaussian Splatting (3DGS). The method addresses two major challenges: (1) insufficient geometric support due to sparse Structure-from-Motion (SfM) point clouds, and (2) photometric inconsistencies arising from images captured under varying conditions. MS-GS leverages semantic depth alignment for dense point cloud initialization and multi-view geometry-guided supervision to regularize appearance and geometry, outperforming prior NeRF and 3DGS-based approaches in both quantitative and qualitative metrics.

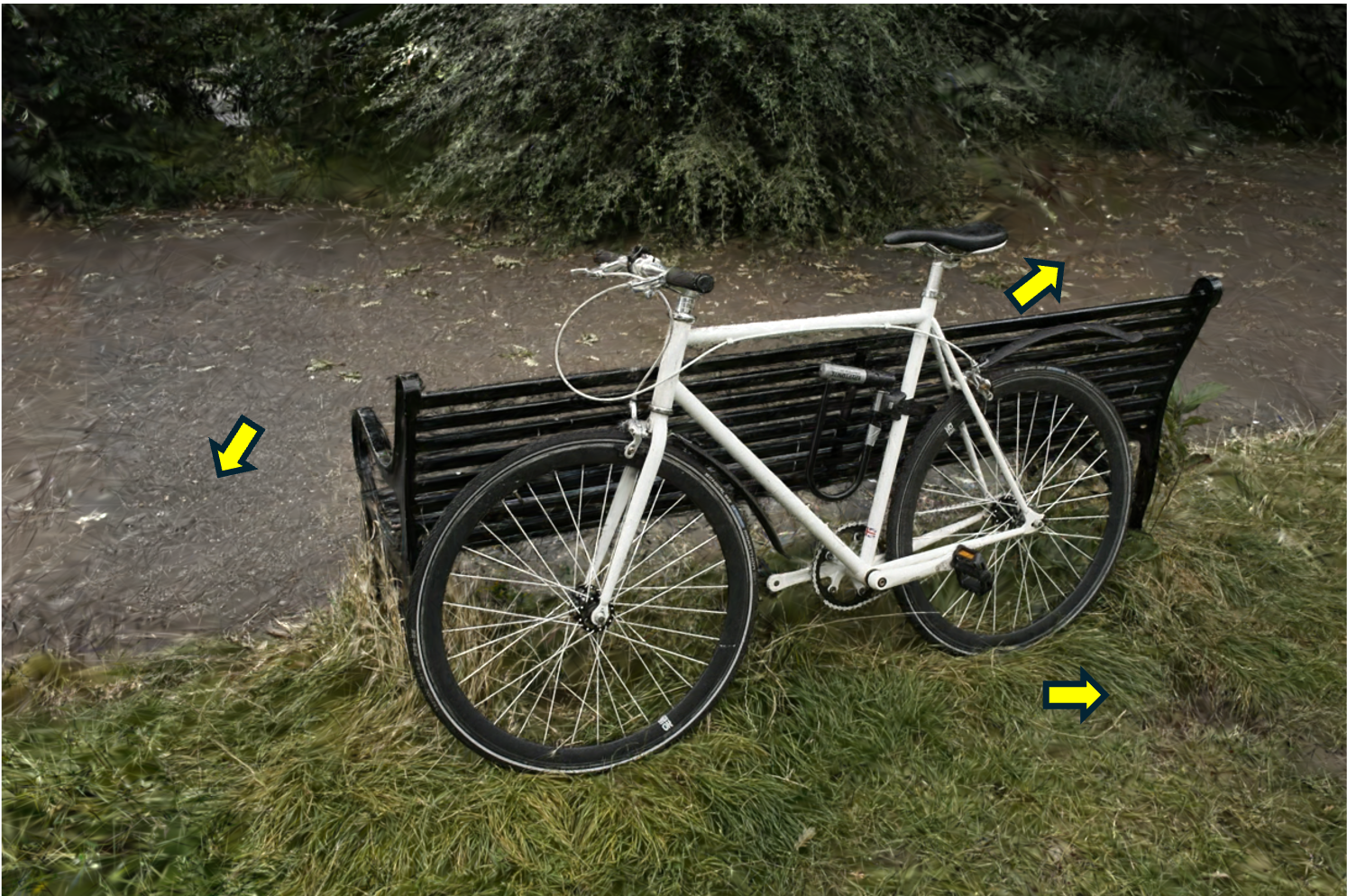

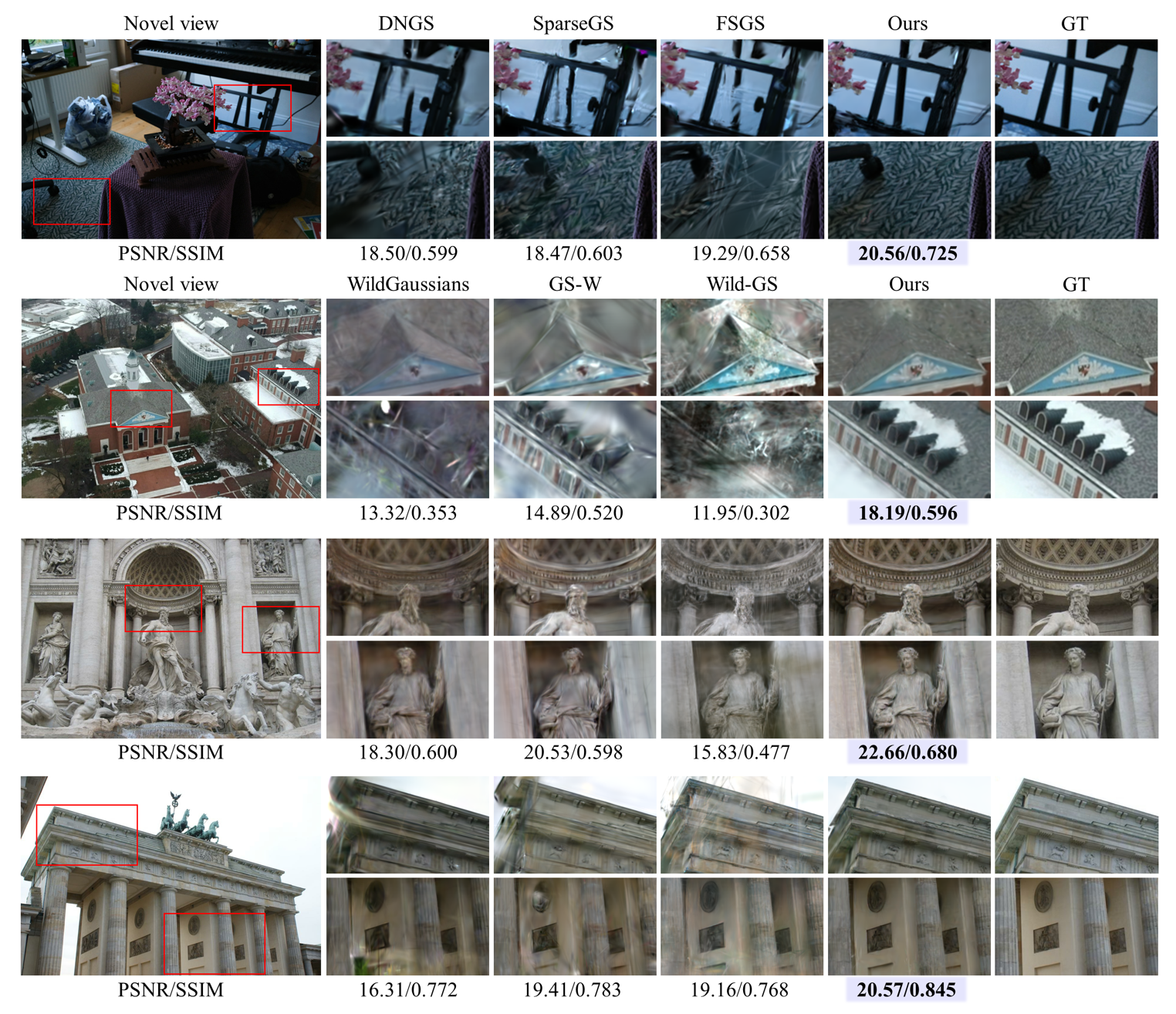

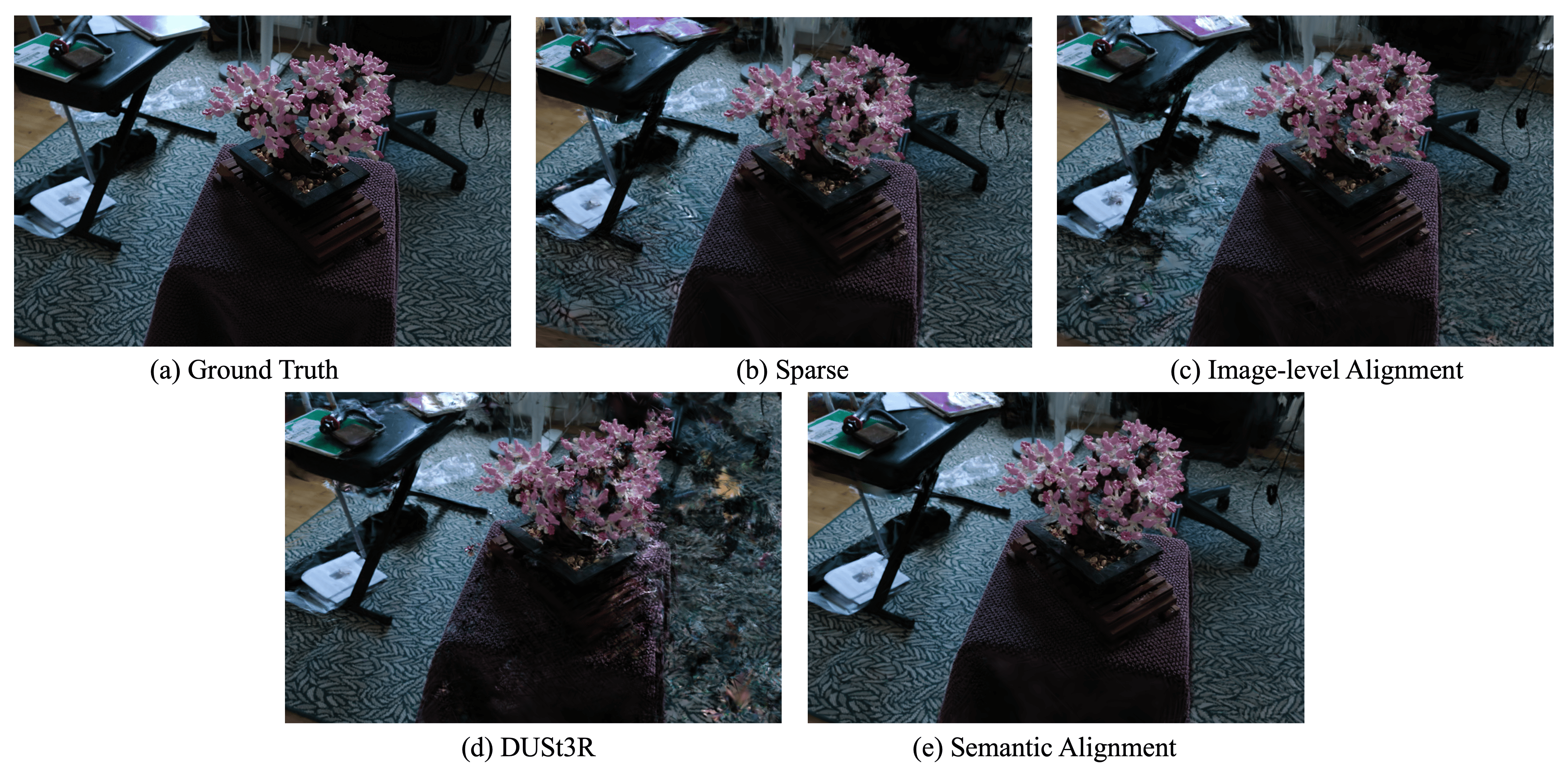

Figure 1: With 20 input views, DNGS and FSGS produce overly smooth rendering in regions lacking support from sparse point cloud initialization, while MS-GS recovers fine details and coherent structure.

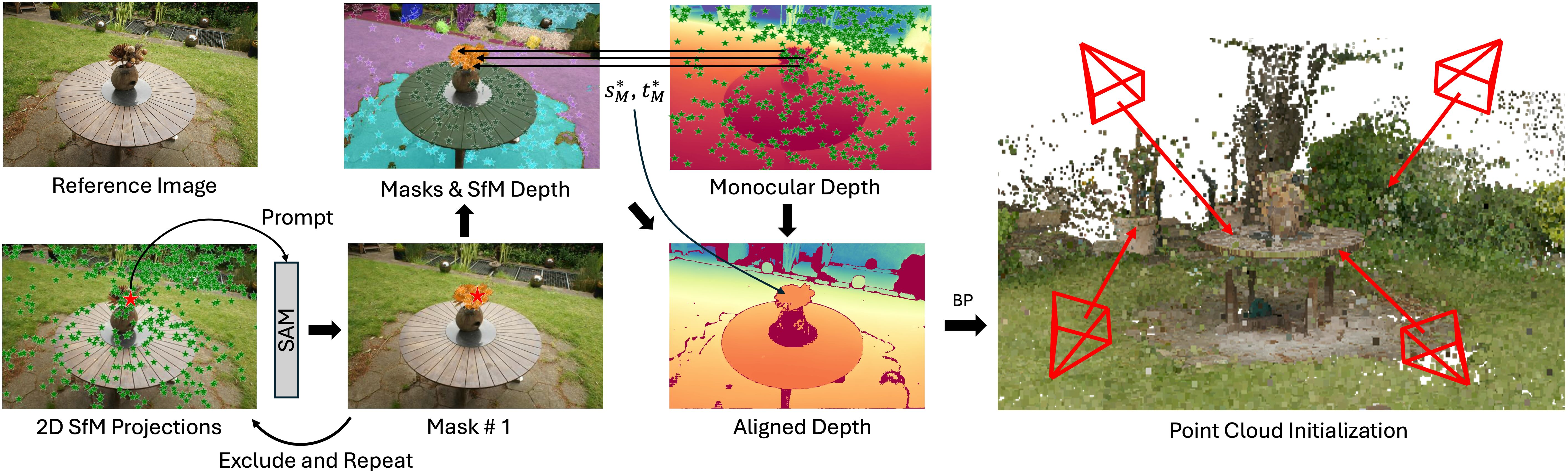

Semantic Depth Alignment for Dense Initialization

The initialization of 3DGS is critical, especially in sparse-view scenarios where conventional SfM point clouds are insufficiently dense and lack coverage. MS-GS proposes a semantic depth alignment strategy that combines monocular depth estimation with local semantic segmentation, anchored by SfM points. The process involves:

- Projecting SfM points onto each image and extracting semantic regions using a point-prompted segmentation model (e.g., Segment Anything).

- Iteratively refining semantic masks to ensure sufficient SfM point support and merging overlapping masks for completeness.

- Aligning monocular depth within each semantic region to SfM depth via least-squares optimization of scale and shift parameters.

- Back-projecting aligned depths to generate a dense, semantically meaningful point cloud for 3DGS initialization.

This approach mitigates the ambiguities and noise inherent in global monocular depth alignment, providing reliable geometric anchors for subsequent Gaussian densification and pruning.

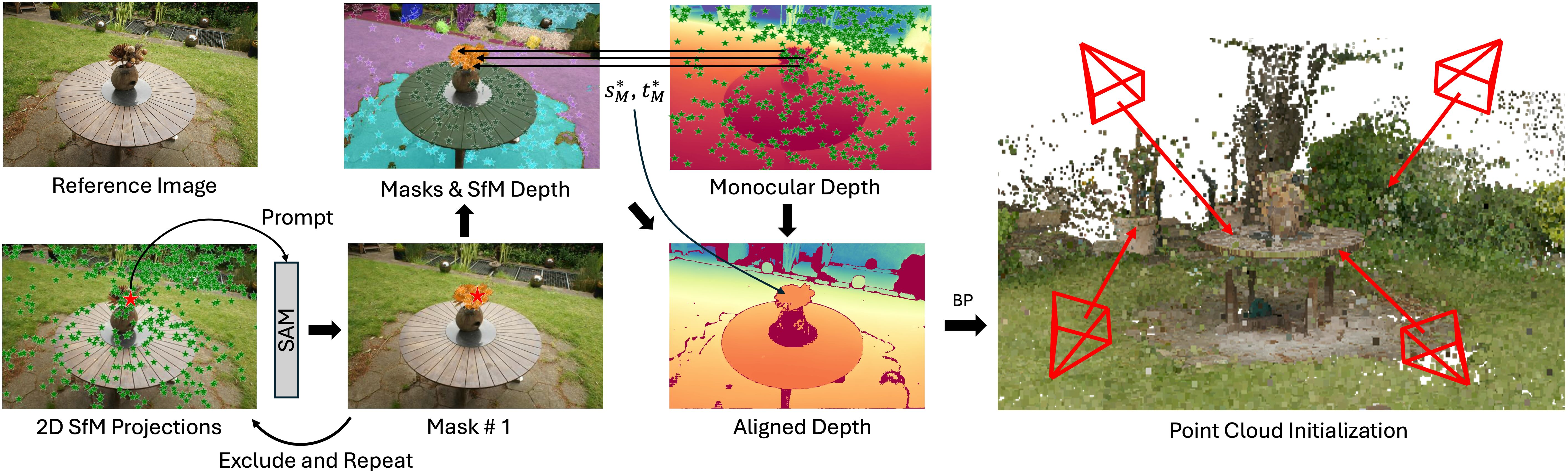

Figure 2: Overview of depth prior initialization in MS-GS, showing semantic mask extraction and depth alignment for dense point cloud construction.

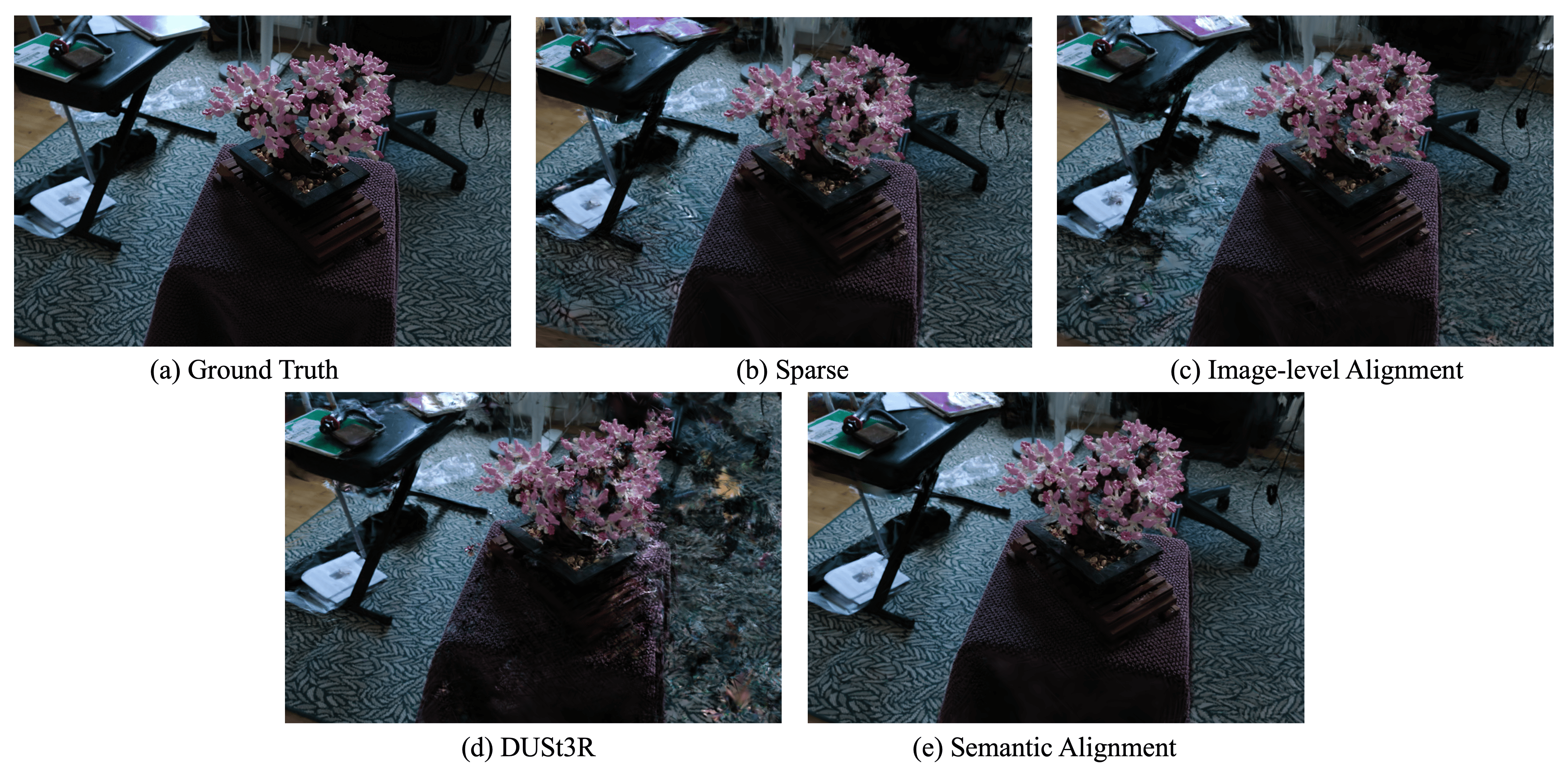

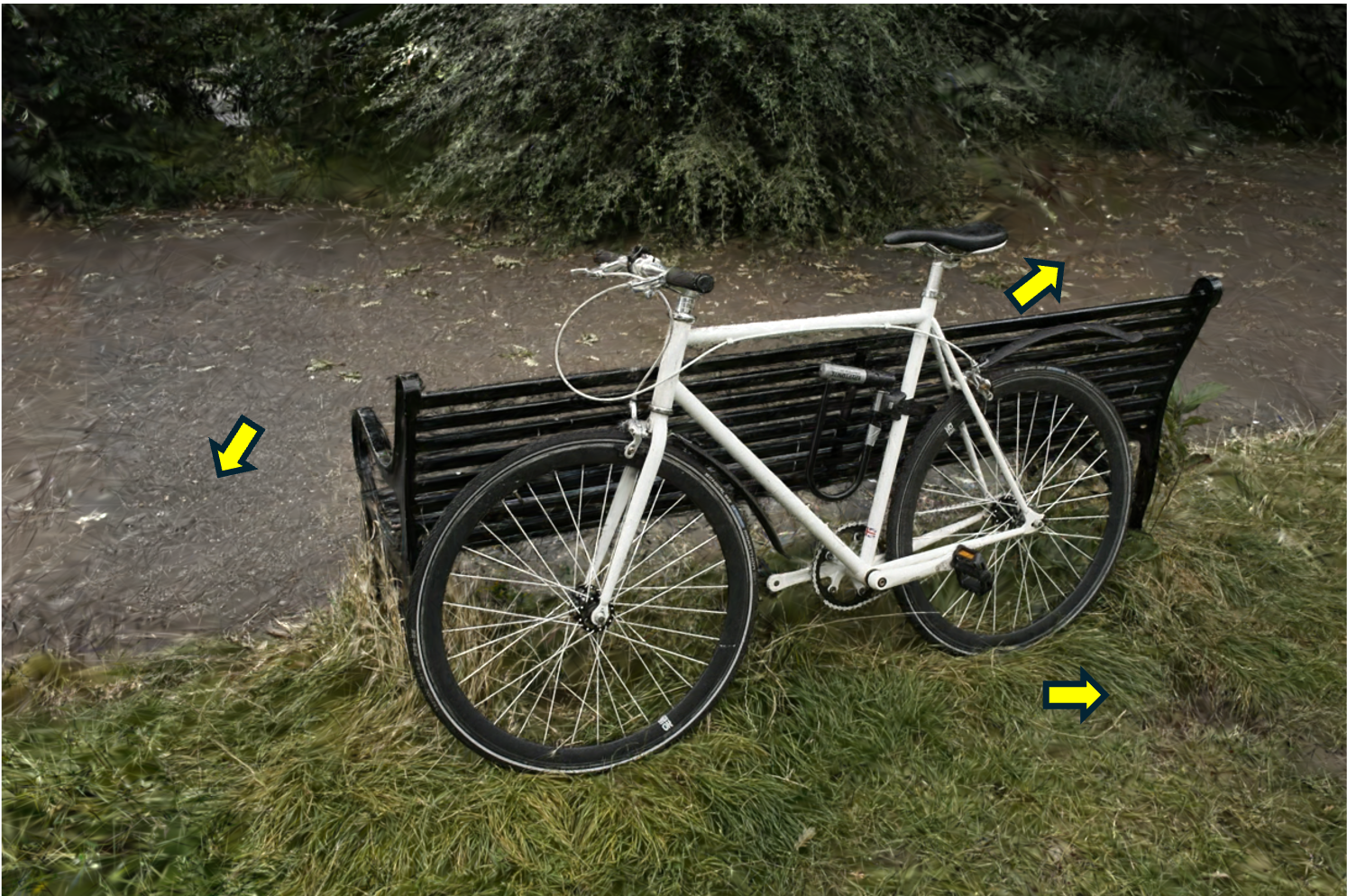

Figure 3: Visualizations of rendering with different point cloud initializations, highlighting the superiority of semantic alignment over sparse and image-level alignment.

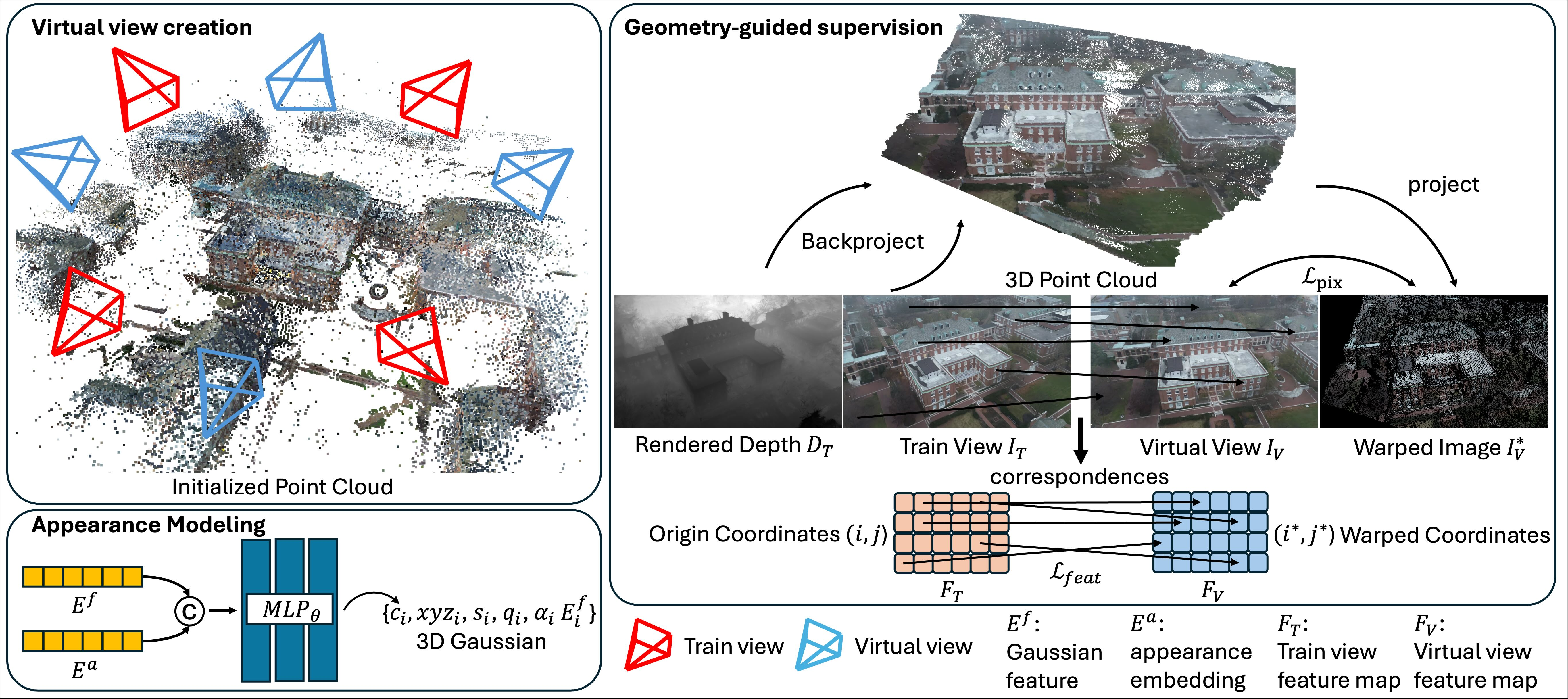

Multi-View Geometry-Guided Supervision

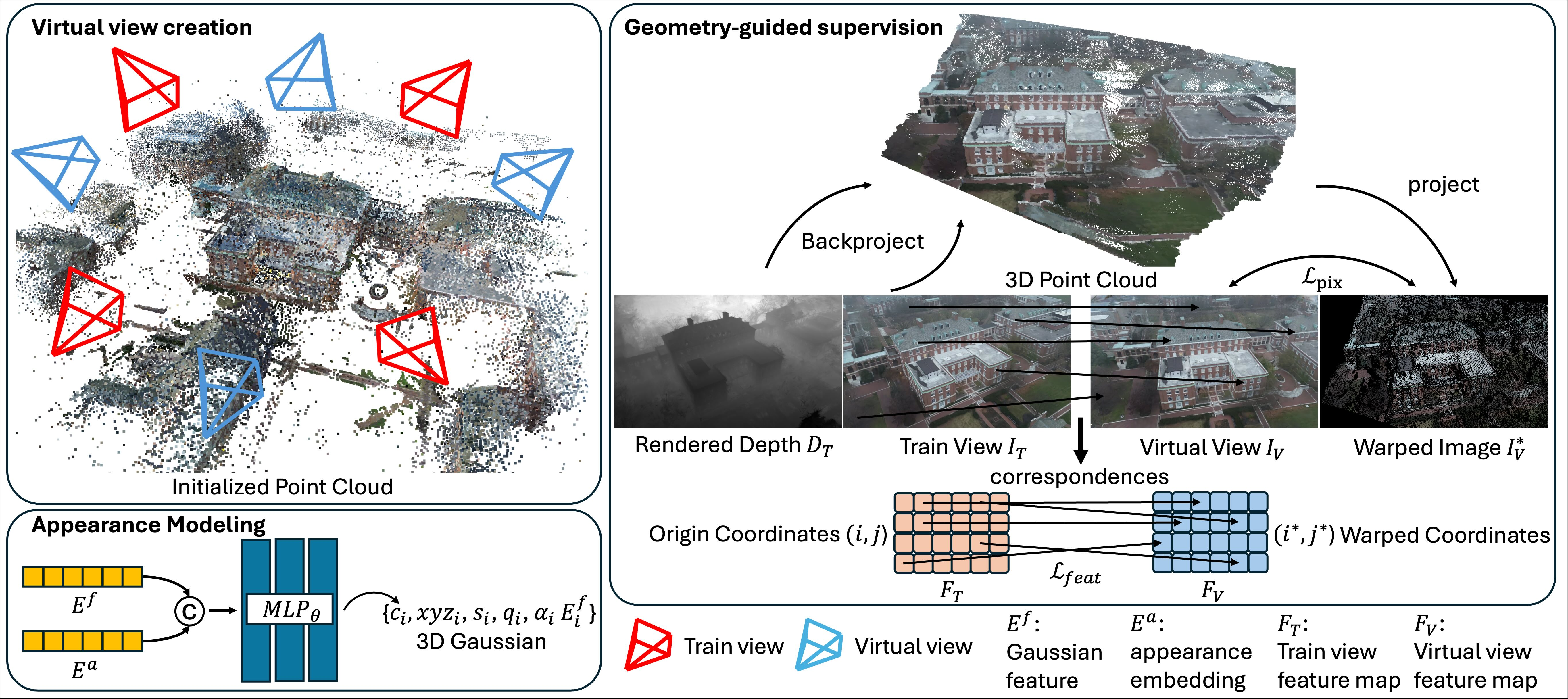

To address overfitting and appearance inconsistency in sparse, multi-appearance settings, MS-GS introduces multi-view geometry-guided supervision:

- Appearance Modeling: Decomposes appearance into per-image embeddings (capturing global variations) and per-Gaussian feature embeddings (encoding canonical scene appearance). An MLP fuses these embeddings to predict Gaussian colors.

- Virtual View Creation: Interpolates camera poses between training views to generate virtual viewpoints, using SLERP for rotation and linear interpolation for translation and FOV.

- 3D Warping Supervision: Establishes pixel-wise correspondences between training and virtual views via backprojection and forward projection, enforcing appearance consistency with explicit pixel loss and occlusion masking.

- Semantic Feature Supervision: Applies feature-level loss using VGG-extracted feature maps, mapped via 3D warping correspondences, to regularize appearance at a coarser semantic level and handle occlusions.

This dual-level supervision constrains both fine-grained and coarse appearance, promoting multi-view consistency and reducing artifacts.

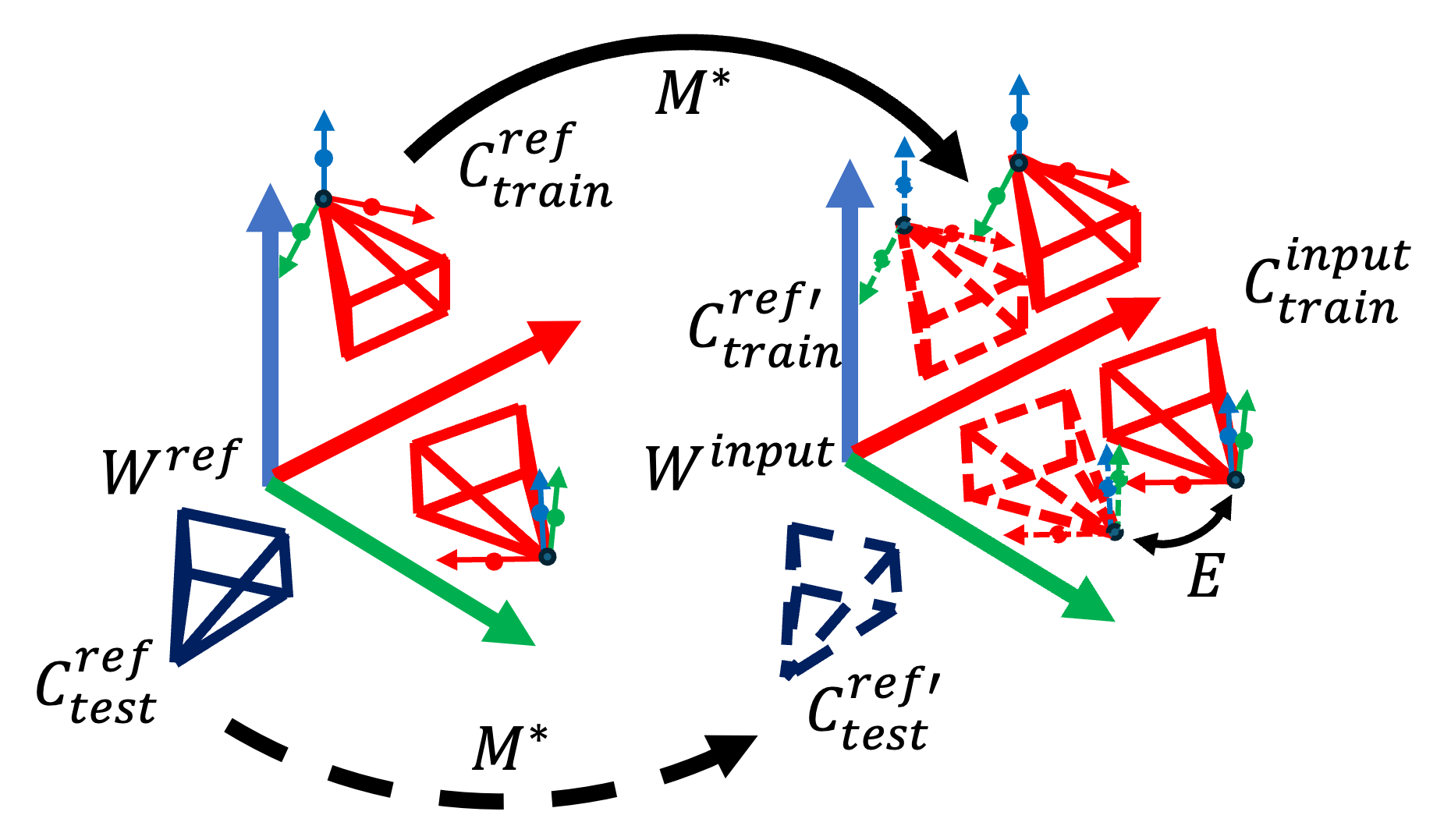

Figure 4: Overview of multi-view geometry-guided supervision, illustrating virtual view creation and 3D warping for pixel and feature loss computation.

Experimental Results and Ablation

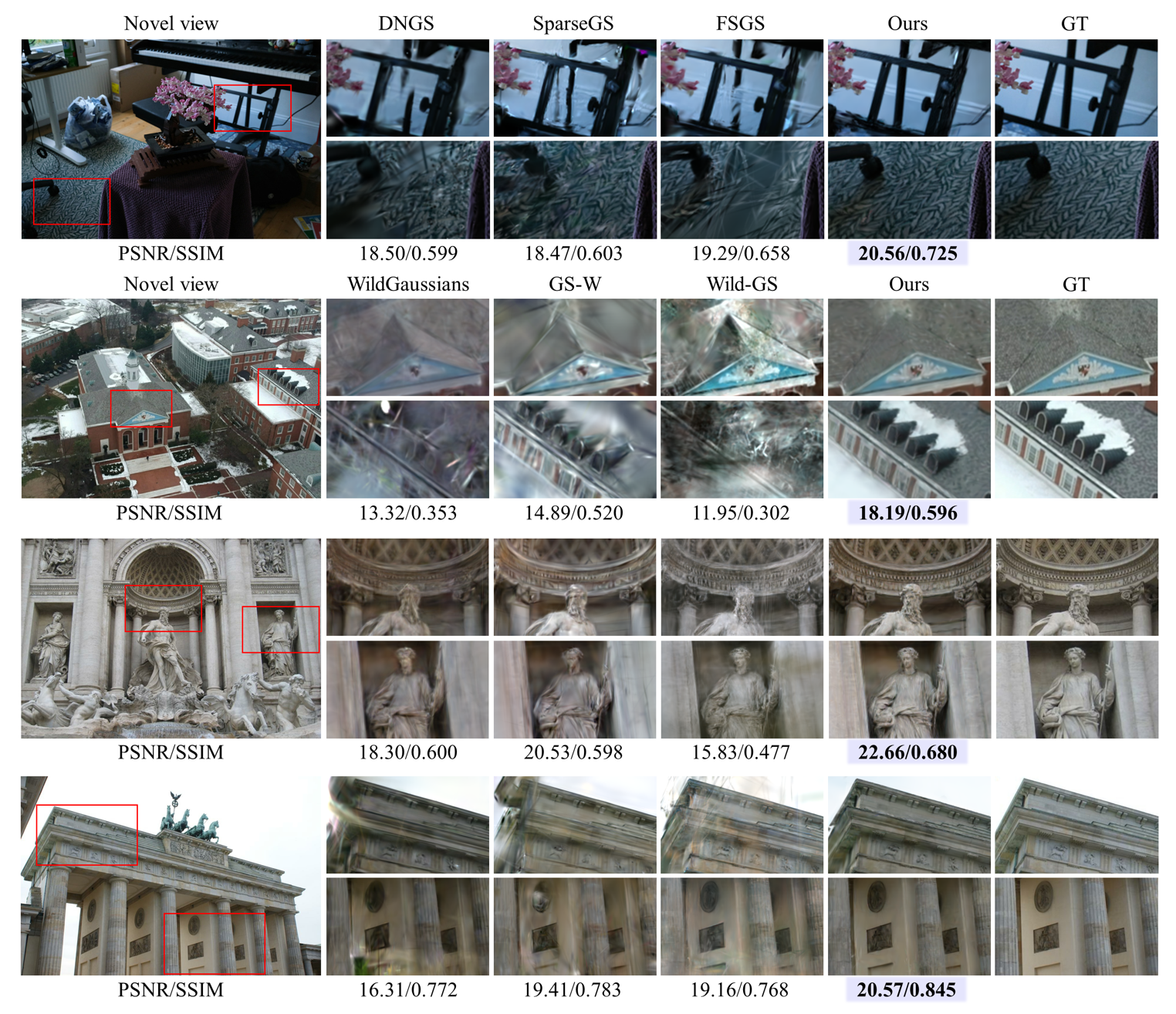

MS-GS is evaluated on three benchmarks: Sparse Mip-NeRF 360, Sparse Phototourism, and a newly introduced Sparse Unbounded Drone dataset. The method demonstrates substantial improvements over state-of-the-art NeRF and 3DGS variants, especially in perceptual metrics (LPIPS, DSIM) and rendering speed.

- Quantitative Results: On the Sparse Unbounded Drone dataset, MS-GS achieves a PSNR of 19.87, SSIM of 0.580, LPIPS of 0.322, and DSIM of 0.096, outperforming the best prior method by 2.54 dB in PSNR and reducing perceptual error by over 65%.

- Qualitative Results: MS-GS consistently reconstructs fine details and maintains appearance coherence across novel views, whereas prior methods exhibit floaters, artifacts, and inconsistent radiance.

Ablation studies confirm the complementary benefits of semantic dense initialization and multi-view supervision. Dense initialization alone improves PSNR by 0.8 dB, while the addition of geometry-guided losses further boosts performance.

Figure 5: Qualitative comparison of novel view synthesis across datasets, with MS-GS excelling at detailed structure and appearance consistency.

Implementation Considerations

Limitations and Future Directions

MS-GS is not designed to handle transient objects, which remain a challenge in sparse-view settings due to increased uncertainty and ambiguity. Future work may explore dynamic scene modeling, improved appearance disentanglement, and integration with large-scale, unbounded scene representations.

Conclusion

MS-GS establishes a new baseline for multi-appearance, sparse-view 3D Gaussian Splatting, combining semantic depth alignment and multi-view geometry-guided supervision to achieve superior reconstruction fidelity and appearance consistency. The method is computationally efficient, broadly applicable, and sets the stage for further advances in robust, in-the-wild novel view synthesis.