- The paper introduces a content-aware spatial encoder and spatial contrastive learning to effectively align audio–text embeddings under multi-source conditions.

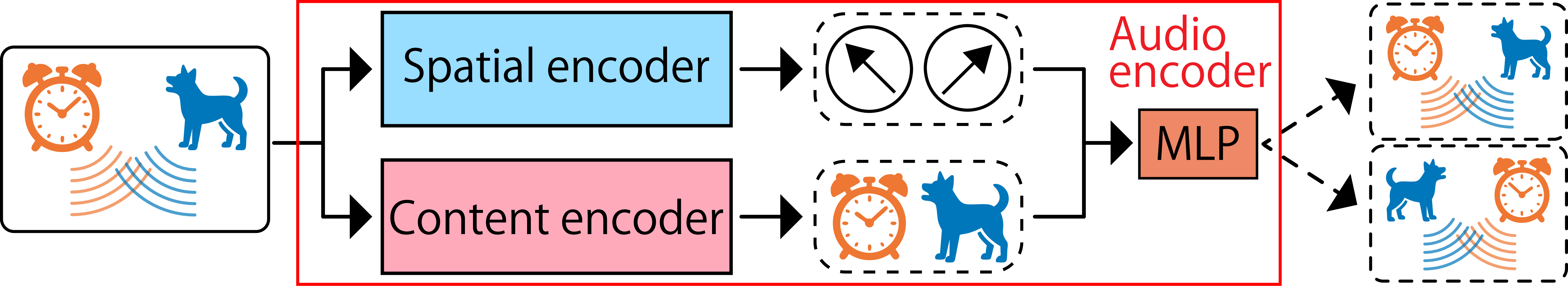

- Its audio branch fuses a monaural content encoder with a content-aware spatial encoder, so that each source's content and location are encoded jointly, which addresses the permutation problem in complex auditory scenes.

- Experiments show improved retrieval and spatial audio captioning performance, along with generalization to three-source mixtures not seen during training.

Spatial-CLAP: Spatially-Aware Audio–Text Embeddings for Multi-Source Conditions

Introduction and Motivation

Spatial-CLAP addresses a critical limitation in current audio–text embedding models: the inability to robustly encode spatial information, especially under multi-source conditions. While models such as CLAP, AudioCLIP, and Pengi have demonstrated strong performance in aligning audio and text representations, their focus has been primarily on monaural or single-source audio, discarding spatial cues essential for real-world auditory scene understanding. The central challenge is to develop embeddings that not only capture "what" is sounding but also "where" each event occurs, particularly when multiple sources are present simultaneously.

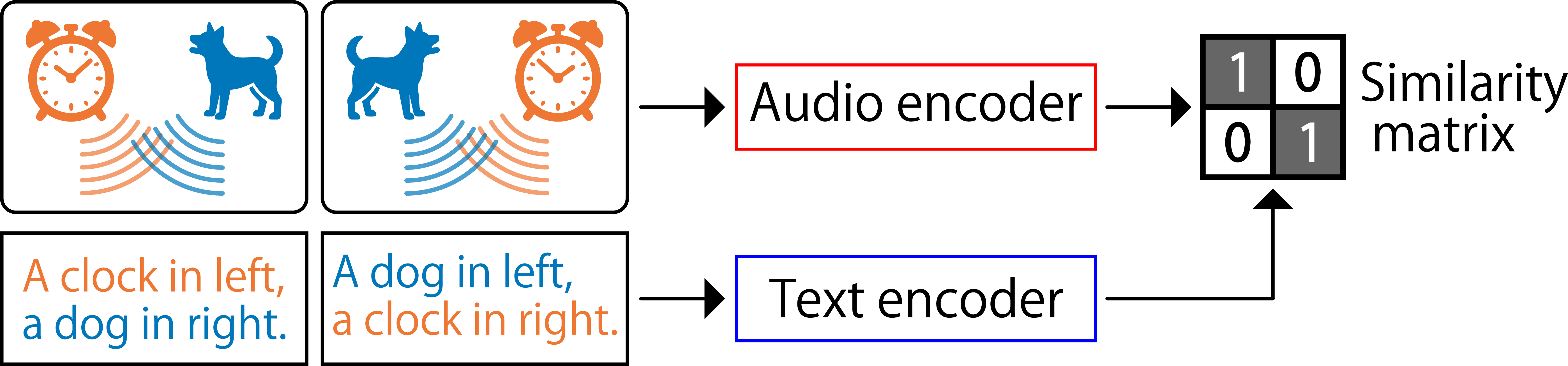

Prior attempts to extend CLAP with spatial encoders have been restricted to single-source scenarios and fail to resolve the permutation problem inherent in multi-source environments, namely correctly binding each source to its own spatial location. Spatial-CLAP introduces a content-aware spatial encoder (CA-SE) and a novel spatial contrastive learning (SCL) strategy to explicitly enforce content–space correspondence, enabling robust, spatially-aware audio–text embeddings.

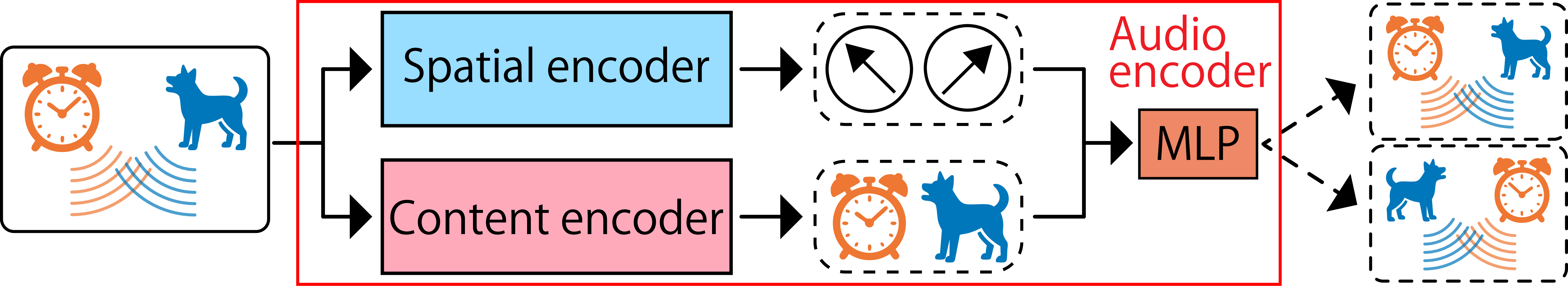

Figure 1: Comparison of audio encoders. (a) Conventional method encodes content and spatial information separately, causing permutation problems under multi-source conditions. (b) Spatial-CLAP introduces a content-aware spatial encoder aligning content and spatial embeddings.

Model Architecture

Spatial-CLAP employs a dual-encoder architecture for audio and text:

- Content Encoder (CE): Processes the average of stereo channels using a monaural CLAP encoder, leveraging large-scale pretraining for rich content representation.

- Content-Aware Spatial Encoder (CA-SE): Adapted from SELDNet, pretrained on sound event localization and detection (SELD) tasks, and designed to couple spatial cues with content information. The CA-SE processes stereo input and outputs a fixed-dimensional embedding.

- Fusion: The outputs of CE and CA-SE are concatenated and passed through a two-layer MLP to produce the final audio embedding.

- Text Encoder: A RoBERTa-base model, fine-tuned within the contrastive learning framework, produces text embeddings aligned with the audio space.

This architecture enables the model to unify content and spatial information within a single embedding, overcoming the limitations of prior approaches that treat these aspects independently.
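A minimal NumPy sketch of the audio branch described above may help. It assumes hypothetical embedding sizes, uses placeholder functions `content_encoder` and `spatial_encoder` in place of the pretrained HTS-AT and SELDNet-based networks, and assumes a ReLU between the two MLP layers plus L2 normalization of the output, as is common in CLAP-style models; none of these details are specified here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; the actual dimensions used by Spatial-CLAP are not given here.
CE_DIM, SE_DIM, HIDDEN_DIM, EMB_DIM = 768, 256, 512, 512

# Placeholder projection weights standing in for the pretrained encoders.
W_CE = rng.standard_normal((3, CE_DIM))
W_SE = rng.standard_normal((3, SE_DIM))


def content_encoder(mono: np.ndarray) -> np.ndarray:
    # Stand-in for the monaural CLAP (HTS-AT) encoder: projects three summary statistics.
    stats = np.array([mono.mean(), mono.std(), np.abs(mono).max()])
    return stats @ W_CE


def spatial_encoder(stereo: np.ndarray) -> np.ndarray:
    # Stand-in for the CA-SE: projects simple interchannel statistics.
    left, right = stereo
    stats = np.array([np.mean(left**2), np.mean(right**2), np.mean(left * right)])
    return stats @ W_SE


# Two-layer MLP fusion head (randomly initialized for illustration).
W1 = rng.standard_normal((CE_DIM + SE_DIM, HIDDEN_DIM)) * 0.02
b1 = np.zeros(HIDDEN_DIM)
W2 = rng.standard_normal((HIDDEN_DIM, EMB_DIM)) * 0.02
b2 = np.zeros(EMB_DIM)


def audio_embedding(stereo: np.ndarray) -> np.ndarray:
    """stereo: array of shape (2, num_samples). Returns a unit-norm EMB_DIM vector."""
    mono = stereo.mean(axis=0)                     # CE input: average of the two channels
    z = np.concatenate([content_encoder(mono), spatial_encoder(stereo)])  # concatenate CE and CA-SE outputs
    h = np.maximum(z @ W1 + b1, 0.0)               # first MLP layer + ReLU (activation assumed)
    e = h @ W2 + b2                                # second MLP layer
    return e / np.linalg.norm(e)                   # L2-normalize (assumed, CLAP-style)


if __name__ == "__main__":
    stereo = rng.standard_normal((2, 16000))       # 1 s of noise at 16 kHz
    print(audio_embedding(stereo).shape)           # (512,)
```

Concatenation keeps the two streams intact before the MLP, so the fusion layer can learn interactions between "what" and "where" rather than receiving a pre-mixed vector.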

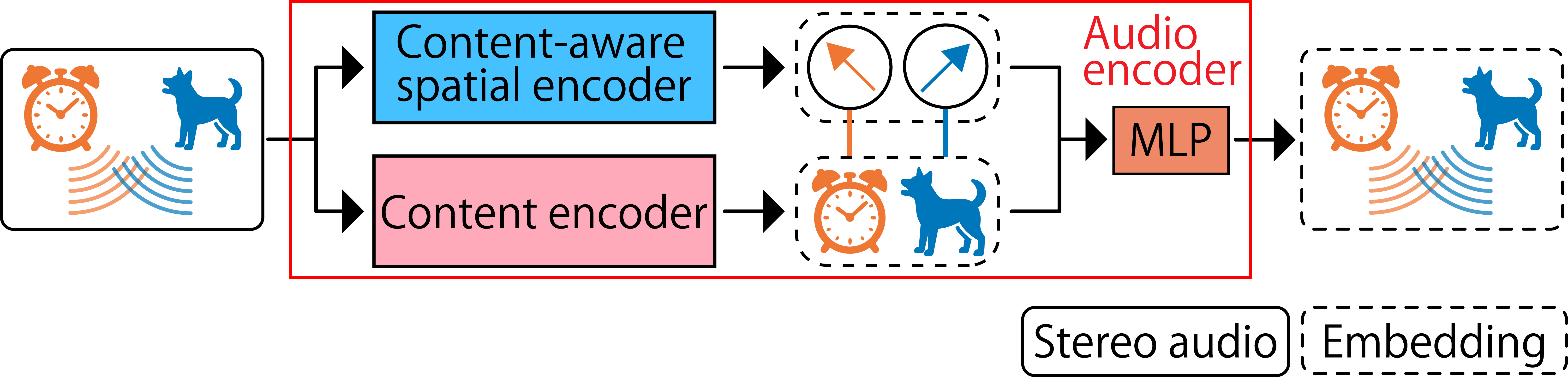

Spatial Contrastive Learning (SCL)

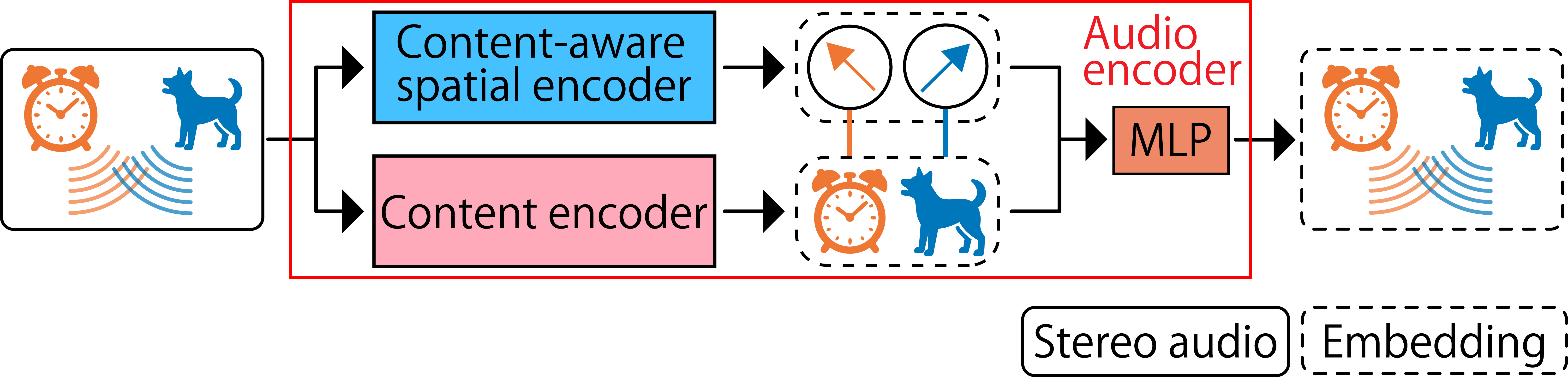

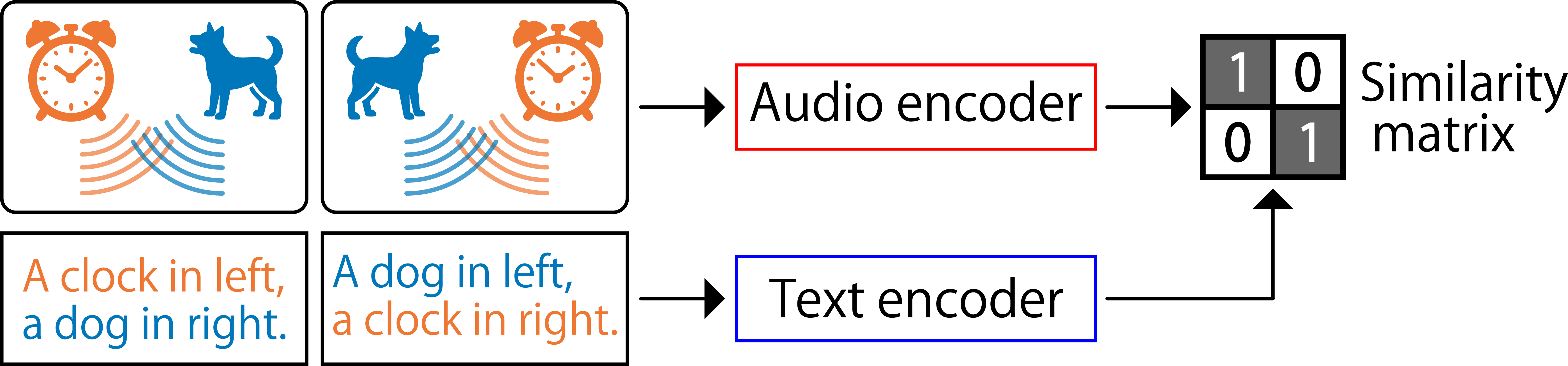

Standard contrastive learning aligns audio and text embeddings with the InfoNCE loss, but on its own it does not enforce correct content–space correspondence in multi-source conditions. SCL addresses this by generating hard negative examples through permutation of content–space assignments. For a two-source mixture, SCL constructs both the correctly assigned audio–text pair and a permuted version in which the two sources' locations are swapped, and includes both in the same batch. Because the two pairs contain the same sound events and differ only in which event occurs where, the model is explicitly penalized whenever it fails to distinguish the correct content–space binding from the incorrect one.

Figure 2: Spatial contrastive learning (SCL) forces the model to explicitly learn content–space correspondence in multi-source environments by adding audio–text pairs with permuted content–space assignments as hard negative examples.
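The batch construction and loss can be sketched as follows. This is an illustrative NumPy version, not the authors' code: the caption template in `caption`, the temperature of 0.07, and the symmetric CLIP-style form of InfoNCE are assumptions. The key point is that the swapped pair sits in the same batch, so it appears in the softmax denominator as a hard negative.

```python
import numpy as np


def swap_assignment(spec):
    """spec: [(event, direction), (event, direction)] for a two-source mixture.
    Returns the same events with their directions exchanged."""
    (ev_a, dir_a), (ev_b, dir_b) = spec
    return [(ev_a, dir_b), (ev_b, dir_a)]


def caption(spec):
    # Hypothetical caption template; the paper's exact wording may differ.
    return " and ".join(f"{ev} from the {d}" for ev, d in spec)


def build_scl_batch(specs):
    """Append the swapped version of every two-source spec, so each correct
    pair and its permuted counterpart end up in the same batch."""
    return specs + [swap_assignment(s) for s in specs]


def log_softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)        # numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def symmetric_info_nce(audio_emb, text_emb, temperature=0.07):
    """Row i of each matrix is a positive pair; every other row in the batch,
    including the swapped version of the same mixture, acts as a negative."""
    a = audio_emb / np.linalg.norm(audio_emb, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = a @ t.T / temperature
    idx = np.arange(len(a))
    loss_a2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # audio -> text
    loss_t2a = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> audio
    return 0.5 * (loss_a2t + loss_t2a)


if __name__ == "__main__":
    specs = [[("dog barking", "left"), ("siren", "front-right")]]
    for s in build_scl_batch(specs):
        print(caption(s))
    # dog barking from the left and siren from the front-right
    # dog barking from the front-right and siren from the left
```

In a real pipeline, each spec in the expanded batch would be rendered to audio and passed through the audio and text encoders before computing the loss.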

SCL generalizes to n sources by generating all n! − 1 non-identity permutations of the content–space assignment as negatives, providing strong supervision for the binding problem.
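Enumerating the n! − 1 non-identity assignments is straightforward with `itertools.permutations`. The helper below, `permuted_negatives`, is hypothetical and assumes that the sources in a mixture occupy distinct directions; if two sources shared a direction, some permutations would reproduce the original assignment.

```python
from itertools import permutations
from math import factorial


def permuted_negatives(spec):
    """spec: list of (event, direction) pairs with distinct directions (assumed).
    Returns every reassignment of the directions to the events except the
    original one, i.e. n! - 1 negative specs."""
    n = len(spec)
    events = [ev for ev, _ in spec]
    directions = [d for _, d in spec]
    identity = tuple(range(n))
    return [
        [(events[i], directions[p]) for i, p in enumerate(perm)]
        for perm in permutations(range(n))
        if perm != identity                       # skip the correct assignment
    ]


if __name__ == "__main__":
    spec = [("dog", "left"), ("siren", "front"), ("speech", "right")]
    negs = permuted_negatives(spec)
    print(len(negs), factorial(3) - 1)            # 5 5
```

The count grows factorially (23 negatives for four sources, 119 for five).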

Experimental Evaluation

Datasets and Setup

- Audio: AudioCaps 2.0, with 91,256 training, 2,475 validation, and 975 test samples. Each monaural clip is convolved with simulated room impulse responses (RIRs) to generate stereo signals.

- Spatial Labels: Five direction-of-arrival (DoA) classes (front-left, front, front-right, left, right), with captions augmented to include spatial descriptions.

- Room Simulation: 440 reverberant rooms, with disjoint splits for training, validation, and testing.

- Model Details: CE uses HTS-AT initialized with CLAP weights; CA-SE is based on SELDNet; text encoder is RoBERTa-base. All components are fine-tuned jointly.
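Spatialization amounts to convolving each channel of a two-channel RIR with the mono clip. In the sketch below, the toy RIR (a direct path on the left channel and a delayed, attenuated copy on the right) and the `DOA_PHRASES` caption template are hypothetical stand-ins; the actual simulated rooms and caption wording are not described here.

```python
import numpy as np


def spatialize(mono, rir):
    """Convolve a mono clip (num_samples,) with a 2-channel RIR (2, rir_len).
    Returns stereo audio of shape (2, num_samples + rir_len - 1)."""
    return np.stack([np.convolve(mono, rir[ch]) for ch in range(2)])


# Hypothetical mapping from DoA class to caption phrase; the paper's wording may differ.
DOA_PHRASES = {
    "front-left": "from the front left",
    "front": "from the front",
    "front-right": "from the front right",
    "left": "from the left",
    "right": "from the right",
}


def spatial_caption(text, doa):
    """Append a direction phrase to an AudioCaps-style caption (illustrative template)."""
    return f"{text.rstrip('.')} {DOA_PHRASES[doa]}."


if __name__ == "__main__":
    mono = np.random.default_rng(0).standard_normal(16000)
    rir = np.zeros((2, 64))
    rir[0, 0] = 1.0      # toy direct path on the left channel
    rir[1, 8] = 0.5      # toy delayed, quieter arrival on the right channel
    print(spatialize(mono, rir).shape)             # (2, 16063)
    print(spatial_caption("A dog barks.", "left"))  # A dog barks from the left.
```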

Baselines

- Monaural: No spatial encoder; standard CLAP.

- Conventional: Spatial encoder trained on DoA estimation, but not content-aware; trained only on single-source data.

- Structured: SE and CE outputs processed independently, preventing content–space binding.

- Ours (Spatial-CLAP): Full model with CA-SE and SCL.

- Ablations: Variants without SCL, without CLAP pretraining, or with a non-content-aware SE.

Embedding-Based Evaluation

Spatial-CLAP achieves the highest R@1 (recall at rank 1) retrieval scores and spatial classification accuracy under both single- and multi-source conditions. Notably, in the two-source (2-src) setting, Spatial-CLAP outperforms all baselines in both retrieval and content–space assignment accuracy, demonstrating its ability to resolve the permutation problem and generalize to complex mixtures.
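R@1 is the fraction of queries whose correct match is ranked first. A small sketch of Recall@K for text-to-audio or audio-to-text retrieval follows; it assumes exactly one matching item per query (row i matches row i), whereas AudioCaps evaluation clips typically have several reference captions, which a full evaluation would need to handle.

```python
import numpy as np


def recall_at_k(query_emb, gallery_emb, k=1):
    """Row i of query_emb matches row i of gallery_emb (one-to-one, assumed).
    Returns the fraction of queries whose match is in the top-k by cosine similarity."""
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    g = gallery_emb / np.linalg.norm(gallery_emb, axis=1, keepdims=True)
    sims = q @ g.T
    correct = sims[np.arange(len(q)), np.arange(len(q))]
    # Rank of the correct item = number of gallery items scoring strictly higher.
    ranks = (sims > correct[:, None]).sum(axis=1)
    return float((ranks < k).mean())


if __name__ == "__main__":
    q = np.eye(3)
    print(recall_at_k(q, np.eye(3), k=1))          # 1.0
    print(recall_at_k(q, np.eye(3)[[1, 2, 0]], k=1))  # 0.0
```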

Downstream Task: Spatial Audio Captioning

Spatial-CLAP embeddings are evaluated on a spatial audio captioning task using a frozen audio encoder and a GPT-2 decoder. Metrics include BLEU, ROUGE-L, METEOR, CIDEr, SPICE, SPIDEr, BERTScore, SBERT, and the proposed DW-SBERT (direction-wise SBERT) for spatial evaluation.

Spatial-CLAP achieves the highest scores across all metrics, with particularly strong improvements in DW-SBERT and spatial description accuracy, indicating effective encoding of both content and spatial information. The results also show that conventional semantic metrics such as SBERT are insufficient for evaluating spatial descriptions, which motivates spatially-aware metrics such as DW-SBERT.

Embedding Visualization

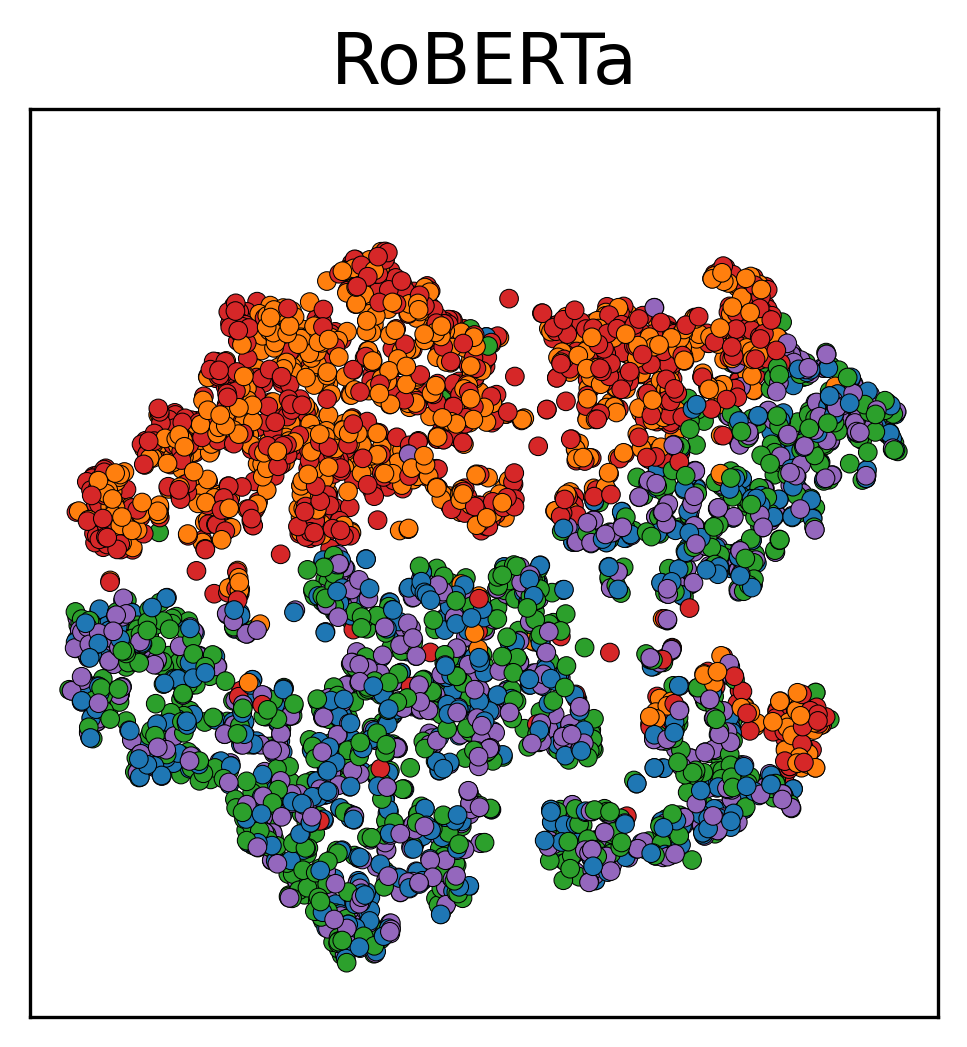

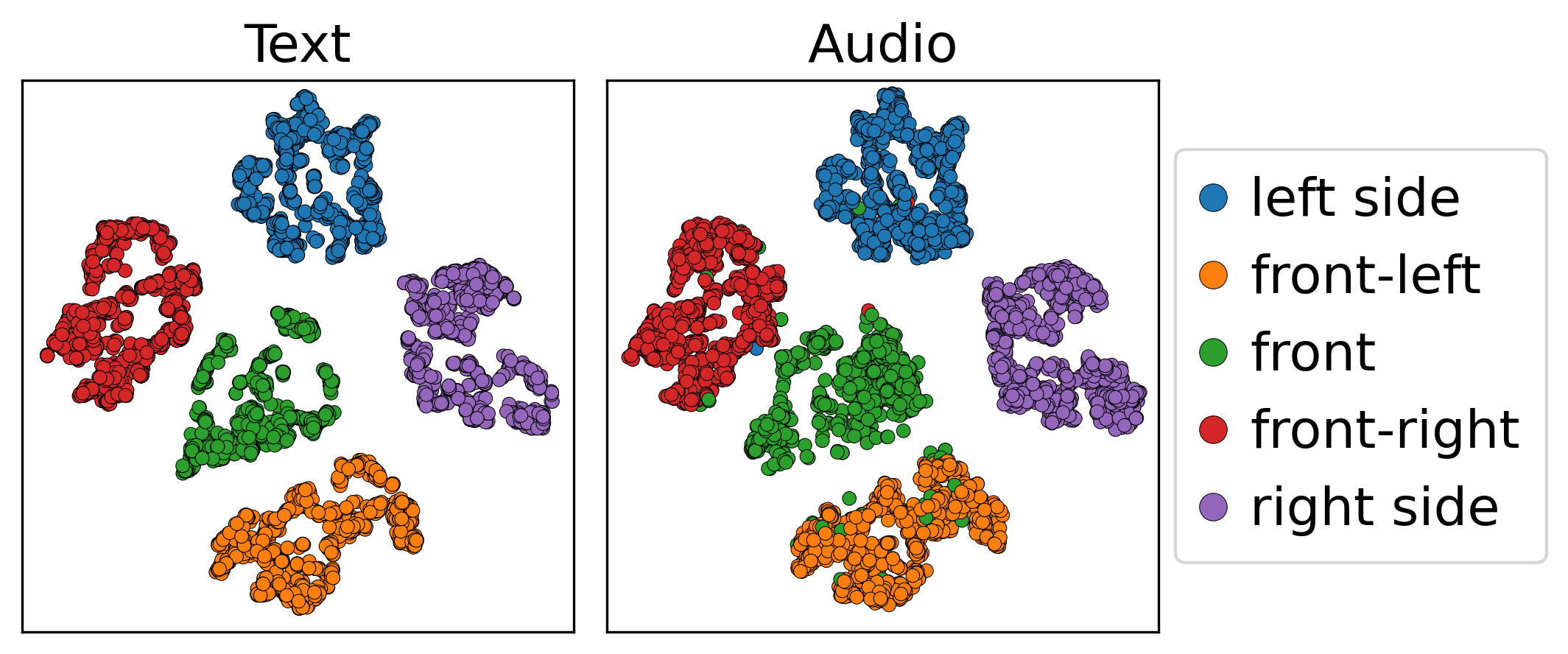

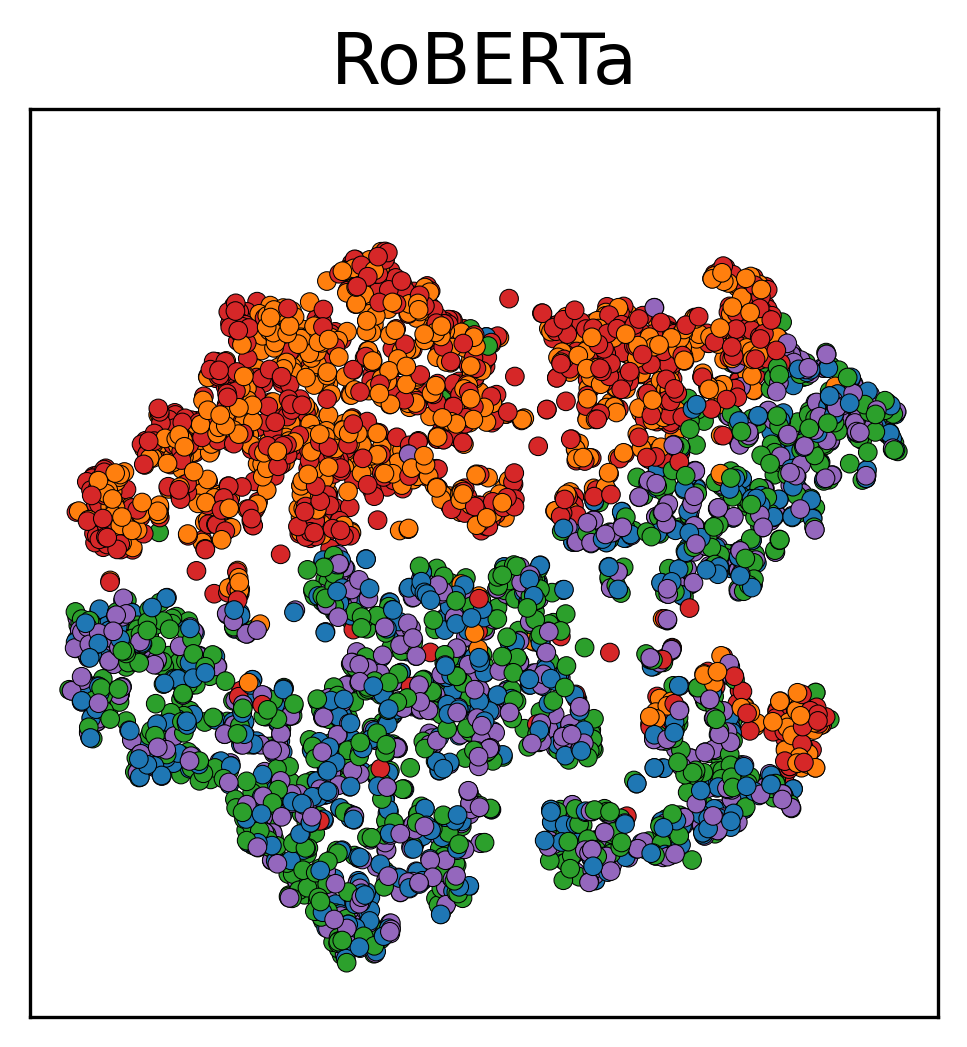

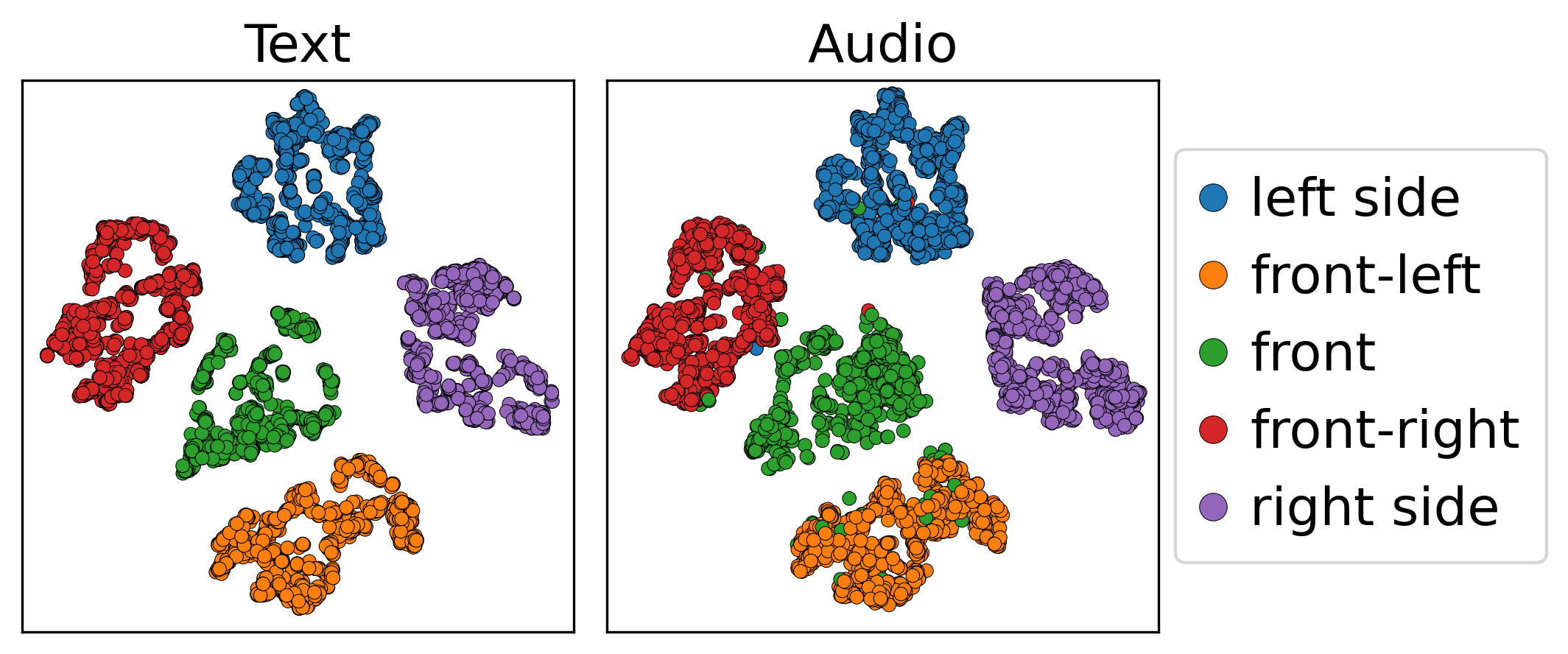

Figure 3: Comparison of t-SNE visualizations of embeddings. RoBERTa produces mixed clusters without clear separation, while Spatial-CLAP forms distinct clusters by spatial class.

t-SNE visualizations reveal that Spatial-CLAP embeddings form well-separated clusters corresponding to spatial classes, in contrast to RoBERTa embeddings, which lack such structure. This demonstrates the emergence of spatial structure through audio–text alignment.

Generalization to Unseen Multi-Source Conditions

Spatial-CLAP generalizes to three-source mixtures, achieving content–space assignment accuracy significantly above chance, despite being trained only on up to two sources. In contrast, the conventional baseline remains at chance level, underscoring the importance of multi-source training and SCL for generalization.
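One way to measure content–space assignment accuracy, assumed here rather than taken from the paper, is to score each clip against the captions for all n! possible assignments and check whether the correct one ranks highest. Under that protocol, chance level is 1/n!, i.e. 1/2 for two sources and 1/6 for three. The function names below are hypothetical.

```python
from math import factorial

import numpy as np


def assignment_accuracy(audio_emb, candidate_text_emb, correct_idx):
    """audio_emb: (N, D). candidate_text_emb: (N, P, D), the text embeddings of all
    P = n! content-space assignments per clip. correct_idx: (N,) index of the
    true assignment. Returns the fraction of clips whose best candidate is correct."""
    a = audio_emb / np.linalg.norm(audio_emb, axis=-1, keepdims=True)
    t = candidate_text_emb / np.linalg.norm(candidate_text_emb, axis=-1, keepdims=True)
    sims = np.einsum("nd,npd->np", a, t)           # cosine similarity to each candidate
    return float((sims.argmax(axis=1) == correct_idx).mean())


def chance_level(num_sources):
    """Accuracy of uniform random guessing among all n! assignments."""
    return 1.0 / factorial(num_sources)


if __name__ == "__main__":
    print(chance_level(2))                         # 0.5
```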

Implications and Future Directions

Spatial-CLAP offers a new approach to spatially-aware audio–text embeddings, enabling robust modeling of complex auditory scenes with multiple simultaneous sources. The explicit enforcement of content–space correspondence via SCL is critical for resolving the permutation problem and achieving generalization beyond the training regime.

Practically, this work enables applications in AR/VR, robot audition, and open-vocabulary SELD, where understanding both "what" and "where" is essential. The release of code and pretrained models provides a strong baseline for future research.

Theoretically, the results highlight the necessity of multi-source training and the limitations of single-source spatial extensions. The findings also motivate the development of more sophisticated spatial evaluation metrics and the extension of the framework to dynamic scenes with moving sources.

Conclusion

Spatial-CLAP introduces a content-aware spatial encoder and spatial contrastive learning to achieve spatially-aware audio–text embeddings effective under multi-source conditions. Experimental results demonstrate strong performance in both retrieval and captioning tasks, with clear advantages over prior approaches in content–space binding and generalization. This work provides a foundation for future research in spatial audio–text modeling, with immediate implications for real-world auditory scene understanding and multimodal AI systems.