- The paper introduces an explicit correspondence map for drag-based editing that replaces unstable attention-based heuristics, resulting in robust and semantically consistent edits.

- It leverages a two-part attention control mechanism in MM-DiTs to preserve background and identity, enabling full-strength inversion without test-time optimization.

- Experimental results on DragBench demonstrate state-of-the-art performance in drag accuracy, visual fidelity, and semantic consistency using both drag and move modes.

Introduction

LazyDrag addresses a core limitation in drag-based image editing with diffusion models: the instability and inaccuracy arising from implicit point matching via attention mechanisms. Prior approaches, especially those based on U-Nets, rely on attention-based heuristics to match user-specified handle and target points, leading to a trade-off between edit accuracy and visual fidelity. These methods often require test-time optimization (TTO) or weakened inversion strength, which suppresses generative capabilities, degrades inpainting, and limits text-guided editing. LazyDrag introduces a principled, training-free solution for Multi-Modal Diffusion Transformers (MM-DiTs) by replacing implicit attention-based matching with an explicit correspondence map, enabling robust, high-fidelity, and semantically consistent drag-based editing under full-strength inversion.

Methodology

Explicit Correspondence Map Generation

LazyDrag constructs an explicit correspondence map from user drag instructions, which directly encodes the mapping from source (handle) points to target points. This is achieved via a winner-takes-all (WTA) strategy, where each editable region in the latent space is assigned to its nearest handle point, forming a Voronoi partition. The displacement field is computed per instruction, and each region's movement is determined solely by its assigned instruction, preserving the full magnitude of opposing drags and enabling complex edits such as opening a mouth with antagonistic drags.
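To make the winner-takes-all idea concrete, here is a minimal sketch. It assumes drag instructions are given as (handle, target) point pairs in latent coordinates and that distance is plain Euclidean. The function names `wta_displacement` and `averaged_displacement` are hypothetical, and this is not the authors' code. The inverse-distance averaging function is included only as a contrast, to show how averaging shrinks opposing drags while WTA keeps their full magnitude.

```python
import math


def wta_displacement(editable, drags):
    """Winner-takes-all assignment: each editable position follows only its
    nearest handle (a Voronoi partition over handles).

    editable: iterable of (x, y) latent positions allowed to move.
    drags:    list of (handle, target) point pairs, one per drag instruction.
    Returns a dict mapping each position to its (dx, dy) displacement.
    """
    field = {}
    for p in editable:
        # Only the closest handle's instruction applies; ties go to the first drag.
        handle, target = min(drags, key=lambda d: math.dist(p, d[0]))
        field[p] = (target[0] - handle[0], target[1] - handle[1])
    return field


def averaged_displacement(p, drags, eps=1e-6):
    """Inverse-distance-weighted average of all drags, shown only for contrast."""
    weights = [1.0 / (math.dist(p, handle) + eps) for handle, _ in drags]
    total = sum(weights)
    dx = sum(w * (t[0] - h[0]) for w, (h, t) in zip(weights, drags)) / total
    dy = sum(w * (t[1] - h[1]) for w, (h, t) in zip(weights, drags)) / total
    return (dx, dy)


# Antagonistic drags, e.g. opening a mouth: upper lip up, lower lip down.
drags = [((5.0, 4.0), (5.0, 2.0)), ((5.0, 6.0), (5.0, 8.0))]
field = wta_displacement([(5.0, 3.0), (5.0, 7.0)], drags)
print(field[(5.0, 3.0)], field[(5.0, 7.0)])  # (0.0, -2.0) (0.0, 2.0)
print(averaged_displacement((5.0, 3.0), drags))  # roughly (0.0, -1.0)
```

With WTA, the point just above the upper lip moves the full 2 units upward. Under averaging, the lower-lip drag pulls it back, and it moves only about half as far.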

The initial latent $\hat{z}_T$ is constructed by warping the inverted source latent $z_T$ according to the correspondence map. Destination regions are filled with warped source content, inpainting regions are initialized with Gaussian noise (consistent with the diffusion prior), and background and transition regions are preserved or smoothly blended. This approach eliminates the repetitive artifacts and unnatural warping observed in prior methods that rely on interpolation or averaging.
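The latent initialization step can be sketched on a toy single-channel grid, under several simplifying assumptions:

- Displacements are already rounded to whole latent cells.
- "Inpainting regions" means moved cells that no other content lands on.
- Transition-region blending is left out.

The function name `init_latent` is hypothetical. When two sources map to the same destination, this sketch simply lets the last write win.

```python
import random


def init_latent(z_src, displacement, seed=0):
    """Sketch of building the initial latent from the inverted latent z_T.

    z_src:        2D list (rows x cols), a single-channel stand-in for z_T.
    displacement: {(row, col): (d_row, d_col)} integer moves for editable cells,
                  e.g. a rounded WTA displacement field.
    Transition-region blending is omitted for brevity.
    """
    rng = random.Random(seed)
    rows, cols = len(z_src), len(z_src[0])
    z_hat = [row[:] for row in z_src]  # background: copied unchanged
    destinations = set()
    for (r, c), (dr, dc) in displacement.items():
        tr, tc = r + dr, c + dc
        if 0 <= tr < rows and 0 <= tc < cols:
            # Destination: read from the source latent so chained moves stay correct.
            z_hat[tr][tc] = z_src[r][c]
            destinations.add((tr, tc))
    for r, c in displacement:
        if (r, c) not in destinations:
            # Vacated cell nothing moved into: inpaint from fresh Gaussian noise.
            z_hat[r][c] = rng.gauss(0.0, 1.0)
    return z_hat


z = init_latent([[1.0, 2.0, 3.0, 4.0]], {(0, 1): (0, 2)})
print(z[0][0], z[0][2], z[0][3])  # 1.0 3.0 2.0  (z[0][1] is noise)
```

Every value is read from `z_src` rather than from the partially written `z_hat`. This keeps the result independent of the order in which moves are applied.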

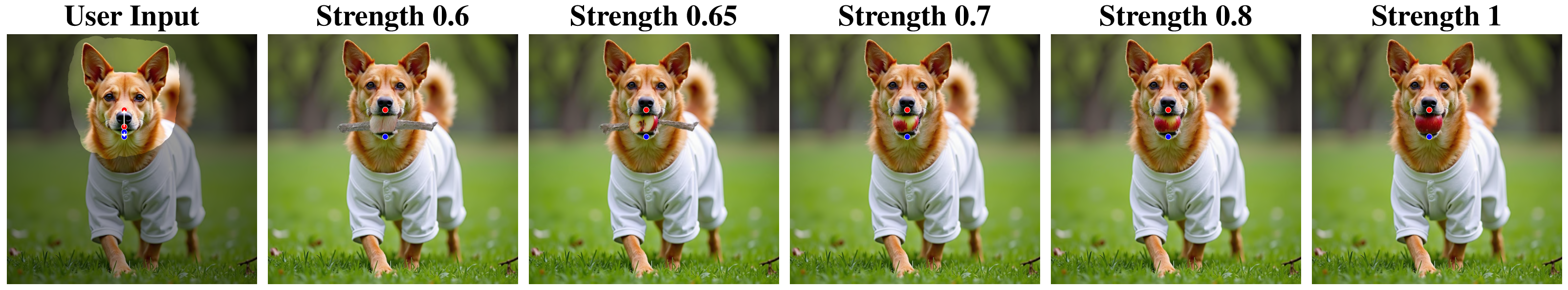

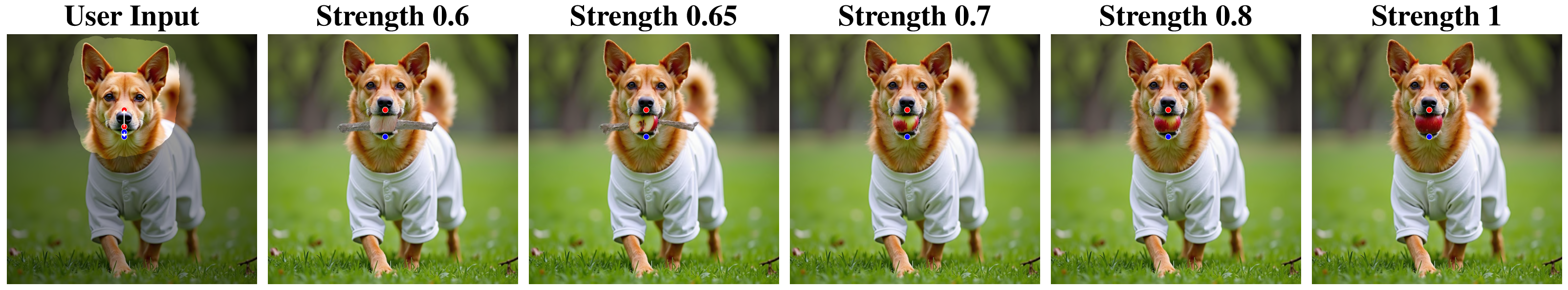

Figure 1: Effect of inversion strength. LazyDrag maintains edit fidelity and semantic consistency under full-strength inversion, unlike prior methods that degrade or fail.

Attention Control in MM-DiTs

LazyDrag leverages the architectural advantages of MM-DiTs, which provide tighter vision–text fusion and robust inversion. The method applies a two-part attention control mechanism in all single-stream attention layers:

- Attention Input Control:

- Background Preservation: For background regions, attention tokens (Q, K, V) are replaced with their cached originals from the inversion process, ensuring absolute preservation.

- Identity Preservation: For destination and transition regions, the cached tokens from the corresponding source points (as defined by the explicit map) are concatenated to the current tokens, providing a strong, correspondence-driven signal for identity preservation and smooth blending at boundaries.

- Attention Output Refinement:

- The attention output at each position is refined via a gated merge with the cached output from the corresponding source, weighted by the pre-computed matching strength and a time-dependent decay factor. This ensures precise control at handle points and natural relaxation in surrounding regions, eliminating the need for multi-step latent optimization.
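The two-part control can be illustrated with single-head attention over plain Python vectors. This is an illustrative sketch, not the MM-DiT implementation, and it rests on three assumptions:

- "Concatenated to the current tokens" is read as appending the cached source keys and values along the sequence axis.
- The time-dependent decay is a hypothetical linear schedule (`decay = t`).
- The names `controlled_attention`, `cache`, `source_of`, and `strength` are all hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def controlled_attention(Q, K, V, cache, region, source_of, strength, t):
    """Q, K, V:   per-token vectors at the current denoising step.
    cache:     {"Q", "K", "V", "out"} recorded during inversion at this step.
    region:    per-token label; only "bg" is special-cased here.
    source_of: {token: source token} from the explicit correspondence map.
    strength:  {token: pre-computed matching strength in [0, 1]}.
    t:         normalized timestep in [0, 1], 1 = pure noise.
    """
    n = len(Q)

    def keep_bg(current, name):
        # Background preservation: swap in the cached inversion tokens.
        return [cache[name][i] if region[i] == "bg" else current[i] for i in range(n)]

    Q, K, V = keep_bg(Q, "Q"), keep_bg(K, "K"), keep_bg(V, "V")
    # Identity preservation (assumed form): append cached K/V of mapped source tokens.
    sources = sorted(set(source_of.values()))
    K_ext = K + [cache["K"][s] for s in sources]
    V_ext = V + [cache["V"][s] for s in sources]
    out = [attend(q, K_ext, V_ext) for q in Q]
    # Output refinement: gated merge toward the cached output at the source token.
    decay = t  # hypothetical schedule: full gate at t = 1, none at t = 0
    for i, s in source_of.items():
        g = strength.get(i, 0.0) * decay
        out[i] = [(1.0 - g) * a + g * b for a, b in zip(out[i], cache["out"][s])]
    return out
```

Consider a mapped token with matching strength 1 at `t = 1`. Its output equals the cached source output exactly. As `t` approaches 0, the gate relaxes and the token's own attention output takes over. This mirrors, in simplified form, the "precise control at handle points and natural relaxation" described above.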

Full-Strength Inversion and Text Guidance

A key innovation is the ability to perform editing under full-strength inversion, which was previously unstable due to fragile attention-based matching. The explicit correspondence map stabilizes the process, enabling high-fidelity inpainting and robust text-guided generation. Ambiguities in drag instructions (e.g., where to move a hand) can be resolved via text prompts, allowing for context-aware, semantically meaningful edits.

Experimental Results

Quantitative and Qualitative Evaluation

LazyDrag is evaluated on DragBench, a standard benchmark for drag-based editing, and compared against eight baselines, including both TTO-based and TTO-free methods. Metrics include mean distance (MD) for drag accuracy and VIEScore (SC, PQ, O) for semantic consistency, perceptual quality, and overall performance.
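Once the final positions of the handle content are known, the drag-accuracy metric reduces to simple arithmetic. The sketch below assumes those tracked positions are already available. The point-tracking step (typically feature matching between the original and edited images) is not shown, and the name `mean_distance` is hypothetical. Lower MD means the dragged content landed closer to its targets.

```python
import math


def mean_distance(tracked, targets):
    """Mean distance (MD) sketch: average Euclidean distance, in pixels, between
    where each handle point's content ends up in the edited image (`tracked`)
    and its user-specified target point."""
    if not targets or len(tracked) != len(targets):
        raise ValueError("need exactly one tracked point per target")
    return sum(math.dist(p, q) for p, q in zip(tracked, targets)) / len(targets)


print(mean_distance([(10, 10), (20, 20)], [(13, 14), (20, 20)]))  # 2.5
```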

LazyDrag achieves state-of-the-art results across all metrics, outperforming both TTO-based and TTO-free baselines. Notably, it matches or exceeds the drag accuracy of TTO-based methods while maintaining superior perceptual quality and semantic consistency, all without per-image optimization.

(Figure 2)

Figure 2: Qualitative comparison on DragBench. LazyDrag preserves background and object identity while achieving precise geometric edits, outperforming baselines in both accuracy and visual fidelity.

A user study with 20 expert participants further confirms the superiority of LazyDrag, which achieved a 61.88% preference rate over all baselines.

Ablation Studies

Ablation experiments demonstrate the necessity of each component:

- Removing WTA and the explicit latent initialization increases MD and reduces perceptual quality, confirming the importance of robust correspondence and noise-based inpainting.

- Disabling background or identity preservation leads to color shifts, artifacts, and degraded semantic consistency.

- Replacing the explicit correspondence-driven attention control with attention-similarity matching (as in CharaConsist) causes a sharp drop in performance, especially under full-strength inversion.

Mode Flexibility

LazyDrag supports both drag and move/scale modes. The drag mode enables complex geometric transformations (e.g., 3D rotations, extensions), while the move mode better preserves fine details and identity. Both modes are robust, and the explicit correspondence map allows for future extension to more complex transformations.
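The move/scale mode can also feed an explicit map. The sketch below assumes this mode can be expressed as a scale of a selected region about its centroid followed by a translation, so every point of the region receives its correspondence directly instead of through a handle assignment. This parameterization and the name `move_scale_map` are hypothetical; the exact form of the move mode is not described here.

```python
def move_scale_map(region, offset, scale=1.0):
    """Hypothetical move/scale correspondence: every point p of the selected
    region maps to c + scale * (p - c) + offset, with c the region centroid."""
    n = len(region)
    cx = sum(x for x, _ in region) / n
    cy = sum(y for _, y in region) / n
    return {
        (x, y): (cx + scale * (x - cx) + offset[0], cy + scale * (y - cy) + offset[1])
        for x, y in region
    }


square = [(0, 0), (2, 0), (0, 2), (2, 2)]
mapping = move_scale_map(square, offset=(10, 0), scale=2.0)
print(mapping[(0, 0)], mapping[(2, 2)])  # (9.0, -1.0) (13.0, 3.0)
```

Because the whole region moves rigidly (up to scale), relative positions inside it are kept. This is one plausible reason a move mode would preserve fine detail and identity better than per-point dragging.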

Implementation Considerations

- Architecture: LazyDrag is implemented on top of FLUX.1 Krea-dev (an MM-DiT backbone), using the UniEdit-Flow inversion method. All modifications are confined to single-stream attention layers for efficiency.

- Resource Requirements: The method is training-free and does not require per-image optimization, making it suitable for interactive applications and scalable deployment.

- Limitations: Performance is bounded by the underlying base model and VAE compression. Very small drag distances or overlapping target points may still introduce artifacts, though these are mitigated by tuning the activation timestep and leveraging text guidance.

Implications and Future Directions

LazyDrag demonstrates that the perceived trade-off between edit stability and generative quality in drag-based editing is not fundamental, but rather a consequence of flawed implicit point matching. By introducing explicit correspondence-driven attention control, the method unlocks the full generative capacity of MM-DiTs for interactive editing, supporting complex, semantically guided, and high-fidelity edits without TTO.

This paradigm shift has several implications:

- Unified Editing Frameworks: The explicit correspondence map can be extended to other forms of spatial control, including region-based, multi-object, and video editing.

- Integration with Text and Multimodal Guidance: The approach naturally supports joint spatial and semantic control, paving the way for more intuitive, multimodal creative workflows.

- Scalability and Deployment: The training-free, efficient design is well-suited for real-world deployment in creative tools and user-facing applications.

Future work may explore more sophisticated matching strategies (e.g., 2D/3D transformations), integration with improved base models, and extension to video and 3D content.

Conclusion

LazyDrag establishes a new state-of-the-art in drag-based editing by introducing explicit correspondence-driven attention control for MM-DiTs. It achieves robust, high-fidelity, and semantically consistent edits under full-strength inversion, without the need for test-time optimization. This work provides a principled foundation for future research in interactive generative editing and multimodal control, with broad implications for both theoretical understanding and practical deployment.