- The paper presents a compact GRU-based recurrent policy that generalizes to unseen quadrotor platforms through zero-shot adaptation.

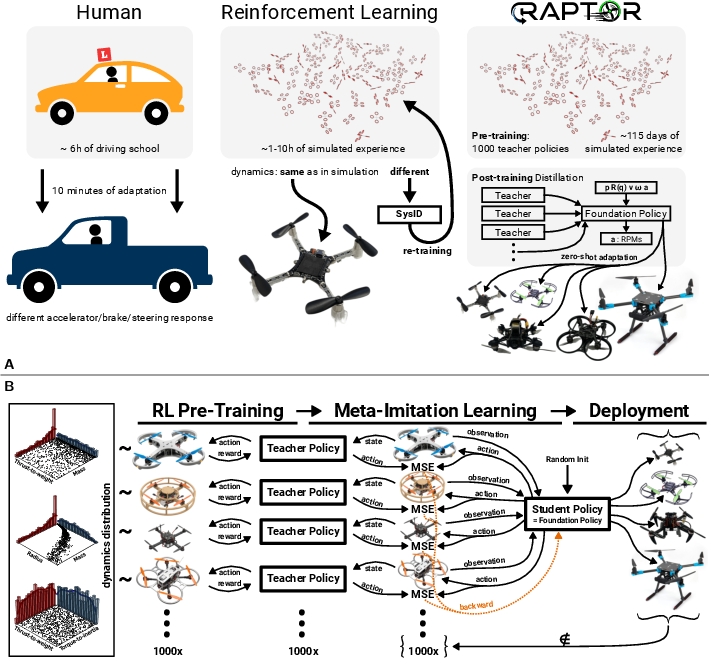

- It employs meta-imitation learning with a dual-phase training pipeline, using 1000 teacher policies to distill robust control behavior.

- Experimental results validate RAPTOR's emergent system identification and its reliable performance across diverse real-world and simulated conditions.

RAPTOR: A Foundation Policy for Quadrotor Control

Overview and Motivation

The RAPTOR framework introduces a highly adaptive, end-to-end neural network policy for quadrotor control, designed to generalize across a wide spectrum of quadrotor platforms and dynamic conditions. The central innovation is the training of a single, compact recurrent policy capable of zero-shot adaptation to unseen quadrotors, leveraging in-context learning via recurrence. This approach addresses the limitations of conventional RL-based controllers, which typically overfit to specific platforms and require retraining or explicit system identification for even minor hardware changes.

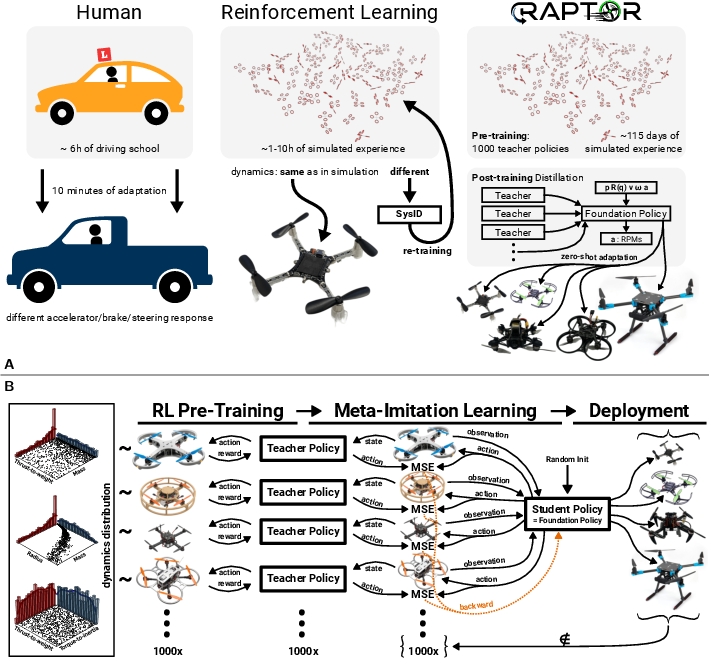

Figure 1: (A) Motivation, comparing the adaptation capabilities of humans, RL-based policies, and RAPTOR; (B) RAPTOR architecture overview.

Methodology

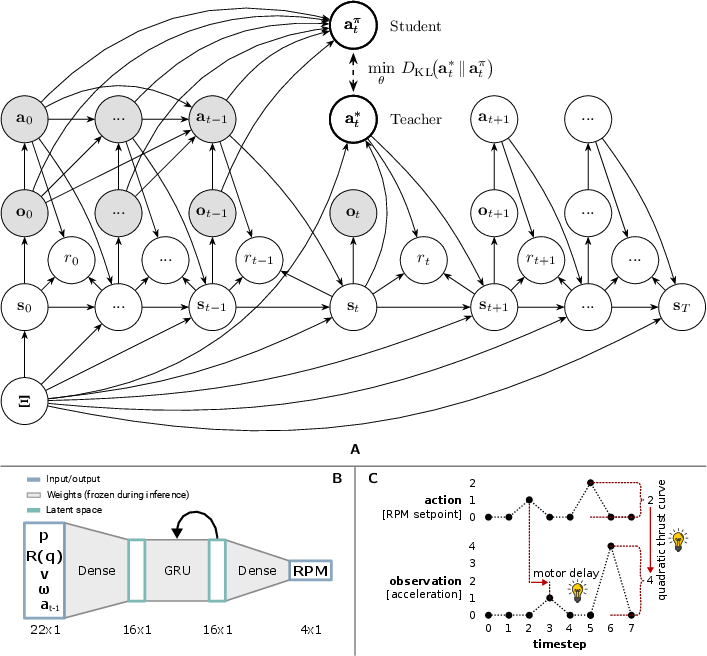

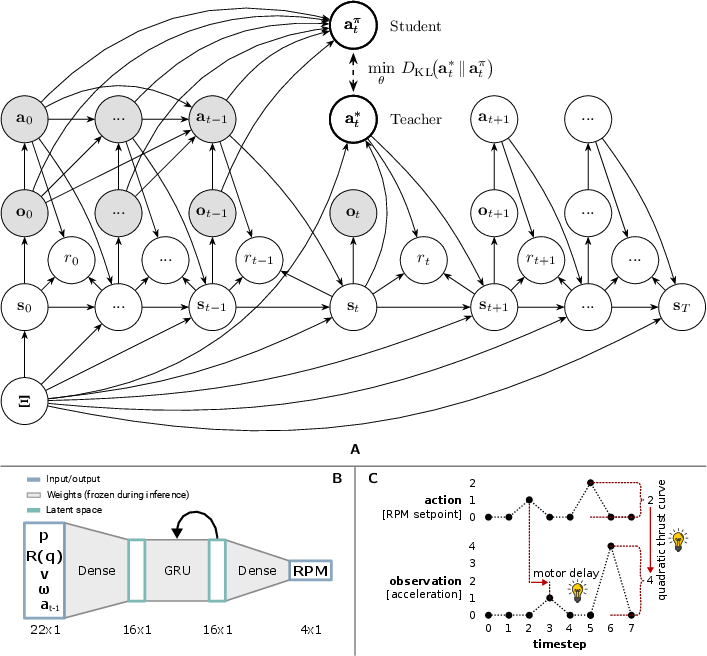

Quadrotor control is formalized as a Bayes Adaptive POMDP, with the RAPTOR policy derived from probabilistic graphical modeling principles. The architecture consists of a three-layer GRU-based recurrent neural network with only 2084 parameters, enabling deployment on resource-constrained microcontrollers while maintaining real-time inference capabilities.
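To make the scale of such a policy concrete, the following is a minimal sketch of a dense-GRU-dense recurrent policy in plain Python. The layer arrangement, the observation size of 22, the tanh activations, and the single-bias GRU convention are all assumptions made for illustration. Only the hidden dimension of 16 and the four motor outputs of a quadrotor are taken from the text.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

class Dense:
    def __init__(self, n_in, n_out, rng):
        self.W = rand_matrix(n_out, n_in, rng)
        self.b = [0.0] * n_out

    def __call__(self, x):
        return [a + b for a, b in zip(matvec(self.W, x), self.b)]

    def num_params(self):
        return len(self.W) * len(self.W[0]) + len(self.b)

class GRUCell:
    """GRU cell in the original formulation, with one bias vector per gate."""

    def __init__(self, n_in, n_hidden, rng):
        # One (input weights, recurrent weights, bias) triple per gate:
        # z = update gate, r = reset gate, n = candidate state.
        self.gates = {
            g: (rand_matrix(n_hidden, n_in, rng),
                rand_matrix(n_hidden, n_hidden, rng),
                [0.0] * n_hidden)
            for g in "zrn"
        }

    def _affine(self, gate, x, h):
        W, U, b = self.gates[gate]
        return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), b)]

    def __call__(self, x, h):
        z = [sigmoid(v) for v in self._affine("z", x, h)]
        r = [sigmoid(v) for v in self._affine("r", x, h)]
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        n = [math.tanh(v) for v in self._affine("n", x, reset_h)]
        # Blend the previous state with the candidate state.
        return [(1.0 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]

    def num_params(self):
        return sum(len(W) * len(W[0]) + len(U) * len(U[0]) + len(b)
                   for W, U, b in self.gates.values())

class RecurrentPolicy:
    """Hypothetical three-layer policy: dense input, GRU, dense output."""

    def __init__(self, obs_dim, hidden_dim, act_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        self.inp = Dense(obs_dim, hidden_dim, rng)
        self.gru = GRUCell(hidden_dim, hidden_dim, rng)
        self.out = Dense(hidden_dim, act_dim, rng)

    def initial_state(self):
        return [0.0] * self.hidden_dim

    def step(self, obs, h):
        x = [math.tanh(v) for v in self.inp(obs)]
        h = self.gru(x, h)                      # the hidden state carries the context
        action = [math.tanh(v) for v in self.out(h)]
        return action, h

    def num_params(self):
        return self.inp.num_params() + self.gru.num_params() + self.out.num_params()

# Assumed sizes: 22 observations, hidden dimension 16, 4 motor commands.
policy = RecurrentPolicy(obs_dim=22, hidden_dim=16, act_dim=4)
```

With these assumed sizes, `policy.num_params()` evaluates to 2020, which is close to but not the same as the 2084 parameters quoted above. The exact figure depends on the real input layout and bias convention, neither of which is specified here.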

Figure 2: (A) Bayesian network for quadrotor dynamics/control; (B) RAPTOR policy network architecture; (C) Illustration of emergent system identification via input/output reasoning.

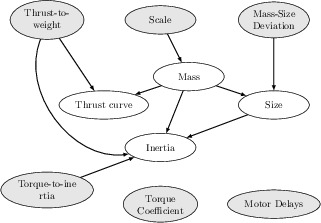

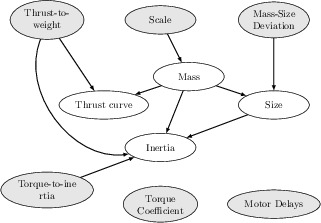

Domain Randomization and Sampling

A physically plausible, factorized distribution over quadrotor dynamics parameters is constructed, covering mass, geometry, inertia, thrust curves, torque coefficients, and motor delays. Ancestral sampling is used to efficiently generate diverse quadrotor instances for training, ensuring broad coverage of real-world platforms.
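As a rough illustration of ancestral sampling, the sketch below draws parent variables first and then samples each child conditioned on its parents. The graph structure, all numeric ranges, and the scaling laws are hypothetical placeholders rather than the distribution used for RAPTOR. The mass and thrust-to-weight ranges were picked only to loosely bracket the platforms mentioned later in the text.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2

def sample_quadrotor(rng):
    """Draw one quadrotor by ancestral sampling (hypothetical distribution)."""
    # Root nodes: variables without parents in the assumed graph.
    mass = math.exp(rng.uniform(math.log(0.03), math.log(2.5)))      # kg, log-uniform
    thrust_to_weight = rng.uniform(1.5, 12.0)                         # dimensionless
    motor_time_constant = rng.uniform(0.02, 0.15)                     # s

    # Child nodes: each is sampled conditioned on already-drawn parents.
    arm_length = 0.15 * mass ** (1.0 / 3.0) * rng.uniform(0.7, 1.3)   # m, cube-root size scaling
    max_thrust_per_motor = thrust_to_weight * mass * G / 4.0          # N, four identical motors
    inertia_xy = 0.5 * mass * arm_length ** 2 * rng.uniform(0.5, 1.5) # kg m^2, roll/pitch axes
    inertia_z = inertia_xy * rng.uniform(1.5, 2.0)                    # kg m^2, yaw axis
    torque_coefficient = rng.uniform(0.005, 0.03)                     # m, yaw torque per unit thrust

    return {
        "mass": mass,
        "thrust_to_weight": thrust_to_weight,
        "motor_time_constant": motor_time_constant,
        "arm_length": arm_length,
        "max_thrust_per_motor": max_thrust_per_motor,
        "inertia_xy": inertia_xy,
        "inertia_z": inertia_z,
        "torque_coefficient": torque_coefficient,
    }

rng = random.Random(0)
quadrotors = [sample_quadrotor(rng) for _ in range(1000)]
```

Sampling in parent-to-child order keeps every draw physically consistent. For example, the per-motor thrust always matches the sampled mass and thrust-to-weight ratio, which independent sampling of each parameter would not guarantee.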

Figure 3: Probabilistic graphical model for ancestral sampling of quadrotors.

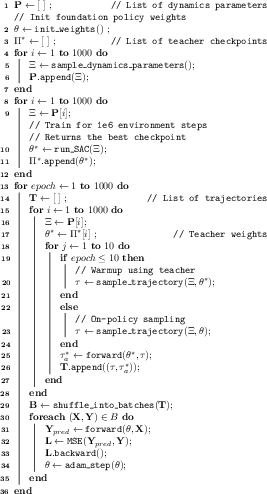

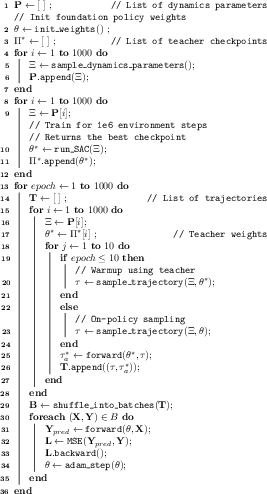

The training pipeline is divided into two phases:

- Pre-Training: 1000 teacher policies are trained via RL, each specialized for a sampled quadrotor. Teachers are overparameterized for robust convergence and observe full state information.

- Meta-Imitation Learning: The behaviors of all teachers are distilled into a single student policy. The student, lacking explicit knowledge of system parameters, must infer relevant dynamics from observation-action histories. On-policy imitation learning is employed, minimizing the MSE between student and teacher actions.
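The on-policy imitation objective from the second phase can be sketched on a toy problem. In the code below, the scalar system, its unknown gain, and the two example students are hypothetical stand-ins for quadrotor dynamics and the recurrent policy. The gradient update is omitted: the sketch only shows how the student's own actions drive the rollout while the teacher labels each visited state, and how the MSE is accumulated.

```python
def make_system(gain):
    """Toy scalar system x' = x + gain * u, with a teacher that knows the gain."""
    def step_fn(x, u):
        return x + gain * u

    def teacher(x):
        return -0.5 * x / gain   # privileged: uses the true dynamics parameter

    return step_fn, teacher

def rollout_imitation_loss(student, teacher, step_fn, x0, horizon):
    """Roll out the student, label visited states with the teacher, return the MSE."""
    x, history, squared_error = x0, [], 0.0
    for _ in range(horizon):
        u_student = student(history, x)      # sees only past states/actions and the current state
        u_teacher = teacher(x)               # label for the state the student actually reached
        squared_error += (u_student - u_teacher) ** 2
        history.append((x, u_student))
        x = step_fn(x, u_student)            # on-policy: the student's action is applied
    return squared_error / horizon

def meta_imitation_loss(student, gains, horizon=20, x0=1.0):
    """Average the imitation loss over a set of sampled systems and their teachers."""
    losses = []
    for gain in gains:
        step_fn, teacher = make_system(gain)
        losses.append(rollout_imitation_loss(student, teacher, step_fn, x0, horizon))
    return sum(losses) / len(losses)

def blind_student(history, x):
    """Ignores the history and always assumes gain = 1."""
    return -0.5 * x

def adaptive_student(history, x):
    """Infers the gain from the most recent informative transition in the history."""
    gain_estimate = 1.0                      # prior guess before any evidence
    states = [s for s, _ in history] + [x]
    for (x_prev, u_prev), x_next in zip(history, states[1:]):
        if u_prev != 0.0:
            gain_estimate = (x_next - x_prev) / u_prev
    return -0.5 * x / gain_estimate

gains = [0.5, 1.0, 1.5]
blind_loss = meta_imitation_loss(blind_student, gains)
adaptive_loss = meta_imitation_loss(adaptive_student, gains)
```

On this toy problem the history-aware student matches its teachers after a single step, so `adaptive_loss` comes out lower than `blind_loss`. A trained recurrent student would have to learn this kind of inference from the imitation signal rather than have it hard-coded.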

Figure 4: Meta-Imitation Learning algorithm schematic.

Experimental Results

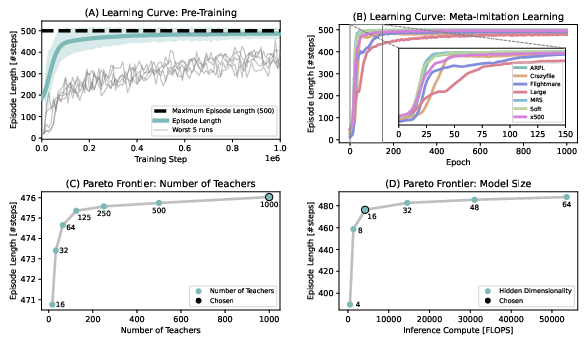

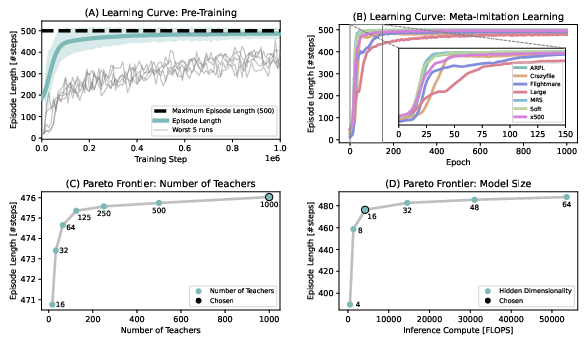

Training Dynamics and Scaling

Pre-training reliably converges for all 1000 teachers, with robust episode lengths achieved after 100k steps. Meta-Imitation Learning enables the student policy to generalize to unseen quadrotors, with performance converging after ~1000 epochs. Scaling studies reveal that a hidden dimension of 16 suffices for high performance, and increasing the number of teachers improves generalization.

Figure 5: (A) Pre-training learning curve; (B) Meta-imitation learning curve; (C) Pareto frontier: performance vs. number of teachers; (D) Pareto frontier: performance vs. policy size.

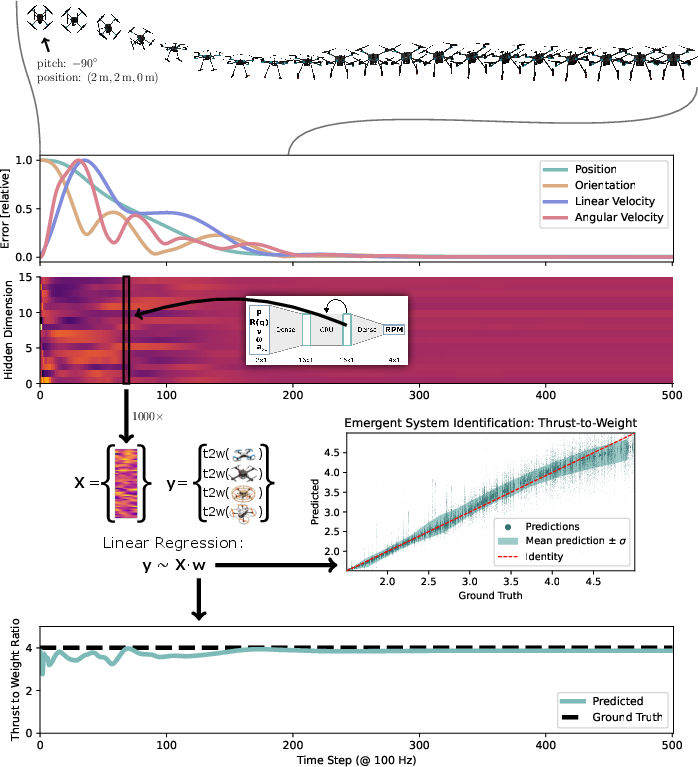

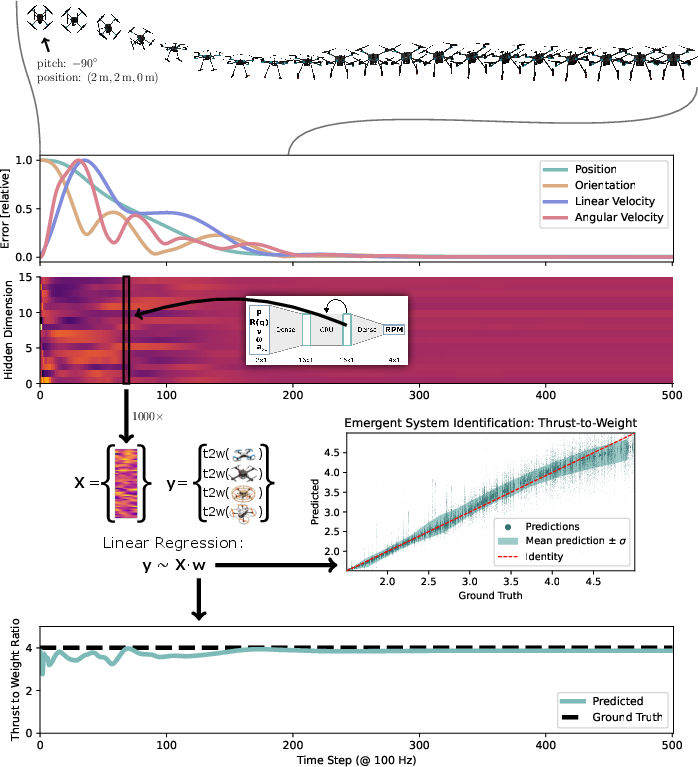

Emergent System Identification

The RAPTOR policy demonstrates emergent, implicit system identification. Linear probing of the latent state reveals strong predictive power for thrust-to-weight ratio (R² = 0.949, MSE = 0.047), indicating that the policy encodes relevant dynamics in its hidden state through in-context learning.
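For reference, a linear probe and the two reported metrics can be written as follows, assuming an ordinary least-squares fit (the fitting procedure is not specified here). The symbols are introduced for illustration: $h_i$ is the hidden state for sample $i$, $y_i$ is the true thrust-to-weight ratio, and $\bar{y}$ is its mean over the $N$ samples.

```latex
\hat{y}_i = w^\top h_i + b,
\qquad
(w, b) = \arg\min_{w,\,b} \; \frac{1}{N} \sum_{i=1}^{N} \left( y_i - w^\top h_i - b \right)^2,

\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2,
\qquad
R^2 = 1 - \frac{\sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{N} \left( y_i - \bar{y} \right)^2}.
```

Because the probe is linear, a high R² indicates that the quantity can be read out of the hidden state directly, without any further nonlinear processing.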

Figure 6: Recovery from adverse initial condition; latent state trajectory and linear probe for system identification.

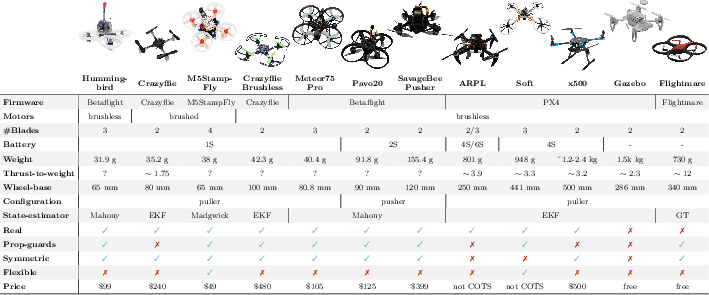

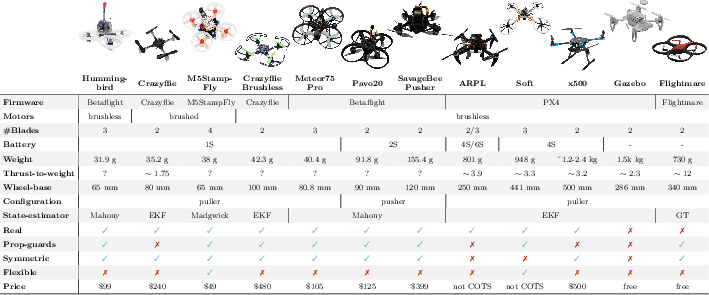

Real-World and Simulated Deployment

RAPTOR is deployed on 10 real quadrotors and 2 simulators, spanning a wide range of weights (32 g to 2.4 kg), thrust-to-weight ratios (1.75 to 12), motor types, frame rigidity, and flight controllers. The policy adapts zero-shot to both in-distribution and out-of-distribution platforms, including flexible frames and mixed propeller configurations.

Figure 7: Diverse set of 10 real and 2 simulated quadrotors used in experiments.

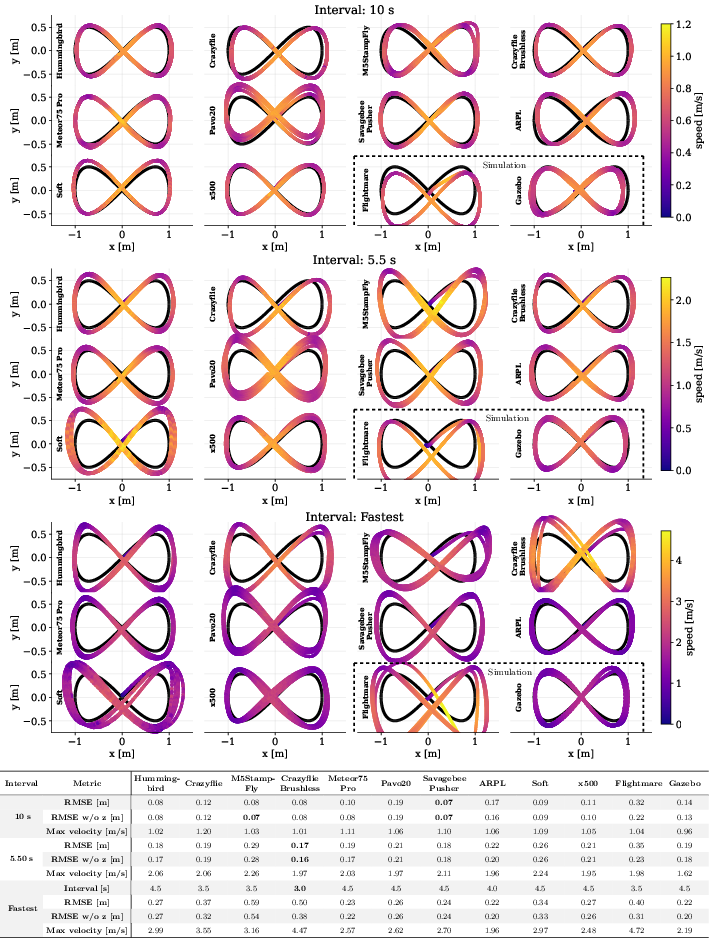

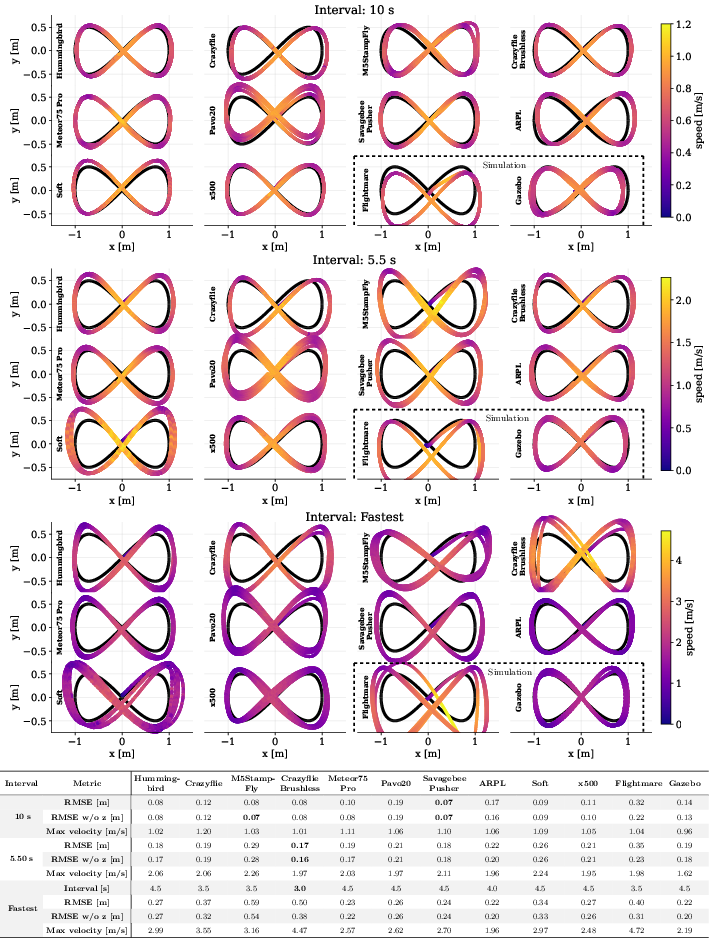

Trajectory Tracking and Robustness

Trajectory tracking experiments show that RAPTOR achieves tracking RMSE comparable to state-of-the-art dedicated policies, with robust performance across all platforms. The policy generalizes to context windows longer than those seen during training and maintains repeatable performance over extended flights.
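A tracking RMSE of this kind can be computed as in the short sketch below. It assumes the error is the Euclidean distance between reference and measured positions at matching time steps. That definition is an assumption, and the function name is hypothetical.

```python
import math

def tracking_rmse(reference, actual):
    """Root-mean-square Euclidean position error over a trajectory."""
    if len(reference) != len(actual) or not reference:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((r - a) ** 2 for r, a in zip(p_ref, p_act))   # squared distance at one time step
        for p_ref, p_act in zip(reference, actual)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Two-sample example with positions in meters.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
actual = [(0.0, 0.0, 0.3), (1.0, 0.4, 0.0)]
rmse = tracking_rmse(reference, actual)
```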

Figure 8: Trajectory tracking results for all quadrotors.

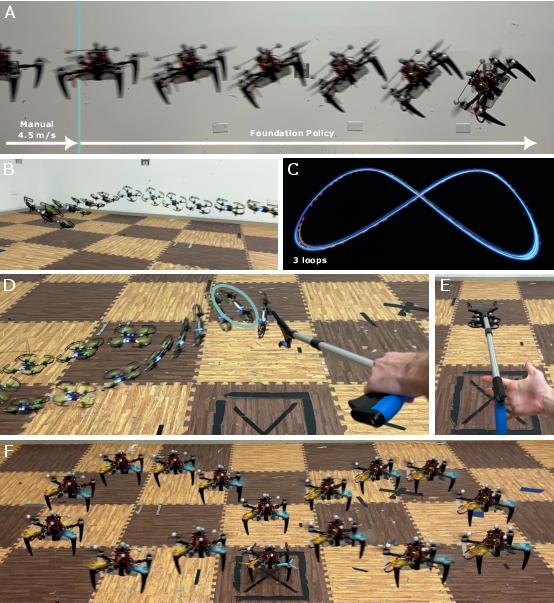

Disturbance Recovery and Adaptation

RAPTOR exhibits rapid recovery from aggressive initial states, wind disturbances, physical poking, and payload changes. The policy adapts to mixed propeller configurations and maintains stable flight under significant perturbations.

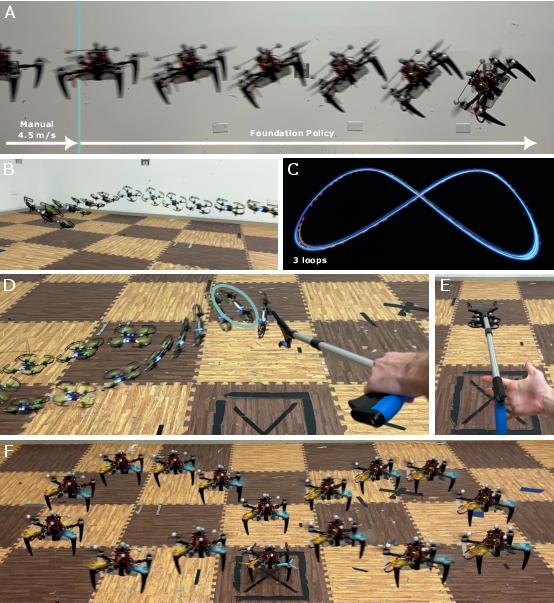

Figure 9: RAPTOR policy performance under various disturbances and configurations.

Computational Considerations

Separating pre-training from meta-imitation learning makes the pipeline easy to parallelize. Because each teacher is trained independently, pre-training is embarrassingly parallel and can be distributed across many cores, while meta-imitation learning requires orders of magnitude less compute. The compact policy size allows deployment on microcontrollers with <10% CPU utilization at high control frequencies.

Theoretical and Practical Implications

RAPTOR demonstrates that a small, recurrent neural policy can achieve robust, zero-shot adaptation to a wide range of quadrotor platforms, challenging the notion that end-to-end neural policies are fundamentally limited by Sim2Real gaps. The emergent system identification in the latent state suggests that meta-learning via in-context reasoning is a viable alternative to explicit system identification or per-platform retraining.

The framework's reproducibility, open-source codebase, and ease of integration into existing flight controllers position RAPTOR as a strong baseline for future research in adaptive robotic control.

Future Directions

Potential avenues for extension include:

- Incorporating reward function variability for broader task generalization.

- Scaling to more complex aerial vehicles and multi-agent scenarios.

- Integrating trajectory lookahead for improved agile tracking.

- Exploring attention-based architectures for longer context windows.

Conclusion

RAPTOR establishes a principled, practical approach for training foundation policies in quadrotor control, achieving robust zero-shot adaptation, emergent system identification, and efficient deployment. The results suggest that meta-imitation learning with broad domain randomization and recurrence is a powerful paradigm for adaptive control in robotics, with significant implications for both theory and real-world applications.