- The paper introduces Probabilistic Structure Integration (PSI), which extracts intermediate structures zero-shot and integrates them back into the model to enhance world modeling.

- It employs a Local Random-Access Sequence (LRAS) model that supports both sequential and parallel sampling, enabling scalable and controlled generation.

- The approach facilitates explicit uncertainty estimation and dynamic scene generation, demonstrating applications in video editing and robotic motion planning.

Probabilistic Structure Integration: A Framework for Controllable World Modeling

Introduction

"World Modeling with Probabilistic Structure Integration" presents Probabilistic Structure Integration (PSI), a unified framework for constructing richly controllable, promptable world models from raw data. PSI is designed to overcome the limitations of current generative and world models in non-linguistic domains, particularly vision, by enabling flexible querying, precise control, and continual self-improvement. The approach is built on a three-step cycle: (1) probabilistic prediction via a random-access autoregressive sequence model, (2) zero-shot extraction of meaningful intermediate structures through causal inference, and (3) integration of these structures as new token types, expanding the model’s control surfaces and predictive fidelity.

Probabilistic Prediction via LRAS

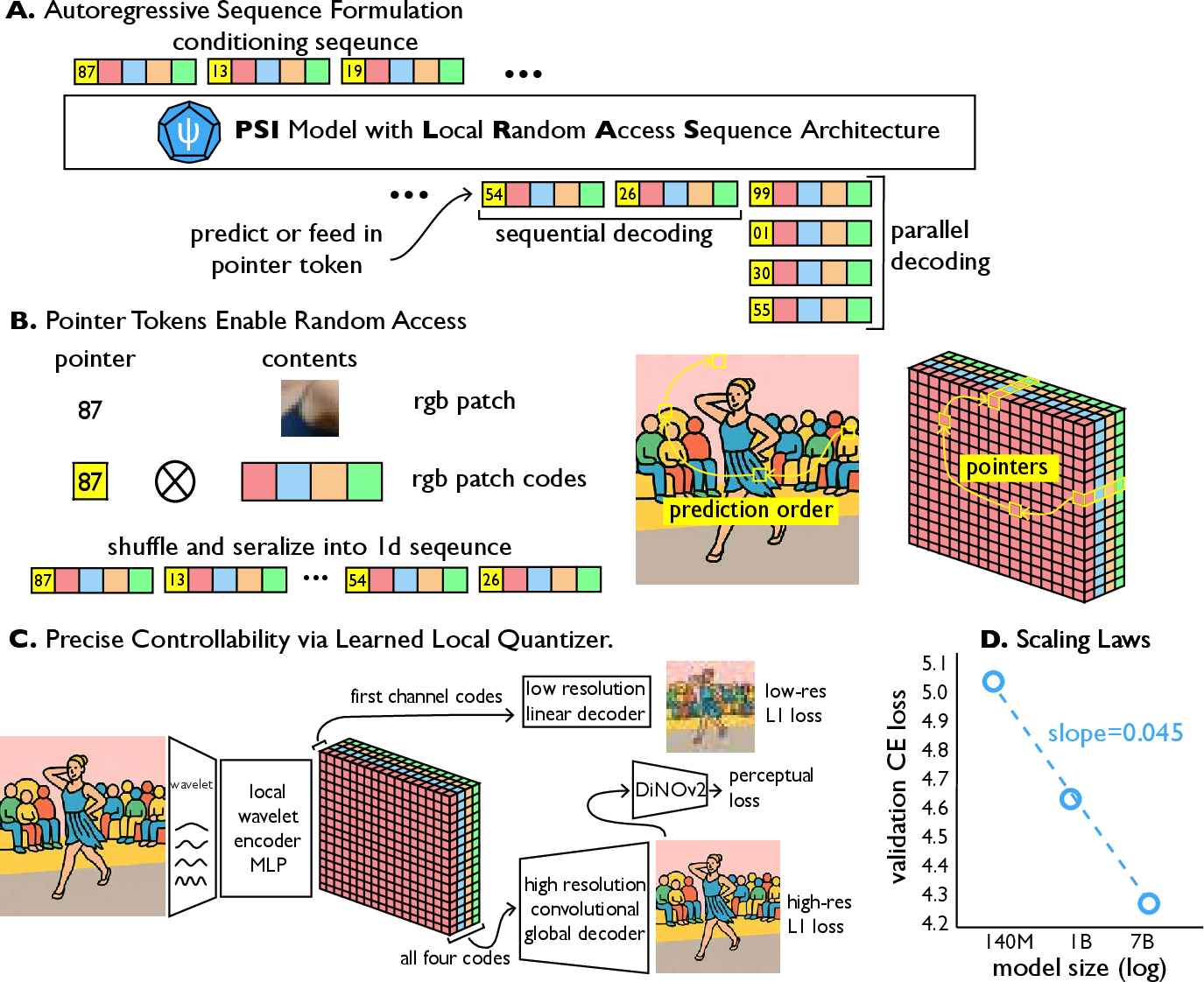

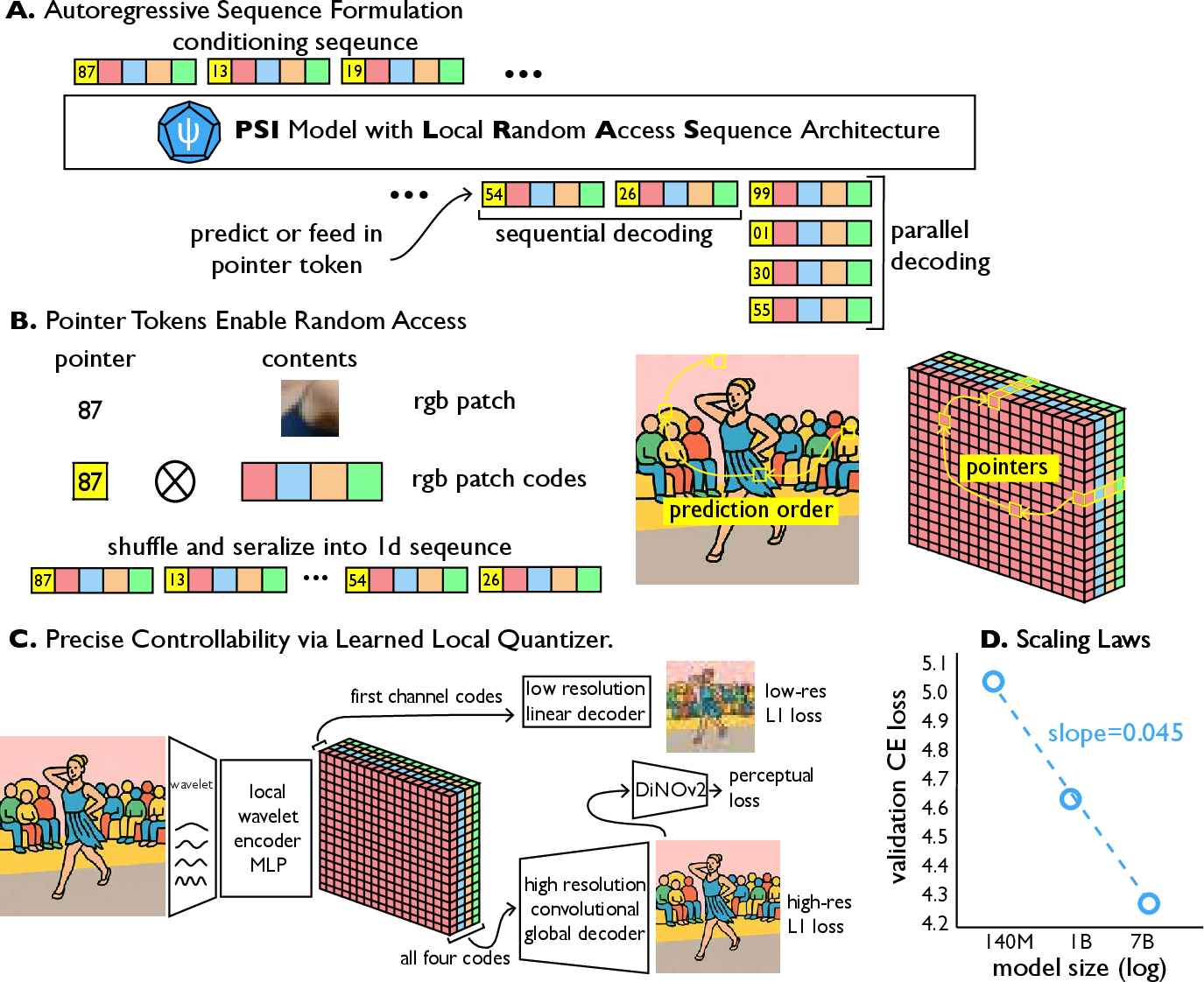

The core of PSI is the Ψ model, which learns a probabilistic graphical model over local variables (e.g., spatiotemporal patches in video). The Local Random-Access Sequence (LRAS) architecture enables scalable learning by serializing pointer-content pairs into sequences, allowing arbitrary conditioning and random access. This formulation leverages autoregressive transformers, pointer tokens for flexible conditioning, and a Hierarchical Local Quantizer (HLQ) for strict locality in tokenization.

Figure 1: LRAS architecture: HLQ encodes local patches, pointer-content representation enables random access, and an autoregressive transformer models the serialized sequence.
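To make the pointer-content idea concrete, here is a minimal Python sketch. It assumes a hypothetical flat vocabulary in which pointer tokens take ids `0..num_positions-1` and content (quantizer code) tokens are shifted by `num_positions`. Each patch contributes one pointer followed by its content codes. The names `serialize`/`deserialize` and the vocabulary layout are illustrative, not the paper's. Because every content run carries its own address, patches can be emitted in any order, which is what lets an arbitrary subset act as conditioning.

```python
import random
from typing import Dict, List, Sequence

def serialize(patch_codes: Sequence[Sequence[int]], order: Sequence[int],
              num_positions: int) -> List[int]:
    """Flatten patches into a pointer-content token sequence.

    patch_codes[p] holds the (hypothetical) quantizer codes for the patch at position p.
    Pointer ids live in [0, num_positions); content ids are shifted by num_positions
    so the two token types never collide.
    """
    seq: List[int] = []
    for pos in order:
        seq.append(pos)                                          # pointer: where
        seq.extend(num_positions + c for c in patch_codes[pos])  # content: what
    return seq

def deserialize(seq: Sequence[int], num_positions: int,
                codes_per_patch: int) -> Dict[int, List[int]]:
    """Invert serialize(): recover {position: codes} whatever order was used."""
    out: Dict[int, List[int]] = {}
    step = 1 + codes_per_patch
    for i in range(0, len(seq), step):
        out[seq[i]] = [t - num_positions for t in seq[i + 1:i + step]]
    return out

if __name__ == "__main__":
    codes = [[3, 9], [5, 0], [7, 2], [1, 1]]   # 4 patches, 2 codes each
    order = list(range(len(codes)))
    random.Random(0).shuffle(order)            # random access: any order is valid
    seq = serialize(codes, order, num_positions=4)
    assert deserialize(seq, 4, 2) == {p: codes[p] for p in range(4)}
    print(order, seq)
```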

LRAS supports both sequential and parallel generation, trading quality against efficiency. Sequential sampling maximizes consistency because each new patch attends to all previously generated patches, while parallel sampling speeds up inference by treating the patches generated together as conditionally independent given the context. The architecture also shows LLM-like scaling behavior: validation loss decreases monotonically with model size up to 7B parameters.
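The quality/efficiency trade-off can be illustrated with a toy sampler. This is a sketch under stated assumptions, not LRAS itself: `toy_model` is a hypothetical stand-in that, for each queried pointer, copies the value of the nearest revealed patch with probability 0.9. Sequential sampling calls the model once per target and feeds each sample back into the context; parallel sampling calls it once and draws all targets independently from that single set of distributions.

```python
import random
from typing import Callable, Dict, List, Tuple

# A "model" maps (revealed patches, queried pointers) -> categorical distribution per query.
Model = Callable[[Dict[int, int], List[int]], Dict[int, List[float]]]

def draw(probs: List[float], rng: random.Random) -> int:
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def sequential_sample(model: Model, context: Dict[int, int], targets: List[int],
                      rng: random.Random) -> Tuple[Dict[int, int], int]:
    """One model call per target; each sampled patch joins the context for the next call."""
    ctx = dict(context)
    for t in targets:
        ctx[t] = draw(model(ctx, [t])[t], rng)
    return ctx, len(targets)

def parallel_sample(model: Model, context: Dict[int, int], targets: List[int],
                    rng: random.Random) -> Tuple[Dict[int, int], int]:
    """One model call for all targets; they are drawn independently given the context."""
    dists = model(dict(context), targets)
    ctx = dict(context)
    for t in targets:
        ctx[t] = draw(dists[t], rng)
    return ctx, 1

def toy_model(ctx: Dict[int, int], queries: List[int]) -> Dict[int, List[float]]:
    """Hypothetical 1-D scene with binary patches: copy the nearest revealed patch w.p. 0.9."""
    out: Dict[int, List[float]] = {}
    for q in queries:
        if not ctx:
            out[q] = [0.5, 0.5]
            continue
        nearest = min(ctx, key=lambda p: abs(p - q))
        out[q] = [0.9, 0.1] if ctx[nearest] == 0 else [0.1, 0.9]
    return out

if __name__ == "__main__":
    rng = random.Random(0)
    targets = list(range(1, 8))
    _, seq_calls = sequential_sample(toy_model, {0: 1}, targets, rng)
    _, par_calls = parallel_sample(toy_model, {0: 1}, targets, rng)
    print(seq_calls, par_calls)  # 7 1
```

In the sequential case, each newly sampled patch can influence its neighbors' predictions; in the parallel case, every target sees only the original context, which is cheaper but can produce mutually inconsistent patches.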

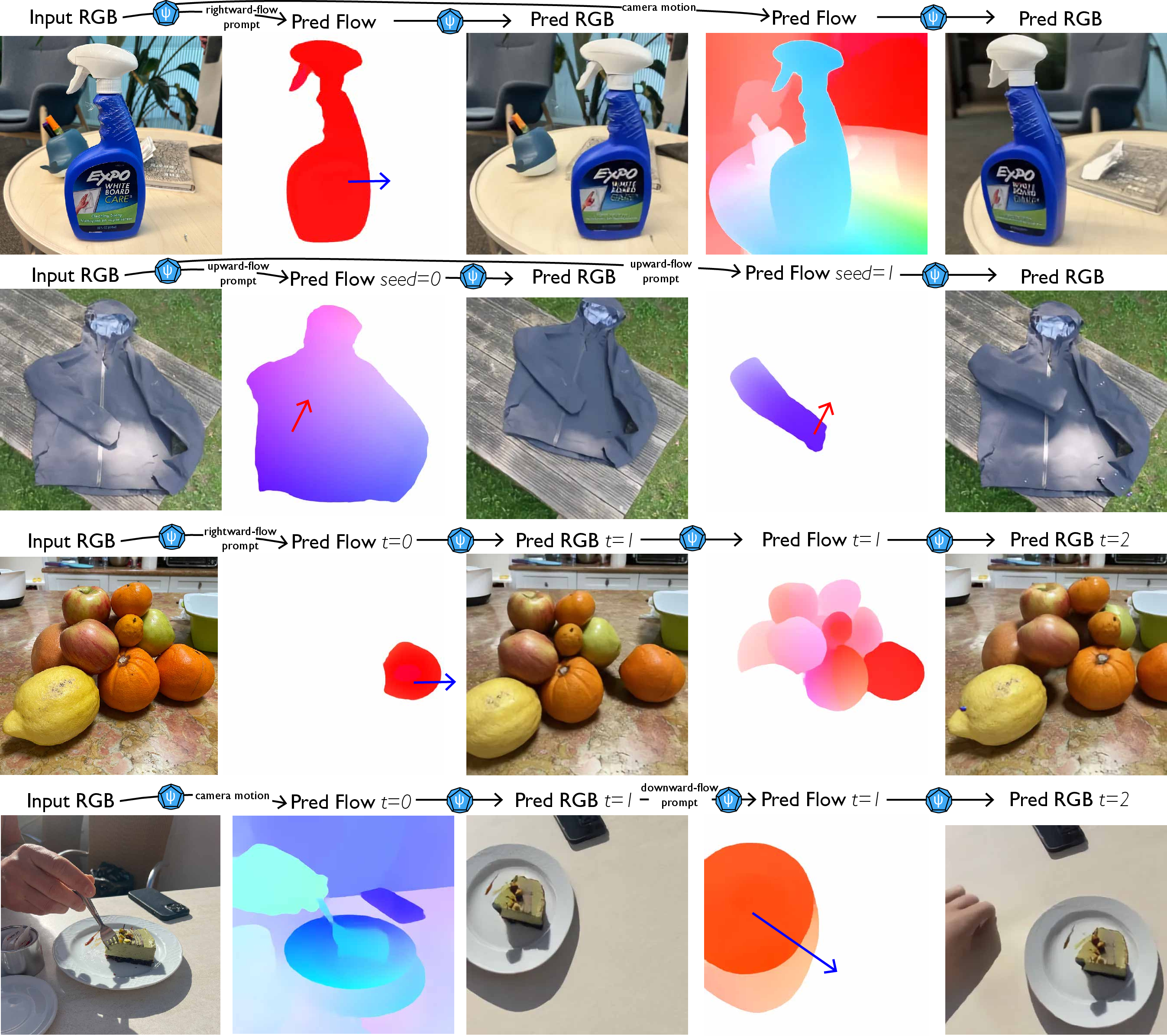

Rich Inference Pathways and Controllability

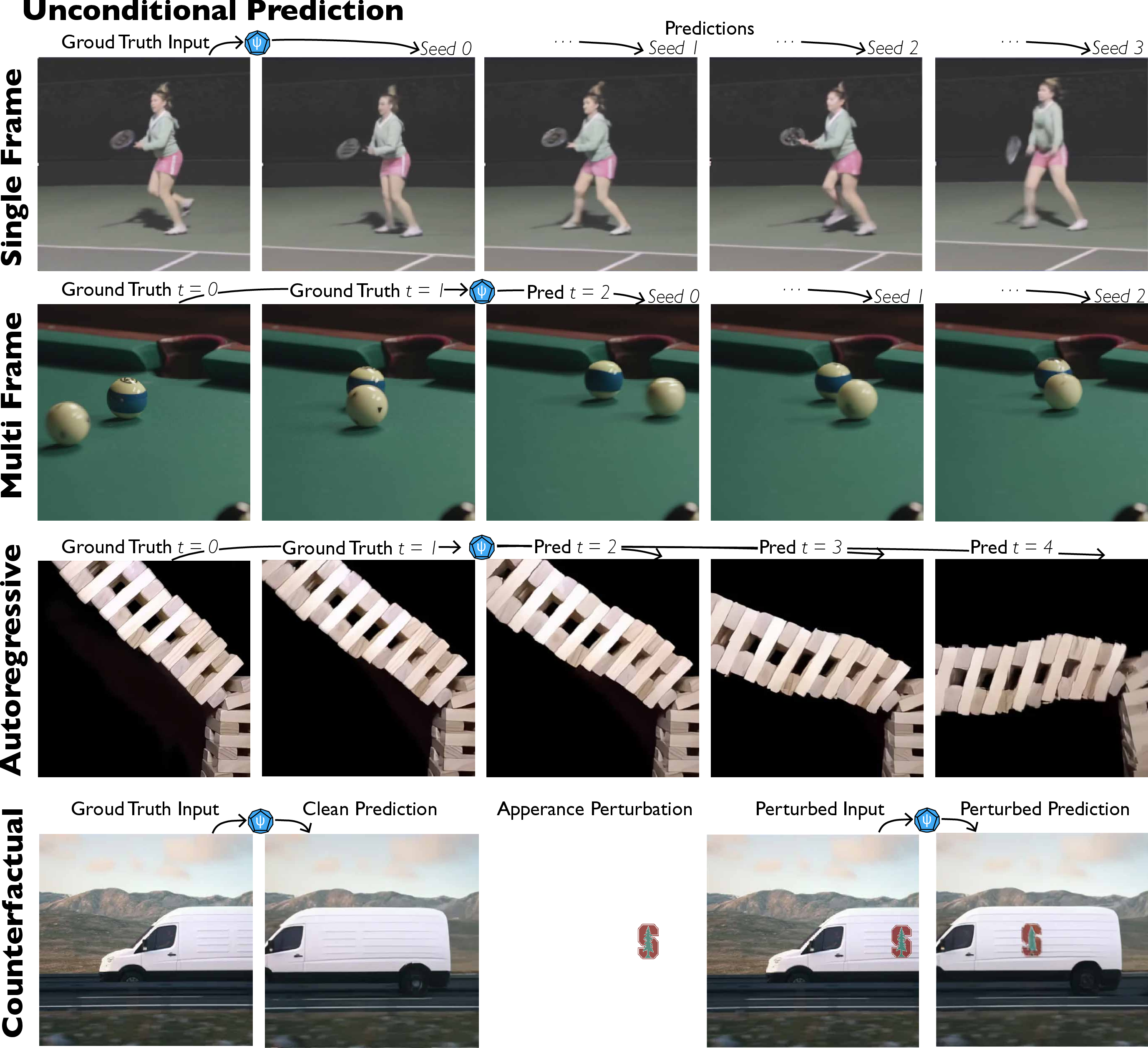

The pointer-based conditioning mechanism of LRAS enables diverse inference pathways:

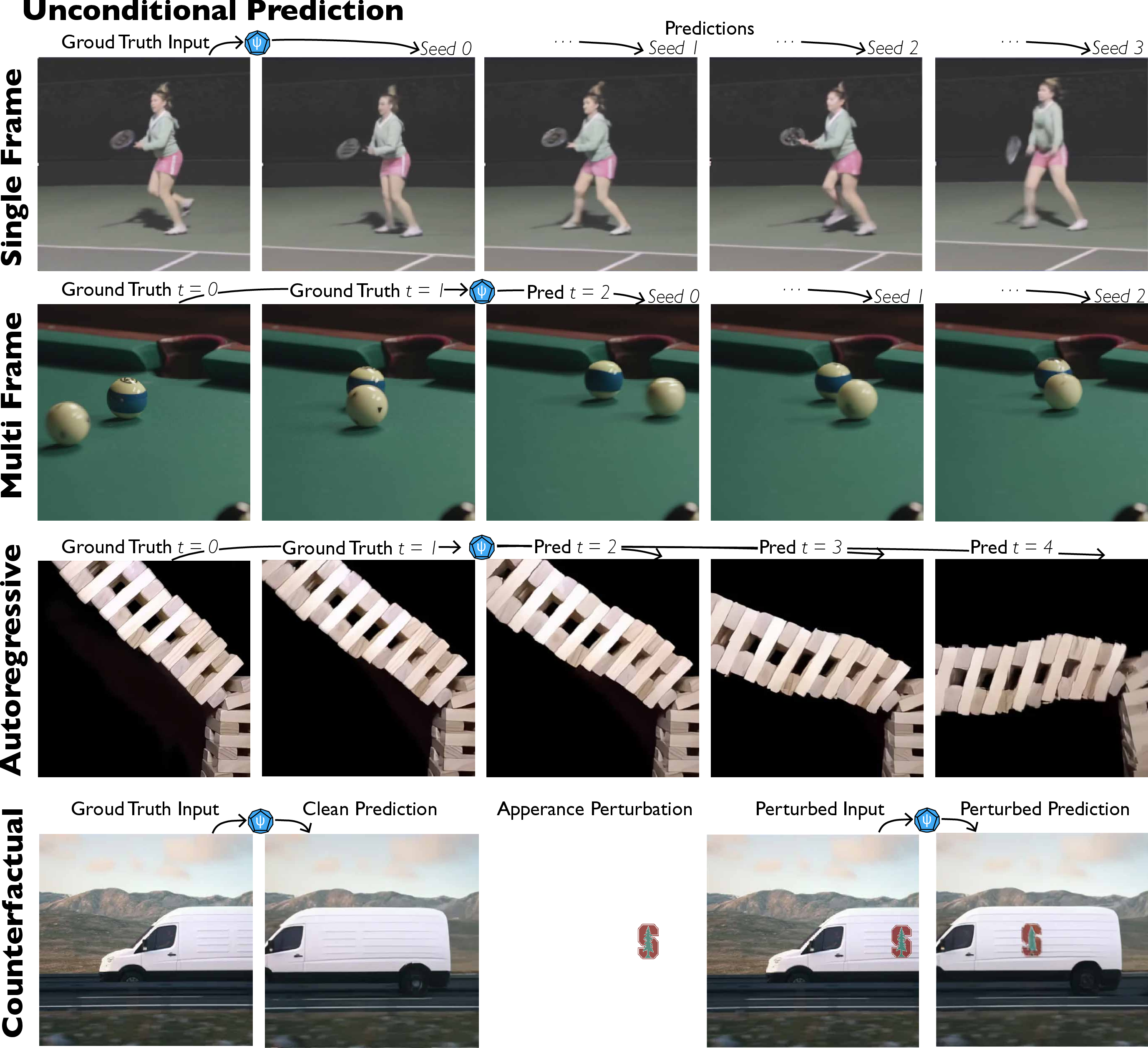

- Unconditional prediction: Sampling plausible futures from a single frame, capturing multimodal motion priors.

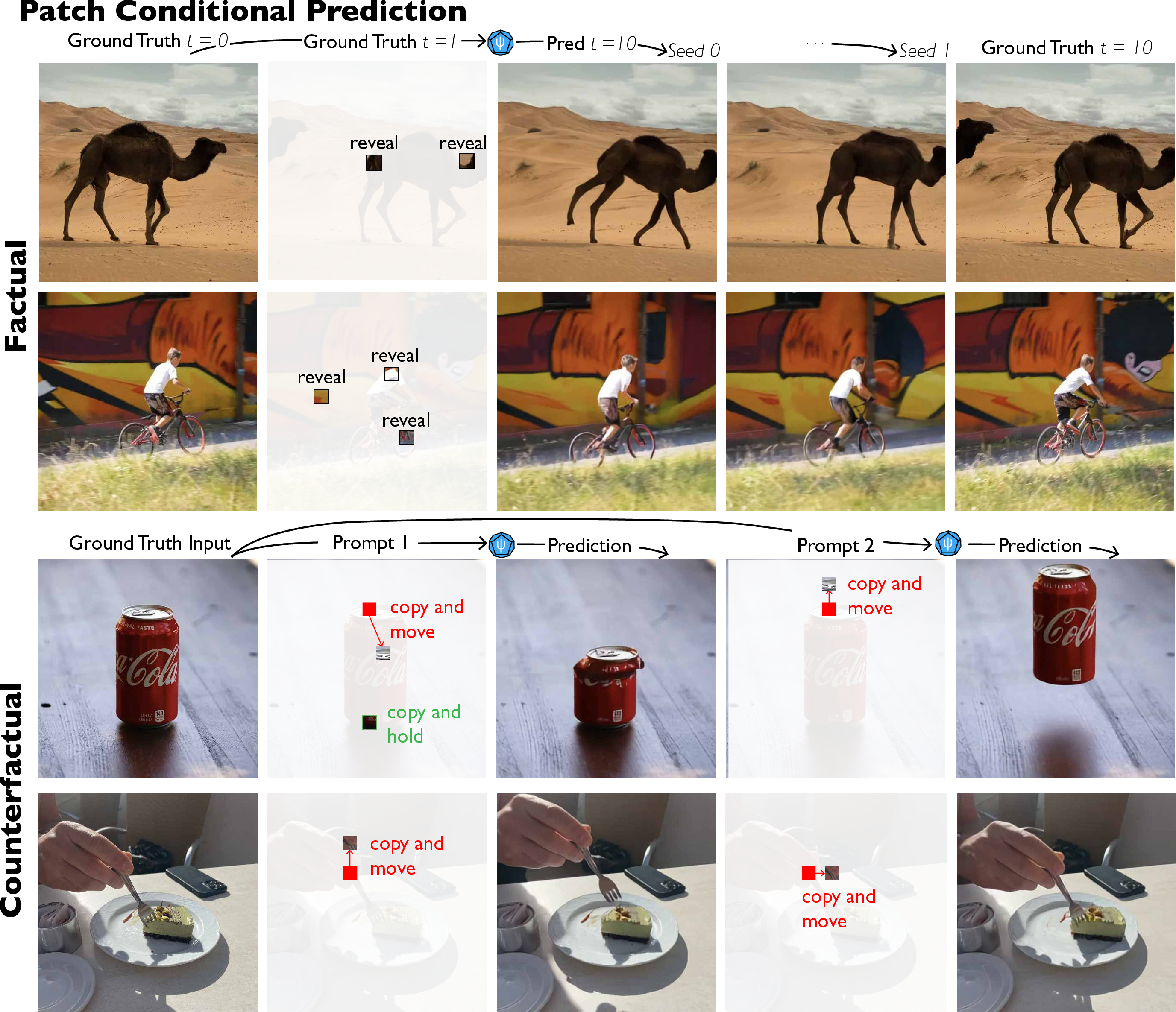

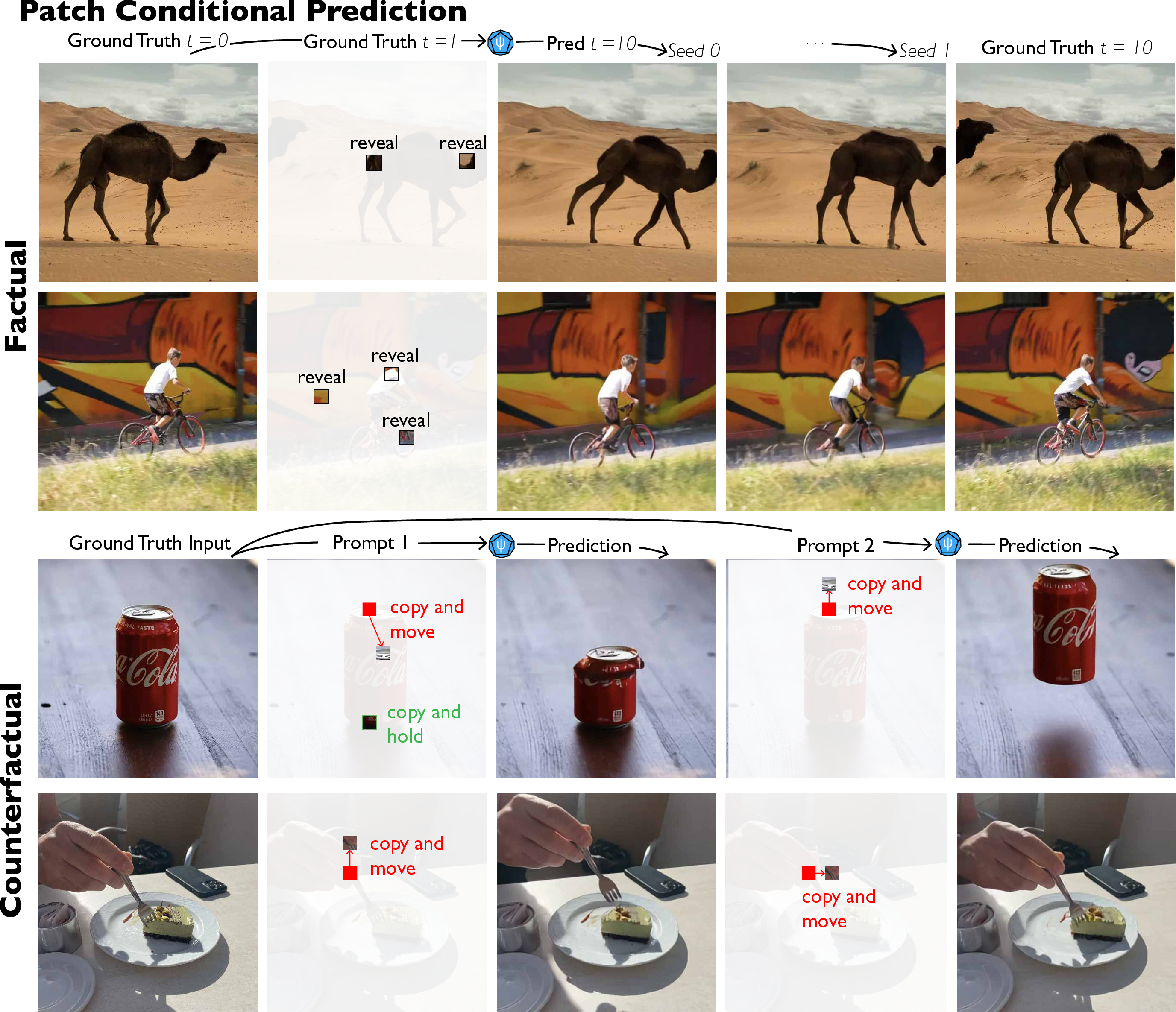

- Patch-conditional prediction: Conditioning on sparse patches from the target frame, collapsing uncertainty and enabling counterfactual manipulations.

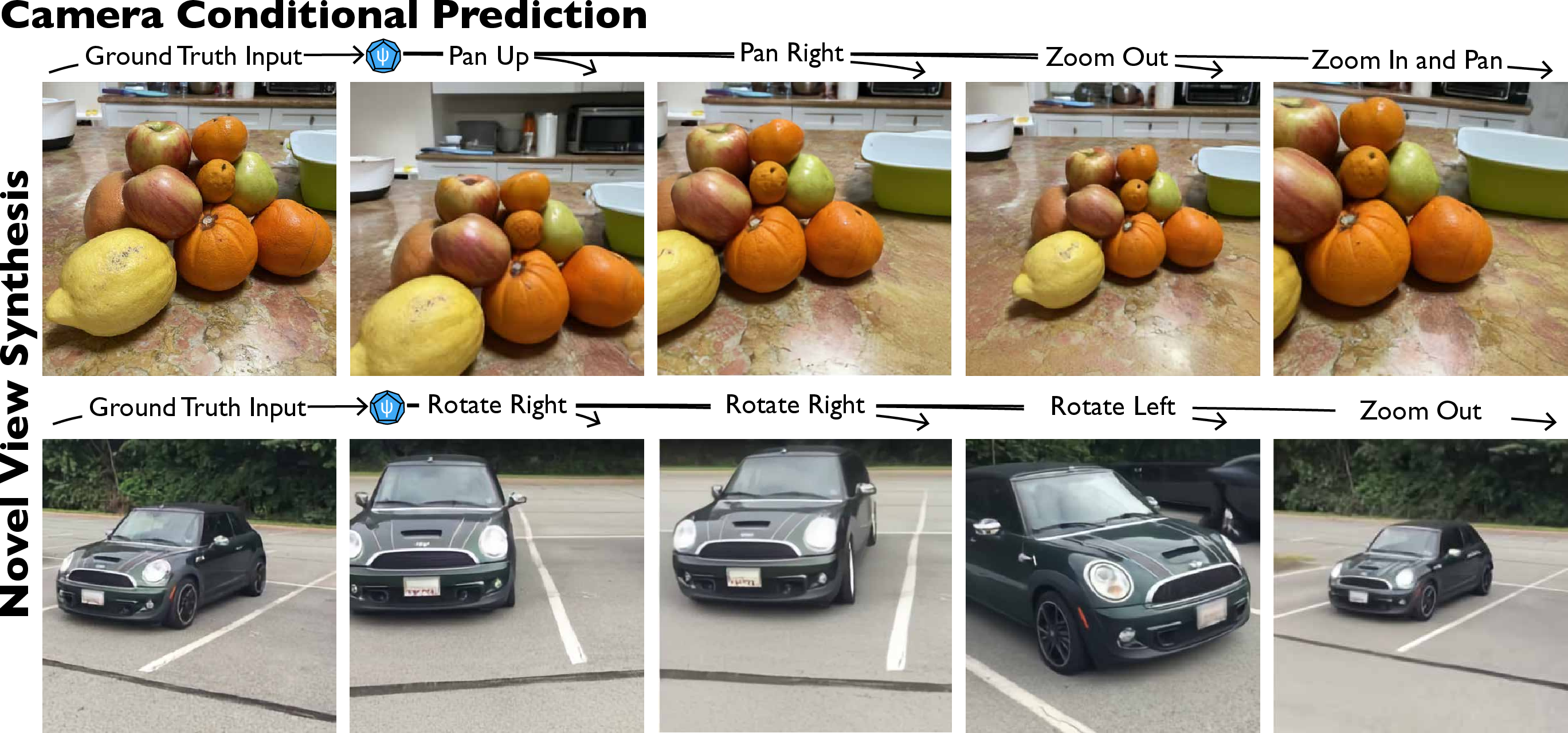

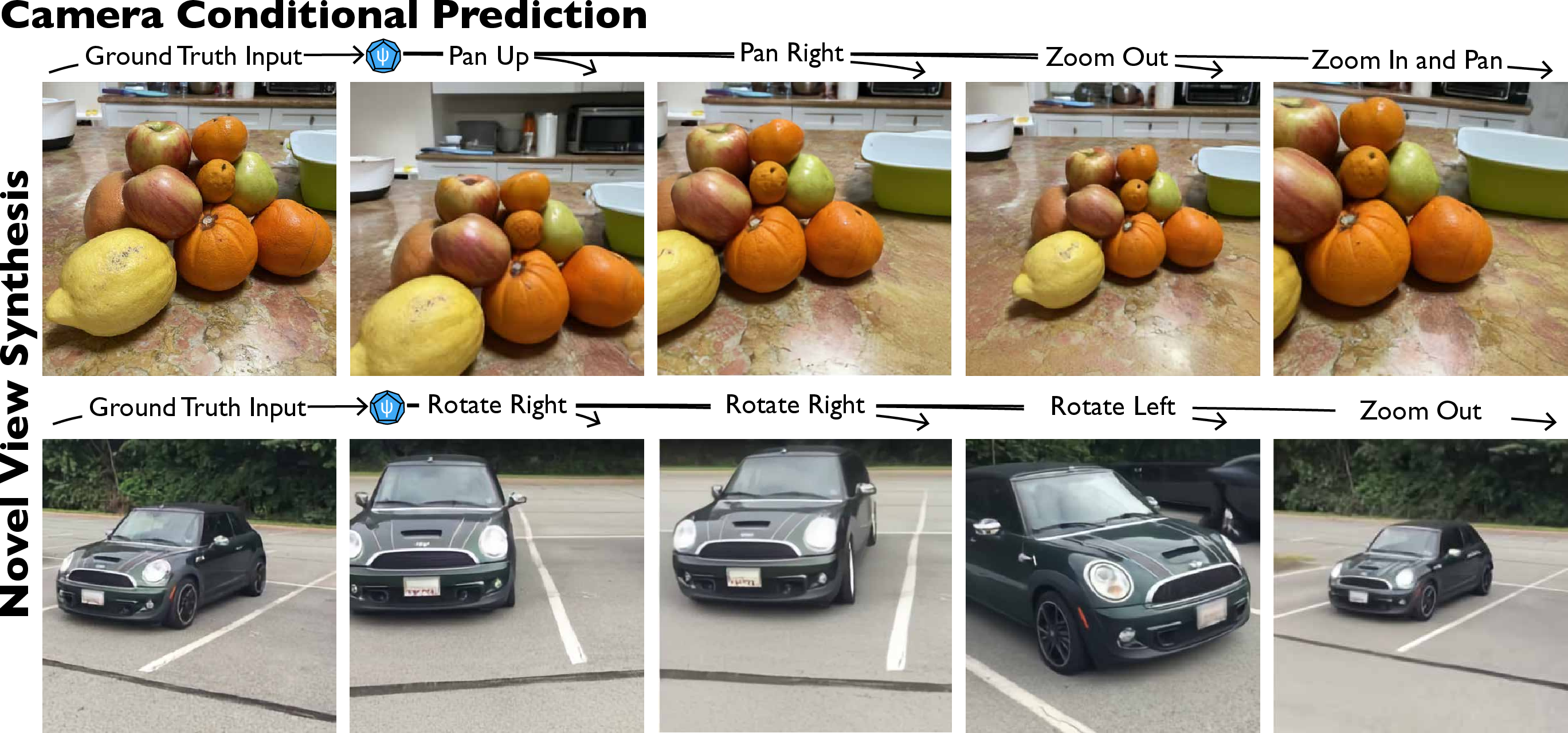

- Camera-conditional prediction: Incorporating camera pose tokens for novel view synthesis, hallucinating occluded regions while maintaining scene consistency.

Figure 2: Unconditional promptable prediction: diverse plausible futures generated from a single frame.

Figure 3: Patch-conditional prediction: sparse conditioning patches constrain completions and enable counterfactual edits.

Figure 4: Camera-conditional prediction: novel view synthesis with camera transformation tokens.
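One way to see the three pathways is as three prompt layouts for the same model. The sketch below assumes a hypothetical token scheme: string-tagged tuples stand in for token ids, the source frame is given as a flat list of codes (its own pointers are omitted for brevity), and each camera-pose component is uniformly binned into `CAM_BINS` discrete tokens. The binning scheme and all names are assumptions for illustration; the paper's camera tokenization may differ.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Token = Tuple[str, int]

CAM_BINS = 21     # hypothetical: bins per camera-pose component
CAM_LIMIT = 1.0   # hypothetical: pose components clipped to [-1, 1]

def quantize(x: float, bins: int = CAM_BINS, limit: float = CAM_LIMIT) -> int:
    """Uniformly bin a continuous pose component into an integer token id."""
    x = max(-limit, min(limit, x))
    return round((x + limit) / (2 * limit) * (bins - 1))

def build_prompt(frame0: Sequence[int], query_ptr: int,
                 revealed: Optional[Dict[int, int]] = None,
                 camera: Optional[Sequence[float]] = None) -> List[Token]:
    """Assemble a prompt; the model would continue it with content tokens for query_ptr."""
    prompt: List[Token] = [("frame0", t) for t in frame0]   # the observed source frame
    if camera is not None:                                   # camera-conditional
        prompt += [("cam", quantize(c)) for c in camera]
    for ptr, code in sorted((revealed or {}).items()):       # patch-conditional
        prompt += [("ptr", ptr), ("patch", code)]
    prompt.append(("ptr", query_ptr))                        # where to predict next
    return prompt

if __name__ == "__main__":
    print(build_prompt([4, 8], query_ptr=5))                     # unconditional
    print(build_prompt([4, 8], query_ptr=5, revealed={2: 17}))   # patch-conditional
    print(build_prompt([4, 8], query_ptr=5, camera=[0.0, 0.5]))  # camera-conditional
```

The unconditional prompt leaves every target patch free, so repeated sampling yields diverse futures; each revealed patch or camera token removes some of that freedom.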

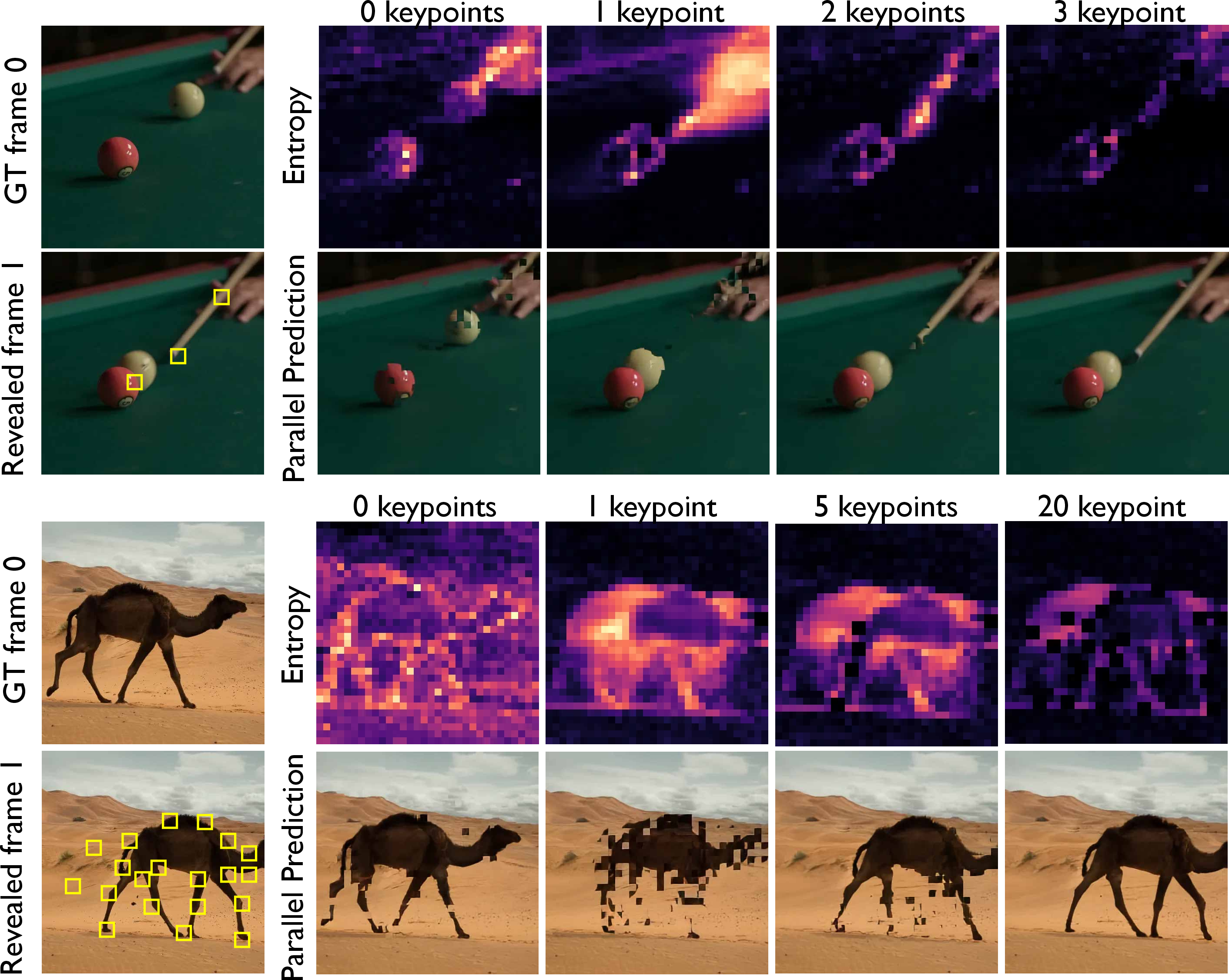

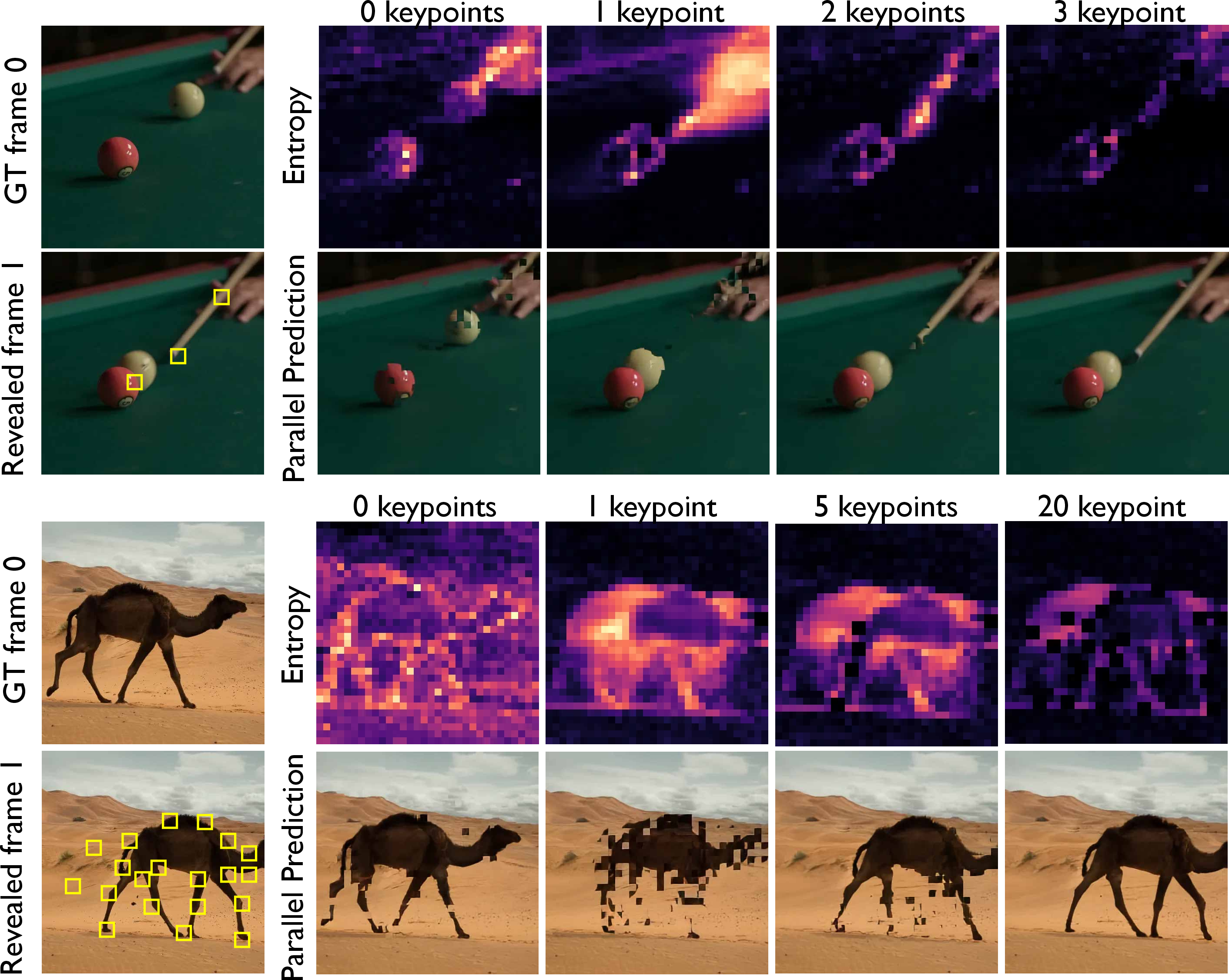

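Because Ψ outputs a full distribution for every patch, the entropy of each patch's predicted logits indicates where the model is uncertain. Conditioning on high-entropy patches reduces scene uncertainty progressively, which can guide active perception and adaptive decoding strategies.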

Figure 5: Progressive uncertainty reduction: sequential conditioning patches optimally resolve scene uncertainty.
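The entropy computation itself is simple. The sketch below turns per-patch logits into probabilities and ranks patches by Shannon entropy; a greedy policy would reveal the top-ranked patch, re-run the model, and repeat. The greedy "most uncertain first" rule is an assumption for illustration; the paper's exact selection criterion may differ.

```python
import math
from typing import Dict, List, Tuple

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def rank_by_uncertainty(patch_logits: Dict[int, List[float]]) -> List[Tuple[int, float]]:
    """Return (patch, entropy) pairs, most uncertain first."""
    scores = {p: entropy(softmax(z)) for p, z in patch_logits.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

if __name__ == "__main__":
    logits = {
        0: [6.0, 0.0, 0.0],   # confident
        1: [1.0, 1.0, 1.0],   # uniform: maximally uncertain
        2: [2.0, 1.0, 0.0],   # in between
    }
    ranking = rank_by_uncertainty(logits)
    print(ranking[0])          # patch 1 ranks first; its entropy is ln(3)
```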

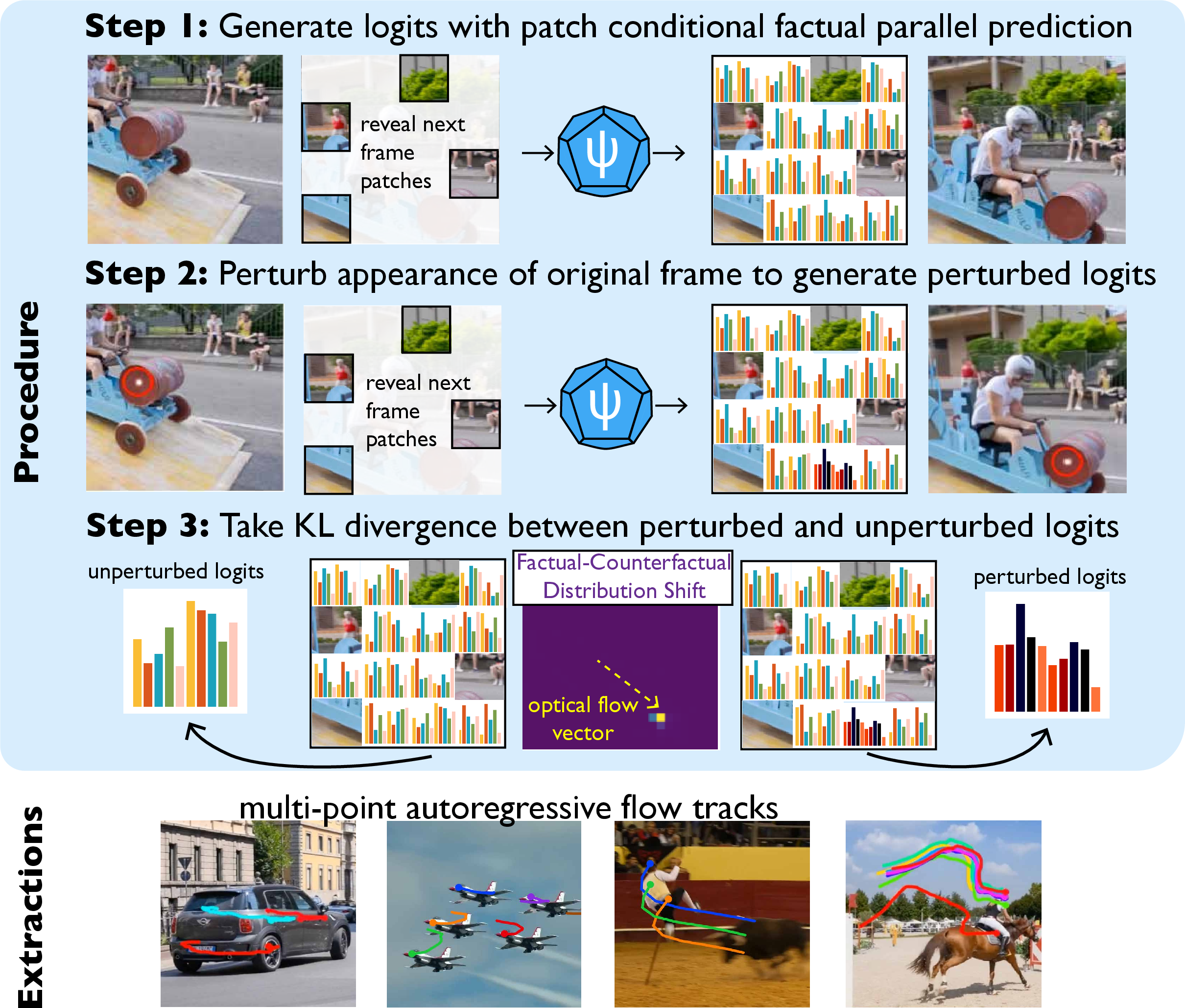

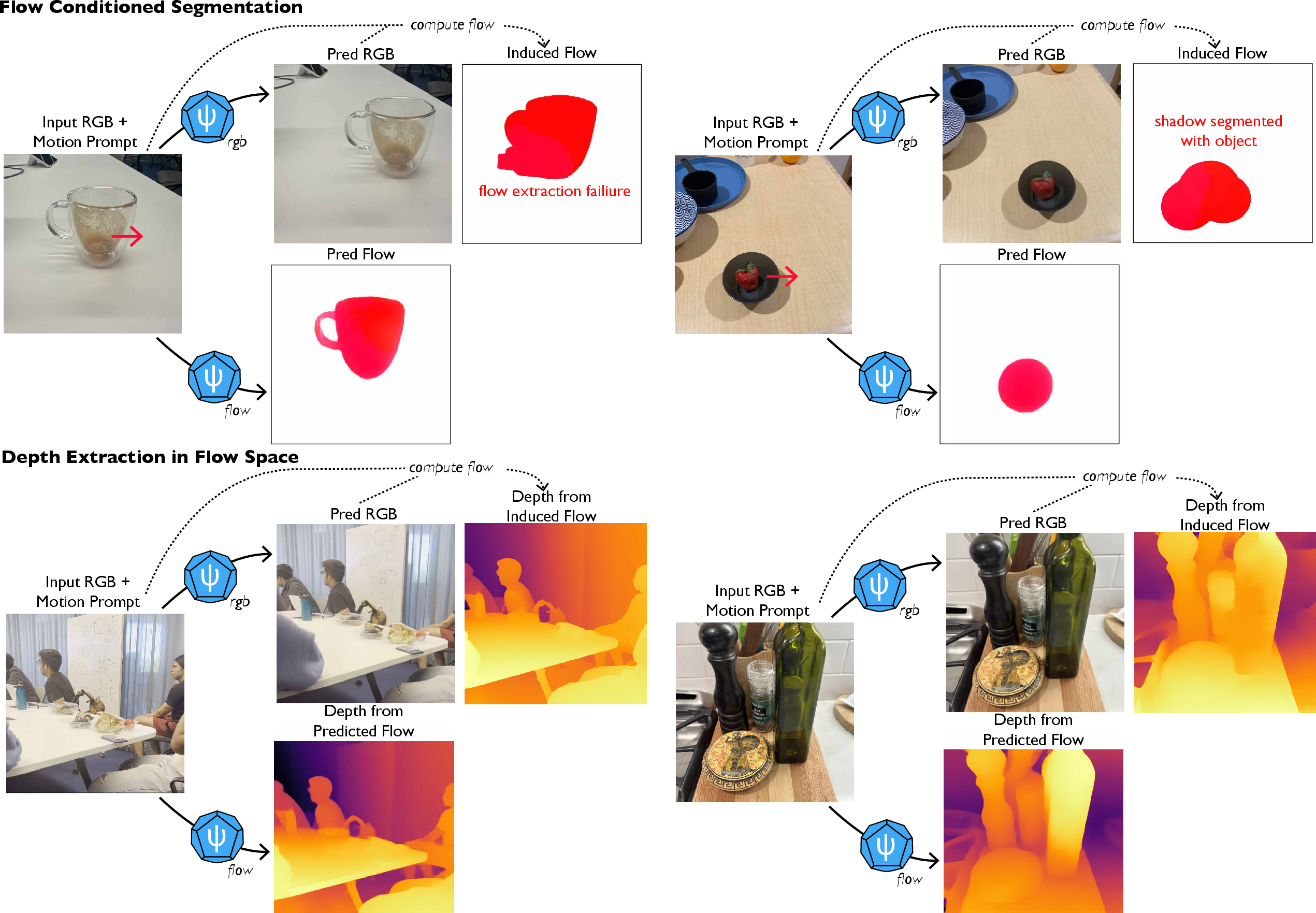

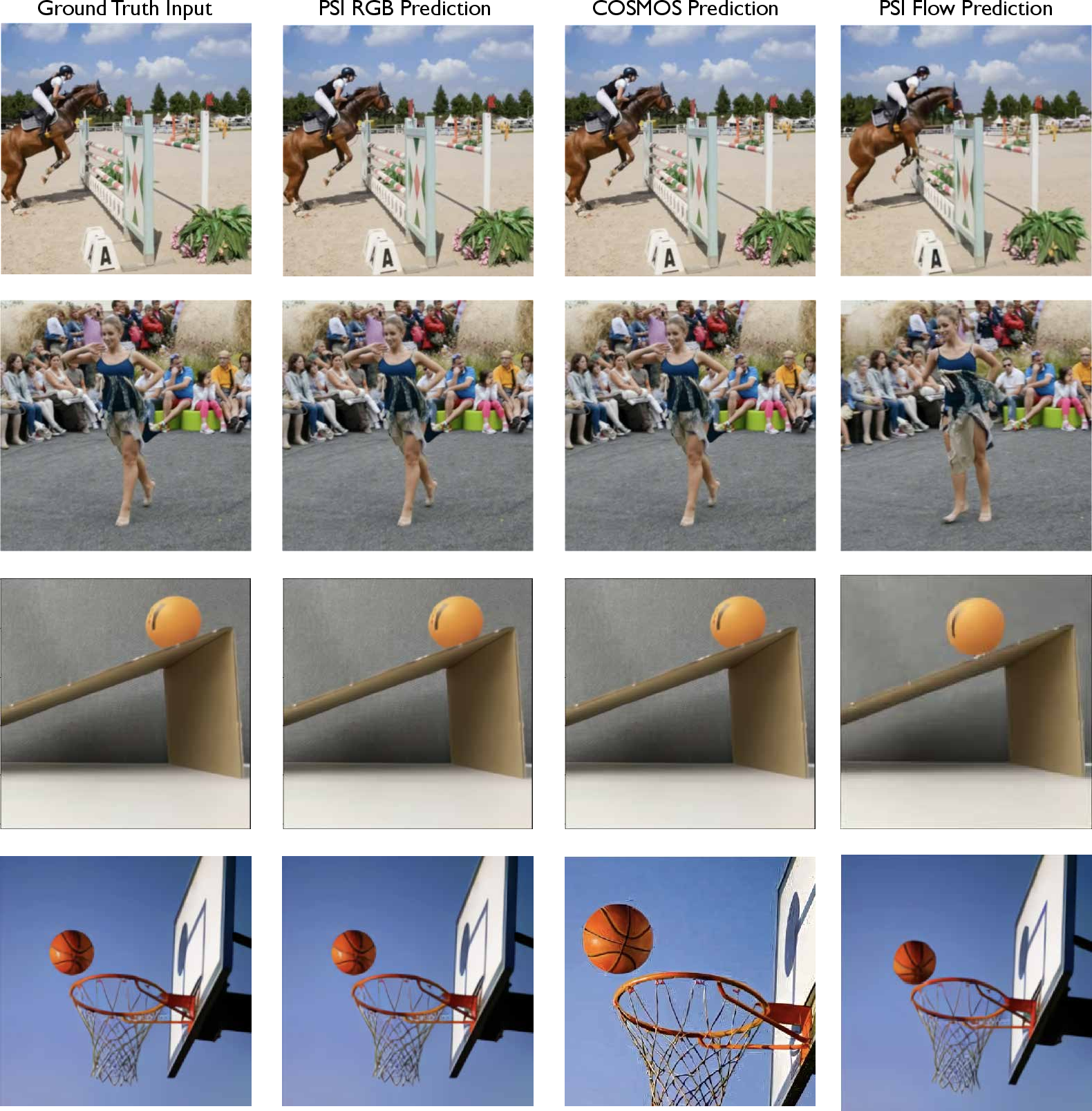

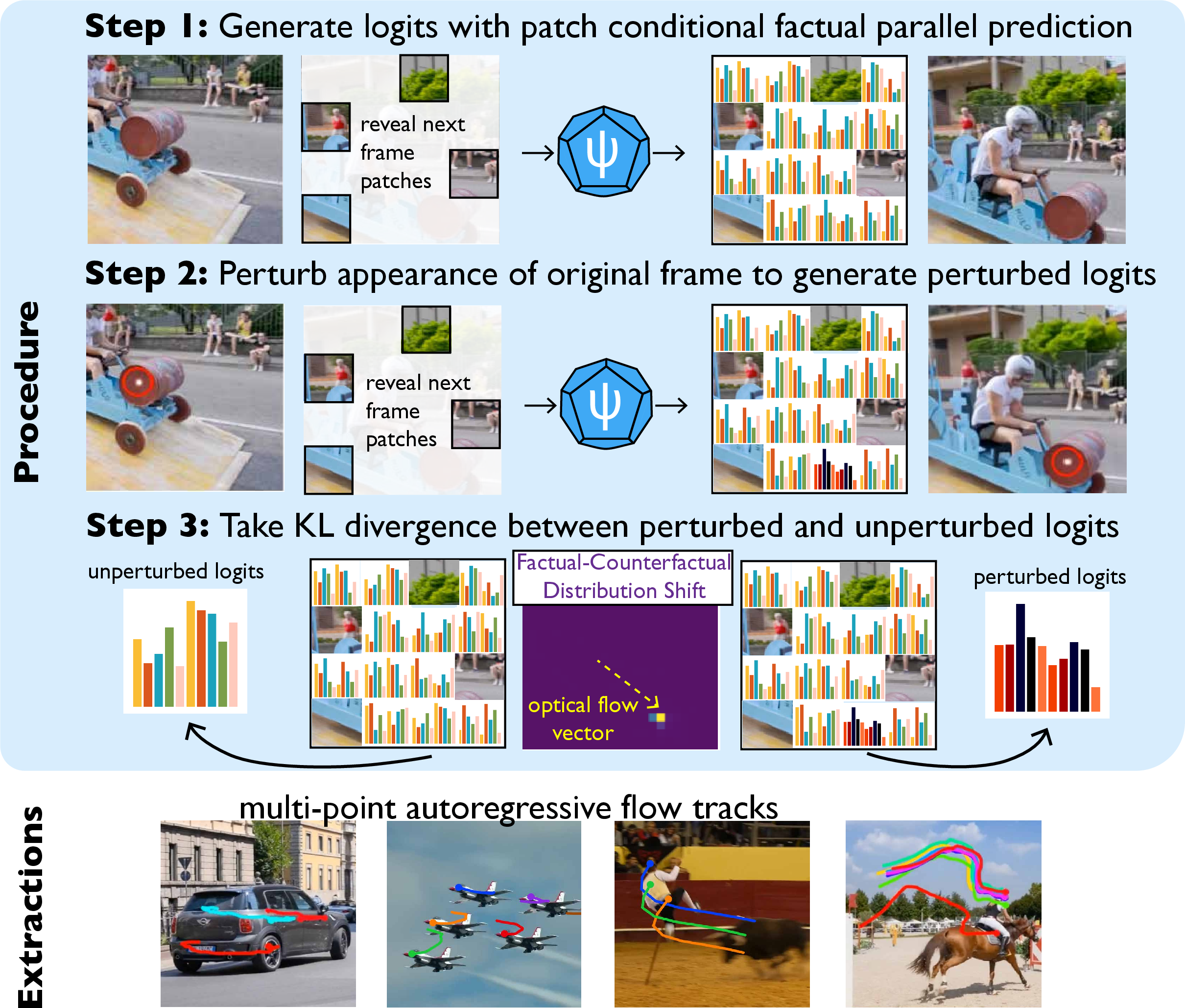

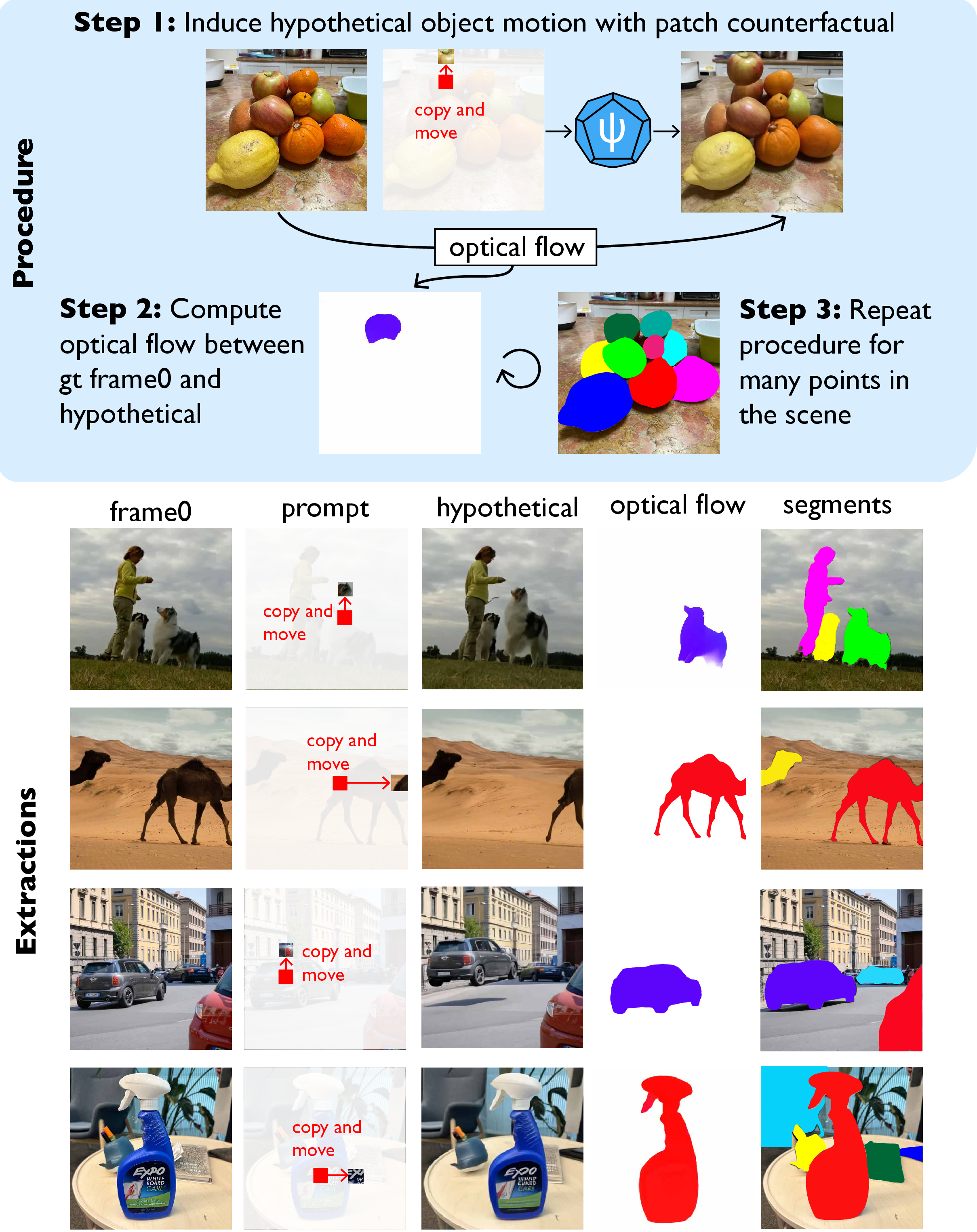

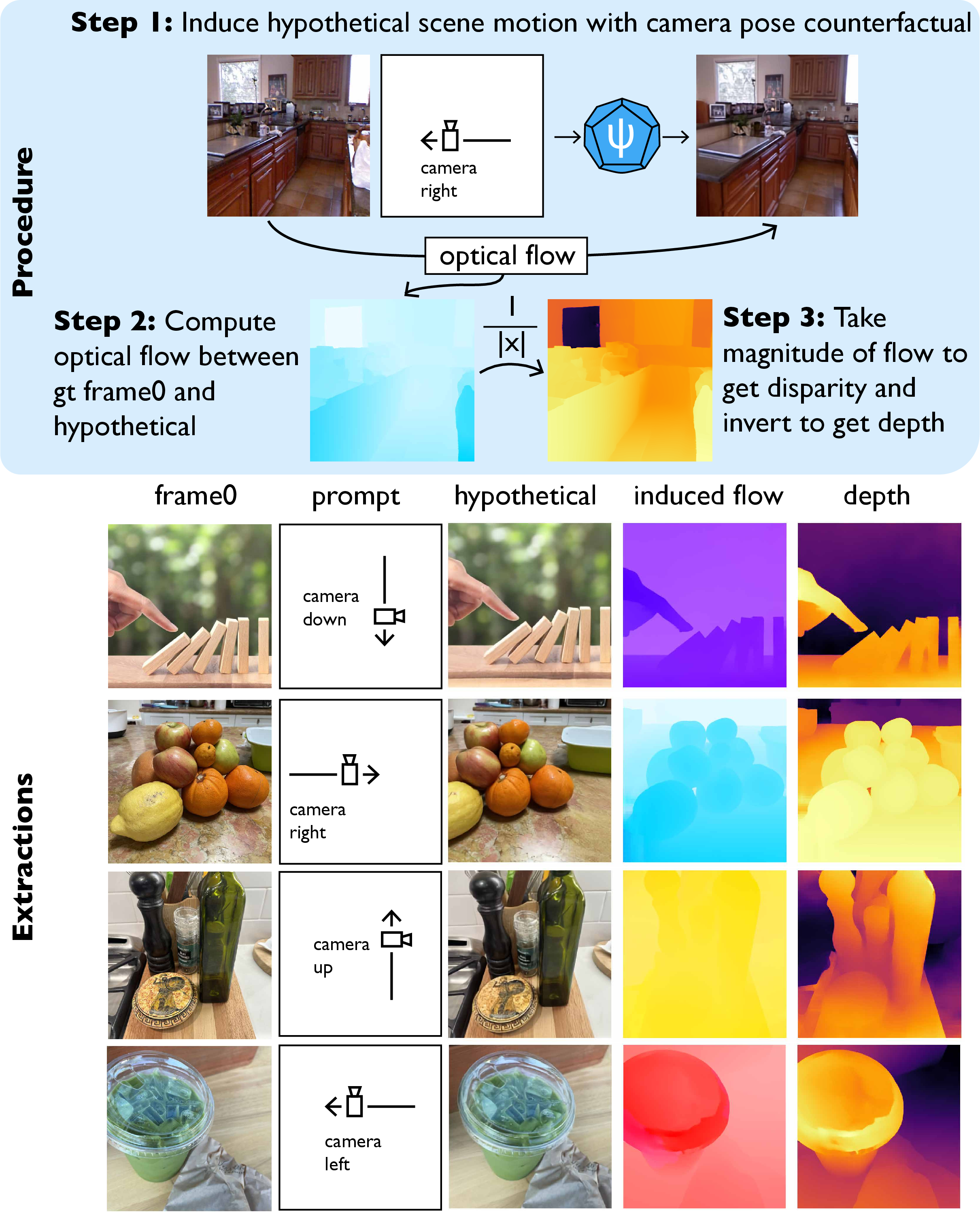

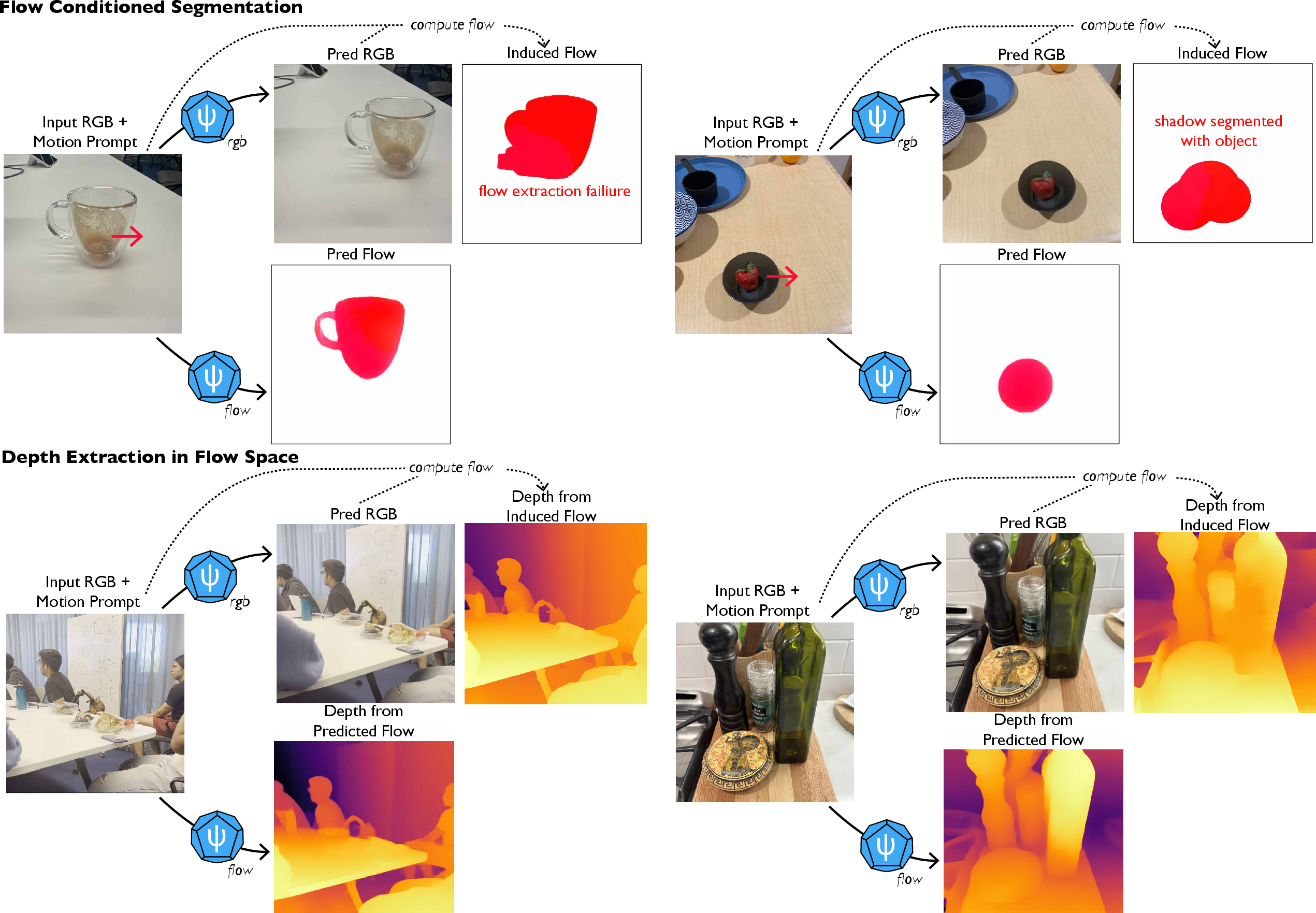

Zero-Shot Structure Extraction via Causal Inference

PSI leverages the distributional nature of Ψ to extract intermediate structures (optical flow, object segments, and depth) via zero-shot causal inference. These are obtained by comparing predictions under factual and counterfactual interventions, operationalizing the do() operator in the learned PGM.

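- Optical flow: KL-tracing injects a small appearance perturbation at a point in the current frame and compares the predicted next frame with and without it; the location where the two predictions diverge most (in KL divergence) is the corresponding point, which yields the flow vector.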

Figure 6: Optical flow via KL tracing: perturbation and divergence analysis reveal motion correspondence.
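The divergence step of KL-tracing can be sketched as follows. The sketch assumes the model's predicted next-frame distributions are already available for a clean and a perturbed run, keyed by `(row, col)` location; running the model and injecting the perturbation are outside its scope. Using KL(perturbed ‖ clean) rather than the reverse direction is our choice.

```python
import math
from typing import Dict, List, Tuple

Loc = Tuple[int, int]   # (row, col)

def kl_divergence(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL(p || q) in nats; eps guards against division by zero when q has zeros."""
    return sum(pi * math.log(pi / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def kl_trace(clean: Dict[Loc, List[float]], perturbed: Dict[Loc, List[float]],
             source: Loc) -> Tuple[Tuple[int, int], Loc]:
    """Find where a perturbation injected at `source` (frame 1) reappears in frame 2.

    clean / perturbed map each frame-2 location to the predicted distribution
    without / with the perturbation. Returns ((d_row, d_col) flow, matched location).
    """
    divergence = {loc: kl_divergence(perturbed[loc], clean[loc]) for loc in clean}
    target = max(divergence, key=divergence.get)
    return (target[0] - source[0], target[1] - source[1]), target

if __name__ == "__main__":
    # Toy 3x3 grid: the perturbation only changes the prediction at (2, 1).
    clean = {(r, c): [0.5, 0.5] for r in range(3) for c in range(3)}
    perturbed = dict(clean)
    perturbed[(2, 1)] = [0.9, 0.1]
    print(kl_trace(clean, perturbed, source=(1, 1)))  # ((1, 0), (2, 1))
```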

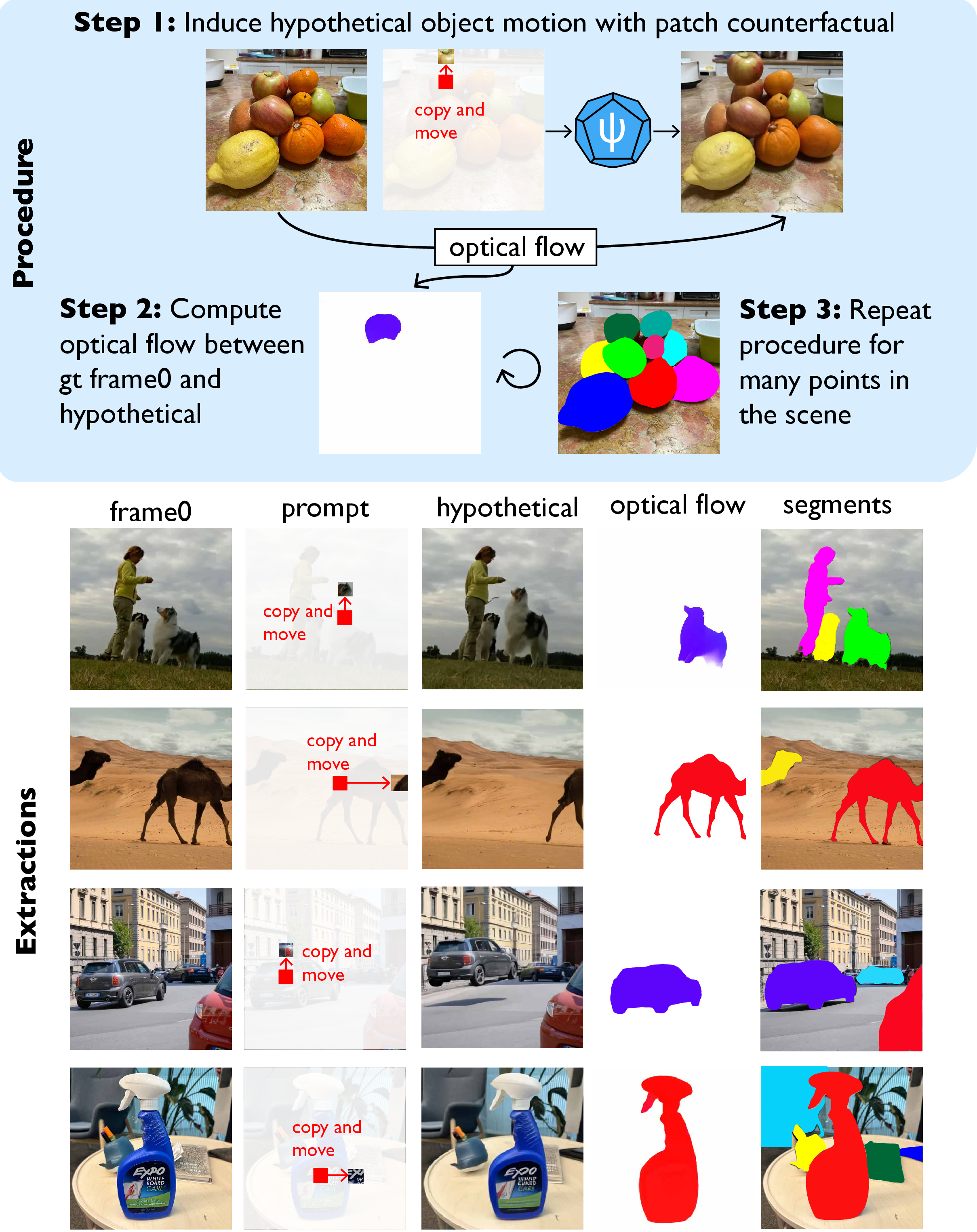

- Object segments: A hypothetical motion prompted at a single point moves the whole object coherently, so the region that moves in the resulting flow delineates the object.

Figure 7: Object segments from motion hypotheticals: counterfactual prompts isolate object membership.
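Turning a motion hypothetical into a segment can be sketched as a flow comparison. Assuming flow fields for the factual prediction and for the "poked" counterfactual are available as `flow[r][c] = (dy, dx)`, the sketch marks pixels whose flow changed by more than a threshold and keeps the 4-connected region containing the poke. The threshold and the connected-component step are our assumptions, not details from the paper.

```python
import math
from collections import deque
from typing import List, Set, Tuple

Flow = List[List[Tuple[float, float]]]   # flow[r][c] = (dy, dx)

def segment_from_flow(flow_cf: Flow, flow_f: Flow, poke: Tuple[int, int],
                      thresh: float = 0.5) -> Set[Tuple[int, int]]:
    """Pixels that move under the hypothetical poke (relative to the factual flow),
    restricted to the 4-connected region containing the poke location."""
    h, w = len(flow_cf), len(flow_cf[0])

    def moved(r: int, c: int) -> bool:
        dy = flow_cf[r][c][0] - flow_f[r][c][0]
        dx = flow_cf[r][c][1] - flow_f[r][c][1]
        return math.hypot(dy, dx) > thresh

    if not moved(*poke):
        return set()
    mask, queue = {poke}, deque([poke])
    while queue:                                   # breadth-first flood fill
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < h and 0 <= nc < w and (nr, nc) not in mask and moved(nr, nc):
                mask.add((nr, nc))
                queue.append((nr, nc))
    return mask
```

The connectivity restriction keeps unrelated motion elsewhere in the frame (for example, a second object the model also chose to move) out of the segment.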

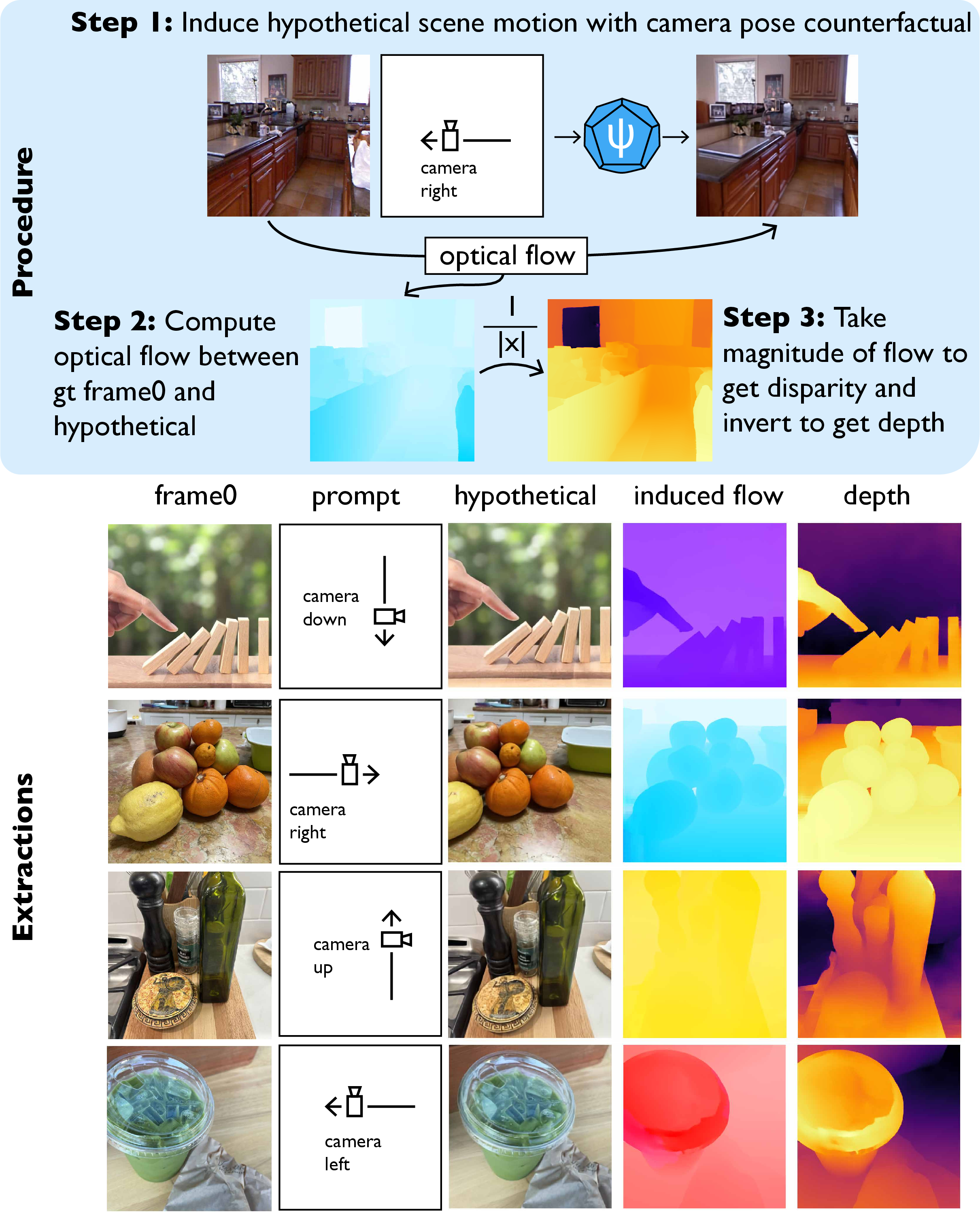

- Depth: A hypothetical camera translation produces a stereo pair; the flow magnitude between the two views serves as disparity, which is inversely proportional to depth.

Figure 8: Depth from viewpoint hypotheticals: camera translation prompts yield parallax-based depth maps.
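The disparity-to-depth step follows the standard rectified-stereo relation Z = f·B / d, where f is the focal length in pixels, B the camera baseline, and d the disparity in pixels. The sketch assumes f and B are known; if they are not, 1/d still gives depth up to an unknown scale. Pixels with near-zero disparity are returned as `None` because their depth is unbounded.

```python
from typing import List, Optional

def depth_from_disparity(disparity: List[List[float]], focal_px: float,
                         baseline: float, min_disp: float = 1e-6) -> List[List[Optional[float]]]:
    """Rectified-stereo depth Z = f * B / d for a grid of disparity magnitudes (pixels).

    Pixels whose disparity is at or below min_disp map to None (effectively infinite depth).
    """
    return [[focal_px * baseline / d if d > min_disp else None for d in row]
            for row in disparity]

if __name__ == "__main__":
    # f = 500 px, B = 0.1 m: 25 px of parallax -> 2 m, 50 px -> 1 m, 0 px -> unbounded.
    print(depth_from_disparity([[25.0, 50.0, 0.0]], focal_px=500.0, baseline=0.1))
```

Larger disparity means a closer point, which is why nearby objects shift most when the hypothetical camera moves.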

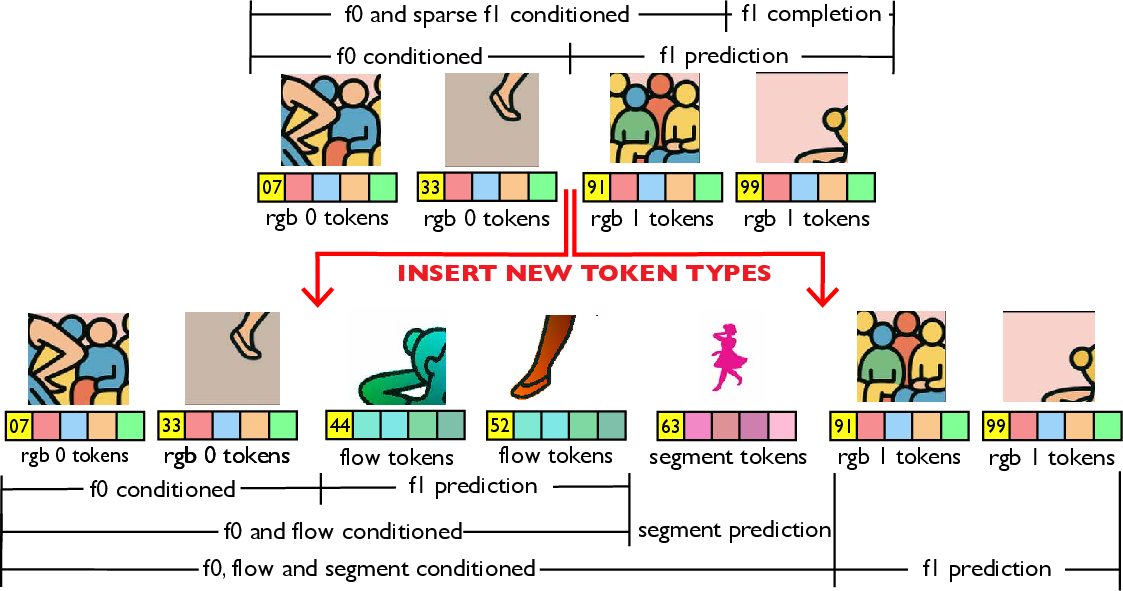

These extracted structures serve as new token types, enabling bidirectional conditioning and prediction.

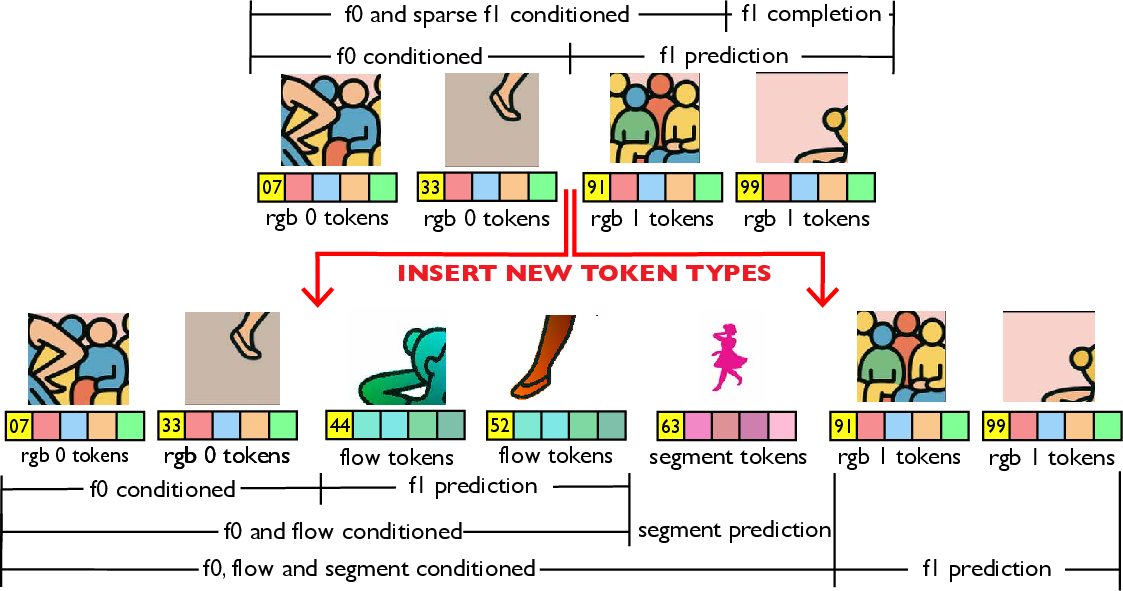

Structure Integration: Expanding Control Surfaces

PSI introduces a generic mechanism for integrating new token types:

- Tokenization: HLQ encodes intermediates (flow, depth, segments) with new pointer addresses.

- Sequence mixing: New tokens are interleaved in the autoregressive sequence, enabling all combinations of conditioning and prediction.

- Continual training: A Warmup-Stable-Decay (WSD) learning-rate schedule lets the new token types be integrated into an already-trained model without catastrophic forgetting.
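A WSD schedule has three phases: a linear warmup to a peak learning rate, a long constant plateau, and a final decay. A plausible reason it suits continual training, which we assume rather than take from the paper, is that a checkpoint from the plateau can resume at the peak rate on the new mixed-token data and only decay at the end. The linear decay below is one common choice; cosine and other decay shapes are also used.

```python
def wsd_lr(step: int, peak_lr: float, warmup: int, stable: int, decay: int,
           final_lr: float = 0.0) -> float:
    """Warmup-Stable-Decay learning rate at a given step (warmup and decay must be > 0).

    Phase 1: linear warmup to peak_lr over `warmup` steps.
    Phase 2: constant peak_lr for `stable` steps.
    Phase 3: linear decay to final_lr over `decay` steps, then held at final_lr.
    """
    if step < warmup:
        return peak_lr * (step + 1) / warmup
    if step < warmup + stable:
        return peak_lr
    t = min(step - warmup - stable, decay) / decay   # decay progress in [0, 1]
    return peak_lr + (final_lr - peak_lr) * t

if __name__ == "__main__":
    for s in (0, 9, 50, 95, 100):
        print(s, wsd_lr(s, peak_lr=1.0, warmup=10, stable=80, decay=10))
```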

Figure 9: Mixing new tokens into sequences: flow, depth, and segments are interleaved for joint modeling.
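Giving each new modality its own pointer addresses is what allows flow, depth, and RGB tokens to share one sequence. The sketch below assumes a hypothetical layout in which every modality receives a disjoint block of `PATCHES_PER_FRAME` addresses, and content tokens are tagged with their modality (a real model would use separate vocabulary ranges). The block sizes and modality order are assumptions.

```python
from typing import List, Tuple

PATCHES_PER_FRAME = 16                          # hypothetical 4x4 patch grid
MODALITIES = ["rgb0", "rgb1", "flow", "depth"]  # hypothetical order of address blocks

def pointer(modality: str, patch: int) -> int:
    """Each modality owns a disjoint block of pointer addresses."""
    return MODALITIES.index(modality) * PATCHES_PER_FRAME + patch

def mixed_sequence(items: List[Tuple[str, int, List[int]]]) -> List[Tuple[str, int]]:
    """items: (modality, patch index, content codes) in serialization order."""
    seq: List[Tuple[str, int]] = []
    for modality, patch, codes in items:
        seq.append(("ptr", pointer(modality, patch)))
        seq.extend((modality, c) for c in codes)
    return seq

if __name__ == "__main__":
    # Condition on an RGB patch and a sparse flow patch, then query the next-frame RGB patch.
    prompt = mixed_sequence([("rgb0", 5, [11, 12]), ("flow", 5, [3])])
    prompt.append(("ptr", pointer("rgb1", 5)))
    print(prompt)
```

Swapping which items appear as context and which pointer is queried (for example, querying `pointer("flow", 5)` after RGB patches) turns the same mechanism from flow-conditioned generation into flow prediction.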

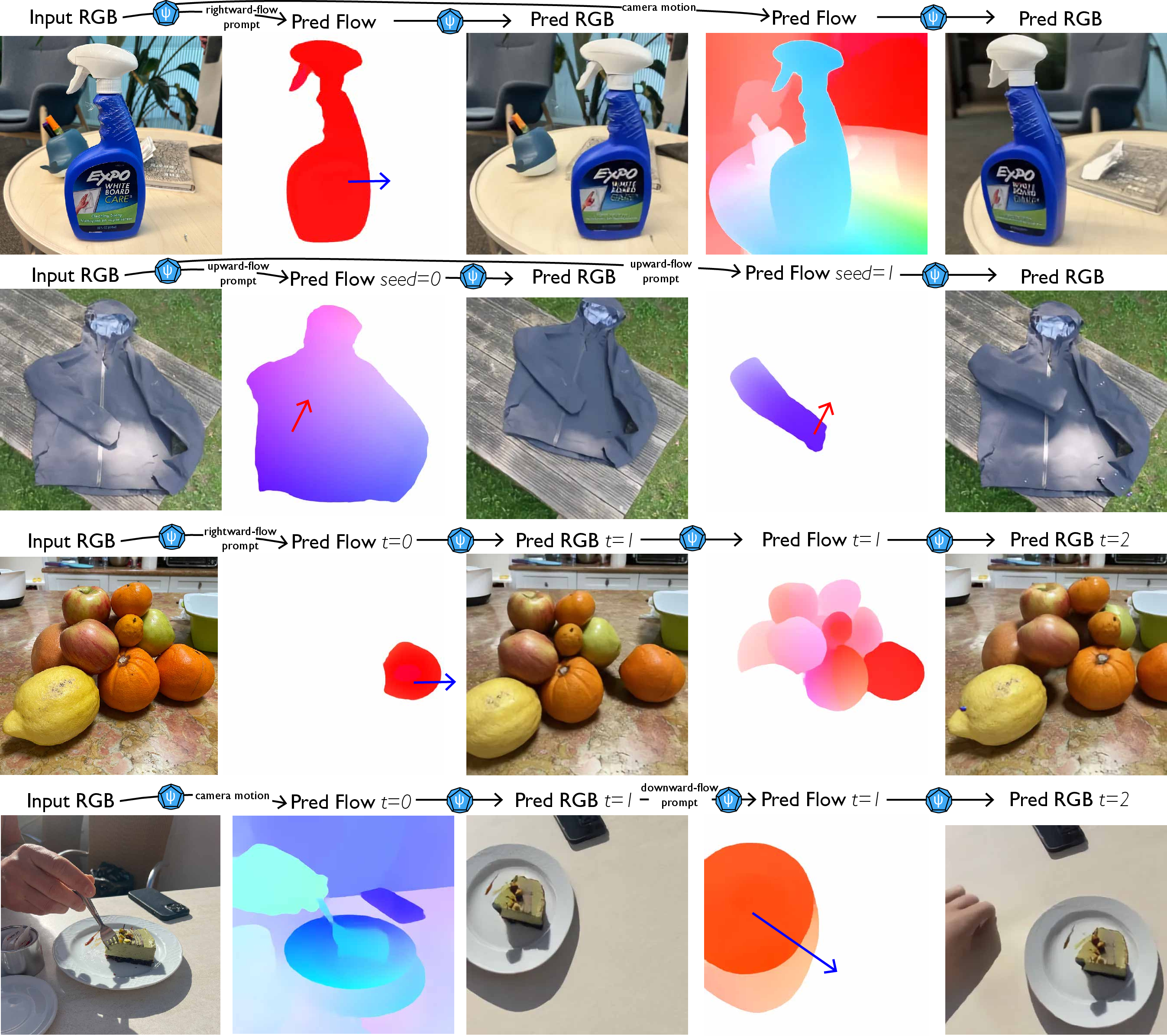

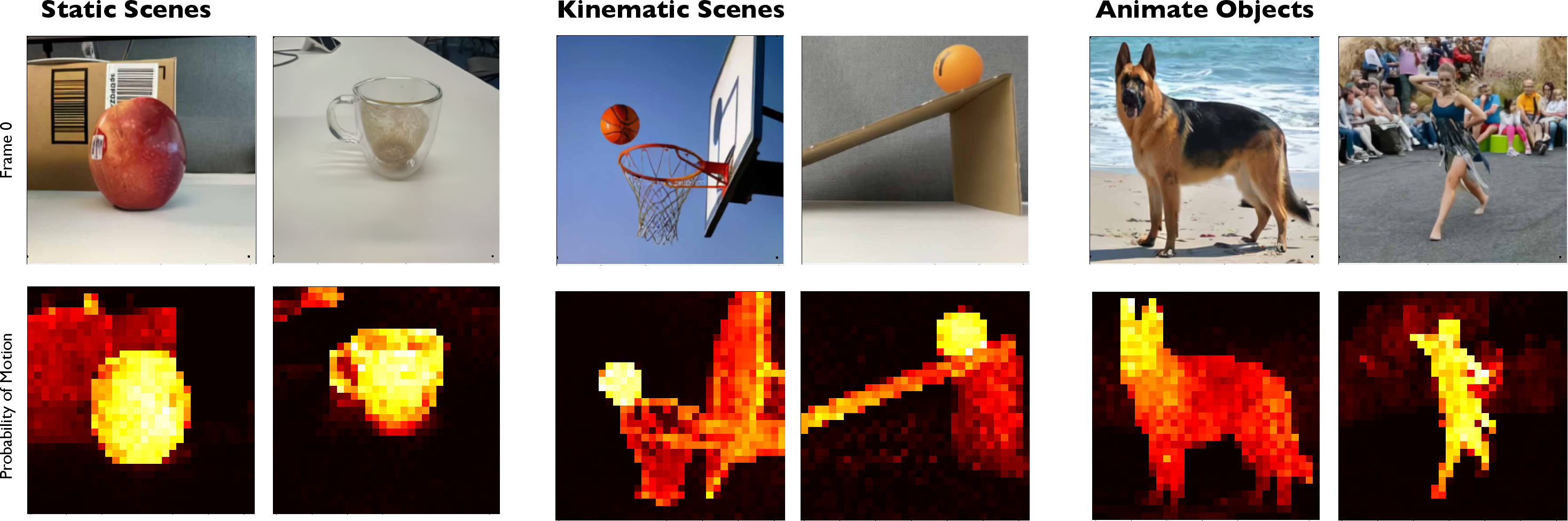

This integration enables precise control (e.g., specifying sparse flow vectors for object manipulation), improved extractions (direct prediction of intermediates yields cleaner segments and depth), and access to higher-order properties (e.g., probability of motion).

Figure 10: Improved generation control: flow tokens constrain motion, guiding plausible outputs.

Figure 11: Improved extractions: direct intermediate prediction yields cleaner object segments and sharper depth maps.

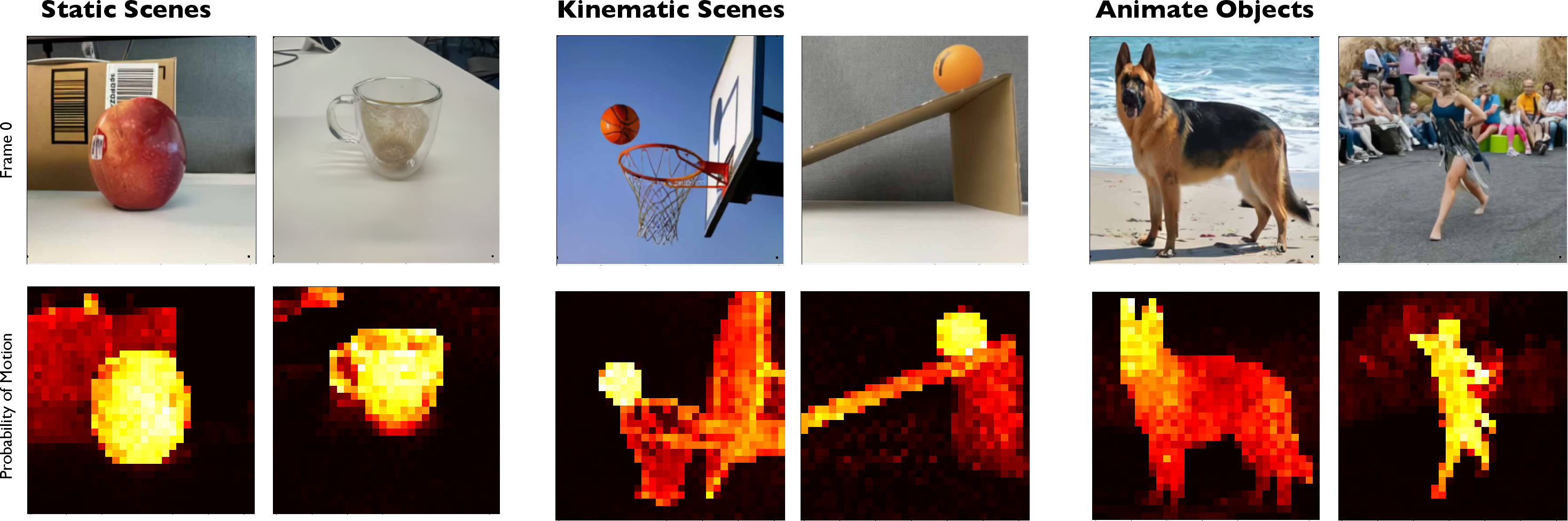

Bootstrapping Higher-Order Structure

Integrated intermediates enable extraction of higher-order statistics, such as probability of motion and expected displacement, by aggregating over the flow model’s predictions. This recursive bootstrapping supports increasingly sophisticated scene representations.

Figure 12: Probability of motion: heatmaps reveal objects poised for movement before any motion occurs.
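Both statistics named above reduce to simple aggregations once a distribution over flow is available. The sketch assumes, for illustration, a per-pixel categorical distribution over discretized flow bins given as `(dx, dy)` bin centres; with sampled flows instead, the same quantities are a fraction and a mean over samples. The `min_motion` threshold is a hypothetical parameter.

```python
import math
from typing import List, Tuple

FlowBin = Tuple[float, float]   # (dx, dy) centre of a discretized flow bin, in pixels

def motion_statistics(bins: List[FlowBin], probs: List[float],
                      min_motion: float = 0.5) -> Tuple[float, FlowBin]:
    """From one pixel's predicted distribution over flow bins, return
    P(|flow| > min_motion) and the expected displacement E[flow]."""
    total = sum(probs)
    probs = [p / total for p in probs]   # renormalize defensively
    p_move = sum(p for (dx, dy), p in zip(bins, probs) if math.hypot(dx, dy) > min_motion)
    ex = sum(p * dx for (dx, _), p in zip(bins, probs))
    ey = sum(p * dy for (_, dy), p in zip(bins, probs))
    return p_move, (ex, ey)

if __name__ == "__main__":
    # Equally likely to move 4 px left or right: certain to move, zero expected displacement.
    print(motion_statistics([(4.0, 0.0), (-4.0, 0.0)], [0.5, 0.5]))
```

The example shows why the two statistics are complementary: opposite motion hypotheses cancel in the expectation, while the probability of motion still reports that the pixel is almost certain to move.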

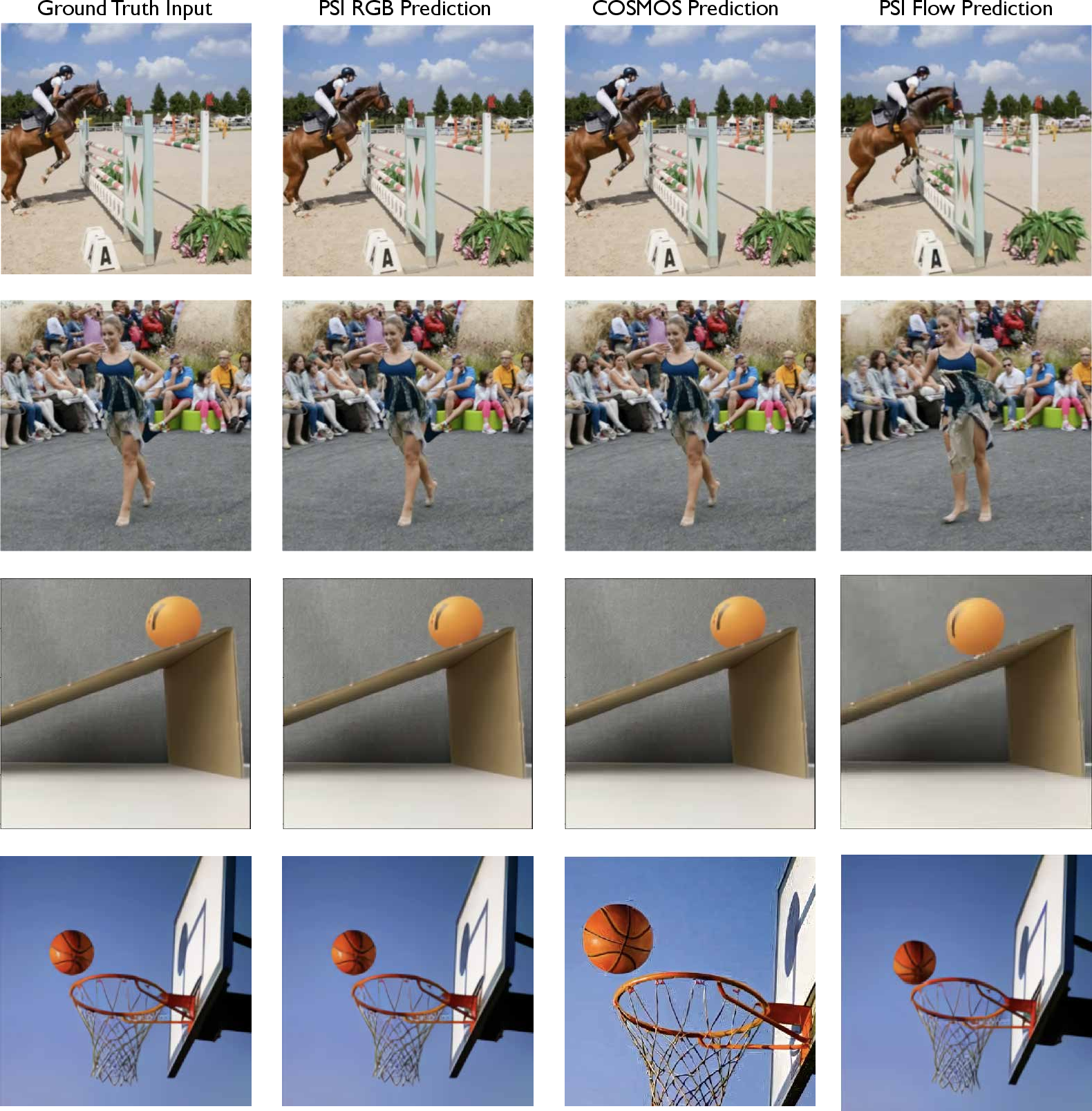

Upgrading Base Prediction and Avoiding Motion Collapse

Factoring prediction through intermediates such as flow prevents motion collapse, in which a model facing an ambiguous scene predicts a largely static future. With flow integrated, PSI commits to a specific motion hypothesis and generates the corresponding dynamic scene, capturing motion that RGB-only models fail to produce.

Figure 13: Unconditional prediction with flow intermediate: flow integration enables dynamic scene generation, avoiding motion collapse.
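A toy example conveys one intuition for why committing to a motion hypothesis helps. It is our illustration, not the paper's analysis: we assume a scene where staying still is the single most likely outcome (0.4) but some motion has total probability 0.6. Per-patch argmax decoding picks "static" everywhere, independent per-patch sampling mixes incompatible motions, and sampling one global flow hypothesis first yields coherent motion about 60% of the time.

```python
import random
from typing import List

HYPOTHESES = ["static", "left", "right"]
PRIOR = [0.4, 0.3, 0.3]   # hypothetical: no single motion beats staying put

def rgb_only_greedy(num_patches: int) -> List[str]:
    """Argmax each patch's marginal: every patch picks 'static', although motion has p = 0.6."""
    best = HYPOTHESES[max(range(len(PRIOR)), key=PRIOR.__getitem__)]
    return [best] * num_patches

def rgb_only_independent(num_patches: int, rng: random.Random) -> List[str]:
    """Sample each patch independently from its marginal: motions disagree across patches."""
    return rng.choices(HYPOTHESES, weights=PRIOR, k=num_patches)

def flow_first(num_patches: int, rng: random.Random) -> List[str]:
    """Sample one motion hypothesis (the flow intermediate), then render every patch with it."""
    motion = rng.choices(HYPOTHESES, weights=PRIOR, k=1)[0]
    return [motion] * num_patches

if __name__ == "__main__":
    rng = random.Random(0)
    print(rgb_only_greedy(5))
    print(rgb_only_independent(5, rng))
    print(flow_first(5, rng))
```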

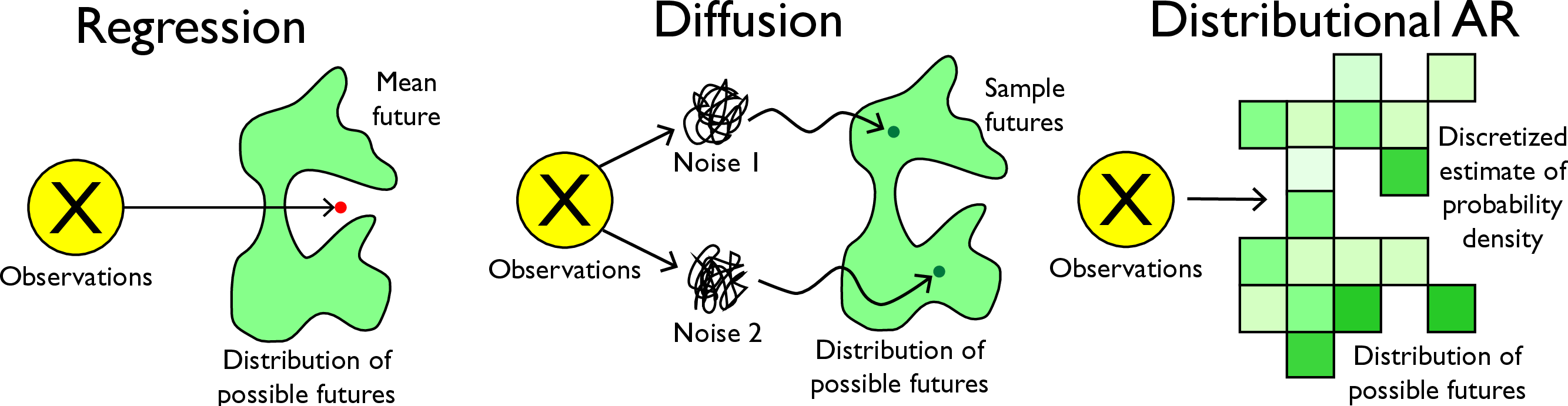

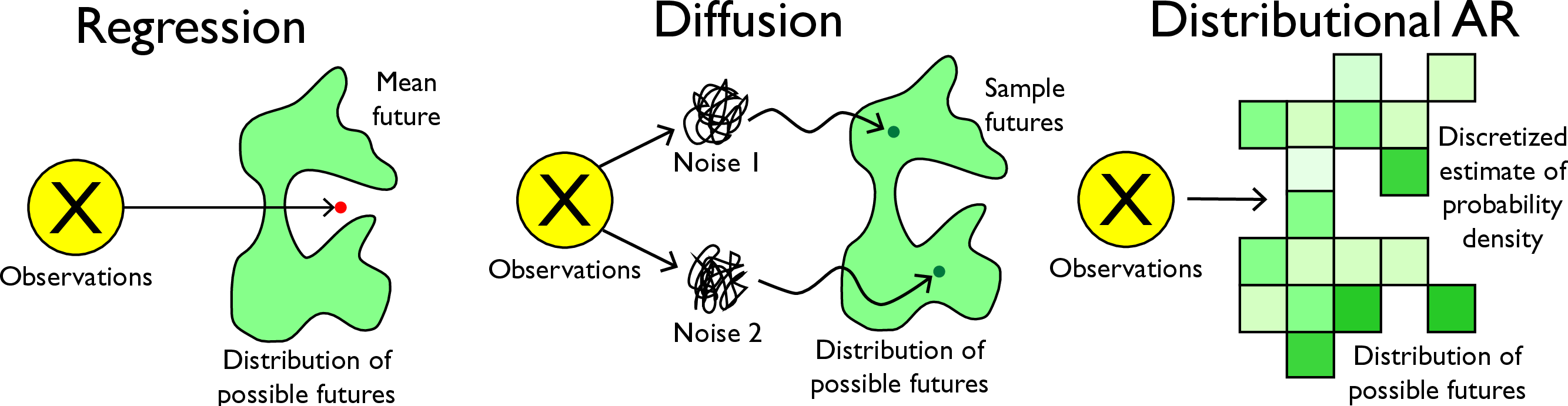

Comparative Analysis: Regression, Diffusion, and Distributional Prediction

PSI’s distributional autoregressive modeling enables explicit uncertainty estimation and statistical inference. Regression, by contrast, predicts the conditional mean, which can fall off the data manifold when outcomes are multimodal, and diffusion generates individual samples while modeling the density only implicitly.

Figure 14: Regression, diffusion, and distributional prediction: only distributional models output full probability distributions for explicit uncertainty.
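The off-manifold problem of regression shows up in a one-line example. Assume a hypothetical bimodal outcome where an object ends up either 1 unit left or 1 unit right with equal probability. The squared-error-optimal point prediction is the mean, 0, a position the object never occupies, whereas a distributional prediction keeps both modes and their probabilities. A diffusion model would draw samples near -1 or +1 but would not report these probabilities directly.

```python
from collections import Counter
from statistics import fmean
from typing import Dict, List

def regression_prediction(samples: List[float]) -> float:
    """The constant that minimizes mean squared error is the sample mean."""
    return fmean(samples)

def distributional_prediction(samples: List[float]) -> Dict[float, float]:
    """Empirical categorical distribution over the observed outcomes."""
    counts = Counter(samples)
    n = len(samples)
    return {v: c / n for v, c in counts.items()}

if __name__ == "__main__":
    outcomes = [-1.0, 1.0] * 50                  # hypothetical bimodal outcome
    print(regression_prediction(outcomes))      # 0.0
    print(distributional_prediction(outcomes))  # {-1.0: 0.5, 1.0: 0.5}
```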

Applications

PSI demonstrates utility in physical video editing, visual Jenga (object dependency analysis), and robotic motion planning (anticipating motion from static images). The unified prompting interface supports factual, hypothetical, and counterfactual reasoning across tasks.

Implications and Future Directions

PSI advances the state of world modeling by:

- Enabling scalable, controllable, and promptable models for non-linguistic domains.

- Providing a generic mechanism for continual self-improvement via structure integration.

- Supporting zero-shot extraction and manipulation of intermediate representations.

- Facilitating explicit uncertainty management and active perception.

Key open problems include automated discovery of useful intermediate structures, integration of global/object-centric representations, extension to domains beyond vision, and incorporation of semantic categories and long-range memory. The framework’s recursive bootstrapping principle suggests a path toward increasingly rich, hierarchical scene understanding.

Conclusion

Probabilistic Structure Integration offers a principled, scalable approach to world modeling, unifying generative, discriminative, and causal inference capabilities within a single autoregressive framework. By iteratively extracting and integrating intermediate structures, PSI enables precise control, flexible querying, and continual improvement, laying the groundwork for future advances in AI systems capable of deep physical reasoning and interactive manipulation.