- The paper introduces a scalable LLM-based scambaiting system that proactively collects actionable threat intelligence from over 2,600 scammer engagements.

- It employs a hybrid approach by combining automated LLM responses with human review, which accelerates information disclosure and enhances message quality.

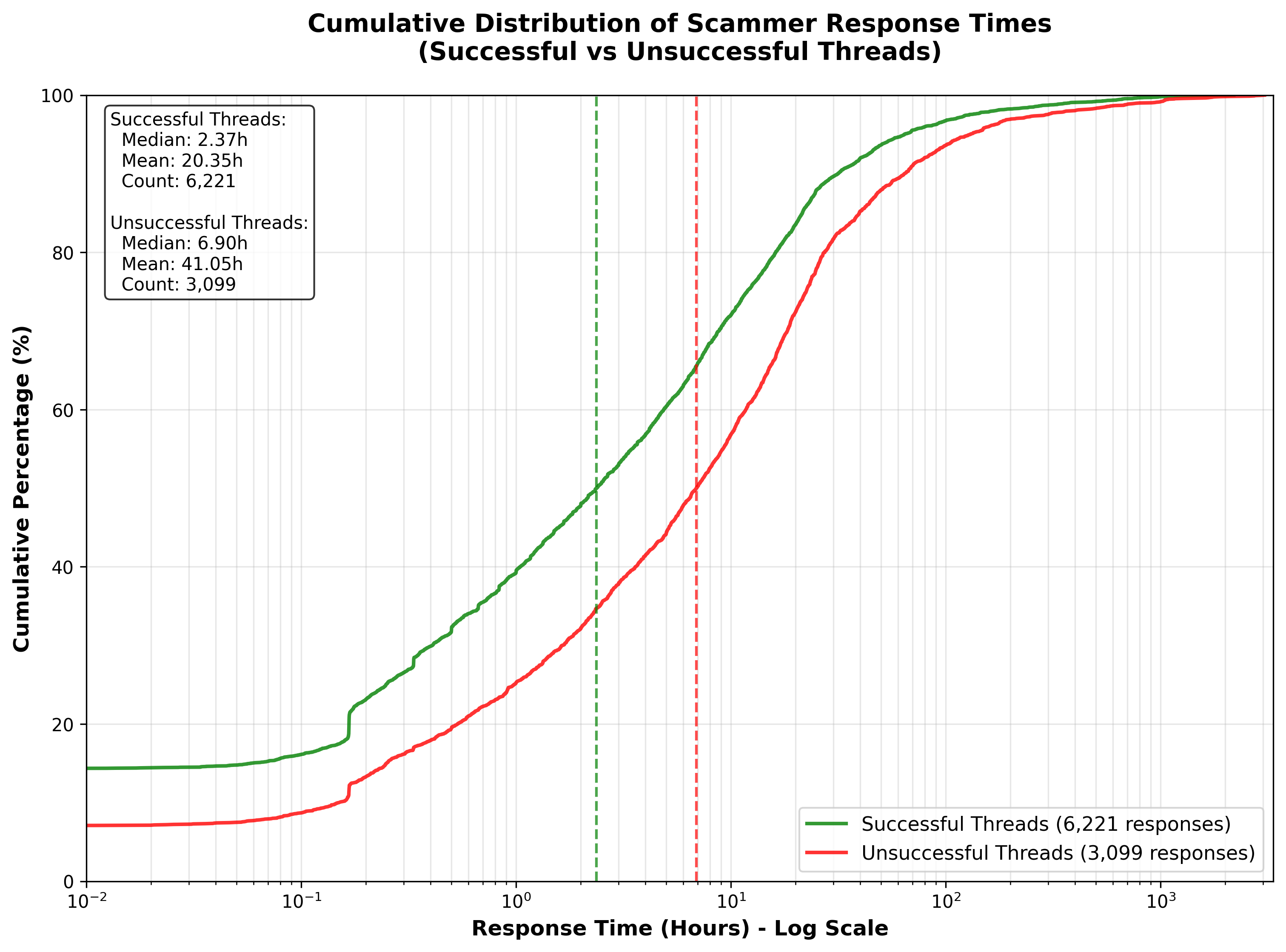

- Empirical results highlight that concise initial messaging and rapid scammer replies are critical for effective engagement and intelligence extraction.

Evaluation of an LLM-Based Scambaiting System for Proactive Scam Intelligence Collection

Introduction

The paper presents a rigorous, large-scale evaluation of an operational scambaiting system powered by LLMs, specifically targeting the extraction of actionable threat intelligence from real-world scammers. The system was deployed over five months, engaging in over 2,600 scammer interactions and generating a comprehensive dataset for analysis. The paper addresses the limitations of traditional scam defenses by focusing on proactive engagement to uncover the financial infrastructure—such as mule bank accounts and cryptocurrency wallets—that underpins scam operations. The research advances the field by introducing a suite of operational metrics and providing empirical insights into the effectiveness, efficiency, and design of automated scambaiting platforms.

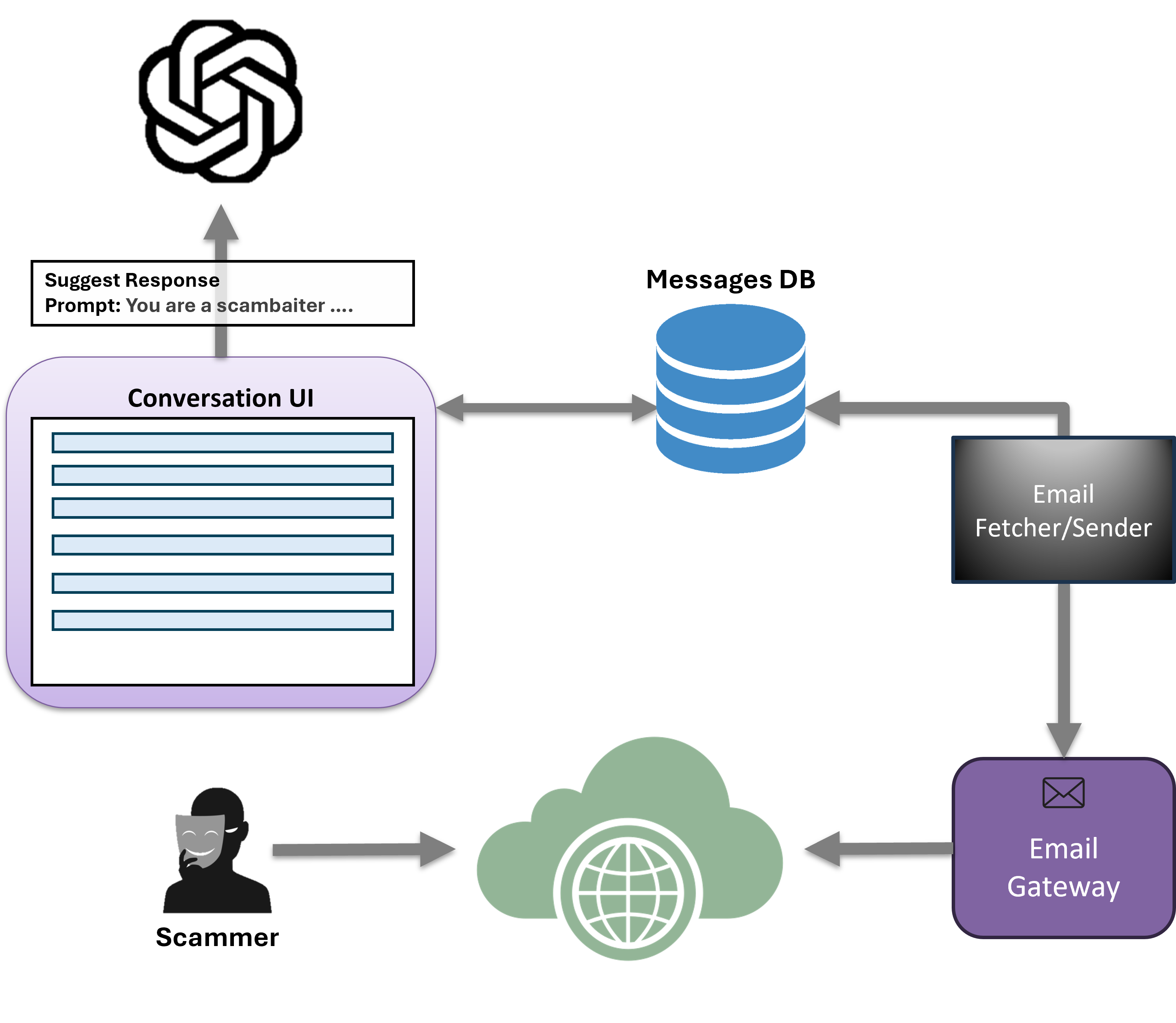

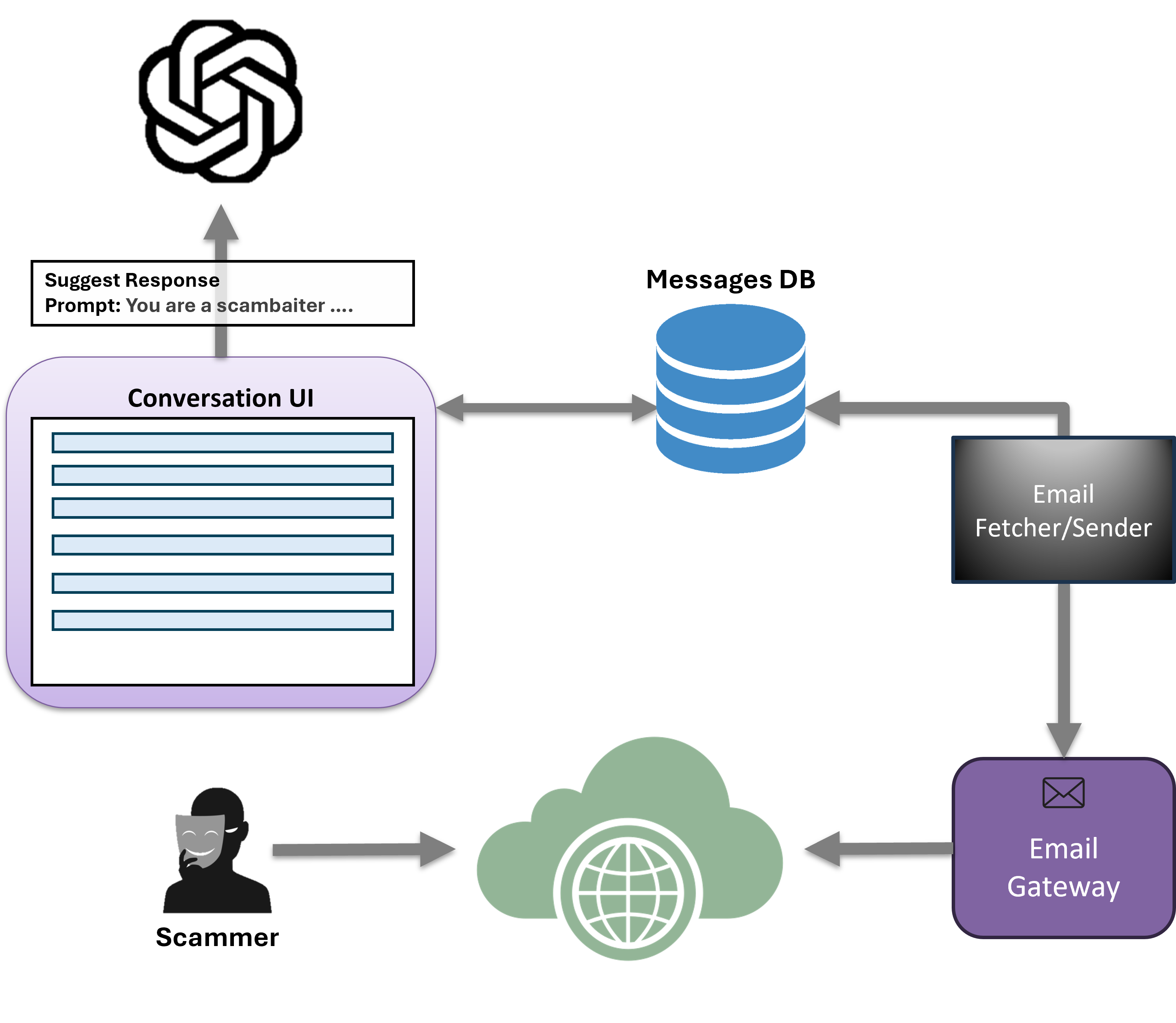

Figure 1: System architecture of the scambaiting platform, illustrating the integration of LLM-driven response generation, human-in-the-loop review, and message routing for live scammer engagement.

System Architecture and Deployment

The scambaiting platform uses a single-prompt architecture with ChatGPT as the core LLM. It supports two operational modes: an LLM-only mode, in which the LLM generates responses autonomously, and a human-in-the-loop (HITL) mode, in which human defenders review and optionally edit LLM-generated responses before dispatch. The platform manages multiple victim personas and email accounts, enabling realistic and diverse engagement strategies. All interactions are logged in a centralized database, supporting detailed longitudinal analysis.
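Read together with the mode descriptions below, the architecture amounts to a single generate-then-review loop. The following is a minimal sketch of that routing, not the authors' implementation. It assumes hypothetical `llm` and `review` callables that stand in for the ChatGPT call and the reviewer interface, neither of which the source describes. It also uses a hypothetical `build_prompt` layout and assumes that Mode I reviewers can approve or block a draft but cannot rewrite it.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical interfaces; the source does not describe the platform's actual API.
LLM = Callable[[str], str]                 # single prompt in -> draft reply out
Reviewer = Callable[[str], Optional[str]]  # draft in -> text to send, or None to block


@dataclass
class Decision:
    sent: bool
    text: Optional[str]
    edited: bool


def build_prompt(persona: str, history: list[str]) -> str:
    """Assumed single-prompt layout: persona instructions followed by the full thread."""
    thread = "\n".join(history)
    return f"{persona}\n\nConversation so far:\n{thread}\n\nWrite the victim's next reply."


def respond(persona: str, history: list[str], llm: LLM, review: Reviewer, mode: str) -> Decision:
    """Generate one reply and pass it through the human gate ("llm_only" or "hitl")."""
    draft = llm(build_prompt(persona, history))
    final = review(draft)                  # every draft passes a human gate in both modes
    if final is None:                      # reviewer blocked the draft
        return Decision(sent=False, text=None, edited=False)
    edited = final != draft
    if mode == "llm_only" and edited:
        # Assumption: in Mode I the reviewer may only approve or block, not rewrite.
        raise ValueError("Mode I reviewers cannot edit drafts")
    return Decision(sent=True, text=final, edited=edited)
```

Logging `edited` per message is also what the Mode II quality metrics discussed later would need.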

Dataset and Operational Modes

The dataset comprises over 2,600 seeded engagements, with more than 18,000 exchanged messages. Two operational modes are distinguished:

- Mode I (LLM-Only): Autonomous response generation with mandatory human review for safety.

- Mode II (LLM + HITL): Human operators review and edit LLM suggestions prior to sending.

Mode I was active for 120 days, while Mode II operated for 34 days. The dataset includes metadata such as timestamps, sender/recipient addresses, and flags for financial information disclosures.
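This metadata implies a per-message log keyed by engagement. Below is a minimal sketch of such a record, with a helper that regroups the log into chronologically ordered threads. The field names (`thread_id`, `from_scammer`, `financial_disclosure`, and so on) are hypothetical because the source does not give the schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class MessageRecord:
    """Hypothetical row layout for the logged messages; field names are illustrative."""
    thread_id: str
    mode: str                   # "I" (LLM-only) or "II" (LLM + HITL)
    timestamp: datetime
    sender: str
    recipient: str
    from_scammer: bool
    financial_disclosure: bool  # flag: message reveals e.g. a bank account or wallet


def group_threads(rows: list[MessageRecord]) -> dict[str, list[MessageRecord]]:
    """Group messages by engagement and order each thread chronologically."""
    threads: dict[str, list[MessageRecord]] = defaultdict(list)
    for row in rows:
        threads[row.thread_id].append(row)
    return {tid: sorted(msgs, key=lambda m: m.timestamp) for tid, msgs in threads.items()}
```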

Evaluation Metrics

The paper introduces a comprehensive evaluation framework, encompassing three primary categories:

- Disclosure Success: Information Disclosure Rate (IDR), Information Disclosure Speed (IDS)

- Message Generation Quality: Human Acceptance Rate (HAR), Average Edit Distance, Message Freshness

- Engagement Dynamics: Takeoff Ratio, Engagement Endurance, Response Invocation

These metrics enable granular assessment of both the operational effectiveness and conversational quality of the scambaiting system.

Empirical Results

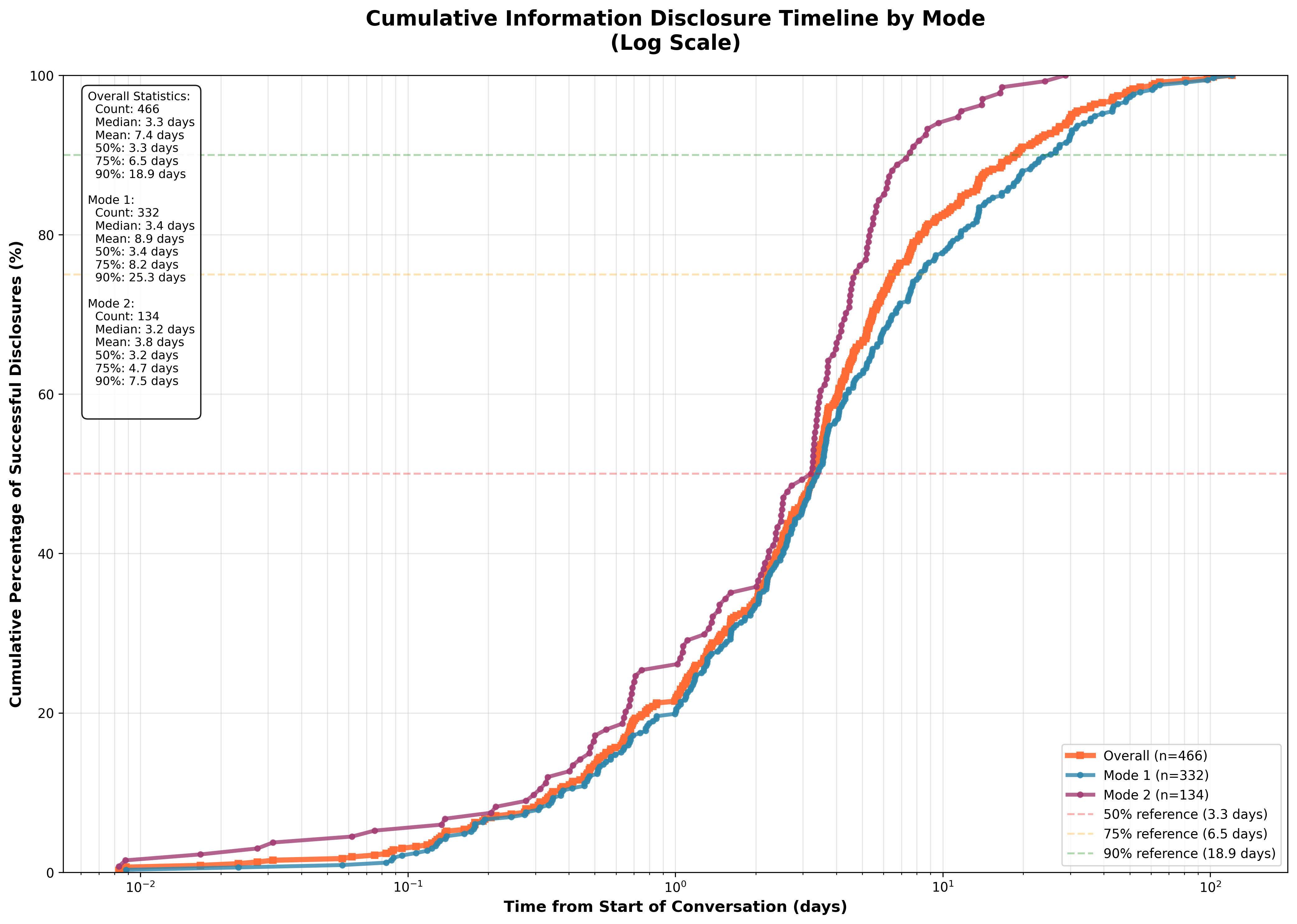

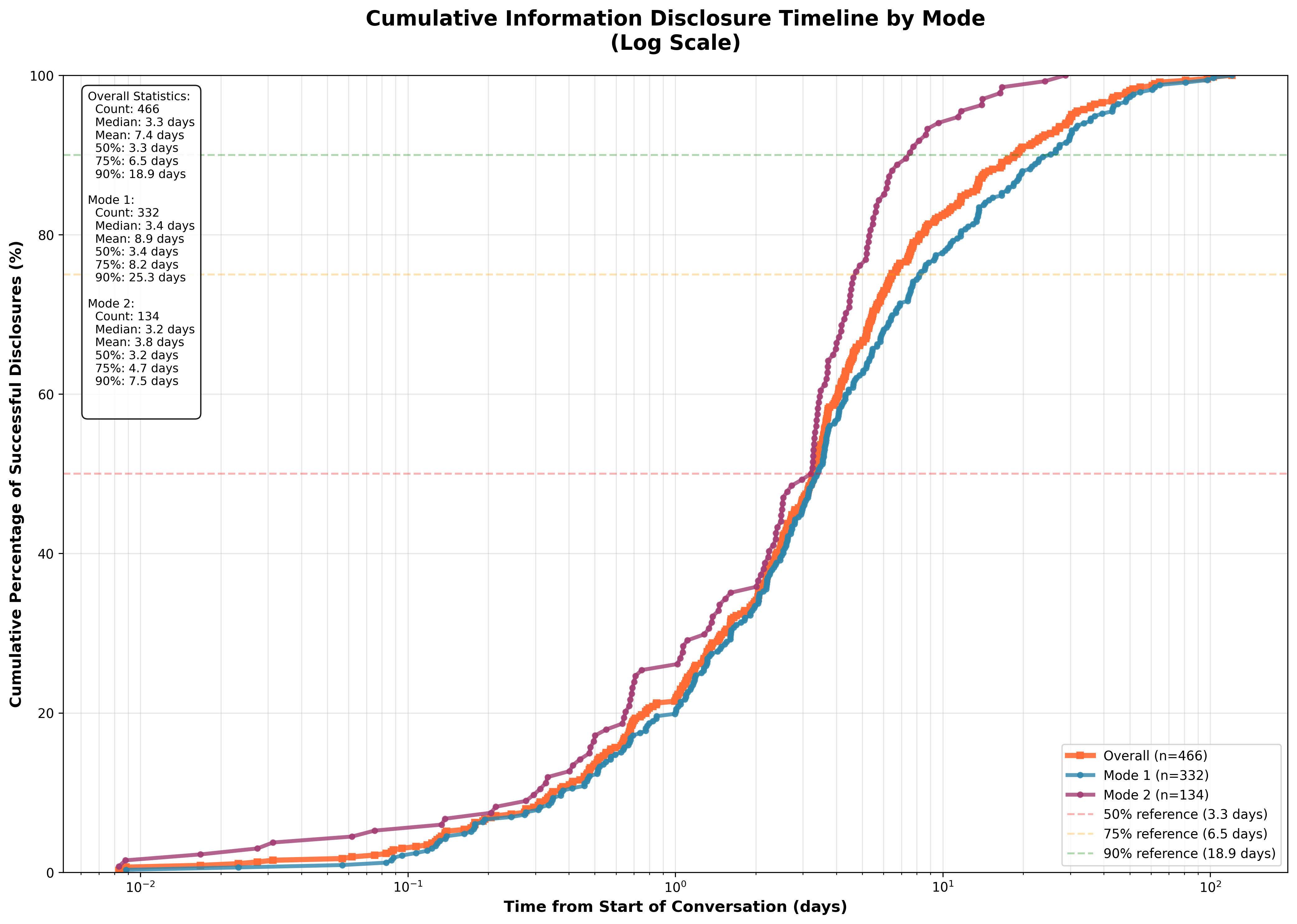

The system achieved an overall IDR of 17.66% across all engagements, rising to 31.74% for matured threads (i.e., those with at least one scammer response). Mode II (HITL) demonstrated a marginally higher IDR (34.01%) compared to Mode I (30.91%). Disclosures occurred rapidly: 50% of successful cases were completed within 3.3 days, and 90% within 18.9 days. Mode II facilitated faster disclosures, with a 90th percentile completion at 7.5 days versus 25.3 days for Mode I.

Figure 2: Cumulative timeline of successful information disclosures, highlighting the accelerated disclosure speed in HITL mode.
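The two disclosure metrics can be sketched from per-engagement summaries. This follows the definitions stated above, where a matured thread has at least one scammer response. It assumes that IDS is reported as a nearest-rank percentile of days-to-disclosure over successful engagements only. The `Engagement` type and both function names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Engagement:
    scammer_replies: int                 # number of scammer messages received in the thread
    days_to_disclosure: Optional[float]  # None if no financial details were disclosed


def idr(engagements: list[Engagement], matured_only: bool = False) -> float:
    """Information Disclosure Rate: share of engagements that yielded a disclosure.
    With matured_only=True, the denominator is restricted to threads with >= 1 scammer reply."""
    pool = [e for e in engagements if e.scammer_replies > 0] if matured_only else engagements
    hits = sum(e.days_to_disclosure is not None for e in pool)
    return hits / len(pool) if pool else 0.0


def ids_percentile(engagements: list[Engagement], percent: int) -> float:
    """Information Disclosure Speed: nearest-rank percentile of days-to-disclosure,
    computed over successful engagements only (1 <= percent <= 100)."""
    if not 1 <= percent <= 100:
        raise ValueError("percent must be in [1, 100]")
    times = sorted(e.days_to_disclosure for e in engagements if e.days_to_disclosure is not None)
    if not times:
        raise ValueError("no successful disclosures")
    rank = -(-percent * len(times) // 100)  # integer ceiling of percent * n / 100
    return times[rank - 1]
```

The per-mode comparison (Mode I vs Mode II) would come from running the same functions separately on each mode's subset of engagements.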

Message Generation Quality

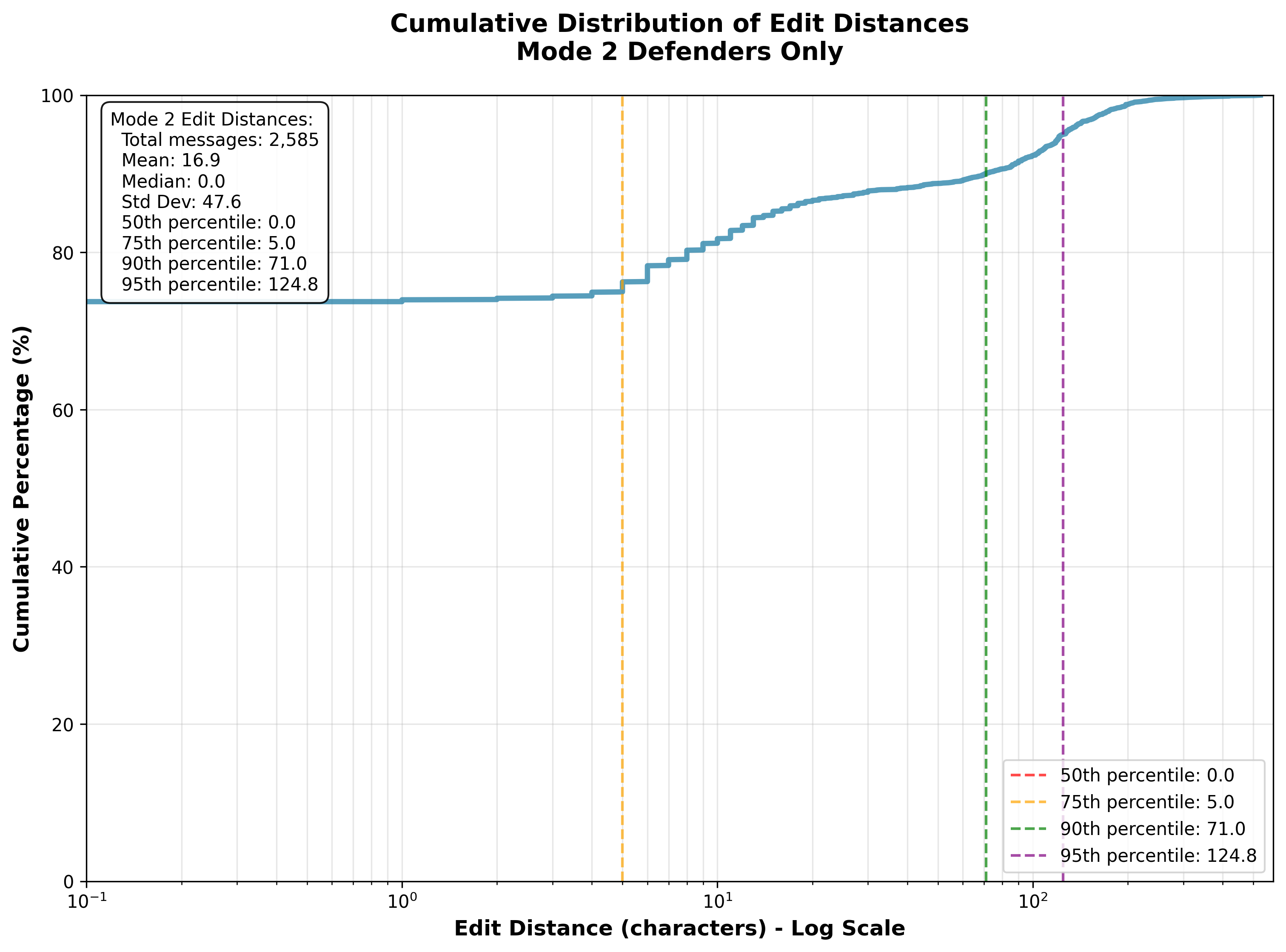

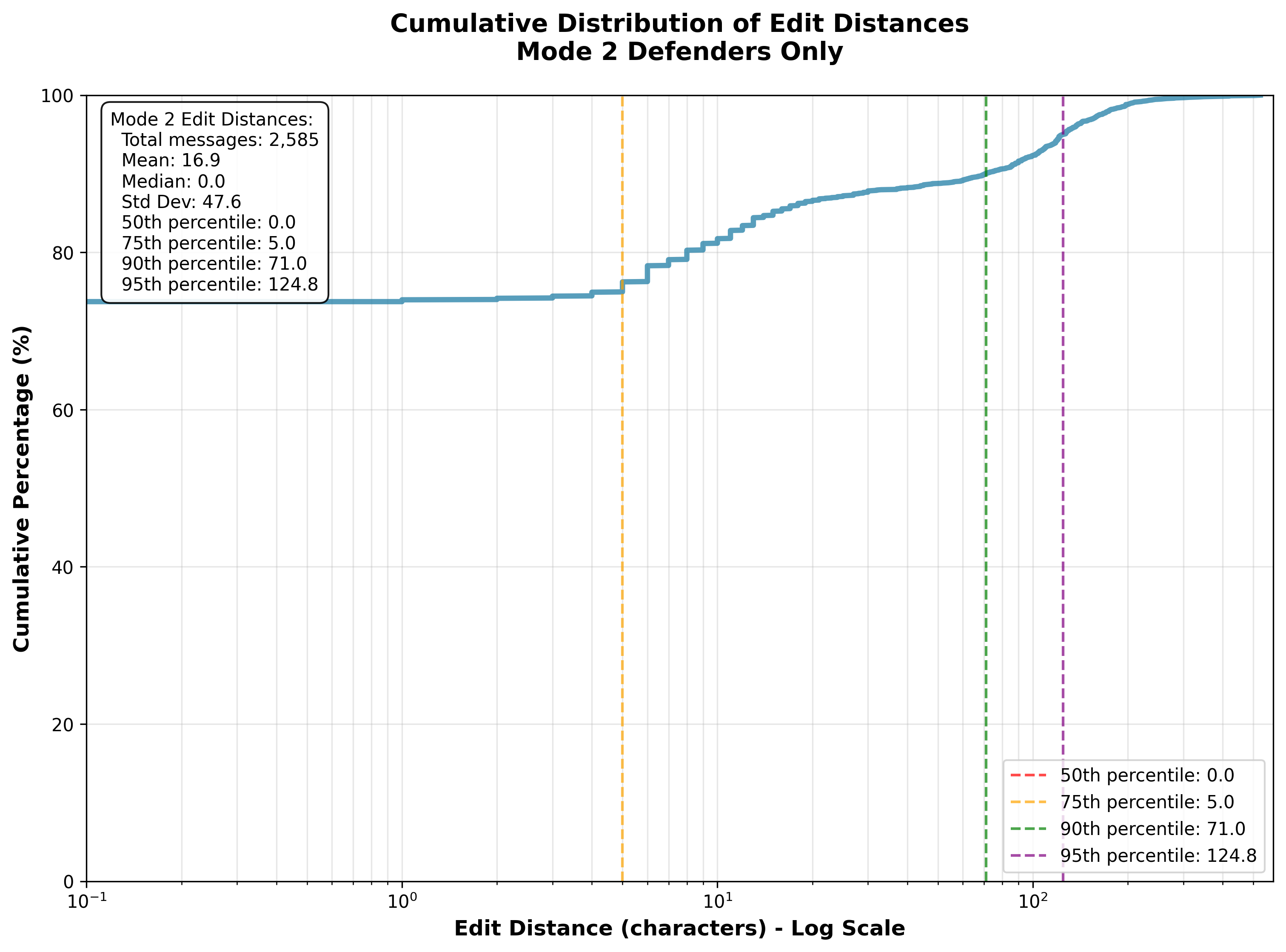

The Human Acceptance Rate (HAR) in Mode II was 69.02%, indicating strong alignment between LLM-generated responses and human defender preferences. The average edit distance for modified messages was 14.4 characters, with most edits being minor. Medium-length messages (51–200 characters) had the highest acceptance rate (72.83%). Successful engagements correlated with higher HAR, suggesting that conversational alignment is predictive of positive outcomes.

Figure 3: Cumulative distribution of edit distances for LLM-generated messages, showing that the majority were accepted without modification.
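HAR and edit distance can be computed from the (LLM draft, sent text) pairs logged in Mode II. The source reports the figures but not the exact formulas, so this sketch rests on two assumptions. HAR is taken as the share of drafts sent verbatim. Edit distance is taken as character-level Levenshtein distance, averaged over modified messages only.

```python
def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def har_and_mean_edit(pairs: list[tuple[str, str]]) -> tuple[float, float]:
    """pairs = (llm_draft, sent_text). HAR counts drafts sent unchanged;
    the mean edit distance is taken over modified messages only."""
    distances = [levenshtein(draft, sent) for draft, sent in pairs]
    accepted = sum(d == 0 for d in distances)
    edited = [d for d in distances if d > 0]
    har = accepted / len(pairs) if pairs else 0.0
    mean_edit = sum(edited) / len(edited) if edited else 0.0
    return har, mean_edit
```

Bucketing `pairs` by draft length (for example, 51 to 200 characters) before calling `har_and_mean_edit` gives the per-length acceptance rates.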

Message freshness analysis revealed that attacker messages were consistently more diverse than defender messages. Early defender responses relied heavily on templated language, while later turns exhibited greater linguistic novelty.
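The source does not say how freshness is measured. One plausible stand-in, which is purely an assumption, scores each message by the share of its word bigrams that the same side has not used earlier in the thread. Under this measure, a repeated templated reply scores zero.

```python
import re


def bigrams(text: str) -> set[tuple[str, str]]:
    """Lowercased word bigrams of a message."""
    words = re.findall(r"[a-z']+", text.lower())
    return set(zip(words, words[1:]))


def freshness(messages: list[str]) -> list[float]:
    """Assumed metric: for one side's messages in order, the share of each message's
    bigrams not used earlier by that side (messages with no bigrams score 0.0)."""
    seen: set[tuple[str, str]] = set()
    scores = []
    for msg in messages:
        grams = bigrams(msg)
        scores.append(len(grams - seen) / len(grams) if grams else 0.0)
        seen |= grams
    return scores
```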

Engagement Dynamics

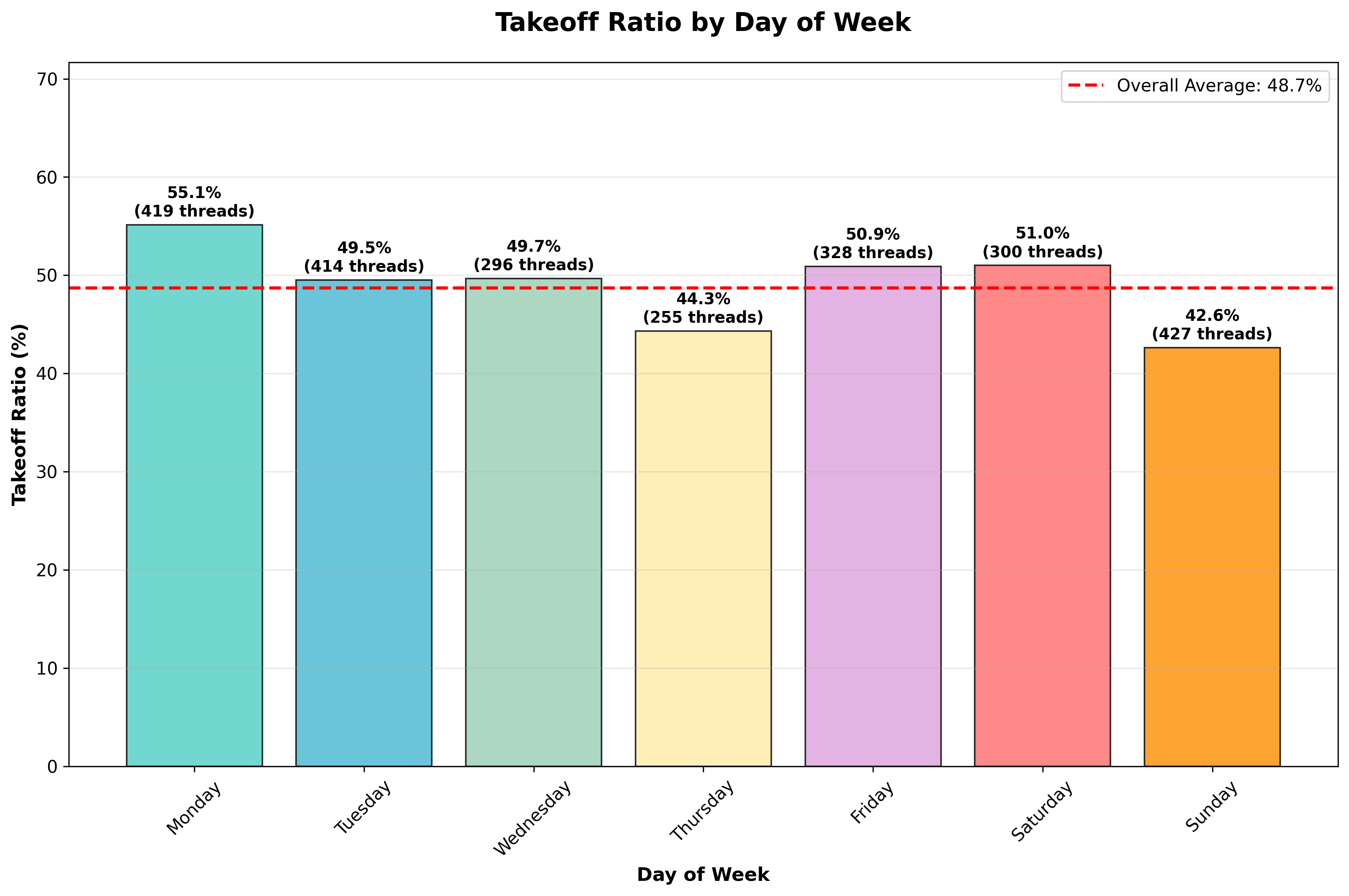

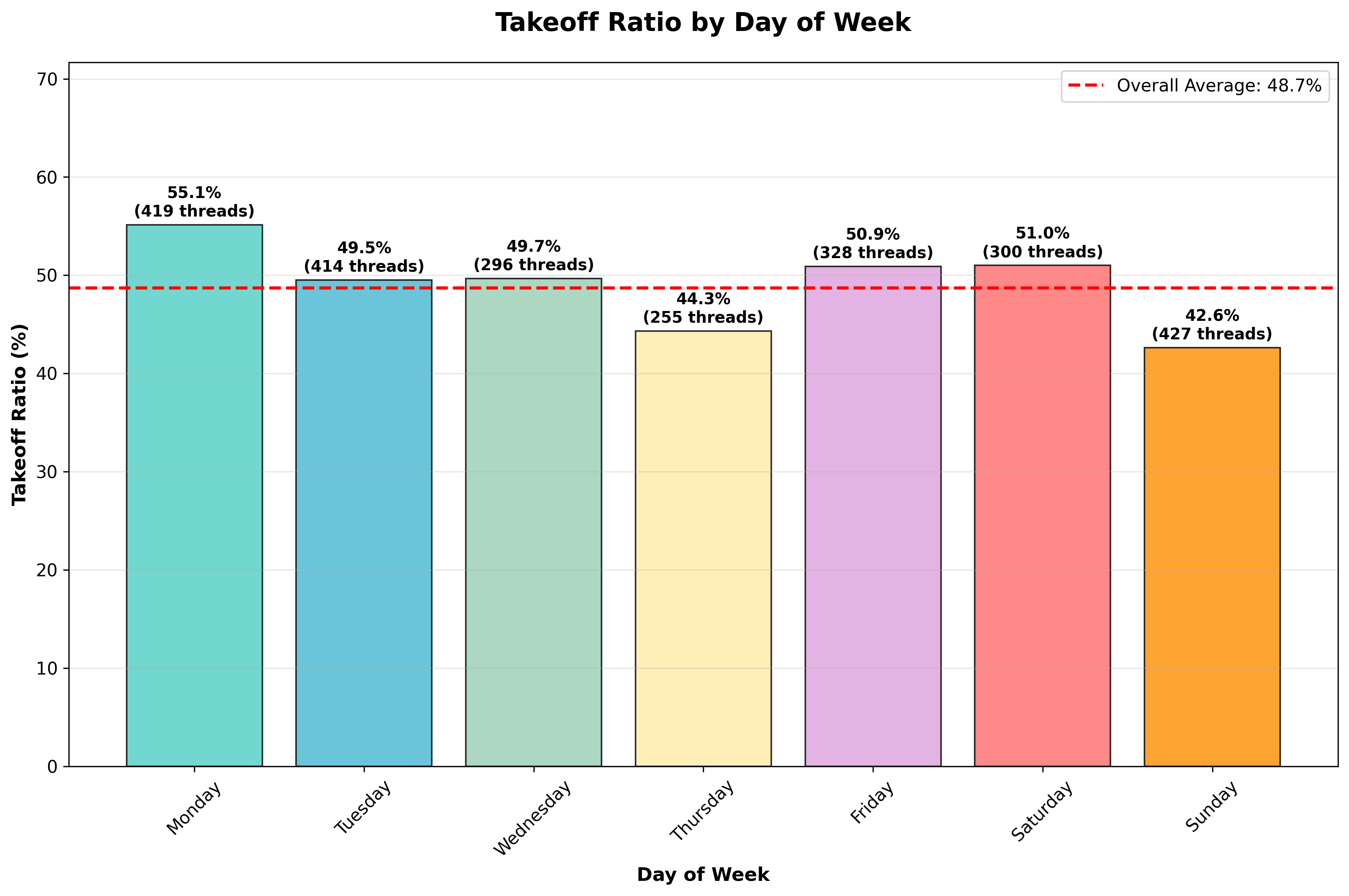

The takeoff ratio—the proportion of seeded engagements that received at least one scammer response—was 48.7%. Message brevity and timing were critical: shorter, well-phrased initial messages increased the likelihood of engagement. Day-of-week analysis showed moderate variation, with Mondays yielding the highest takeoff rates.

Figure 4: Day-of-week variation in takeoff ratio, with Mondays exhibiting the highest scammer responsiveness.
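A minimal sketch of the takeoff ratio and its day-of-week breakdown follows. It assumes each seeded engagement is reduced to (date the initial message was sent, whether any scammer reply arrived). Bucketing by the send date is also an assumption about how the breakdown was computed.

```python
from collections import Counter
from datetime import date

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def takeoff_ratio(seeds: list[tuple[date, bool]]) -> float:
    """Share of seeded engagements that received at least one scammer reply."""
    return sum(replied for _, replied in seeds) / len(seeds) if seeds else 0.0


def takeoff_by_weekday(seeds: list[tuple[date, bool]]) -> dict[str, float]:
    """Takeoff ratio bucketed by the weekday on which the initial message was sent."""
    sent, took_off = Counter(), Counter()
    for day, replied in seeds:
        name = WEEKDAYS[day.weekday()]  # date.weekday(): Monday == 0
        sent[name] += 1
        took_off[name] += int(replied)
    return {name: took_off[name] / sent[name] for name in sent}
```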

Engagement endurance was strongly associated with success. Successful engagements averaged 23.4 message turns and 14.8 days, compared to 6.9 turns and 9.1 days for unsuccessful ones. HITL mode achieved comparable conversational depth in significantly less time.
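Engagement endurance reduces to per-thread turn counts and durations, split by outcome. The sketch below assumes each thread is summarized as (message turns, duration in days, success flag). The summary shape is illustrative.

```python
from statistics import mean


def endurance_summary(threads: list[tuple[int, float, bool]]) -> dict[bool, tuple[float, float]]:
    """threads = (message turns, duration in days, disclosure succeeded?).
    Returns (mean turns, mean days) for successful (True) and unsuccessful (False) threads."""
    summary = {}
    for outcome in (True, False):
        group = [(turns, days) for turns, days, ok in threads if bool(ok) == outcome]
        if group:  # skip an outcome with no threads rather than divide by zero
            summary[outcome] = (mean(t for t, _ in group), mean(d for _, d in group))
    return summary
```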

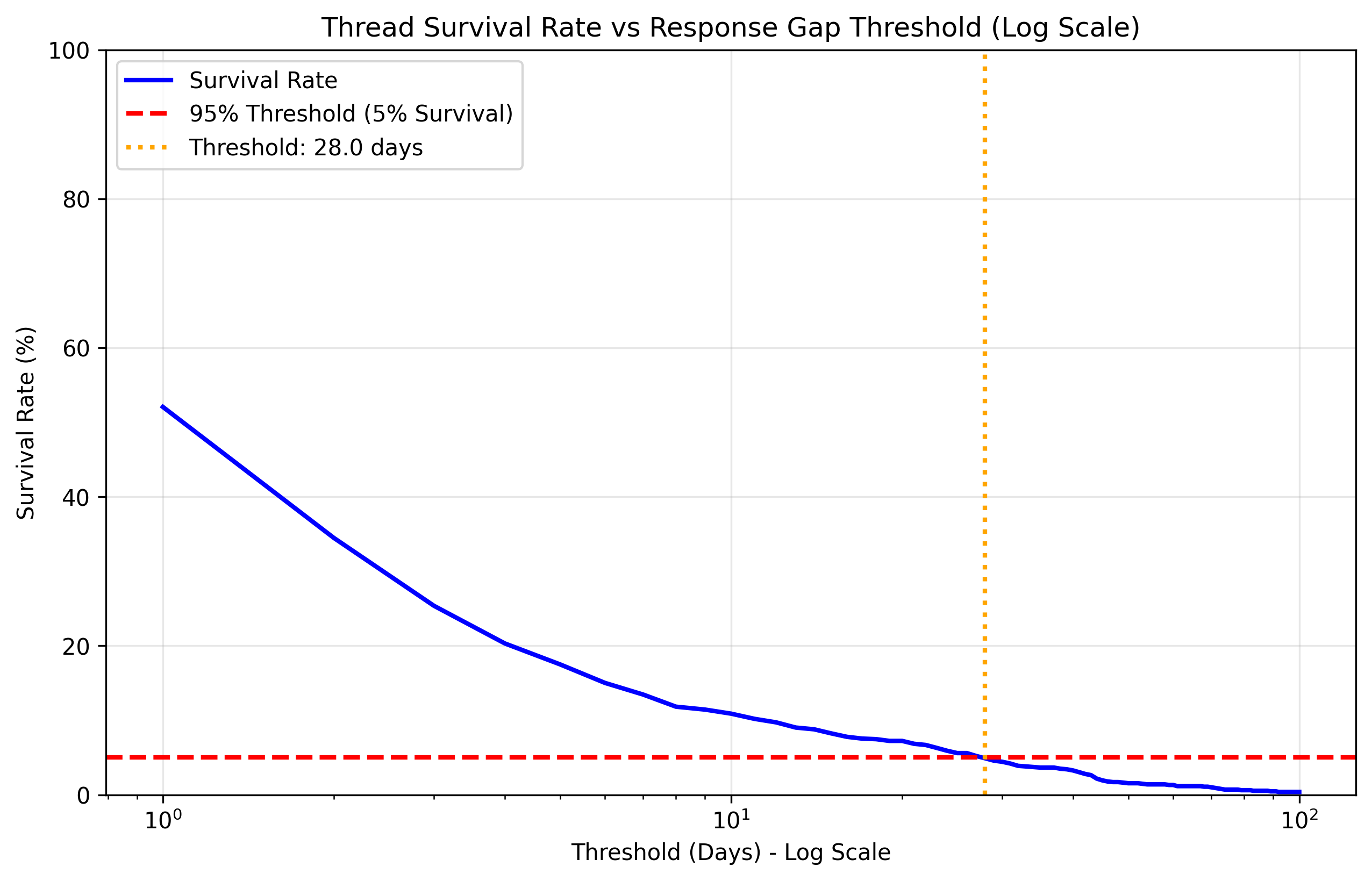

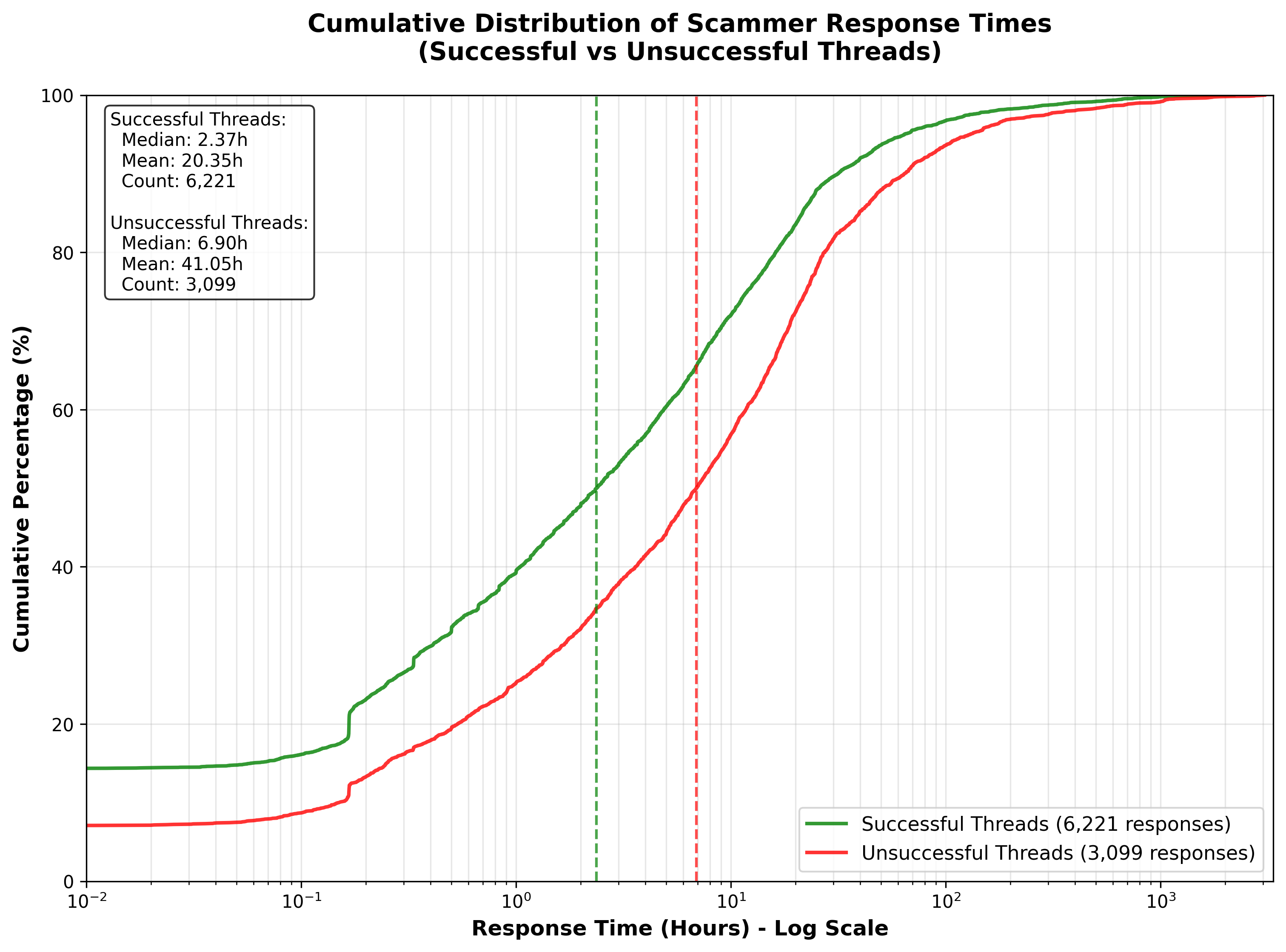

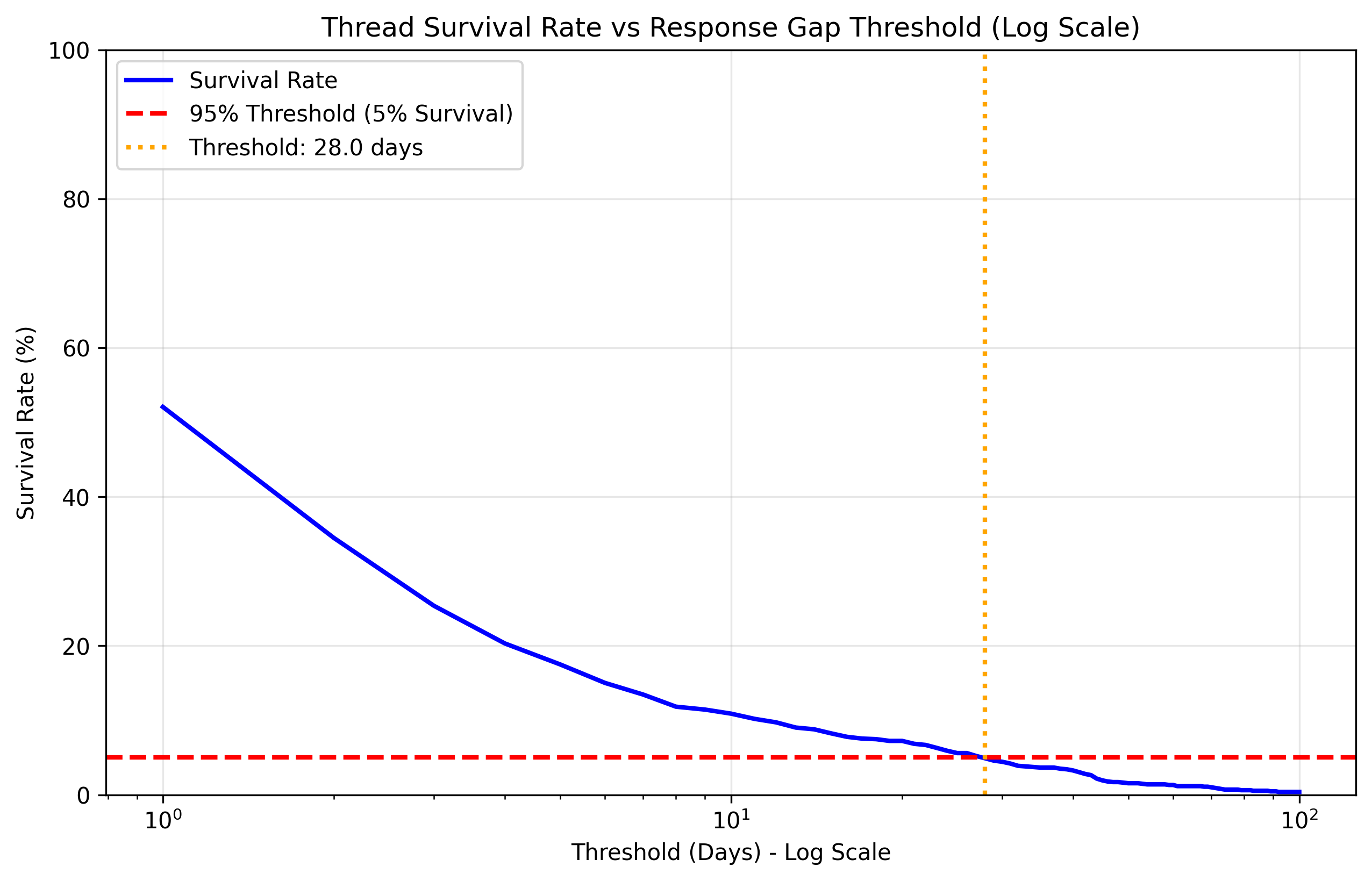

Scammer response latency was a robust predictor of engagement quality. Successful engagements featured faster scammer replies (mean: 20.35 hours) than unsuccessful ones (mean: 41.05 hours). Survival analysis indicated that 95% of engagements could be considered closed after 28 days of no response.

Figure 5: Cumulative distribution of scammer response times, demonstrating that rapid replies are strongly associated with successful intelligence extraction.

Figure 6: Survival rate of scammer responses over time, establishing a 28-day inactivity threshold after which 95% of engagements can be considered closed.
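Response latency and the closure rule can be sketched as follows, under several assumptions. Latency is measured from the first unanswered defender message to the next scammer message. The survival analysis is replaced by a simpler empirical quantile over observed reply gaps. This ignores threads that never resumed, which a proper survival estimator such as Kaplan-Meier would treat as censored. All function names are hypothetical.

```python
from datetime import datetime, timedelta


def reply_latencies_hours(events: list[tuple[datetime, bool]]) -> list[float]:
    """events = chronologically ordered (timestamp, from_scammer) pairs for one thread.
    Latency runs from the first unanswered defender message to the next scammer message."""
    out, pending = [], None
    for ts, from_scammer in events:
        if not from_scammer and pending is None:
            pending = ts  # start the clock at the first unanswered defender message
        elif from_scammer and pending is not None:
            out.append((ts - pending).total_seconds() / 3600)
            pending = None
    return out


def closure_threshold_days(gaps_days: list[float], percent: int = 95) -> float:
    """Smallest observed gap T such that `percent`% of observed reply gaps are <= T.
    Simplified stand-in for the survival analysis (no censoring)."""
    ordered = sorted(gaps_days)
    if not ordered:
        raise ValueError("no observed reply gaps")
    rank = -(-percent * len(ordered) // 100)  # integer ceiling of percent * n / 100
    return ordered[rank - 1]


def is_closed(last_activity: datetime, now: datetime, threshold_days: float = 28) -> bool:
    """Treat a thread as closed once it has been silent for at least threshold_days."""
    return now - last_activity >= timedelta(days=threshold_days)
```

Averaging `reply_latencies_hours` separately over successful and unsuccessful threads gives the kind of mean-latency comparison reported above.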

Design Insights and Operational Implications

The analysis yields several actionable insights:

- Persistence is critical: Multi-turn, sustained engagement dramatically increases the likelihood of sensitive disclosures.

- Human oversight accelerates success: HITL configurations yield faster and more efficient intelligence extraction.

- Message quality matters: HAR is a strong proxy for conversational alignment and outcome quality.

- Template diversity is necessary: Over-reliance on repetitive language in early turns may hinder engagement realism.

- Initial outreach optimization: Concise, well-timed first messages are essential for maximizing takeoff rates.

- Engagement depth drives outcomes: Longer threads are more likely to yield actionable intelligence.

- Scammer responsiveness is predictive: Fast replies signal higher engagement quality and increased probability of disclosure.

These findings inform the design of future scambaiting systems, emphasizing the value of hybrid automation, adaptive dialogue management, and strategic human intervention.

Limitations and Future Directions

While the system demonstrates robust performance, several limitations remain. The takeoff bottleneck—where half of outreach attempts fail to elicit any response—suggests a need for improved initial message generation and targeting. Further research should explore predictive modeling of scammer behavior, adaptive engagement strategies, and the integration of automated content evaluation metrics. Longitudinal analysis of conversational state transitions and the qualitative impact of human editing are promising avenues for future work.

Conclusion

This paper provides a detailed, empirical assessment of an LLM-powered scambaiting system, establishing operational benchmarks and design principles for proactive scam intelligence collection. The results demonstrate that hybrid systems combining generative AI and human oversight can efficiently and effectively extract actionable threat intelligence from real-world scammers. The proposed evaluation framework and insights lay the foundation for scalable, adaptive, and robust active-defense solutions in the evolving landscape of financial fraud and scam mitigation.