- The paper introduces a novel agentic framework that decomposes SAR generation into specialized tasks, enhancing speed and accuracy.

- It integrates an AI-Privacy Guard layer and external intelligence to ensure data confidentiality and timely, context-aware compliance.

- The system achieves 70% narrative completeness and 61% time savings, validated by domain experts, demonstrating its practical efficacy.

Agentic AI for Automated, Trustworthy SAR Narrative Generation in AML Compliance

Introduction and Motivation

The paper introduces Co-Investigator AI, a modular agentic framework designed to automate and enhance the generation of Suspicious Activity Reports (SARs) for Anti-Money Laundering (AML) compliance. SAR narratives are critical for regulatory and law enforcement review, yet drafting them manually is time-consuming (25–315 minutes per report), inconsistent, and increasingly strained by growing transaction volumes and typological complexity. Existing LLM-based approaches, while fluent, suffer from factual hallucination, poor typology alignment, and limited explainability, making them unsuitable for compliance-critical domains. The proposed agentic system addresses these limitations by decomposing the SAR workflow into specialized, interacting agents, each responsible for distinct analytic, reasoning, and validation tasks, with human investigators firmly in the loop.

Limitations of Traditional and Monolithic GenAI SAR Workflows

Manual SAR drafting is characterized by fragmented tool usage, high latency, cognitive overload, and error risk. Investigators must synthesize data from disparate sources, which leads to inconsistent narrative quality and scalability bottlenecks. Direct LLM-based generation, tested on real-world AML cases, performs well on simple, templated scenarios but degrades sharply as complexity grows, producing hallucinated details that require extensive manual review. Hallucination rates in LLM-generated compliance content frequently exceed 20–30%, nullifying time savings and introducing unacceptable compliance risk. These findings motivate a shift toward agentic decomposition, in which modular agents reason, validate, and collaborate with human experts.

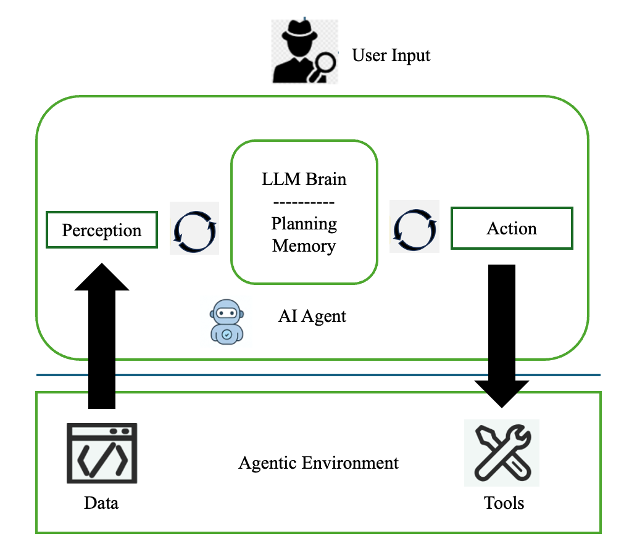

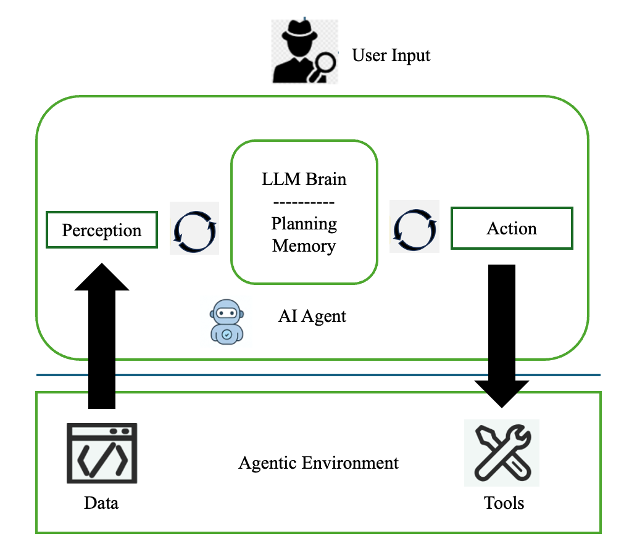

Agentic AI Architecture: Perceive–Reason–Act Paradigm

Co-Investigator AI adopts a Perceive–Reason–Act architecture, inspired by recent advances in autonomous agentic systems.

Figure 1: Perceive–Reason–Act agentic architecture for SAR generation, enabling modular data ingestion, reasoning, and action.

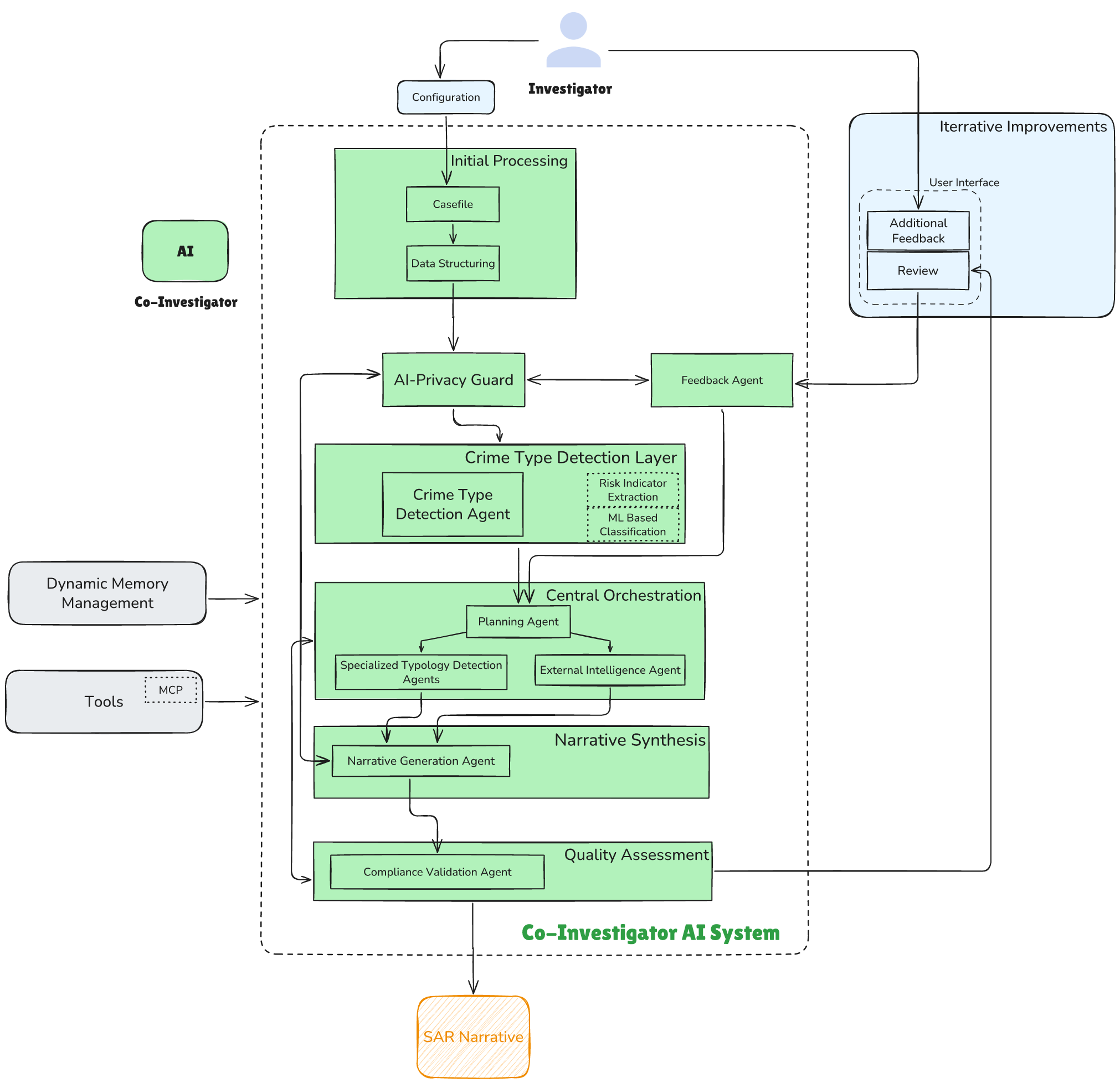

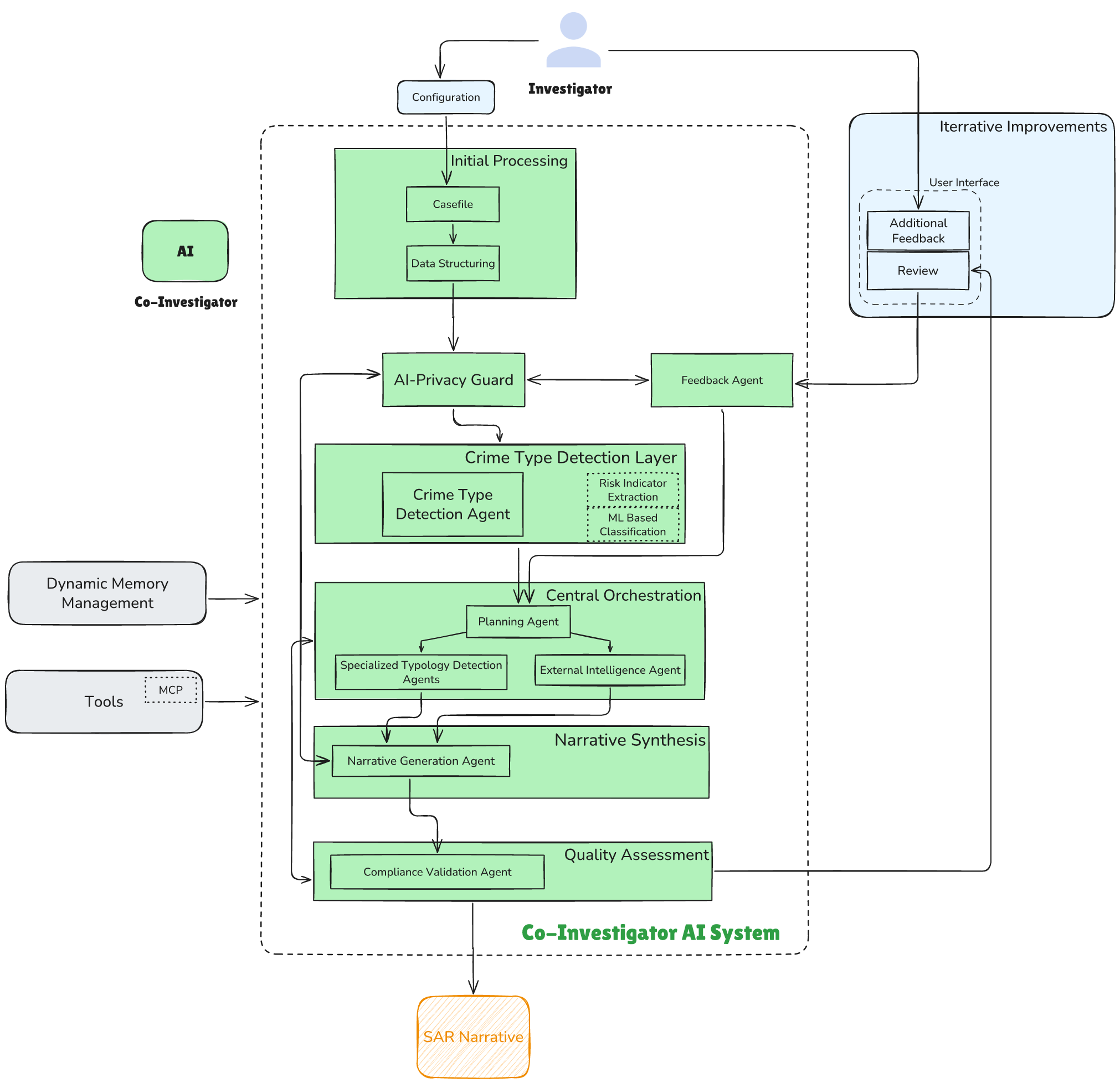
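The Perceive–Reason–Act cycle described above can be sketched as three small functions driving one case. This is a minimal illustration, not the paper's implementation: `CaseState`, the action names, and the rule-based `reason` step are hypothetical placeholders for what would be LLM-backed planning in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CaseState:
    """Working state for one case (hypothetical structure, not from the paper)."""
    raw_inputs: dict
    observations: dict = field(default_factory=dict)
    actions_taken: list = field(default_factory=list)


def perceive(state: CaseState) -> None:
    # Perceive: keep only the non-empty inputs that arrived for this case.
    state.observations = {k: v for k, v in state.raw_inputs.items() if v}


def reason(state: CaseState) -> List[str]:
    # Reason: choose actions from what was observed. A real system would use an
    # LLM-backed planner here; these rules are placeholders.
    plan = []
    if "transactions" in state.observations:
        plan.append("analyze_transactions")
    if "counterparties" in state.observations:
        plan.append("screen_counterparties")
    plan.append("draft_narrative")
    return plan


def act(state: CaseState, plan: List[str],
        tools: Dict[str, Callable[[CaseState], str]]) -> None:
    # Act: execute each planned step with the matching tool and record the result.
    for step in plan:
        state.actions_taken.append((step, tools[step](state)))


def run_cycle(raw_inputs: dict, tools: Dict[str, Callable[[CaseState], str]]) -> CaseState:
    state = CaseState(raw_inputs=raw_inputs)
    perceive(state)
    act(state, reason(state), tools)
    return state


# Usage with stub tools standing in for specialized agents.
stub_tools = {
    "analyze_transactions": lambda s: f"{len(s.observations['transactions'])} transactions reviewed",
    "screen_counterparties": lambda s: "counterparties screened",
    "draft_narrative": lambda s: "draft ready for investigator review",
}
state = run_cycle({"transactions": ["TXN-1", "TXN-2"], "counterparties": []}, stub_tools)
```

Because the counterparty list is empty, `perceive` drops it and the plan skips screening; each stage can be swapped out independently, which is the point of the decomposition.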

Agents are specialized for data ingestion, crime type detection, planning, typology analysis, external intelligence gathering, narrative generation, compliance validation, and feedback integration. The system is orchestrated by a Planning Agent, which dynamically spawns sub-agents based on detected crime typologies and investigator feedback. This modularity ensures isolation, fault tolerance, scalability, and maintainability, with each agent operating independently yet cohesively.
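A Planning Agent that spawns sub-agents from detected typologies and investigator requests might look roughly like the sketch below. The registry, typology labels, the 0.5 threshold, and the `try`/`except` isolation are illustrative assumptions, not details taken from the paper.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical registry mapping a typology label to a specialized sub-agent.
SubAgent = Callable[[dict], dict]
SUB_AGENT_REGISTRY: Dict[str, SubAgent] = {
    "payment_velocity": lambda case: {"txn_count": len(case["transactions"])},
    "jurisdictional_risk": lambda case: {
        "countries": sorted({t["country"] for t in case["transactions"]})
    },
    "dispute_patterns": lambda case: {"disputes": case["disputes"]},
}


def plan_sub_agents(typology_probs: Dict[str, float], threshold: float = 0.5,
                    requested: Iterable[str] = ()) -> List[str]:
    # Spawn an agent for each sufficiently likely typology, plus any analysis
    # an investigator explicitly asked for in feedback.
    selected = {t for t, p in typology_probs.items() if p >= threshold}
    selected.update(requested)
    return sorted(t for t in selected if t in SUB_AGENT_REGISTRY)


def run_planning_agent(case: dict, typology_probs: Dict[str, float],
                       threshold: float = 0.5,
                       requested: Iterable[str] = ()) -> Dict[str, dict]:
    results = {}
    for name in plan_sub_agents(typology_probs, threshold, requested):
        try:
            results[name] = SUB_AGENT_REGISTRY[name](case)
        except Exception as exc:
            # Fault isolation: a failing sub-agent is recorded, not fatal to the run.
            results[name] = {"error": repr(exc)}
    return results


case = {"transactions": [{"country": "GB"}, {"country": "AE"}]}  # no "disputes" key
results = run_planning_agent(
    case,
    {"payment_velocity": 0.8, "jurisdictional_risk": 0.3, "dispute_patterns": 0.1},
    requested=["dispute_patterns"],
)
```

Here the dispute agent is spawned only because of the investigator request, and its failure (the case has no `disputes` field) is captured without stopping the velocity analysis.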

Figure 2: Modular agentic architecture of Co-Investigator AI, illustrating the interaction of specialized agents for SAR generation.

Data Privacy and Compliance: AI-Privacy Guard Layer

A dedicated AI-Privacy Guard layer precedes LLM processing, anonymizing sensitive entities (class-1/class-2 confidential data) using a RoBERTa+CRF model optimized for unstructured, lengthy inputs and strict SLA requirements. This layer operates across multiple workflow stages, ensuring robust data protection during agent interactions and human-in-the-loop review. The privacy guard is tightly integrated with pre-processing, typology detection, narrative generation, and feedback agents, maintaining compliance with regulatory mandates for data confidentiality.
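The masking step of such a privacy layer can be sketched as placeholder substitution over entity spans. This sketch assumes the spans come from an upstream NER model (the RoBERTa+CRF detector itself is not reproduced), and it assumes a reversible pseudonymization scheme with a placeholder format chosen purely for illustration; the paper may anonymize differently.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class EntitySpan:
    start: int
    end: int
    label: str  # hypothetical labels, e.g. "PERSON", "ACCOUNT"


def mask(text: str, spans: List[EntitySpan]) -> Tuple[str, Dict[str, str]]:
    """Replace detected entities with placeholders; return masked text and the mapping."""
    to_placeholder: Dict[Tuple[str, str], str] = {}
    mapping: Dict[str, str] = {}  # placeholder -> original; never sent to the LLM
    counts: Dict[str, int] = {}
    pieces, cursor = [], 0
    for span in sorted(spans, key=lambda s: s.start):  # assumes non-overlapping spans
        surface = text[span.start:span.end]
        key = (span.label, surface)
        if key not in to_placeholder:
            # Same value -> same placeholder, so the LLM can still link mentions.
            counts[span.label] = counts.get(span.label, 0) + 1
            placeholder = f"[{span.label}_{counts[span.label]}]"
            to_placeholder[key] = placeholder
            mapping[placeholder] = surface
        pieces.append(text[cursor:span.start])
        pieces.append(to_placeholder[key])
        cursor = span.end
    pieces.append(text[cursor:])
    return "".join(pieces), mapping


def unmask(text: str, mapping: Dict[str, str]) -> str:
    """Restore original values, e.g. for the investigator's review screen."""
    for placeholder, surface in mapping.items():
        text = text.replace(placeholder, surface)
    return text


text = "Jane Doe moved funds from account 12345678; Jane Doe then closed it."
acct = text.index("12345678")
second = text.index("Jane Doe", 1)
spans = [EntitySpan(0, 8, "PERSON"), EntitySpan(acct, acct + 8, "ACCOUNT"),
         EntitySpan(second, second + 8, "PERSON")]
masked, mapping = mask(text, spans)
```

Keeping the mapping outside the LLM boundary lets agents reason over consistent placeholders while only the secure review interface sees real identifiers.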

Crime Type Detection and Specialized Analytical Agents

Crime type detection is achieved via a hybrid approach: automated risk-indicator extraction tools and tree-based ensemble ML models (Random Forest, Gradient Boosting) analyze structured and unstructured data to produce probabilistic, multi-typology classifications. Specialized agents further analyze transaction fraud, payment velocity, jurisdictional risk, textual anomalies, geographic inconsistencies, account health, and dispute patterns. This decomposition enables precise, context-sensitive risk assessment and more accurate narratives.
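One way to combine the two signals is sketched below. `model_probs` stands in for per-typology probabilities from a tree ensemble (for example, what scikit-learn's `RandomForestClassifier.predict_proba` would supply for each typology), while the indicator-to-typology table, the linear blend, its 0.3 weight, and the 0.5 threshold are all assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical mapping from rule-extracted risk indicators to typologies they support.
INDICATOR_TYPOLOGIES: Dict[str, Set[str]] = {
    "rapid_in_out": {"money_mule"},
    "just_below_reporting_threshold": {"structuring"},
    "high_risk_jurisdiction": {"money_mule", "sanctions_evasion"},
}


def hybrid_scores(indicators: Iterable[str], model_probs: Dict[str, float],
                  rule_weight: float = 0.3) -> Dict[str, float]:
    """Blend ensemble-model probabilities with rule-based indicator support per typology."""
    indicators = list(indicators)
    typologies = set(model_probs)
    for ind in indicators:
        typologies |= INDICATOR_TYPOLOGIES.get(ind, set())
    scores = {}
    for typ in sorted(typologies):
        hits = sum(typ in INDICATOR_TYPOLOGIES.get(ind, set()) for ind in indicators)
        rule_score = min(1.0, hits / 2)  # saturates at two supporting indicators
        scores[typ] = (1 - rule_weight) * model_probs.get(typ, 0.0) + rule_weight * rule_score
    return scores


def detect(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    # Multi-label output: every typology at or above the threshold is reported.
    return [typ for typ, s in scores.items() if s >= threshold]


# model_probs stands in for a tree ensemble's per-typology probabilities.
scores = hybrid_scores(["rapid_in_out", "high_risk_jurisdiction"],
                       {"money_mule": 0.72, "structuring": 0.10, "sanctions_evasion": 0.15})
detected = detect(scores)
```

Returning the full score dictionary, not just the detected labels, keeps the classification probabilistic so downstream agents can see near-miss typologies.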

External Intelligence Integration via MCP

The External Intelligence Agent leverages the Model Context Protocol (MCP) for secure, tool-agnostic integration of external data sources (e.g., news, sanctions lists, regulatory advisories). MCP enables dynamic tool discovery and invocation, enriching SAR narratives with timely, relevant intelligence without bespoke API development.
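At the wire level, MCP tool discovery and invocation are JSON-RPC 2.0 requests using the `tools/list` and `tools/call` methods. The sketch below only builds and inspects those messages; a production client would use an official MCP SDK, perform the `initialize` handshake first, and send messages over a real transport. The tool names, descriptions, and query are hypothetical.

```python
import itertools
import json
from typing import Any, Dict, Optional

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    # MCP messages are JSON-RPC 2.0 requests with a unique id per request.
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params or {}}


def list_tools_request() -> Dict[str, Any]:
    return jsonrpc_request("tools/list")  # discovery: ask the server what tools it offers


def call_tool_request(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def pick_tool(list_result: Dict[str, Any], keyword: str) -> Optional[str]:
    """Select a discovered tool by keyword instead of hard-coding a vendor API."""
    for tool in list_result.get("tools", []):
        haystack = f"{tool['name']} {tool.get('description', '')}".lower()
        if keyword.lower() in haystack:
            return tool["name"]
    return None


discover = list_tools_request()
# Hypothetical server reply to tools/list (tool names are illustrative only).
list_result = {"tools": [
    {"name": "news_search", "description": "Search adverse media",
     "inputSchema": {"type": "object"}},
    {"name": "sanctions_lookup", "description": "Screen a name against sanctions lists",
     "inputSchema": {"type": "object"}},
]}
tool_name = pick_tool(list_result, "sanctions")
call = call_tool_request(tool_name, {"query": "Example Trading LLC"})
wire = json.dumps(call)  # what a transport (stdio or HTTP) would carry
```

Because tools are selected from the discovered list at run time, adding a new intelligence source means registering another MCP server rather than writing a new integration.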

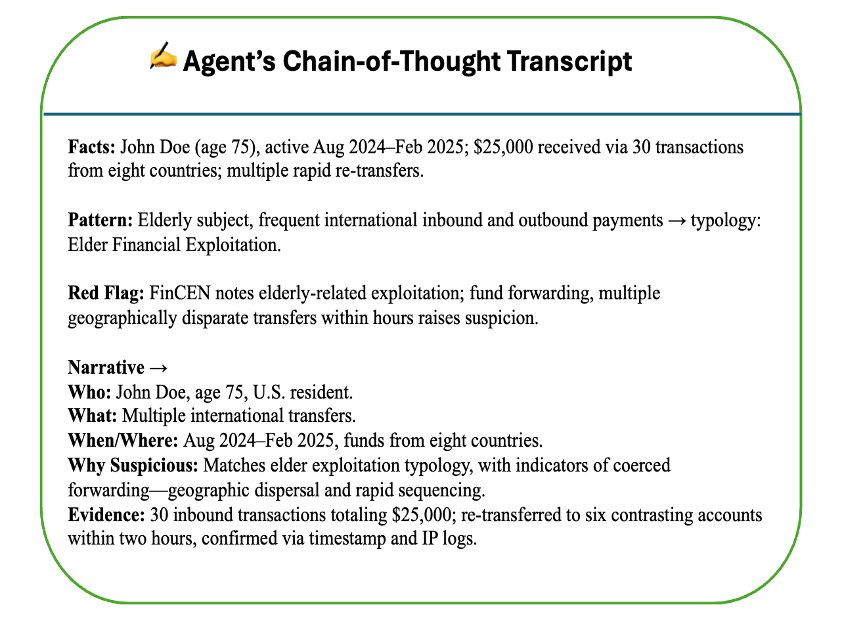

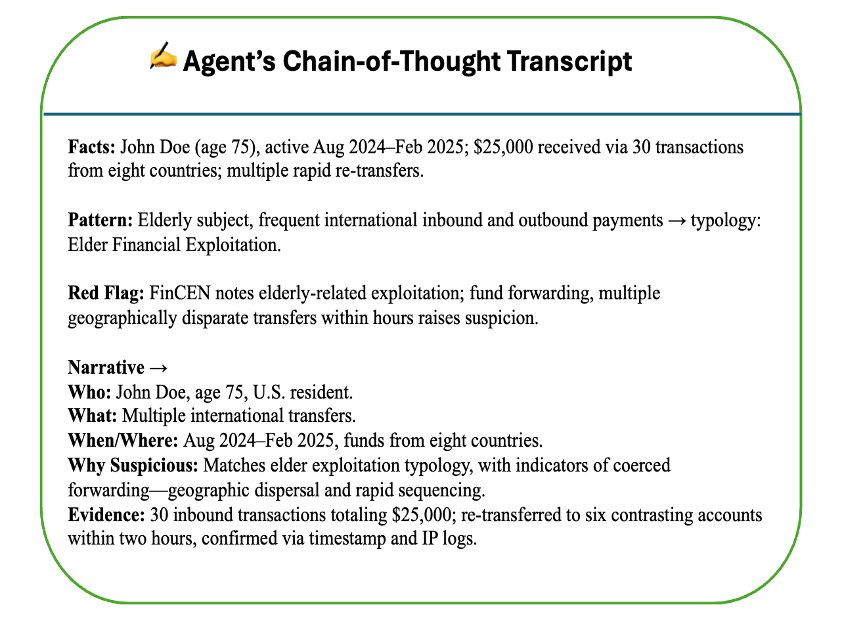

Chain-of-Thought Reasoning and Narrative Generation

Narrative generation employs explicit Chain-of-Thought (CoT) prompting, aggregating risk indicators, transaction details, external intelligence, and regulatory context. Agents assign structured confidence scores to narrative components, reflecting evidentiary strength and regulatory adherence, thereby enhancing interpretability and investigator trust.
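A CoT prompt that aggregates these inputs, together with a structured container for per-section confidence scores, might be sketched as follows. The step wording, section names, and the 0.6 review threshold are illustrative assumptions; the paper's actual prompt is not reproduced here.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical reasoning steps; the paper's actual prompt wording is not reproduced here.
COT_STEPS = (
    "List each risk indicator and the transactions that support it.",
    "Relate the indicators to the candidate typologies.",
    "Use external intelligence only where case data corroborates it.",
    "Check each conclusion against the regulatory context provided.",
    "Write the narrative, citing the evidence behind every statement.",
)


def build_cot_prompt(indicators: Sequence[str], transactions: Sequence[str],
                     intel: Sequence[str], regulations: Sequence[str]) -> str:
    lines = [
        "Draft a SAR narrative. Reason step by step before writing.",
        "Risk indicators: " + "; ".join(indicators),
        "Transactions: " + "; ".join(transactions),
        "External intelligence: " + ("; ".join(intel) or "none"),
        "Regulatory context: " + "; ".join(regulations),
    ]
    lines += [f"Step {i}: {step}" for i, step in enumerate(COT_STEPS, start=1)]
    lines.append("For each narrative section, report a confidence score between 0 and 1.")
    return "\n".join(lines)


@dataclass
class NarrativeComponent:
    section: str
    text: str
    confidence: float  # as reported by the generating agent

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")


def sections_needing_review(components: List[NarrativeComponent],
                            threshold: float = 0.6) -> List[str]:
    # Low-confidence sections are surfaced to the investigator first.
    return [c.section for c in components if c.confidence < threshold]


prompt = build_cot_prompt(["rapid in-and-out movement"],
                          ["TXN-1 inbound wire", "TXN-2 outbound wire"],
                          [], ["placeholder policy excerpt"])
components = [NarrativeComponent("subject", "...", 0.9),
              NarrativeComponent("typology", "...", 0.45)]
review = sections_needing_review(components)
```

Validating scores at construction time keeps malformed model output from silently reaching the review queue.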

Figure 3: Chain-of-Thought reasoning within Co-Investigator AI's narrative generation, illustrating transparent, stepwise reasoning.

Compliance Validation: Agent-as-a-Judge Paradigm

A Compliance Validation Agent implements the Agent-as-a-Judge methodology, autonomously and continuously evaluating narrative outputs for semantic coherence, factual accuracy, and regulatory alignment. The agent cross-validates narrative elements against specialized typology agent outputs and dynamic memory layers (regulatory, historical, typology-specific), flagging discrepancies for investigator review and iterative refinement.
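The deterministic cross-check part of such validation can be sketched as a comparison between facts stated in the narrative and the values produced by typology agents. This covers only that slice: the LLM-judged coherence assessment is out of scope, narrative facts are assumed to be extracted upstream, and the field names are hypothetical.

```python
from typing import Any, Dict, List, Sequence


def cross_validate(narrative_facts: Dict[str, Any], agent_outputs: Dict[str, Any],
                   required_fields: Sequence[str]) -> List[str]:
    """Flag facts the narrative omits or states differently from the typology agents."""
    issues = []
    for name in required_fields:  # e.g. drawn from a regulatory memory layer
        if name not in narrative_facts:
            issues.append(f"missing: {name}")
    for name, value in narrative_facts.items():
        if name in agent_outputs and agent_outputs[name] != value:
            issues.append(f"mismatch: {name} (narrative={value!r}, agent={agent_outputs[name]!r})")
    return issues


issues = cross_validate(
    narrative_facts={"total_amount": 48500, "countries": ["AE", "GB"]},
    agent_outputs={"total_amount": 45800, "countries": ["AE", "GB"]},
    required_fields=["total_amount", "countries", "date_range"],
)
```

Each returned issue is a concrete, reviewable flag (here a transposed amount and a missing date range), which suits routing back to the investigator for refinement.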

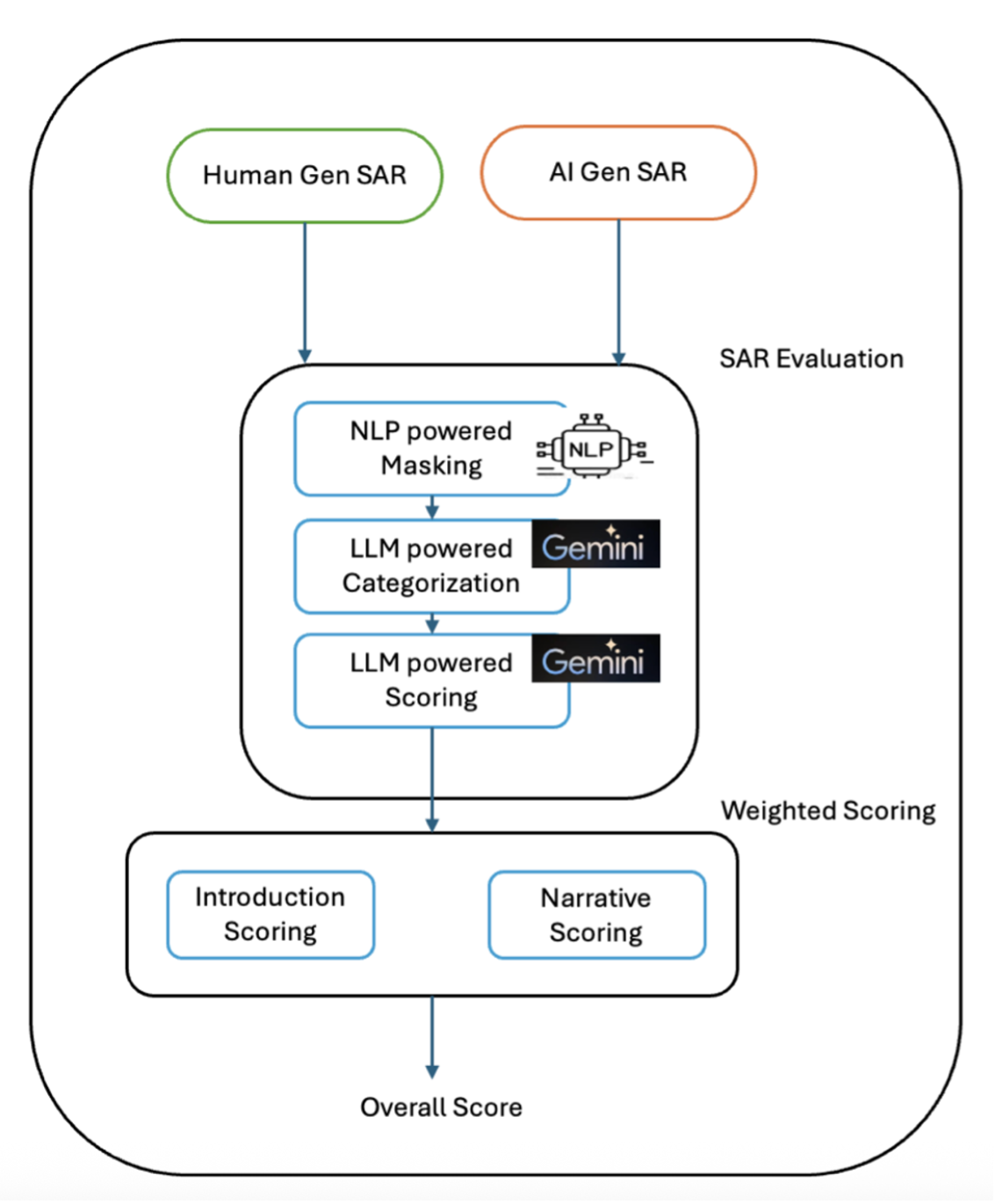

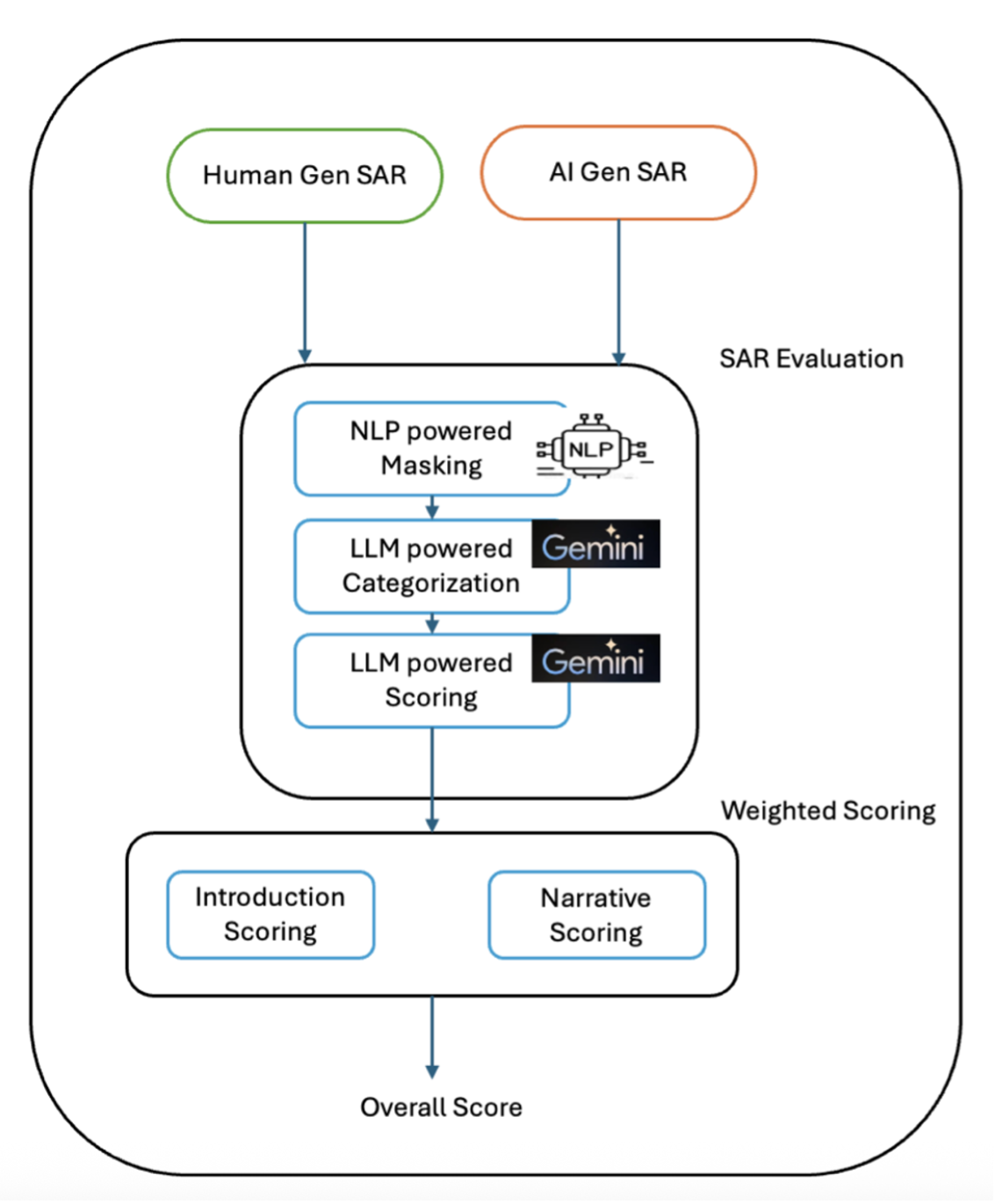

Automated Evaluation Framework

An automated pre-production evaluation framework, developed with compliance investigators, leverages expertly annotated golden datasets for objective benchmarking. The framework combines rule-based logical assessments and LLM-powered semantic similarity analyses, producing structured multi-component scores for narrative completeness and regulatory adherence.
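A multi-component score of this kind can be sketched by pairing a rule-based completeness check with a similarity measure against golden narratives. In this sketch, token-overlap (Jaccard) similarity is a plainly swapped-in stand-in for the LLM-powered semantic comparison, and the section names and equal weighting are assumptions.

```python
import re
from typing import Dict, Sequence, Set


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def token_similarity(a: str, b: str) -> float:
    # Jaccard overlap: a crude stand-in for an LLM-judged semantic similarity.
    ta, tb = _tokens(a), _tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 1.0


def score_narrative(candidate: Dict[str, str], golden: Dict[str, str],
                    required: Sequence[str], w_rule: float = 0.5) -> Dict[str, float]:
    # Rule-based component: share of required sections that are non-empty.
    completeness = sum(bool(candidate.get(s, "").strip()) for s in required) / len(required)
    # Similarity component: average per-section similarity to the golden narrative.
    similarity = sum(token_similarity(candidate.get(s, ""), golden[s])
                     for s in required) / len(required)
    return {"completeness": completeness, "similarity": similarity,
            "overall": w_rule * completeness + (1 - w_rule) * similarity}


golden = {"subject": "Account holder received rapid inbound transfers",
          "activity": "Funds were moved out within one day"}
candidate = {"subject": "Account holder received rapid inbound transfers", "activity": ""}
report = score_narrative(candidate, golden, required=["subject", "activity"])
```

Reporting the components separately, rather than only the blended score, shows whether a weak narrative is missing content or merely phrased differently from the golden reference.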

Figure 4: Automated evaluation framework for SAR narrative quality and regulatory alignment, enabling rapid, quantitative benchmarking.

Empirical results show that Co-Investigator AI achieves 70% narrative completeness and 61% time savings on average, with specialized modules (location anomaly detection, account integrity monitoring, dispute analysis) reaching up to 100% accuracy. These metrics are validated by domain experts from a leading global fintech institution.
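The summary does not state how time savings is computed. Assuming it is the relative reduction in drafting time per report, the 61% average corresponds to:

```latex
\text{time savings} = \frac{t_{\text{manual}} - t_{\text{assisted}}}{t_{\text{manual}}} = 0.61
\;\Longrightarrow\; t_{\text{assisted}} = 0.39\, t_{\text{manual}}
```

Applied purely for illustration to the 25–315 minute range quoted for manual drafting, this would mean roughly 9.75–122.85 minutes per report; actual savings need not be uniform across cases of different complexity.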

Human-Centered Design and Investigator Collaboration

The system is explicitly designed for human-in-the-loop collaboration, providing investigators with secure editing interfaces and structured feedback loops. Investigator inputs are systematically captured and integrated into iterative narrative refinement, ensuring regulatory alignment and trust. This approach aligns with best practices in human-AI interaction, emphasizing transparency, user agency, and joint decision-making.
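The feedback loop can be sketched as alternating drafting and review rounds, with every round of investigator input logged. `draft_fn` and `review_fn` are hypothetical hooks (a narrative agent and the human reviewer, respectively), and the three-round cap is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Feedback:
    round_no: int
    comments: str
    approved: bool


def refine_with_investigator(
    draft_fn: Callable[[Optional[str]], str],
    review_fn: Callable[[int, str], Feedback],
    max_rounds: int = 3,
) -> Tuple[str, List[Feedback]]:
    """Alternate drafting and investigator review, logging every round of feedback."""
    history: List[Feedback] = []
    comments: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = draft_fn(comments)          # regenerate using the latest comments
        feedback = review_fn(round_no, draft)
        history.append(feedback)            # captured for audit and later learning
        if feedback.approved:
            break
        comments = feedback.comments
    return draft, history


# Stubs: a real draft_fn would call the narrative agent; review_fn is the human.
def stub_draft(comments: Optional[str]) -> str:
    base = "Subject moved funds rapidly between accounts."
    return base if comments is None else base + " Counterparty: [ORG_1]."


def stub_review(round_no: int, draft: str) -> Feedback:
    if "Counterparty" in draft:
        return Feedback(round_no, "", True)
    return Feedback(round_no, "Add counterparty details.", False)


final_draft, history = refine_with_investigator(stub_draft, stub_review)
```

If `max_rounds` is exhausted without approval, the last draft is returned together with an unapproved history, so the decision to file stays with the investigator.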

Co-Investigator AI employs multi-tiered memory management (regulatory, historical, typology-specific) to maintain persistent, updatable context across agent workflows, which stateless RAG pipelines do not provide. Supporting analytical tools (risk-indicator extraction, external intelligence search, account-linking analysis) further enhance investigative depth and narrative precision.
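A minimal in-memory version of such tiered memory is sketched below. The class, tier keys, and context layout are hypothetical, and a deployed system would back the tiers with durable storage rather than Python dictionaries.

```python
from typing import Any, Dict


class TieredMemory:
    """Hypothetical multi-tier memory whose entries persist across agent calls."""

    TIERS = ("regulatory", "historical", "typology")

    def __init__(self) -> None:
        self._tiers: Dict[str, Dict[str, Any]] = {tier: {} for tier in self.TIERS}

    def update(self, tier: str, key: str, value: Any) -> None:
        if tier not in self._tiers:
            raise KeyError(f"unknown memory tier: {tier}")
        self._tiers[tier][key] = value  # later updates replace earlier versions

    def context_for(self, typology: str, customer_id: str) -> Dict[str, Any]:
        # Assemble the context an agent receives: all regulatory entries, plus the
        # customer's history and the guidance for the detected typology.
        return {
            "regulatory": list(self._tiers["regulatory"].values()),
            "historical": self._tiers["historical"].get(customer_id, []),
            "typology": self._tiers["typology"].get(typology),
        }


memory = TieredMemory()
memory.update("regulatory", "narrative_guidance", "placeholder regulatory guidance")
memory.update("historical", "CUST-1", ["prior alert reviewed"])
memory.update("typology", "money_mule", "rapid in-and-out movement of funds")
memory.update("historical", "CUST-1", ["prior alert reviewed", "new SAR filed"])  # state evolves
ctx = memory.context_for("money_mule", "CUST-1")
```

The contrast with a stateless retrieval step is the `update` call: context changes as the investigation proceeds, and later agents see the revised state.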

Lessons Learned and Future Directions

Key insights include the efficacy of modular agentic architectures, the critical role of human-AI collaboration, the benefits of real-time agent-based validation, and the importance of explicit reasoning and confidence scoring for explainability. Future work will focus on expanding crime typology coverage, advancing regulatory validation, enhancing explainability and auditability, and developing adaptive learning systems for continuous improvement.

Conclusion

Co-Investigator AI demonstrates that modular agentic architectures, combined with human-in-the-loop workflows, can substantially improve the efficiency, accuracy, and trustworthiness of SAR narrative generation in AML compliance. The system achieves strong empirical performance, validated by domain experts, and provides a scalable foundation for future developments in agentic AI for regulated domains. Ongoing research will address broader typological coverage, adaptive learning, and enhanced transparency to further strengthen compliance processes and investigator trust.