QualityFM: a Multimodal Physiological Signal Foundation Model with Self-Distillation for Signal Quality Challenges in Critically Ill Patients (2509.06516v1)

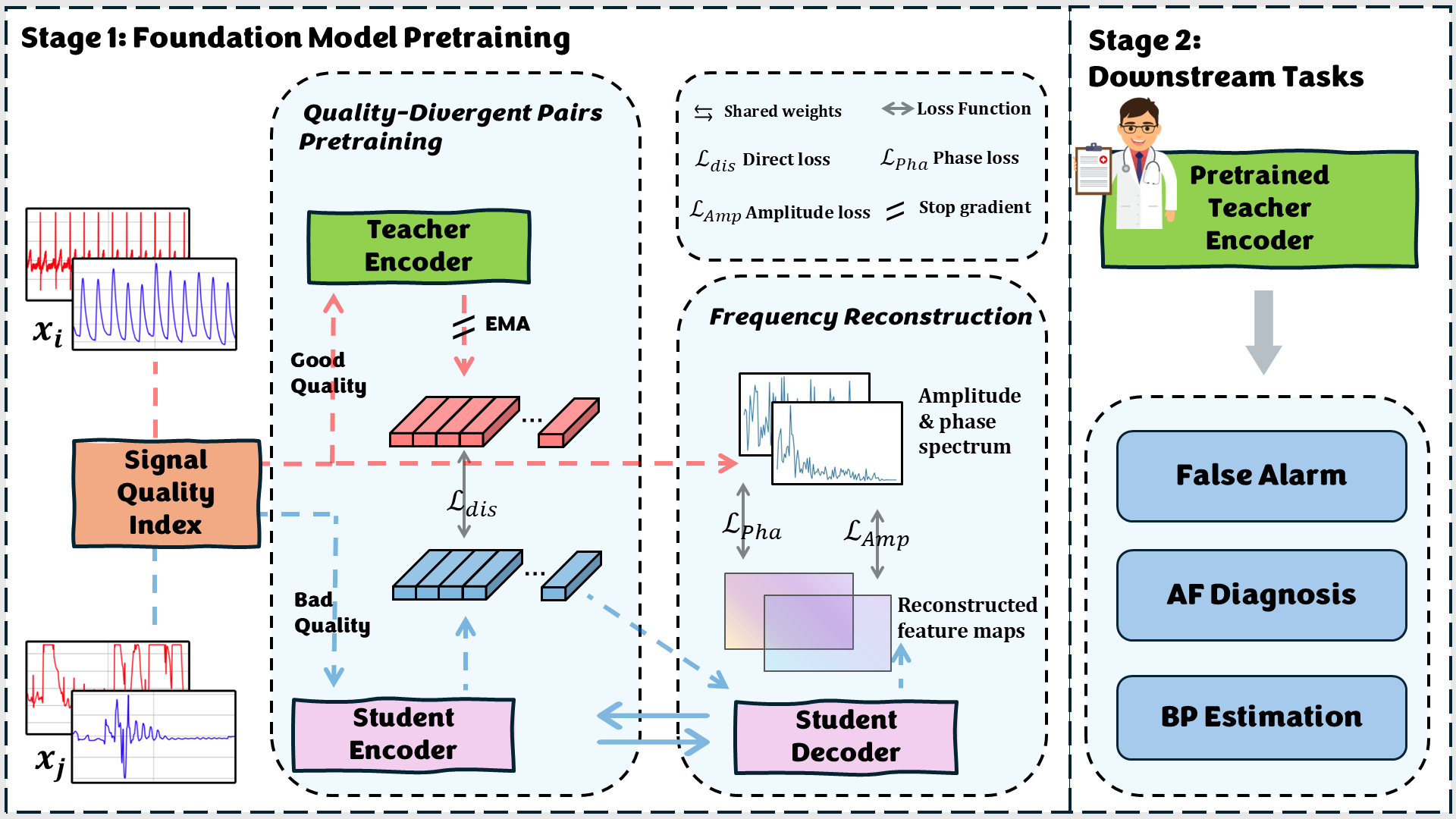

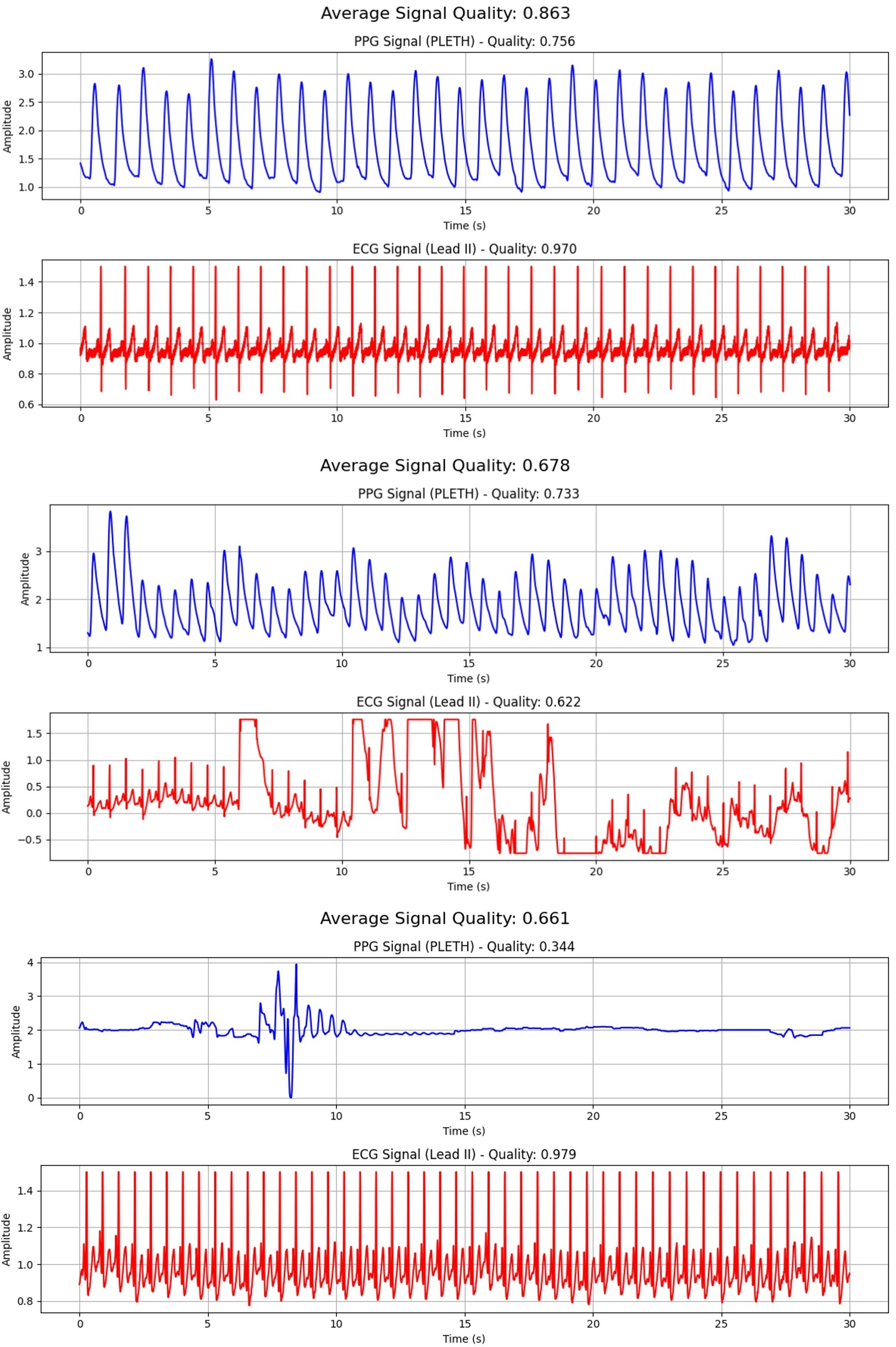

Abstract: Photoplethysmogram (PPG) and electrocardiogram (ECG) signals are commonly recorded in the intensive care unit (ICU) and operating room (OR). However, the high incidence of poor, incomplete, and inconsistent signal quality can lead to false alarms or diagnostic inaccuracies. The methods explored so far suffer from limited generalizability, reliance on extensive labeled data, and poor cross-task transferability. To overcome these challenges, we introduce QualityFM, a novel multimodal foundation model for these physiological signals, designed to acquire a general-purpose understanding of signal quality. Our model is pre-trained on a large-scale dataset comprising over 21 million 30-second waveforms and 179,757 hours of data. Our approach involves a dual-track architecture that processes paired physiological signals of differing quality, leveraging a self-distillation strategy where an encoder for high-quality signals is used to guide the training of an encoder for low-quality signals. To efficiently handle long sequential signals and capture essential local quasi-periodic patterns, we integrate a windowed sparse attention mechanism within our Transformer-based model. Furthermore, a composite loss function, which combines direct distillation loss on encoder outputs with indirect reconstruction loss based on power and phase spectra, ensures the preservation of frequency-domain characteristics of the signals. We pre-train three models with varying parameter counts (9.6 M to 319 M) and demonstrate their efficacy and practical value through transfer learning on three distinct clinical tasks: false alarm detection for ventricular tachycardia, identification of atrial fibrillation, and estimation of arterial blood pressure (ABP) from PPG and ECG signals.

Explain it Like I'm 14

What is this paper about?

This paper builds a smart computer model, called QualityFM, to understand and deal with messy heart and blood-flow signals collected in hospitals. These signals include:

- ECG: the electrical activity of the heart (the squiggly lines you see on hospital monitors).

- PPG: how blood flow changes with each heartbeat, often measured by a finger clip using light.

In busy places like the ICU or the operating room, these signals often get noisy when patients move or sensors slip. That noise can cause false alarms and mistakes. QualityFM learns from huge amounts of data to spot useful patterns even when signals are noisy, and then helps with different medical tasks.

What questions were the researchers trying to answer?

They focused on three simple questions:

- How can we teach a model to understand “signal quality” so it still works well when the data is messy?

- Can one general model be reused for different jobs (like spotting false alarms, finding atrial fibrillation, or estimating blood pressure), instead of training a new model each time?

- Can we learn mostly without expensive, hand-made labels from doctors?

How did they do it? (Methods in simple terms)

They used a few clever ideas and a huge amount of data.

1) Lots of data from real hospitals

They gathered over 21 million short signal clips (each 30 seconds long), adding up to about 179,757 hours of ECG and PPG recordings from ICU/OR settings. These include both clean (easy to read) and noisy (hard to read) examples.
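As a quick sanity check on those two numbers, the sketch below converts the total recording time into a count of 30-second clips. It assumes the clips do not overlap, which the summary does not state; the function name is illustrative only.

```python
# Figures quoted in the paper's abstract.
TOTAL_HOURS = 179_757
WINDOW_SECONDS = 30


def windows_from_hours(hours: int, window_seconds: int) -> int:
    """Count non-overlapping windows of `window_seconds` in `hours` of signal.

    Assumption: windows are non-overlapping (not confirmed by the source).
    """
    return (hours * 3600) // window_seconds


n_windows = windows_from_hours(TOTAL_HOURS, WINDOW_SECONDS)
print(f"{n_windows:,} windows")  # 21,570,840 windows
```

Under that assumption the result is consistent with the "over 21 million" clips reported.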

2) A “teacher–student” learning trick (self-distillation)

Think of a clear photo and a blurry photo of the same scene, taken a few minutes apart. In the same way, the model pairs a “high-quality” signal with a “low-quality” one from the same patient and time period:

- The “teacher” reads the high-quality signal and produces a strong, reliable summary.

- The “student” reads the low-quality signal and tries to produce a similar summary.

- The teacher’s knowledge is updated slowly (like a coach who averages what they’ve learned over time), which helps filter out noise.

This way, the student learns to find the real heartbeat patterns even when the signal is messy.
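The teacher and student roles above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the "slow" teacher update is an exponential moving average of the student's weights (a common choice in self-distillation) and that the two summaries are compared with a mean squared error. The names `ema_update`, `distillation_loss`, and the `momentum` value are hypothetical.

```python
def ema_update(teacher, student, momentum=0.99):
    """Move each teacher weight a small step toward the matching student weight.

    Assumption: the slow teacher update is an exponential moving average.
    """
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]


def distillation_loss(student_emb, teacher_emb):
    """Mean squared error between the two summaries (assumed loss form).

    The teacher summary is treated as a fixed target for the student.
    """
    return sum((s - t) ** 2 for s, t in zip(student_emb, teacher_emb)) / len(student_emb)


# Toy weights: the teacher drifts toward the student over three updates.
teacher_w = [0.0, 0.0]
student_w = [1.0, -1.0]
for _ in range(3):
    teacher_w = ema_update(teacher_w, student_w, momentum=0.9)

print(teacher_w)  # roughly [0.271, -0.271]
print(distillation_loss([1.0, 3.0], [0.0, 1.0]))  # 2.5
```

A momentum close to 1 makes the teacher change slowly, which is what lets it smooth over noisy training steps.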

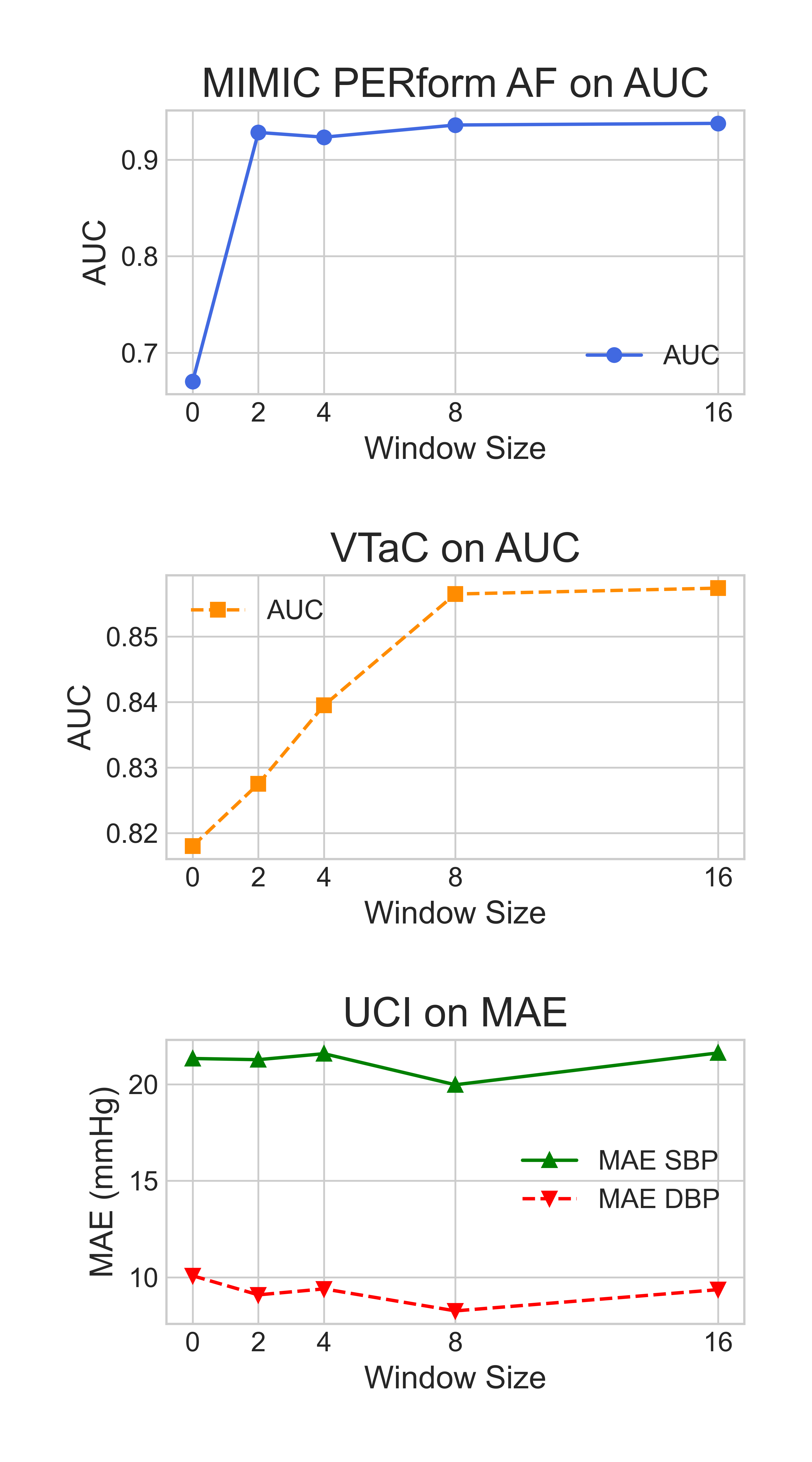

3) Handling very long signals with windows

Long signals are like very long books. Instead of reading every word at once, the model uses “windows,” like reading chapter by chapter. This is called windowed sparse attention:

- It focuses on nearby parts first (local details, like the shape of a single heartbeat).

- As it stacks layers, it understands longer trends (like rhythm over many beats).

- This makes it fast enough to handle long signals without running out of memory.
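To make the memory saving concrete, here is a small sketch of a local attention mask. It assumes a simple sliding window in which each position attends only to neighbors within a fixed distance; the paper's exact window layout is not described in this summary, and the function names are illustrative.

```python
def local_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Assumption: a sliding band, i.e. |i - j| <= window.
    """
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]


def allowed_pairs(mask: list[list[bool]]) -> int:
    """Number of (query, key) pairs that actually need to be computed."""
    return sum(sum(row) for row in mask)


def receptive_field(window: int, layers: int) -> int:
    """Positions reachable on each side after stacking `layers` local layers."""
    return window * layers


mask = local_window_mask(seq_len=8, window=1)
print(allowed_pairs(mask), "of", 8 * 8, "pairs")  # 22 of 64 pairs
print(receptive_field(window=4, layers=3))        # 12
```

Full attention grows with the square of the sequence length, while the banded version grows roughly linearly, and stacking layers widens how far each position can "see".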

4) Learning from “frequency” too (like a music equalizer)

Signals aren’t just shapes over time; they also have frequency information (how often patterns repeat), like bass and treble in music. The model:

- Reconstructs the amplitude (how strong each frequency is) and the phase (how frequencies line up) of the signal.

- By matching the student’s reconstruction to the teacher’s, it keeps important rhythm details that matter for heart signals.
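The frequency comparison can be sketched as follows. This is an illustration under stated assumptions, not the paper's loss: it uses a naive discrete Fourier transform, compares amplitudes with a mean squared error, and compares phases with `1 - cos(difference)` weighted by the teacher's amplitude so that near-silent frequencies do not dominate. The abstract speaks of power spectra, which are the squared amplitudes. All function names are hypothetical.

```python
import cmath
import math


def half_spectrum(signal):
    """Naive DFT for bins 0..N/2 (fine for short demos; use an FFT in practice)."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n // 2 + 1)
    ]


def spectral_terms(student_sig, teacher_sig, eps=1e-12):
    """Return (amplitude_term, phase_term) comparing student to teacher."""
    s_bins = half_spectrum(student_sig)
    t_bins = half_spectrum(teacher_sig)
    s_amp = [abs(c) for c in s_bins]
    t_amp = [abs(c) for c in t_bins]
    # Amplitude: mean squared difference per frequency bin.
    amp_term = sum((a - b) ** 2 for a, b in zip(s_amp, t_amp)) / len(t_amp)
    # Phase: 1 - cos(gap) is 0 when aligned and handles wrap-around at +/- pi.
    gaps = [1.0 - math.cos(cmath.phase(s) - cmath.phase(t)) for s, t in zip(s_bins, t_bins)]
    phase_term = sum(w * g for w, g in zip(t_amp, gaps)) / (sum(t_amp) + eps)
    return amp_term, phase_term


n = 16
teacher = [math.sin(2 * math.pi * t / n) for t in range(n)]
# Same rhythm, shifted by a quarter cycle: equal amplitudes, different phase.
student = [math.sin(2 * math.pi * (t + 4) / n) for t in range(n)]
amp_term, phase_term = spectral_terms(student, teacher)
print(round(amp_term, 6), round(phase_term, 6))
```

In this toy case the amplitude term is essentially zero while the phase term is close to 1, which shows why matching amplitude alone would miss a timing shift.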

5) One model, many sizes

They trained three versions:

- Base (~9.6 million parameters)

- Large (~70 million)

- Huge (~319 million)

Bigger models generally learned more and did better.

What did they find?

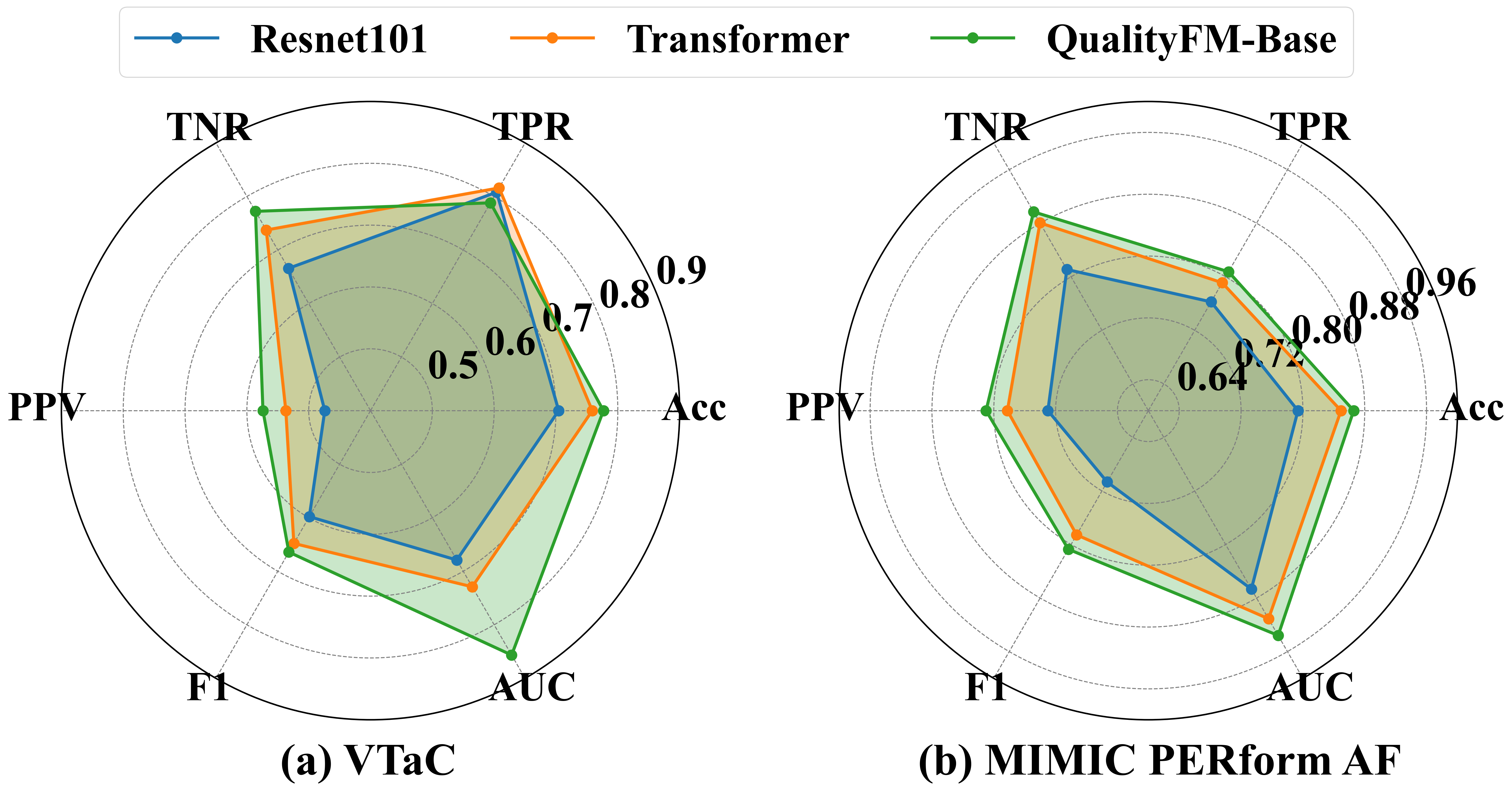

They tested QualityFM on three real medical tasks and compared it to other top methods:

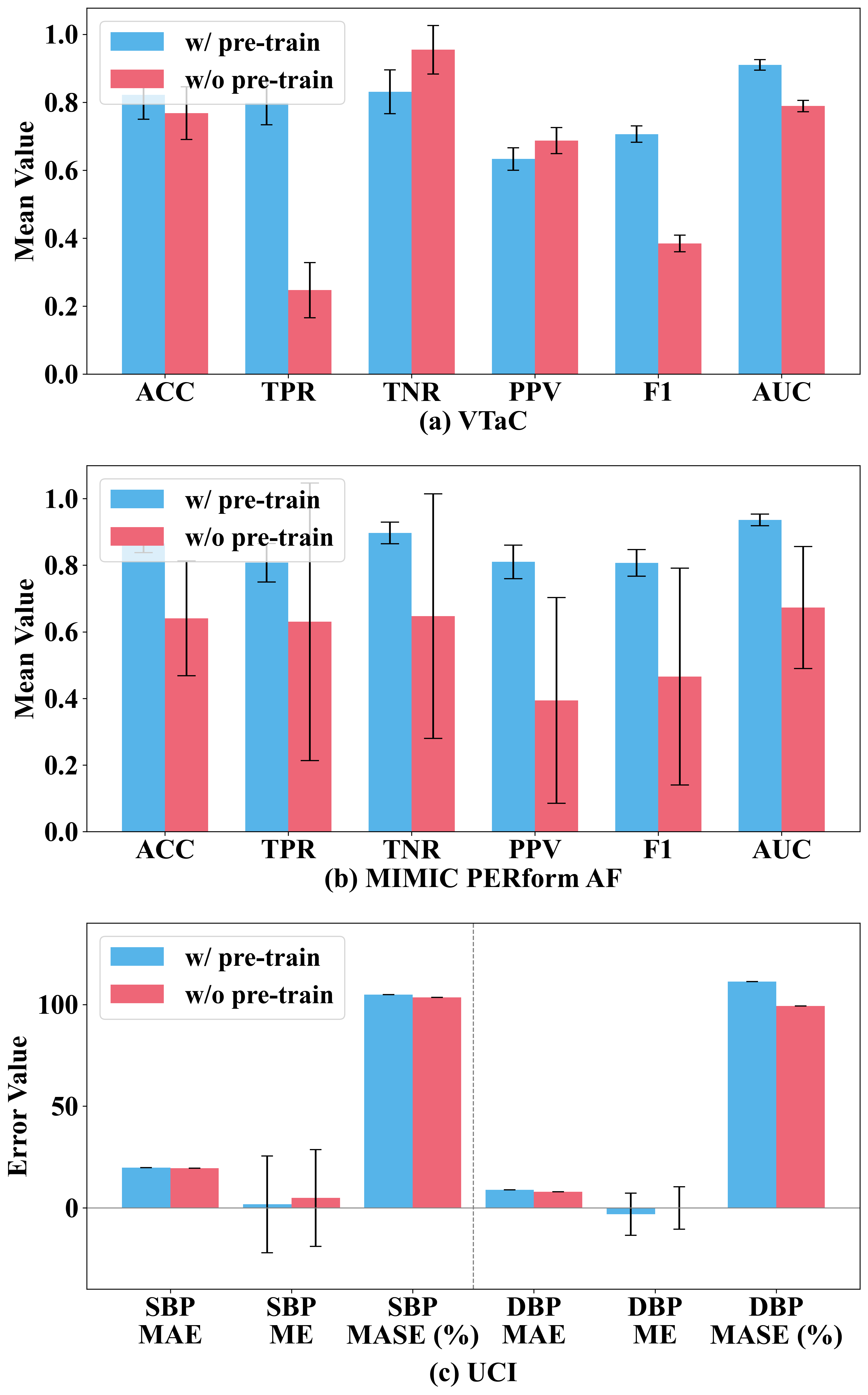

- False alarm detection for ventricular tachycardia (VT): helped reduce false alarms, especially improving the ability to say when there is NOT a real event (reducing alarm fatigue).

- Atrial fibrillation (AF) detection: performed very well at telling AF from normal rhythm.

- Blood pressure estimation (systolic and diastolic) from ECG and PPG: gave lower errors than previous approaches, meaning more accurate estimates without a cuff.
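For readers unfamiliar with how such results are scored, the sketch below computes two common measures on made-up toy values: specificity (how often a false alarm is correctly recognized as false) and mean absolute error for blood pressure. The numbers are purely illustrative and are not results from the paper; the exact metrics the authors report may differ.

```python
def specificity(y_true, y_pred):
    """Share of non-events (label 0) that were correctly predicted as 0."""
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tn / (tn + fp)


def mean_absolute_error(true_vals, pred_vals):
    """Average size of the estimation error, ignoring its sign."""
    return sum(abs(t - p) for t, p in zip(true_vals, pred_vals)) / len(true_vals)


# Toy labels: 1 = real VT event, 0 = false alarm (illustrative only).
alarm_truth = [1, 0, 0, 0, 1, 0]
alarm_pred = [1, 0, 1, 0, 1, 0]
print(specificity(alarm_truth, alarm_pred))  # 0.75

# Toy systolic pressures in mmHg (illustrative only).
sbp_true = [120.0, 80.0, 110.0]
sbp_pred = [118.0, 85.0, 110.0]
print(mean_absolute_error(sbp_true, sbp_pred))
```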

Key takeaways:

- Pretraining on massive, mixed-quality data made a big difference. Models trained from scratch (with no pretraining) did worse.

- Larger models did better across tasks.

- The windowed attention and the frequency-based learning (amplitude + phase) both improved results.

- Compared with other modern methods, QualityFM either matched or outperformed them on the reported metrics.

Why does this matter?

- Fewer false alarms: Less “beeping” for no reason helps nurses and doctors focus on real emergencies.

- More reliable readings: When signals are messy, this model can still find the truth, improving monitoring and diagnosis.

- One model, many jobs: Instead of building a new model for every task, QualityFM can be adapted to new problems.

- Less labeling needed: Since it learns a lot without hand-labeled data, it saves time and expert effort.

Final thoughts: impact and what’s next

QualityFM shows that a single, large, pretrained model can understand hospital signals well enough to improve multiple important tasks. This could make patient monitoring more accurate and less stressful for staff. In the future, the authors plan to:

- Train even bigger models on more data.

- Allow flexible inputs (different kinds and numbers of signals).

- Use lighter fine-tuning methods so hospitals with fewer computers can still benefit.

In short, QualityFM is like a trained “ear” that listens through the noise of busy hospital signals and still hears the heartbeat clearly.

Practical Applications

Below are actionable applications derived from the paper’s findings, methods, and innovations, grouped by deployment horizon. Each item notes relevant sectors, anticipated tools/products/workflows, and key assumptions/dependencies that affect feasibility.

Immediate Applications

These can be piloted or integrated now with standard engineering and clinical validation cycles.

- ICU/OR false arrhythmia alarm reduction (ventricular tachycardia)
  - Sectors: Healthcare, MedTech, Clinical Software
  - What: Integrate the pretrained QualityFM encoder into bedside monitor middleware to score signal quality and reduce false VT alarms in real time; adjust alarm routing and thresholds when quality is low vs high.
  - Tools/workflows: Edge inference module or on-prem server; HL7/FHIR integration; alarm management dashboard; clinician-in-the-loop override.
  - Dependencies/assumptions:
    - Access to synchronized PPG and lead II ECG streams (sampled at roughly 125–300 Hz or higher).
    - On-prem compute for real-time inference; model size selection (Base/Large) to meet latency.
    - Regulatory pathway (clinical decision support vs SaMD) and local validation per site.

- Robust AF detection in monitored care and telemetry
  - Sectors: Healthcare, Telemedicine, Remote Patient Monitoring (RPM)
  - What: Use QualityFM as a feature extractor to improve AF detection robustness under noisy conditions in wards, telemetry, and RPM hubs.
  - Tools/workflows: Plugin for existing AF classifiers; EHR event flagging; quality-aware triage of AF episodes for clinician review.
  - Dependencies/assumptions:
    - Telemetry devices provide PPG+ECG or at least one modality with known performance trade-offs.
    - Local re-tuning to device-specific noise/artifacts (domain shift).

- Cuffless ABP estimation as decision support
  - Sectors: Healthcare, MedTech
  - What: Provide continuous ABP estimates from PPG+ECG when arterial line is unavailable; display uncertainty calibrated by signal quality.
  - Tools/workflows: “ABP-lite” bedside app panel; quality-gated readings; alerts for calibration or arterial-line placement when uncertainty is high.
  - Dependencies/assumptions:
    - Site-level calibration and governance; estimates are decision support, not a replacement for invasive monitoring.
    - Validation on local patient cohorts with diverse hemodynamics.

- Real-time signal quality monitoring and bedside coaching
  - Sectors: Healthcare; Nursing Informatics; Wearables (prosumer/clinical-grade)
  - What: Continuous SQI scoring with actionable feedback (e.g., “reposition finger probe,” “check ECG electrode contact”) to reduce artifact-related data loss.
  - Tools/workflows: Bedside quality bar/gauge; nurse-facing mobile notifications; patient coaching on wearable apps.
  - Dependencies/assumptions:
    - UI integration with monitor vendors or nurse station dashboards.
    - Human factors/UX testing to avoid alert overload.

- Data curation and triage in research and clinical data lakes
  - Sectors: Academia, Health-IT, Pharma/MedTech R&D
  - What: Automatically tag segments with SQI and filter/weight low-quality data for analytics, model training, and trial endpoints.
  - Tools/workflows: ETL pipeline plug-in; data lake SQI metadata fields; reproducible “quality-gated” cohorts.
  - Dependencies/assumptions:
    - Access to raw or lightly processed waveforms for offline processing.
    - Clear policies to avoid discarding rare-but-important events; use weighting rather than hard deletion.

- Foundation model feature extractor for downstream tasks (low-label regimes)
  - Sectors: MedTech, Software/AI, Academia
  - What: Use QualityFM embeddings to boost performance on new ICU/OR tasks (e.g., arrhythmia subtyping, motion artifact detection) with limited labels.
  - Tools/workflows: SDK/API exposing embeddings; PEFT/LoRA adapters for rapid fine-tuning; model zoo with task heads.
  - Dependencies/assumptions:
    - Compute for fine-tuning; device/domain adaptation for new sensors.
    - MLOps for model versioning and monitoring.

- Quality-aware alert routing and escalation policies
  - Sectors: Healthcare Operations, Clinical Safety/Quality
  - What: Add SQI as a factor in routing (e.g., route high-SQI alarms to primary nurse immediately; low-SQI alarms prompt sensor check first).
  - Tools/workflows: Alarm policy engine with SQI inputs; audit logs to track reductions in alarm fatigue.
  - Dependencies/assumptions:
    - Policy approval by clinical governance and safety committees.
    - Transparent logic; clinician education to maintain trust.

- Device QA and manufacturing test harness
  - Sectors: MedTech Manufacturing, Quality Assurance
  - What: Use QualityFM to benchmark sensor prototypes and adhesives under motion/noise; quantify SQI distribution across test matrices.
  - Tools/workflows: Automated test rigs; acceptance criteria using SQI/AUC deltas across noise profiles.
  - Dependencies/assumptions:
    - Representative motion/noise simulators; correlation to clinical environments.

- EHR enrichment with signal quality metadata
  - Sectors: Health-IT, Analytics
  - What: Store per-window SQI, enabling quality-weighted analytics and reducing bias in outcome studies.
  - Tools/workflows: FHIR extensions for signal quality; analytics pipelines that weight by SQI.
  - Dependencies/assumptions:
    - Vendor support for new fields; data governance approval.

- Education and training in biomedical signal processing
  - Sectors: Academia, Professional Education
  - What: Use the paper’s methods (self-distillation on quality-divergent pairs; spectral amplitude/phase loss; windowed sparse attention) for hands-on labs and courses.
  - Tools/workflows: Teaching notebooks; reproducible baseline models; curated subsets of MIMIC/VitalDB.
  - Dependencies/assumptions:
    - Access to public datasets; GPU time for coursework.
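Two of the ideas in the list above, quality-aware alarm routing and weighting data by quality instead of deleting it, can be sketched in a few lines. This is an illustration only: it assumes a hypothetical signal quality index (SQI) between 0 and 1 has already been derived from the model, and the thresholds, the middle "review" band, and all function names are invented for the example rather than taken from the paper.

```python
def route_alarm(sqi: float, high: float = 0.8, low: float = 0.4) -> str:
    """Pick a routing action from a hypothetical SQI in [0, 1].

    Thresholds and the middle band are illustrative assumptions.
    """
    if not 0.0 <= sqi <= 1.0:
        raise ValueError("sqi must be within [0, 1]")
    if sqi >= high:
        return "notify_primary_nurse"   # trustworthy signal: escalate now
    if sqi < low:
        return "prompt_sensor_check"    # poor signal: check the sensor first
    return "queue_for_review"           # in between: hypothetical review queue


def sqi_weighted_mean(values, sqis):
    """Average that down-weights low-quality windows instead of dropping them."""
    total = sum(sqis)
    if total == 0:
        raise ValueError("at least one window needs a non-zero SQI")
    return sum(v * w for v, w in zip(values, sqis)) / total


print(route_alarm(0.9))   # notify_primary_nurse
print(route_alarm(0.2))   # prompt_sensor_check

# Toy heart-rate windows: the noisy 150 bpm reading barely moves the average.
print(sqi_weighted_mean([70.0, 150.0], [0.9, 0.1]))  # about 78
```

Weighting keeps rare but important events in the dataset, which matches the caution above against hard deletion.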

Long-Term Applications

These require further scaling, validation, or development before broad deployment.

- General-purpose multimodal bedside foundation model
  - Sectors: Healthcare, MedTech, AI
  - What: Extend QualityFM to additional channels (ABP, respiration, capnography, impedance, EEG), with adaptive inputs and missing-modality robustness to become a universal physiologic representation model.
  - Dependencies/assumptions:
    - Larger pretraining corpora; architectural support for variable modality sets; multi-center validation.

- Regulatory-grade alarm management AI (SaMD)
  - Sectors: Healthcare, Policy/Regulation
  - What: Certify quality-aware alarm reduction systems that demonstrably reduce alarm fatigue while maintaining safety.
  - Dependencies/assumptions:
    - Prospective clinical trials; post-market surveillance; human factors studies; harmonization with IEC/ISO alarm standards.

- Cuffless, calibration-free continuous BP in consumer wearables
  - Sectors: Wearables, Digital Health, Insurance
  - What: Translate ABP estimation to wrist wearables using PPG (+optional ECG), with quality-aware uncertainty estimates for hypertension screening and trend monitoring.
  - Dependencies/assumptions:
    - Domain adaptation to optical sensors, skin tones, motion; FDA/CE validation; long-term drift management.

- Closed-loop, quality-robust physiological controllers
  - Sectors: ICU/OR Automation, Robotics
  - What: Use quality-aware features as inputs to closed-loop systems (e.g., vasopressor titration, sedation depth control) that pause or degrade gracefully under low SQI.
  - Dependencies/assumptions:
    - Extensive safety validation and simulation; clinician override; redundancy/ensemble sensing.

- Quality-aware early warning systems at population scale
  - Sectors: Public Health, Telemedicine, Payers
  - What: Deploy robust early warning scores (EWS) that incorporate SQI to minimize false positives in home/RPM settings and prioritize high-confidence escalations.
  - Dependencies/assumptions:
    - Integration with RPM ecosystems; reimbursement models; demographic fairness checks.

- Parameter-efficient on-device deployment
  - Sectors: Edge AI, Wearables, IoT
  - What: Distill QualityFM into tiny models with PEFT/LoRA, quantization and pruning for microcontrollers and low-power chips.
  - Dependencies/assumptions:
    - Hardware-aware NAS/compilers; battery/latency constraints; privacy-preserving inference.

- Standards and policy for Signal Quality Index (SQI)
  - Sectors: Policy/Standards, Health-IT
  - What: Establish SQI taxonomies and interoperability profiles (e.g., HL7/FHIR extensions) to standardize storage and use across vendors and EHRs.
  - Dependencies/assumptions:
    - Multi-stakeholder consensus (clinicians, vendors, regulators); demonstration of clinical utility.

- Cross-device/domain adaptation marketplace
  - Sectors: MedTech, AI Platforms
  - What: Offer validated per-device adapters (LoRA weights) that tune QualityFM to specific sensors/vendors, accelerating integration.
  - Dependencies/assumptions:
    - IP/licensing frameworks with device OEMs; MLOps for distribution, monitoring, and rollback.

- Synthetic augmentation guided by spectral constraints
  - Sectors: AI Research, MedTech R&D
  - What: Develop generators that preserve physiologic amplitude/phase spectra to augment rare pathologies and stress-test downstream models.
  - Dependencies/assumptions:
    - Additional generative components; clinical realism evaluation; bias mitigation.

- Human-in-the-loop quality triage and labeling efficiency
  - Sectors: Clinical AI Ops, Academia
  - What: Active-learning pipelines that surface low-quality/ambiguous segments to experts, reducing labeling burden while improving robustness.
  - Dependencies/assumptions:
    - Annotation tools; inter-rater agreement protocols; privacy/IRB approvals.

- Digital twins for alarm policy testing
  - Sectors: Hospital Operations, Safety Engineering
  - What: Simulate ICU/OR signal streams with realistic quality fluctuations to evaluate alarm strategies and staffing impacts before live deployment.
  - Dependencies/assumptions:
    - High-fidelity simulators; historical data to parameterize quality dynamics.

- Multimodal clinical reasoning with quality-aware agents
  - Sectors: Clinical Decision Support, AI
  - What: Combine QualityFM embeddings with LLMs to build agents that reason over signals, notes, and labs, weighting evidence by SQI.
  - Dependencies/assumptions:
    - Safe multimodal fusion; guardrails; rigorous evaluation on real-world cases.
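Of the long-term items above, the quantization step in on-device deployment is the easiest to illustrate. The sketch below shows symmetric 8-bit quantization of a small weight list: floats are mapped to integers in [-127, 127] with a single scale factor and then mapped back. It is a generic textbook scheme offered as an assumption about what such a pipeline might include, not a procedure described in the paper, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step, then clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 127]

# Each restored weight is within half a quantization step of the original.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(max_error <= scale / 2 + 1e-12)  # True
```

Storing one byte per weight instead of four is where the memory saving comes from; the cost is the small rounding error measured at the end.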

Cross-cutting assumptions and risks

- Data and devices: Access to synchronized PPG and ECG at sufficient sampling rates; model performance may degrade with single-modality inputs or lower fidelity.

- Generalizability: Pretraining on VitalDB and MIMIC-III may not fully cover pediatrics, specific devices, or diverse populations; local validation is required.

- Compute and latency: Inference constraints on bedside/wearable hardware necessitate careful model sizing and optimization.

- Regulatory and ethics: Clinical deployment needs governance, bias/fairness analyses, and post-market monitoring; data use must comply with HIPAA/GDPR.

- Integration: Vendor APIs for real-time streaming and EHR write-back; human factors to prevent new sources of alarm fatigue.

- Safety: All clinical outputs should be framed as decision support with clinician oversight until regulatory approvals are obtained.
