- The paper demonstrates that conversational AI is as effective as self-directed internet search at improving belief accuracy and reducing belief in misinformation.

- The paper employs a large-scale representative survey and randomized controlled trials with hierarchical Bayesian modeling to ensure robust causal inference.

- The paper finds a modest efficiency advantage for chatbots, reducing information procurement time by 6–10% compared to traditional search methods.

Conversational AI and Political Knowledge: An Empirical Assessment

Introduction

The proliferation of conversational AI systems, particularly LLM-based chatbots such as GPT-4, Claude, and Mistral, has raised concerns about their role in political information-seeking. The central question addressed in "Conversational AI increases political knowledge as effectively as self-directed internet search" (2509.05219) is whether these systems, when used by the public to research political issues, affect users' epistemic health differently than traditional self-directed internet search. Epistemic health here covers beliefs in true versus false information, trust in institutions, and political extremism. The paper combines a large-scale representative survey with a series of randomized controlled trials (RCTs) to analyze both usage patterns and causal effects.

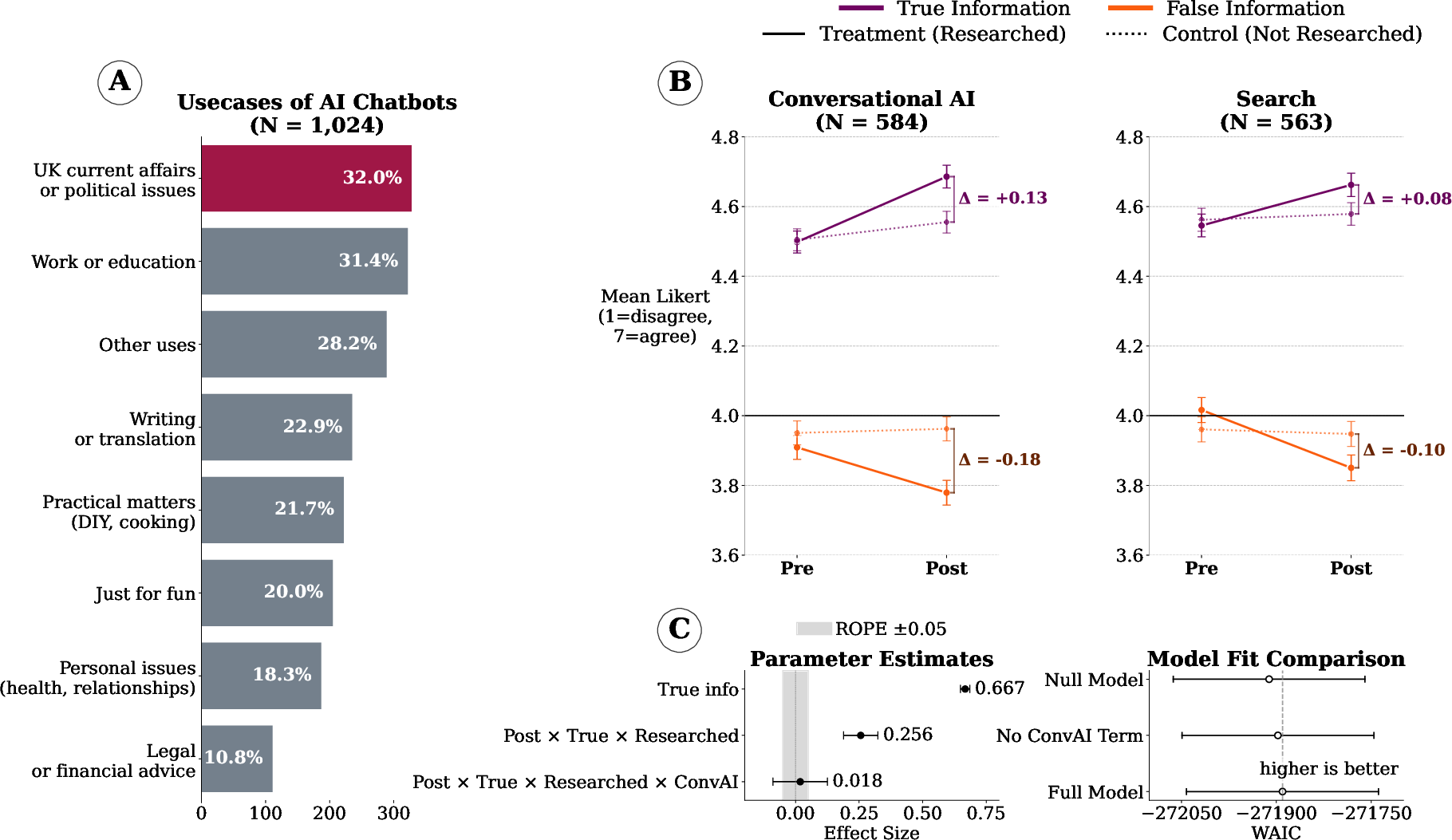

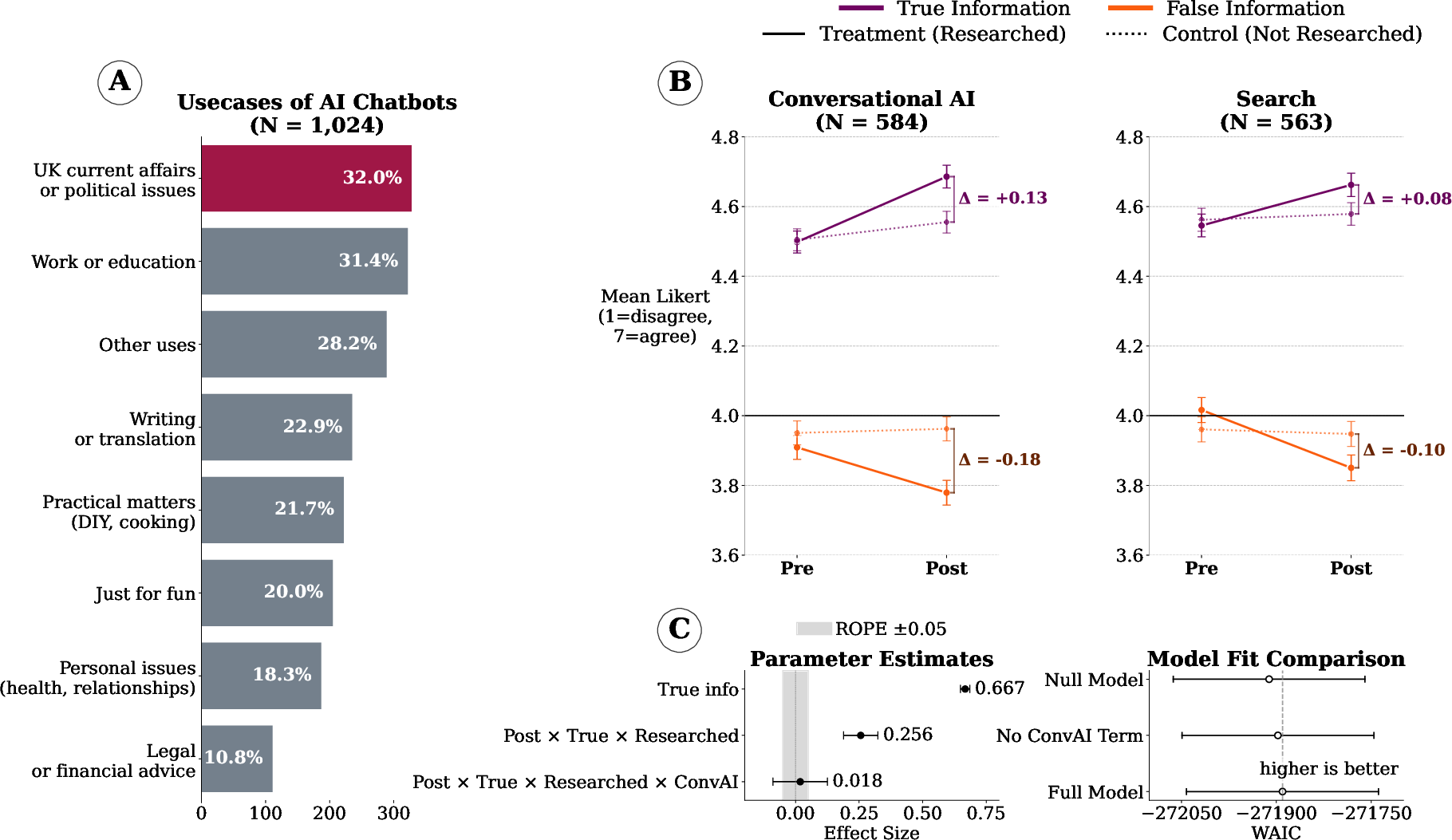

A representative survey of UK adults (N=2,499) conducted immediately after the 2024 general election indicates that conversational AI is now a non-trivial component of the information ecosystem. While traditional media (television, newspapers, radio) and internet search remain dominant, 9% of respondents reported using AI chatbots for political information in the preceding month, and among chatbot users, 32% did so specifically for election-relevant research. The paper estimates that this corresponds to approximately 13% of eligible UK voters using chatbots for political information.

Respondents rated chatbots as both useful (89%) and accurate (87%) for political information, with a majority perceiving them as politically neutral (62%). Notably, among those who felt influenced by chatbot responses, liberal influence was more frequently reported than conservative (63% vs 37%), but the majority reported no change in voting intentions.

Figure 1: Patterns of chatbot use, belief change in true/false information, and Bayesian GLM results comparing conversational AI and internet search.

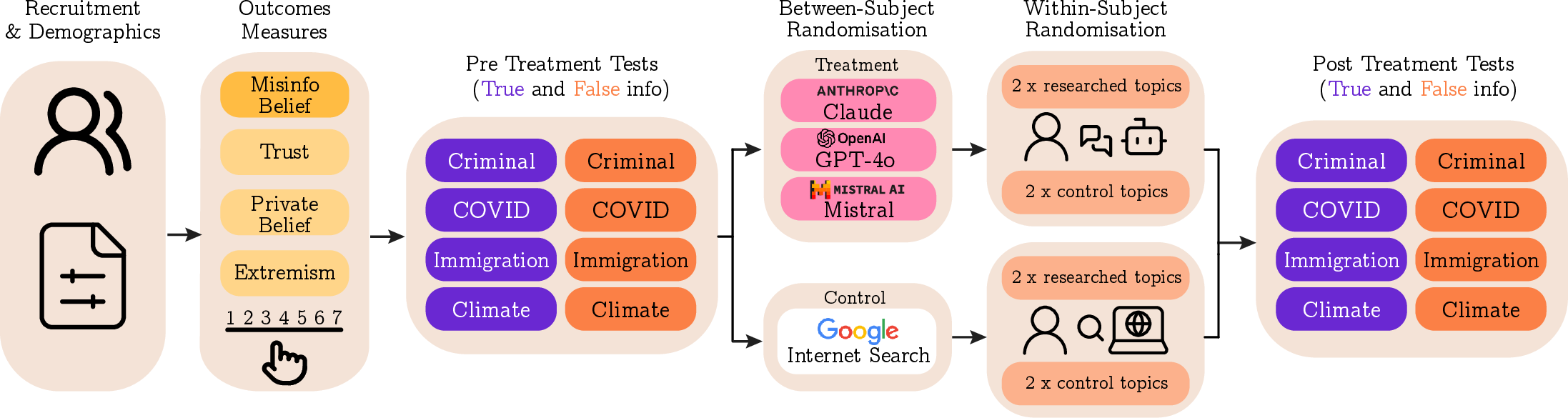

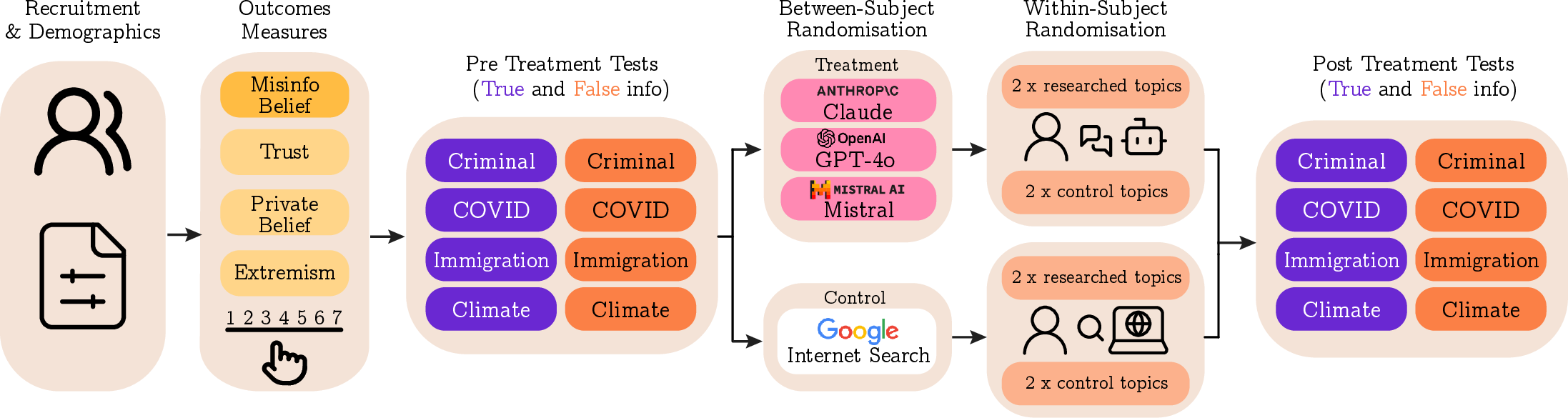

Experimental Design and Methodology

The RCT component (N=2,858) employed a pre-post design to measure changes in belief in true and false statements, trust, private political beliefs, and extremism across four salient political topics: climate change, immigration, criminal justice, and COVID-19. Participants were randomized to research two topics using either a conversational AI (GPT-4o, Claude-3.5, or Mistral) or a standard internet search engine, with the remaining two topics serving as within-subject controls.

Figure 2: Experimental design for measuring the impact of conversational AI versus internet search on political knowledge and epistemic health.
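
The assignment logic described above can be illustrated with a minimal sketch. It assumes each participant receives one research modality and two randomly chosen topics to research, with the other two topics acting as controls. The function name `assign_participant`, the seeding scheme, and the modality labels are hypothetical and are not taken from the paper.

```python
import random

# Topics are from the study description; the modality labels are hypothetical.
TOPICS = ["climate change", "immigration", "criminal justice", "COVID-19"]
MODALITIES = ["conversational_ai", "internet_search"]


def assign_participant(participant_id: int, seed: int = 0) -> dict:
    """Randomly assign one modality and two researched topics to a participant.

    The two topics that are not researched serve as within-subject controls.
    """
    # A per-participant generator keeps the assignment reproducible (assumption).
    rng = random.Random(seed * 1_000_003 + participant_id)
    researched = rng.sample(TOPICS, 2)
    control = [topic for topic in TOPICS if topic not in researched]
    modality = rng.choice(MODALITIES)
    return {
        "participant": participant_id,
        "modality": modality,
        "researched": researched,
        "control": control,
    }


if __name__ == "__main__":
    for pid in range(3):
        print(assign_participant(pid))
```

Comparing pre-post change on researched topics against change on control topics within the same person is what lets the design separate the effect of researching a topic from general drift between the two measurements.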

The primary outcome was the change in agreement with true versus false statements, measured on a 7-point Likert scale. Secondary outcomes included trust in institutions, private political beliefs, and extremism (operationalized as sign flips in beliefs relative to the Likert midpoint). Bayesian multilevel GLMs with ordered-logistic and binomial likelihoods were used for inference, with model selection based on WAIC.
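
To make the ordered-logistic likelihood concrete, the sketch below shows how a linear predictor is mapped to probabilities over the seven Likert categories. The cutpoints and the size of the shift are hypothetical values chosen for illustration. This is a plain-Python illustration of the likelihood only, not the paper's multilevel model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def ordered_logit_probs(eta: float, cutpoints: list) -> list:
    """Category probabilities under an ordered-logistic likelihood.

    Uses P(Y <= k) = sigmoid(c_k - eta) for increasing cutpoints c_k,
    so len(cutpoints) + 1 categories are returned.
    """
    cdf = [sigmoid(c - eta) for c in cutpoints] + [1.0]
    probs, previous = [], 0.0
    for value in cdf:
        probs.append(value - previous)
        previous = value
    return probs


def expected_rating(probs: list) -> float:
    """Mean response when categories are scored 1..K."""
    return sum((k + 1) * p for k, p in enumerate(probs))


# Hypothetical cutpoints for a 7-point scale (six thresholds).
CUTPOINTS = [-2.5, -1.5, -0.5, 0.5, 1.5, 2.5]

baseline = ordered_logit_probs(0.0, CUTPOINTS)
shifted = ordered_logit_probs(0.5, CUTPOINTS)  # hypothetical positive effect

if __name__ == "__main__":
    print([round(p, 3) for p in baseline], round(expected_rating(baseline), 2))
    print([round(p, 3) for p in shifted], round(expected_rating(shifted), 2))
```

A positive coefficient moves probability mass toward higher agreement categories without assuming that the distance between adjacent Likert points is equal, which is the usual reason for preferring this likelihood over a Gaussian one for rating data.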

Main Findings

Equivalence of Conversational AI and Internet Search

The central empirical result is the absence of a statistically or practically significant difference between the effects of conversational AI and internet search on belief in true versus false information. Both modalities increased agreement with true statements and decreased agreement with false statements to a similar extent ($\beta_{\text{POST} \times \text{TRUE} \times \text{RESEARCHED} \times \text{CONVAI}} = 0.02$, 95% HPDI $[-0.09, 0.13]$), with the HPDI fully contained within the region of practical equivalence. This pattern was robust across all tested LLMs and topics.

Secondary outcomes mirrored this null effect: no differential impact was observed on trust, private political beliefs, or extremism. The effect sizes for all interaction terms involving the research modality (AI vs search) were close to zero and not credibly different from the null.
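
The equivalence reasoning for the primary interaction term can be sketched as a simple interval check. The HPDI below is the one reported for the belief-accuracy interaction. The ROPE bounds of ±0.18 on the log-odds scale are an assumption (a convention sometimes used for logistic models), since the paper's actual ROPE is not stated in this summary.

```python
def rope_decision(interval: tuple, rope: tuple) -> str:
    """Classify a credible interval against a region of practical equivalence."""
    low, high = interval
    rope_low, rope_high = rope
    if rope_low <= low and high <= rope_high:
        return "practically equivalent"  # interval lies entirely inside the ROPE
    if high < rope_low or low > rope_high:
        return "credibly different"  # interval lies entirely outside the ROPE
    return "undecided"  # interval straddles a ROPE boundary


REPORTED_HPDI = (-0.09, 0.13)  # 95% HPDI reported for the interaction term
ASSUMED_ROPE = (-0.18, 0.18)   # hypothetical bounds, not taken from the paper

decision = rope_decision(REPORTED_HPDI, ASSUMED_ROPE)

if __name__ == "__main__":
    print(decision)
```

Under these assumed bounds the reported interval falls inside the ROPE. A narrower ROPE, for example ±0.10, would return "undecided" for the same interval, so the conclusion depends on how practical equivalence is defined.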

Robustness to Prompt Engineering

To probe the potential for prompt-induced bias, additional RCTs compared standard LLM prompting to sycophantic (reinforcing user priors) and persuasive (randomly assigned stance) system prompts. Again, no significant differences were observed in belief change, trust, or extremism between these conditions and the baseline, indicating that prompt engineering of this type does not measurably alter the epistemic impact of LLMs in this context.

Efficiency Advantage

A secondary but notable finding is that conversational AI reduced information procurement time by 6–10% relative to internet search (mean: 17.94 vs 19.82 minutes), suggesting a modest efficiency advantage for chatbots in information-seeking tasks.
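
As a quick arithmetic check, the two reported means can be turned into absolute and relative savings. This sketch uses only the raw means quoted above. The 6–10% range presumably reflects model-based uncertainty that the raw means alone do not capture.

```python
AI_MEAN_MINUTES = 17.94      # reported mean time with conversational AI
SEARCH_MEAN_MINUTES = 19.82  # reported mean time with internet search

absolute_saving = SEARCH_MEAN_MINUTES - AI_MEAN_MINUTES
relative_saving = absolute_saving / SEARCH_MEAN_MINUTES

if __name__ == "__main__":
    print(f"{absolute_saving:.2f} minutes saved ({relative_saving:.1%})")
```

The raw difference is just under two minutes, roughly 9.5% of the search time, which sits near the upper end of the reported range.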

Statistical and Modeling Considerations

The paper employs hierarchical Bayesian modeling with weakly informative priors, leveraging NumPyro for efficient MCMC sampling. Model comparison via WAIC supports the conclusion that including terms for research modality (AI vs search) does not improve predictive accuracy, further substantiating the null effect. Interpreting parameters against a region of practical equivalence (ROPE) helps distinguish positive evidence of equivalence from a mere failure to detect a difference, so the reported null effects are less likely to be artifacts of insufficient power or arbitrary thresholding.
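
For readers unfamiliar with WAIC, the sketch below computes it from a matrix of pointwise log-likelihoods using the standard definition (log pointwise predictive density minus a variance-based complexity penalty, reported on the deviance scale). The input layout, function name, and toy numbers are assumptions for illustration. In practice this quantity is normally obtained from a library rather than computed by hand.

```python
import math
from statistics import variance


def waic(log_lik: list) -> float:
    """WAIC on the deviance scale; lower values indicate better expected fit.

    log_lik[s][i] is the log-likelihood of observation i under posterior
    draw s. At least two draws are required for the variance term.
    """
    n_draws = len(log_lik)
    n_obs = len(log_lik[0])
    lppd = 0.0    # log pointwise predictive density
    p_waic = 0.0  # effective number of parameters
    for i in range(n_obs):
        column = [log_lik[s][i] for s in range(n_draws)]
        peak = max(column)  # subtract the max for a stable log-mean-exp
        lppd += peak + math.log(sum(math.exp(v - peak) for v in column) / n_draws)
        p_waic += variance(column)  # sample variance across draws
    return -2.0 * (lppd - p_waic)


if __name__ == "__main__":
    # Toy log-likelihoods: 3 posterior draws x 2 observations (made-up values).
    toy = [[-1.0, -2.1], [-1.2, -1.9], [-0.9, -2.0]]
    print(round(waic(toy), 3))
```

If adding modality terms to a model leaves WAIC essentially unchanged or higher, the extra terms are not buying predictive accuracy, which is the sense in which the model comparison supports the null result.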

Implications and Limitations

Practical Implications

The findings challenge the prevailing narrative that conversational AI inherently increases susceptibility to political misinformation. In a real-world, high-stakes context (a national election), LLM-based chatbots neither eroded nor enhanced political knowledge relative to traditional search, even under prompt conditions designed to maximize persuasive or sycophantic effects. This suggests that current guardrails and model architectures may be sufficient, on average, to prevent systematic epistemic harm in everyday information-seeking.

The efficiency advantage of chatbots may drive further adoption, with implications for the future of information access and the design of public-facing AI systems.

Theoretical Implications

The results imply that, at least in the current generation of LLMs and under typical usage, the risk of large-scale epistemic distortion via conversational AI is limited. This stands in contrast to concerns raised in the literature regarding LLM hallucinations, bias, and manipulation. The null effects observed here may reflect both improvements in model factuality and the resilience of users in cross-referencing or discounting AI-generated information.

Limitations and Future Directions

The paper is limited to a single national context (UK residents), a specific set of political issues, and relatively short interaction windows, so the results may not generalize to other political cultures or information environments. It also does not address long-term attitude change, behavioral outcomes, or the effects of more adversarial or coordinated manipulation campaigns.

Future research should extend this paradigm to other countries, longer time horizons, and more diverse user populations. There is also a need for field studies linking chatbot use to downstream political behaviors and for adversarial testing of LLM robustness under more sophisticated manipulation strategies.

Conclusion

This paper provides robust empirical evidence that conversational AI, as currently deployed, does not differentially affect political knowledge, trust, or extremism compared to self-directed internet search. The efficiency gains associated with chatbots may accelerate their adoption, but concerns about their unique epistemic risks are not supported by the data in this context. Ongoing monitoring and adversarial evaluation remain warranted, but the present results suggest that, for everyday information-seeking, LLM-based chatbots are functionally equivalent to traditional search engines in their impact on political knowledge.