- The paper introduces a novel DSL-driven AI modeling framework that enhances transparency and iterative refinement with multi-modal input and real-time visualization.

- The methodology integrates state-of-the-art LLM-based synthesis with domain-specific IDEs, leveraging speech, text, and code editing for immediate feedback.

- The prototype demonstrates low-latency interactions and improved error correction, using automatically generated Tool API functions and a visual feedback loop.

AI-Assisted Modeling: DSL-Driven AI Interactions

Introduction and Motivation

The paper "AI-Assisted Modeling: DSL-Driven AI Interactions" (2509.05160) addresses the integration of AI—specifically LLMs—into domain-specific modeling workflows, with a focus on enhancing transparency, controllability, and iterative refinement. The authors identify the limitations of current AI-assisted programming tools, which are predominantly tailored to general-purpose languages and often rely on one-shot prompt paradigms. These approaches are insufficient for domain-specific languages (DSLs), especially those with complex or underrepresented syntax, such as Lingua Franca or SCCharts. The paper proposes a hybrid methodology that combines state-of-the-art AI-assisted IDEs with domain-specific modeling techniques, emphasizing instantaneous graphical visualizations and fine-grained observation and interaction points throughout the modeling process.

Conceptual Framework: Iterative, Multi-Modal Modeling

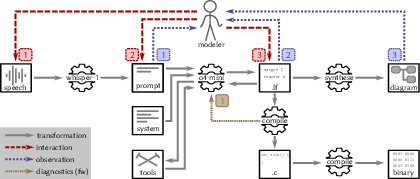

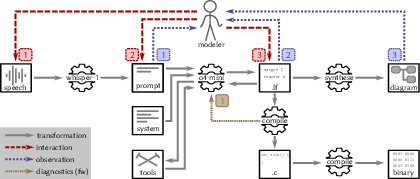

The core contribution is a workflow that enables modelers to interact with the system via speech, text, or direct code editing, receiving immediate feedback at multiple transformation stages. The modeling concept diagram in Figure 1 depicts the resulting feedback loop between the modeler and the system.

Figure 1: The modeling concept of DSL-driven AI interaction: A modeler interacts with the system through speech or text inputs and receives feedback from multiple transformation stages, including instantaneous diagram synthesis and compilation results. This workflow forms a feedback loop, enabling both the modeler and the system to incrementally refine the model.

The workflow is characterized by:

- Multi-modal input: Speech, natural language, and direct code editing are all supported as entry points.

- Incremental transformation: Each input is processed through a series of transformation stages. Every stage has an observation point (OP), where the modeler can inspect that stage's output, and an interaction point (IP), where the modeler can intervene with new input.

- Immediate visualization: Textual models are automatically synthesized into semantically accurate diagrams, facilitating rapid inspection and correction.

- System-level validation: Automata learning and model checking are integrated for formal verification.

This approach is particularly effective for DSLs with graphical representations, where visual feedback is essential for understanding complex relationships and semantics.
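To make the observation/interaction structure concrete, here is a minimal Python sketch, assuming each transformation stage can be modeled as a function from one text artifact to the next. The stage names follow Figure 2 (transcription, textual model, diagram), but the `Stage` and `Pipeline` classes and the stub transforms are hypothetical placeholders, not the authors' implementation. An interaction point is modeled as the stage at which new input enters; the observation points are the recorded outputs of every stage.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Stage:
    name: str
    transform: Callable[[str], str]  # maps the previous artifact to this stage's output


@dataclass
class Pipeline:
    stages: List[Stage]
    # Observation points (OPs): the latest artifact produced at each stage.
    observations: Dict[str, str] = field(default_factory=dict)

    def run(self, artifact: str, start: Optional[str] = None) -> str:
        """Feed `artifact` into stage `start` (an interaction point) and run to the end."""
        names = [s.name for s in self.stages]
        i = names.index(start) if start is not None else 0
        if i > 0:
            # Input injected mid-pipeline (e.g. a direct code edit) becomes the
            # observed output of the preceding stage.
            self.observations[names[i - 1]] = artifact
        for stage in self.stages[i:]:
            artifact = stage.transform(artifact)
            self.observations[stage.name] = artifact
        return artifact


# Hypothetical stubs standing in for speech transcription, LLM synthesis and diagram synthesis.
pipeline = Pipeline([
    Stage("transcription", lambda audio: audio.replace("[audio] ", "", 1)),
    Stage("model", lambda prompt: f"main reactor {{ /* {prompt} */ }}"),
    Stage("diagram", lambda model: f"diagram: {model.count('reactor')} reactor node(s)"),
])

pipeline.run("[audio] add a timer")  # spoken input enters at the first stage
pipeline.run("main reactor {}\nreactor A {}", start="diagram")  # direct edit skips the LLM
print(pipeline.observations)
```

A typed prompt would enter with `start="model"`, and a direct code edit with `start="diagram"`, so only the downstream stages are recomputed while every earlier observation stays visible.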

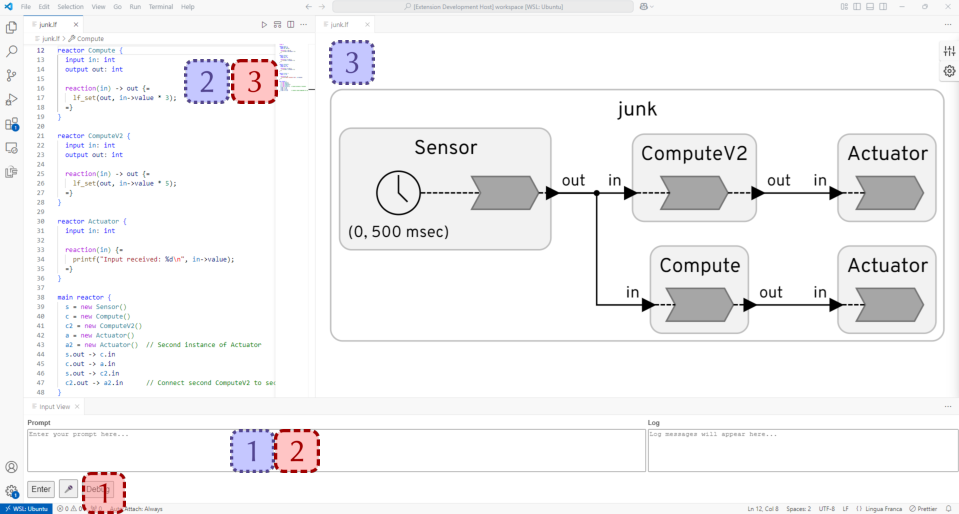

Prototype Implementation: Visual Studio Code Extension

The authors present a prototype implemented as a Visual Studio Code extension for the Lingua Franca language, targeting cyber-physical systems. The extension leverages the OpenAI Tool API for context-aware code generation and integrates the KIELER diagram engine for automatic diagram synthesis. The workflow is demonstrated in the editor, where the modeler can interact via voice, text, or direct editing, and observe the outputs at each stage.

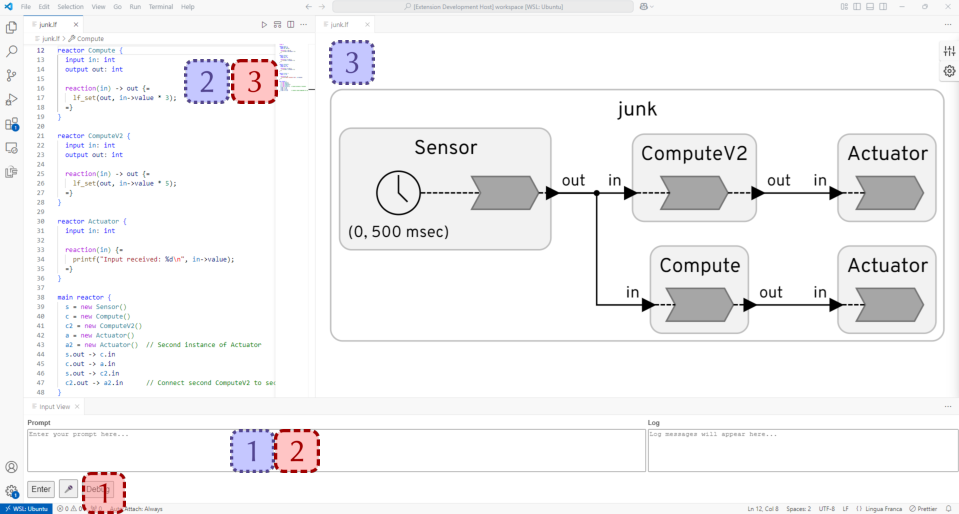

Figure 2: Demonstrator of rapid, natural modeling with Lingua Franca in Visual Studio Code. The observation points display the outputs of the speech transcription, the generated textual model, and the synthesized diagram, respectively. The interaction points allow the modeler to provide input via speech, text prompt, or direct editing of the model.

The prototype supports:

- Speech-to-text transcription (using Whisper-1 or similar models)

- LLM-based model synthesis (using o4-mini or interchangeable LLMs)

- Automatic diagram generation (via KIELER/ELK)

- Simultaneous observation and interaction at all stages
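In the prototype, KIELER/ELK turn the textual model into a laid-out diagram. As a rough illustration of what that stage has to recover from the text, the toy sketch below (an assumption for illustration, not the KIELER synthesis) extracts reactor instances and connections from a small Lingua Franca program with regular expressions and emits Graphviz DOT. It ignores most of the language (banks, multiports, hierarchy, reactions), so treat it only as a stand-in.

```python
import re
from typing import Dict, List, Tuple

# Toy patterns for two Lingua Franca constructs: instantiations and connections.
INSTANCE = re.compile(r"(\w+)\s*=\s*new\s+(\w+)\s*\(")
CONNECTION = re.compile(r"(\w+)\.(\w+)\s*->\s*(\w+)\.(\w+)\s*;")

Edge = Tuple[str, str, str, str]  # (source instance, source port, target instance, target port)


def extract_graph(lf_source: str) -> Tuple[Dict[str, str], List[Edge]]:
    """Return {instance: reactor class} and the list of port-to-port connections."""
    nodes = {m.group(1): m.group(2) for m in INSTANCE.finditer(lf_source)}
    edges = [m.groups() for m in CONNECTION.finditer(lf_source)]
    return nodes, edges


def to_dot(nodes: Dict[str, str], edges: List[Edge]) -> str:
    """Emit Graphviz DOT as a stand-in for a laid-out diagram."""
    lines = ["digraph LF {"]
    lines += [f'  {inst} [label="{inst} : {cls}"];' for inst, cls in nodes.items()]
    lines += [f'  {s} -> {t} [label="{sp} -> {tp}"];' for s, sp, t, tp in edges]
    lines.append("}")
    return "\n".join(lines)


LF_MODEL = """
target C;
reactor Source { output y: int; }
reactor Sink { input x: int; }
main reactor {
  src = new Source();
  snk = new Sink();
  src.y -> snk.x;
}
"""

print(to_dot(*extract_graph(LF_MODEL)))
```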

The demonstrator showcases the creation of a Lingua Franca model in under two minutes, with all prompts spoken, transcribed, and synthesized into both textual and graphical representations.

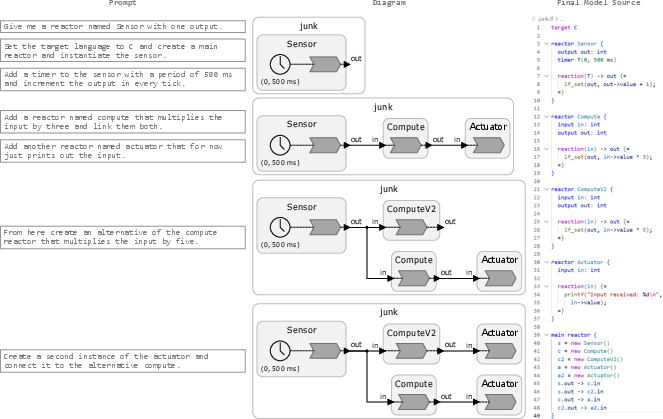

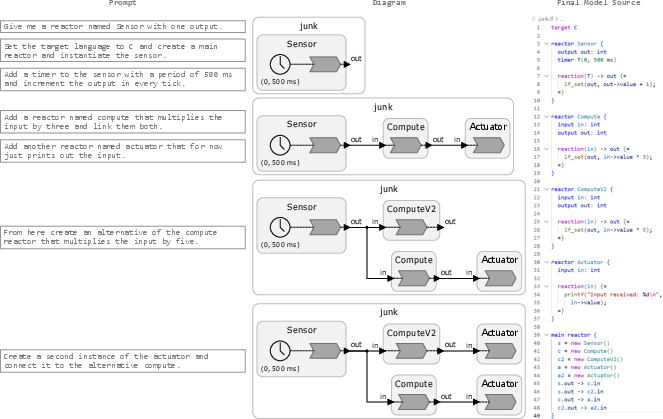

Figure 3: Demonstration of the prototype in action, following the transcript from the accompanying video. Time progresses from top to bottom, and the model was created in less than 2 min. All prompts on the left side were spoken by the modeler, transcribed, and then sent to the LLM to generate the Lingua Franca source model, which was then synthesized into the corresponding diagram. The final Lingua Franca model source is shown on the right.

A key technical innovation is the use of the Tool API to expose DSL grammar constructs to the LLM. Rather than relying solely on prompt engineering, the Tool API provides structured, grammar-aligned functions that the LLM can invoke to generate syntactically correct code fragments. This approach offloads contextualization from the prompt to the tool layer, improving reliability and scalability, especially for DSLs with complex or polyglot syntax.
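A minimal Python sketch of such a grammar-aligned tool, assuming the function-tool JSON shape used by OpenAI's Chat Completions API (the dict could be passed in the request's `tools` list). The tool name `add_reactor`, its parameters, and the `dispatch` helper are hypothetical; the paper's actual tool set may differ. The point is the division of labor: the LLM only chooses arguments, while the handler emits the Lingua Franca keywords and punctuation, so the generated fragment is well-formed by construction.

```python
import json
from typing import Sequence

# JSON Schema for one typed port; reused for inputs and outputs.
PORT_SCHEMA = {
    "type": "object",
    "properties": {"name": {"type": "string"}, "type": {"type": "string"}},
    "required": ["name", "type"],
}

# Hypothetical grammar-aligned tool mirroring the LF reactor declaration.
ADD_REACTOR_TOOL = {
    "type": "function",
    "function": {
        "name": "add_reactor",
        "description": "Declare a Lingua Franca reactor with typed input and output ports.",
        "parameters": {
            "type": "object",
            "properties": {
                "name": {"type": "string", "description": "Reactor class name"},
                "inputs": {"type": "array", "items": PORT_SCHEMA},
                "outputs": {"type": "array", "items": PORT_SCHEMA},
            },
            "required": ["name"],
        },
    },
}


def add_reactor(name: str, inputs: Sequence[dict] = (), outputs: Sequence[dict] = ()) -> str:
    """Render a reactor skeleton; only the arguments come from the LLM."""
    body = [f"  input {p['name']}: {p['type']};" for p in inputs]
    body += [f"  output {p['name']}: {p['type']};" for p in outputs]
    return "\n".join([f"reactor {name} {{", *body, "}"])


HANDLERS = {"add_reactor": add_reactor}


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Execute one tool call; tool-call arguments arrive as a JSON string."""
    return HANDLERS[tool_name](**json.loads(arguments_json))


call_args = json.dumps({
    "name": "Counter",
    "inputs": [{"name": "x", "type": "int"}],
    "outputs": [{"name": "y", "type": "int"}],
})
print(dispatch("add_reactor", call_args))
```

The sketch does not check that port types are legal in the chosen target language; a real handler would need to validate that as well.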

The authors demonstrate that Tool API definitions can be generated automatically from annotated grammar files, reducing manual effort and ensuring consistency between the DSL specification and the AI assistant's capabilities. This meta-tooling approach is extensible to other DSLs and can be further automated by associating formal comments with grammar parameters.
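The following sketch illustrates the idea under explicit assumptions: the grammar rule is a simplified, Xtext-style rule (Lingua Franca's real grammar is richer), and the `@tool`/`@param` comment convention is hypothetical. Each annotated rule becomes a tool definition in the OpenAI function-tool shape, and every `@param` is checked against the rule's feature assignments, so a tool cannot advertise a parameter the grammar does not have.

```python
import json
import re

# Hypothetical annotated grammar: formal comments mark rules to expose as tools.
GRAMMAR = """
/** @tool add_input Declare an input port.
 *  @param name Port name
 *  @param type Data type of the port */
Input: 'input' name=ID ':' type=Type ';';

/** Helper rule, not exposed as a tool. */
Type: id=ID;
"""

RULE = re.compile(r"/\*\*(?P<doc>.*?)\*/\s*(?P<rule>\w+)\s*:(?P<body>[^\n]*)", re.S)
TOOL_TAG = re.compile(r"@tool\s+(\w+)\s+([^\n@]*)")
PARAM_TAG = re.compile(r"@param\s+(\w+)\s+([^\n@]*)")
ASSIGN = re.compile(r"(\w+)\s*(?:\+=|\?=|=)")  # Xtext feature assignments: =, +=, ?=


def tools_from_grammar(grammar: str) -> list:
    """Turn every @tool-annotated grammar rule into a function-tool definition."""
    tools = []
    for m in RULE.finditer(grammar):
        tag = TOOL_TAG.search(m.group("doc"))
        if tag is None:
            continue  # rule is not exposed to the LLM
        features = set(ASSIGN.findall(m.group("body")))
        props = {}
        for pname, pdesc in PARAM_TAG.findall(m.group("doc")):
            if pname not in features:
                raise ValueError(f"rule {m.group('rule')}: @param {pname} is not assigned in the rule")
            props[pname] = {"type": "string", "description": pdesc.strip()}
        tools.append({
            "type": "function",
            "function": {
                "name": tag.group(1),
                "description": tag.group(2).strip(),
                "parameters": {"type": "object", "properties": props, "required": list(props)},
            },
        })
    return tools


print(json.dumps(tools_from_grammar(GRAMMAR), indent=2))
```

Every parameter is typed as a plain string here for simplicity; mapping grammar terminals such as `ID` or `INT` to richer JSON Schema types would be a natural refinement.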

Evaluation and Discussion

The prototype demonstrates fluent, low-latency interaction, with speech-to-text and LLM response times suitable for real-time modeling. The integration of observation and interaction points enables rapid correction and iterative refinement, addressing the shortcomings of black-box, one-shot prompt engineering. The authors argue that the graphical feedback loop is particularly helpful for non-experts and non-native speakers, lowering the barrier to entry for DSL modeling.

The authors note that the optimal granularity of Tool API functions and the combination of retrieval, fine-tuning, and prompt engineering techniques remain open research questions. The approach is orthogonal to improvements in LLM architectures and can benefit from advances in retrieval-augmented generation and model fine-tuning.

Implications and Future Directions

The proposed methodology has significant implications for both practical tooling and theoretical modeling workflows:

- Enhanced controllability: Fine-grained observation and interaction points enable modelers to guide the AI assistant more effectively, improving reliability and reducing the risk of semantic errors.

- Transparency and verification: Instantaneous graphical synthesis and system-level validation facilitate formal verification and visual inspection, critical for safety-critical and cyber-physical systems.

- Meta-tooling automation: The ability to generate Tool API definitions from DSL grammars paves the way for self-adaptive modeling environments, where the modeling tools themselves can be partially synthesized by AI.

- Generalizability: While demonstrated for Lingua Franca, the approach is applicable to other DSLs, particularly those with graphical semantics or modular architectures.

Future research directions include optimizing the granularity of Tool API functions, integrating richer diagnostic feedback (e.g., compiler errors, model checking results) into the feedback loop, and extending the methodology to support collaborative, multi-agent modeling scenarios.

Conclusion

This work presents a comprehensive framework for AI-assisted modeling in DSLs, combining LLM-based synthesis, multi-modal interaction, and instantaneous graphical feedback. The prototype implementation in Visual Studio Code for Lingua Franca demonstrates the feasibility and advantages of the approach, particularly in terms of iterative refinement, transparency, and accessibility. The methodology is extensible, tool-agnostic, and compatible with ongoing advances in LLMs and AI-assisted development environments. The integration of meta-tooling and formal grammar alignment represents a promising direction for the future of intelligent, adaptive modeling systems.