- The paper demonstrates that integrating constraint scaffolding and iterative self-correction enables LLMs to generate effective scoring functions for the 3D Packing Problem.

- It shows that the evolutionary process progresses from simple heuristics to refined scoring functions, although the evolved heuristics remain confined to modular mathematical expressions.

- The study highlights significant limitations in LLM autonomy, noting the need for manual interventions and the model's bias toward component-level optimization.

Re-evaluating LLM-based Heuristic Search: A Case Study on the 3D Packing Problem

Introduction

This paper presents a rigorous investigation into the capabilities and limitations of LLMs for automated heuristic design, focusing on the constrained 3D Packing Problem. Unlike prior work that leverages LLMs for component-level optimization within established algorithmic frameworks, this paper probes the feasibility of end-to-end solver generation in a "knowledge-poor" domain characterized by high-dimensional geometry and complex constraints. The authors identify critical barriers to LLM-driven heuristic search, propose engineering interventions to mitigate these issues, and empirically evaluate the resulting heuristics against state-of-the-art human-designed methods.

The variant studied is Input Minimization: pack a given set of items into the minimum number of containers, subject to geometric and real-world constraints such as item incompatibility and vertical stability. The solution space is combinatorially large: each item must be assigned a container, an orientation, and continuous placement coordinates, while satisfying non-overlap and support constraints.
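
To make the solution representation concrete, here is a minimal Python sketch of a placed item and the checks mentioned above (orientation choice, non-overlap, support). The `Box` type, the helper names, and the 75% base-support threshold in `is_feasible` are illustrative assumptions, not the paper's definitions; incompatibility rules are omitted.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Box:
    """A placed item: (x, y, z) is its minimum corner, (l, w, h) its oriented extents."""
    x: float
    y: float
    z: float
    l: float
    w: float
    h: float


def orientations(dims):
    """Distinct axis-aligned orientations of an item (up to 6 for a box)."""
    return sorted(set(permutations(dims)))


def overlaps(a: Box, b: Box) -> bool:
    """True if the interiors intersect; boxes that merely touch do not overlap."""
    return (a.x < b.x + b.l and b.x < a.x + a.l and
            a.y < b.y + b.w and b.y < a.y + a.w and
            a.z < b.z + b.h and b.z < a.z + a.h)


def inside(box: Box, container) -> bool:
    """True if the box lies entirely within a container of size (L, W, H)."""
    L, W, H = container
    return (min(box.x, box.y, box.z) >= 0 and box.x + box.l <= L
            and box.y + box.w <= W and box.z + box.h <= H)


def support_ratio(box: Box, placed) -> float:
    """Share of the base area resting on the floor or on top faces of placed boxes."""
    if box.z == 0:
        return 1.0
    supported = 0.0
    for p in placed:
        if abs(p.z + p.h - box.z) < 1e-9:  # p's top face meets box's bottom face
            dx = min(box.x + box.l, p.x + p.l) - max(box.x, p.x)
            dy = min(box.y + box.w, p.y + p.w) - max(box.y, p.y)
            if dx > 0 and dy > 0:
                supported += dx * dy
    return supported / (box.l * box.w)


def is_feasible(box: Box, placed, container, min_support=0.75) -> bool:
    """Containment, non-overlap, and a hypothetical minimum-support stability rule."""
    return (inside(box, container)
            and not any(overlaps(box, p) for p in placed)
            and support_ratio(box, placed) >= min_support)
```

Even this toy version shows why the search space is large: every item multiplies the candidates by up to six orientations and a continuum of positions, and each candidate must pass all three checks.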

The Evolution of Heuristics (EoH) framework is employed as the search paradigm. EoH iteratively evolves a population of candidate heuristics, represented as (thought, code) pairs, using an LLM as a mutation operator. Five prompt strategies (exploration and modification operators) guide the generation of new heuristics, which are evaluated for fitness and feasibility.
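
The loop below sketches how an EoH-style search could be organized. The `llm` and `evaluate` callables are hypothetical stand-ins (a real `llm` would fill the strategy's prompt template with the parents' thoughts and code and parse the model's reply), the strategy labels are placeholders, and the parent counts and elitist survival rule are simplifying assumptions rather than the exact EoH settings.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Heuristic:
    thought: str                     # natural-language idea behind the heuristic
    code: str                        # program text implementing the idea
    fitness: Optional[float] = None  # lower is better; None = failed evaluation


# Placeholder labels: two exploration and three modification prompt strategies.
STRATEGIES = ["E1", "E2", "M1", "M2", "M3"]

LLM = Callable[[str, List[Heuristic]], Tuple[str, str]]


def evolve(llm: LLM, evaluate: Callable[[str], Optional[float]],
           init_pop: List[Heuristic], generations: int, pop_size: int,
           seed: int = 0) -> Heuristic:
    """Evolve (thought, code) pairs with the LLM as mutation operator; return the best."""
    rng = random.Random(seed)
    pop = []
    for h in init_pop:
        h.fitness = evaluate(h.code)
        if h.fitness is not None:
            pop.append(h)
    for _ in range(generations):
        offspring = []
        for strategy in STRATEGIES:
            # exploration prompts see several parents, modification prompts one
            k = 2 if strategy.startswith("E") else 1
            parents = rng.sample(pop, min(k, len(pop)))
            thought, code = llm(strategy, parents)  # LLM acts as the mutation operator
            child = Heuristic(thought, code, evaluate(code))
            if child.fitness is not None:           # discard broken or infeasible programs
                offspring.append(child)
        # elitist survival: keep the pop_size fittest of parents + offspring
        pop = sorted(pop + offspring, key=lambda h: h.fitness)[:pop_size]
    return pop[0]
```

In a packing setting, `evaluate` would run the candidate heuristic on training instances and return, for example, the average number of containers used, or `None` if the program crashes or violates a constraint.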

Engineering Interventions: Constraint Scaffolding and Iterative Self-Correction

Direct application of EoH to the 3D Packing Problem proved infeasible due to the fragility of LLM-generated code. The authors document a high incidence of logical errors, constraint violations, and computational inefficiency, which severely impede population initialization and evolutionary progress.

To address these challenges, two interventions are introduced:

- Constraint Scaffolding: A verified API encapsulates all geometric and physical constraint checks, abstracting away low-level logic from the LLM. The LLM is tasked only with orchestrating calls to this API, focusing its generative capacity on high-level strategy.

- Iterative Self-Correction: Diagnostic feedback from failed candidate programs (syntax errors, constraint violations, timeouts) is appended to the prompt, enabling the LLM to repair faults over multiple refinement cycles. This process systematically increases the proportion of feasible and efficient heuristics in the population.
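
Constraint scaffolding can be illustrated with a small wrapper class. `PackingAPI` and its methods are hypothetical; this sketch checks only containment and non-overlap (a real scaffold would also verify support, incompatibility, and the other rules) and uses extreme points as candidate positions. The point is the division of labour: `llm_heuristic` is the kind of strategy-only code the LLM would be asked to write, and it never manipulates coordinates or overlap logic itself.

```python
from itertools import permutations


class PackingAPI:
    """Hypothetical verified constraint layer: all geometry lives here, not in LLM code."""

    def __init__(self, size):
        self.size = size           # container (L, W, H)
        self.placed = []           # boxes as (x, y, z, l, w, h)
        self.points = {(0, 0, 0)}  # extreme points: candidate corner positions

    @staticmethod
    def _overlap(a, b):
        return all(a[i] < b[i] + b[i + 3] and b[i] < a[i] + a[i + 3] for i in range(3))

    def can_place(self, dims, pos):
        """Containment and non-overlap checks (support etc. omitted in this sketch)."""
        box = (*pos, *dims)
        inside = all(pos[i] >= 0 and pos[i] + dims[i] <= self.size[i] for i in range(3))
        return inside and not any(self._overlap(box, p) for p in self.placed)

    def feasible_placements(self, dims):
        """Every (orientation, position) pair that passes all checks."""
        return [(o, p) for o in sorted(set(permutations(dims)))
                for p in sorted(self.points) if self.can_place(o, p)]

    def place(self, dims, pos):
        if not self.can_place(dims, pos):
            raise ValueError(f"constraint violation placing {dims} at {pos}")
        self.placed.append((*pos, *dims))
        self.points.discard(pos)
        (x, y, z), (l, w, h) = pos, dims
        self.points |= {(x + l, y, z), (x, y + w, z), (x, y, z + h)}


def llm_heuristic(api, items):
    """Strategy-only code of the kind the LLM is asked to write."""
    unplaced = []
    for dims in sorted(items, key=lambda d: d[0] * d[1] * d[2], reverse=True):
        options = api.feasible_placements(dims)
        if not options:
            unplaced.append(dims)
            continue
        # prefer the smallest z, then y, then x
        orient, pos = min(options, key=lambda op: (op[1][2], op[1][1], op[1][0]))
        api.place(orient, pos)
    return unplaced
```

Because `place` refuses any infeasible move, a bug in the LLM-written part can cost solution quality but cannot silently produce an invalid packing.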

Empirical validation demonstrates that constraint scaffolding dramatically reduces code errors, while iterative self-correction further increases the success rate by resolving inefficiencies and residual logical faults.
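
A self-correction cycle might look like the sketch below. `llm`, `validate`, and the convention that a candidate defines `score(item)` are assumptions for illustration. Timeouts are not handled here; a real harness would run each candidate in a sandboxed subprocess with a time limit, since calling `exec` on generated code is unsafe.

```python
from typing import Callable, Optional, Tuple


def diagnose(code: str, validate: Callable) -> Optional[str]:
    """Load and test a candidate program; return None on success, else feedback text."""
    namespace: dict = {}
    try:
        # NOTE: exec on generated code is unsafe; a real harness would sandbox it.
        exec(compile(code, "<candidate>", "exec"), namespace)
    except SyntaxError as e:
        return f"SyntaxError: {e.msg} (line {e.lineno})"
    except Exception as e:
        return f"{type(e).__name__} while loading: {e}"
    if not callable(namespace.get("score")):
        return "The program must define a function named `score`."
    try:
        return validate(namespace["score"])  # None, or a constraint-violation message
    except Exception as e:
        return f"{type(e).__name__} at runtime: {e}"


def self_correct(llm: Callable[[str], str], task: str, validate: Callable,
                 max_rounds: int = 3) -> Tuple[Optional[str], int]:
    """Ask for a program, then feed diagnostics back until it passes or rounds run out."""
    prompt = task
    for round_no in range(max_rounds + 1):
        code = llm(prompt)
        feedback = diagnose(code, validate)
        if feedback is None:
            return code, round_no  # number of repair rounds that were needed
        prompt = (f"{task}\n\nYour previous program:\n{code}\n"
                  f"failed with: {feedback}\nPlease return a corrected program.")
    return None, max_rounds
```

Returning the round count makes it easy to measure how many repair cycles a typical candidate needs before it becomes feasible.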

Heuristic Discovery and Evolutionary Dynamics

With the interventions in place, the evolutionary process successfully discovers functional packing heuristics. Analysis of the evolutionary trajectory reveals that the LLM's optimization is almost exclusively concentrated on the scoring function used to guide item selection and placement, rather than inventing novel algorithmic structures.
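
The sketch below shows where such a scoring function plugs into a greedy constructive packer: at each step every feasible (item, container, orientation, position) move is scored, the best one is committed, and a new container is opened only when nothing fits. The data layout, extreme-point candidate generation, and the `largest_first` baseline are illustrative assumptions; only containment and non-overlap are checked.

```python
from itertools import permutations


def overlap(a, b):
    """Boxes are (x, y, z, l, w, h); True if their interiors intersect."""
    return all(a[i] < b[i] + b[i + 3] and b[i] < a[i] + a[i + 3] for i in range(3))


def candidates(bin_, item, size):
    """Feasible (orientation, position) pairs for an item in one open container."""
    out = []
    for o in sorted(set(permutations(item))):
        for p in sorted(bin_["points"]):
            box = (*p, *o)
            fits = all(p[i] + o[i] <= size[i] for i in range(3))
            if fits and not any(overlap(box, q) for q in bin_["boxes"]):
                out.append((o, p))
    return out


def greedy_pack(items, size, score):
    """Commit the highest-scoring move until every item is packed; return the containers."""
    bins, remaining = [], list(items)
    while remaining:
        moves = [(score(it, o, p, b, size), k, bi, o, p)
                 for k, it in enumerate(remaining)
                 for bi, b in enumerate(bins)
                 for o, p in candidates(b, it, size)]
        if not moves:
            if bins and not bins[-1]["boxes"]:
                raise ValueError("an item does not fit in an empty container")
            bins.append({"boxes": [], "points": {(0, 0, 0)}})  # open a new container
            continue
        _, k, bi, o, p = max(moves)  # the scoring function alone decides the move
        b = bins[bi]
        b["boxes"].append((*p, *o))
        b["points"].discard(p)
        b["points"] |= {(p[0] + o[0], p[1], p[2]), (p[0], p[1] + o[1], p[2]),
                        (p[0], p[1], p[2] + o[2])}
        remaining.pop(k)
    return bins


def largest_first(item, orient, pos, bin_, size):
    """Baseline score: biggest item first, tie-broken toward low, back, left positions."""
    return (item[0] * item[1] * item[2], -pos[2], -pos[1], -pos[0])
```

Everything except `score` is fixed scaffolding, which mirrors the observation that the evolutionary search concentrates its effort on this single plug-in function.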

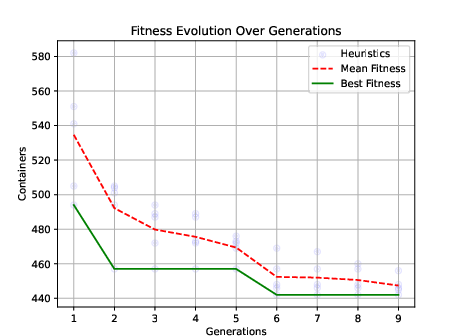

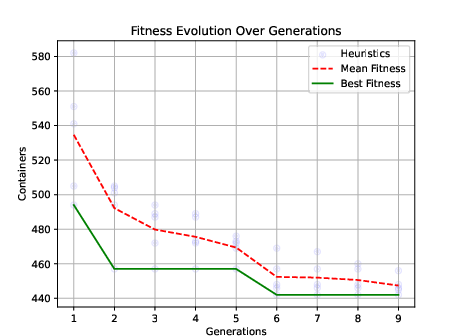

Figure 1: The evolutionary trajectory for one run, plotting the fitness of the best-performing heuristic in each generation evaluated on training datasets.

The population evolves from simple "largest-item-first" heuristics to more sophisticated scoring functions that incorporate volume utilization, item quantity, cubeness, adjacency, and placement efficiency. However, the search remains narrowly focused on modular mathematical expressions: no advanced procedural logic, such as wall-building or layer-based packing, emerges.
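
A scoring function combining the features listed above could look like the following. The exact feature definitions and the values in `WEIGHTS` are hypothetical, chosen only to show the modular weighted-sum shape described here; they are not the formula reported in the paper.

```python
def contact_area(pos, dims, boxes, size):
    """Item surface touching container walls or placed boxes given as (x, y, z, l, w, h)."""
    area = 0.0
    for i in range(3):
        j, k = [a for a in range(3) if a != i]
        # faces perpendicular to axis i that lie on a container wall
        area += dims[j] * dims[k] * ((pos[i] == 0) + (pos[i] + dims[i] == size[i]))
        for q in boxes:
            qp, qd = q[:3], q[3:]
            if pos[i] == qp[i] + qd[i] or pos[i] + dims[i] == qp[i]:
                dj = min(pos[j] + dims[j], qp[j] + qd[j]) - max(pos[j], qp[j])
                dk = min(pos[k] + dims[k], qp[k] + qd[k]) - max(pos[k], qp[k])
                if dj > 0 and dk > 0:
                    area += dj * dk
    return area


WEIGHTS = {"volume": 1.0, "quantity": 0.2, "cubeness": 0.3,
           "adjacency": 0.5, "height": 0.4}  # hypothetical weights


def score(dims, pos, boxes, size, quantity_share, weights=WEIGHTS):
    """Weighted sum of normalized features; higher is better."""
    l, w, h = dims
    L, W, H = size
    features = {
        "volume": l * w * h / (L * W * H),  # share of the container volume
        "quantity": quantity_share,         # share of remaining items of this type
        "cubeness": min(dims) / max(dims),  # 1.0 for a cube, near 0 for slabs and rods
        "adjacency": contact_area(pos, dims, boxes, size) / (2 * (l * w + l * h + w * h)),
        "height": 1 - pos[2] / H,           # placement efficiency: favour low positions
    }
    return sum(weights[name] * value for name, value in features.items())
```

Each feature is an independent term, so mutating one weight or swapping one feature is a small, low-risk edit; that ease of modification is a plausible reason why search gravitates toward this form rather than new procedural logic.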

The best LLM-discovered heuristic, operating within a greedy framework, achieves performance comparable to human-designed greedy algorithms (e.g., S-GRASP), but lags behind advanced metaheuristics and exact solvers. Notably, the scoring function itself is identified as a high-quality component. When transplanted into a two-stage metaheuristic framework (Randomized Search + Set Partitioning), the LLM-generated scoring function enables near-optimal performance, rivaling state-of-the-art methods on unconstrained benchmarks.
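
The two-stage idea can be sketched compactly: many randomized greedy runs generate candidate containers (columns), and an exact set-partitioning search then picks the fewest columns that cover every item exactly once. To keep the sketch short, stage 1 here is a shuffled first-fit on item volumes only, a stand-in that ignores 3D geometry; in the setting described above it would presumably be the greedy packer driven by the LLM-generated scoring function. The depth-first search also stands in for the integer-programming solver a real implementation would likely use.

```python
import random


def randomized_first_fit(volumes, capacity, rng):
    """Stand-in for stage 1: one randomized greedy run with volume-only feasibility."""
    order = list(range(len(volumes)))
    rng.shuffle(order)  # randomization diversifies the containers across runs
    bins, loads = [], []
    for i in order:
        for b, load in enumerate(loads):
            if load + volumes[i] <= capacity:
                bins[b].add(i)
                loads[b] += volumes[i]
                break
        else:
            bins.append({i})
            loads.append(volumes[i])
    return [frozenset(b) for b in bins]


def set_partition(columns, n_items):
    """Exact search for the fewest columns covering each item exactly once."""
    by_item = {i: [c for c in columns if i in c] for i in range(n_items)}
    best = None

    def search(uncovered, chosen):
        nonlocal best
        if best is not None and len(chosen) >= len(best):
            return  # bound: cannot beat the incumbent
        if not uncovered:
            best = list(chosen)
            return
        item = min(uncovered, key=lambda i: len(by_item[i]))  # most constrained item
        for col in by_item[item]:
            if col <= uncovered:  # never cover an item twice
                chosen.append(col)
                search(uncovered - col, chosen)
                chosen.pop()

    search(frozenset(range(n_items)), [])
    return best


def two_stage(volumes, capacity, runs=50, seed=0):
    """Stage 1 collects columns from randomized runs; stage 2 recombines them."""
    rng = random.Random(seed)
    columns, best_single = set(), None
    for _ in range(runs):
        solution = randomized_first_fit(volumes, capacity, rng)
        columns.update(solution)  # every container of every run becomes a column
        if best_single is None or len(solution) < best_single:
            best_single = len(solution)
    return set_partition(list(columns), len(volumes)), best_single
```

Because every run's solution is itself a valid partition, the set-partitioning result can never use more containers than the best single run; it can only improve by recombining good containers found in different runs, which is where a high-quality scoring function pays off.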

However, as additional constraints (load stability, item separation) are introduced, the effectiveness of the LLM-discovered heuristic diminishes. The scoring function alone is insufficient to navigate the complex trade-offs required by real-world constraints, resulting in a widening performance gap relative to leading solvers.

Limitations and Implications

The paper highlights two major limitations of current LLM-based heuristic search:

- Engineering Overhead: Significant manual intervention is required to scaffold constraints and enable robust code generation. Autonomous solver synthesis remains out of reach for current LLMs in complex domains.

- Pretrained Biases: LLMs exhibit a strong bias toward refining modular scoring functions, with limited capacity for inventing novel algorithmic paradigms or procedural logic.

These findings have direct implications for the future of automated algorithm design. Progress will require either advances in LLM capabilities (e.g., fine-tuning for domain-specific reasoning) or reframing the generation task to play to LLM strengths (e.g., generating formulas for dedicated solvers). Whether these results generalize to other combinatorial optimization problems and to more powerful LLMs remains an open question; the study's scope was limited by practical issues such as model accessibility and prompt sensitivity.

Future Directions

Potential avenues for future research include:

- Model Fine-Tuning: Training LLMs on domain-specific data to improve their handling of geometric and logical constraints.

- Hybrid Architectures: Integrating LLMs with symbolic solvers or physics engines to offload deterministic reasoning.

- Prompt Engineering: Developing more effective prompt strategies to elicit procedural innovation rather than component-level optimization.

- Benchmark Expansion: Systematic evaluation across a broader set of combinatorial problems and LLM architectures.

Conclusion

This work provides a detailed empirical and methodological analysis of LLM-based heuristic search in the context of the 3D Packing Problem. The authors demonstrate that, with appropriate engineering interventions, LLMs can discover competitive scoring functions for greedy algorithms, which, when integrated into advanced metaheuristics, yield strong performance on unconstrained benchmarks. However, the approach is fundamentally limited by the fragility of LLM-generated code and the model's bias toward component-level optimization. Overcoming these barriers will be essential for realizing the full potential of automated algorithm design in complex, real-world domains.