- The paper introduces BSNeRF, extending neural radiance fields to reconstruct spatial, angular, and spectral data from a single exposure.

- It combines a kaleidoscopic optical assembly carrying nine broadband filters with joint optimization of camera parameters to boost light throughput and reconstruction fidelity.

- Experimental results show improved spectral accuracy and image consistency, establishing a new baseline for snapshot multispectral light-field imaging.

Broadband Spectral Neural Radiance Fields for Snapshot Multispectral Light-field Imaging

Introduction and Motivation

The paper introduces BSNeRF, a broadband spectral neural radiance field tailored for snapshot multispectral light-field imaging (SMLI). SMLI aims to capture high-dimensional data (spatial, angular, and spectral) in a single exposure. Recovering all three dimensions from one 2D sensor image is an underdetermined problem, which makes accurate reconstruction difficult. Existing SMLI systems typically sacrifice either light throughput, by using narrow-band filters, or acquisition speed, by requiring multiple exposures, and this limits their practical utility. BSNeRF addresses these limitations by combining high-throughput, single-shot acquisition with a self-supervised neural field that robustly decouples the broadband spectral information.

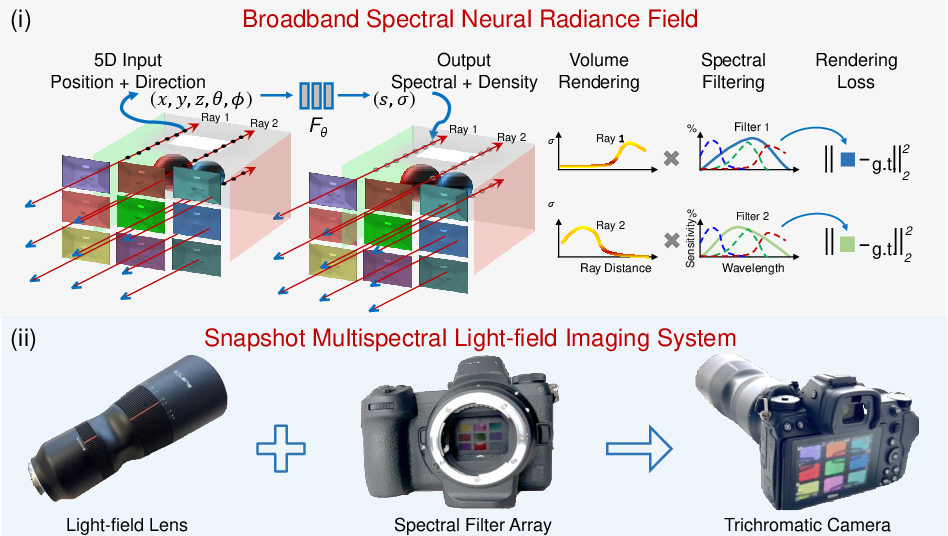

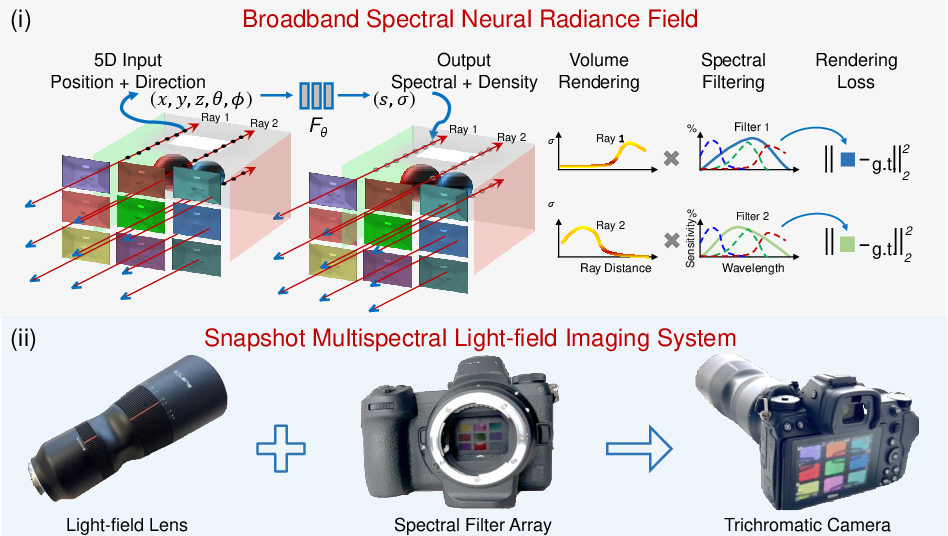

Figure 1: (i) Overview of the BSNeRF scene representation and differentiable rendering. (ii) Assembly of the kaleidoscopic SMLI system with light-field lens, spectral filter, and trichromatic camera.

System Architecture and Data Acquisition

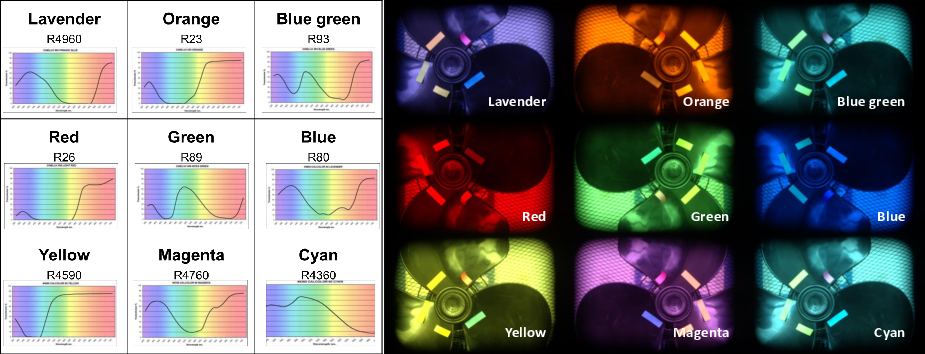

The proposed SMLI system employs a kaleidoscopic optical assembly, integrating a 3×3 array of broadband spectral filters with a commercial light-field lens and a trichromatic SLR camera. Each filter exhibits a distinct spectral transmission curve, enabling the system to multiplex nine spectral channels per spatial subview. The camera captures a single RAW image, which is then decomposed into 9×9 subviews, each corresponding to a unique combination of spatial, angular, and spectral information.
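As a rough illustration of the decomposition step, the sketch below crops a sensor image into a grid of equal-size subviews. It rests on a hypothetical simplification: the subviews are assumed to tile the sensor on a regular, axis-aligned grid, and the image is a tiny single-channel list of rows. A real kaleidoscopic prototype would need calibrated crop regions. The function name `split_into_subviews` is illustrative and does not come from the paper.

```python
from typing import List

Image = List[List[float]]  # row-major single-channel image (hypothetical layout)


def split_into_subviews(raw: Image, rows: int, cols: int) -> List[List[Image]]:
    """Crop a sensor image into a rows x cols grid of equal-size subviews.

    Assumes the subviews tile the sensor on a regular, axis-aligned grid;
    a real kaleidoscopic layout would need calibrated crop boxes instead.
    """
    height, width = len(raw), len(raw[0])
    if height % rows or width % cols:
        raise ValueError("image size must be divisible by the grid size")
    tile_h, tile_w = height // rows, width // cols
    grid = []
    for i in range(rows):
        grid_row = []
        for j in range(cols):
            # Slice the block of rows first, then the block of columns.
            tile = [r[j * tile_w:(j + 1) * tile_w]
                    for r in raw[i * tile_h:(i + 1) * tile_h]]
            grid_row.append(tile)
        grid.append(grid_row)
    return grid


if __name__ == "__main__":
    # 6x6 toy "RAW" image whose value encodes its (row, col) position.
    raw = [[10 * y + x for x in range(6)] for y in range(6)]
    views = split_into_subviews(raw, rows=3, cols=3)
    print(views[1][2])  # [[24, 25], [34, 35]]
```

The demo uses a 3×3 grid to keep the numbers readable. A layout like the 9×9 one described here would pass `rows=9, cols=9` with a correspondingly larger image.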

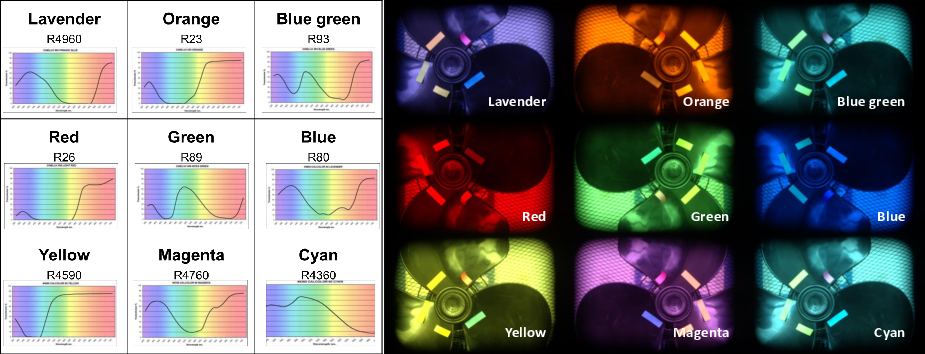

Figure 2: Left: Spectral transmission curves for each filter. Right: Subviews captured with the prototype, each corresponding to a different filter.

The system's forward model is formulated as an integral over the visible spectrum, modulated by both the sensor's spectral sensitivity and the filter's transmission function. This multiplexed acquisition strategy maximizes light throughput and acquisition speed, but introduces significant challenges for spectral decoupling and image reconstruction.
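In notation introduced here rather than taken from the paper, the forward model for color channel c behind filter k can be written as I_{k,c} = ∫ L(λ) T_k(λ) Q_c(λ) dλ. Here L is the scene radiance, T_k the transmission of filter k, and Q_c the sensitivity of color channel c. The minimal sketch below approximates this integral with a midpoint sum over 400 to 700 nm. The Gaussian-shaped curves, band limits, and step size are hypothetical placeholders, not the prototype's measured curves.

```python
import math
from typing import Callable, Dict

Spectrum = Callable[[float], float]  # wavelength in nm -> value


def gaussian(center: float, width: float, peak: float = 1.0) -> Spectrum:
    """Toy bell-shaped spectral curve (illustrative only)."""
    return lambda lam: peak * math.exp(-0.5 * ((lam - center) / width) ** 2)


def measure(radiance: Spectrum, transmission: Spectrum,
            sensitivities: Dict[str, Spectrum],
            lam_min: float = 400.0, lam_max: float = 700.0,
            step: float = 5.0) -> Dict[str, float]:
    """Discretized forward model for one pixel behind one filter.

    I_c ~= sum over wavelengths of L(lam) * T(lam) * Q_c(lam) * step,
    a midpoint Riemann sum approximating the integral over the visible band.
    """
    n = int(round((lam_max - lam_min) / step))
    wavelengths = [lam_min + (i + 0.5) * step for i in range(n)]
    return {
        channel: sum(radiance(l) * transmission(l) * q(l) for l in wavelengths) * step
        for channel, q in sensitivities.items()
    }


if __name__ == "__main__":
    flat_scene = lambda lam: 1.0              # spectrally flat radiance
    broadband_filter = gaussian(550.0, 80.0)  # one hypothetical broadband filter
    rgb = {"R": gaussian(610.0, 30.0),        # hypothetical sensor sensitivities
           "G": gaussian(540.0, 30.0),
           "B": gaussian(460.0, 30.0)}
    print(measure(flat_scene, broadband_filter, rgb))
```

Because the measurement is linear in L, doubling the radiance doubles every channel. A broadband filter also passes light into all three color channels at once, which is why decoupling the spectrum from such measurements is the hard part.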

BSNeRF extends the NeRF paradigm to the multispectral light-field domain by modeling the scene as a continuous function FΘ:(x,d)→(s,σ), where x denotes 3D position, d the ray direction, s the spectral intensity, and σ the volume density. The rendering equation integrates along each camera ray, as in standard volume rendering, and over wavelength, accounting for the broadband filter transmission and the sensor response.
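The sketch below gives one possible reading of this rendering step under stated assumptions. It treats the field's output s as a vector of samples at fixed wavelengths and applies standard NeRF alpha compositing per band along the ray. It then projects the composited spectrum through one filter and the RGB sensitivities. The function names and the discrete wavelength grid are hypothetical. Because both steps are linear in s, compositing before or after the spectral projection yields the same pixel value.

```python
import math
from typing import List, Sequence


def composite_spectrum(sigmas: Sequence[float], spectra: Sequence[Sequence[float]],
                       deltas: Sequence[float]) -> List[float]:
    """NeRF-style quadrature along one ray, applied to spectral samples.

    sigmas[i]  : volume density at sample i
    spectra[i] : spectral intensity s(x_i, d) sampled at fixed wavelengths
    deltas[i]  : distance between sample i and sample i + 1
    """
    n_bands = len(spectra[0])
    out = [0.0] * n_bands
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    for sigma, s, delta in zip(sigmas, spectra, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for b in range(n_bands):
            out[b] += weight * s[b]
        transmittance *= 1.0 - alpha
    return out


def project(spectrum: Sequence[float], filter_t: Sequence[float],
            sensor_q: Sequence[Sequence[float]], d_lambda: float) -> List[float]:
    """Map a rendered spectrum to color channels through one filter and the sensor curves."""
    return [sum(s * t * q for s, t, q in zip(spectrum, filter_t, q_c)) * d_lambda
            for q_c in sensor_q]


if __name__ == "__main__":
    # Two samples on one ray, three wavelength bands (hypothetical values).
    spectrum = composite_spectrum(sigmas=[0.5, 50.0],
                                  spectra=[[0.2, 0.4, 0.6], [0.9, 0.5, 0.1]],
                                  deltas=[1.0, 1.0])
    rgb = project(spectrum, filter_t=[0.9, 0.8, 0.7],
                  sensor_q=[[0.1, 0.2, 0.9], [0.2, 0.9, 0.2], [0.9, 0.2, 0.1]],
                  d_lambda=100.0)
    print(spectrum, rgb)
```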

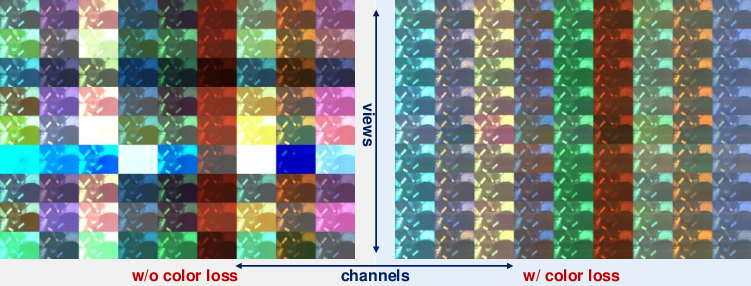

A key technical challenge is jointly optimizing the intrinsic and extrinsic camera parameters in an uncalibrated setting. The model parameterizes each camera rotation as an axis-angle vector and converts it to a rotation matrix with the Rodrigues formula, which keeps pose estimation end-to-end differentiable during training. The loss function combines a fidelity term (pixel-wise MSE between rendered and observed images) with a color loss that aligns the mean and standard deviation of the rendered and measured color distributions. The color loss is critical for consistency across views and spectral channels, because the fidelity term alone leaves the underdetermined problem poorly constrained.
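Here is a minimal sketch of the two ingredients named above, written in plain Python without autodiff. `rodrigues` maps an axis-angle vector to a rotation matrix via R = I + sin(θ) K + (1 − cos(θ)) K². `total_loss` adds an MSE fidelity term to a color-statistics term. The exact form of the color loss is an assumption: squared differences of per-channel means and population standard deviations. The weight `weight=0.1` is a hypothetical value, not one reported in the paper.

```python
import math
from statistics import fmean, pstdev
from typing import List, Sequence

Matrix = List[List[float]]


def rodrigues(r: Sequence[float]) -> Matrix:
    """Axis-angle vector r (unit axis * angle in radians) -> 3x3 rotation matrix.

    R = I + sin(theta) K + (1 - cos(theta)) K^2, where K is the skew-symmetric
    cross-product matrix of the unit axis k = r / theta.
    """
    theta = math.sqrt(sum(v * v for v in r))
    if theta < 1e-12:  # no rotation: avoid dividing by zero
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in r)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + c * K2[i][j] for j in range(3)]
            for i in range(3)]


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Pixel-wise mean squared error for one channel."""
    return fmean([(p - t) ** 2 for p, t in zip(pred, target)])


def color_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Assumed form: penalize mismatch in mean and std of one color channel."""
    return (fmean(pred) - fmean(target)) ** 2 + (pstdev(pred) - pstdev(target)) ** 2


def total_loss(pred_channels: Sequence[Sequence[float]],
               target_channels: Sequence[Sequence[float]],
               weight: float = 0.1) -> float:
    """Fidelity (MSE) plus a weighted color-statistics term, summed over channels."""
    pairs = list(zip(pred_channels, target_channels))
    fidelity = sum(mse(p, t) for p, t in pairs)
    color = sum(color_loss(p, t) for p, t in pairs)
    return fidelity + weight * color


if __name__ == "__main__":
    R = rodrigues([0.0, 0.0, math.pi / 2])  # 90 degrees about the z axis
    print([[round(x, 6) for x in row] for row in R])
    print(total_loss([[2.0, 3.0, 4.0]], [[1.0, 2.0, 3.0]]))
```

In an actual training loop these functions would be written with tensor operations so that gradients flow from the loss back to the axis-angle vectors, translations, and focal lengths.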

Experimental Results and Analysis

Experiments are conducted on real-world data acquired with the proposed SMLI system. The model is implemented in PyTorch and trained on an NVIDIA P100 GPU for 10,000 epochs, using Adam optimizers for the NeRF weights, camera poses, and focal lengths. The reconstructed output is a 9×9 array of RGB images, each corresponding to a unique subview and spectral filter. With three color channels behind each of the nine filters, this effectively covers 27 spectral channels.
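The focal lengths and poses optimized here enter the renderer through ray generation. The sketch below makes several assumptions for illustration: a NeRF-style pinhole convention with the camera looking along −z, the principal point at the image center, and a single shared focal length in pixels. The name `pixel_ray` is hypothetical as well. It shows only the forward arithmetic. A PyTorch implementation would apply the same operations to tensors so that the Adam updates can reach the focal length, rotation, and translation.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_ray(u: int, v: int, width: int, height: int, focal: float,
              rotation: Sequence[Sequence[float]],
              translation: Sequence[float]) -> Tuple[Vec3, Vec3]:
    """Return (origin, direction) in world coordinates for pixel (u, v)."""
    # Camera-frame direction through the pixel center; -z is the viewing axis,
    # +y points up, so image rows (v) map to negative y.
    d_cam = ((u + 0.5 - width / 2) / focal,
             -(v + 0.5 - height / 2) / focal,
             -1.0)
    # Rotate into the world frame with the (learnable) camera-to-world rotation.
    d_world = tuple(sum(rotation[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    # The camera center is the (learnable) translation.
    origin = tuple(float(x) for x in translation)
    return origin, d_world


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    origin, direction = pixel_ray(0, 0, width=2, height=2, focal=1.0,
                                  rotation=identity, translation=[1.0, 2.0, 3.0])
    print(origin, direction)  # (1.0, 2.0, 3.0) (-0.5, 0.5, -1.0)
```

Increasing the focal length narrows the ray fan: doubling `focal` halves the x and y components of every direction. This is why an error in the focal estimate shows up as a scale mismatch between rendered and observed subviews.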

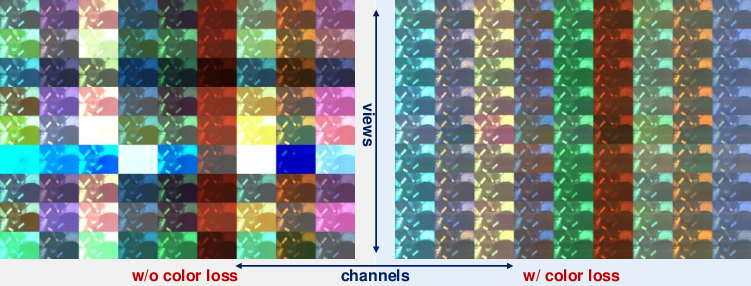

Figure 3: Left: Reconstructed multispectral light-field images without color loss. Right: With color loss, showing improved consistency across views and spectral channels.

The results demonstrate that the inclusion of the color loss significantly improves the consistency and fidelity of the reconstructed images, both across spatial views and spectral channels. The model achieves high spectral accuracy and preserves fine textural details, outperforming prior SMLI approaches that rely on narrow-band filtering or multi-shot acquisition. Notably, the system achieves these results in a single snapshot, with no requirement for pre-calibrated camera parameters or scene-specific registration.

Implications and Future Directions

BSNeRF represents a significant advance in the practical deployment of SMLI systems, enabling high-throughput, single-shot acquisition of high-dimensional light-field data. The joint optimization of camera parameters and the use of a color statistic prior make the approach robust to uncalibrated settings and diverse scenes. The method's ability to decouple broadband spectral information without sacrificing light efficiency or acquisition speed has direct implications for applications in computational photography, remote sensing, and biomedical imaging.

Theoretically, the work extends the NeRF framework to a broader class of inverse problems involving multiplexed, underdetermined measurements. The explicit modeling of spectral, spatial, and angular dimensions within a unified neural field opens avenues for further research in high-dimensional scene representation and rendering.

Future work could incorporate the temporal dimension, enabling dynamic plenoptic imaging and further expanding the applicability of the approach to video-rate multispectral light-field capture. Additionally, scaling the system to higher spatial and spectral resolutions, or integrating more advanced priors (e.g., physics-based constraints or learned spectral bases), could further enhance reconstruction quality and generalization.

Conclusion

BSNeRF provides a robust, self-supervised solution for snapshot multispectral light-field imaging, achieving high-fidelity reconstruction of spatial, angular, and spectral information from a single exposure. The approach overcomes the limitations of prior SMLI systems by maximizing light throughput and acquisition speed, while maintaining flexibility and generalizability through joint camera parameter optimization and color-statistic regularization. The results establish a new baseline for practical, high-dimensional plenoptic imaging and suggest promising directions for future research in neural scene representation and computational imaging.