- The paper proposes PILOT, which integrates human preference priors with contextual bandit feedback to enable efficient LLM routing under budget constraints.

- PILOT achieves 93% of GPT-4's performance at only 25% of its cost in multi-LLM routing and consistently outperforms bandit and supervised routing baselines.

- The study validates PILOT via extensive experiments on diverse tasks, highlighting its robustness, rapid adaptation, and low computational overhead.

Adaptive LLM Routing under Budget Constraints: A Technical Analysis

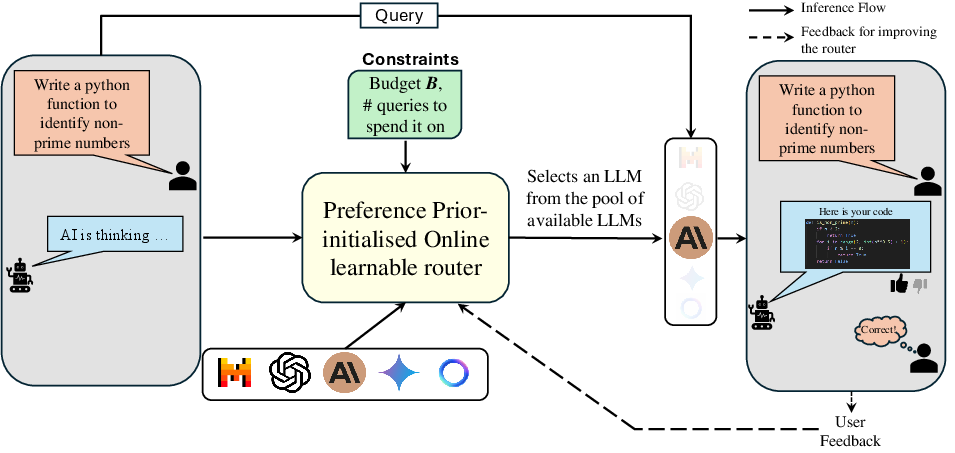

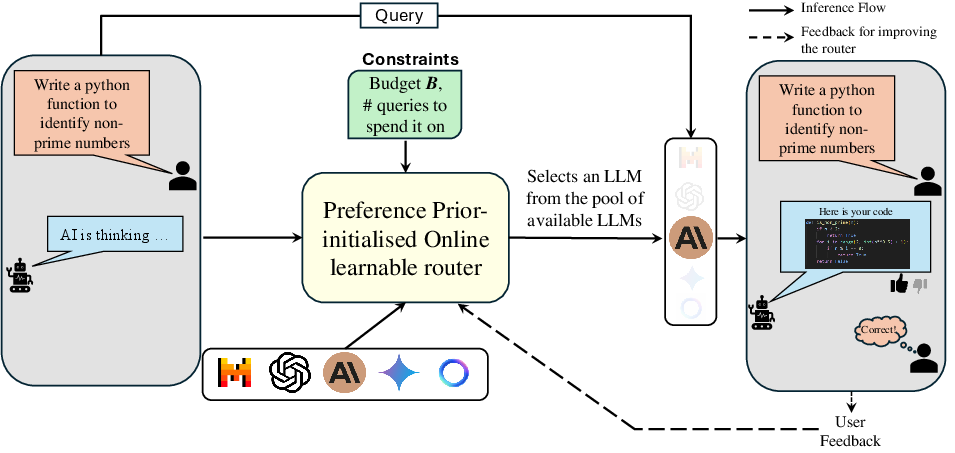

The paper addresses the challenge of deploying multiple LLMs in real-world systems where both performance and cost matter. The central problem is dynamic LLM routing: selecting the most suitable LLM for each incoming query from a pool of models with varying capabilities and costs. Prior work treats routing as a supervised learning problem, which requires labeled outcomes for every query-LLM pair. This work instead reformulates routing as a contextual bandit problem that learns only from bandit feedback (i.e., the user's evaluation of the selected model's response), and it enforces budget constraints through a separate online cost policy.

The proposed solution, PILOT, extends the LinUCB algorithm by incorporating human preference priors and an online cost policy. The approach consists of three main components:

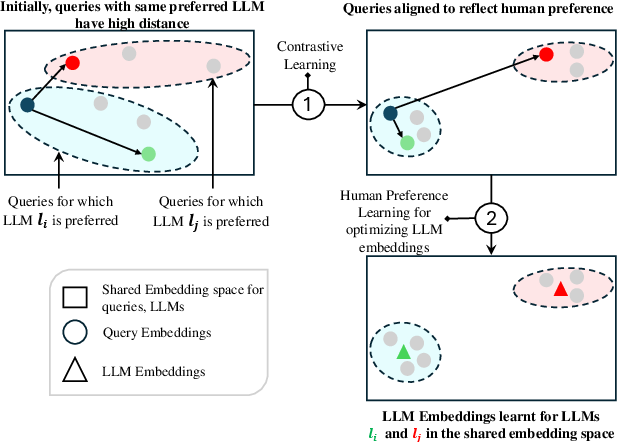

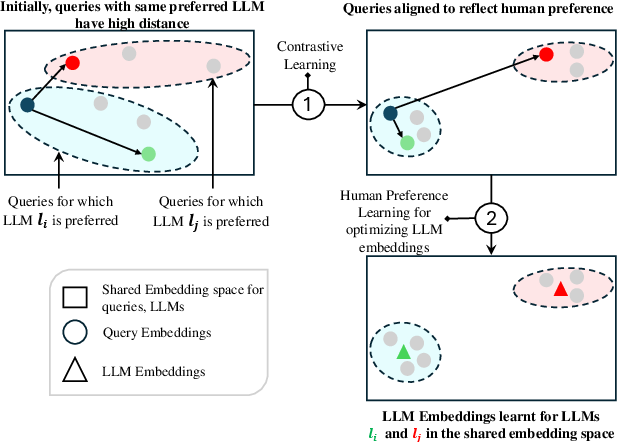

- Shared Embedding Space Pretraining: Queries and LLMs are embedded into a shared space, pretrained using human preference data. Query embeddings are projected via a learned linear transformation, and LLM embeddings are optimized to align with preferred responses.

- Online Bandit Feedback Adaptation: The router adapts LLM embeddings online using contextual bandit feedback. The expected reward for a query-LLM pair is modeled as the cosine similarity between their normalized embeddings. PILOT initializes the bandit algorithm with preference-based priors, theoretically achieving lower regret bounds than standard LinUCB/OFUL when the prior is close to the true reward vector.

- Budget-Constrained Routing via Online Cost Policy: The cost policy is formulated as an online multi-choice knapsack problem, using the ZCL algorithm to allocate budget across queries. The policy dynamically selects eligible LLMs based on estimated reward-to-cost ratios and current budget utilization, with binning to avoid underutilization in finite-horizon settings.
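The eligibility test in the cost policy can be illustrated with a minimal sketch. It assumes known bounds `lower` (L) and `upper` (U) on any LLM's reward-to-cost ratio and uses the standard ZCL threshold Ψ(z) = (Ue/L)^z (L/e), held at L for small z, where z is the fraction of the budget already spent. Choosing the highest-reward eligible LLM and falling back to the cheapest affordable one are assumptions, and the binning step is omitted; `Candidate`, `zcl_threshold`, and `select_llm` are hypothetical names, not PILOT's code.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    reward: float  # reward estimated by the bandit router for this query
    cost: float    # estimated cost of sending this query to the LLM


def zcl_threshold(z: float, lower: float, upper: float) -> float:
    """ZCL acceptance threshold Psi(z), where z in [0, 1] is the fraction of budget spent.

    `lower` and `upper` are assumed bounds on any candidate's reward-to-cost ratio.
    """
    c = 1.0 / (1.0 + math.log(upper / lower))
    if z <= c:
        return lower  # early on, accept anything at or above the minimum ratio
    # The threshold then rises exponentially, reaching `upper` when the budget is exhausted.
    return (upper * math.e / lower) ** z * (lower / math.e)


def select_llm(candidates: List[Candidate], spent: float, budget: float,
               lower: float, upper: float) -> Optional[Candidate]:
    remaining = budget - spent
    threshold = zcl_threshold(min(spent / budget, 1.0), lower, upper)
    affordable = [c for c in candidates if c.cost <= remaining]
    # Eligible LLMs clear the current reward-to-cost threshold.
    eligible = [c for c in affordable if c.reward / c.cost >= threshold]
    if eligible:
        return max(eligible, key=lambda c: c.reward)  # best estimated reward among eligible
    # Fallback (assumption): take the cheapest affordable LLM, or None if nothing fits.
    return min(affordable, key=lambda c: c.cost) if affordable else None
```

As the spent fraction grows, the threshold rises from L toward U, so the policy shifts from the strongest model toward cheaper models with better reward-to-cost ratios.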

Figure 1: Overview of the two-phase pretraining process for query and LLM embeddings using human preference data.
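The text does not spell out the pretraining objective. As a hedged illustration of the second phase only, the sketch below fits one embedding per LLM with a Bradley-Terry-style pairwise loss on cosine similarities. This is a swapped-in, assumed objective, not necessarily the one PILOT uses; the first-phase query projection is omitted, and query vectors are taken as already projected. All function names and hyperparameters (`scale`, `lr`, `epochs`) are hypothetical.

```python
import numpy as np


def cos_sim(q: np.ndarray, e: np.ndarray) -> float:
    return float(q @ e / (np.linalg.norm(q) * np.linalg.norm(e)))


def cos_grad(q_unit: np.ndarray, e: np.ndarray) -> np.ndarray:
    """Gradient of cos(q, e) with respect to e, for a unit-norm q."""
    n = np.linalg.norm(e)
    return q_unit / n - (q_unit @ e) * e / n**3


def train_llm_embeddings(queries, prefs, num_llms, epochs=300, lr=0.5, scale=5.0, seed=0):
    """Fit one embedding per LLM from (query_index, winner, loser) preference triples.

    `queries` is an (n, dim) array assumed to be already projected (phase 1 is omitted).
    The loss is a Bradley-Terry style -log sigmoid(scale * (cos_winner - cos_loser)).
    """
    queries = np.asarray(queries, dtype=float)
    rng = np.random.default_rng(seed)
    E = rng.normal(size=(num_llms, queries.shape[1]))
    E /= np.linalg.norm(E, axis=1, keepdims=True)  # start from unit vectors
    for _ in range(epochs):
        grad = np.zeros_like(E)
        for qi, w, l in prefs:
            q = queries[qi] / np.linalg.norm(queries[qi])
            margin = scale * (cos_sim(q, E[w]) - cos_sim(q, E[l]))
            # d(-log sigmoid(m))/dm = -(1 - sigmoid(m)); chain rule through the margin.
            g = -(1.0 - 1.0 / (1.0 + np.exp(-margin))) * scale
            grad[w] += g * cos_grad(q, E[w])
            grad[l] -= g * cos_grad(q, E[l])
        E -= lr * grad / len(prefs)
    return E


def route(query, E) -> int:
    """Pick the LLM whose embedding has the highest cosine similarity with the query."""
    return int(np.argmax([cos_sim(np.asarray(query, dtype=float), e) for e in E]))
```

On toy data where LLM 0 wins on queries near the first axis and LLM 1 wins near the second, the learned embeddings separate accordingly, and `route` sends each kind of query to its preferred model.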

Figure 2: Bandit router framework: the router receives user queries, cost constraints, and a model pool, adapting LLM selection based on user feedback.
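A minimal sketch of how a preference prior can seed a LinUCB-style router follows. It assumes one ridge regression per LLM whose penalty is centered on that LLM's pretrained embedding θ0 (A = λI, b = λθ0), so the initial estimate equals the prior. It uses a plain inner product with the unit-normalized query as a linear stand-in for the cosine-similarity reward. `PriorLinUCB`, `lam`, and `alpha` are hypothetical names, not PILOT's implementation.

```python
import numpy as np


class PriorLinUCB:
    """Disjoint LinUCB whose ridge penalty is centered on per-LLM prior embeddings."""

    def __init__(self, priors, lam=1.0, alpha=0.5):
        priors = np.asarray(priors, dtype=float)  # shape (num_llms, dim)
        self.num_arms, self.dim = priors.shape
        self.alpha = alpha
        # A = lam * I and b = lam * theta0 give an initial estimate theta = A^{-1} b = theta0.
        self.A = np.stack([lam * np.eye(self.dim) for _ in range(self.num_arms)])
        self.b = lam * priors.copy()

    @staticmethod
    def _normalize(x):
        x = np.asarray(x, dtype=float)
        return x / np.linalg.norm(x)

    def theta(self, arm):
        return np.linalg.solve(self.A[arm], self.b[arm])

    def select(self, query) -> int:
        x = self._normalize(query)
        scores = []
        for a in range(self.num_arms):
            mean = x @ self.theta(a)  # estimated reward
            bonus = self.alpha * np.sqrt(x @ np.linalg.solve(self.A[a], x))  # exploration term
            scores.append(mean + bonus)
        return int(np.argmax(scores))

    def update(self, query, arm, reward):
        """Incorporate bandit feedback for the LLM that was actually used."""
        x = self._normalize(query)
        self.A[arm] += np.outer(x, x)
        self.b[arm] += reward * x
```

A larger `lam` makes the router trust the prior for longer; as `lam` approaches zero the prior's influence vanishes and the estimate reduces to (nearly) unregularized least squares. In the example below the router first follows the prior and then switches LLMs after repeated zero rewards.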

Experimental Setup

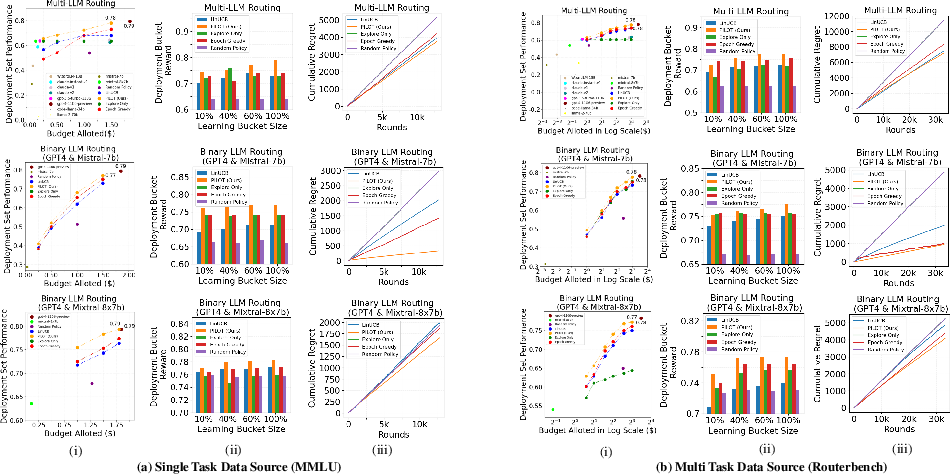

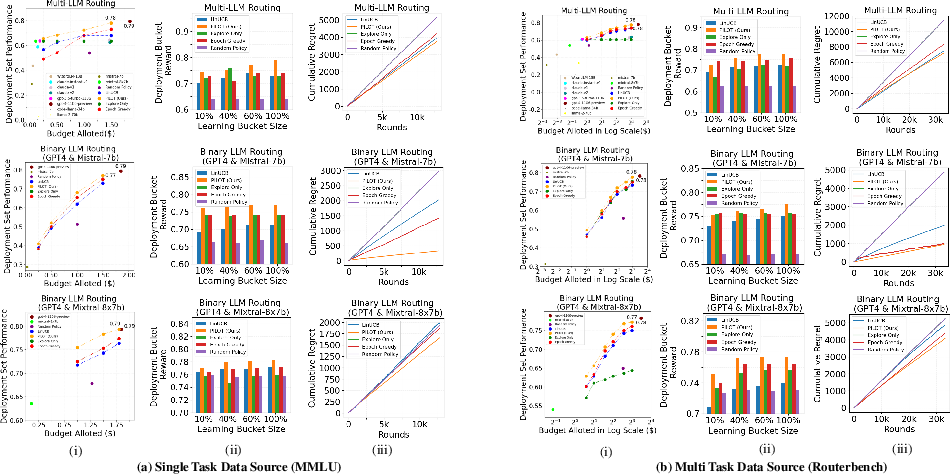

Experiments are conducted on the Routerbench dataset, which includes 36,497 samples across 64 tasks and 11 LLMs (both open-source and proprietary). Online learning is simulated by splitting the data into tuning, learning, and deployment buckets. Baselines include all-to-one routers (which send every query to a single LLM), HybridLLM (a supervised router), and several contextual bandit algorithms (LinUCB, Epoch-Greedy, Explore Only, Random Policy). The same cost policy is applied to all baselines for a fair comparison.

Results

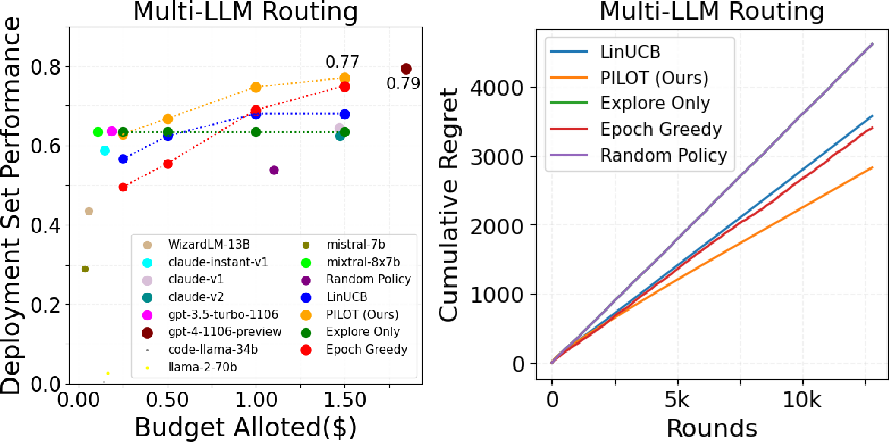

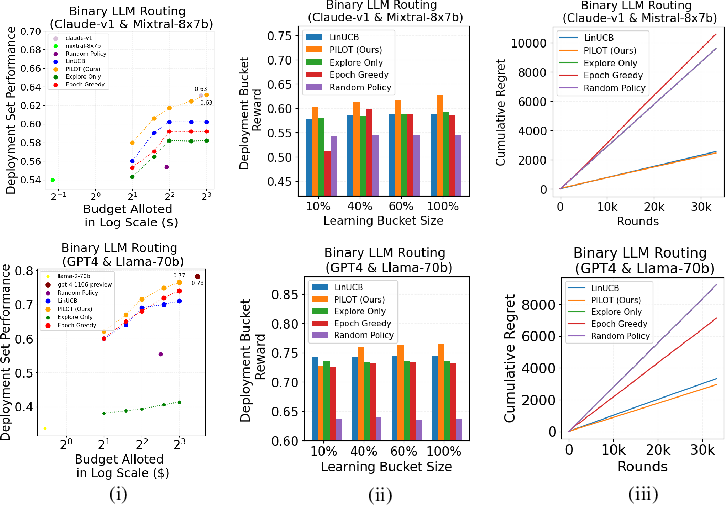

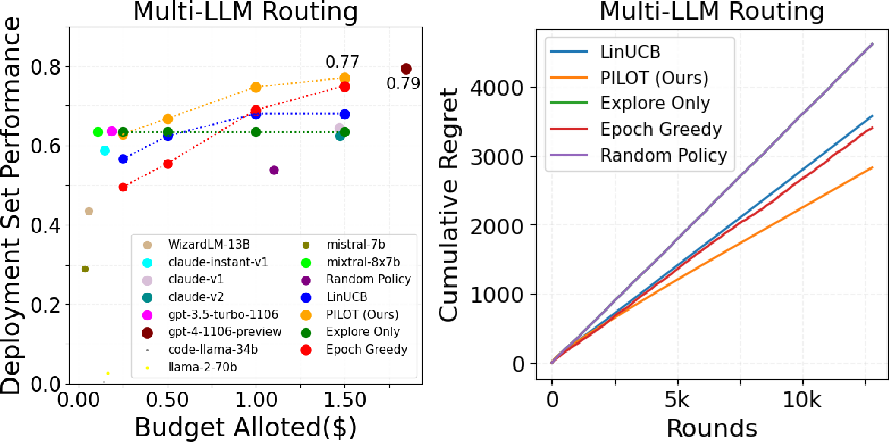

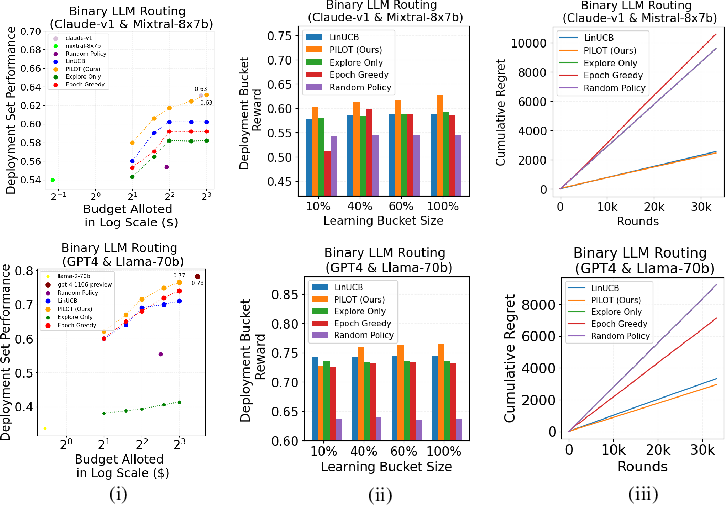

PILOT achieves 93% of GPT-4's performance at only 25% of its cost in multi-LLM routing, and 86% of GPT-4's performance at 27% of its cost in single-task routing. Across all cost budgets and learning bucket sizes, PILOT consistently outperforms bandit and supervised baselines in both deployment set performance and cumulative regret.
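Normalizing GPT-4 to 1 on both performance and cost, the two headline results translate into performance per unit cost as follows (a derived reading of the reported figures, not a number stated in the paper):

$$
\frac{0.93}{0.25} = 3.72 \quad \text{(multi-LLM routing)}, \qquad \frac{0.86}{0.27} \approx 3.19 \quad \text{(single-task routing)}
$$

That is, roughly 3.2 to 3.7 times GPT-4's performance per unit cost.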

Figure 3: Performance vs cost, learning bucket size, and cumulative regret for PILOT and baselines on single-task and multi-task settings.
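Cumulative regret sums, over the query stream, the gap between the best achievable reward and the reward of the LLM actually chosen. A minimal sketch, assuming regret is measured against a per-query oracle and that the benchmark provides every LLM's reward for each query (which is what allows regret to be computed offline); `cumulative_regret` is a hypothetical helper.

```python
from itertools import accumulate
from typing import List, Sequence


def cumulative_regret(reward_table: Sequence[Sequence[float]],
                      chosen: Sequence[int]) -> List[float]:
    """Running sum of (best reward on query t) - (reward of the LLM chosen at t).

    reward_table[t][a] is the reward LLM a would have earned on query t.
    """
    per_step = [max(rewards) - rewards[arm] for rewards, arm in zip(reward_table, chosen)]
    return list(accumulate(per_step))
```

A router that keeps picking the per-query best LLM produces a flat curve; every suboptimal choice adds a step of height equal to the missed reward.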

Qualitative Routing Analysis

PILOT demonstrates task-aware routing: for complex reasoning tasks (MMLU, ARC Challenge), it routes ~90% of queries to GPT-4; for coding (MBPP), Claude models handle 28% of queries; for math (GSM8K), Claude-v1 is selected for 94% of queries, reflecting cost-effective exploitation of model strengths.

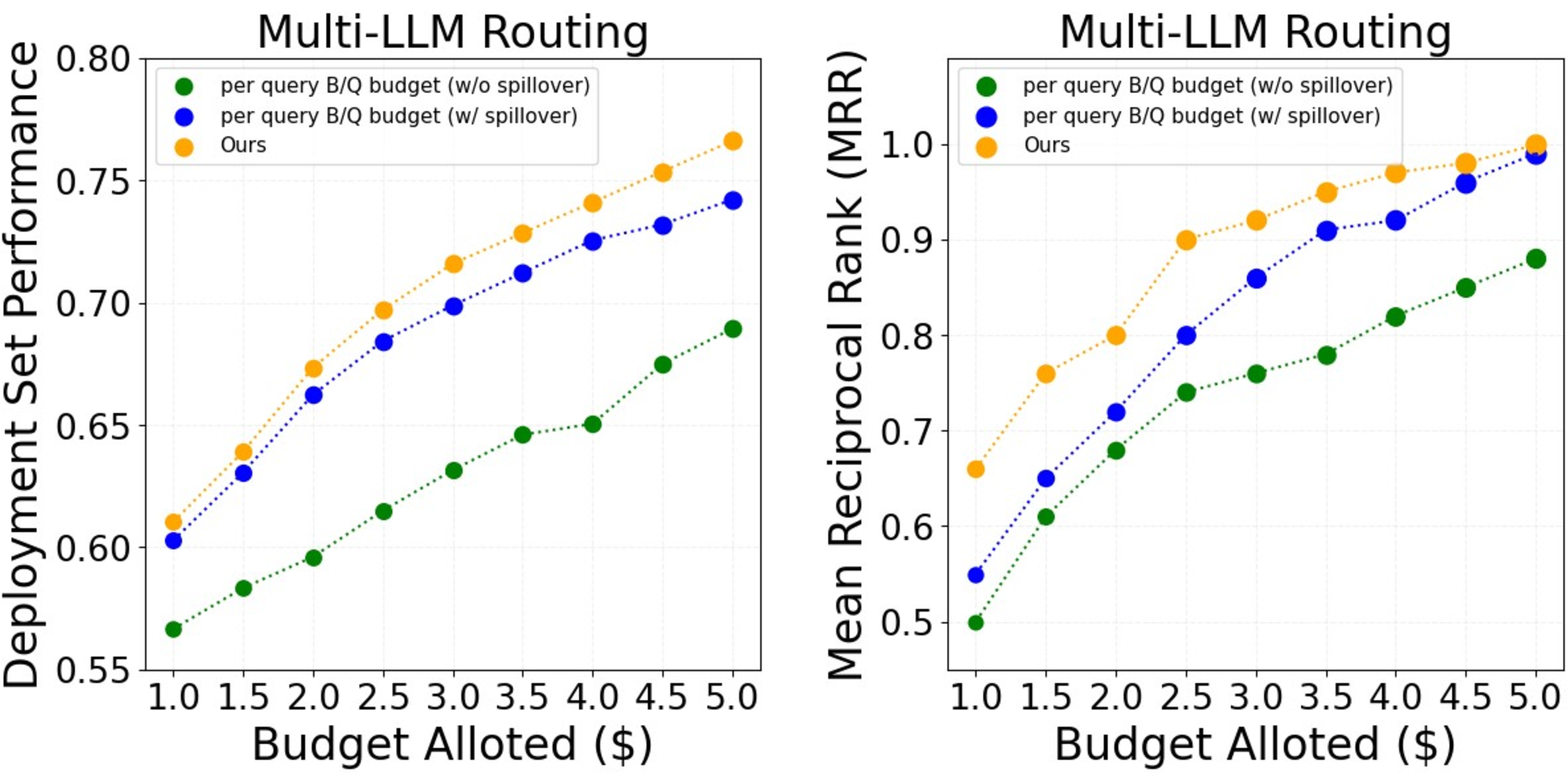

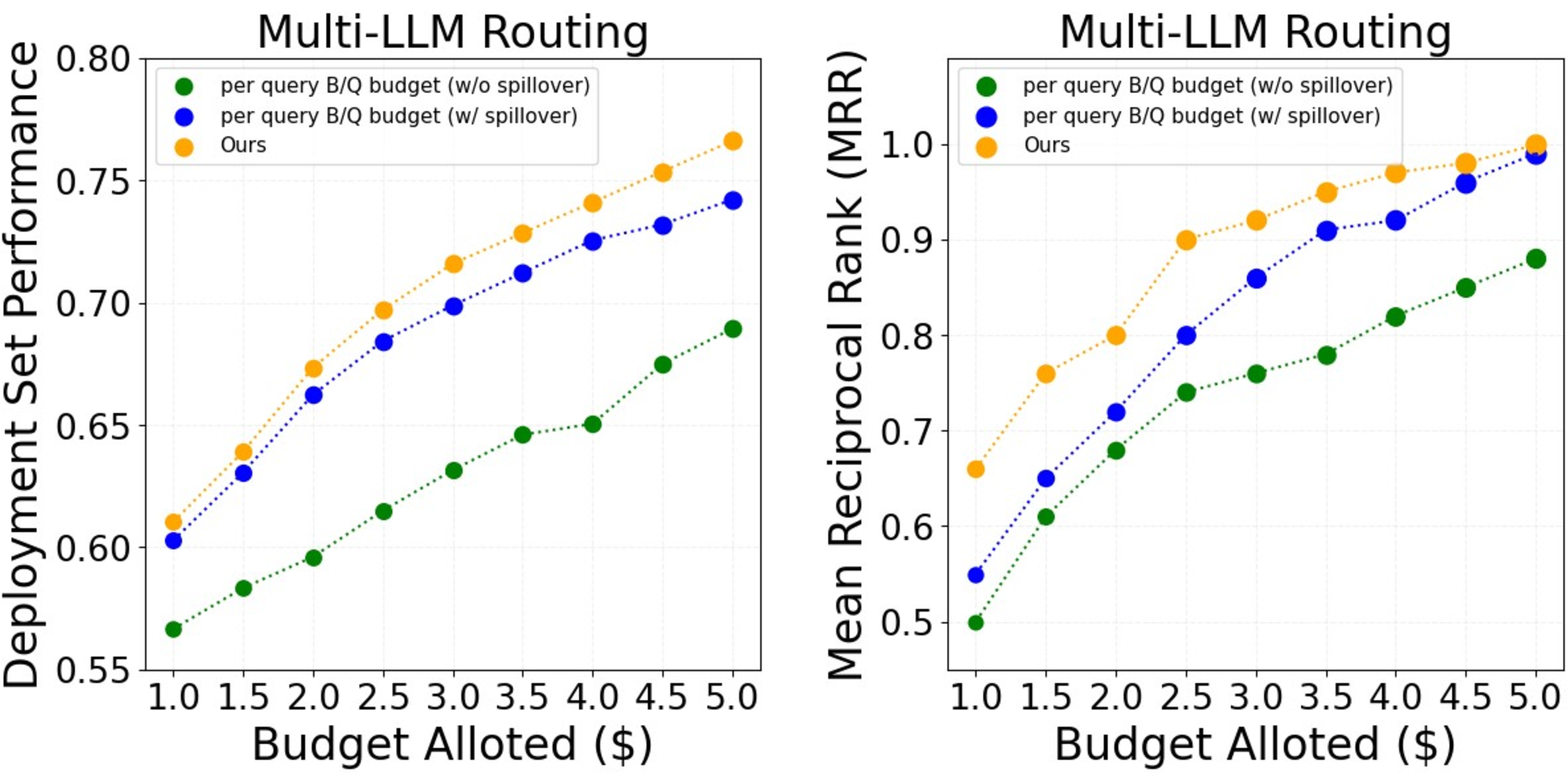

Cost Policy Evaluation

The online cost policy outperforms simple per-query budget allocation and even an offline policy with perfect hindsight, as shown by higher mean reciprocal rank and deployment set performance.

Figure 4: Comparison of cost policies: mean reciprocal rank and performance across budgets.
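The text does not state what is ranked when computing mean reciprocal rank. A minimal sketch under one plausible assumption: in each budget setting the cost policies are ranked by deployment performance (rank 1 is best), and a policy's MRR is the average of 1/rank over settings. The helper and the policy names in the example are hypothetical.

```python
from typing import Dict, List


def mean_reciprocal_rank(scores_by_setting: List[Dict[str, float]]) -> Dict[str, float]:
    """Average of 1/rank per policy, ranking policies by score (higher is better) in each setting."""
    totals: Dict[str, float] = {}
    for scores in scores_by_setting:
        # Ties keep dictionary order (an arbitrary choice for this sketch).
        ranked = sorted(scores, key=scores.get, reverse=True)
        for rank, policy in enumerate(ranked, start=1):
            totals[policy] = totals.get(policy, 0.0) + 1.0 / rank
    n = len(scores_by_setting)
    return {policy: total / n for policy, total in totals.items()}
```

A policy that is best in every setting scores 1.0; lower values indicate that it is often outranked.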

Computational Overhead

PILOT's routing time is negligible compared to LLM inference: 0.065–0.239s for routing vs. 2.5s for GPT-4 inference, ensuring minimal latency impact.
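Relative to a single GPT-4 call, the reported routing times correspond to the following overhead (simple arithmetic on the figures above):

$$
\frac{0.065\ \text{s}}{2.5\ \text{s}} = 2.6\%, \qquad \frac{0.239\ \text{s}}{2.5\ \text{s}} \approx 9.6\%
$$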

Embedding Model Sensitivity

PILOT maintains superior performance over baselines when using alternative embedding models (Instructor-XL), indicating robustness to the choice of embedder.

Figure 5: Sensitivity analysis of PILOT's performance with different embedding models.

Binary LLM Routing and Adaptability

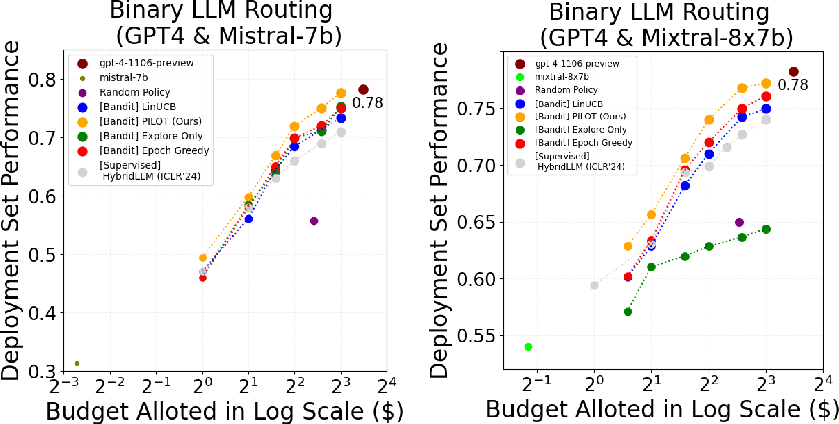

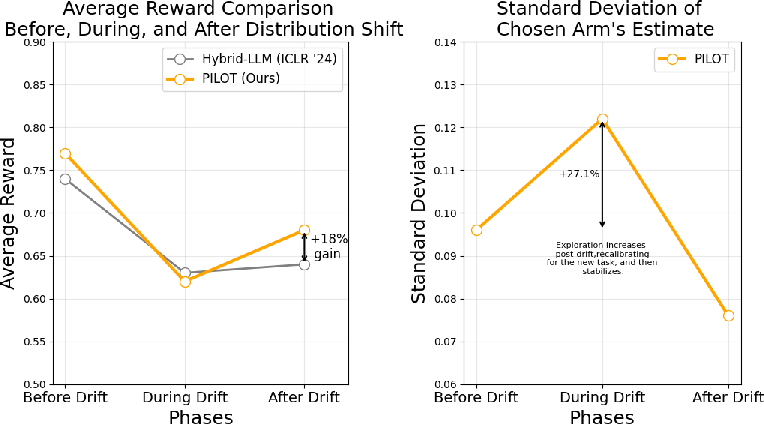

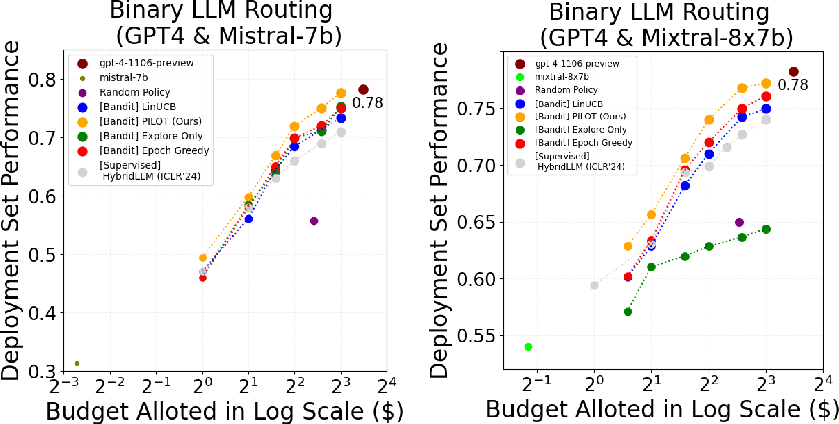

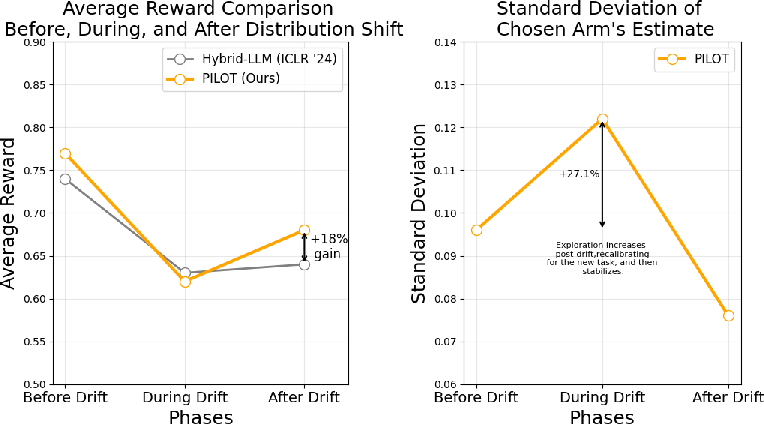

PILOT matches or surpasses HybridLLM in binary routing (GPT-4 vs. Mistral-7b/Mixtral-8x7b), despite requiring only bandit feedback. It adapts rapidly to shifts in the query distribution, exploring more during the drift and stabilizing once it ends.

Figure 6: Performance vs cost for PILOT and HybridLLM in binary routing scenarios.

Figure 7: Binary LLM routing evaluation: performance, learning bucket size, and cumulative regret for different LLM pairs.

Theoretical Implications

The preference-prior informed initialization in PILOT is shown to yield lower cumulative regret than standard bandit algorithms when the prior is close to the true reward vector. This provides a formal justification for leveraging human preference data in contextual bandit settings for LLM routing.
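One standard way to see why a good prior helps is sketched below. It assumes the prior θ0 enters as the center of the ridge penalty in a LinUCB/OFUL-style estimator; this is an illustration, not the paper's theorem. With contexts x_s, rewards r_s, and R-sub-Gaussian noise, the estimate and its confidence ellipsoid are:

$$
\hat\theta_t = \arg\min_{\theta} \sum_{s<t} \left(r_s - x_s^\top \theta\right)^2 + \lambda \lVert \theta - \theta_0 \rVert_2^2 = V_t^{-1}\Big(\lambda\theta_0 + \sum_{s<t} r_s x_s\Big), \qquad V_t = \lambda I + \sum_{s<t} x_s x_s^\top,
$$

$$
\lVert \hat\theta_t - \theta^* \rVert_{V_t} \le R\sqrt{2\log\frac{\det(V_t)^{1/2}\det(\lambda I)^{-1/2}}{\delta}} + \sqrt{\lambda}\,\lVert \theta^* - \theta_0 \rVert_2 \quad \text{for all } t, \text{ with probability at least } 1-\delta.
$$

The standard OFUL radius has a bound on ‖θ*‖ in place of ‖θ* − θ0‖. A prior close to θ* therefore shrinks the confidence ellipsoid, and the regret bound, which scales with this radius, shrinks accordingly.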

Practical Implications and Future Directions

PILOT enables adaptive, cost-efficient LLM deployment in dynamic environments while requiring only partial (bandit) supervision and minimal annotation. Decoupling bandit learning from the cost policy allows robust, user-controllable budget management. Limitations include the absence of budget constraints during online learning and the focus on single-turn queries; future work should address budget-aware online learning and multi-turn conversational routing.

Conclusion

The paper presents a principled, empirically validated approach to adaptive LLM routing under budget constraints, combining preference-informed contextual bandit learning with an efficient online cost policy. PILOT approaches GPT-4's performance at a fraction of its cost, adapts to evolving query distributions, and is robust to the choice of embedding model, making it suitable for practical LLM deployment in cost-sensitive, dynamic settings.