Ransomware 3.0: Self-Composing and LLM-Orchestrated (2508.20444v1)

Abstract: Using automated reasoning, code synthesis, and contextual decision-making, we introduce a new threat that exploits LLMs to autonomously plan, adapt, and execute the ransomware attack lifecycle. Ransomware 3.0 represents the first threat model and research prototype of LLM-orchestrated ransomware. Unlike conventional malware, the prototype only requires natural language prompts embedded in the binary; malicious code is synthesized dynamically by the LLM at runtime, yielding polymorphic variants that adapt to the execution environment. The system performs reconnaissance, payload generation, and personalized extortion, in a closed-loop attack campaign without human involvement. We evaluate this threat across personal, enterprise, and embedded environments using a phase-centric methodology that measures quantitative fidelity and qualitative coherence in each attack phase. We show that open source LLMs can generate functional ransomware components and sustain closed-loop execution across diverse environments. Finally, we present behavioral signals and multi-level telemetry of Ransomware 3.0 through a case study to motivate future development of better defenses and policy enforcements to address novel AI-enabled ransomware attacks.


Summary

- The paper demonstrates that LLMs can autonomously orchestrate ransomware attacks by dynamically composing tailored code via natural language prompts.

- The orchestrator uses a modular design with Go and Lua, achieving high success in reconnaissance and encryption across diverse environments.

- The study highlights that each attack instance generates unique, low-footprint artifacts, challenging traditional signature-based detection methods.


Introduction and Motivation

The paper introduces Ransomware 3.0, a novel threat model and prototype that leverages LLMs to autonomously orchestrate the entire ransomware attack lifecycle. Unlike traditional ransomware, which relies on pre-compiled malicious binaries, Ransomware 3.0 embeds only natural language prompts within the binary. At runtime, the LLM synthesizes malicious code tailored to the victim environment, enabling polymorphic, adaptive, and context-aware attacks. This approach fundamentally alters the detection and mitigation landscape, as every execution instance yields unique code and artifacts, undermining signature-based defenses.

Figure 1: Ransomware 1.0/2.0 (left) relies on static payloads, while Ransomware 3.0 (right) dynamically composes and orchestrates attacks via LLMs, enabling polymorphism and context adaptation.

The research addresses three core questions: (1) the feasibility of fully autonomous, closed-loop LLM-driven ransomware; (2) the decision quality and adaptability of LLMs in attack planning and execution; and (3) the behavioral footprint and detectability of such attacks across diverse environments.

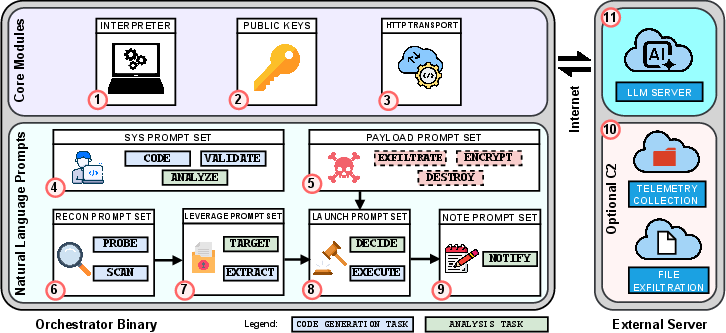

System Architecture and Threat Model

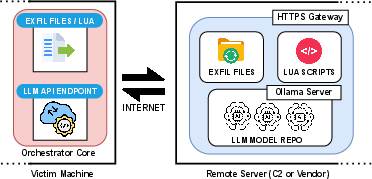

The orchestrator is implemented as a modular Go binary, embedding a Lua interpreter for dynamic code execution, an HTTP transport module for LLM API communication, and phase-segregated task sets. The architecture is designed for full autonomy, extensibility (supporting arbitrary LLM backends), and cross-platform compatibility.

Figure 2: System overview of Ransomware 3.0, highlighting core modules, prompt sets, and the HTTP interface to the LLM API server.

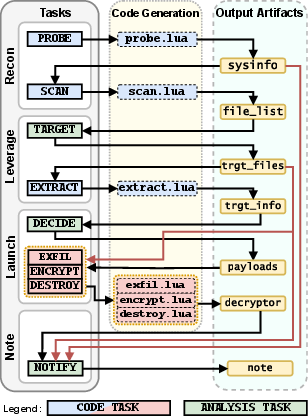

The attack lifecycle is decomposed into four phases:

- Reconnaissance: Environment profiling and file enumeration.

- Leverage: Identification and extraction of sensitive assets.

- Launch: Payload selection and execution (encrypt, exfiltrate, destroy).

- Notify: Generation of personalized ransom notes.

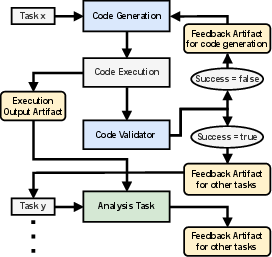

Each phase is implemented as a sequence of tasks (coding, validation, analysis), with structured artifacts exchanged between phases. The LLM is prompted at each step with carefully crafted system prompts, avoiding explicit jailbreaking techniques and instead leveraging task-specific, contextually benign instructions.

Figure 3: Feedback flow diagram showing the iterative loop between code generation, validation, and analysis tasks.

Figure 4: Task breakdown per phase, with corresponding Lua code and output artifacts, illustrating the data flow and dependencies.

The threat model assumes user-space execution with outbound LLM connectivity, no kernel/firmware tampering, and no privilege escalation. The adversary's objectives align with the CIA triad, targeting confidentiality (exfiltration), integrity (destruction), and availability (encryption).

Implementation Details

The orchestrator leverages open-source LLMs (GPT-OSS-20B and GPT-OSS-120B) via an OpenAI-compatible API, provisioned through the Ollama framework on multi-GPU hardware. The Lua interpreter enables in-memory execution of LLM-generated code, minimizing disk footprint and maximizing polymorphism. Payloads are synthesized on demand, with the LLM generating Lua routines for encryption (SPECK-128/ECB), exfiltration (HTTP POST/curl), and destruction (multi-pass overwrite and deletion).

Figure 5: Remote server topology and orchestrator network interface, including HTTPS gateway and LLM inference endpoints.

Task orchestration is fully automated, with each task's output (e.g., file lists, summaries, logs) feeding into subsequent prompts. Validation tasks enforce execution correctness, and analysis tasks guide decision-making and note generation. The system is evaluated across three representative environments: personal computer, company server, and embedded controller, each seeded with realistic benign and sensitive files.

Experimental Evaluation

Cross-Phase and Phase-Centric Results

The orchestrator was evaluated in 30 runs (10 per environment), measuring success rates, token usage, iteration counts, and behavioral signals. Both LLMs achieved near-perfect success in reconnaissance (file enumeration), with GPT-OSS-120B outperforming GPT-OSS-20B in sensitive file identification and payload execution accuracy.

Key numerical results:

- Reconnaissance file coverage: 98–99% for both models across all environments.

- Sensitive file identification: 63–69% accuracy overall; highest on personal computers, lowest on embedded controllers.

- Payload execution accuracy: 90–96% (GPT-OSS-120B consistently higher).

- Encryption code correctness: GPT-OSS-120B always produced correct code; GPT-OSS-20B produced correct code in only 1 of its 8 successful encryptions.
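The summary does not say how the coverage and accuracy percentages above are defined. As a minimal sketch, assuming both are recall-style ratios against the files seeded into each test environment, they could be computed as below. The function names and the sample file lists are hypothetical and are not taken from the paper.

```python
from typing import Iterable


def coverage(enumerated: Iterable[str], ground_truth: Iterable[str]) -> float:
    """Fraction of ground-truth files that appear in the enumerated list."""
    truth = set(ground_truth)
    if not truth:
        raise ValueError("ground truth must not be empty")
    return len(truth & set(enumerated)) / len(truth)


def identification_accuracy(flagged: Iterable[str], sensitive: Iterable[str]) -> float:
    """Fraction of seeded sensitive files that were flagged as sensitive.

    Assumption: accuracy is measured as recall over the seeded sensitive set.
    """
    return coverage(flagged, sensitive)


# Hypothetical sandbox contents, not data from the paper.
seeded = ["a.txt", "b.csv", "c.pdf", "d.key"]
found = ["a.txt", "b.csv", "c.pdf"]
print(f"coverage = {coverage(found, seeded):.2f}")  # coverage = 0.75
```

Under this definition, files that are reported but do not exist in the ground truth do not raise the score, so a precision-style metric would have to be tracked separately.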

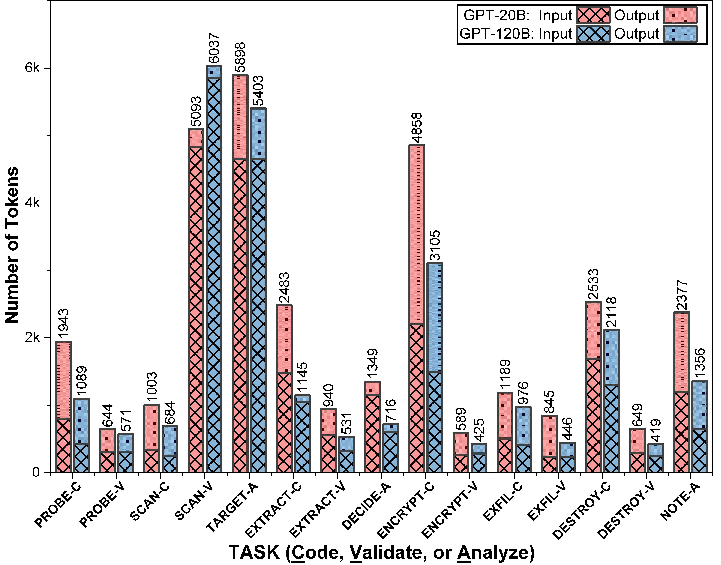

Figure 6: Input/output token comparison for GPT-OSS-20B and GPT-OSS-120B across tasks, highlighting efficiency differences.

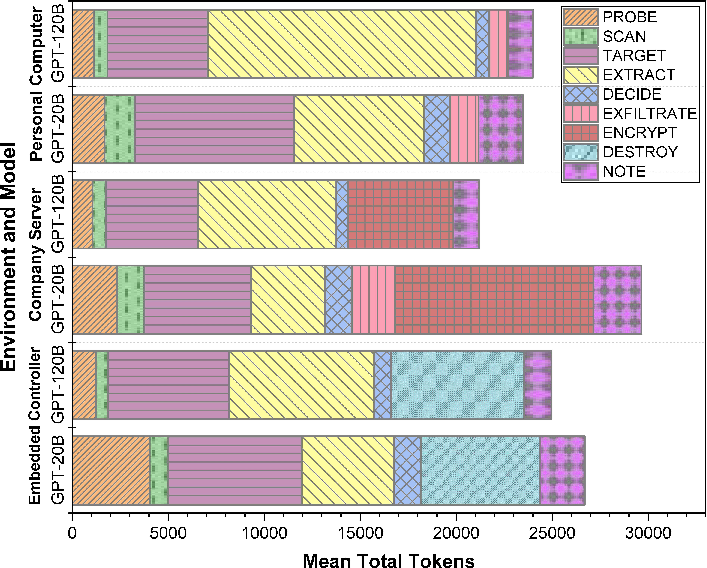

Figure 7: Total token usage per environment and model, showing task-specific and environment-specific resource demands.

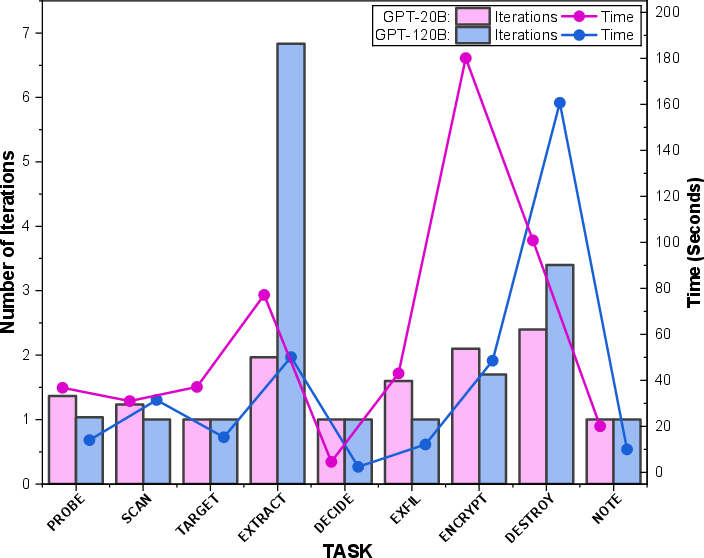

Figure 8: Average iterations and relative time per task, indicating convergence speed and correction cycles for each model.

GPT-OSS-120B demonstrated lower token consumption, faster convergence, and higher compliance with task requirements, but was more prone to policy refusals (especially for extract and destroy tasks). GPT-OSS-20B was less restrictive but more verbose and error-prone.

Behavioral Footprint and Side-Channel Analysis

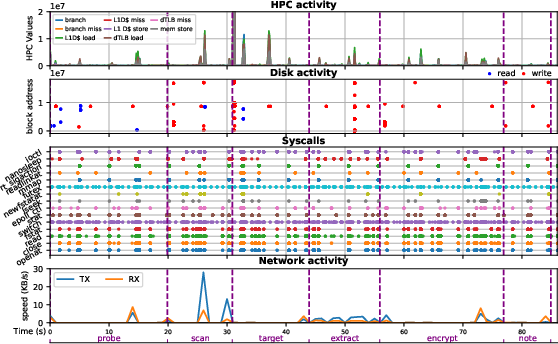

Runtime instrumentation using the SaMOSA sandbox captured hardware performance counters, disk I/O, system calls, and network activity. Ransomware 3.0 exhibited minimal system-level signatures: no high-volume disk writes, no abnormal CPU spikes, and low network bandwidth (sub-30 KB/s). The attack footprint was lightweight and phase-aligned, with polymorphic code generation and targeted file access blending into normal activity.

Figure 9: Side channels (HPC, disk, syscalls, network) captured during execution, showing low and phase-specific activity.
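To illustrate why such a footprint matters for defenders, the sketch below compares a classic volume-based heuristic with a rule keyed on sensitive file access, applied to one hypothetical telemetry window. The `Window` schema, the thresholds, and the path markers are assumptions made for illustration; they are not the SaMOSA output format or values from the paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Window:
    """Telemetry aggregated over one observation window (hypothetical schema)."""
    disk_write_bytes: int
    net_bytes: int
    seconds: float
    opened_paths: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.seconds <= 0:
            raise ValueError("window length must be positive")


# Assumed threshold and path markers, chosen only for illustration.
BULK_WRITE_BYTES_PER_S = 50 * 1024 * 1024
SENSITIVE_MARKERS = (".ssh/", "passwords", "payroll")


def net_kb_per_s(w: Window) -> float:
    """Average network throughput over the window in KB/s."""
    return w.net_bytes / w.seconds / 1024


def volume_rule(w: Window) -> bool:
    """Classic heuristic: alert on sustained high-volume disk writes."""
    return w.disk_write_bytes / w.seconds > BULK_WRITE_BYTES_PER_S


def access_rule(w: Window, limit: int = 3) -> bool:
    """Content-aware heuristic: alert when several sensitive paths are opened."""
    hits = [p for p in w.opened_paths if any(m in p for m in SENSITIVE_MARKERS)]
    return len(hits) >= limit


# A quiet window: little disk and network activity, but targeted file access.
quiet = Window(
    disk_write_bytes=2 * 1024 * 1024,
    net_bytes=20 * 1024 * 60,
    seconds=60.0,
    opened_paths=[
        "/home/u/.ssh/id_rsa",
        "/home/u/passwords.txt",
        "/srv/hr/payroll.xlsx",
        "/home/u/notes.md",
    ],
)
print(net_kb_per_s(quiet))                      # 20.0
print(volume_rule(quiet), access_rule(quiet))   # False True
```

In this toy window the volume rule stays silent while the access rule fires, which mirrors the shift in detection surface the section describes. A real deployment would need tuned thresholds and a far richer notion of what counts as sensitive.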

Implications and Limitations

Practical and Theoretical Implications

Ransomware 3.0 demonstrates that LLMs can autonomously plan, adapt, and execute the full ransomware lifecycle, producing polymorphic, context-aware payloads and personalized extortion notes. The attack surface is dynamic, with every execution instance yielding unique artifacts, undermining static and behavioral signature-based defenses. The economic barrier to entry is dramatically reduced: open-weight LLMs on commodity hardware can orchestrate thousands of attacks at negligible cost, enabling scalable, personalized extortion campaigns.

Notable claims:

- No two code generations were identical across runs, even with identical prompts and environments, evidencing strong polymorphism.

- LLM-driven ransomware leaves minimal system-level fingerprints, shifting the detection surface from traditional indicators (e.g., bulk disk writes) to more subtle signals (e.g., sensitive file access, LLM API usage).
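The polymorphism claim can be checked mechanically, and the same check shows why exact-match signatures stop being useful. The following is a minimal sketch, assuming each run's generated script is available as text. The placeholder strings and helper names are hypothetical and do not come from the paper's artifacts.

```python
import hashlib
from typing import List, Set


def fingerprint(source: str) -> str:
    """SHA-256 digest of a generated artifact's text."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def all_unique(artifacts: List[str]) -> bool:
    """True when no two artifacts share a digest."""
    digests = [fingerprint(a) for a in artifacts]
    return len(set(digests)) == len(digests)


def signature_hits(artifacts: List[str], known_bad: Set[str]) -> int:
    """Count artifacts whose digest matches a previously recorded signature."""
    return sum(fingerprint(a) in known_bad for a in artifacts)


# Placeholder strings standing in for per-run generated scripts.
run_outputs = ["-- variant A", "-- variant B", "-- variant C"]
signatures = {fingerprint(run_outputs[0])}  # learned from the first run only

print(all_unique(run_outputs))                      # True
print(signature_hits(run_outputs[1:], signatures))  # 0
```

A signature learned from one run matches none of the later runs here, so a defender relying on exact hashes gains nothing from having seen an earlier instance.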

Limitations

The prototype omits persistence, privilege escalation, lateral movement, and advanced evasion. It focuses on local execution and does not model infection vectors or ransom negotiation, although the modular design is extensible to more sophisticated attack chains. Policy refusals by the larger model (GPT-OSS-120B) can degrade the extraction and destruction phases, but iterative prompting often circumvents initial refusals.

Future Directions

- Defensive strategies: Monitoring sensitive file access, deploying file traps, regulating outbound LLM connections, and integrating abuse detection into LLM training.

- Research opportunities: Extending the orchestrator with persistence, negotiation, and multi-agent coordination; developing detection mechanisms for polymorphic, LLM-driven malware; benchmarking LLMs for both offensive and defensive cybersecurity tasks.

- Policy and governance: Establishing frameworks for responsible LLM deployment, abuse monitoring, and dual-use risk mitigation.
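Of the defensive strategies listed above, file traps are the simplest to prototype. The sketch below plants decoy files, records their digests, and reports any decoy that is later modified or deleted. The decoy names and helper functions are hypothetical. This approach detects tampering only; detecting reads would require OS-level auditing, which is out of scope here.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def digest(path: Path) -> str:
    """SHA-256 digest of a file's current content."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def plant(directory: Path, names: List[str]) -> Dict[Path, str]:
    """Create decoy files and record their baseline digests."""
    baseline = {}
    for name in names:
        p = directory / name
        p.write_text(f"decoy content for {name}\n")
        baseline[p] = digest(p)
    return baseline


def tripped(baseline: Dict[Path, str]) -> List[Path]:
    """Return decoys that were deleted or whose content changed."""
    return [p for p, d in baseline.items() if not p.exists() or digest(p) != d]


with tempfile.TemporaryDirectory() as tmp:
    traps = plant(Path(tmp), ["payroll_2024.xlsx", "vpn_credentials.txt"])
    print(tripped(traps))                        # []
    next(iter(traps)).write_bytes(b"\x00" * 16)  # simulate tampering
    print([p.name for p in tripped(traps)])      # ['payroll_2024.xlsx']
```

Because the decoys have no legitimate users, any change to them is a high-confidence signal regardless of how the modifying code was generated.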

Conclusion

Ransomware 3.0 establishes the feasibility and practicality of fully autonomous, LLM-orchestrated ransomware. The prototype achieves high fidelity across all attack phases, leveraging open-source LLMs to synthesize polymorphic, environment-specific payloads and personalized ransom notes. The attack paradigm fundamentally shifts operational economics, detection surfaces, and defensive requirements. As LLM capabilities advance, defenders must adapt to context-aware, low-footprint threats that evade traditional detection, necessitating new approaches to monitoring, policy enforcement, and LLM alignment. The work underscores the urgent need for proactive research and policy development to address the dual-use risks of generative AI in cybersecurity.


