- The paper introduces the M2N2 algorithm, which dynamically evolves merging boundaries and preserves model diversity through natural competition.

- It employs spherical linear interpolation (SLERP) and attraction-based mate selection to efficiently combine model parameters across different domains.

- Experimental results demonstrate significant improvements in accuracy and efficiency for tasks like MNIST classification, LLM merging, and image generation over CMA-ES baselines.

Competition and Attraction Improve Model Fusion: An Expert Analysis

Introduction and Motivation

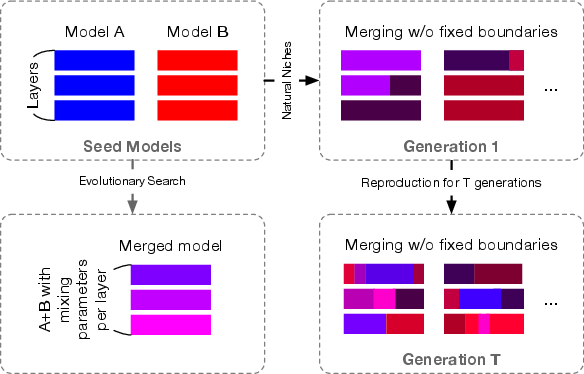

The proliferation of open-source generative models has led to a landscape rich with specialized variants, each fine-tuned for distinct tasks or domains. Model merging, the process of integrating multiple models into a single entity, offers a mechanism to consolidate this distributed expertise. However, prior approaches have been constrained by manual partitioning of model parameters and limited diversity management, restricting the search space and the efficacy of fusion. The paper introduces Model Merging of Natural Niches (M2N2), an evolutionary algorithm that addresses these limitations through dynamic boundary evolution, implicit diversity preservation via competition, and a heuristic attraction metric for mate selection.

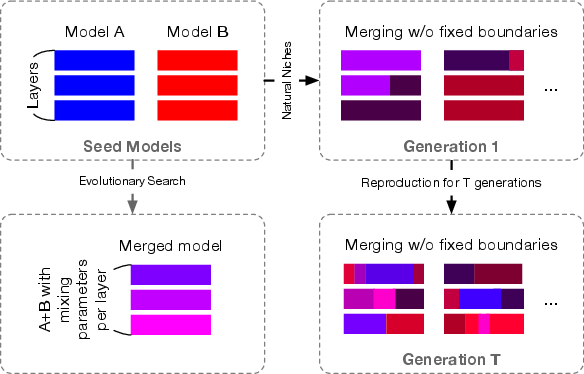

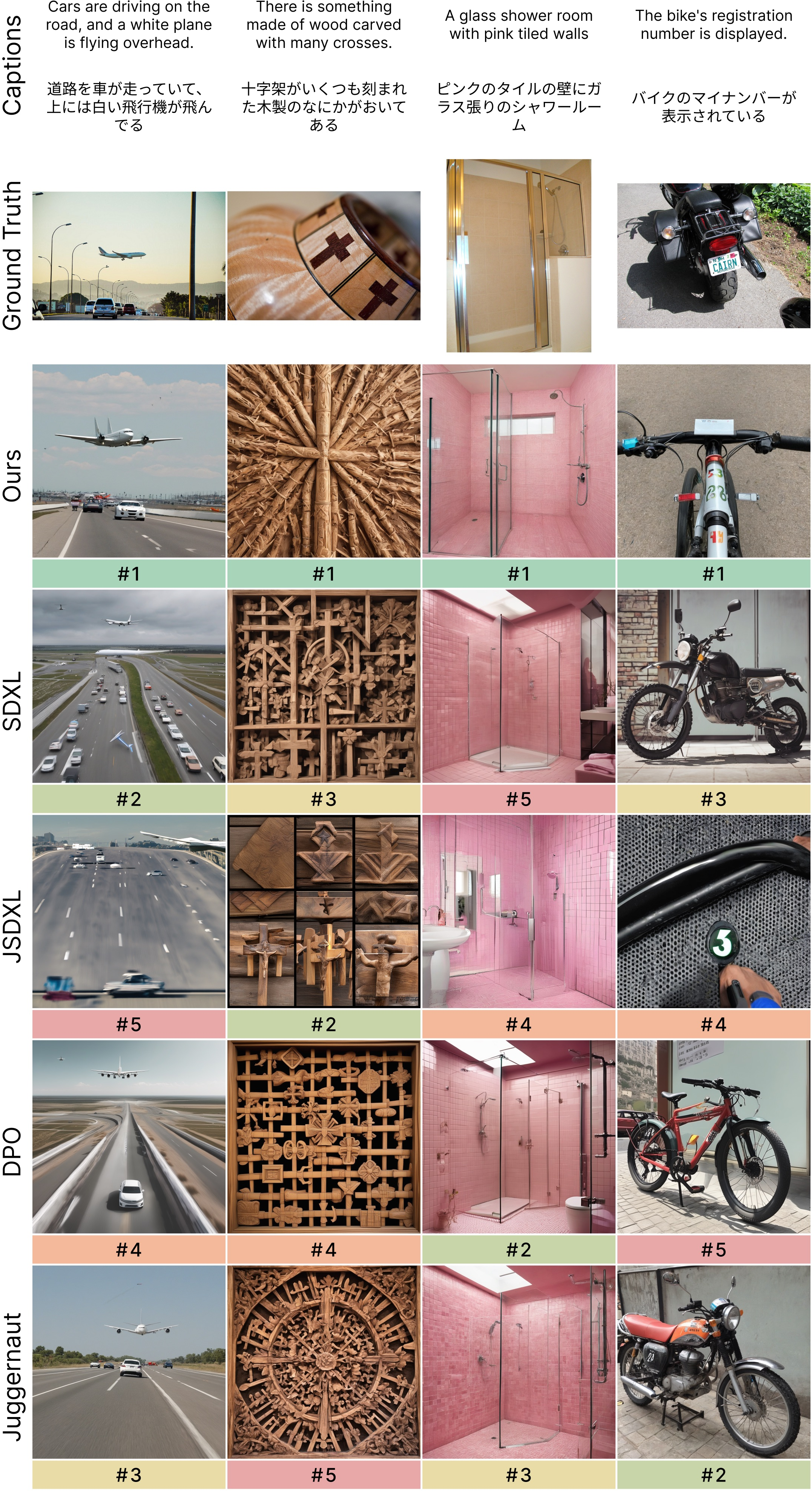

Figure 1: Previous methods use fixed boundaries for merging, while M2N2 incrementally explores a broader set of boundaries and coefficients via random split-points.

Methodology: M2N2 Algorithm

Dynamic Merging Boundaries

Traditional model merging methods rely on static parameter groupings (e.g., by layer), optimizing mixing coefficients within these fixed boundaries. M2N2 eliminates this constraint by evolving both the merging boundaries and the mixing coefficients. At each evolutionary step, two models are selected from the archive, and a split-point is sampled to determine where in the parameter space the merge occurs. Spherical linear interpolation (SLERP) is then applied separately to the parameters before and after the split-point, so the two segments can be mixed in different proportions. This enables flexible and granular recombination of model parameters.
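The split-point merge described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it treats each model as a flat list of floats, and the function names `slerp` and `split_point_merge` are hypothetical. Using ratio `mix` before the split and `1 - mix` after it is an assumption about how the two segments are weighted.

```python
import math
import random


def slerp(a, b, t):
    """Spherical linear interpolation between two flat vectors (t=0 -> a, t=1 -> b)."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Angle is undefined for zero (or empty) vectors: use plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if sin_omega < 1e-8:
        # Vectors are (anti)parallel: SLERP weights are ill-conditioned, fall back to lerp.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


def split_point_merge(theta_a, theta_b, mix, split):
    """Merge two flat parameter vectors around a split index.

    Assumption: the segment before `split` uses ratio `mix`,
    the segment after it uses `1 - mix`.
    """
    head = slerp(theta_a[:split], theta_b[:split], mix)
    tail = slerp(theta_a[split:], theta_b[split:], 1.0 - mix)
    return head + tail


# Toy usage: sample a random split-point and mixing ratio, as one evolutionary step might.
rng = random.Random(0)
theta_a = [rng.gauss(0, 1) for _ in range(8)]
theta_b = [rng.gauss(0, 1) for _ in range(8)]
split = rng.randint(0, len(theta_a))
mix = rng.random()
child = split_point_merge(theta_a, theta_b, mix, split)
```

Because the split index and the mixing ratio are both sampled, repeated steps can explore boundaries that a fixed per-layer grouping would never consider.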

Diversity Preservation via Competition

Diversity is essential for effective crossover operations, yet defining meaningful diversity metrics is increasingly challenging for high-dimensional models. M2N2 leverages implicit fitness sharing, inspired by natural resource competition, to maintain a population of diverse, high-performing models. The fitness derived from each data point is capped, and individuals compete for these limited resources, favoring those that excel in less contested niches. This mechanism obviates the need for hand-engineered diversity metrics and scales naturally with model and task complexity.
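One way to picture this resource competition is the sketch below. It assumes a common form of implicit fitness sharing in which each data point offers a fixed capacity that is divided among models in proportion to their scores on that point. The function name `shared_fitness`, the default capacity of 1.0, and the smoothing constant `eps` are illustrative assumptions rather than details taken from the paper.

```python
def shared_fitness(scores, capacity=None, eps=1e-8):
    """Compute shared fitness for every model in a population.

    scores[i][j] is the non-negative score of model i on data point j.
    capacity[j] is the total reward available at data point j (assumed 1.0 by default).
    """
    n_models = len(scores)
    n_points = len(scores[0])
    if capacity is None:
        capacity = [1.0] * n_points
    # Total demand on each data point across the whole population.
    totals = [sum(scores[i][j] for i in range(n_models)) for j in range(n_points)]
    # Each model receives its proportional share of every point's capped reward.
    return [
        sum(capacity[j] * scores[i][j] / (totals[j] + eps) for j in range(n_points))
        for i in range(n_models)
    ]


# Models 0 and 1 solve the same two points; model 2 alone solves the third.
population_scores = [
    [1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
fitness = shared_fitness(population_scores)
```

In this toy population the raw scores are 2, 2, and 1, yet all three shared fitness values come out at approximately 1.0: the two models that crowd the same niche split its reward, while the specialist keeps the uncontested point to itself.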

Attraction-Based Mate Selection

Crossover operations are computationally expensive, especially for large models. M2N2 introduces a heuristic attraction score to select parent pairs with complementary strengths, improving both efficiency and final model performance. The attraction score prioritizes pairs where one model excels in areas where the other is deficient, weighted by resource capacity and competition. This approach enhances the probability of producing offspring with aggregated capabilities.
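A hedged sketch of such a score follows. It assumes the attraction of model A toward model B is the sum, over data points, of how much B outscores A, weighted by that point's capacity and divided by the population's total score on it. The names `attraction` and `pick_mate`, the unit capacities, and the choice to sample the mate in proportion to attraction are all assumptions made for illustration.

```python
import random


def attraction(scores_a, scores_b, totals, capacity, eps=1e-8):
    """Attraction of model A toward model B (assumed form).

    Only points where B outscores A contribute; each contribution is scaled by
    the point's capacity and damped by how contested the point already is.
    """
    return sum(
        c / (z + eps) * max(sb - sa, 0.0)
        for sa, sb, z, c in zip(scores_a, scores_b, totals, capacity)
    )


def pick_mate(scores, a_idx, rng, eps=1e-8):
    """Sample a partner for model `a_idx` with probability proportional to attraction."""
    n_points = len(scores[0])
    capacity = [1.0] * n_points  # assumption: every data point has unit capacity
    totals = [sum(row[j] for row in scores) for j in range(n_points)]
    candidates = [i for i in range(len(scores)) if i != a_idx]
    weights = [
        attraction(scores[a_idx], scores[i], totals, capacity, eps)
        for i in candidates
    ]
    if sum(weights) <= 0.0:
        # No candidate is complementary: fall back to a uniform choice.
        return rng.choice(candidates)
    return rng.choices(candidates, weights=weights, k=1)[0]


# Model 1 duplicates model 0, while model 2 covers the point model 0 misses.
population_scores = [
    [1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
mate = pick_mate(population_scores, 0, random.Random(0))
```

Here model 1 has zero attraction for model 0 because it offers nothing new, so the complementary model 2 is always selected. This avoids spending an expensive crossover on a redundant pair.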

Experimental Results

Evolving MNIST Classifiers

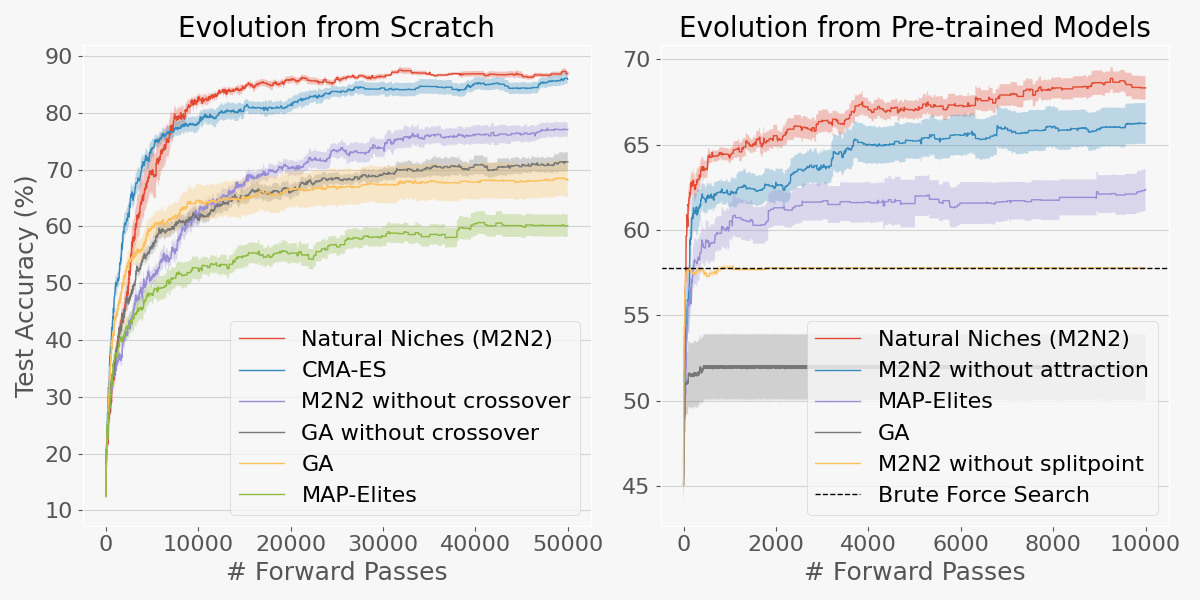

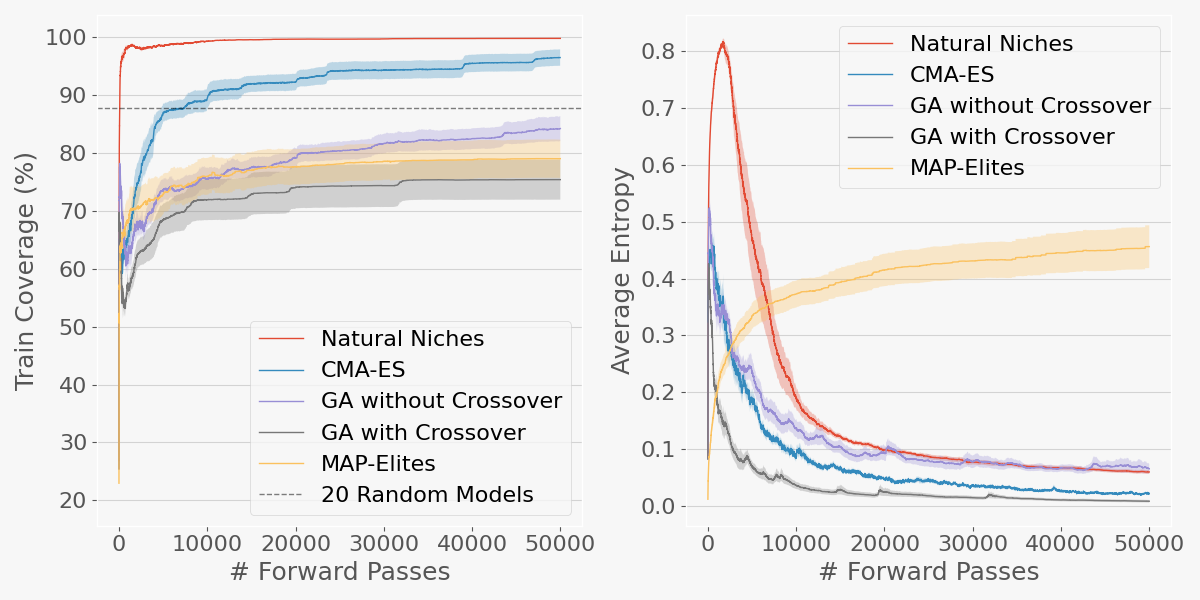

M2N2 is evaluated on the MNIST dataset, both from scratch and from pre-trained models. The method outperforms CMA-ES and other evolutionary baselines in both accuracy and computational efficiency. Notably, M2N2 maintains high coverage of the training data and preserves diversity throughout training, systematically discarding weaker models.

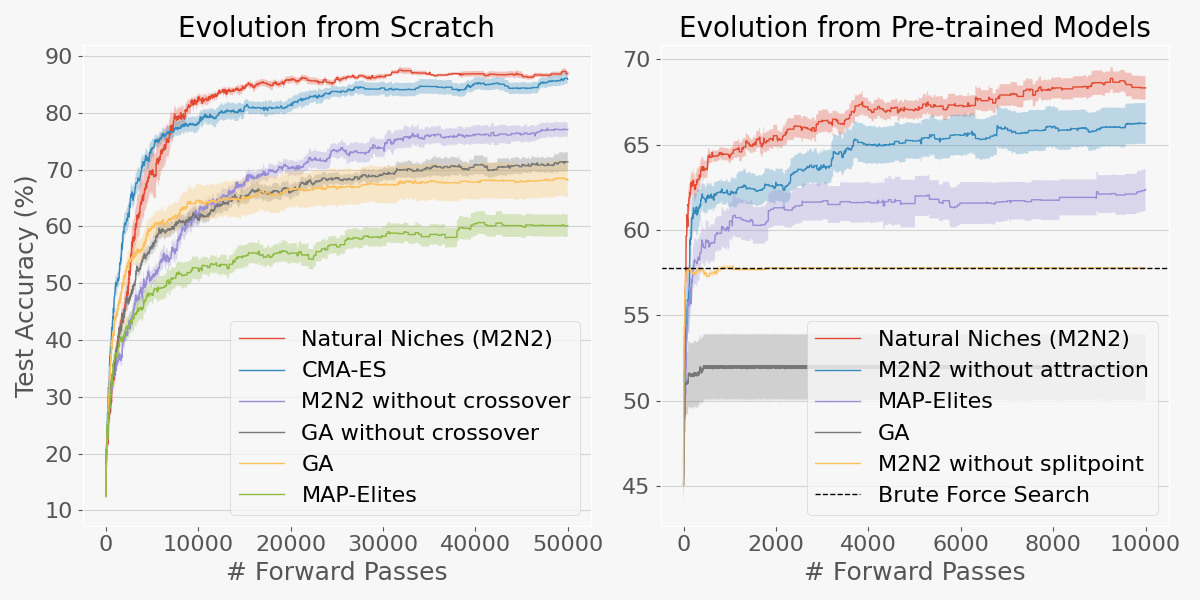

Figure 2: Test accuracy vs. number of forward passes for randomly initialized and pre-trained models, showing M2N2's superior performance and efficiency.

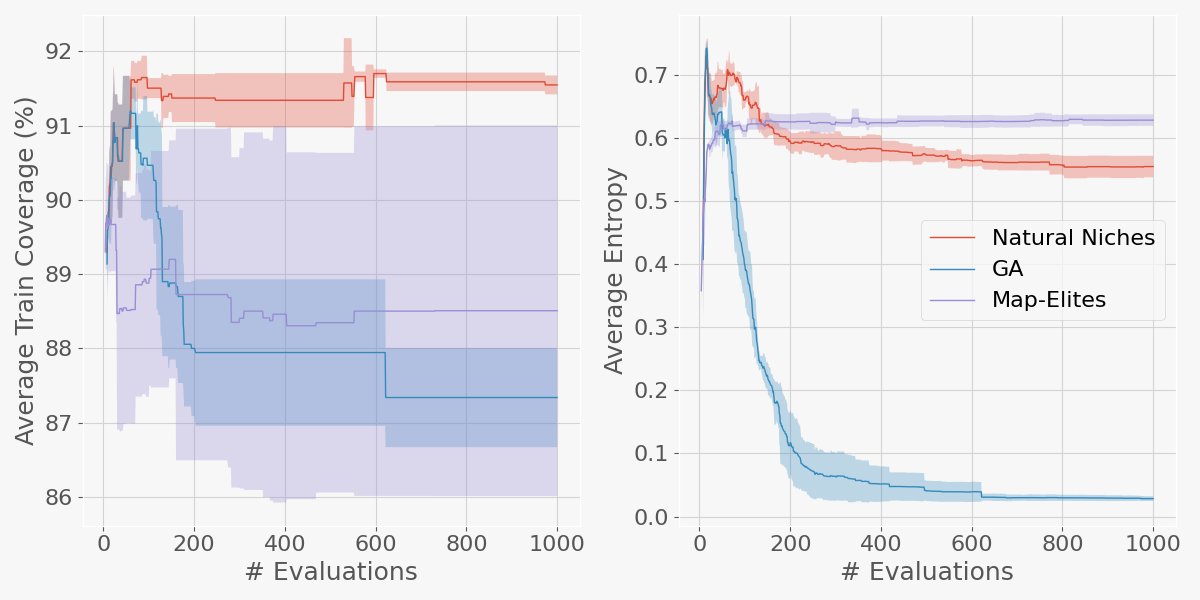

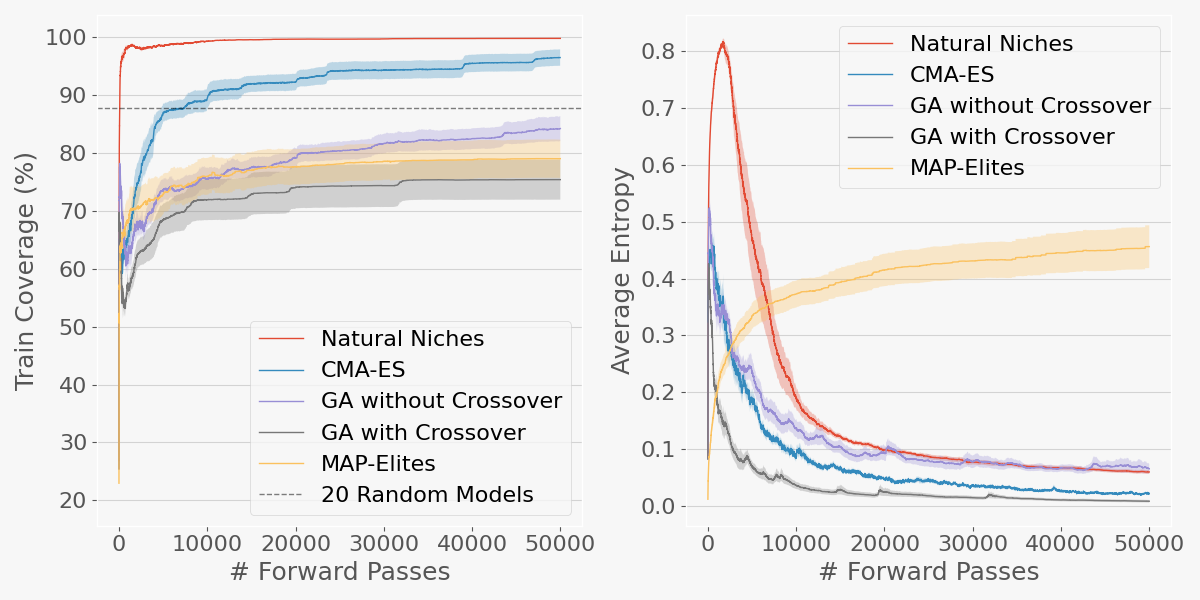

Figure 3: Training coverage and entropy evolution, demonstrating M2N2's ability to maintain diverse, high-performing populations.

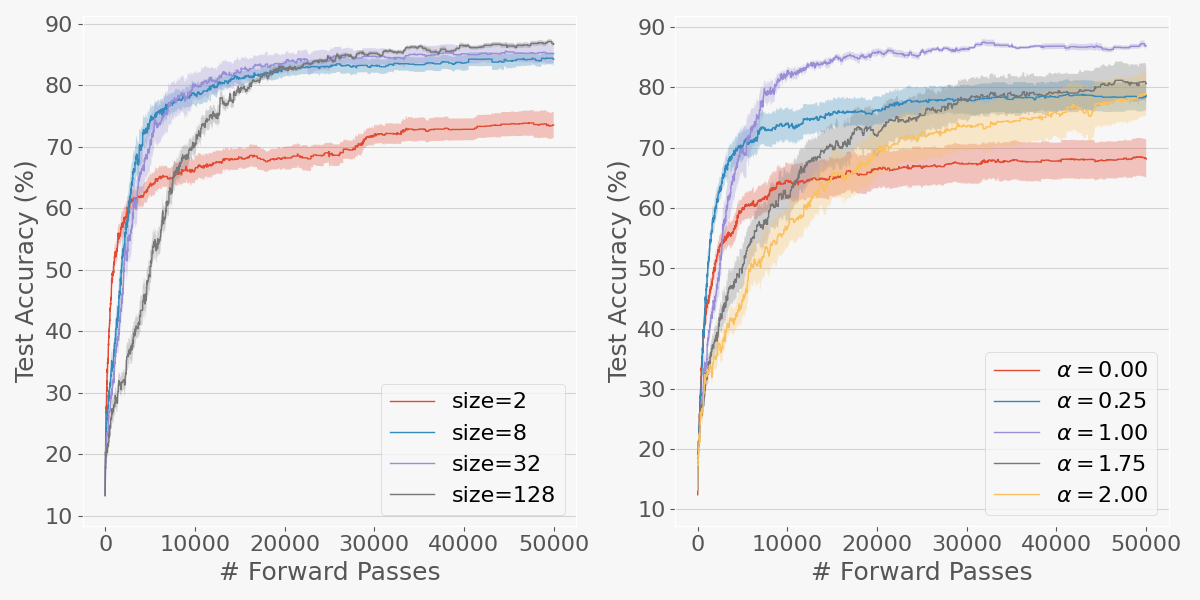

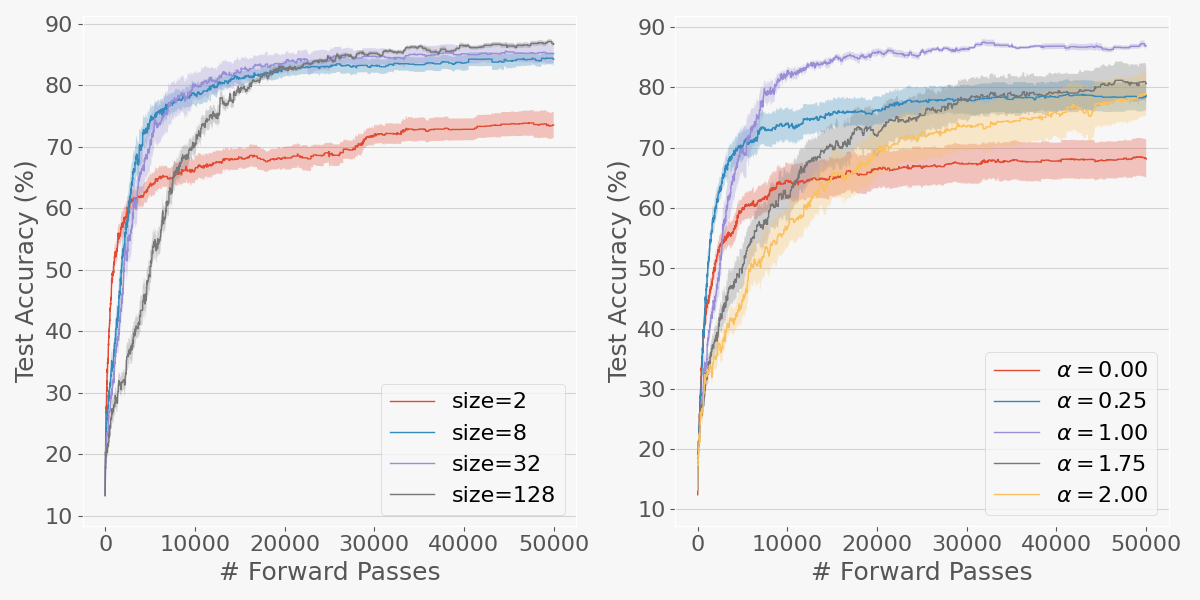

Ablation studies reveal that the split-point mechanism is critical when merging pre-trained models, while attraction improves performance across all settings. Archive size and competition intensity are shown to affect convergence and final accuracy, with larger archives and higher competition preserving diversity and preventing premature convergence.

Figure 4: Test accuracy across archive sizes and competition intensities (α), illustrating trade-offs between exploration and exploitation.

Merging LLMs: Math and Agentic Skills

M2N2 is applied to merge WizardMath-7B-V1.0 (math specialist) and AgentEvol-7B (agentic specialist), both based on Llama-2-7B. The merged models achieve a higher average score across the GSM8K and WebShop benchmarks than the baselines, including CMA-ES, which suffers from suboptimal parameter partitioning.

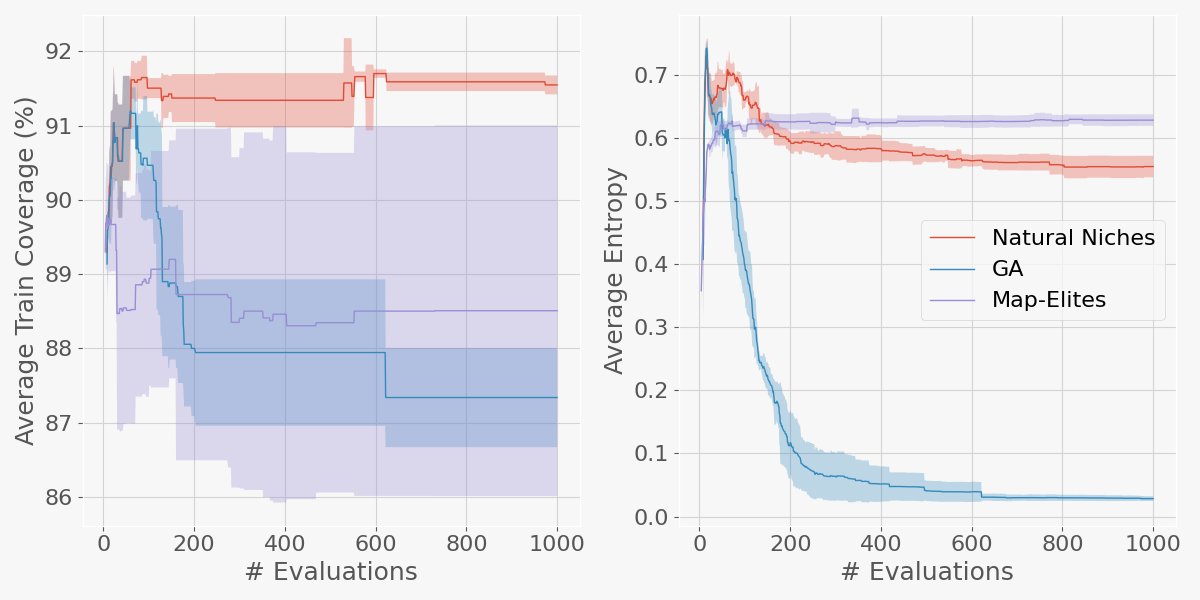

Figure 5: Training coverage and entropy for LLM merging, confirming M2N2's generalization to large-scale models and multi-task settings.

Diffusion-Based Image Generation Model Fusion

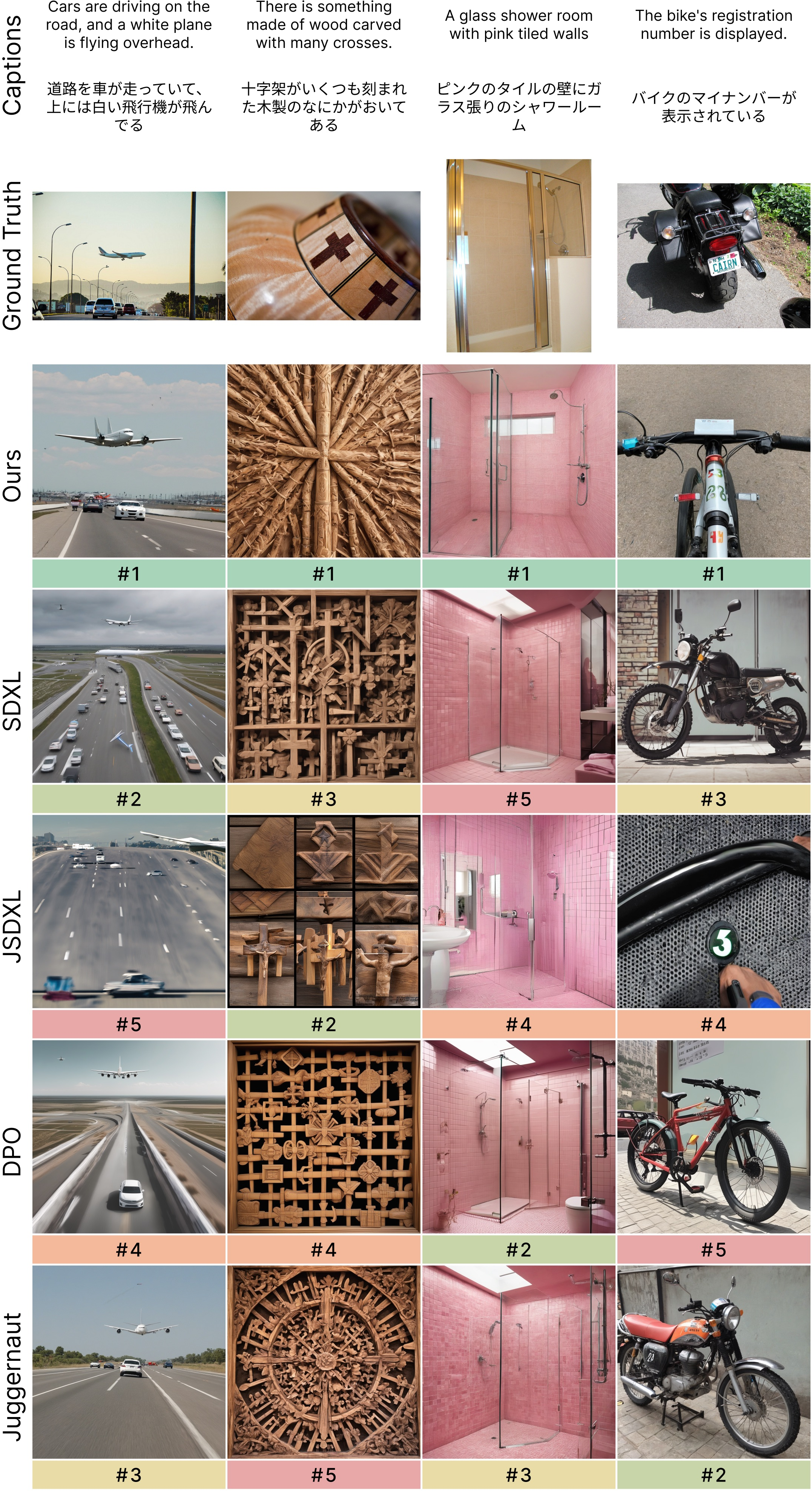

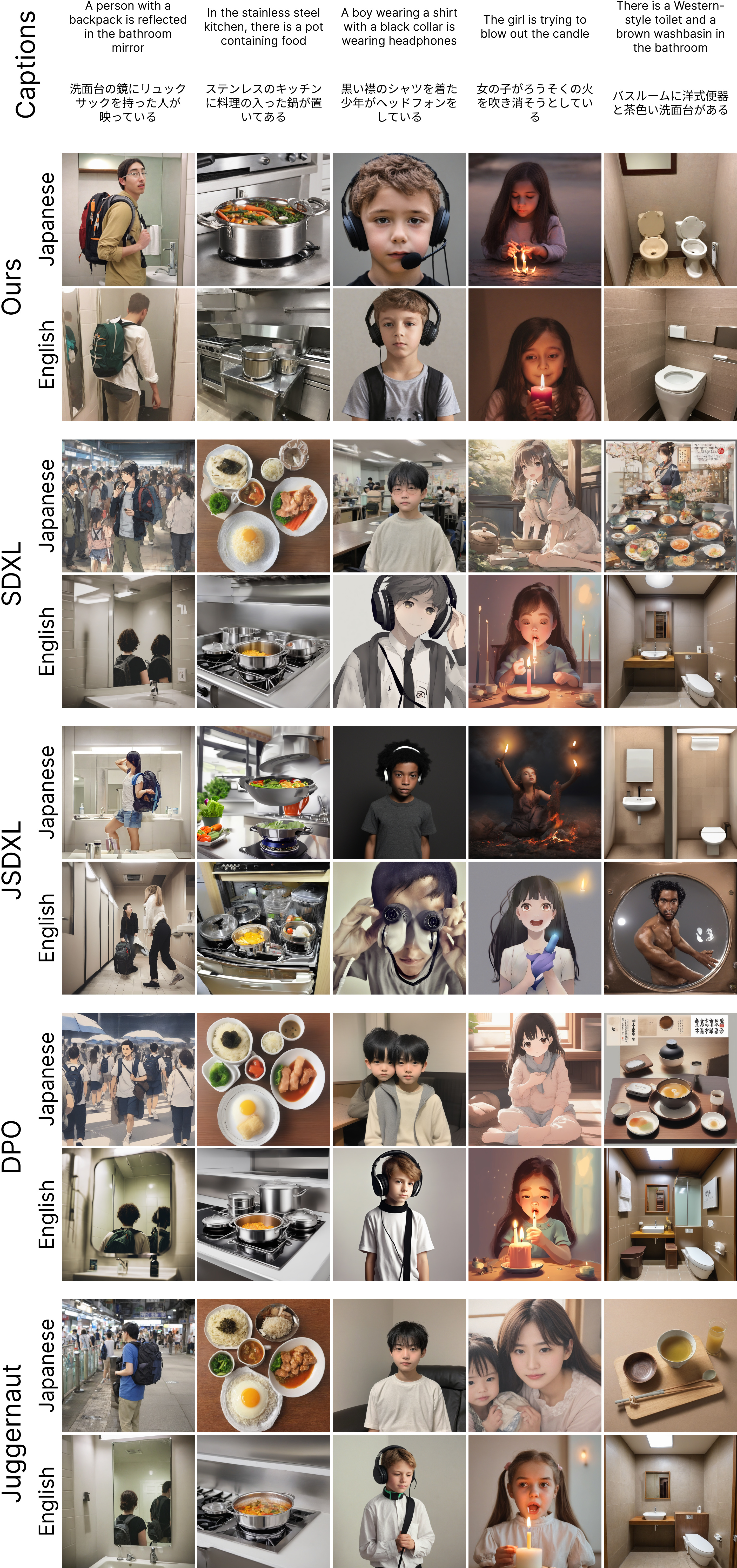

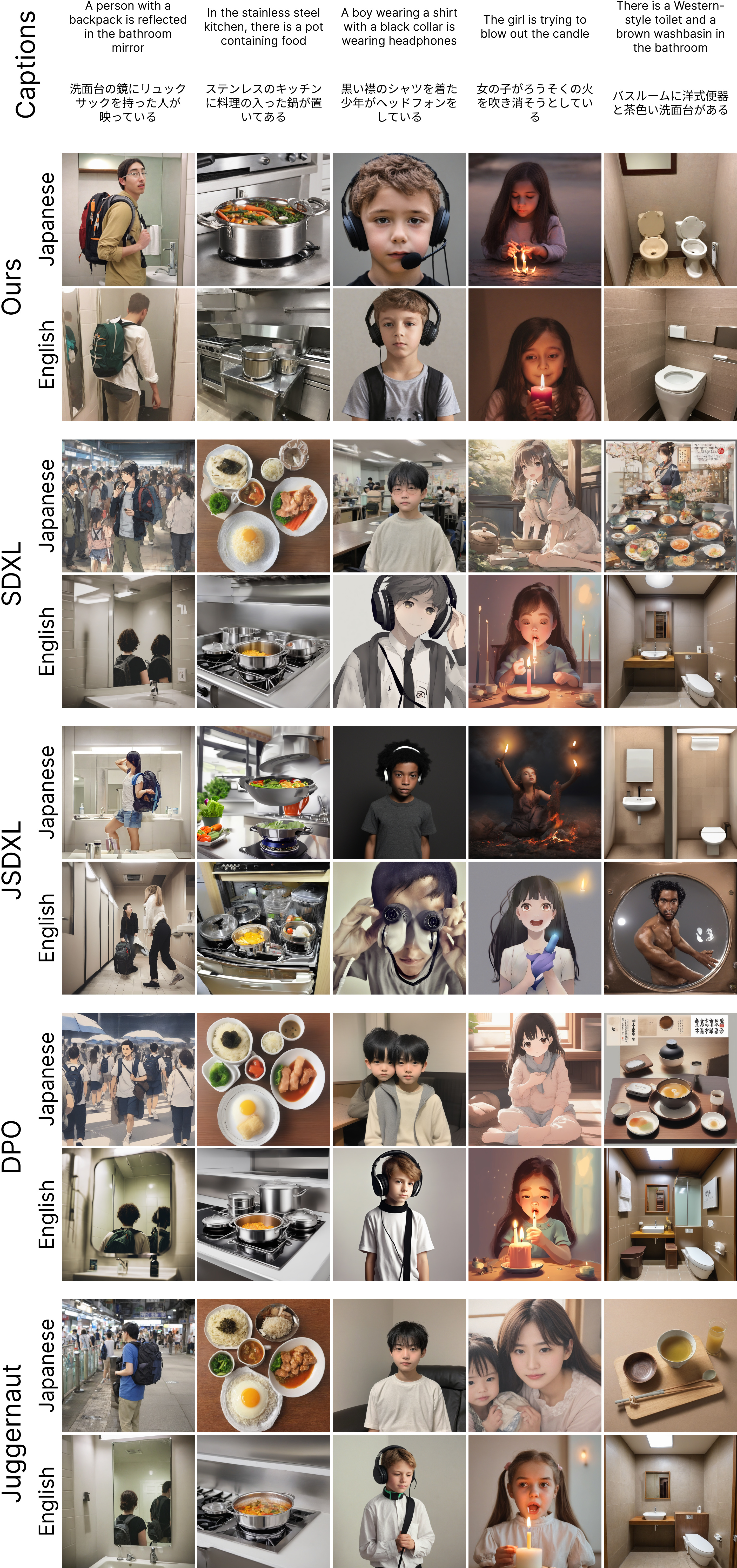

M2N2 is further evaluated on merging diverse text-to-image models, including JSDXL (Japanese prompts) and several English-trained SDXL variants. The merged model aggregates the strengths of all seed models, achieving superior photorealism and semantic fidelity. Importantly, the merged model retains bilingual prompt understanding, despite being optimized exclusively for Japanese tasks.

Figure 6: Generated image comparison across seed and merged models, demonstrating successful aggregation of diverse capabilities.

Figure 7: Images generated from Japanese and English prompts, showing emergent bilingual ability in the merged model.

Quantitative metrics confirm that M2N2 achieves the best scores on both Normalized CLIP Similarity (NCS, where higher is better) and FID (where lower is better), outperforming both individual models and CMA-ES-based merging.

Limitations and Future Directions

The efficacy of model merging is contingent on the compatibility of seed models. Excessive divergence during fine-tuning can render models incompatible for merging, as their internal representations become misaligned. The absence of a standardized compatibility metric is a notable gap; future work should focus on developing such metrics and integrating them into the attraction heuristic. Co-evolutionary dynamics may naturally favor compatible models, but explicit regularization during fine-tuning could further enhance mergeability.

Theoretical and Practical Implications

M2N2 demonstrates that model merging can be used not only for integrating pre-trained models but also for evolving models from scratch. The approach is gradient-free, avoiding catastrophic forgetting and enabling fusion across models trained on disparate objectives. The implicit diversity preservation mechanism scales with model and task complexity, and the attraction heuristic offers a principled approach to mate selection in evolutionary algorithms.

Practically, M2N2 enables the creation of multi-task models without access to original training data, reduces memory footprint by obviating gradient computations, and preserves specialized capabilities. The method is computationally efficient, with resource requirements scaling primarily with archive size and model dimensionality.

Conclusion

The paper establishes M2N2 as a robust, scalable framework for model fusion, leveraging competition and attraction to overcome the limitations of prior methods. The dynamic evolution of merging boundaries, implicit diversity preservation, and attraction-based mate selection collectively enhance the efficacy of model merging. The results generalize across domains, from image classification to LLMs and diffusion models, and highlight the potential for future research in compatibility metrics, co-evolution, and advanced mate selection algorithms. M2N2 represents a significant advancement in the practical and theoretical foundations of model fusion and evolutionary computation.