- The paper introduces a full-stack measurement method that captures energy consumption, carbon emissions, and water usage across the entire AI serving infrastructure.

- It leverages internal telemetry to cover active AI accelerators, host CPU/DRAM, idle machines, and data center overhead, and reports a 33x reduction in energy and a 44x reduction in emissions for the median Gemini Apps text prompt over 12 months.

- Empirical results from Gemini Apps highlight significant efficiency gains driven by hardware-software optimizations and aggressive clean energy procurement.

Comprehensive Measurement of AI Serving Environmental Impact at Google Scale

Introduction

This paper presents a rigorous, production-scale methodology for quantifying the environmental impact of AI inference, specifically focusing on energy consumption, carbon emissions, and water usage associated with serving LLMs at Google. The work addresses the lack of first-party, in-situ data from major AI providers and proposes a comprehensive measurement boundary that encompasses all material energy sources under operational control, including active AI accelerators, host CPU/DRAM, idle machine capacity, and data center overhead. The methodology is applied to Google's Gemini Apps product, yielding detailed metrics and revealing substantial efficiency improvements over time.

Measurement Boundaries and Methodological Advances

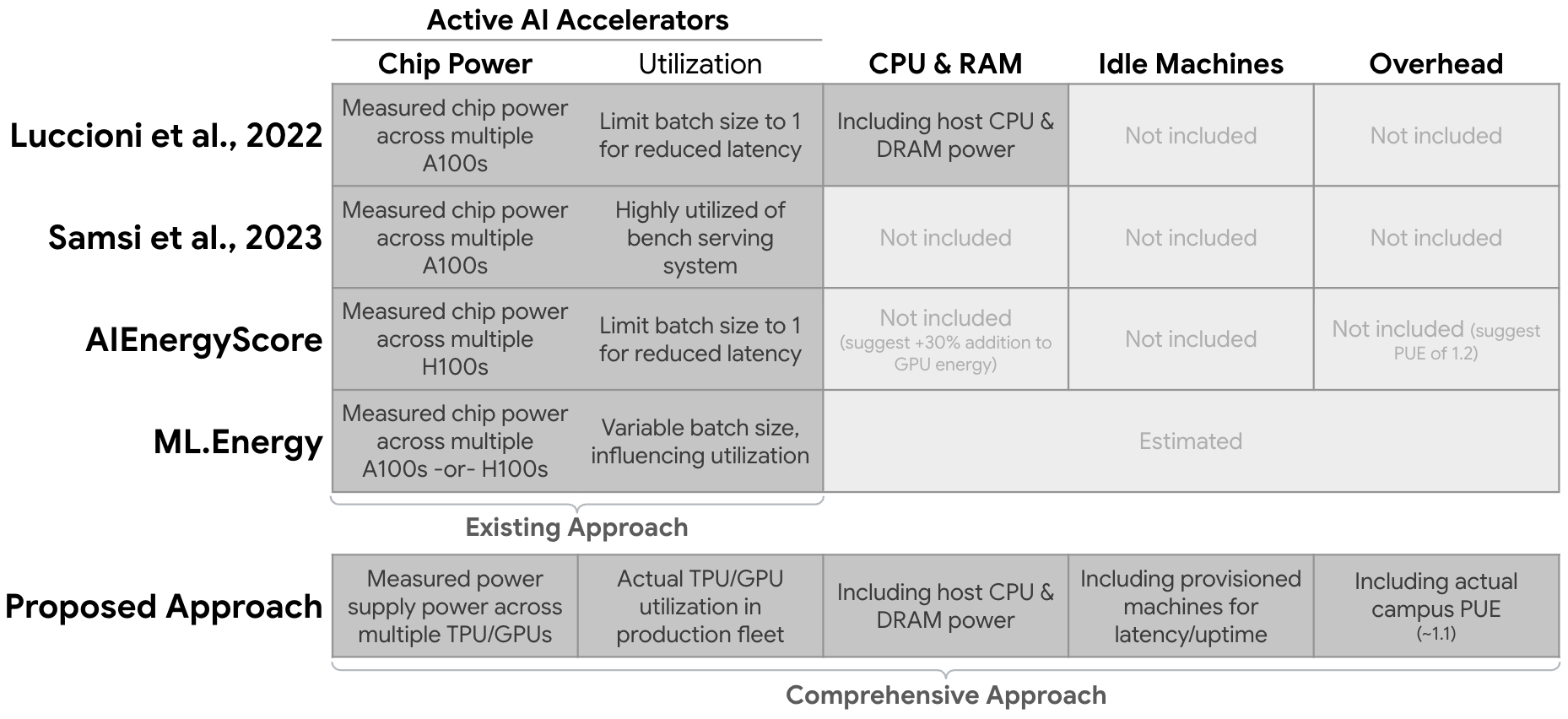

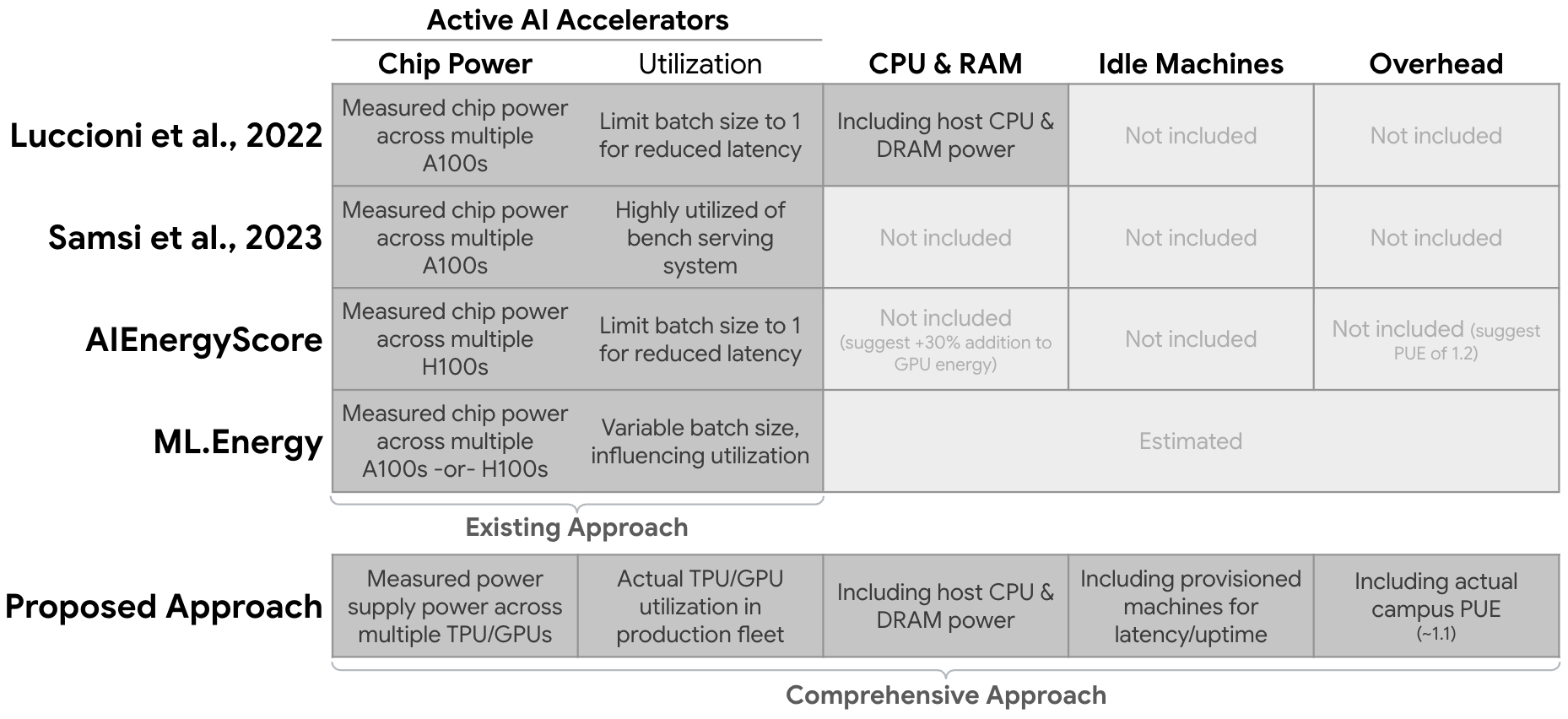

A central contribution is the explicit definition and justification of the measurement boundary for AI inference energy. Previous studies have typically restricted measurement to active AI accelerator energy, omitting host system power, idle capacity, and data center overhead. This paper argues that such omissions lead to significant underestimation and misallocation of environmental impact, impeding both transparency and optimization efforts.

Figure 1: Existing and proposed boundaries for AI inference energy measurements. The comprehensive approach includes all components of the serving stack, not just active accelerators.

The comprehensive approach leverages internal telemetry to capture real-time power draw across the entire serving stack, mapping LLM jobs to machine IDs and aggregating energy consumption over both active and idle periods. Overhead energy is incorporated via the Power Usage Effectiveness (PUE) metric, and exclusions are transparently documented (e.g., external networking, end-user devices, and training energy).
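The accounting described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Google's implementation: the `MachineEnergy` record and `per_prompt_breakdown_wh` function are hypothetical names, energy is averaged over all prompts in a window rather than attributed prompt by prompt, and idle machines are assumed to contribute both accelerator and host energy to the "idle" bucket. Overhead follows the standard PUE definition (total facility energy divided by IT equipment energy).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MachineEnergy:
    """Hypothetical telemetry record: energy one machine drew over a measurement window."""
    machine_id: str
    accelerator_wh: float  # energy drawn by the machine's AI accelerators
    host_wh: float         # energy drawn by the host CPU and DRAM
    idle: bool             # True if provisioned for serving but idle during the window


def per_prompt_breakdown_wh(
    machines: list[MachineEnergy], prompts_served: int, pue: float
) -> dict[str, float]:
    """Split per-prompt energy into the four components of the comprehensive boundary.

    Simplifying assumption: energy is averaged over all prompts served in the
    window instead of being attributed to individual prompts.
    """
    if prompts_served <= 0:
        raise ValueError("prompts_served must be positive")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    active = [m for m in machines if not m.idle]
    idle = [m for m in machines if m.idle]
    totals = {
        "active_accelerators": sum(m.accelerator_wh for m in active),
        "host_cpu_dram": sum(m.host_wh for m in active),
        "idle_machines": sum(m.accelerator_wh + m.host_wh for m in idle),
    }
    it_energy_wh = sum(totals.values())
    # PUE = total facility energy / IT energy, so overhead = IT energy * (PUE - 1).
    totals["datacenter_overhead"] = it_energy_wh * (pue - 1.0)
    return {name: wh / prompts_served for name, wh in totals.items()}


# Usage with made-up numbers: one active machine and one idle reserve machine.
fleet = [
    MachineEnergy("m1", accelerator_wh=60.0, host_wh=25.0, idle=False),
    MachineEnergy("m2", accelerator_wh=8.0, host_wh=2.0, idle=True),
]
breakdown = per_prompt_breakdown_wh(fleet, prompts_served=100, pue=1.1)
for component, wh in breakdown.items():
    print(f"{component}: {wh:.3f} Wh/prompt")
```

Note how an accelerator-only boundary would report only the first bucket; the other three are exactly what the comprehensive boundary adds.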

Empirical Results: Energy, Emissions, and Water Consumption

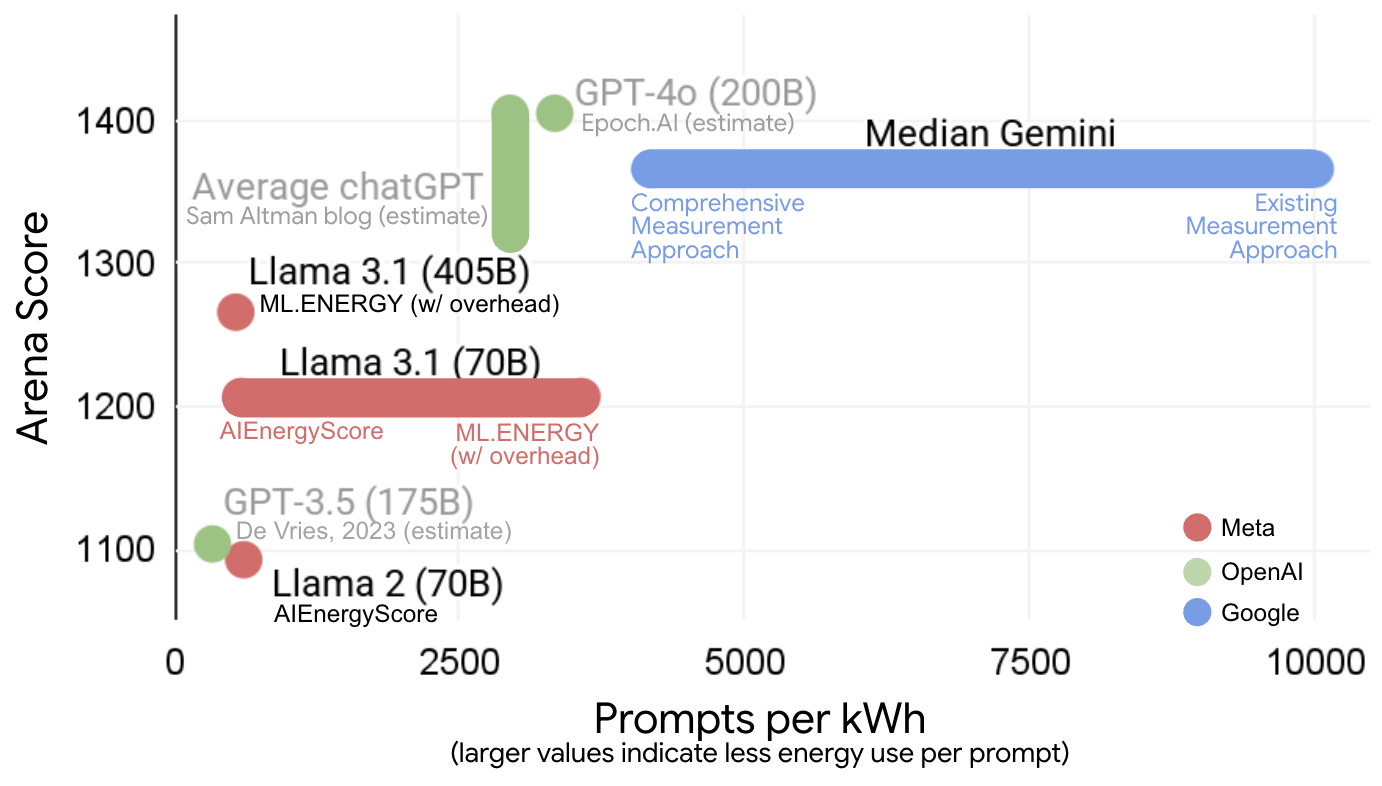

Applying the methodology to Gemini Apps, the median text prompt in May 2025 consumed 0.24 Wh of energy, generated 0.03 gCO2e, and used 0.26 mL of water. These values are substantially lower than many public estimates, some of which are an order of magnitude higher, largely because those estimates assume less efficient serving conditions than those of a production fleet. Inconsistent measurement boundaries further complicate cross-study comparisons.

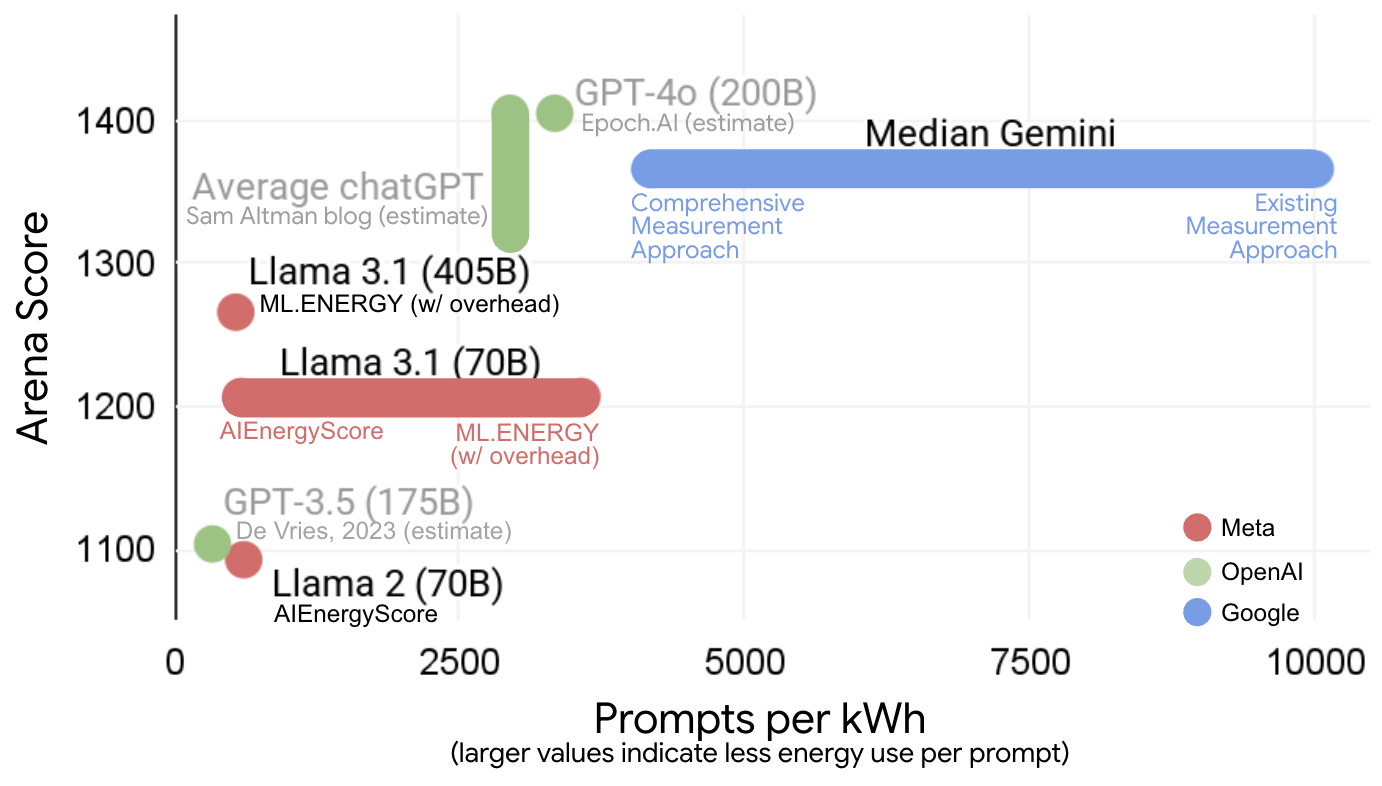

Figure 2: Energy per prompt for large production AI models versus LMArena score, illustrating wide variability in published metrics and the impact of measurement boundary.

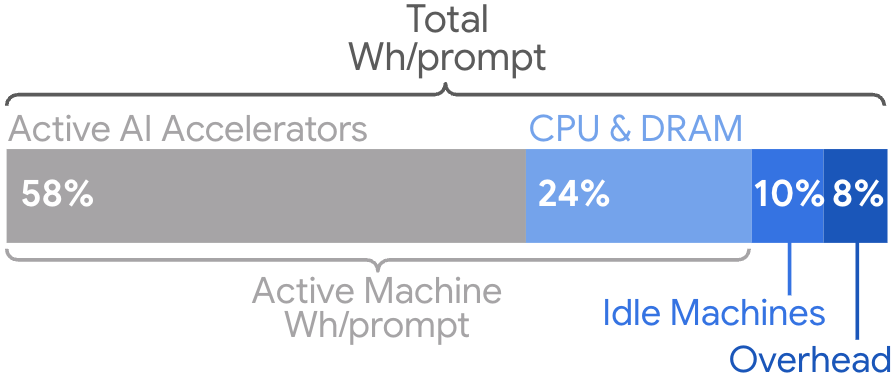

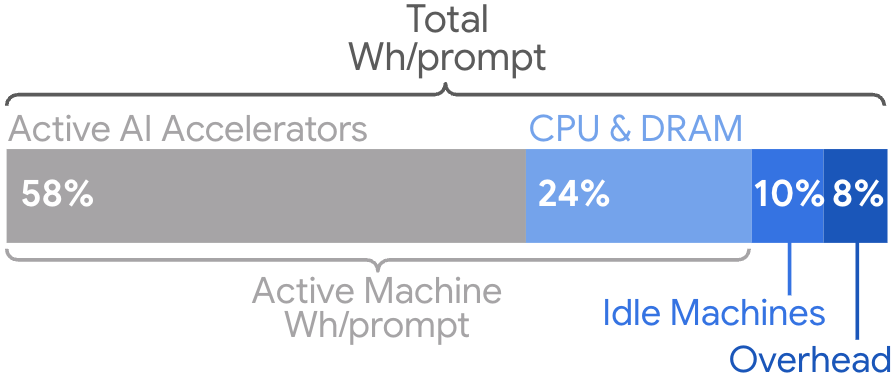

The breakdown of energy consumption per prompt is as follows: active AI accelerators (58%), host CPU/DRAM (25%), idle machines (10%), and data center overhead (8%).

Figure 3: Components of total LLM energy consumption per prompt across the production serving stack, based on the comprehensive measurement approach.
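The reported shares sum to 101%, presumably because each is rounded. The sketch below converts them into absolute Wh for the 0.24 Wh median prompt and checks the overhead share against the fleet-wide PUE of 1.09 cited later: under the standard definition, overhead is (PUE − 1)/PUE of total energy, about 8.3%, which is roughly consistent with the reported 8%. This assumes the fleet-wide PUE applies to the serving data centers; the paper may use site-specific values. All names here are illustrative.

```python
from __future__ import annotations

# Figures reported for the median Gemini Apps text prompt (May 2025) and the fleet PUE.
MEDIAN_PROMPT_WH = 0.24
REPORTED_SHARES = {
    "active_accelerators": 0.58,
    "host_cpu_dram": 0.25,
    "idle_machines": 0.10,
    "datacenter_overhead": 0.08,
}
FLEET_PUE = 1.09


def component_wh(total_wh: float, shares: dict[str, float]) -> dict[str, float]:
    """Convert fractional shares into absolute Wh per prompt."""
    return {name: total_wh * share for name, share in shares.items()}


def overhead_share_from_pue(pue: float) -> float:
    """Fraction of total energy that is facility overhead, given PUE = total / IT."""
    return (pue - 1.0) / pue


if __name__ == "__main__":
    for name, wh in component_wh(MEDIAN_PROMPT_WH, REPORTED_SHARES).items():
        print(f"{name}: {wh:.3f} Wh")
    print(f"sum of shares: {sum(REPORTED_SHARES.values()):.2f}")
    print(f"overhead share implied by PUE {FLEET_PUE}: {overhead_share_from_pue(FLEET_PUE):.1%}")
```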

Emissions are calculated using market-based (MB) grid emission factors and include both operational electricity emissions (Scope 2) and Scope 1+3 emissions, which cover embodied emissions such as those from hardware manufacturing. Water consumption is estimated from energy use via the Water Usage Effectiveness (WUE) metric; Google's data centers average a WUE of 1.15 L/kWh for consumptive water use.
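A minimal sketch of these conversions, with hypothetical function names. The market-based emission factor and the per-prompt Scope 1+3 share are left as inputs because this section does not state them. For water, the sketch assumes WUE is applied to IT equipment energy (total energy divided by PUE), following the conventional WUE definition of site water use per unit of IT energy; whether the paper does exactly this is an assumption. Since 1 L/kWh equals 1 mL/Wh, multiplying Wh by WUE yields mL directly.

```python
from __future__ import annotations


def scope2_mb_emissions_g(total_energy_wh: float, mb_factor_g_per_kwh: float) -> float:
    """Scope 2 market-based emissions: energy in kWh times a market-based grid factor."""
    return total_energy_wh / 1000.0 * mb_factor_g_per_kwh


def total_emissions_g(
    total_energy_wh: float, mb_factor_g_per_kwh: float, scope13_g_per_prompt: float
) -> float:
    """Add an assumed per-prompt Scope 1+3 share (e.g., amortized embodied emissions)."""
    return scope2_mb_emissions_g(total_energy_wh, mb_factor_g_per_kwh) + scope13_g_per_prompt


def water_ml(total_energy_wh: float, pue: float, wue_l_per_kwh: float) -> float:
    """Consumptive water per prompt, applying WUE to IT energy (assumption: total / PUE).

    1 L/kWh == 1 mL/Wh, so IT energy in Wh times WUE gives mL.
    """
    it_energy_wh = total_energy_wh / pue
    return it_energy_wh * wue_l_per_kwh


# Rounded May 2025 inputs: 0.24 Wh per prompt, fleet PUE 1.09, WUE 1.15 L/kWh.
print(f"{water_ml(0.24, pue=1.09, wue_l_per_kwh=1.15):.2f} mL")
```

With the rounded inputs this gives about 0.25 mL, close to the reported 0.26 mL; the small gap may come from input rounding or from accounting details not described here, so this is not a reproduction of the paper's figure.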

Efficiency Gains and Environmental Trends

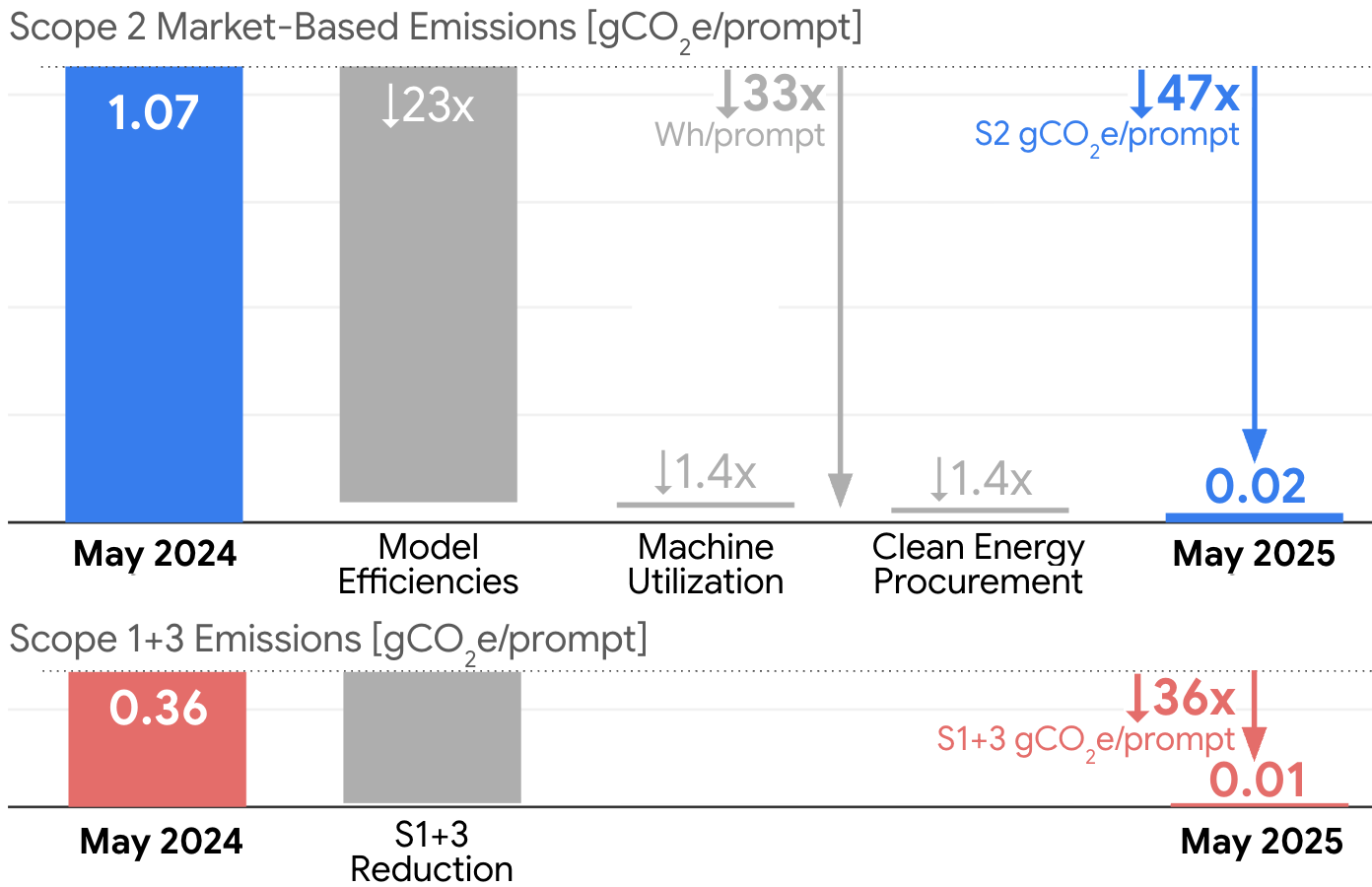

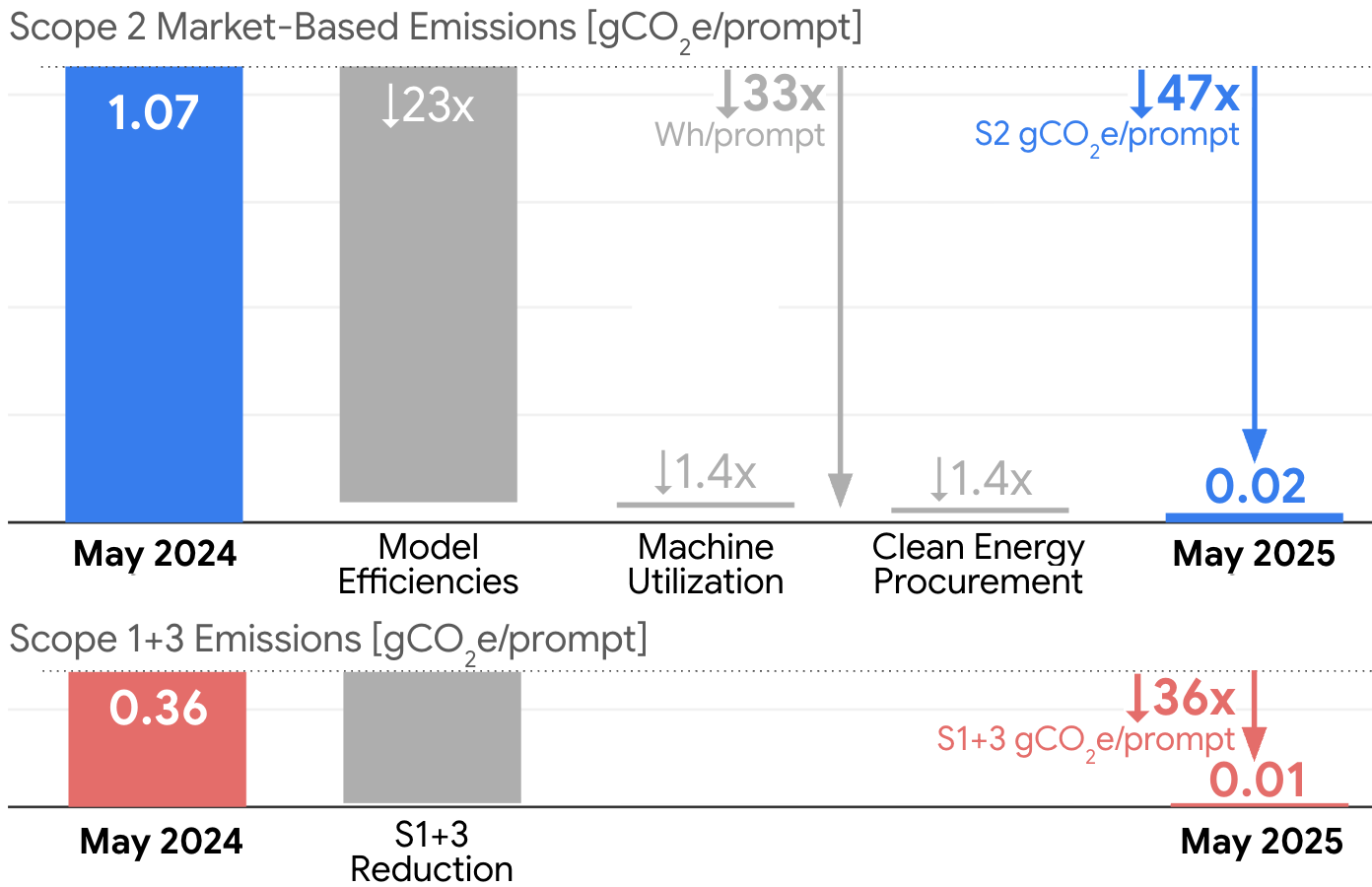

A notable finding is the dramatic improvement in environmental efficiency over a 12-month period. The median Gemini Apps prompt saw a 33x reduction in energy consumption and a 44x reduction in total emissions, driven by software optimizations, improved hardware utilization, clean energy procurement, and reductions in embodied emissions.

Figure 4: Median Gemini Apps text prompt emissions over time, showing a 47x reduction in Scope 2 MB emissions and a 36x reduction in Scope 1+3 emissions per prompt.
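If Scope 2 MB emissions per prompt are modeled simply as energy times the market-based emission factor, a 33x energy reduction combined with the 30% drop in the MB emission factor cited below implies roughly a 33 / (1 − 0.30) ≈ 47.1x reduction, in line with the 47x shown in Figure 4. This is only a rough reconciliation: the 30% figure covers 2023 to 2024, which does not exactly match the 12-month measurement window, and the function name is hypothetical.

```python
def implied_scope2_reduction(energy_reduction_x: float, ef_drop_fraction: float) -> float:
    """Reduction factor for (energy x emission factor) when both fall.

    If energy per prompt falls by energy_reduction_x (e.g., 33x) and the
    market-based emission factor falls by ef_drop_fraction (e.g., 0.30),
    emissions fall by energy_reduction_x / (1 - ef_drop_fraction).
    """
    if not 0.0 <= ef_drop_fraction < 1.0:
        raise ValueError("ef_drop_fraction must be in [0, 1)")
    return energy_reduction_x / (1.0 - ef_drop_fraction)


print(f"{implied_scope2_reduction(33.0, 0.30):.1f}x")
```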

Key contributors to these gains include:

- Model architecture improvements (e.g., Mixture-of-Experts, efficient attention mechanisms)

- Quantization and distillation for serving-optimized models

- Speculative decoding and batch inference for higher hardware utilization

- Custom TPU hardware co-designed for energy efficiency

- Dynamic resource allocation to minimize idle energy

- Ultra-efficient data centers (fleet-wide PUE of 1.09)

- Aggressive clean energy procurement (30% reduction in MB emissions factor from 2023 to 2024)

Implications and Future Directions

The results demonstrate that comprehensive, in-situ measurement yields more accurate and actionable environmental metrics for AI serving. The findings challenge prevailing estimates and highlight the necessity of standardized measurement boundaries for cross-provider comparability and effective optimization. The methodology enables identification of efficiency hotspots and incentivizes improvements across the full serving stack, not just model or hardware design.

Theoretical implications include the need to revisit lifecycle analyses of AI systems, incorporating operational realities of large-scale deployment. Practically, the approach provides a template for other providers to adopt, facilitating industry-wide transparency and accountability. Future work should extend comprehensive measurement to training workloads, integrate site-specific normalization for water and emissions, and explore further hardware-software co-design for environmental optimization.

Conclusion

This paper establishes a comprehensive framework for measuring the environmental impact of AI inference at production scale, showing that narrow, accelerator-only approaches substantially underestimate the true cost of serving. Even with this broader boundary, the median Gemini Apps prompt's energy, emissions, and water usage are lower than many public estimates, a result the paper attributes to efficient production serving practices. The work underscores the importance of standardized, full-stack metrics for driving environmental efficiency in AI deployment and advocates for their widespread adoption as AI continues to scale globally.