An Academic Perspective on "Sustainable AI: Environmental Implications, Challenges and Opportunities"

The paper "Sustainable AI: Environmental Implications, Challenges and Opportunities" by researchers at Facebook AI examines the environmental impact of AI technologies. Drawing on industry-scale ML use cases, the authors characterize the carbon footprint associated with the super-linear growth of AI across data, algorithms, and system hardware. The work covers both operational and embodied carbon emissions throughout the AI model lifecycle and aims to steer the community toward environmentally conscious advancement in AI.

Core Contributions

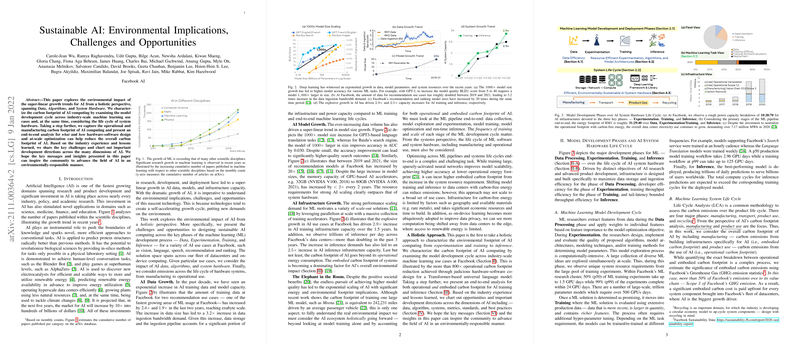

The paper systematically addresses carbon emissions across AI's infrastructure and development cycle, breaking them down into the phases of data processing, experimentation, training, and inference. Separating the operational footprint from the manufacturing (embodied) footprint gives a more nuanced view of how AI computing affects environmental sustainability. Key findings include substantial increases in data volumes and model sizes, with notable examples such as a 2.4× increase in AI training data and a 20× growth in recommendation model sizes recently observed at Facebook.
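The split between operational and embodied carbon across lifecycle phases can be illustrated with a minimal sketch. It assumes a common accounting convention (operational carbon as facility energy times grid carbon intensity, embodied carbon as manufacturing carbon amortized over device lifetime); the class, function names, and every number below are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class PhaseUsage:
    """Resource usage for one lifecycle phase (all values hypothetical)."""
    name: str
    energy_kwh: float    # IT energy drawn during the phase
    device_hours: float  # accelerator-hours consumed during the phase


def operational_kg(energy_kwh: float, pue: float, grid_kg_per_kwh: float) -> float:
    """Operational carbon: IT energy scaled by facility overhead (PUE)
    and multiplied by the grid carbon intensity."""
    return energy_kwh * pue * grid_kg_per_kwh


def embodied_kg(device_hours: float, device_embodied_kg: float,
                device_lifetime_hours: float) -> float:
    """Embodied carbon: manufacturing carbon of one device, amortized by
    the share of its lifetime that the phase consumed."""
    return device_embodied_kg * (device_hours / device_lifetime_hours)


def footprint(phases, pue, grid_kg_per_kwh, device_embodied_kg,
              device_lifetime_hours):
    """Return {phase name: (operational kg CO2e, embodied kg CO2e)}."""
    return {
        p.name: (
            operational_kg(p.energy_kwh, pue, grid_kg_per_kwh),
            embodied_kg(p.device_hours, device_embodied_kg,
                        device_lifetime_hours),
        )
        for p in phases
    }


if __name__ == "__main__":
    # Illustrative inputs only; none of these figures come from the paper.
    phases = [
        PhaseUsage("data processing", 1_000.0, 400.0),
        PhaseUsage("experimentation", 3_000.0, 1_200.0),
        PhaseUsage("training", 5_000.0, 2_000.0),
        PhaseUsage("inference", 8_000.0, 6_000.0),
    ]
    result = footprint(phases, pue=1.1, grid_kg_per_kwh=0.4,
                       device_embodied_kg=150.0,
                       device_lifetime_hours=35_000.0)
    for name, (op, emb) in result.items():
        print(f"{name:16s} operational={op:8.1f} kg  embodied={emb:6.2f} kg")
```

Keeping the two terms separate matters because carbon-free energy lowers only the operational term; the embodied term depends on hardware lifetime and utilization.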

Detailed Analysis and Results

The authors present quantitative analyses that underscore the significance of the carbon footprint of AI infrastructure. For instance, they detail the operational carbon emissions of Facebook's production ML models and compare them with those of prominent publicly reported models such as GPT-3. The results indicate that both the training and inference stages contribute significantly to AI's overall carbon emissions.

Moreover, the paper evaluates the outcomes of hardware-software co-design optimizations. These strategies have achieved large reductions in operational footprint, such as an 810-fold decrease in carbon emissions for a Transformer-based LLM, underscoring the potential of efficiency optimizations.
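To put an 810-fold decrease in perspective: it leaves roughly 0.12% of the baseline emissions. The sketch below shows how gains at separate layers of the stack could compound into a figure of that order, under the simplifying assumption that the layers are independent and their factors multiply. The layer names and per-layer factors are hypothetical placeholders, not the paper's breakdown.

```python
from math import prod


def combined_reduction(factors):
    """Overall reduction factor, assuming independent per-layer factors
    that compound multiplicatively."""
    return prod(factors)


def remaining_fraction(reduction: float) -> float:
    """Fraction of the baseline footprint left after an N-fold reduction."""
    return 1.0 / reduction


if __name__ == "__main__":
    # Hypothetical per-layer factors, for illustration only.
    layers = {"model": 3.0, "platform": 6.0, "hardware": 5.0}
    total = combined_reduction(layers.values())
    print(f"combined reduction: {total:.0f}x")
    print(f"remaining after 810x: {remaining_fraction(810.0):.4%}")
```

The multiplicative form is why modest gains at each layer can add up to a reduction of several hundred times once they are stacked.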

Implications and Future Directions

From a theoretical standpoint, the findings show that AI's exponential growth in data, model size, and system scale is outpacing improvements in energy efficiency. The authors argue for a holistic approach to understanding AI's carbon footprint, advocating optimization across data utilization, model development, and system deployment.

Practically, the research points to a significant need for AI system designs that prioritize sustainability alongside performance. A potential shift toward on-device learning, where devices have limited access to renewable energy, has further implications for AI deployment strategies and warrants in-depth exploration of edge-focused solutions.

Speculation on AI's Sustainability Trajectory

The analysis shows that while operational efficiency still has room to improve, efficiency gains cannot by themselves curb AI's growing carbon emissions. The sustainability of AI will likely hinge on integrating environmentally conscious practices into both AI development methodologies and infrastructure management.

By issuing a call to action for further research and for simple telemetry that measures carbon footprints, the paper encourages a cultural shift toward sustainability in the AI community. The aim is to foster innovation that reduces environmental costs while continuing to advance AI performance.
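As a rough illustration of what "simple telemetry" could look like, the sketch below wraps a workload in a context manager that records wall-clock time and converts it to an energy and carbon estimate. It is an assumption-laden sketch, not the paper's tooling: `carbon_telemetry` is a hypothetical helper, the average power draw is supplied by the caller as a constant rather than measured from hardware, and the grid carbon intensity is likewise an input.

```python
import time
from contextlib import contextmanager


@contextmanager
def carbon_telemetry(label, avg_power_watts, grid_kg_per_kwh, log,
                     clock=time.monotonic):
    """Time the enclosed block and append an estimated footprint to `log`.

    Assumes a constant average power draw (hypothetical input); a real
    deployment would substitute measured power readings.
    """
    start = clock()
    try:
        yield
    finally:
        hours = (clock() - start) / 3600.0
        energy_kwh = avg_power_watts * hours / 1000.0
        log.append({
            "label": label,
            "hours": hours,
            "energy_kwh": energy_kwh,
            "co2e_kg": energy_kwh * grid_kg_per_kwh,
        })


if __name__ == "__main__":
    records = []
    # Illustrative values only: 300 W average draw, 0.4 kg CO2e per kWh.
    with carbon_telemetry("toy workload", avg_power_watts=300.0,
                          grid_kg_per_kwh=0.4, log=records):
        sum(i * i for i in range(100_000))
    print(records[0])
```

Logging the record in a `finally` clause means failed or interrupted runs are still counted, which matters because abandoned experiments consume energy too.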

As the AI field evolves, embracing environmental responsibility will be critical to mitigating AI's carbon footprint. The trajectory towards sustainable AI calls for collective efforts across reducing embodied carbon costs, leveraging carbon-free energy, and fostering design principles that inherently reduce environmental impact.

In conclusion, "Sustainable AI: Environmental Implications, Challenges and Opportunities" is a pivotal work that urges the AI community to integrate sustainability into the core of technological development. The research lays a foundation for systematically curtailing AI's environmental impact, a task that grows more pressing as AI technologies continue to expand into diverse facets of industry and society.