- The paper introduces an adaptive algorithm, A_min, that leverages lower-bound estimates to optimize LLM inference latency while adhering to GPU memory limits.

- It demonstrates that A_min achieves a competitive ratio of O(log(1/α)) and outperforms naive conservative methods, especially under wide prediction intervals.

- Empirical evaluations on the LMSYS-Chat-1M dataset show that A_min closely approximates hindsight-optimal performance even with significant output uncertainty.

Adaptively Robust LLM Inference Optimization under Prediction Uncertainty

The paper addresses the problem of online scheduling for LLM inference under output length uncertainty, with the objective of minimizing total end-to-end latency subject to GPU memory (KV cache) constraints. In LLM serving, each request's prompt length is known at arrival, but the output length—directly impacting both memory usage and processing time—is unknown and must be predicted. The scheduling challenge is compounded by the need to batch requests efficiently while avoiding memory overflows and minimizing latency, especially as LLM inference is both compute- and energy-intensive.

The authors formalize the scheduling problem as a semi-online, resource-constrained batch scheduling task. Each request i has a known prompt size s and an unknown output length $o_i \in [\ell, u]$, where $[\ell, u]$ is a prediction interval provided by a machine learning model. The system processes requests in batches, with the total memory usage at any time t constrained by the available KV cache M. The performance metric is the total end-to-end latency (TEL), defined as the sum of completion times for all requests.
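To make the objective and the constraint concrete, here is a minimal sketch of how TEL and the memory profile could be computed for a given schedule. It assumes details the summary does not spell out: all requests are available at time 0, each running request generates one token per unit of time without interruption, and a request that has generated j tokens occupies s + j units of KV cache. The function names are illustrative, not taken from the paper.

```python
def memory_profile(starts, outputs, s):
    """Per-step KV cache usage for a schedule with uninterrupted runs.

    Assumption: a request that has produced j tokens holds s + j units.
    """
    horizon = max(st + o for st, o in zip(starts, outputs))
    usage = [0] * horizon
    for st, o in zip(starts, outputs):
        for j in range(o):
            # During step st + j the request holds its prompt plus j + 1 tokens.
            usage[st + j] += s + j + 1
    return usage


def total_end_to_end_latency(starts, outputs):
    """TEL: sum of completion times, where completion = start + output length."""
    return sum(st + o for st, o in zip(starts, outputs))


def is_feasible(starts, outputs, s, M):
    """A schedule is feasible if usage never exceeds the KV cache budget M."""
    return max(memory_profile(starts, outputs, s)) <= M
```

For example, with s = 2, output lengths [1, 2, 3], and all three requests started at time 0, the memory profile is [9, 8, 5] and the TEL is 6, so the schedule is feasible for M = 9 but not for M = 8.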

Baseline and Naive Algorithms

The paper first reviews the hindsight-optimal algorithm (H-SF), which assumes perfect knowledge of all $o_i$ and greedily batches the shortest jobs first, achieving the minimum possible TEL. The competitive ratio (CR) is used to evaluate online algorithms relative to H-SF.
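The shortest-first idea can be sketched as a small simulation. This is a simplified illustration rather than the paper's exact procedure: it assumes one token per running request per time step, memory of s + j for a request with j generated tokens, and an admission test that projects memory over all future steps using the known output lengths. The names `fits` and `hindsight_shortest_first` are hypothetical.

```python
def fits(running, candidate, s, M):
    """Would admitting `candidate` keep projected memory within M at every step?

    `running` holds [generated, total] pairs; totals are known in hindsight.
    """
    jobs = running + [[0, candidate]]
    horizon = max(total - gen for gen, total in jobs)
    for k in range(1, horizon + 1):
        # Only jobs that still need a k-th further token occupy memory.
        used = sum(s + gen + k for gen, total in jobs if gen + k <= total)
        if used > M:
            return False
    return True


def hindsight_shortest_first(outputs, s, M):
    """Return the TEL of greedy shortest-first batching with known outputs."""
    waiting = sorted(outputs)
    running, t, tel = [], 0, 0
    while waiting or running:
        while waiting and fits(running, waiting[0], s, M):
            running.append([0, waiting.pop(0)])
        if not running:
            raise ValueError("a single request exceeds the memory budget")
        t += 1
        for job in running:
            job[0] += 1  # every running request emits one token
        tel += t * sum(1 for gen, total in running if gen == total)
        running = [job for job in running if job[0] < job[1]]
    return tel
```

With outputs [3, 1, 2] and s = 2, a budget of M = 9 lets all three requests run together (TEL 6), while M = 6 forces the longest request to wait until time 2 (TEL 8).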

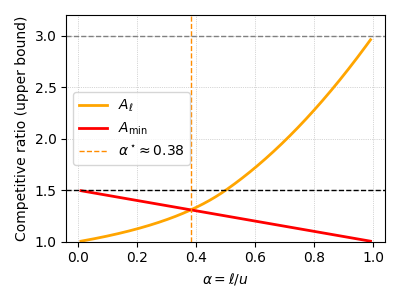

A naive conservative algorithm, Amax, is introduced, which pessimistically assumes every request has output length u (the upper bound of the prediction interval). This approach guarantees no memory overflows but is highly conservative, leading to poor memory utilization and increased latency as the prediction interval widens. Theoretical analysis shows that the competitive ratio of Amax is upper bounded by $\frac{\alpha^{-1}(1+\alpha^{-1})}{2}$ and lower bounded by $\frac{\alpha^{-1}(1+\alpha^{-1/2})}{2}$, where $\alpha = \ell/u$.
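A short numeric sketch shows how quickly these bounds grow as the interval widens. It reads the two bounds as α⁻¹(1 + α⁻¹)/2 and α⁻¹(1 + α^(-1/2))/2, an interpretation under which both equal 1 when the prediction is exact (α = 1). The function names are illustrative.

```python
def amax_cr_upper(alpha: float) -> float:
    """Upper bound on the competitive ratio of Amax, with alpha = l / u."""
    inv = 1.0 / alpha
    return inv * (1.0 + inv) / 2.0


def amax_cr_lower(alpha: float) -> float:
    """Lower bound on the competitive ratio of Amax, with alpha = l / u."""
    inv = 1.0 / alpha
    return inv * (1.0 + inv ** 0.5) / 2.0


for lo, hi in [(1, 1), (1, 4), (1, 100)]:
    alpha = lo / hi
    print(f"[{lo}, {hi}]: lower {amax_cr_lower(alpha):.1f}, "
          f"upper {amax_cr_upper(alpha):.1f}")
```

For the interval [1, 4] (α = 0.25) the bounds are 6 and 10; the upper bound grows roughly quadratically in u/ℓ.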

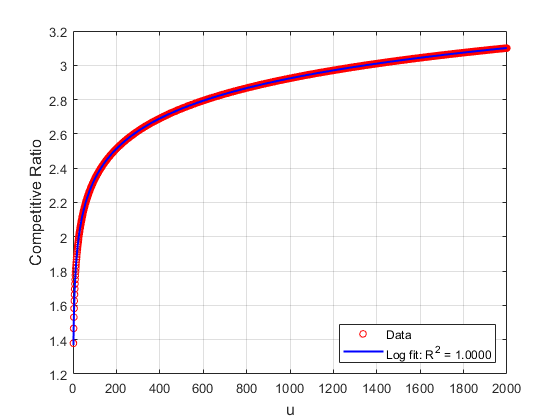

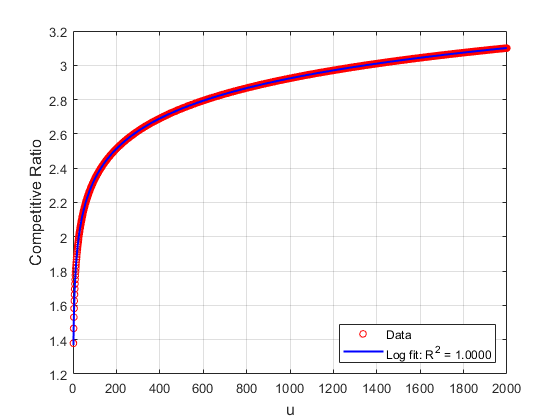

Figure 1: Competitive ratio of Amin as a function of the upper bound u, demonstrating logarithmic scaling with u.

Robust Adaptive Algorithm: Amin

To address the limitations of Amax, the authors propose Amin, an adaptive algorithm that leverages only the lower bound ℓ of the prediction interval. Amin initializes each request's estimated output length to ℓ and dynamically refines this estimate as tokens are generated. If a memory overflow is imminent, the algorithm evicts jobs with the smallest accumulated lower bounds, updating their estimates accordingly. Batch formation is always based on the current lower bounds, and the algorithm never relies on the upper bound u.
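The loop described above can be sketched as follows. This is a simplified illustration, not the authors' implementation. It assumes one token per running request per step, memory of s + j for a request with j generated tokens, that an evicted request loses its KV cache and restarts from zero, and that ties between equal lower bounds are broken by evicting the request with less progress. The names `Job`, `projected_ok`, and `a_min` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    true_len: int   # hidden from the scheduler; used only to detect completion
    lower: int      # current lower bound on the output length
    done: int = 0   # tokens generated since the job was last (re)started


def projected_ok(jobs, s, M):
    """Project memory assuming each job stops at max(lower, done + 1) tokens."""
    targets = [max(j.lower, j.done + 1) for j in jobs]
    horizon = max(t - j.done for j, t in zip(jobs, targets))
    for k in range(1, horizon + 1):
        used = sum(s + j.done + k
                   for j, t in zip(jobs, targets) if j.done + k <= t)
        if used > M:
            return False
    return True


def a_min(true_lengths, lower, s, M):
    """Return (TEL, number of evictions) for a lower-bound-only scheduler."""
    if lower > min(true_lengths) or any(s + o > M for o in true_lengths):
        raise ValueError("need lower <= every output and s + output <= M")
    waiting = [Job(o, lower) for o in true_lengths]
    running, t, tel, evictions = [], 0, 0, 0
    while waiting or running:
        # Evict smallest-lower-bound jobs if the next token would overflow.
        running.sort(key=lambda j: (j.lower, j.done))
        while sum(s + j.done + 1 for j in running) > M:
            victim = running.pop(0)
            victim.done = 0  # assumption: cache is dropped, job restarts
            waiting.append(victim)
            evictions += 1
        # Admit by ascending lower bound while the projection fits.
        waiting.sort(key=lambda j: j.lower)
        while waiting and projected_ok(running + [waiting[0]], s, M):
            running.append(waiting.pop(0))
        t += 1
        for j in running:
            j.done += 1
            j.lower = max(j.lower, j.done)  # refine the estimate
        tel += t * sum(1 for j in running if j.done == j.true_len)
        running = [j for j in running if j.done < j.true_len]
    return tel, evictions
```

For instance, `a_min([4, 4], lower=1, s=1, M=8)` runs both requests together until the fourth token would overflow the cache, evicts one of them (its lower bound is now 3), and finishes with a TEL of 11 after one eviction. The upper bound u never appears in the code.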

Theoretical analysis establishes that Amin achieves a competitive ratio of $O(\log(\alpha^{-1}))$ as $M \to \infty$, a significant improvement over Amax, especially when prediction intervals are wide. The analysis leverages a Rayleigh quotient formulation and matrix spectral analysis to bound the competitive ratio logarithmically in u.
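As a rough worked comparison, take the interval [1, 1000], so that α = 1/1000. The figures below read the Amax upper bound as α⁻¹(1 + α⁻¹)/2 and use the natural logarithm for the Amin rate; the constant hidden in the O(·) is not given in this summary, so only the orders of magnitude are meaningful.

```latex
\alpha = \frac{\ell}{u} = \frac{1}{1000}, \qquad
\frac{\alpha^{-1}\,(1+\alpha^{-1})}{2} = \frac{1000 \cdot 1001}{2} = 500{,}500, \qquad
\log(\alpha^{-1}) = \ln 1000 \approx 6.91 .
```

Doubling u multiplies the Amax bound by roughly four but adds only ln 2 ≈ 0.69 to the logarithmic term.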

Distributional Extensions and Algorithm Selection

The paper further analyzes Amin under specific output length distributions:

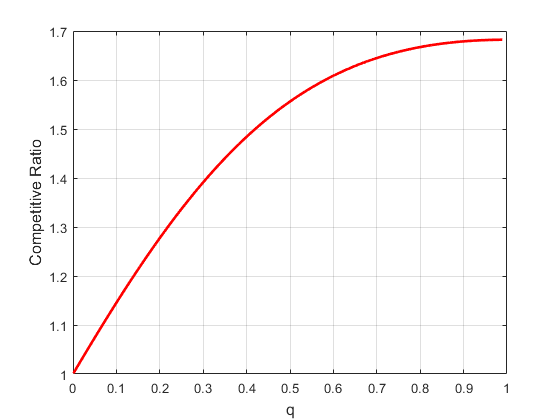

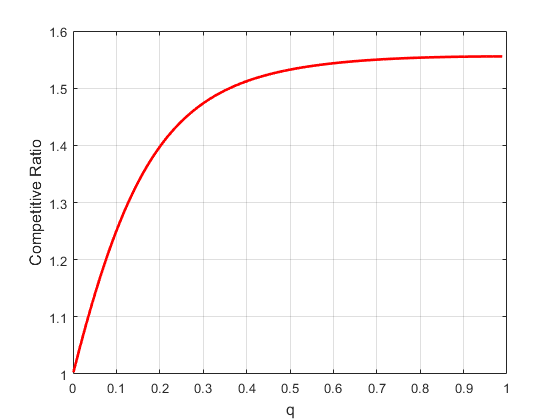

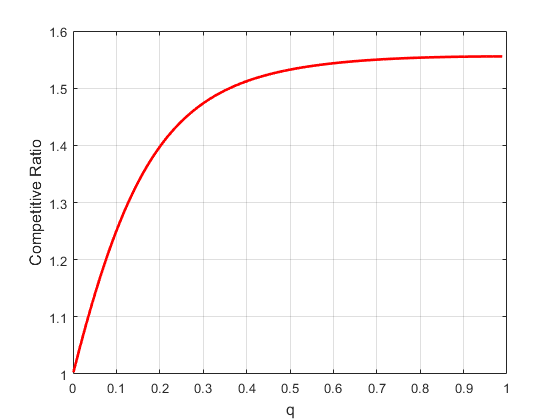

Figure 3: Competitive ratio as a function of parameter q: (Left) geometric distribution G(p); (Right) linearly weighted geometric distribution LG(p).

These results suggest that algorithm selection can be adapted to the empirical distribution of output lengths, switching between Amin and Aℓ to optimize worst-case guarantees.

Numerical Experiments

The authors conduct extensive experiments on the LMSYS-Chat-1M dataset, simulating LLM inference scheduling under three prediction regimes: rough prediction (wide intervals), non-overlapping classification (bucketed intervals), and individualized overlapping intervals (centered on the true $o_i$). Across all settings, Amin consistently matches or closely approaches the performance of the hindsight-optimal scheduler, even when prediction intervals are extremely wide. In contrast, Amax only performs well when predictions are highly accurate.
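One way the three regimes might be simulated from true output lengths is sketched below. Only the rough interval [1, 1000] comes from the text; the bucket width of 100 and the percentage-based width of the centered interval are assumptions chosen for illustration, as are the function names.

```python
def rough_interval(o, lo=1, hi=1000):
    """Rough prediction: every request gets the same wide interval."""
    return (lo, hi)


def bucket_interval(o, width=100, lo=1):
    """Non-overlapping classification: the bucket that contains o.

    Assumption: equal-width buckets [1, 100], [101, 200], ...
    """
    k = (o - lo) // width
    return (lo + k * width, lo + (k + 1) * width - 1)


def centered_interval(o, pct):
    """Individualized interval of +/- pct percent around the true length o.

    Integer arithmetic avoids floating-point rounding at the endpoints.
    """
    low = max(1, o * (100 - pct) // 100)      # floor
    high = -(-o * (100 + pct) // 100)         # ceiling
    return (low, high)


def alpha(interval):
    """Ratio l / u that drives the competitive-ratio bounds."""
    low, high = interval
    return low / high
```

For a request with true length 250, these give (1, 1000), (201, 300), and, at 10 percent, (225, 275). The corresponding α values rise from 0.001 toward 1 as the prediction sharpens.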

Key empirical findings include:

- Under rough prediction ([1,1000]), Amin achieves average latency nearly identical to H-SF, while Amax suffers from high latency due to underutilized memory.

- As prediction accuracy improves (narrower intervals), both algorithms improve, but Amin remains robust even with poor predictions.

- For individualized intervals, Amin maintains low latency across all levels of prediction uncertainty, while Amax degrades rapidly as uncertainty increases.

Theoretical and Practical Implications

The results demonstrate that robust, adaptive scheduling algorithms can achieve near-optimal LLM inference efficiency even under substantial prediction uncertainty. The key insight is that leveraging only the lower bound of output length predictions, and dynamically updating estimates during execution, is sufficient to guarantee strong performance both in theory and in practice. The avoidance of upper bound reliance is particularly advantageous, as upper bounds are typically harder to predict accurately in real-world systems.

The theoretical framework—combining competitive analysis, memory-preserving combinatorial techniques, and Rayleigh quotient spectral analysis—provides a foundation for future work on robust online scheduling under uncertainty. The distributional analysis and adaptive algorithm selection further suggest that practical LLM serving systems can benefit from workload-aware policy switching.

Conclusion

This work advances the theory and practice of LLM inference scheduling by introducing and analyzing robust algorithms that operate effectively under prediction uncertainty. The adaptive lower-bound-based approach of Amin achieves provably logarithmic competitive ratios and demonstrates strong empirical performance across a range of realistic scenarios. The results have direct implications for the design of scalable, efficient, and robust LLM serving systems, and open avenues for further research on learning-augmented online algorithms and resource-constrained scheduling in AI systems.