- The paper introduces MR6D, a benchmark dataset for 6D pose estimation in mobile robotics using industrial objects like Euro pallets.

- It evaluates pose estimation pipelines with different segmentation methods and shows that segmentation quality strongly affects Average Recall (AR).

- The dataset covers diverse scenarios, including static scenes, dynamic scenes with human interaction, and sequences with odometry-based camera tracking, to support research on mobile robot perception.

MR6D: Benchmarking 6D Pose Estimation for Mobile Robots

The paper presents MR6D, a dataset aimed at benchmarking 6D pose estimation specifically for mobile robots operating in industrial settings. Unlike traditional datasets focused on small household items manipulated by robotic arms, MR6D targets larger, industrially relevant objects, addressing unique challenges posed by mobile robotics such as long-range perception, varied object sizes, and complex occlusion/self-occlusion patterns.

Introduction to Mobile Robot Perception

6D pose estimation lets robots localize objects precisely and manipulate them. Industrial mobile robots differ from robotic arms in that they require specialized gripping mechanisms and encounter more diverse camera viewpoints. They must detect larger objects at greater distances and cope with occlusion and self-occlusion under extreme camera angles. Existing datasets do not address these perception challenges. MR6D bridges this gap by focusing on instance-level pose estimation, which is crucial for industrial tasks involving specific object models such as Euro pallets and standardized storage bins.
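The pinhole camera model gives a quick sense of the scale problem: an object of width W at depth Z appears roughly f·W/Z pixels wide, where f is the focal length in pixels. The numbers below assume a hypothetical f = 600 px (not an MR6D camera parameter) and the 1.2 m length of a Euro pallet viewed side-on.

```latex
w_{\text{px}} = \frac{f \, W}{Z}, \qquad
Z = 10\ \text{m}: \ \frac{600 \cdot 1.2}{10} = 72\ \text{px}, \qquad
Z = 1\ \text{m}: \ \frac{600 \cdot 1.2}{1} = 720\ \text{px}
```

At 10 m the pallet covers only about 72 px, while at 1 m it would span 720 px and overflow a hypothetical 640-px-wide image, so the same object can appear either as a small distant target or as a truncated close-up.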

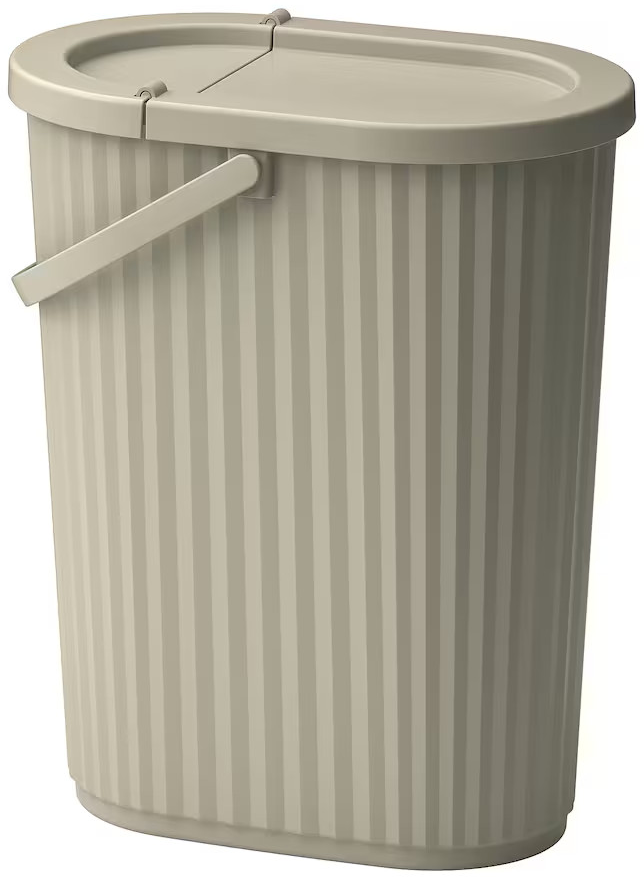

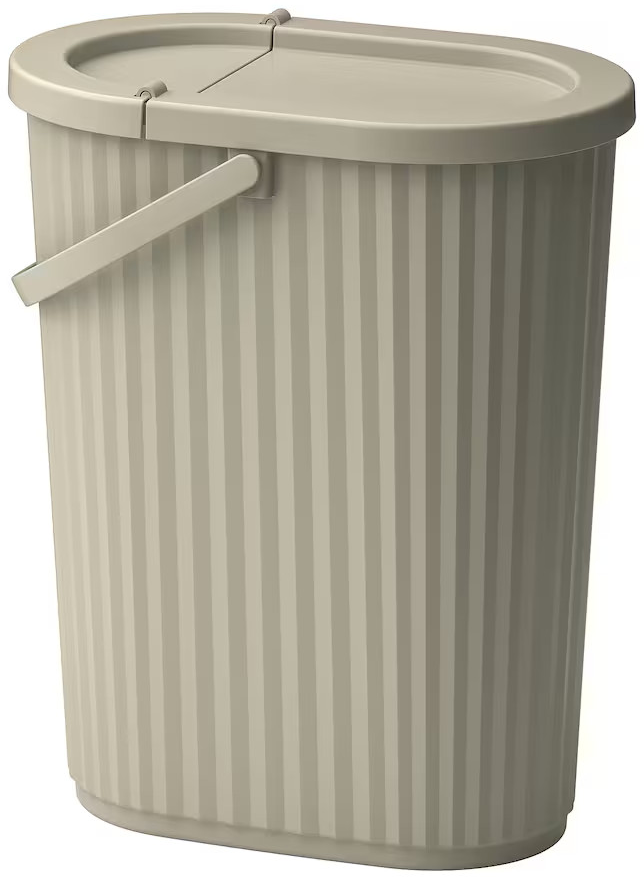

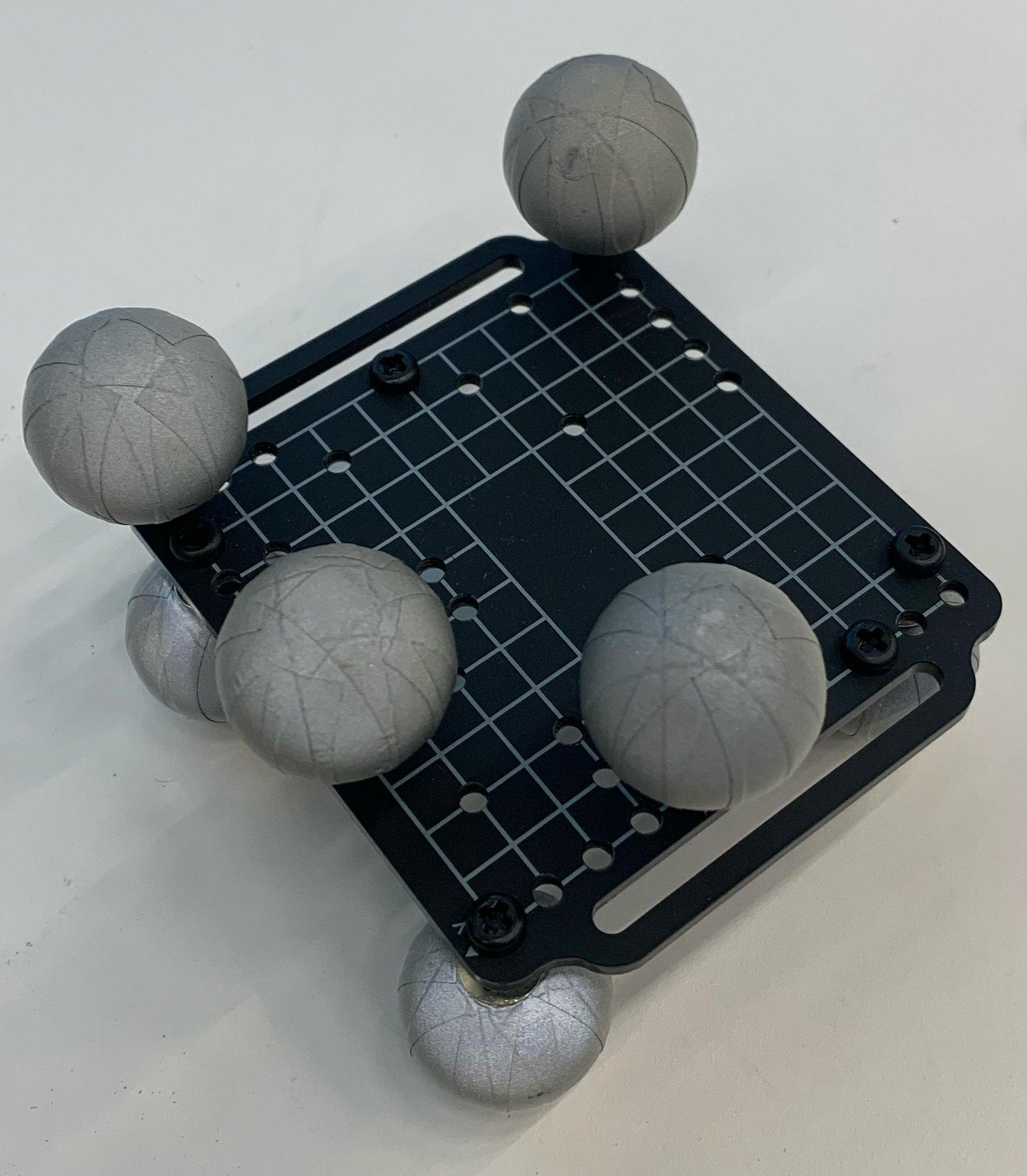

Figure 1: The objects used in our MR6D dataset. These objects are the same as those used in the MTevent dataset.

Dataset Collection Process

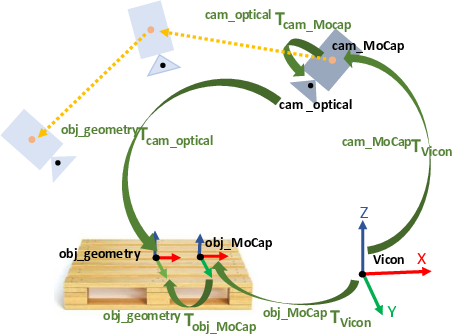

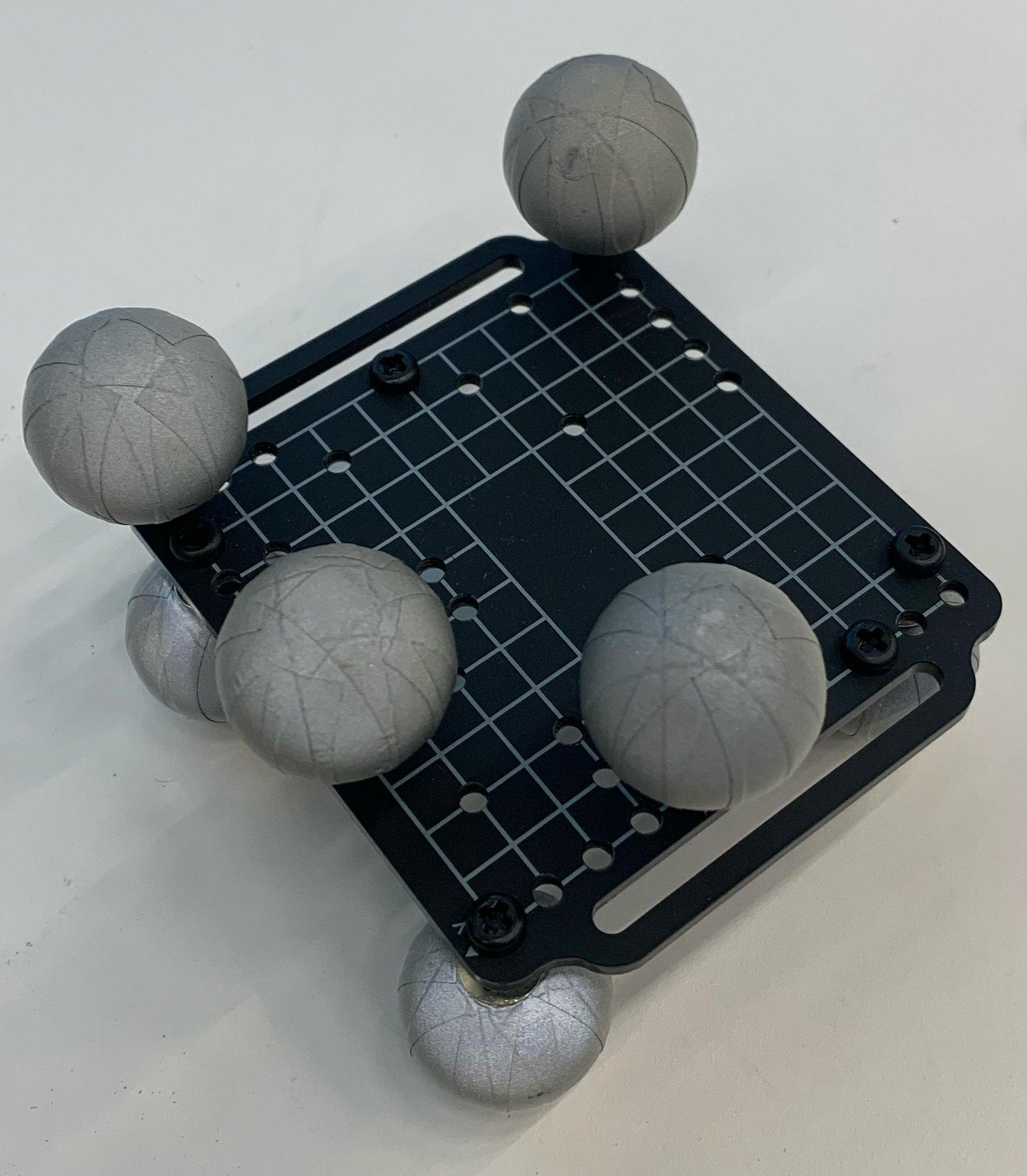

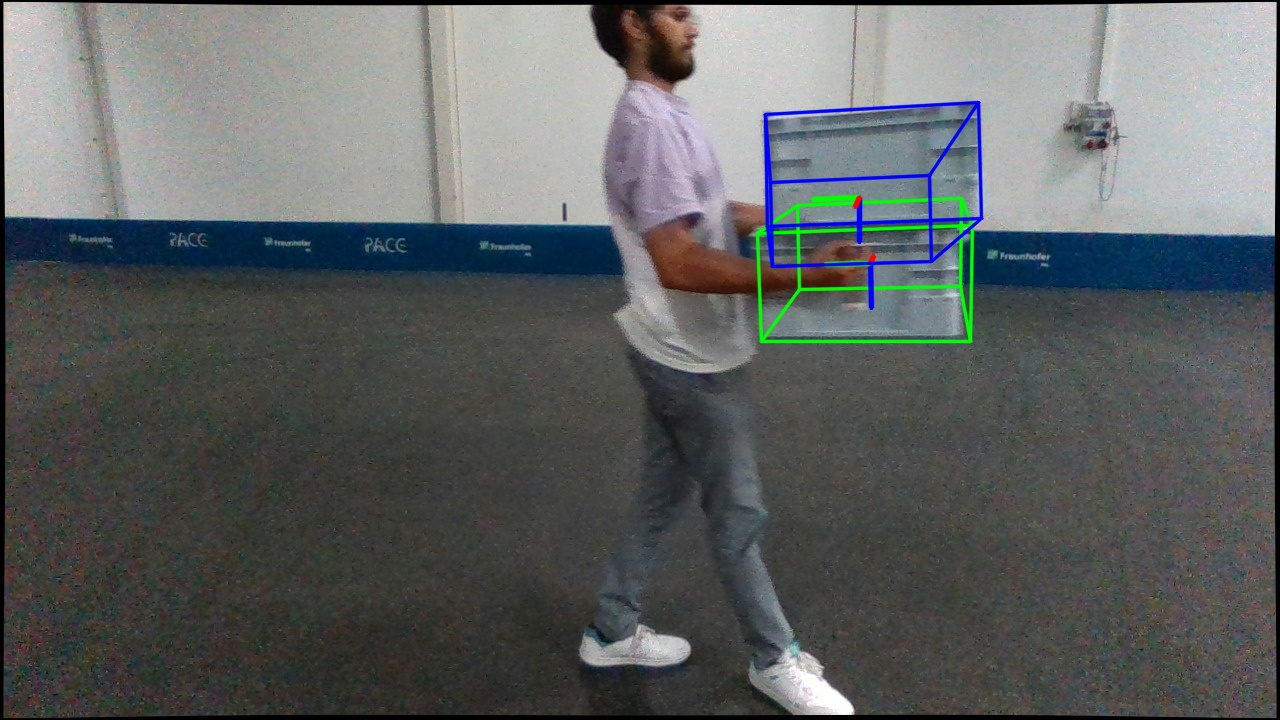

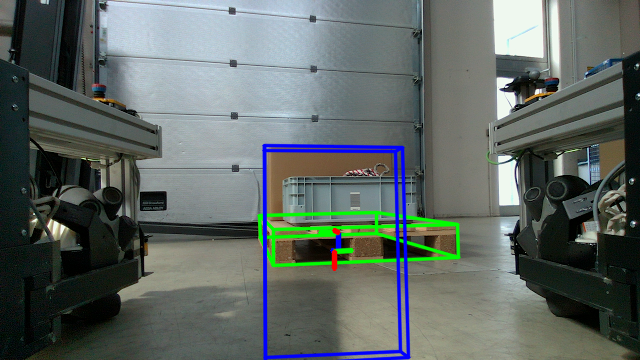

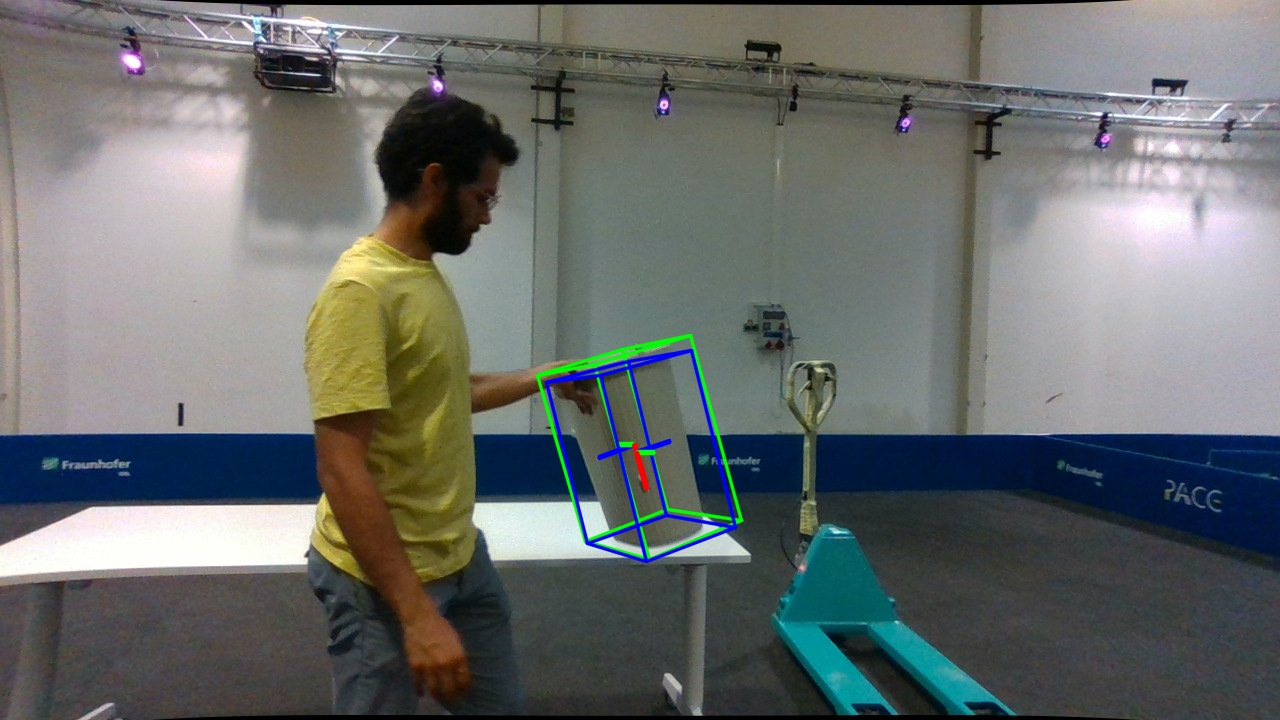

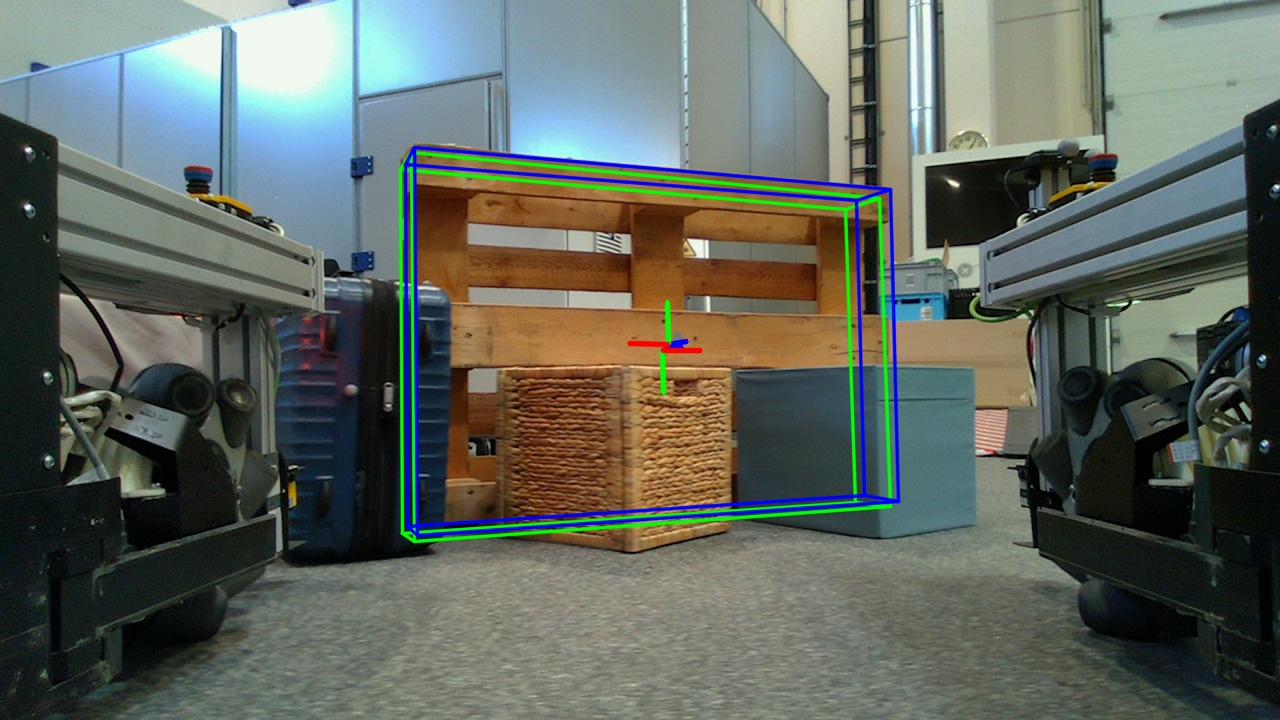

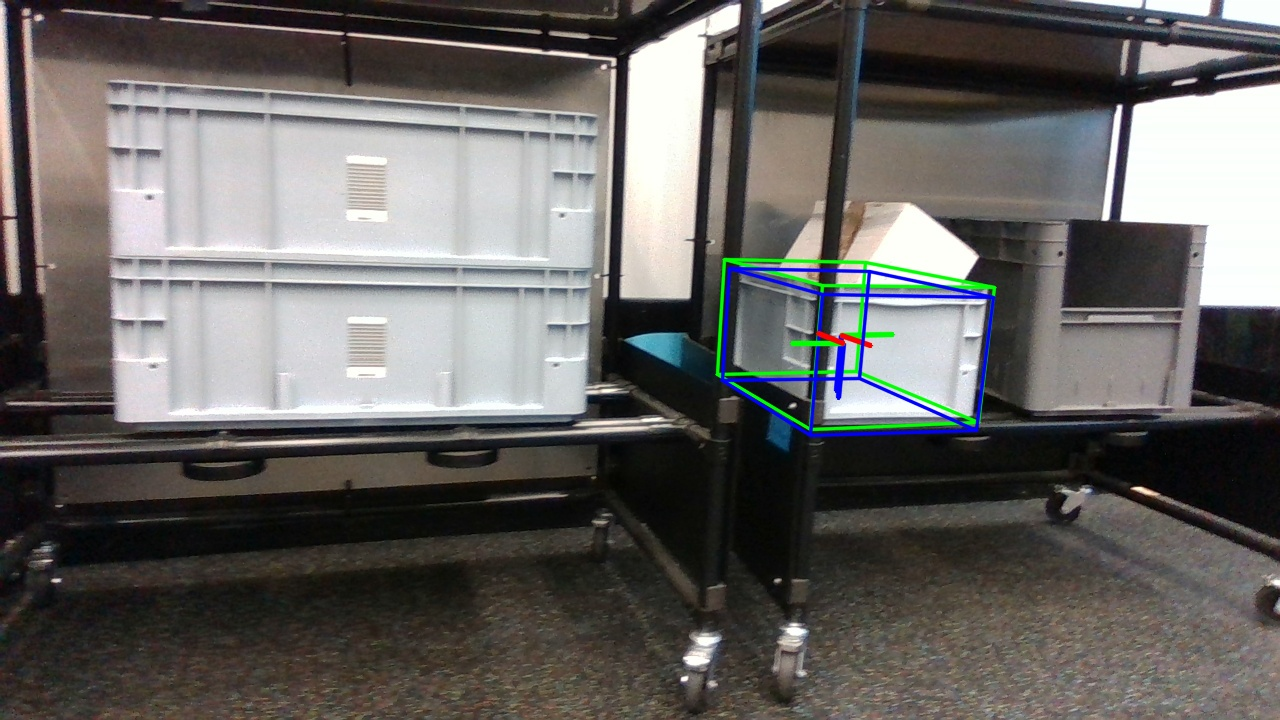

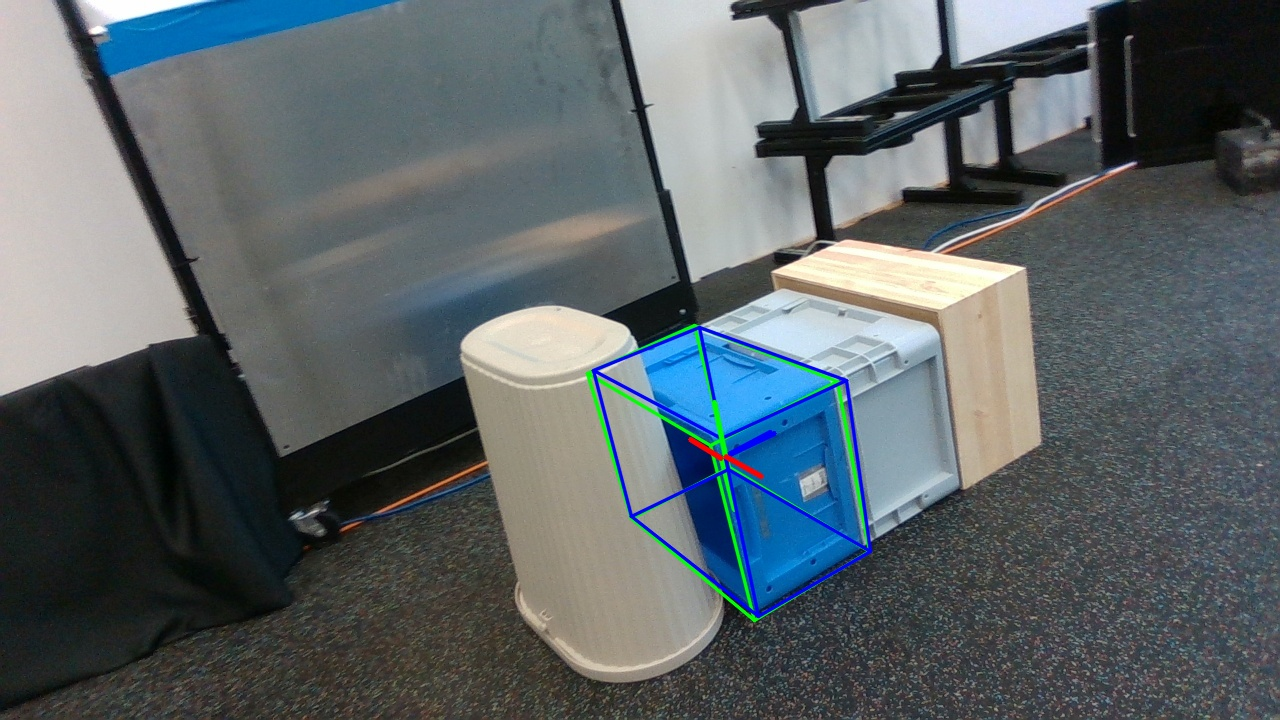

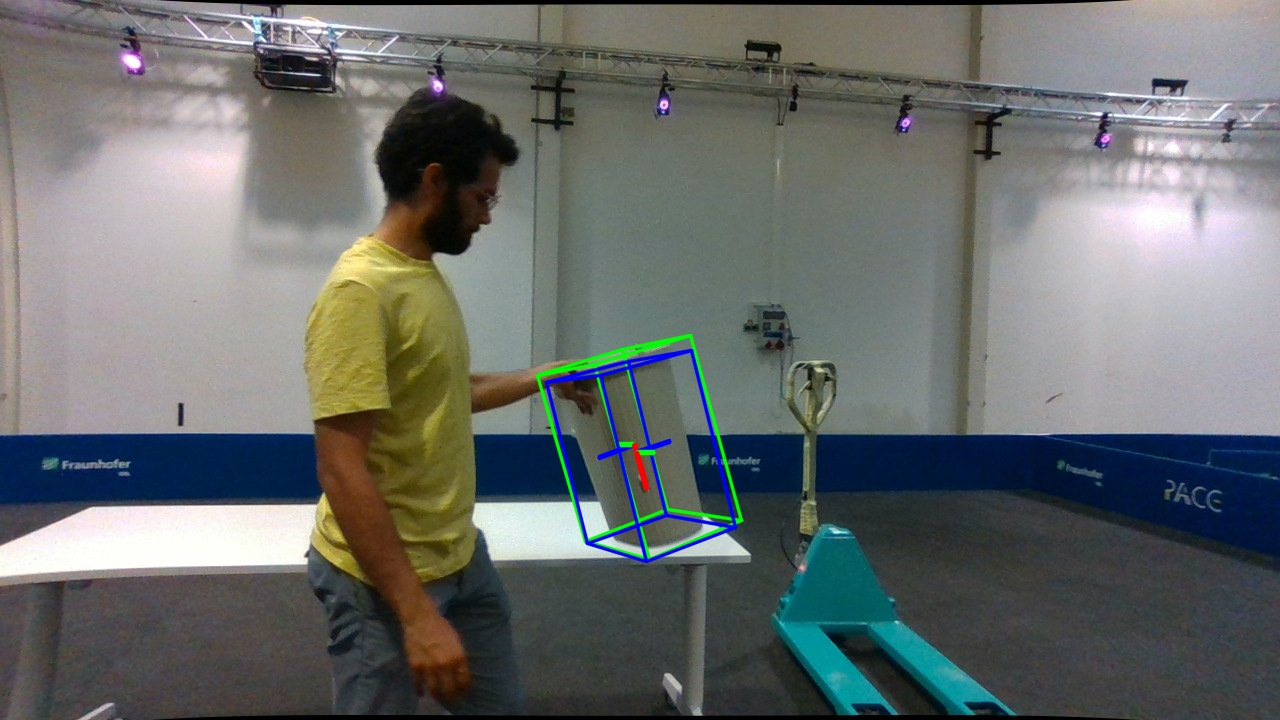

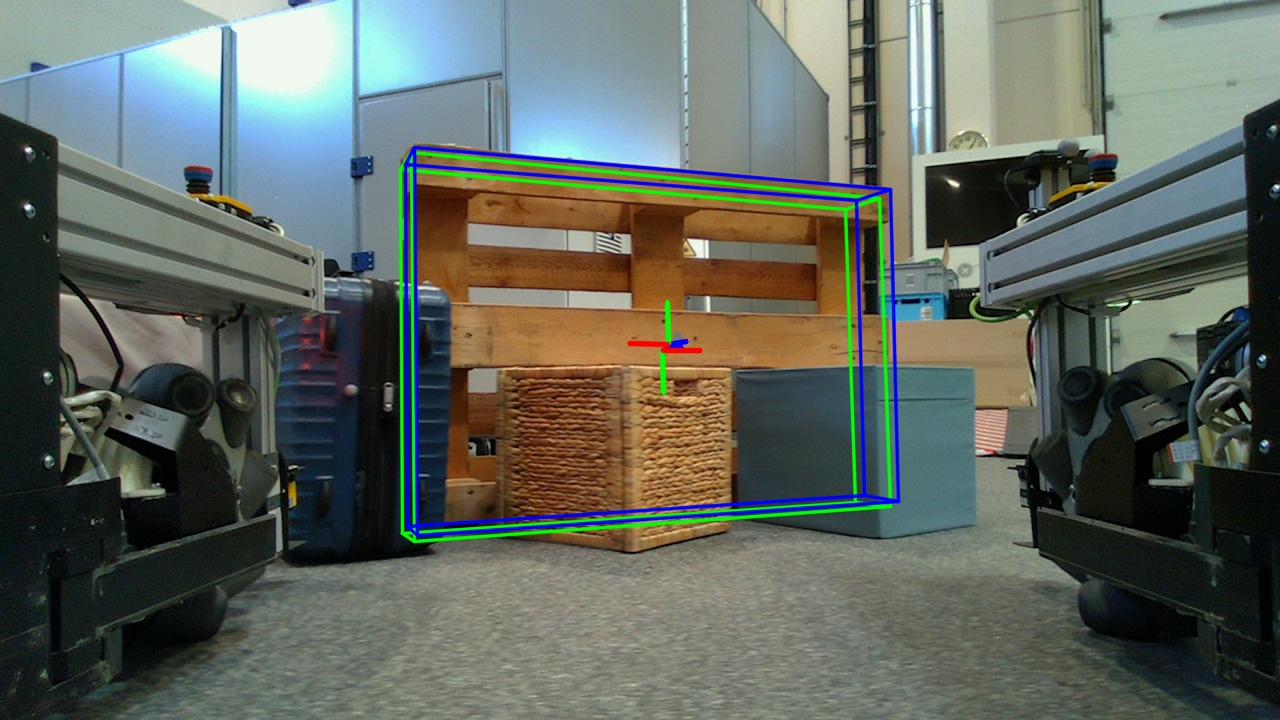

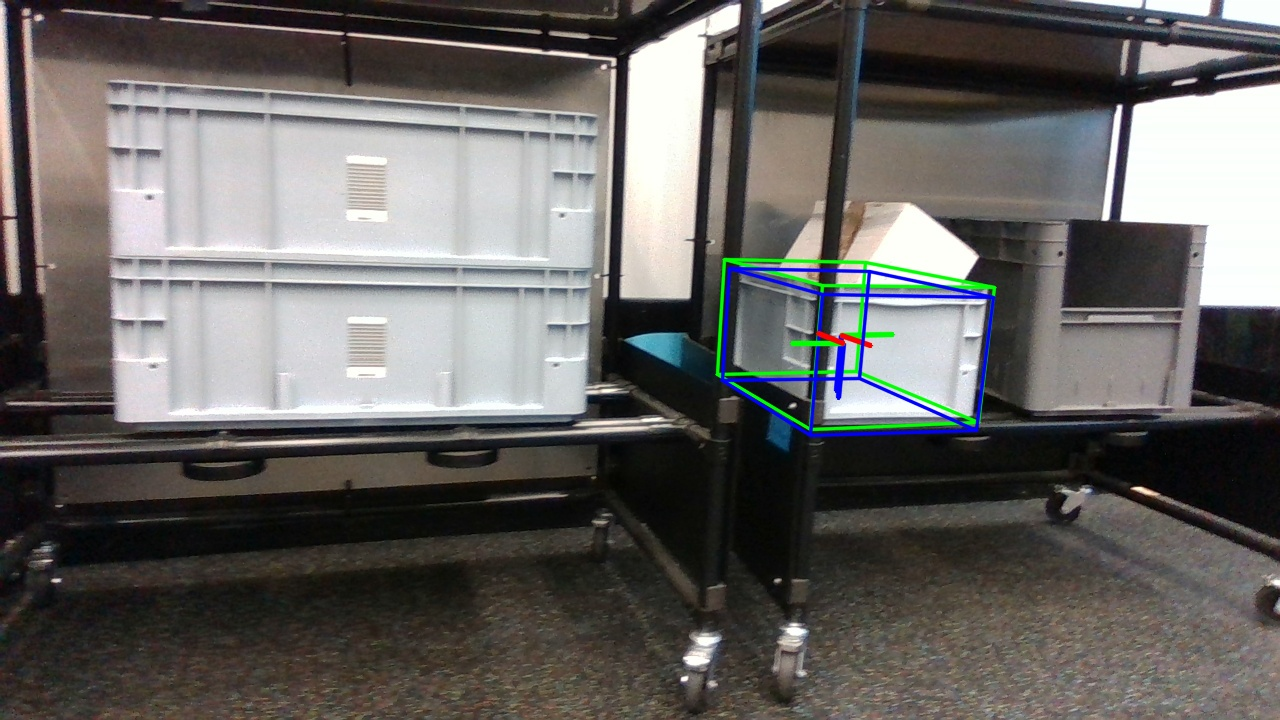

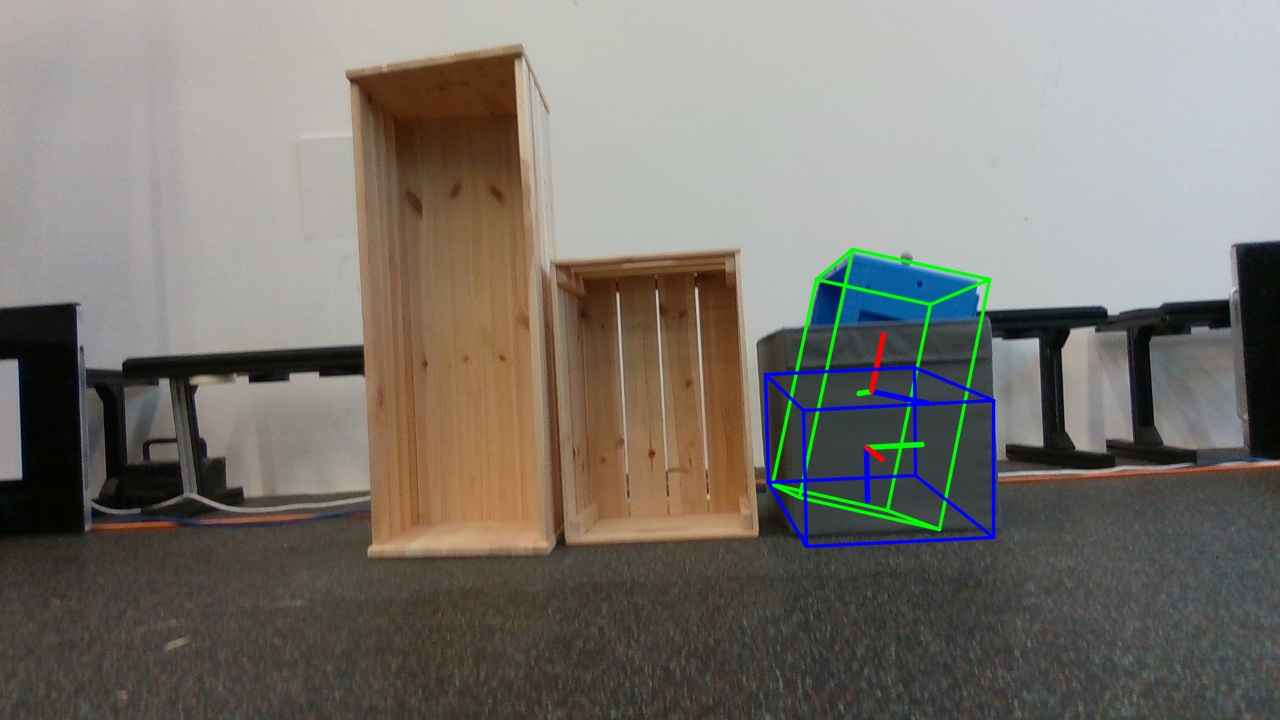

MR6D consists of four subsets: Validation Static, Dynamic Test, O³dyn, and Mobile Robot-Like Static, which together capture both static and dynamic interactions. The Validation subset was recorded with a VICON motion-capture system that tracks the camera accurately, while the Dynamic subset includes human interaction to simulate collaborative robot behavior. For environments without external tracking, the O³dyn subset estimates camera trajectories from odometry and later refines them using 3D reconstruction from the VGGT model. Each subset presents distinct challenges that reflect the diverse scenarios mobile robots encounter.
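The odometry step for O³dyn-style sequences can be sketched as follows, assuming a planar robot described by a unicycle model and a camera rigidly mounted on the robot base with a known extrinsic. The function names and the `T_base_cam` extrinsic are hypothetical illustrations; the VGGT-based refinement is not shown.

```python
import numpy as np


def planar_pose_to_matrix(x: float, y: float, theta: float) -> np.ndarray:
    """Lift a planar robot pose (x, y, yaw) to a 4x4 world-from-base transform."""
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:2, :2] = [[c, -s], [s, c]]
    T[:2, 3] = [x, y]
    return T


def integrate_odometry(v, omega, dt):
    """Dead-reckon (x, y, theta) from forward speeds v [m/s] and yaw rates omega [rad/s].

    Uses a unicycle model with a simple Euler step; errors accumulate as drift
    over long sequences.
    """
    x = y = theta = 0.0
    poses = [(x, y, theta)]
    for v_i, w_i in zip(v, omega):
        x += v_i * np.cos(theta) * dt
        y += v_i * np.sin(theta) * dt
        theta += w_i * dt
        poses.append((x, y, theta))
    return poses


def camera_trajectory(poses, T_base_cam):
    """World-from-camera poses: T_world_cam = T_world_base @ T_base_cam (fixed extrinsic)."""
    return [planar_pose_to_matrix(*p) @ T_base_cam for p in poses]
```

For example, driving straight at 1 m/s for 1 s with a camera mounted 0.5 m ahead of and 1.0 m above the base origin ends with the camera at (1.5, 0, 1.0). A real extrinsic would also include the rotation into the camera's optical frame, which this sketch leaves out.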

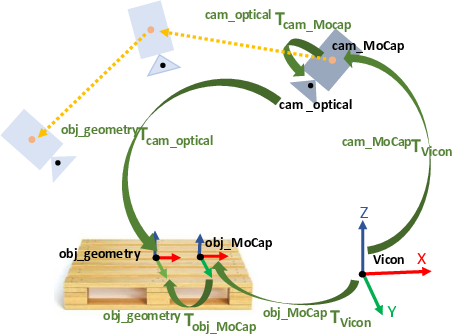

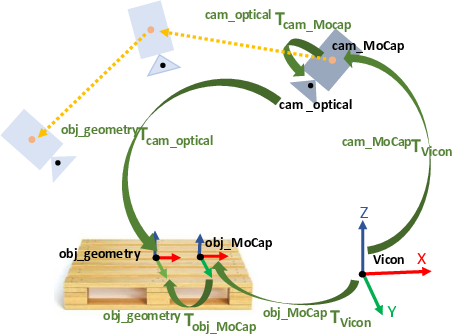

Figure 2: Coordinate transformations used for accurate annotation in validation and dynamic scenes.
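The text does not spell out the exact chain in Figure 2. A common setup, assumed here, is that VICON reports the pose of a marker body attached to the camera, a hand-eye style calibration relates that marker body to the camera's optical frame, and each object is annotated once in the tracking (world) frame. Under those assumptions, the sketch below re-expresses an object pose in the camera frame and projects model points with a pinhole intrinsic matrix `K`; all names are hypothetical.

```python
import numpy as np


def invert_transform(T: np.ndarray) -> np.ndarray:
    """Invert a rigid 4x4 transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def object_in_camera(T_world_marker, T_marker_cam, T_world_obj):
    """Chain tracked and calibrated poses into the camera-from-object pose.

    T_world_marker: tracked pose of the marker body on the camera (assumed VICON output)
    T_marker_cam:   marker-to-optical-frame calibration (assumed hand-eye result)
    T_world_obj:    annotated object pose in the tracking (world) frame
    """
    T_world_cam = T_world_marker @ T_marker_cam
    return invert_transform(T_world_cam) @ T_world_obj


def project_points(points_obj, T_cam_obj, K):
    """Project Nx3 model points into pixel coordinates with a pinhole intrinsic matrix K."""
    pts_h = np.hstack([points_obj, np.ones((len(points_obj), 1))])
    pts_cam = (T_cam_obj @ pts_h.T)[:3]
    uv = K @ pts_cam
    return (uv[:2] / uv[2]).T
```

For a static object, annotating it once in the world frame is enough to obtain its pose in every tracked frame. Overlaying the projected model points on the image is one common way to check such an annotation visually.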

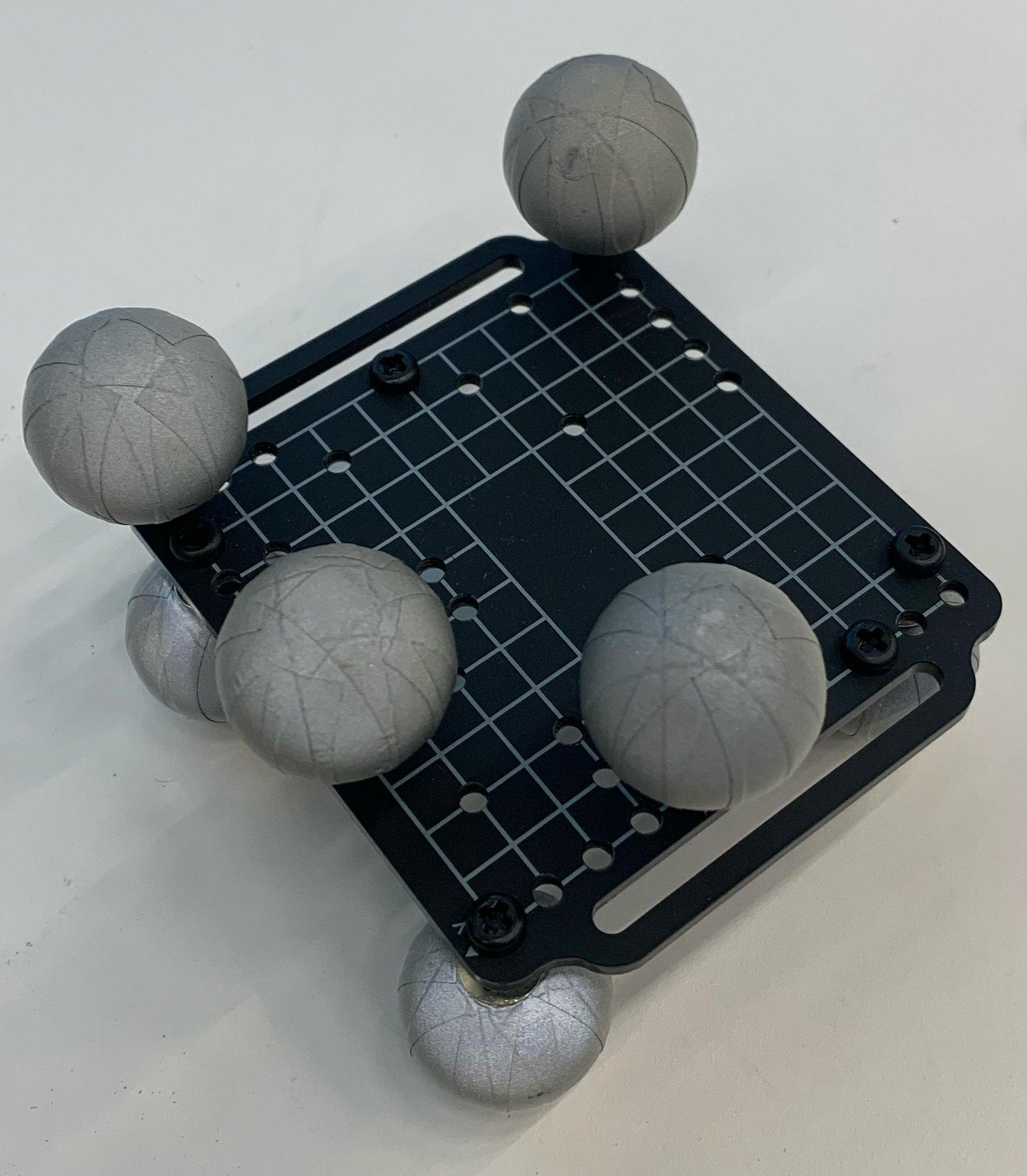

Figure 3: Annotating object 6D pose in the validation subset.

Data Statistics and Object Modeling

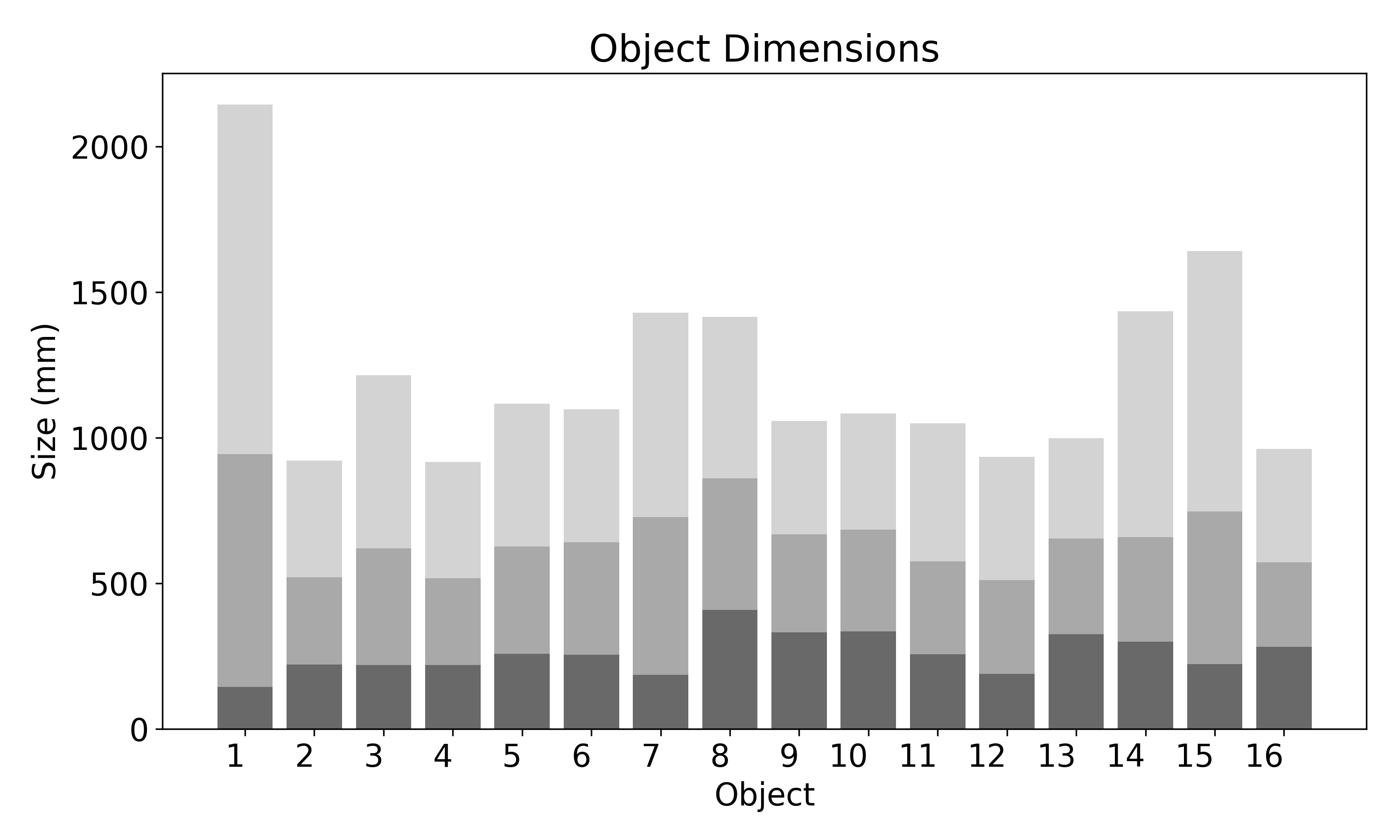

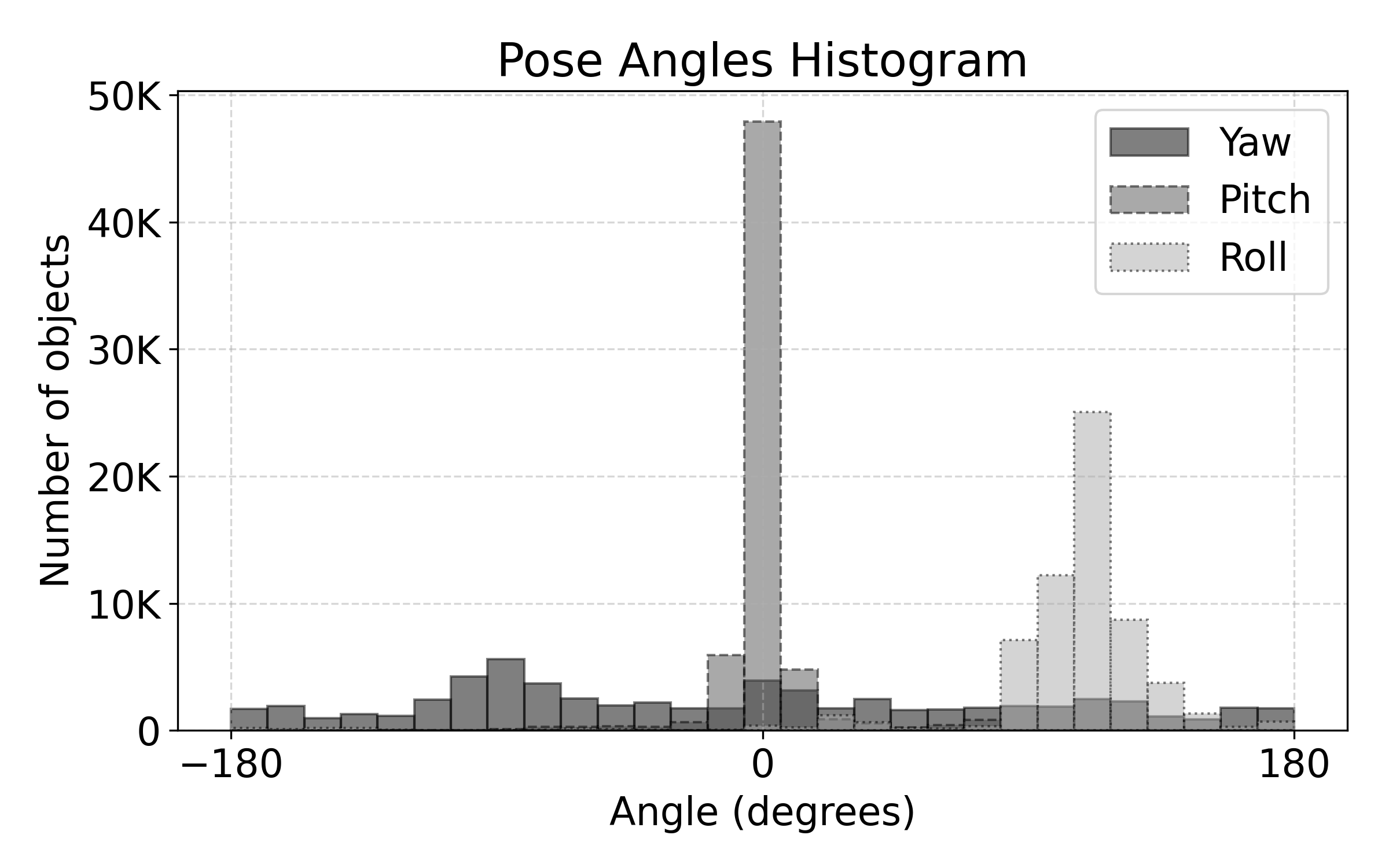

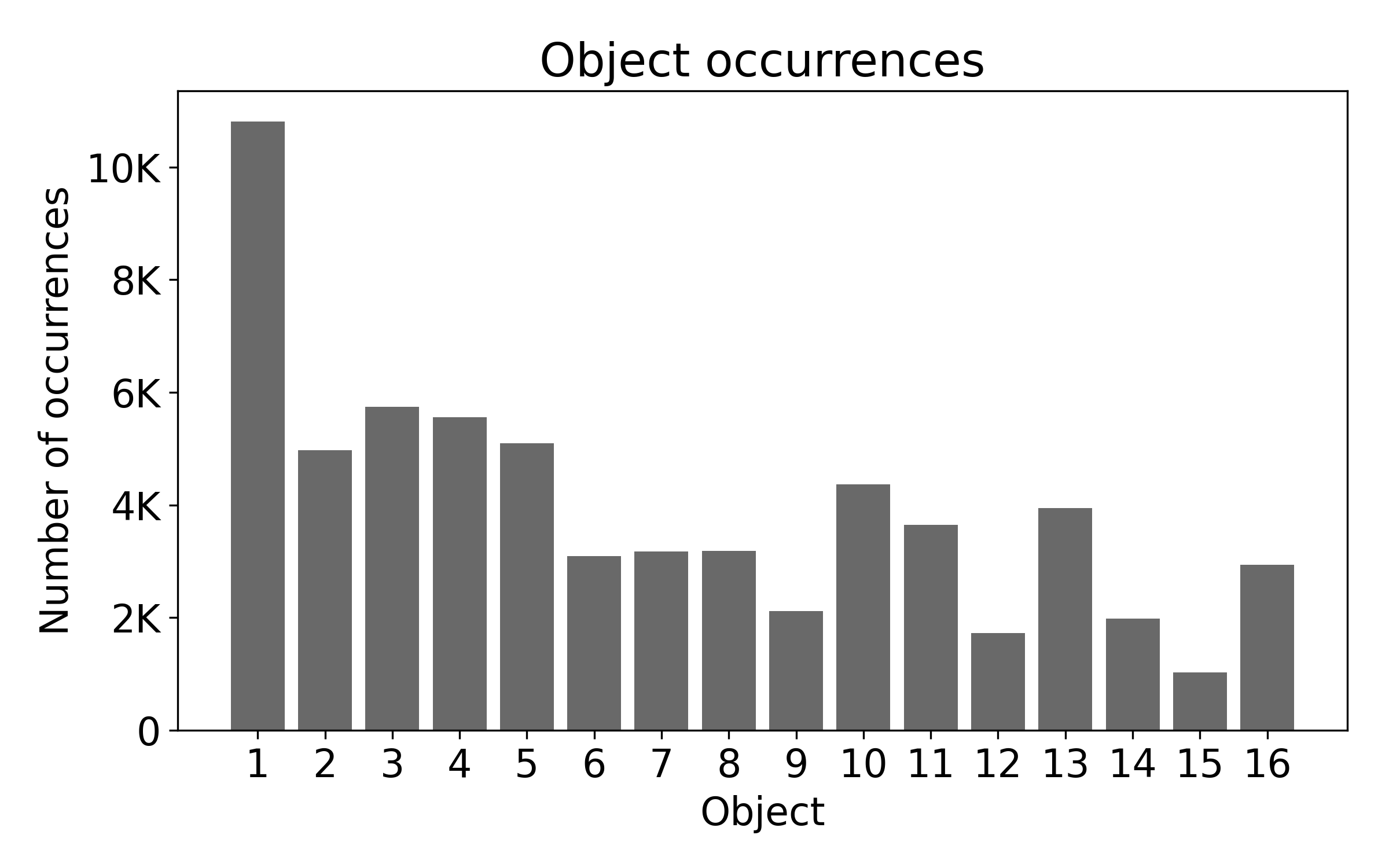

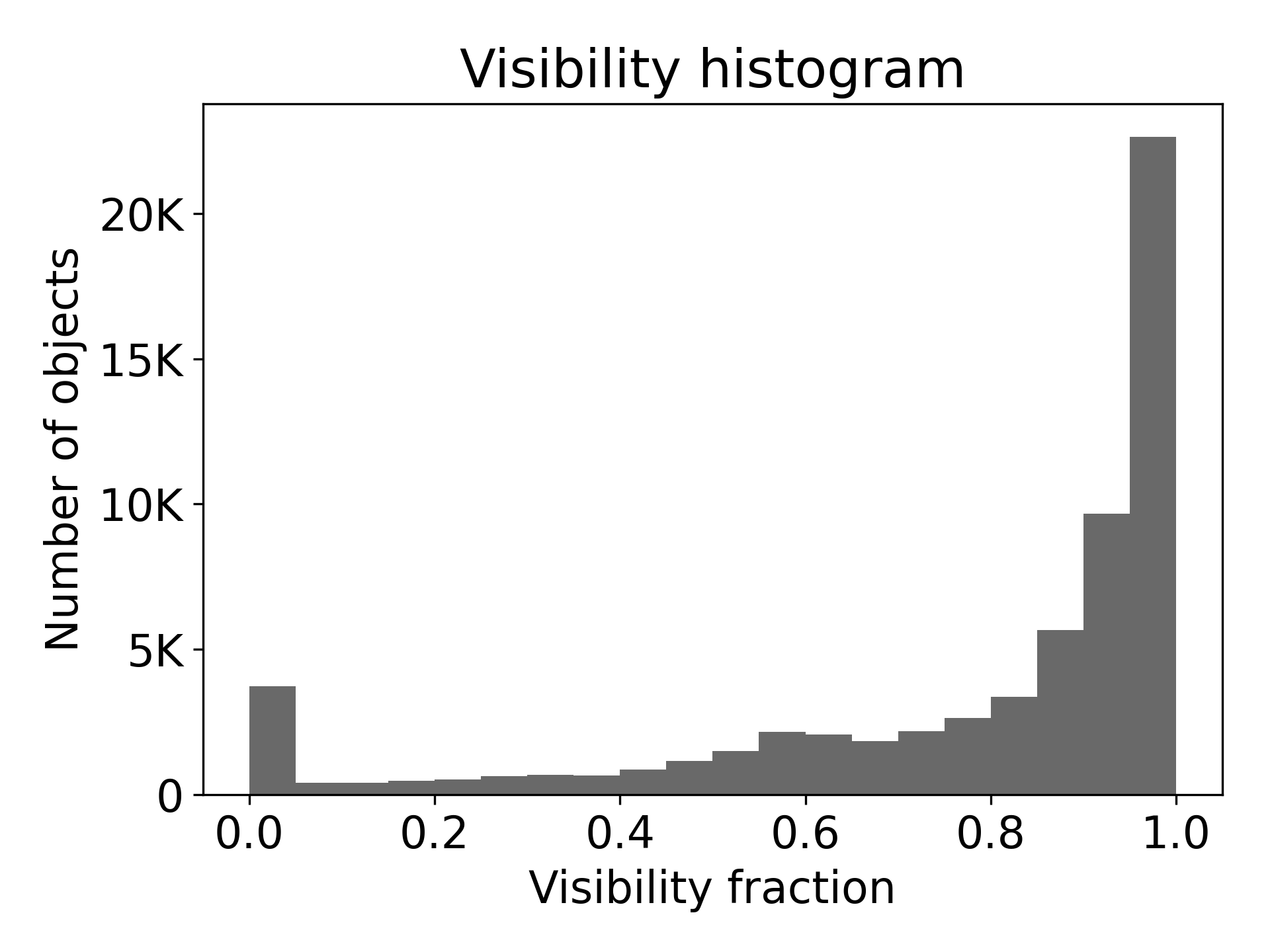

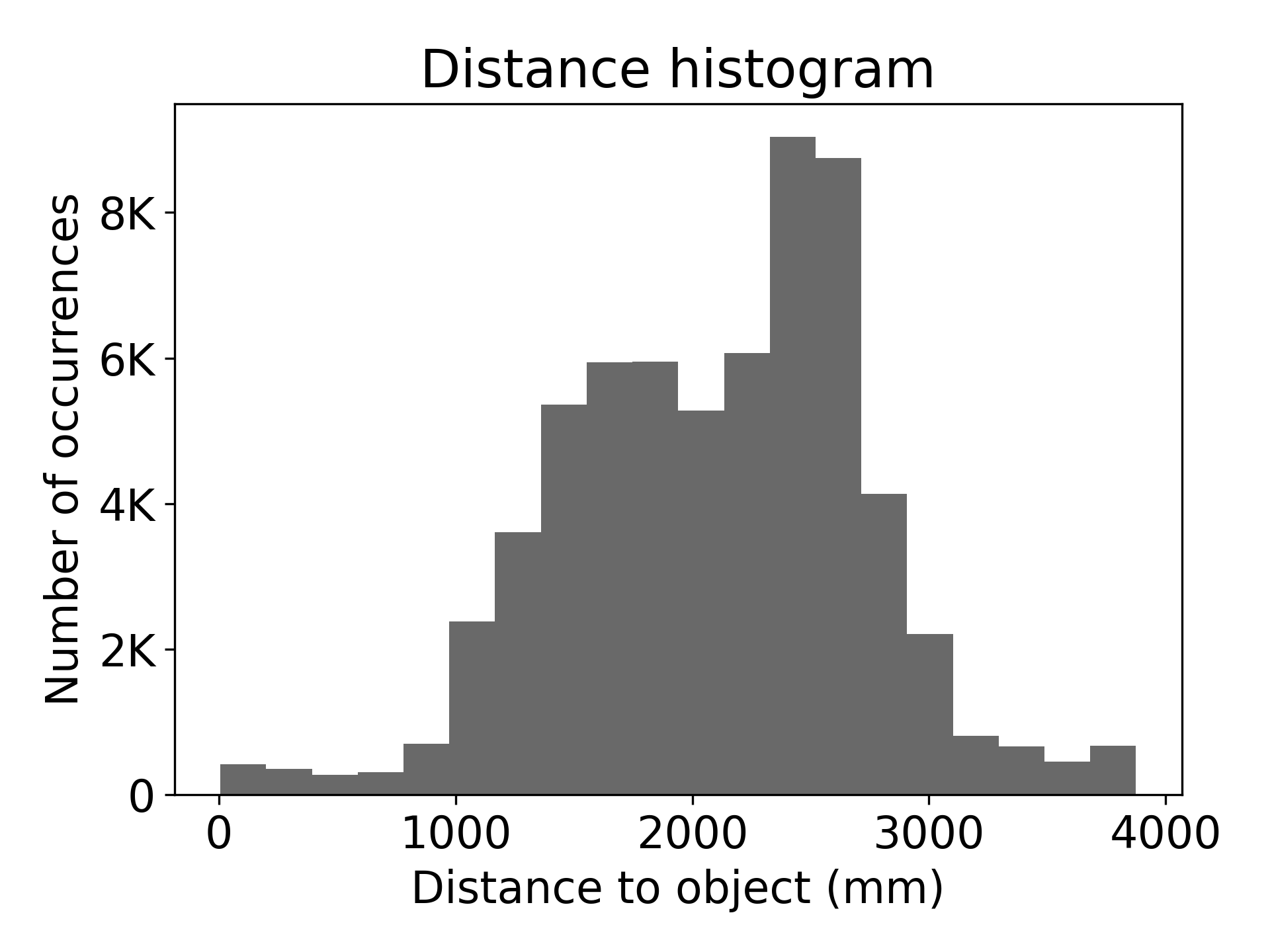

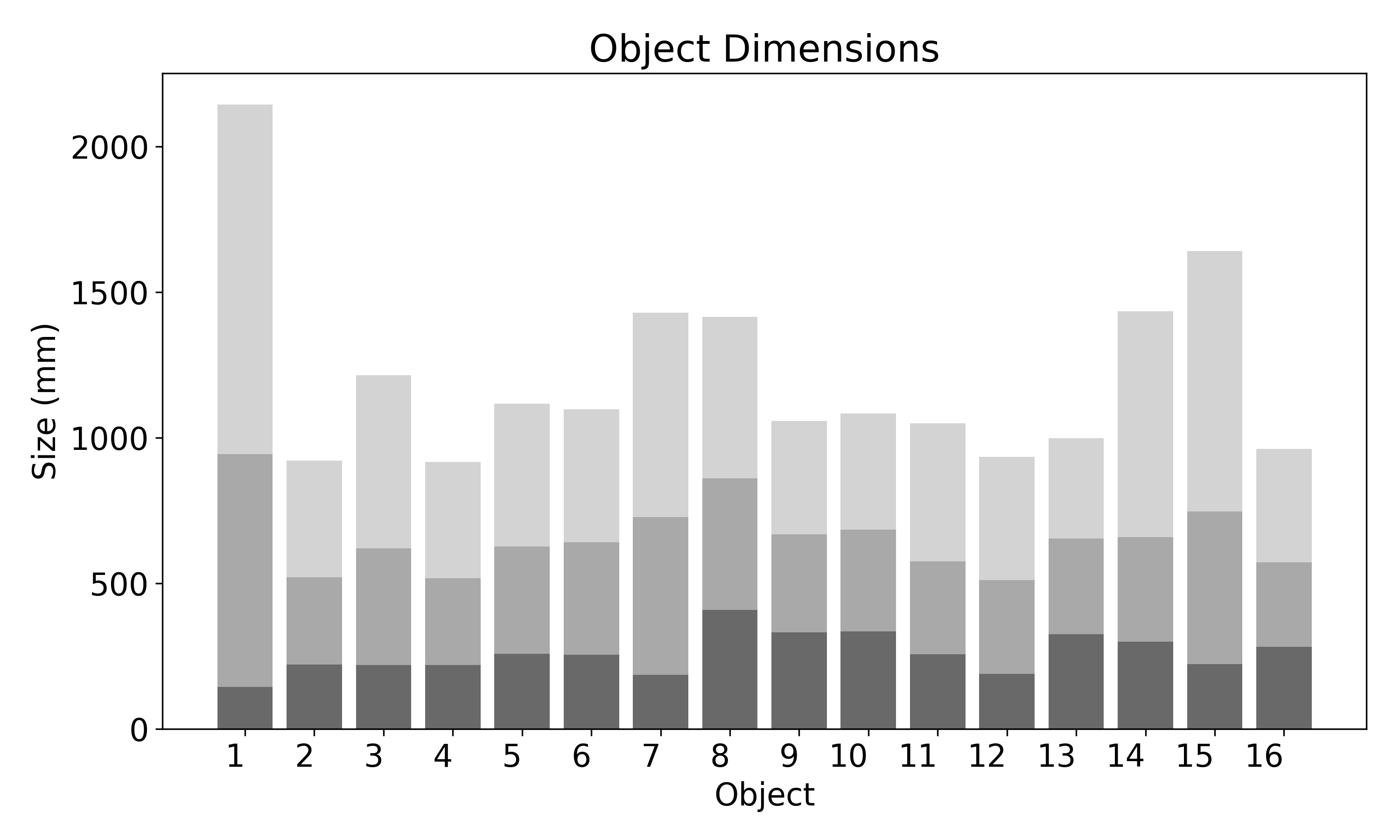

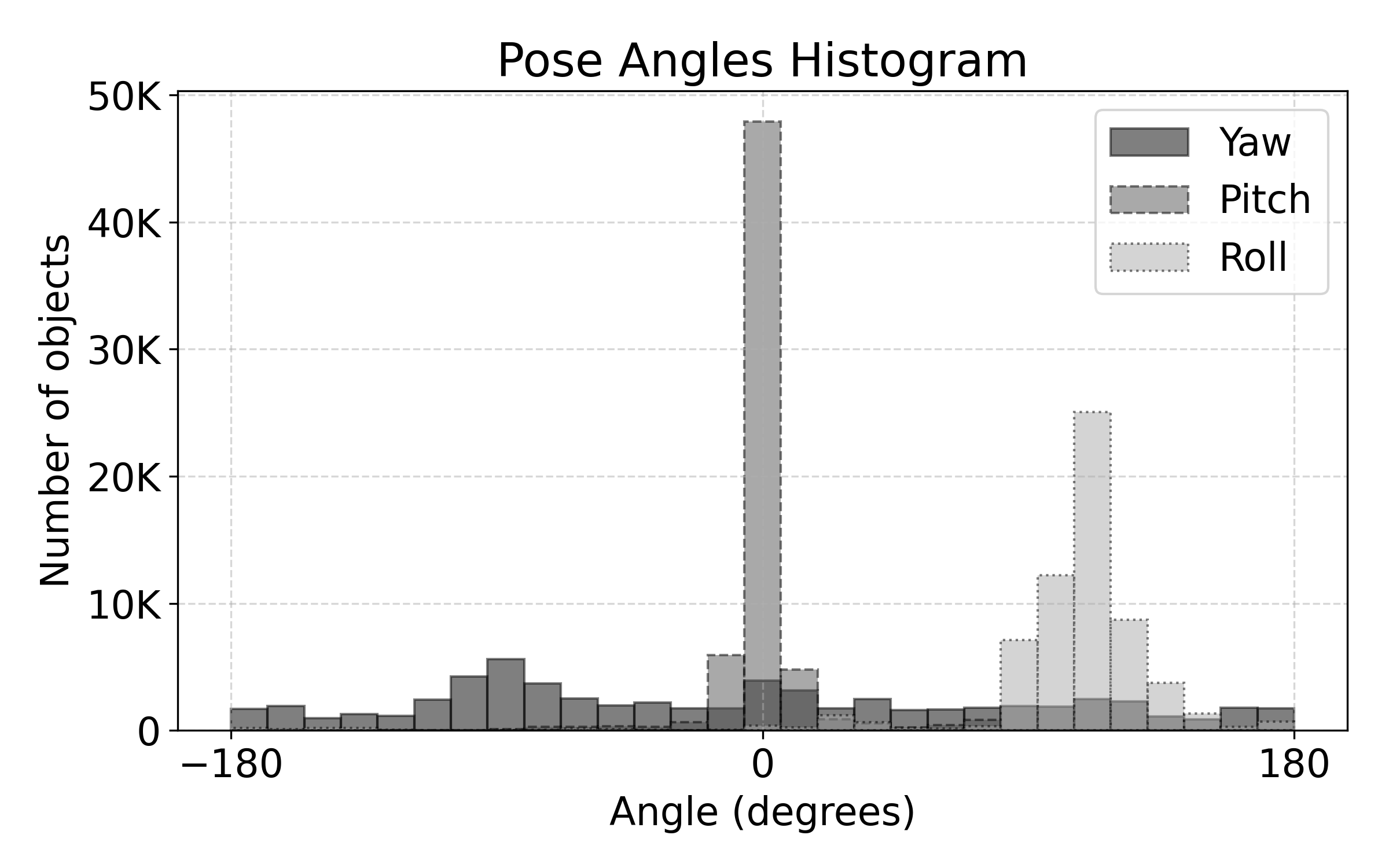

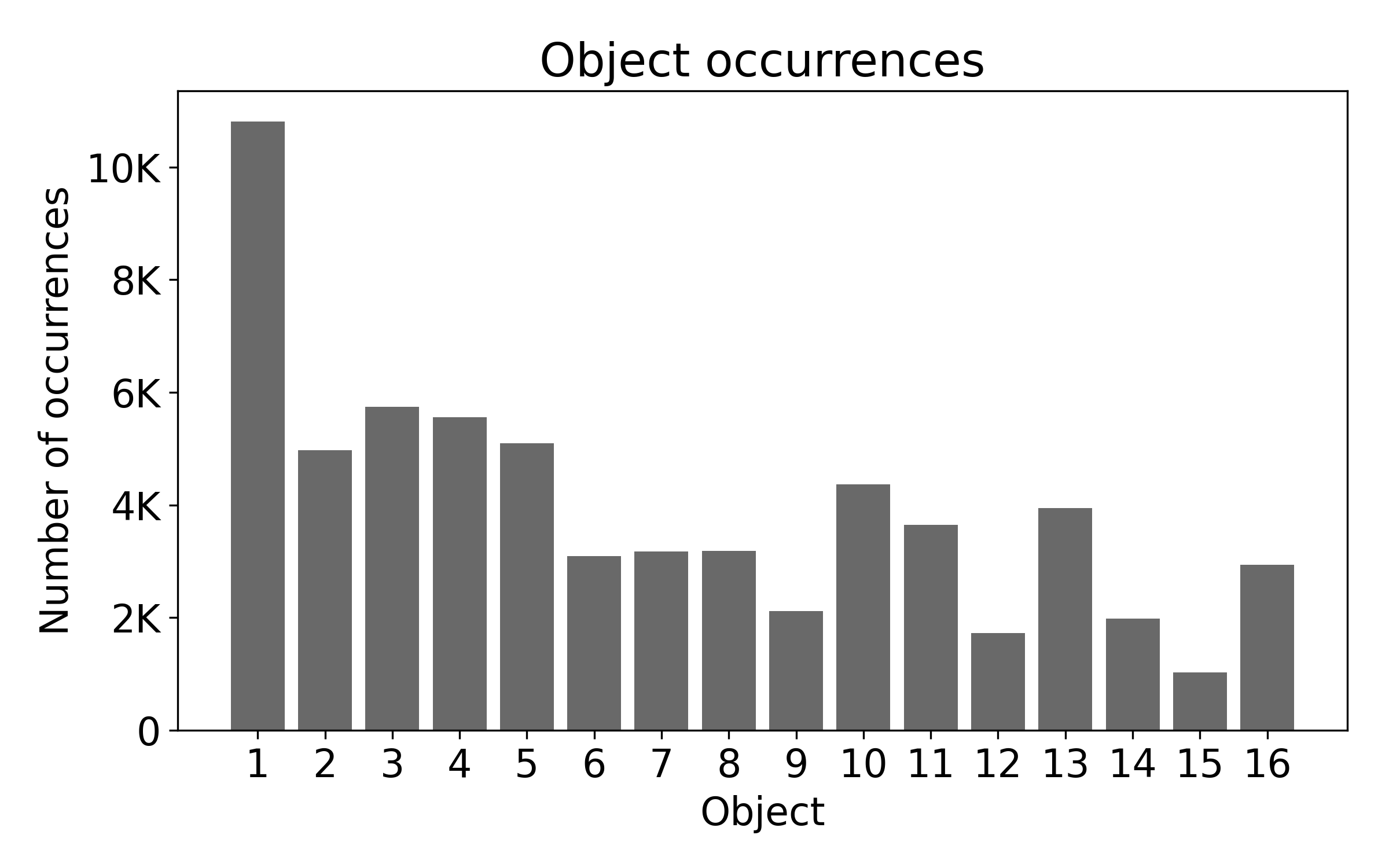

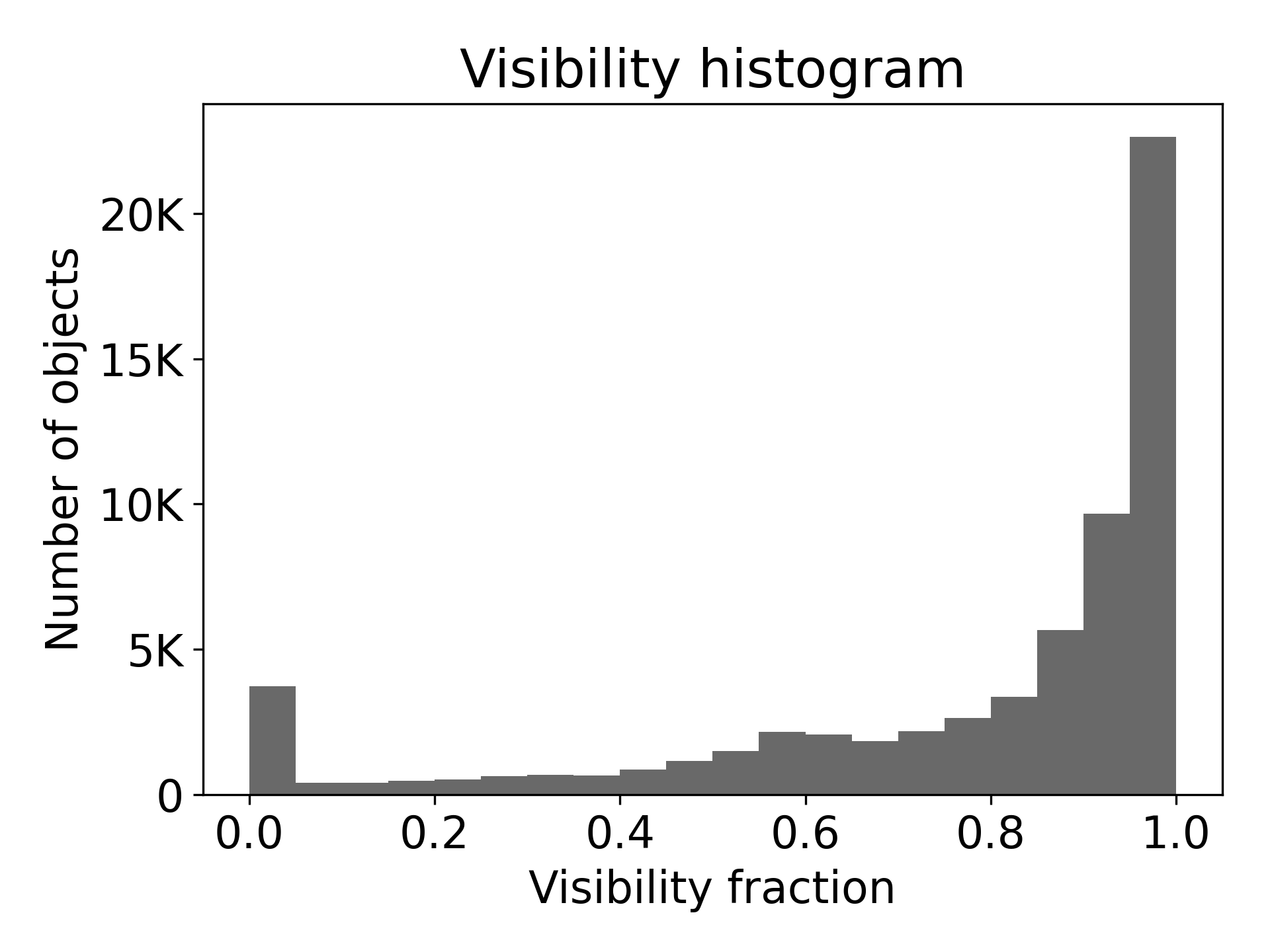

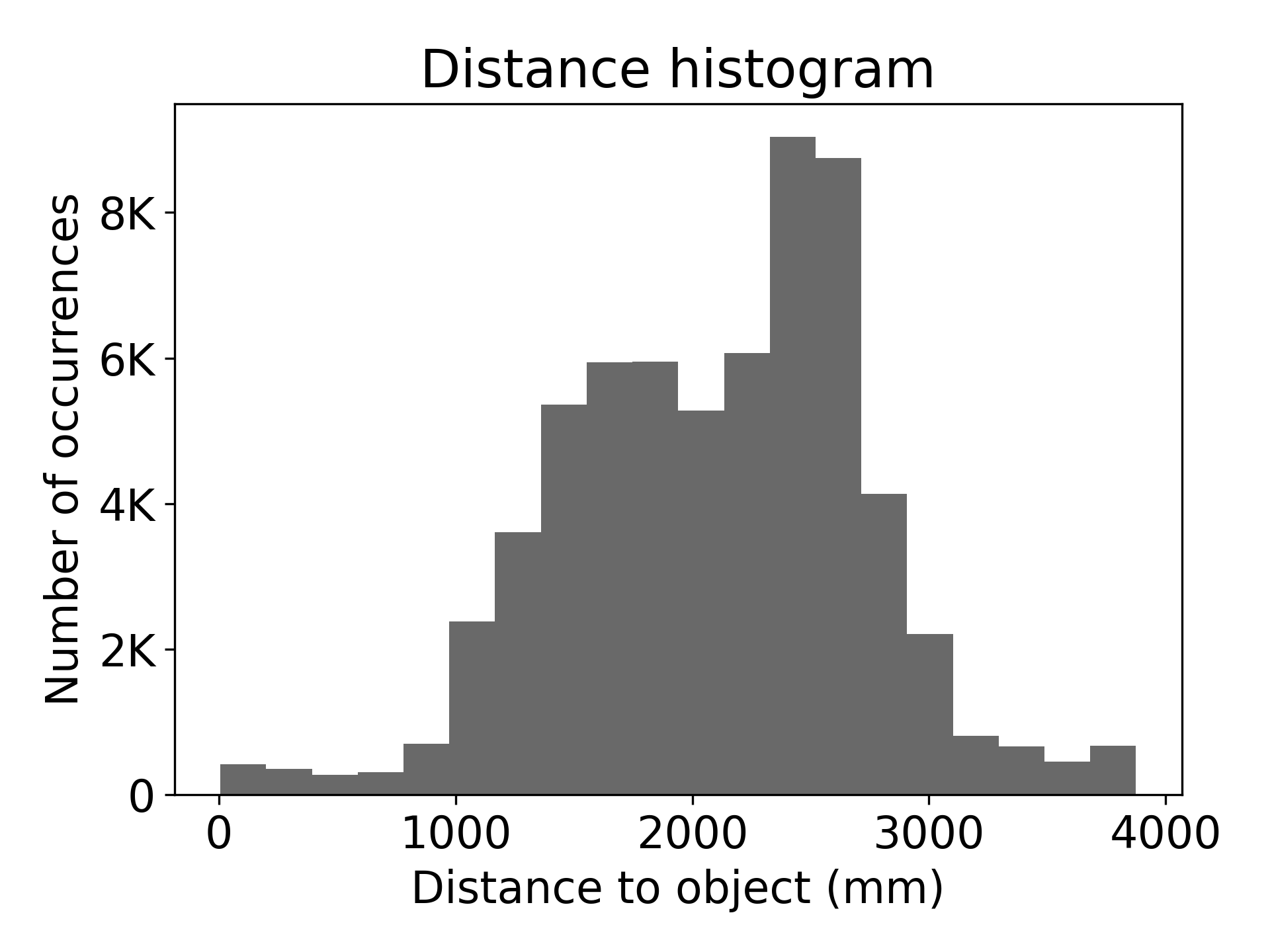

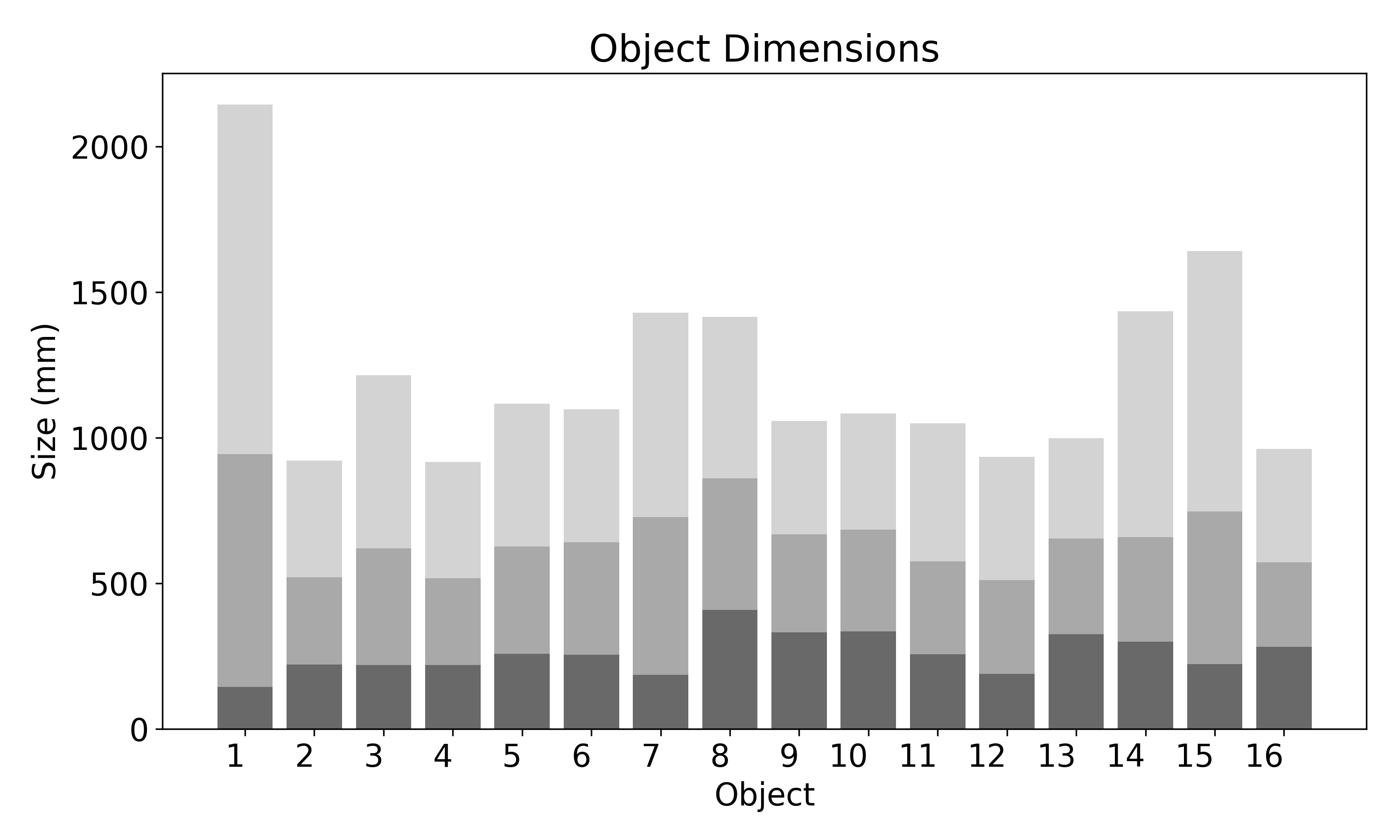

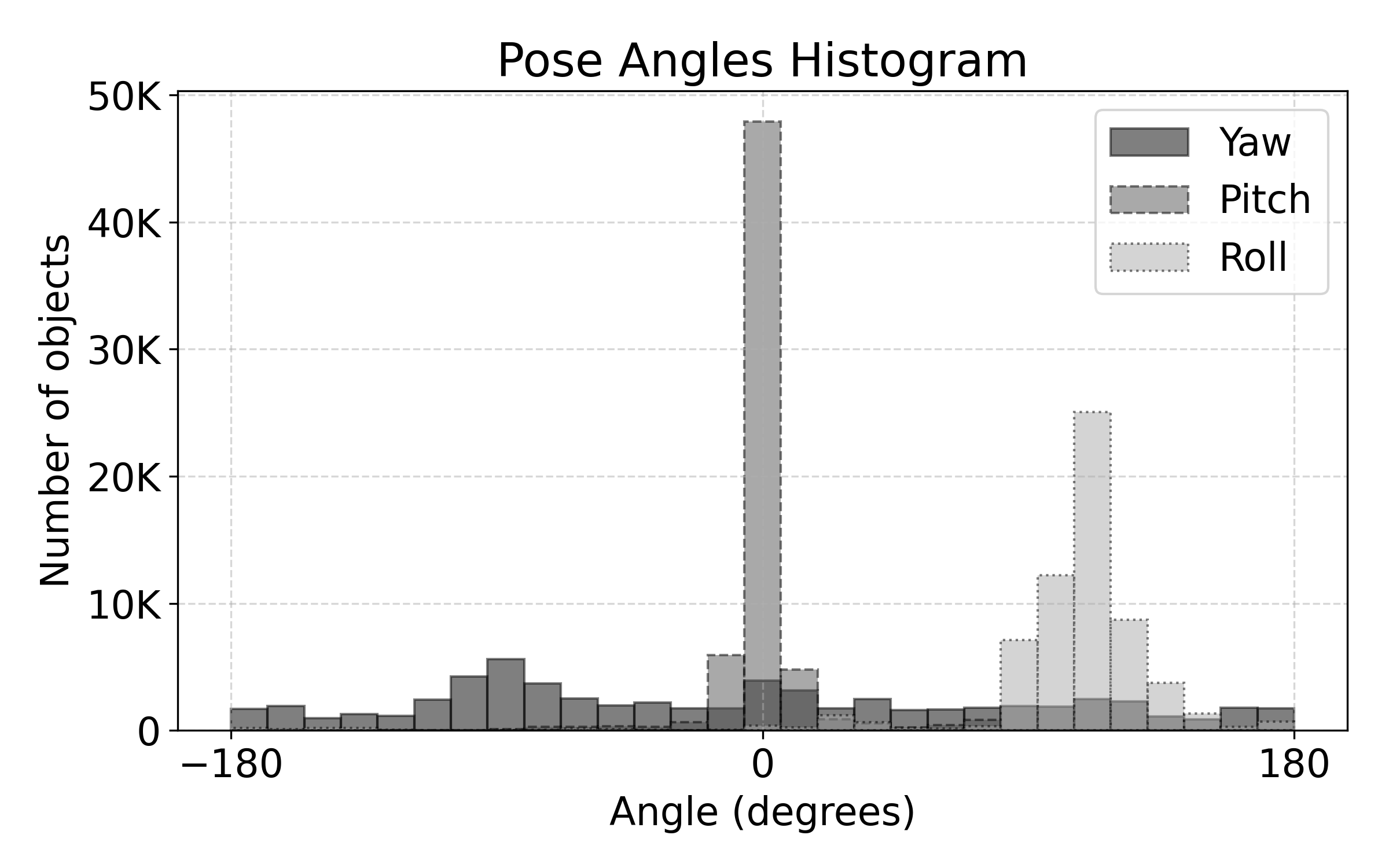

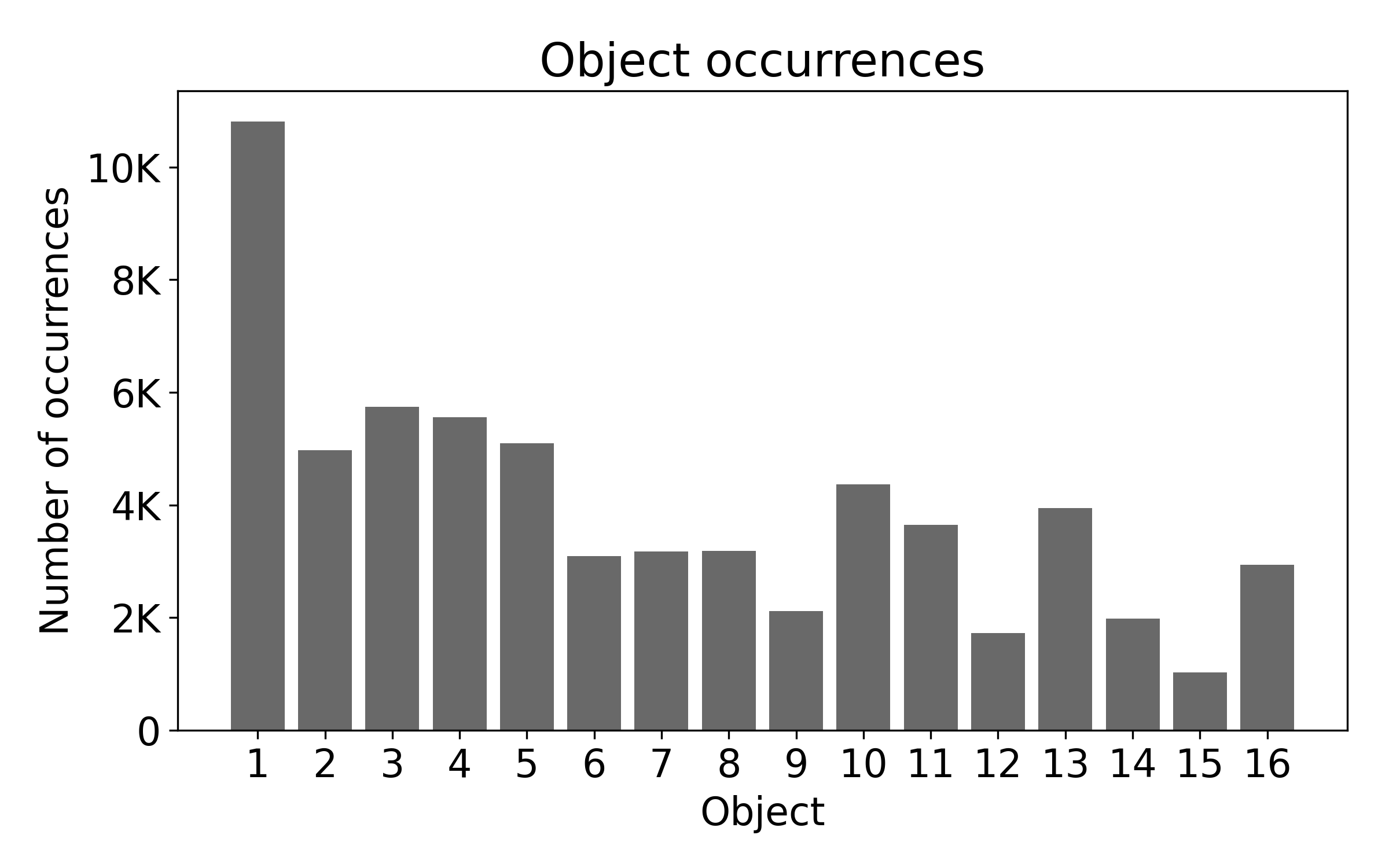

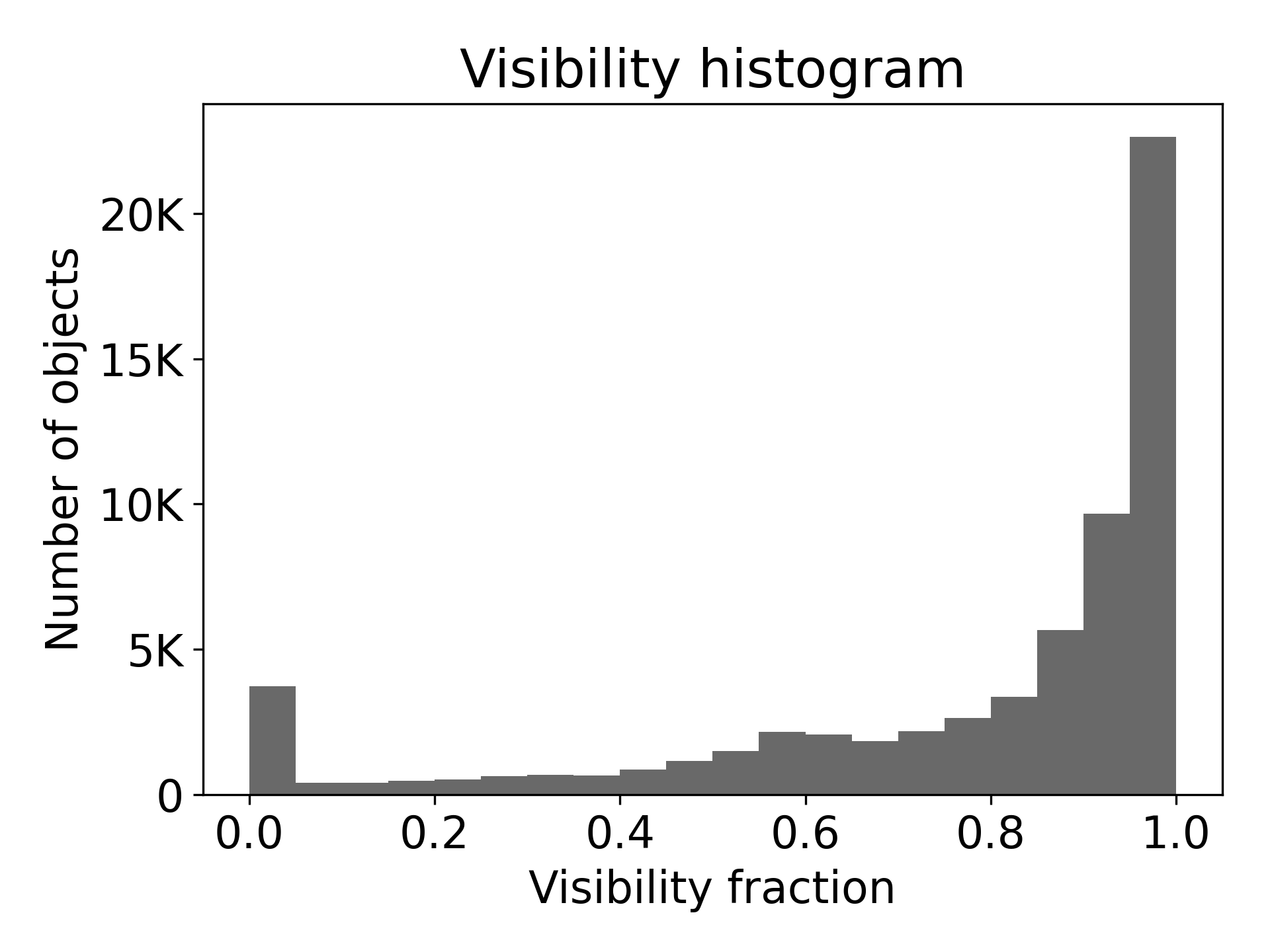

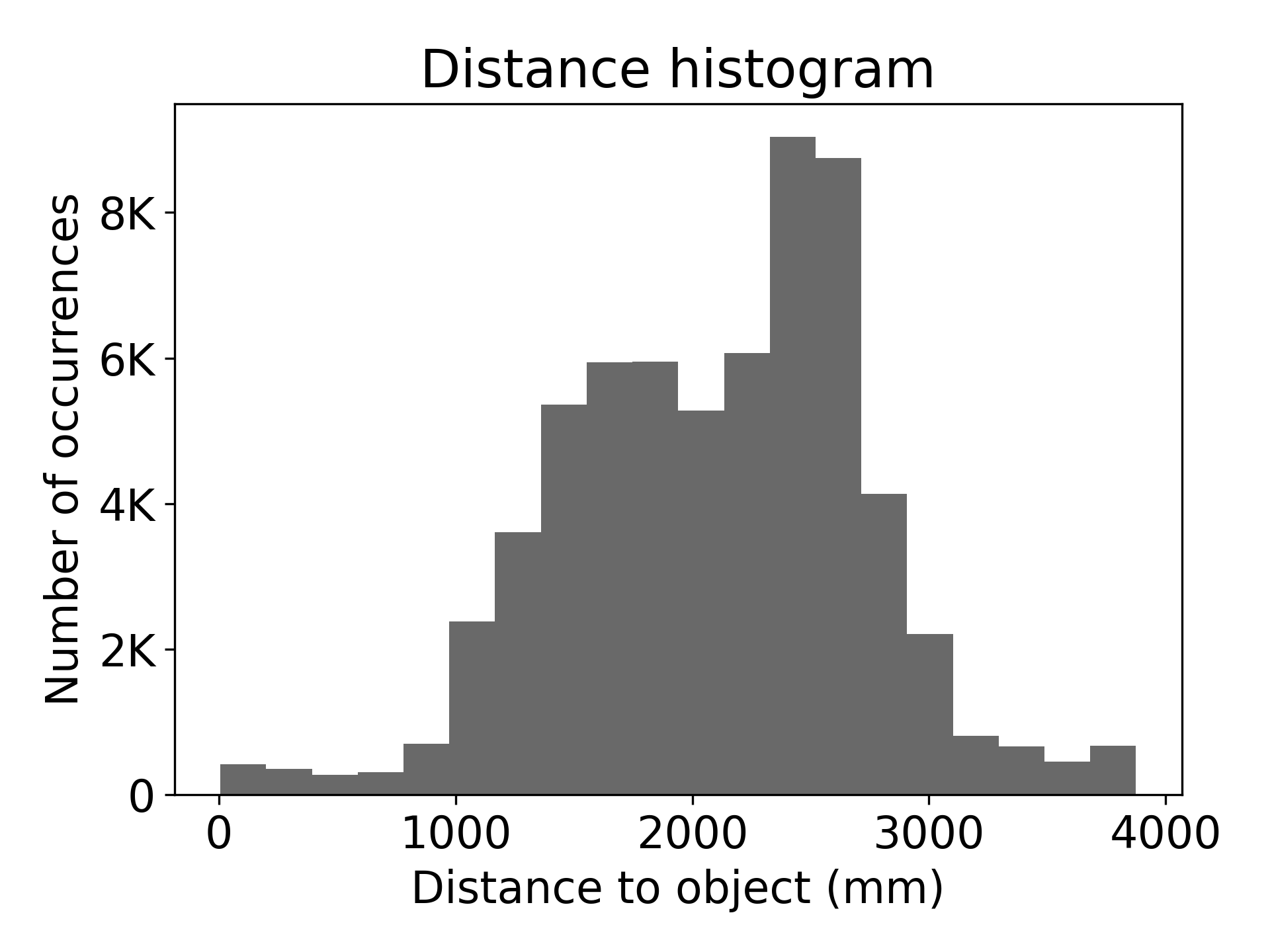

The dataset objects include common industrial items such as Euro pallets and KLT bins, each represented by a high-quality 3D mesh. Objects were selected because they require non-standard grippers and are widely available. Because the objects are easy to obtain and accurately modeled, the dataset can also serve as a reference for synthesizing training data for instance-level pose estimation models. Figure 4 summarizes object dimensions, pose angle distributions, occurrences, visibility, and distances within MR6D.
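Two of the statistics in Figure 4 can be sketched per annotated instance, assuming each annotation provides a camera-from-object pose and two boolean masks: the visible mask and the full, unoccluded silhouette. The visibility definition mirrors the `visib_fract` field of BOP-format datasets; whether MR6D computes it exactly this way is an assumption, and the helper names are hypothetical.

```python
import numpy as np


def camera_distance(T_cam_obj: np.ndarray) -> float:
    """Euclidean distance from the camera center to the object origin (model units)."""
    return float(np.linalg.norm(T_cam_obj[:3, 3]))


def visibility_fraction(mask_visible: np.ndarray, mask_full: np.ndarray) -> float:
    """Share of the object's full (unoccluded) silhouette that is actually visible."""
    n_full = np.count_nonzero(mask_full)
    if n_full == 0:
        return 0.0  # object projects entirely outside the image
    return np.count_nonzero(mask_visible & mask_full) / n_full


def histogram(values, bin_edges):
    """Count values per bin, e.g. for distance or visibility plots."""
    counts, _ = np.histogram(values, bins=bin_edges)
    return counts
```

Collecting these values over all instances and passing them to `histogram` yields distance and visibility distributions of the kind Figure 4 reports.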

Figure 4: Data statistics including dimensions, pose angles, occurrences, visibility, and distances.

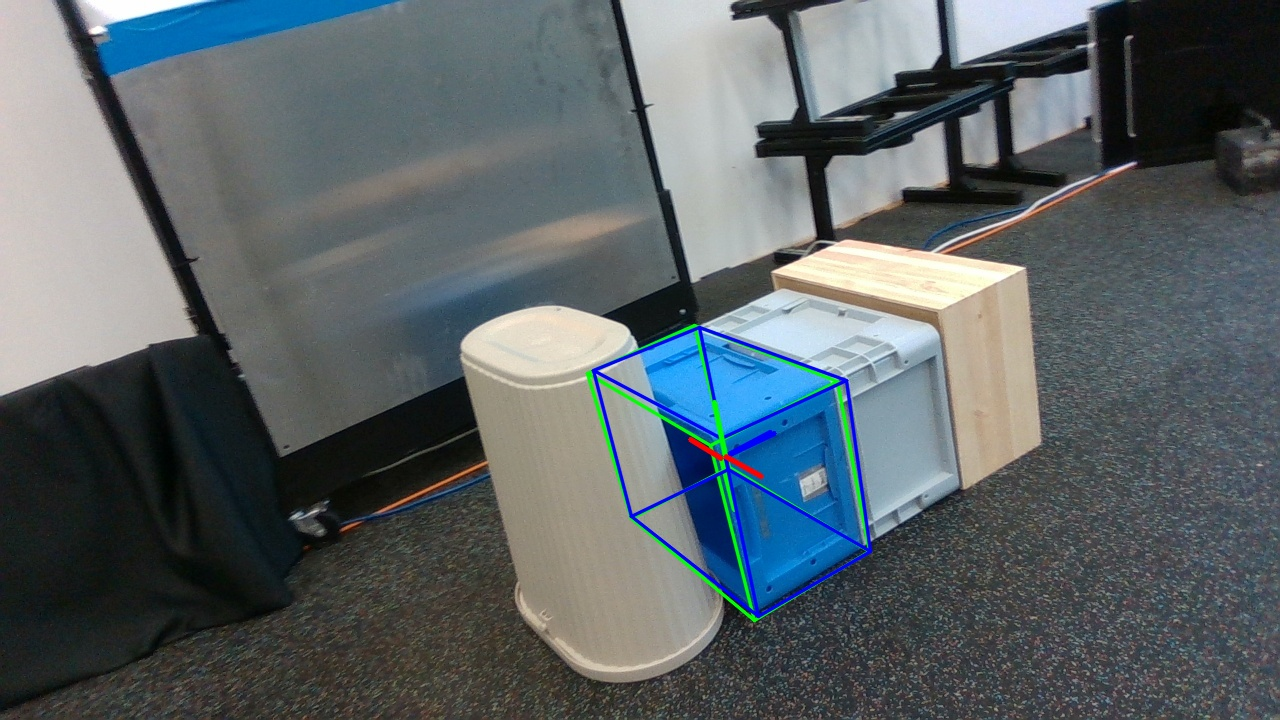

Evaluation of Pose Estimation Pipelines

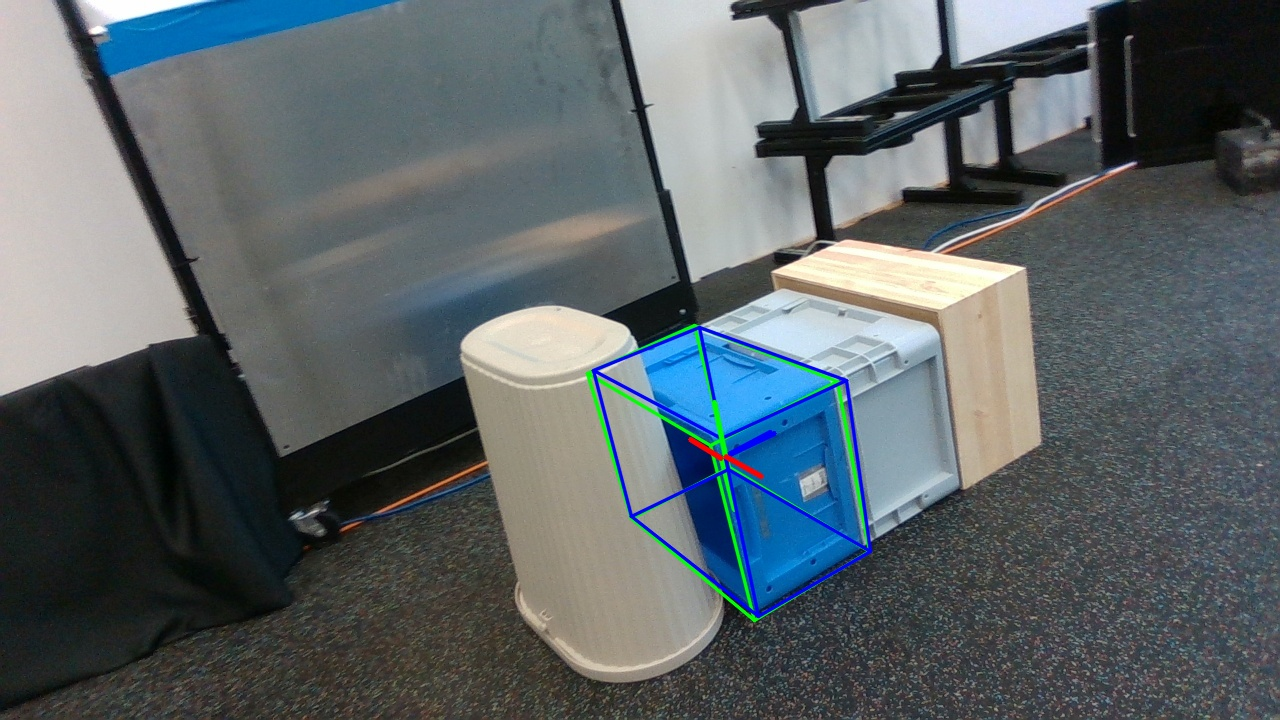

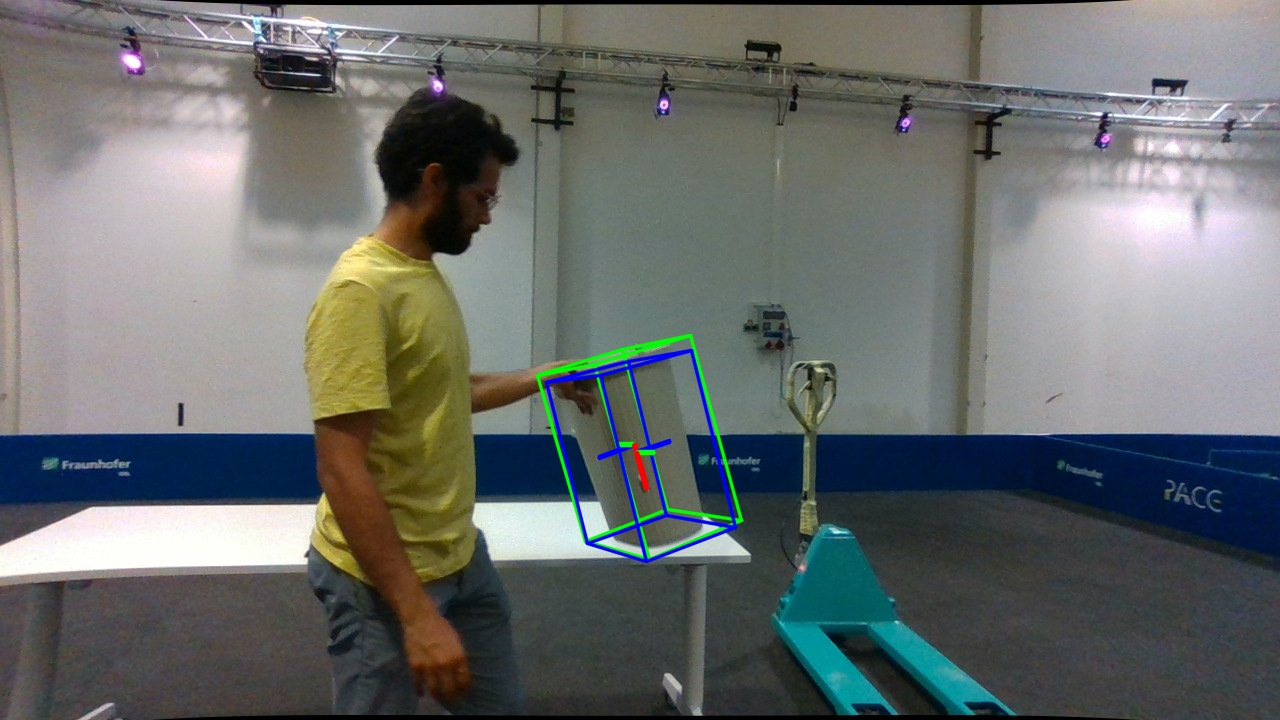

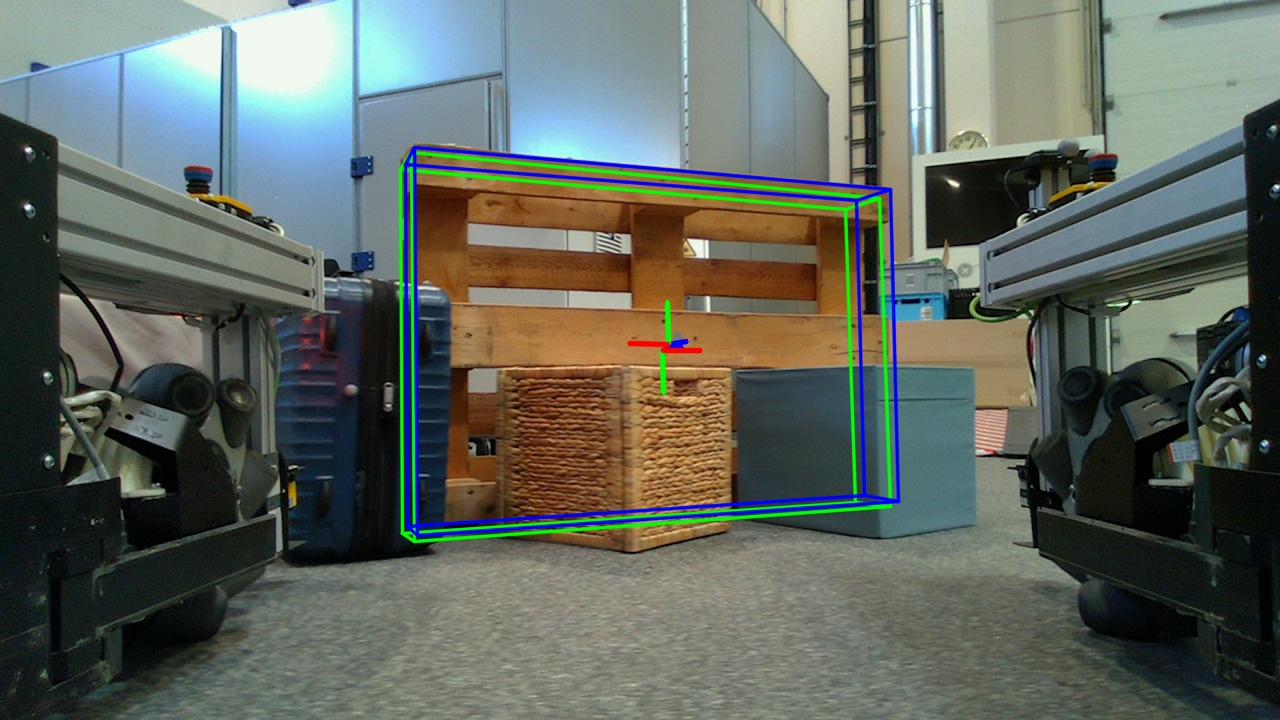

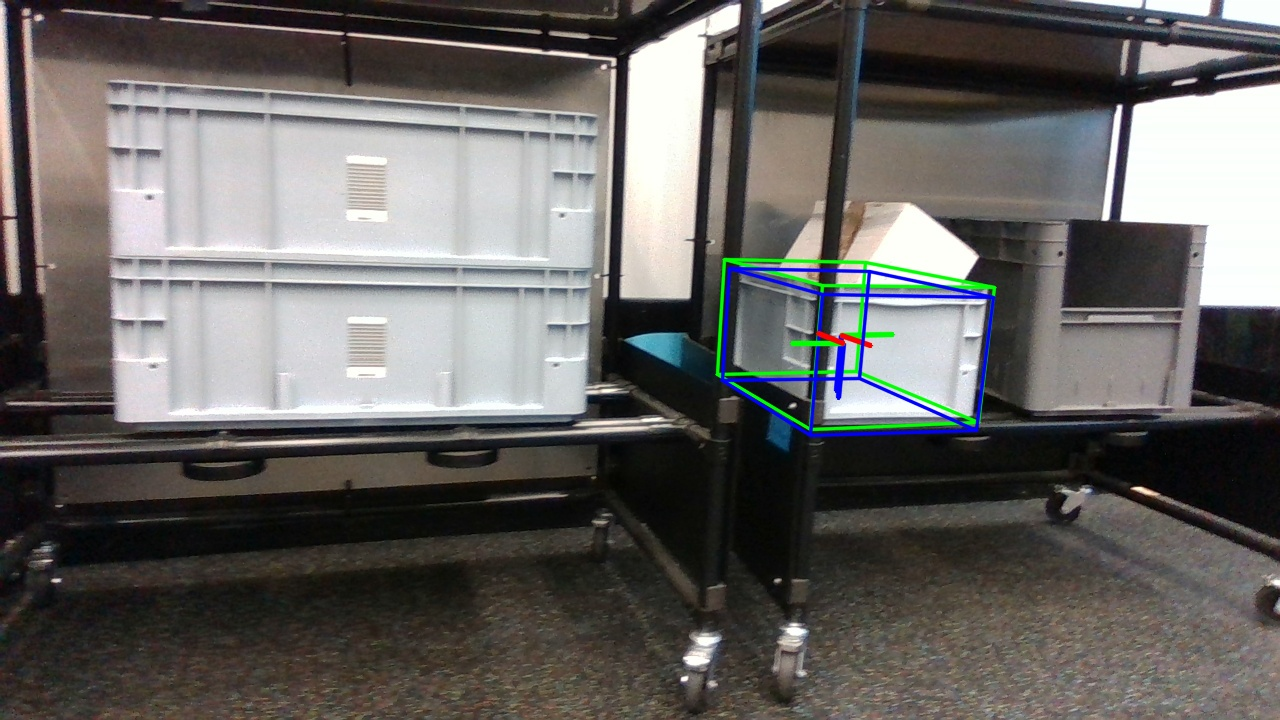

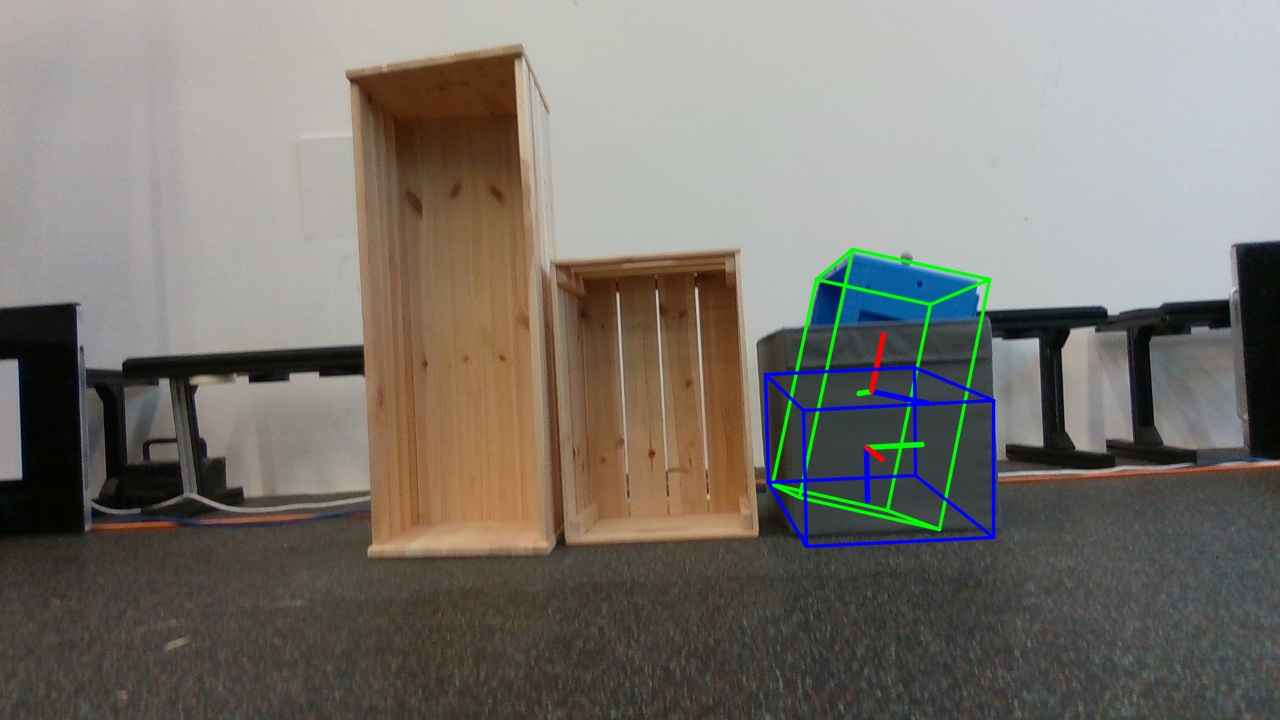

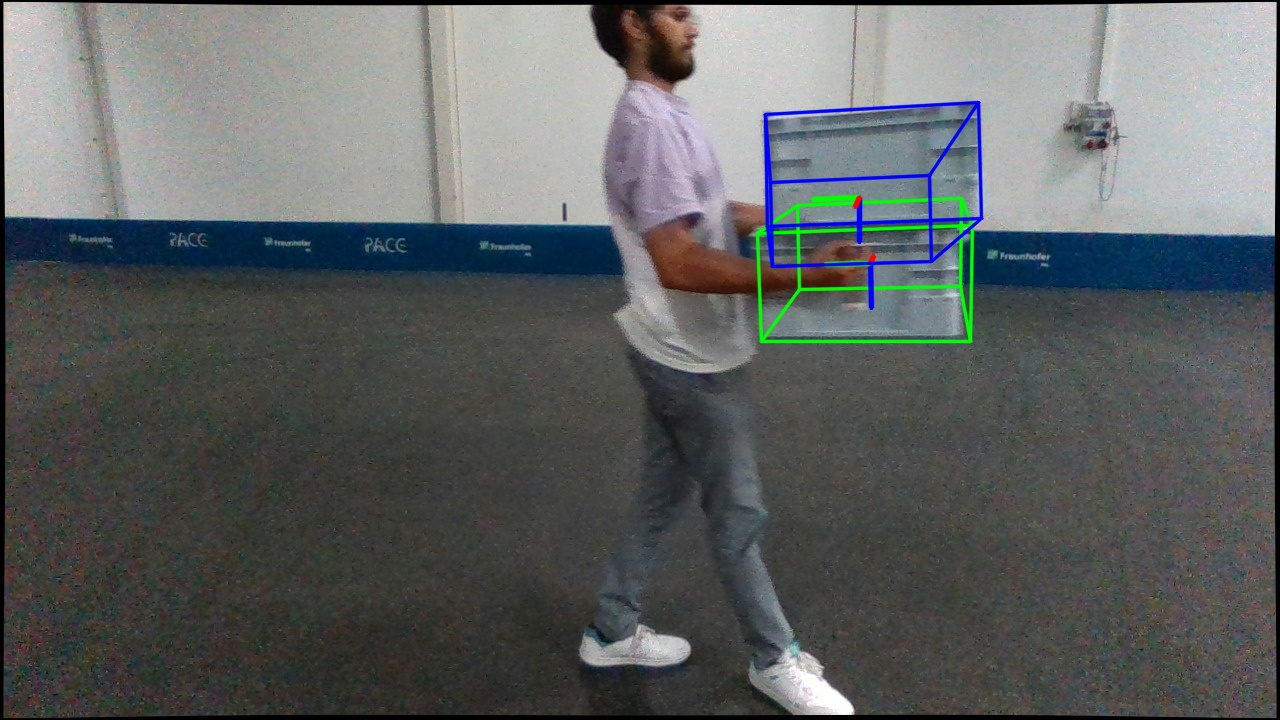

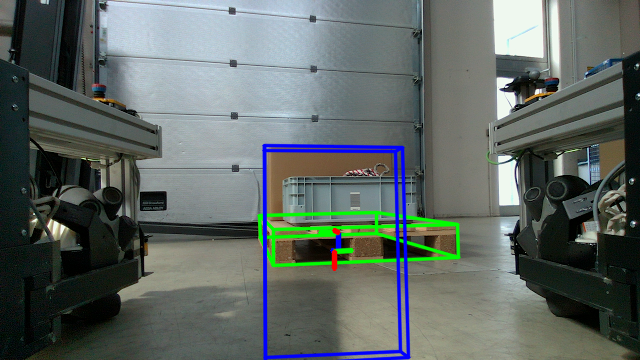

MR6D is used to evaluate pose estimation pipelines that pair FoundationPose with either ground-truth (GT) masks or masks from CTL, a segmentation method for unseen objects. The results indicate that, although 6D pose models generalize to novel objects, segmentation quality is a major bottleneck: GT-masks + FoundationPose reaches an Average Recall (AR) of 0.3462 across the test subsets, whereas CTL-generated masks lower AR to 0.1841, a relative drop of roughly 47%. Figure 5 shows pipeline predictions against ground-truth annotations, highlighting segmentation as a crucial factor.
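In the BOP protocol, overall AR is the mean of three average recalls (VSD, MSSD, MSPD), each averaged over a range of error thresholds; for MSSD the thresholds run from 5% to 50% of the object diameter in 5% steps. The sketch below implements only the MSSD error and the threshold-averaged recall, leaving out VSD (which needs rendered depth) and MSPD (a 2D projection error). It is not the MR6D evaluation code, and reported numbers should come from the official BOP toolkit.

```python
import numpy as np


def transform_points(T: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply a 4x4 rigid transform to Nx3 points."""
    return pts @ T[:3, :3].T + T[:3, 3]


def mssd(T_est, T_gt, model_pts, symmetries=None):
    """Maximum Symmetry-Aware Surface Distance between estimated and GT poses.

    For each symmetry S of the object, take the largest vertex displacement
    between the estimate and the symmetry-adjusted GT, then keep the smallest.
    """
    if symmetries is None:
        symmetries = [np.eye(4)]  # treat the object as asymmetric
    est = transform_points(T_est, model_pts)
    return min(
        float(np.max(np.linalg.norm(est - transform_points(T_gt @ S, model_pts), axis=1)))
        for S in symmetries
    )


def recall_averaged(errors, thresholds):
    """Mean over thresholds of the fraction of GT instances with error < threshold.

    Missed detections should be passed as np.inf so they count as failures.
    """
    errors = np.asarray(errors, dtype=float)
    return float(np.mean([np.mean(errors < th) for th in thresholds]))


def bop_average_recall(ar_vsd, ar_mssd, ar_mspd):
    """BOP's overall AR is the mean of the three per-metric average recalls."""
    return (ar_vsd + ar_mssd + ar_mspd) / 3.0
```

Passing `np.inf` for every ground-truth instance a pipeline fails to detect shows why segmentation matters so much here: an object without a usable mask never receives a pose, so it lowers recall at every threshold.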

Figure 5: Qualitative results for GT-masks + FoundationPose.

Figure 6: Challenging cases in evaluation with GT-masks + FoundationPose.

Conclusion

MR6D establishes a new benchmark for 6D pose estimation tailored to mobile robots in industrial environments. Its instance-level focus on larger objects, combined with diverse environmental settings, addresses crucial perception challenges. The evaluation underscores the need for better 2D segmentation and suggests that BOP metrics could be extended to account for detection distance and urgency. Future work may explore entity-level segmentation and improved depth estimation to better preserve object integrity in 6D pose estimation pipelines.