- The paper introduces a teacher-student framework that enables robust real-world policy adaptation in humanoids.

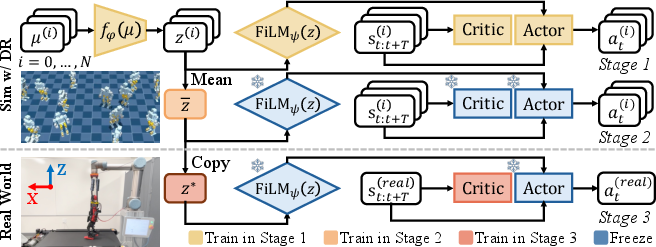

- It employs a three-stage sim-to-real pipeline with FiLM modulation and latent fine-tuning for data-efficient adaptation.

- Experimental results demonstrate improved stability and safety in treadmill walking and swing-up tasks compared to baseline methods.

Robot-Trains-Robot (RTR): A System for Automatic Real-World Policy Adaptation and Learning for Humanoids

Introduction and Motivation

The "Robot-Trains-Robot" (RTR) framework addresses the persistent challenges in real-world reinforcement learning (RL) for humanoid robots, particularly the sim-to-real gap, safety, reward design, and learning efficiency. While simulation-based RL has enabled significant progress in humanoid locomotion, direct real-world learning remains rare due to the fragility and complexity of humanoid systems. RTR introduces a teacher-student paradigm, where a robotic arm (teacher) actively supports and guides a humanoid robot (student), providing a comprehensive solution for safe, efficient, and largely autonomous real-world policy learning and adaptation.

System Architecture

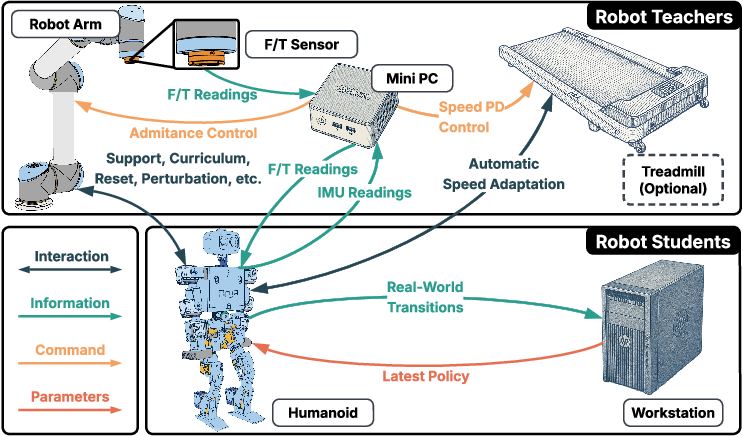

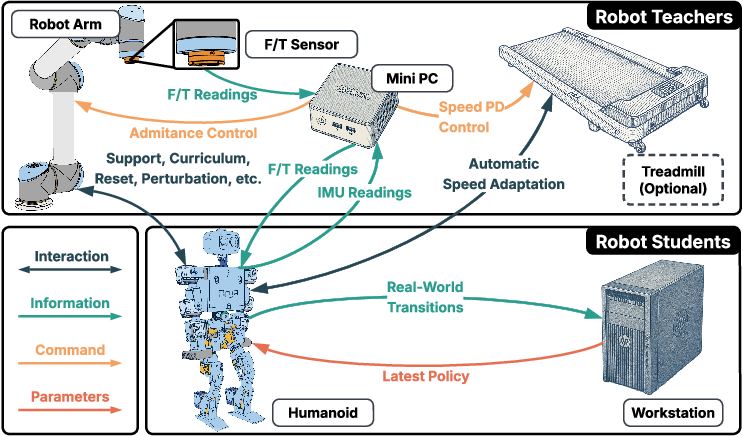

RTR's hardware system comprises two main components: the robot teacher and the robot student. The teacher consists of a 6-DoF UR5 robotic arm with a force/torque (F/T) sensor, a programmable treadmill for locomotion tasks, and a mini PC. The student consists of a 30-DoF open-source humanoid (ToddlerBot) and a workstation for policy training. The teacher provides physical support, interactive feedback, and environmental measurements, while the student executes and learns policies.

Figure 1: System Setup. The teacher (robot arm, F/T sensor, treadmill, mini PC) interacts with the student (humanoid, workstation) via physical, data, control, and parameter channels.

This architecture enables the teacher to deliver compliance control, curriculum scheduling, reward estimation, failure detection, and automatic resets, thereby minimizing human intervention and maximizing safe exploration.

Sim-to-Real Fine-Tuning Algorithm

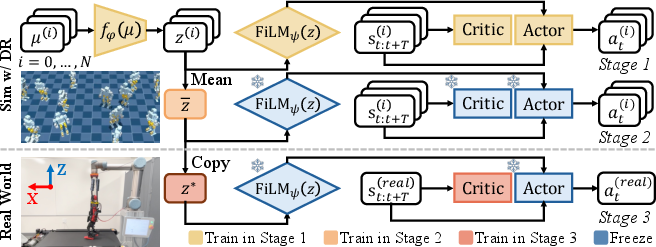

RTR's sim-to-real adaptation pipeline is a three-stage process:

- Dynamics-Conditioned Policy Training in Simulation: A policy π(s,z) is trained in N=1000 domain-randomized environments, where z is a latent vector encoding environment-specific physical parameters via an MLP encoder. The latent is injected into the policy network using FiLM layers, which modulate hidden activations through learned scaling and shifting.

- Universal Latent Optimization: Since real-world environment parameters are unknown, a universal latent z∗ is optimized across all simulation domains by freezing the policy and FiLM parameters and updating z via PPO to maximize expected return. This provides a robust initialization for real-world deployment.

- Real-World Latent Fine-Tuning: In the real world, the actor and FiLM parameters are frozen, and only the latent z is fine-tuned using PPO. The critic is retrained from scratch because the observations available in the real world differ from those in simulation. The reward is based on speed tracking, with the treadmill speed serving as an approximation of the robot's walking velocity.
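To make the FiLM mechanism concrete, the sketch below shows a tiny actor whose hidden layer is modulated by a latent z: FiLM computes a per-unit scale γ(z) and shift β(z) and applies h ↦ γ(z)·h + β(z). It is a minimal illustration using plain Python lists rather than a deep learning framework; the helper names (`affine`, `film`, `actor`), the dictionary keys, and the single-hidden-layer shape are all hypothetical and not taken from the RTR implementation. The MLP encoder that produces z from simulator parameters is not shown.

```python
import math
from typing import Dict, List, Sequence

Matrix = List[List[float]]


def affine(weights: Matrix, bias: Sequence[float], x: Sequence[float]) -> List[float]:
    """Compute W @ x + b with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def film(hidden: Sequence[float], z: Sequence[float], film_params: Dict[str, list]) -> List[float]:
    """Feature-wise linear modulation: h -> gamma(z) * h + beta(z), unit by unit."""
    gamma = affine(film_params["Wg"], film_params["bg"], z)  # per-unit scale from the latent
    beta = affine(film_params["Wb"], film_params["bb"], z)   # per-unit shift from the latent
    return [g * h + b for g, h, b in zip(gamma, hidden, beta)]


def actor(state: Sequence[float], z: Sequence[float],
          actor_params: Dict[str, list], film_params: Dict[str, list]) -> List[float]:
    """One tanh hidden layer, modulated by FiLM, followed by a linear action head.

    In stage 3, actor_params and film_params would be held fixed and only z
    would be updated, so the latent is the sole knob that adapts behavior.
    """
    hidden = [math.tanh(v) for v in affine(actor_params["W1"], actor_params["b1"], state)]
    hidden = film(hidden, z, film_params)
    return affine(actor_params["W2"], actor_params["b2"], hidden)


if __name__ == "__main__":
    # Hypothetical sizes: 2-D state, 3 hidden units, 2-D latent, 1-D action.
    actor_params = {"W1": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], "b1": [0.0] * 3,
                    "W2": [[1.0, 1.0, 1.0]], "b2": [0.0]}
    film_params = {"Wg": [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], "bg": [1.0] * 3,
                   "Wb": [[0.0, 0.0]] * 3, "bb": [0.0] * 3}
    for z in ([0.0, 0.0], [1.0, -1.0]):
        print(z, actor([0.2, 0.4], z, actor_params, film_params))
```

With the same frozen weights, the two latents produce different actions, which is what lets latent-only fine-tuning change the policy's behavior without touching the network.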

Figure 2: Sim-to-real Fine-tuning Algorithm. Three-stage process: simulation training, universal latent optimization, and real-world latent refinement.

This approach leverages the expressivity of FiLM-based latent modulation and the stability of universal latent initialization, enabling rapid and robust adaptation to real-world dynamics.
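The universal-latent idea in the second stage above can be illustrated with a toy problem: search for one latent z that maximizes the average return over many randomized domains while every network weight stays fixed. This is a sketch under loudly stated assumptions: the return function `toy_expected_return` is a made-up quadratic in which each domain prefers its own ideal latent, and central finite-difference gradient ascent stands in for the PPO update used in the paper. Under this toy objective, the universal latent is simply the mean of the per-domain ideal latents.

```python
import random
from typing import List, Sequence


def toy_expected_return(z: Sequence[float], domain_latents: Sequence[Sequence[float]]) -> float:
    """Hypothetical stand-in for the frozen policy's average return across domains.

    Each domain rewards latents close to its own (unknown) ideal latent.
    """
    total = 0.0
    for d in domain_latents:
        total -= sum((zi - di) ** 2 for zi, di in zip(z, d))
    return total / len(domain_latents)


def optimize_universal_latent(domain_latents: Sequence[Sequence[float]], dim: int,
                              steps: int = 200, lr: float = 0.1, eps: float = 1e-4) -> List[float]:
    """Gradient ascent on z only; no policy or FiLM weights exist or change here.

    Central finite differences replace the PPO gradient estimate.
    """
    z = [0.0] * dim
    for _ in range(steps):
        grad = []
        for i in range(dim):
            z_plus, z_minus = list(z), list(z)
            z_plus[i] += eps
            z_minus[i] -= eps
            diff = (toy_expected_return(z_plus, domain_latents)
                    - toy_expected_return(z_minus, domain_latents))
            grad.append(diff / (2 * eps))
        z = [zi + lr * gi for zi, gi in zip(z, grad)]
    return z


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical: 100 randomized domains, each with a 4-D ideal latent.
    domains = [[random.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(100)]
    print(optimize_universal_latent(domains, dim=4))
```

The design point carried over from the text is that only z is optimized, so the result is a single initialization that performs well on average before any real-world data is seen.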

Real-World Learning from Scratch

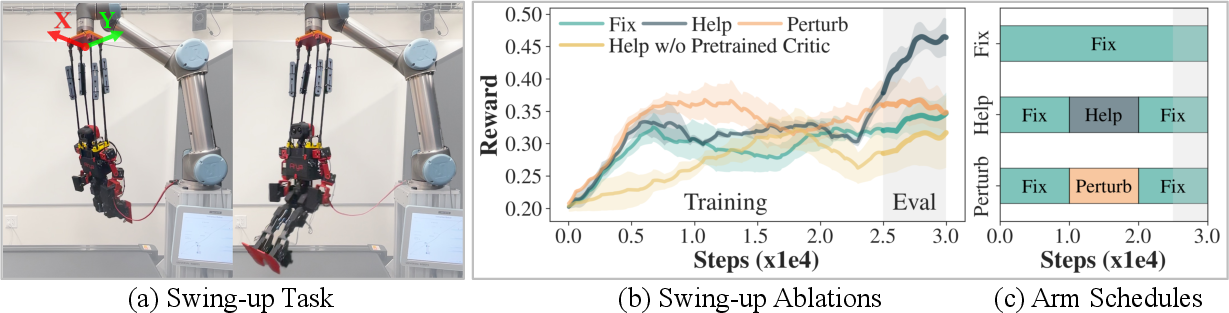

RTR also supports direct real-world RL for tasks that are difficult to simulate, such as the swing-up task involving complex cable dynamics. The training pipeline consists of:

- Initial Data Collection: Actor and critic are trained from scratch in the real world, collecting suboptimal transitions.

- Critic Pretraining: The critic is pretrained offline on the collected data.

- Joint Training: A new actor is initialized, and both actor and critic are trained jointly online.
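The critic-pretraining step above amounts to supervised regression of a value function on logged data. A minimal sketch, assuming Monte Carlo return-to-go targets and a linear critic over a single scalar feature; the paper's critic is a neural network and its exact regression targets are not given here, so `discounted_returns`, `fit_linear_critic`, the discount γ, and the scalar feature are all hypothetical.

```python
from typing import List, Sequence, Tuple


def discounted_returns(rewards: Sequence[float], gamma: float) -> List[float]:
    """Monte Carlo return-to-go G_t = r_t + gamma * G_{t+1} for one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def fit_linear_critic(features: Sequence[float], targets: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of V(s) = w * s + b on one scalar feature.

    Assumes the features are not all identical (otherwise the variance is zero).
    """
    n = len(features)
    mean_s = sum(features) / n
    mean_g = sum(targets) / n
    cov = sum((s - mean_s) * (g - mean_g) for s, g in zip(features, targets))
    var = sum((s - mean_s) ** 2 for s in features)
    w = cov / var
    return w, mean_g - w * mean_s


if __name__ == "__main__":
    # Hypothetical logged episode from the initial data-collection phase.
    states = [0.0, 0.5, 1.0, 1.5]
    rewards = [0.1, 0.2, 0.4, 0.8]
    targets = discounted_returns(rewards, gamma=0.9)
    print(fit_linear_critic(states, targets))
```

In the pipeline described above, the pretrained critic would then be paired with a freshly initialized actor for joint online training, so early policy updates start from a value estimate that already reflects the collected data.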

The reward is defined via the amplitude of the dominant periodic force (extracted using FFT) measured by the F/T sensor, incentivizing maximal swing height.
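The FFT-based reward can be sketched as follows: remove the static load from a window of F/T readings, take the discrete Fourier transform, and report the amplitude of the strongest non-constant frequency. This is a minimal sketch, assuming a single force channel (for example, one axis of the F/T sensor) sampled over a fixed window and using the amplitude directly as the reward; the window, axis, and function names are hypothetical. It uses a plain O(N²) DFT to stay dependency-free; `numpy.fft.rfft` computes the same coefficients faster.

```python
import cmath
import math
from typing import Sequence, Tuple


def dominant_force_amplitude(forces: Sequence[float], sample_rate_hz: float) -> Tuple[float, float]:
    """Return (amplitude, frequency_hz) of the strongest periodic component."""
    n = len(forces)
    if n < 3:
        raise ValueError("need at least 3 samples")
    mean = sum(forces) / n
    centered = [f - mean for f in forces]  # drop the static (DC) load, e.g. the robot's weight
    best_k, best_mag = 0, 0.0
    # Skip k = 0 (DC) and, for even n, the Nyquist bin, whose amplitude scaling differs.
    for k in range(1, (n + 1) // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    amplitude = 2.0 * best_mag / n  # single-sided amplitude of a real sinusoid
    return amplitude, best_k * sample_rate_hz / n


def swing_reward(forces: Sequence[float], sample_rate_hz: float) -> float:
    """Hypothetical reward: larger periodic force amplitude means a larger swing."""
    amplitude, _ = dominant_force_amplitude(forces, sample_rate_hz)
    return amplitude


if __name__ == "__main__":
    # Synthetic 1 s window at 100 Hz: 20 N static load plus a 3 N swing at 5 Hz.
    window = [20.0 + 3.0 * math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]
    print(dominant_force_amplitude(window, 100.0))
```

Removing the mean first keeps the constant gravitational load from dominating the spectrum, so the reward responds only to the oscillation that grows as the swing gets higher.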

Teacher Policies and Curriculum

The teacher robot provides:

- Arm Control: Admittance control for compliant support during walking; phase-aligned position control for swing-up (helping or perturbing the swing).

- Curriculum Scheduling: Gradual reduction of support (e.g., lowering arm height) to increase task difficulty.

- Reward Estimation: Proxy rewards derived from F/T sensor and treadmill data.

- Failure Detection and Automatic Resets: Monitoring of torso pitch and F/T readings to trigger resets.
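Admittance control, as listed above for walking support, maps measured contact force to a commanded arm displacement through a virtual mass-spring-damper, M·ẍ + D·ẋ + K·x = F. The sketch below integrates that model per horizontal axis with semi-implicit Euler, which matches the XY-compliant setting described in the results; the class name, the gains, the control rate, and the choice to keep the vertical axis out of the loop are hypothetical and not taken from the RTR controller.

```python
from dataclasses import dataclass


@dataclass
class AdmittanceAxis:
    """One Cartesian axis of a virtual mass-spring-damper: M*a + D*v + K*x = F."""
    mass: float       # virtual mass M [kg]
    damping: float    # virtual damping D [N*s/m]
    stiffness: float  # virtual stiffness K [N/m]
    pos: float = 0.0  # commanded offset from the nominal support point [m]
    vel: float = 0.0  # commanded velocity [m/s]

    def step(self, force_n: float, dt: float) -> float:
        """Advance the virtual dynamics by dt and return the new commanded offset."""
        acc = (force_n - self.damping * self.vel - self.stiffness * self.pos) / self.mass
        self.vel += acc * dt       # semi-implicit Euler: update velocity first
        self.pos += self.vel * dt  # then position using the new velocity
        return self.pos


if __name__ == "__main__":
    dt = 0.002  # hypothetical 500 Hz control loop
    x_axis = AdmittanceAxis(mass=1.0, damping=10.0, stiffness=50.0)
    y_axis = AdmittanceAxis(mass=1.0, damping=10.0, stiffness=50.0)
    fx, fy = 2.0, -1.0  # example horizontal F/T reading [N]
    print((x_axis.step(fx, dt), y_axis.step(fy, dt)))
```

Under a constant push the arm settles at an offset of F/K, so the humanoid can drift on the treadmill while still being caught; a stiffer K approaches the fixed-arm baseline.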

This active involvement enables safe, efficient, and robust real-world learning.
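The failure-detection rule described above reduces to a threshold check on torso pitch and contact force. A minimal sketch, assuming a reset is triggered when either the absolute torso pitch or the magnitude of the measured F/T force vector exceeds a limit; the threshold values and names are hypothetical placeholders, not values from the paper.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ResetThresholds:
    max_abs_pitch_rad: float = 0.5  # hypothetical torso-pitch limit
    max_force_n: float = 20.0       # hypothetical F/T force-magnitude limit


def should_reset(torso_pitch_rad: float, ft_force_xyz: Sequence[float],
                 thresholds: ResetThresholds = ResetThresholds()) -> bool:
    """Return True if the humanoid appears to have failed and needs a reset."""
    force_mag = math.sqrt(sum(f * f for f in ft_force_xyz))
    # A large force usually means the robot is hanging on the teacher arm.
    return (abs(torso_pitch_rad) > thresholds.max_abs_pitch_rad
            or force_mag > thresholds.max_force_n)


if __name__ == "__main__":
    print(should_reset(0.1, (0.0, 0.0, 5.0)))   # nominal walking
    print(should_reset(0.8, (0.0, 0.0, 5.0)))   # torso tipped over
```

Because the check uses only signals the teacher already measures, resets can be triggered without a human watching the episode.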

Experimental Results

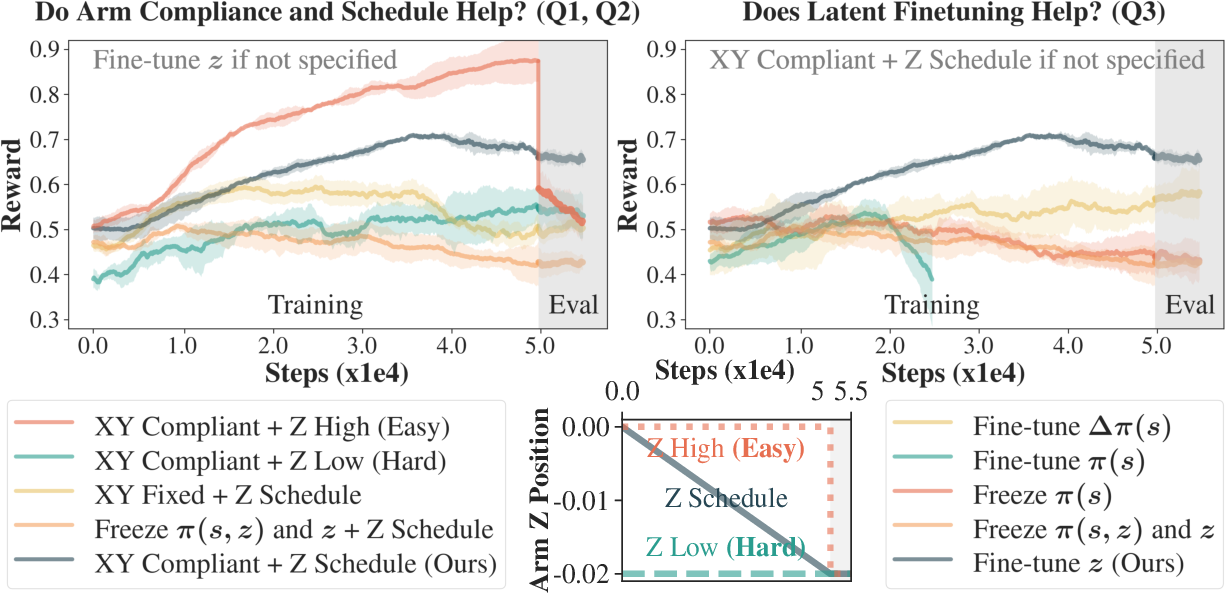

Walking Task: Sim-to-Real Adaptation

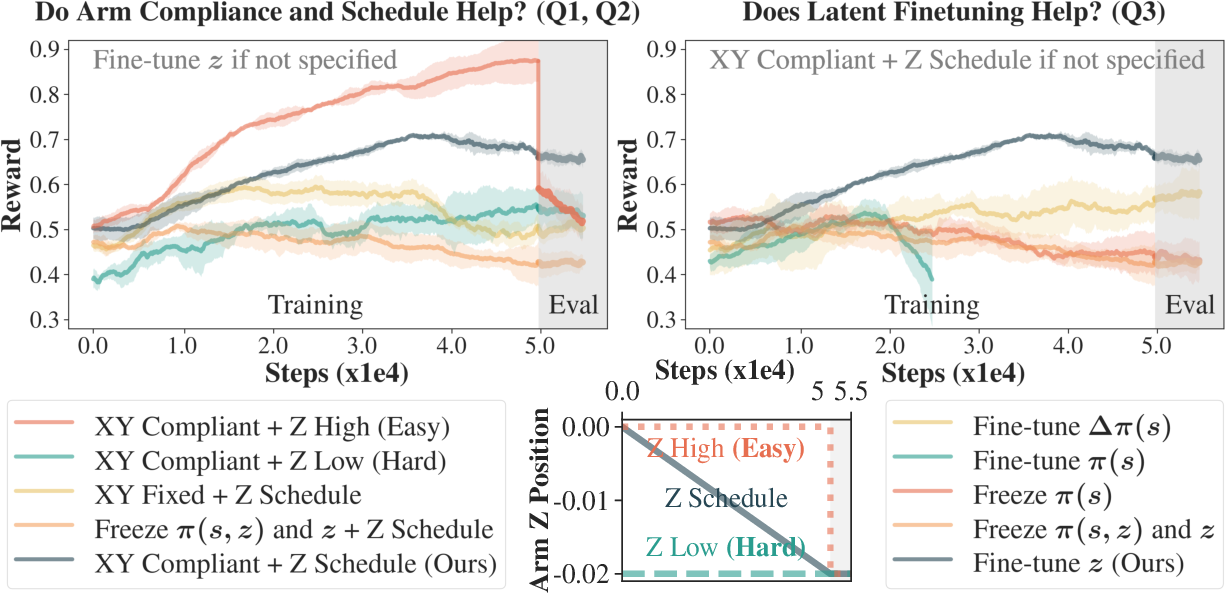

RTR was evaluated on treadmill walking, where the policy must precisely track a commanded walking speed. Key findings include:

- XY-compliant arm control significantly improves adaptation and stability compared to fixed-arm baselines.

- Gradual reduction of support yields better final performance and stability than static or abrupt schedules.

- Fine-tuning only the latent z (with actor and FiLM frozen) is more data-efficient and stable than direct or residual policy fine-tuning.
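The three support schedules compared above can be written as a single function of the training step. This is a hypothetical sketch: "gradual" linearly interpolates the arm's support height from a start value to an end value, "static" never changes it, and "abrupt" is interpreted here as jumping to the final height immediately. The paper's exact schedules, heights, and durations may differ.

```python
def support_height(step: int, total_steps: int, start_m: float, end_m: float,
                   schedule: str = "gradual") -> float:
    """Hypothetical support-height curriculum for the teacher arm [m]."""
    if schedule == "static":
        return start_m  # support is never reduced
    if schedule == "abrupt":
        return end_m    # full reduction from the first step
    if schedule == "gradual":
        frac = min(max(step / total_steps, 0.0), 1.0)  # clamp progress to [0, 1]
        return start_m + frac * (end_m - start_m)      # linear interpolation
    raise ValueError(f"unknown schedule: {schedule}")


if __name__ == "__main__":
    for s in (0, 250, 500, 1000):
        print(s, support_height(s, 1000, start_m=0.40, end_m=0.30))
```

A gradual schedule lets the policy adapt while the task is still easy and then keeps it near the edge of its ability, which is consistent with the finding that it outperforms static and abrupt schedules.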

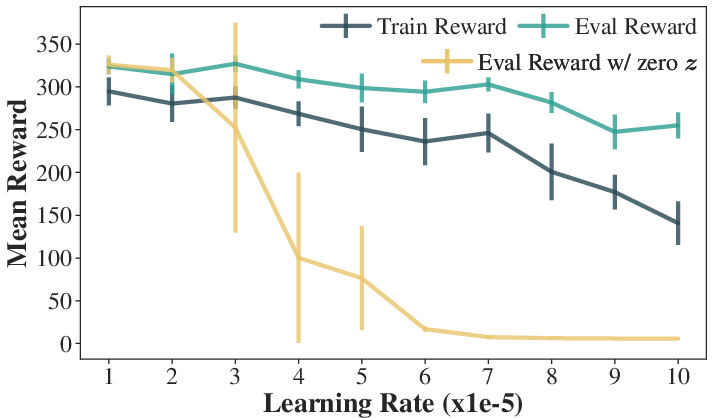

Figure 3: Walking Ablation. Linear velocity tracking rewards during training and evaluation for different arm and latent fine-tuning strategies.

RTR achieves stable walking at 0.15 m/s with lower torso pitch/roll and end-effector forces than all baselines, including RMA-style adaptation.

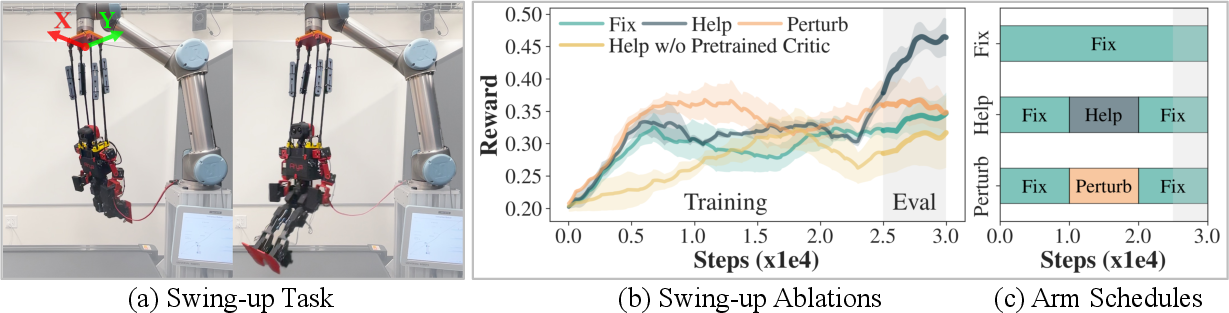

Swing-Up Task: Real-World RL from Scratch

RTR enables a humanoid to learn a swing-up behavior in under 15 minutes of real-world interaction. Ablations show:

- Both helping and perturbing arm schedules outperform a fixed-arm baseline, with helping yielding the fastest and highest reward improvement.

- Pretraining the critic with offline data accelerates early-stage learning.

Figure 4: Swing-up Ablation. (a) Swing-up setup. (b) Reward curves for helping, perturbing, and fixed-arm schedules, with/without critic pretraining. (c) Arm schedule visualization.

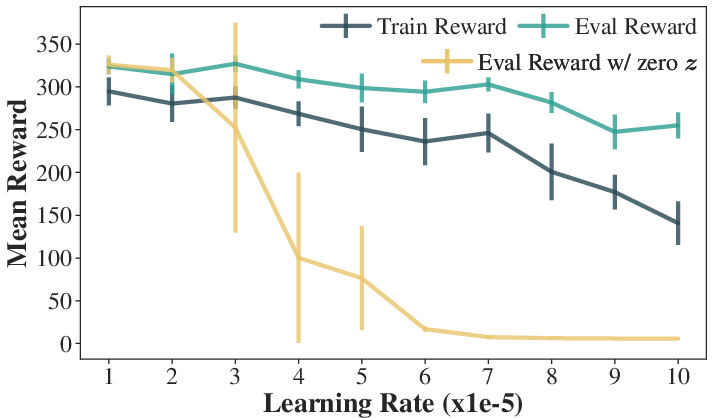

FiLM Learning Rate Ablation

The learning rate for FiLM layers is critical: too small, and the policy ignores the latent; too large, and training becomes unstable. A learning rate of 5×10⁻⁵ balances performance and latent utilization.

Figure 5: FiLM learning rate ablation. Policy performance as a function of FiLM learning rate.

Comparative Analysis

RTR's FiLM-based latent modulation outperforms RMA's concatenation approach in both simulation and real-world adaptation. Universal latent optimization provides a more stable and effective initialization for real-world fine-tuning than RMA's adaptation module, especially for high-DoF humanoids.

Implications and Future Directions

RTR demonstrates that a teacher-student robotic system can autonomously and safely enable real-world RL for complex humanoid tasks, with minimal human intervention. The modularity and generality of the approach suggest applicability to larger humanoids and more complex tasks, contingent on scaling the teacher's payload and workspace. The reliance on task-specific curricula and hardware-constrained reward design remains a limitation; future work should focus on automated curriculum generation and richer real-world sensing modalities.

Conclusion

RTR provides a comprehensive framework for real-world humanoid policy adaptation and learning, integrating hardware support, curriculum scheduling, and a robust sim-to-real RL pipeline. The system achieves efficient, stable, and largely autonomous real-world learning for both adaptation and from-scratch tasks. The approach sets a foundation for scalable, generalizable real-world RL in humanoid robotics, with future work needed on curriculum automation and advanced sensing for broader applicability.