A comprehensive taxonomy of hallucinations in Large Language Models (2508.01781v1)

Abstract: LLMs have revolutionized natural language processing, yet their propensity for hallucination, generating plausible but factually incorrect or fabricated content, remains a critical challenge. This report provides a comprehensive taxonomy of LLM hallucinations, beginning with a formal definition and a theoretical framework that posits its inherent inevitability in computable LLMs, irrespective of architecture or training. It explores core distinctions, differentiating between intrinsic (contradicting input context) and extrinsic (inconsistent with training data or reality), as well as factuality (absolute correctness) and faithfulness (adherence to input). The report then details specific manifestations, including factual errors, contextual and logical inconsistencies, temporal disorientation, ethical violations, and task-specific hallucinations across domains like code generation and multimodal applications. It analyzes the underlying causes, categorizing them into data-related issues, model-related factors, and prompt-related influences. Furthermore, the report examines cognitive and human factors influencing hallucination perception, surveys evaluation benchmarks and metrics for detection, and outlines architectural and systemic mitigation strategies. Finally, it introduces web-based resources for monitoring LLM releases and performance. This report underscores the complex, multifaceted nature of LLM hallucinations and emphasizes that, given their theoretical inevitability, future efforts must focus on robust detection, mitigation, and continuous human oversight for responsible and reliable deployment in critical applications.

Summary

- The paper presents a formal argument, based on computability theory and diagonalization, that hallucinations are inevitable in any computable LLM.

- The paper proposes a dual-axis taxonomy distinguishing intrinsic/extrinsic and factuality/faithfulness hallucinations to enable standardized evaluation.

- The analysis identifies data, model, and prompt factors as causes and suggests layered mitigation strategies and human oversight for safe deployment.

A Comprehensive Taxonomy of Hallucinations in LLMs

Introduction and Motivation

The paper "A comprehensive taxonomy of hallucinations in Large Language Models" (2508.01781) presents a systematic and theoretically grounded analysis of hallucinations in LLMs, addressing both their formal inevitability and their diverse empirical manifestations. The work synthesizes formal definitions, taxonomic frameworks, empirical typologies, causal analyses, evaluation methodologies, and mitigation strategies, providing a unified reference for researchers and practitioners concerned with the reliability and safety of LLM deployments.

Formal Definition and Theoretical Inevitability

A central contribution is the formalization of hallucination as an inconsistency between a computable LLM $h$ and a computable ground-truth function $f$ within a formal world $G_f = \{(s, f(s)) \mid s \in S\}$, where $S$ is the set of input strings; $h$ hallucinates with respect to $f$ if $h(s) \neq f(s)$ for at least one $s \in S$. The paper leverages computability theory, specifically diagonalization arguments, to prove that for any computable LLM there exists a computable $f$ with respect to which the LLM hallucinates on at least one, and in fact infinitely many, inputs. This result is architecture-agnostic and holds regardless of training data, learning algorithm, or prompting strategy. The corollary is that no computable LLM can eliminate hallucination by itself; hallucination is therefore not a removable artifact but an intrinsic limitation of the LLM paradigm.
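The diagonal idea behind the result can be illustrated at toy scale. The sketch below is not the paper's proof. It assumes a finite, hypothetical list of "models" (ordinary Python string-to-string functions standing in for an enumeration of computable LLMs). It then builds a ground truth `f` that disagrees with the i-th model on the i-th input, so every listed model hallucinates under the definition $h(s) \neq f(s)$.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]


def diagonal_ground_truth(models: List[Model], inputs: List[str]) -> Model:
    """Build a ground truth f that differs from models[i] on inputs[i]."""
    if len(set(inputs)) != len(inputs) or len(inputs) < len(models):
        raise ValueError("need one distinct input per model")
    table: Dict[str, str] = {}
    for model, s in zip(models, inputs):
        # Appending a marker guarantees f(s) != model(s) on this diagonal input.
        table[s] = model(s) + "#"

    def f(s: str) -> str:
        # Inputs off the diagonal get an arbitrary fixed answer.
        return table.get(s, "")

    return f


def hallucinates(h: Model, f: Model, inputs: List[str]) -> bool:
    """h hallucinates w.r.t. f if h(s) != f(s) for some checked input s."""
    return any(h(s) != f(s) for s in inputs)


# Hypothetical stand-ins for an enumeration of computable LLMs.
models: List[Model] = [str.upper, str.lower, lambda s: s[::-1], lambda s: "42"]
inputs = ["a", "b", "c", "d"]
f = diagonal_ground_truth(models, inputs)
print([hallucinates(h, f, inputs) for h in models])  # [True, True, True, True]
```

Adding models to the list does not escape the construction, because each new model is assigned its own diagonal input. The paper's argument applies the same idea to an infinite enumeration of computable LLMs.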

This theoretical inevitability has direct implications for deployment: LLMs cannot be trusted as autonomous agents in safety-critical or high-stakes domains without external verification, guardrails, or human oversight.

Core Taxonomies: Intrinsic/Extrinsic and Factuality/Faithfulness

The paper delineates two orthogonal axes for classifying hallucinations:

- Intrinsic vs. Extrinsic: Intrinsic hallucinations contradict the provided input or context (e.g., internal logical inconsistencies or misrepresentation of source material). Extrinsic hallucinations introduce content that cannot be verified from the input and may be inconsistent with the training data or reality (e.g., fabricated entities or events).

- Factuality vs. Faithfulness: Factuality hallucinations are factually incorrect with respect to external reality or knowledge bases, whereas faithfulness hallucinations diverge from the input prompt or context, regardless of external truth.

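The two axes are complementary rather than mutually exclusive. A fabricated entity, for example, is both an extrinsic and a factuality hallucination, and real-world cases often carry labels from both axes. The lack of a unified taxonomy in the literature is identified as a barrier to standardized evaluation and mitigation.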
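One way to make the two axes usable in an annotation or evaluation pipeline is to record them as independent fields, so each example carries one label per axis. The schema below is hypothetical: the names `Origin`, `Reference`, and `HallucinationLabel` and the example texts are illustrative and do not come from the paper.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    INTRINSIC = "intrinsic"  # contradicts the provided input or context
    EXTRINSIC = "extrinsic"  # adds content the input cannot verify


class Reference(Enum):
    FACTUALITY = "factuality"      # judged against external reality or knowledge bases
    FAITHFULNESS = "faithfulness"  # judged against the prompt or context


@dataclass(frozen=True)
class HallucinationLabel:
    text: str
    origin: Origin
    reference: Reference


labels = [
    HallucinationLabel("Summary says Tuesday; the source says Monday.",
                       Origin.INTRINSIC, Reference.FAITHFULNESS),
    HallucinationLabel("Cites a journal article that does not exist.",
                       Origin.EXTRINSIC, Reference.FACTUALITY),
    HallucinationLabel("Adds a true but unrequested fact absent from the source.",
                       Origin.EXTRINSIC, Reference.FAITHFULNESS),
]

# Cross-tabulate so reports can break error counts down along both axes at once.
grid = Counter((lab.origin.value, lab.reference.value) for lab in labels)
for (origin, reference), n in sorted(grid.items()):
    print(f"{origin:9s} x {reference:12s}: {n}")
```

Keeping the axes as separate fields rather than one flat category lets the same data answer both "how often does the model contradict its input?" and "how often is it factually wrong?".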

Specific Manifestations and Task-Specific Hallucinations

The taxonomy is further refined into concrete categories, including:

- Factual errors and fabrications: Incorrect facts, invented entities, or fabricated citations.

- Contextual inconsistencies: Contradictions or unsupported additions relative to the input.

- Instruction deviation: Failure to follow explicit user instructions.

- Logical inconsistencies: Internal contradictions or reasoning errors.

- Temporal disorientation: Outdated or anachronistic information.

- Ethical violations: Harmful, defamatory, or legally incorrect outputs.

- Amalgamated hallucinations: Erroneous blending of multiple facts.

- Nonsensical responses: Irrelevant or incoherent outputs.

- Task-specific: Hallucinations in code generation, multimodal reasoning, dialogue, summarization, and QA.

The diversity of these manifestations underscores the need for domain- and task-specific detection and mitigation strategies.

Underlying Causes: Data, Model, and Prompt Factors

The paper provides a granular analysis of the root causes of hallucination:

- Data-related: Noisy, biased, or outdated training data; insufficient representation; source-reference divergence.

- Model-related: Auto-regressive generation, exposure bias, capability and belief misalignment, over-optimization, stochastic decoding, overconfidence, generalization failure, reasoning limitations, knowledge overshadowing, and extraction failures.

- Prompt-related: Adversarial attacks, confirmatory bias, and poor prompting.

Hallucination is characterized as an emergent property of the statistical, auto-regressive nature of LLMs, which are optimized to produce plausible token sequences rather than factually or logically correct ones.
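Stochastic decoding, one of the model-related causes listed above, can be made concrete with temperature scaling of the next-token distribution. The sketch below uses made-up logits for three candidate tokens; the values and tokens are hypothetical. It shows that raising the temperature moves probability mass from the top-ranked continuation onto lower-ranked, incorrect ones.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Convert logits to probabilities; temperature > 1 flattens, < 1 sharpens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: z / temperature for tok, z in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(z - m) for tok, z in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical next-token logits after "The Eiffel Tower is in ..."
logits = {"Paris": 5.0, "Lyon": 2.0, "Rome": 1.0}

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    wrong_mass = 1.0 - probs["Paris"]
    print(f"T={t}: P(wrong continuation) = {wrong_mass:.3f}")
```

Sampling never removes the wrong tokens from the distribution. It only changes how often they are drawn, which is one reason decoding settings alone cannot eliminate hallucination.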

Human Factors and Cognitive Biases

The perception and impact of hallucinations are modulated by human factors:

- User trust and interpretability: Fluency and confidence in LLM outputs can mislead users into overtrusting hallucinated content.

- Cognitive biases: Automation bias, confirmation bias, and the illusion of explanatory depth increase susceptibility to hallucinations, even when users are warned of potential errors.

- Design implications: Calibrated uncertainty displays, source-grounding indicators, justification prompts, and factuality-aware interfaces are recommended to enhance user resilience and support human-in-the-loop oversight.
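Calibrated uncertainty displays help only if the displayed confidence tracks actual accuracy. Expected calibration error (ECE) is a standard way to check this. It bins answers by confidence and averages the gap between accuracy and mean confidence in each bin, weighted by bin size. The sketch below assumes that per-answer confidence scores and correctness labels are already available. The data are invented, and the paper does not prescribe this metric.

```python
from typing import List, Sequence


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """ECE = sum over bins of (bin size / N) * |accuracy(bin) - mean confidence(bin)|."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equal-length, non-empty inputs")
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, c in enumerate(confidences):
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing.
        bins[min(int(c * n_bins), n_bins - 1)].append(i)
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        acc = sum(correct[i] for i in members) / len(members)
        conf = sum(confidences[i] for i in members) / len(members)
        ece += len(members) / n * abs(acc - conf)
    return ece


# Invented example: answers that sound confident but are often wrong.
confs = [0.95, 0.9, 0.92, 0.97, 0.6, 0.55]
right = [True, False, False, True, True, False]
print(f"ECE = {expected_calibration_error(confs, right):.3f}")  # ECE = 0.448
```

A high ECE is a signal that confidence displays would encourage the overtrust described above.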

Evaluation Benchmarks and Metrics

The paper surveys principal benchmarks and metrics for hallucination detection:

- Benchmarks: TruthfulQA, HalluLens, FActScore, Q2, QuestEval, and domain-specific datasets (e.g., MedHallu, CodeHaluEval, HALLUCINOGEN).

- Metrics: ROUGE, BLEU, BERTScore (surface/semantic similarity); FactCC, SummaC (entailment/NLI-based); KILT, RAE (knowledge-grounded); and human evaluation (correctness, faithfulness, coherence, harmfulness).

- Limitations: Lack of standardization, task dependence, insensitivity to subtle hallucinations, and limited explainability.

The need for unified, taxonomy-aware, and context-sensitive evaluation frameworks is emphasized.
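The insensitivity of surface-overlap metrics to subtle hallucinations is easy to demonstrate. The sketch below implements a simplified ROUGE-1 F1 that uses clipped unigram overlap, lowercase whitespace tokenization, and no stemming, so it is not the official scorer. It compares an invented reference against a faithful paraphrase and against a copy with one wrong year.

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap on lowercase whitespace tokens."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # per-token minimum of the two counts
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the treaty was signed in 1951 by six countries"
faithful = "six countries signed the treaty in 1951"
hallucinated = "the treaty was signed in 1961 by six countries"

print(round(rouge1_f1(reference, faithful), 3))      # 0.875
print(round(rouge1_f1(reference, hallucinated), 3))  # 0.889
```

The summary with the wrong year scores higher than the faithful paraphrase because the metric rewards word overlap, not factual agreement. This gap is what entailment-based and knowledge-grounded metrics try to close.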

Mitigation Strategies: Architectural and Systemic

Mitigation is addressed at both the model and system levels:

- Architectural: Toolformer-style augmentation (external tool/API calls), retrieval-augmented generation (RAG), fine-tuning with synthetic or adversarially filtered data.

- Systemic: Guardrails (logic validators, factual filters, rule-based fallbacks), symbolic integration, and hybrid context-aware systems.

No single technique suffices; layered, hybrid approaches tailored to application context are advocated.
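A minimal illustration of layering combines a retrieval step with a rule-based fallback guardrail: generate only when retrieved evidence sufficiently overlaps the query, and otherwise abstain. Everything here is hypothetical. That includes the toy document store, the token-overlap retriever standing in for real vector search, the `min_overlap` threshold, and the `echo_context` stub standing in for an LLM call. It is a sketch of the pattern, not the paper's system.

```python
from typing import Callable, List, Optional, Set, Tuple

FALLBACK = "I could not find supporting evidence for that."


def tokenize(text: str) -> Set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(query: str, docs: List[str]) -> Tuple[Optional[str], int]:
    """Return the document with the largest token overlap with the query, and that overlap."""
    q = tokenize(query)
    best, best_score = None, 0
    for d in docs:
        score = len(q & tokenize(d))
        if score > best_score:
            best, best_score = d, score
    return best, best_score


def answer(query: str, docs: List[str], generate: Callable[[str], str],
           min_overlap: int = 2) -> str:
    """Retrieval-augmented answer with a rule-based fallback instead of ungrounded generation."""
    doc, score = retrieve(query, docs)
    if doc is None or score < min_overlap:
        return FALLBACK  # guardrail: no sufficiently relevant evidence
    prompt = f"Answer using only this context:\n{doc}\n\nQuestion: {query}"
    return generate(prompt)


docs = [
    "The Vectara leaderboard tracks hallucination rates in summarization.",
    "LM Arena ranks models using community preference votes.",
]


def echo_context(prompt: str) -> str:
    # Stub generator that returns the retrieved context line; a real system would call an LLM.
    return prompt.splitlines()[1]


print(answer("Which leaderboard tracks hallucination rates?", docs, echo_context))
print(answer("Who won the 1998 World Cup?", docs, echo_context))
```

The retriever grounds the generator, and the fallback rule catches queries the store cannot support. Neither layer alone covers both failure modes.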

Monitoring and Benchmarking Resources

The paper highlights web-based resources for tracking LLM performance and hallucination trends:

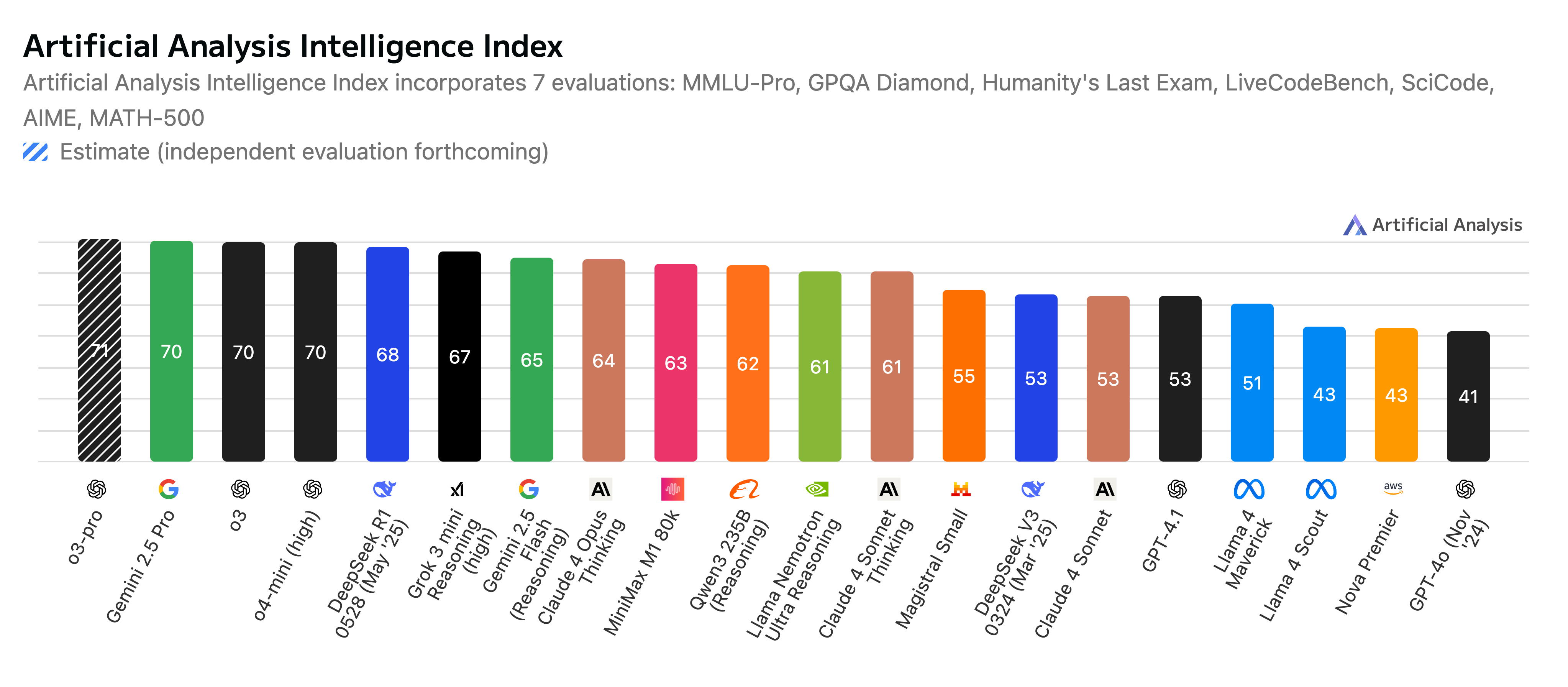

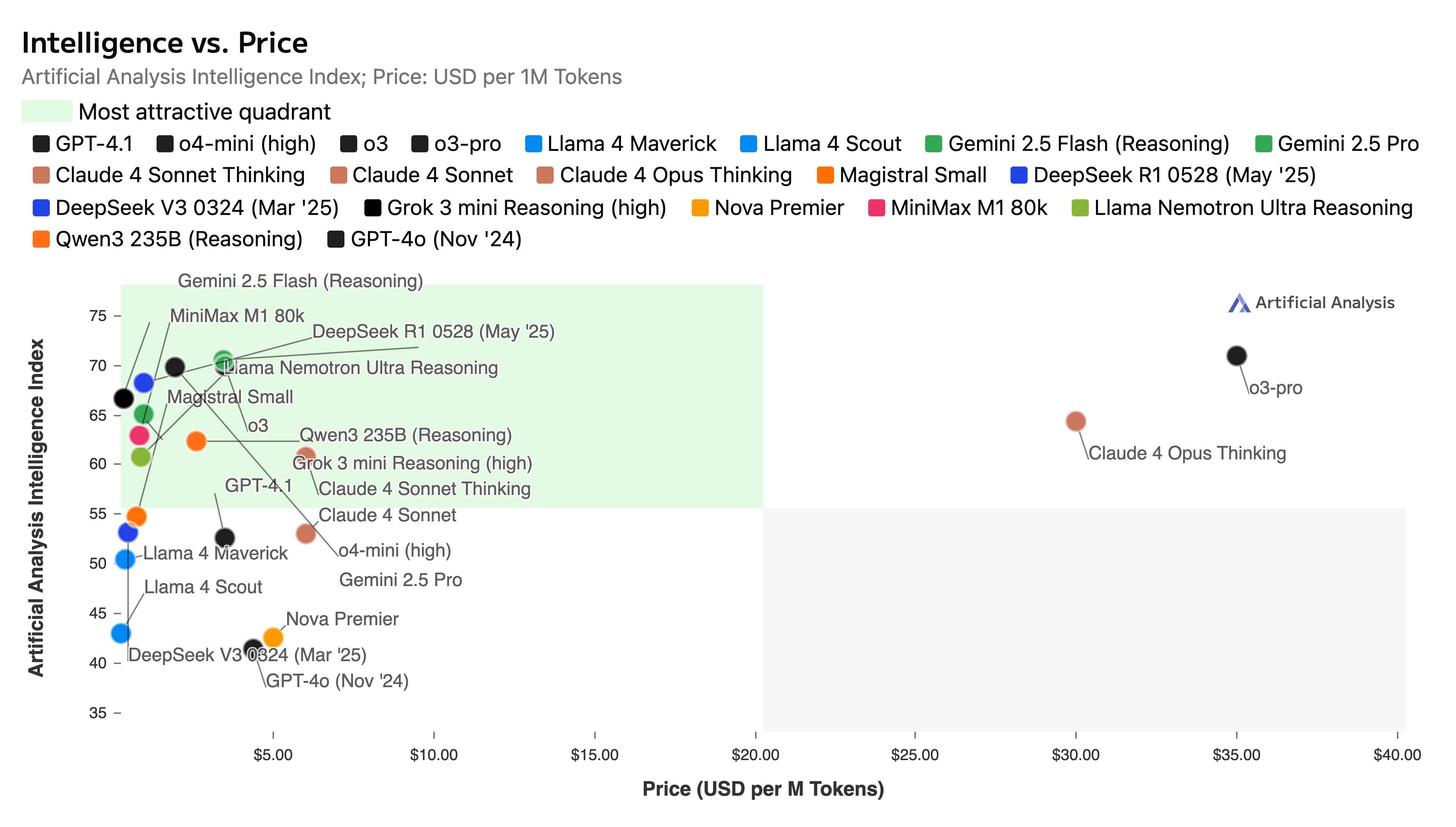

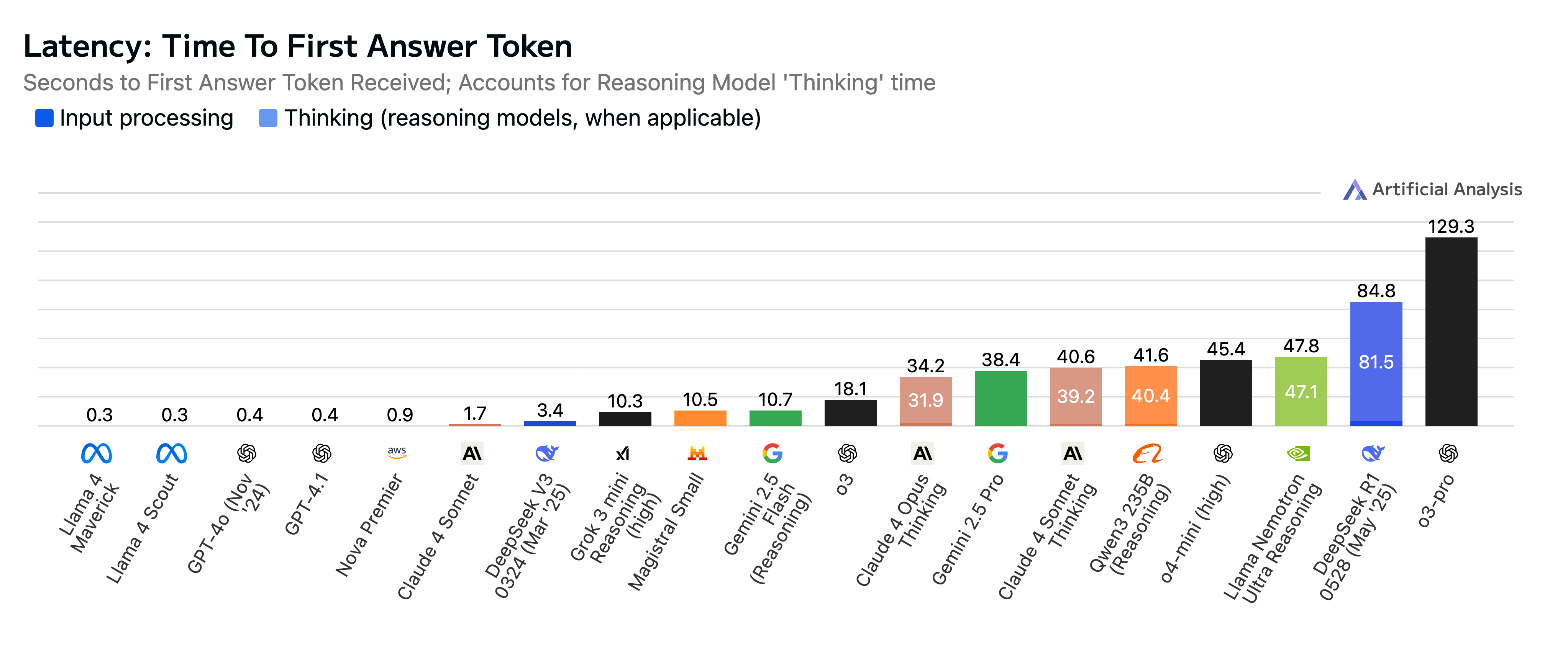

- Artificial Analysis: Intelligence, cost, latency, and multimodal benchmarks.

Figure 1: Sample visualization of the AI Index, retrieved on 28 June 2025.

Figure 2: Sample visualization of intelligence versus price, retrieved on 28 June 2025.

Figure 3: Sample visualization of latency, retrieved on 28 June 2025.

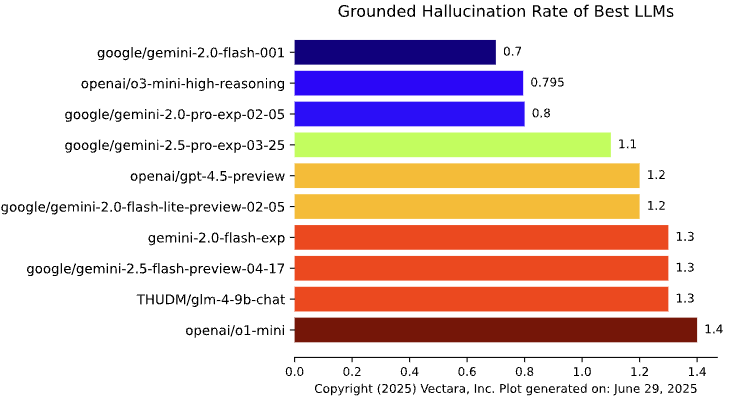

- Vectara Hallucination Leaderboard: Explicit tracking of hallucination rates in summarization.

Figure 4: Sample visualization of grounded hallucinations rate using Hughes hallucination evaluation model, retrieved on 29 June 2025.

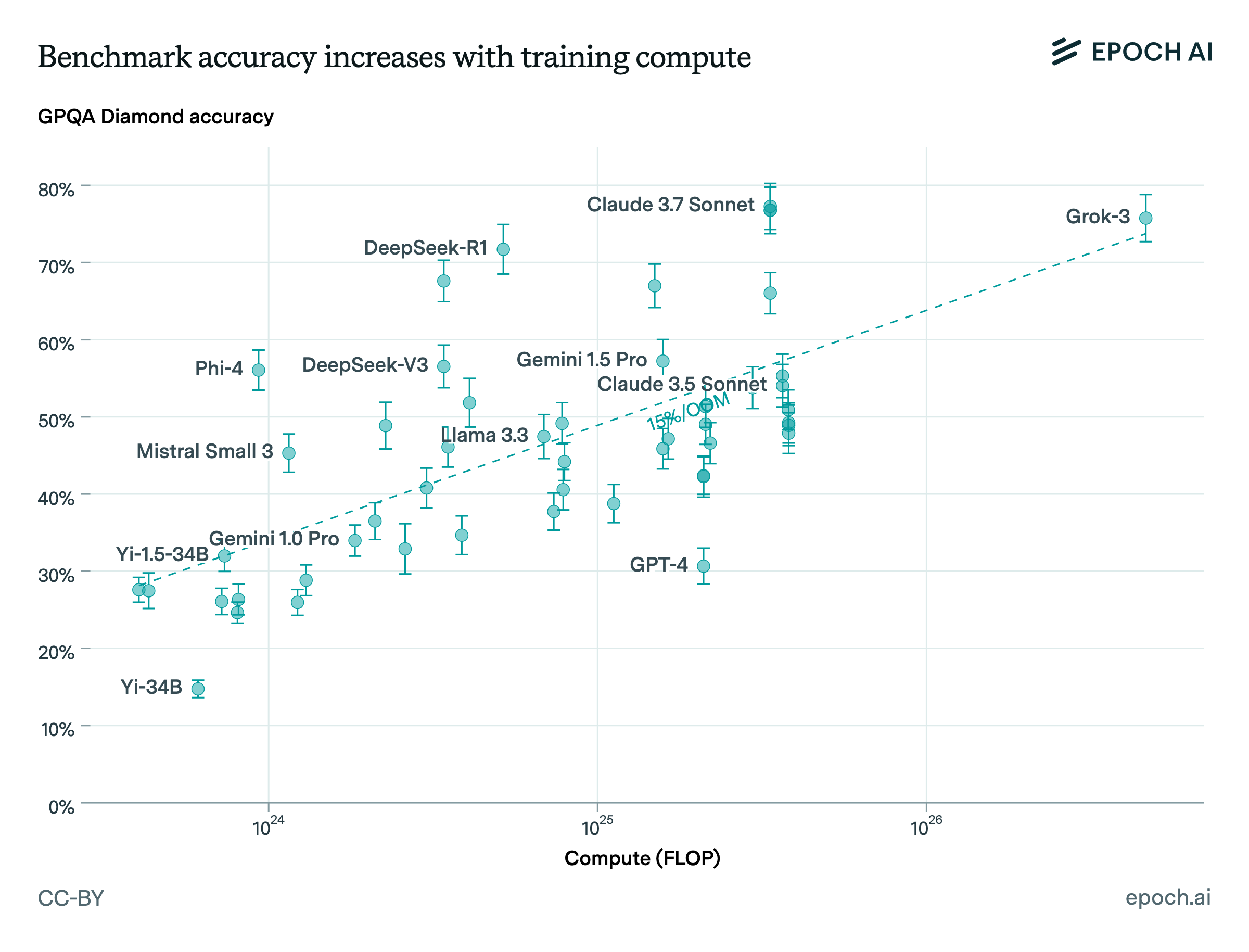

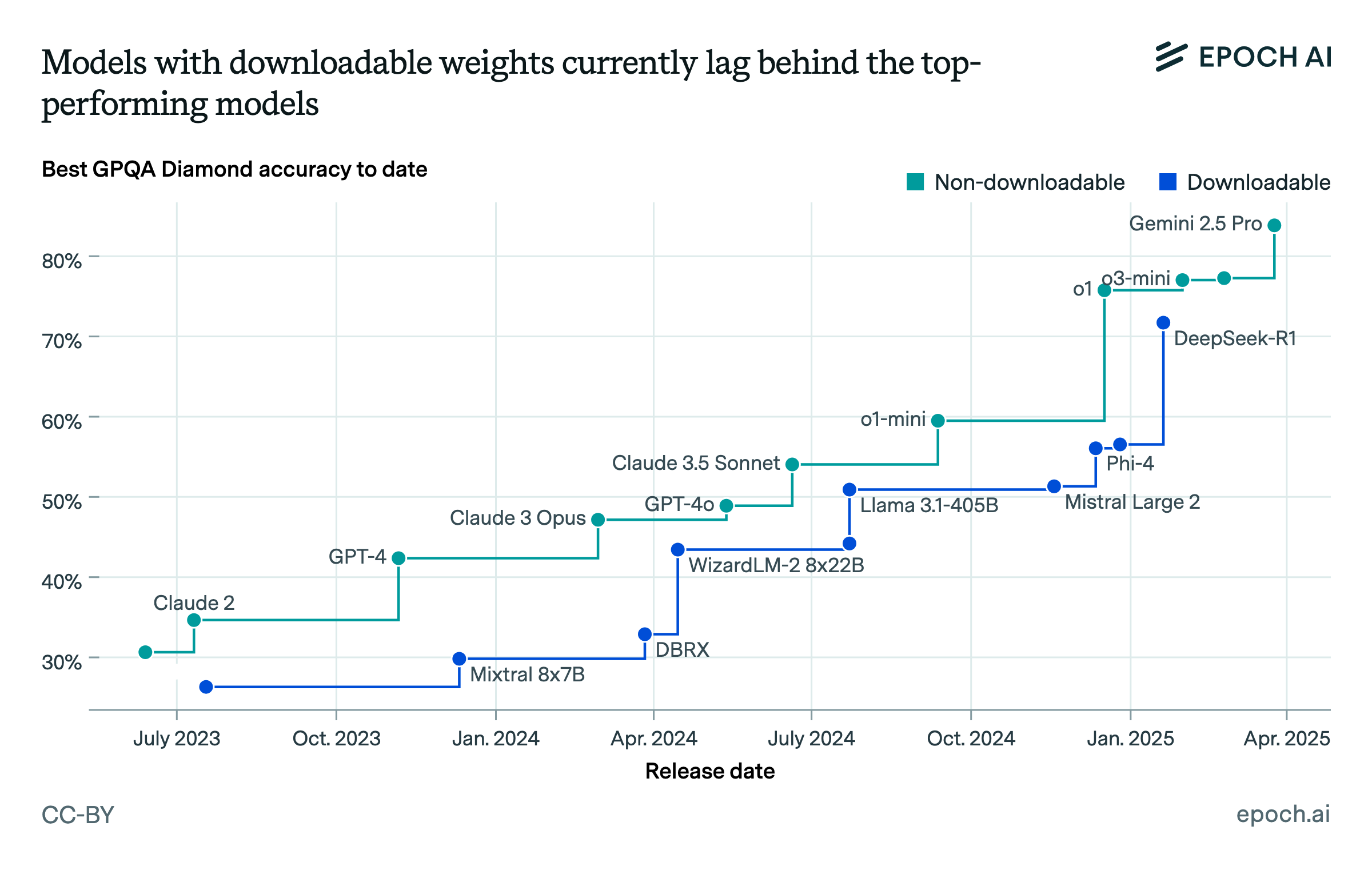

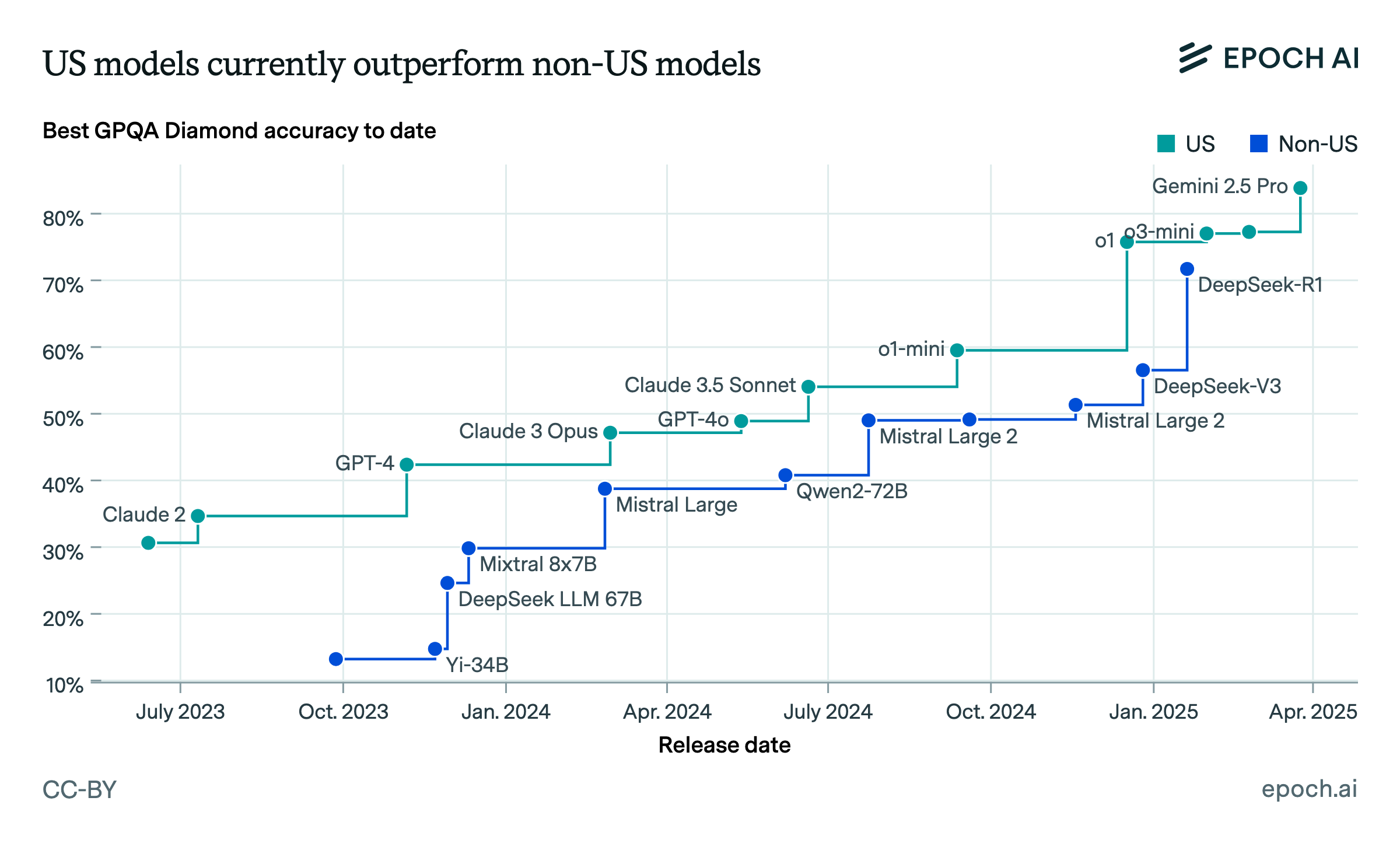

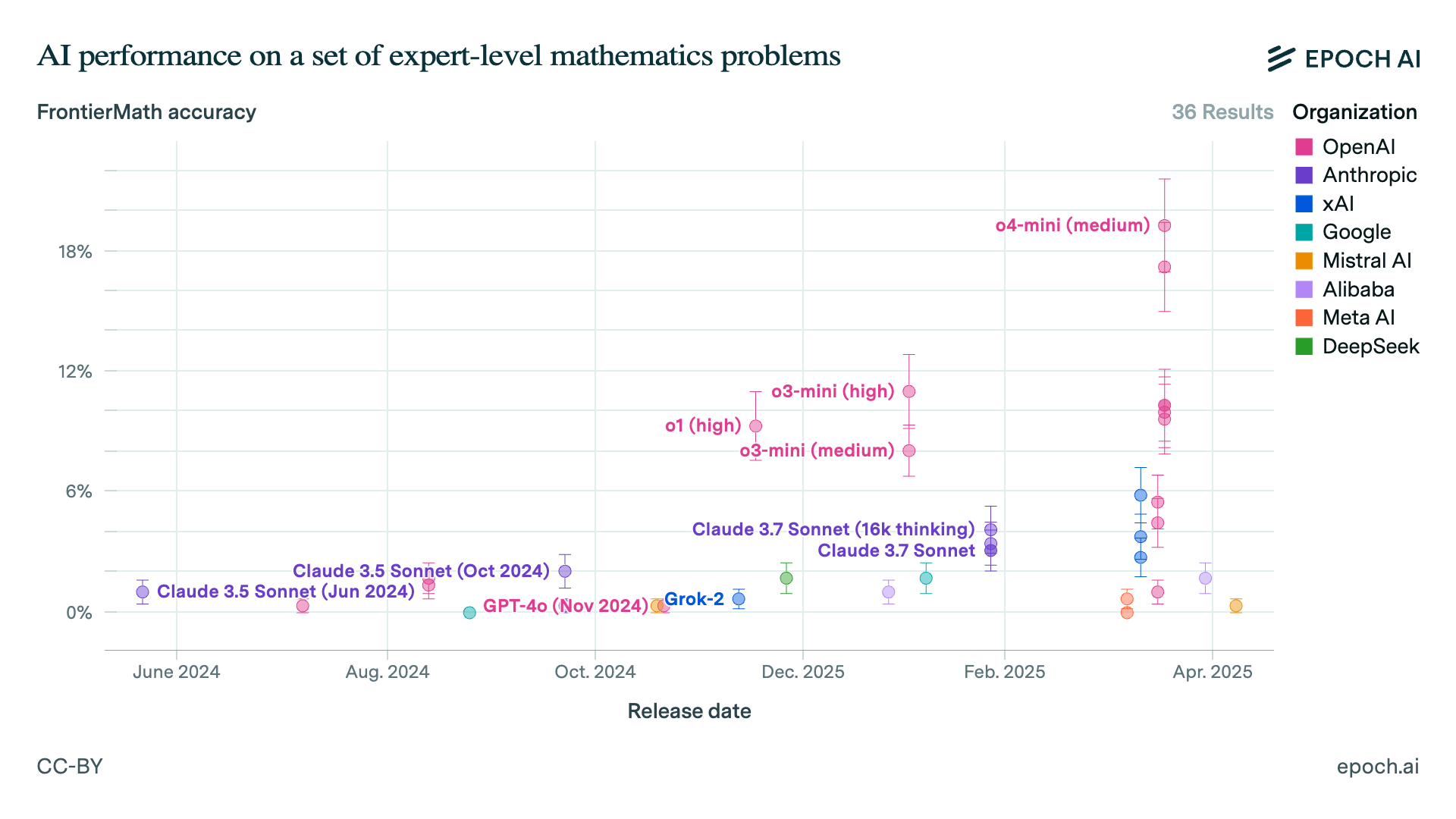

- Epoch AI Benchmarking Dashboard: Longitudinal trends in accuracy, compute, and open/proprietary model performance.

Figure 5: Sample visualization of accuracy versus training compute, retrieved on 29 June 2025.

Figure 6: Sample visualization of models with downloadable weights vs proprietary, retrieved on 29 June 2025.

Figure 7: Sample visualization of US models vs non-US, retrieved on 29 June 2025.

Figure 8: Sample visualization of models performance on expert-level mathematics problems, retrieved on 29 June 2025.

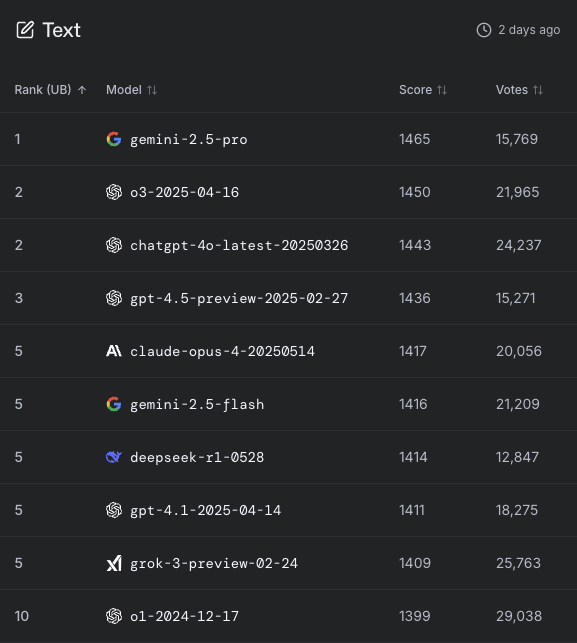

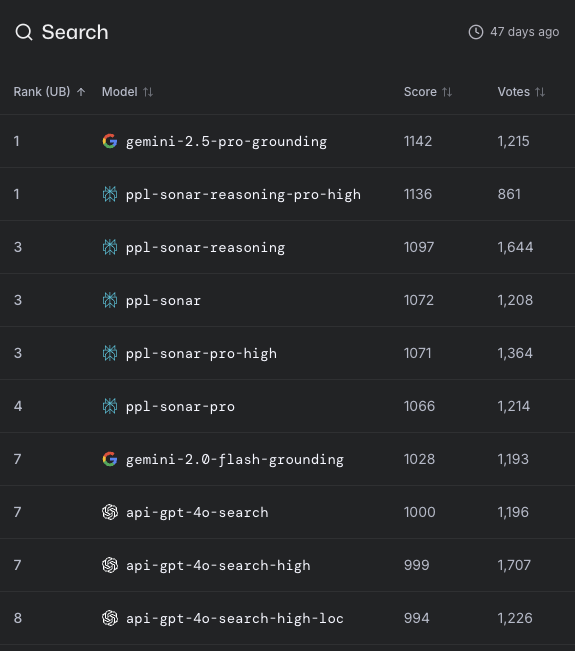

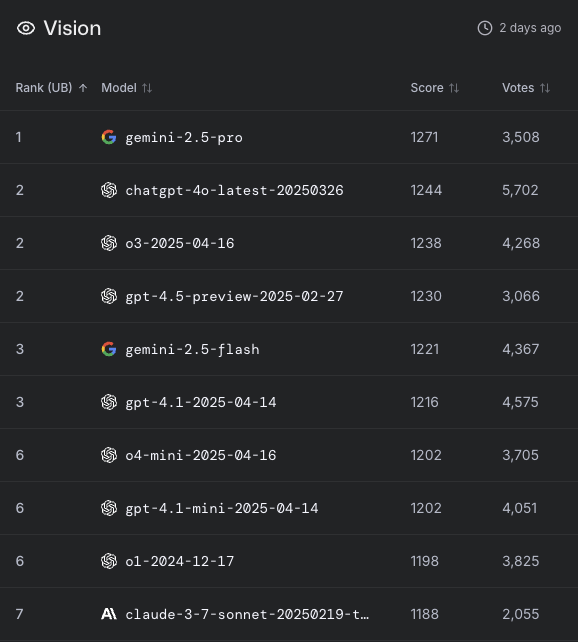

- LM Arena: Community-driven, real-world preference-based evaluation of model helpfulness and trustworthiness.

Figure 9: Sample visualization of models performance on text generation, retrieved on 9 July 2025.

Figure 10: Sample visualization of models performance on generative AI models capable of understanding and processing visual inputs, retrieved on 9 July 2025.

These resources facilitate transparent, reproducible, and up-to-date monitoring of LLM capabilities and hallucination risks.
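A leaderboard-style hallucination rate comes down to scoring each generated summary for consistency with its source and counting how many fall below a cutoff. The sketch below assumes per-summary consistency scores in [0, 1], such as a detector like HHEM produces, and a 0.5 threshold. Both the scores and the threshold are illustrative assumptions, not the leaderboard's published configuration.

```python
from typing import Sequence


def hallucination_rate(scores: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of summaries whose consistency score falls below the threshold.

    The 0.5 default threshold is an assumption for illustration.
    """
    if not scores:
        raise ValueError("no scores given")
    flagged = sum(1 for s in scores if s < threshold)
    return flagged / len(scores)


# Hypothetical per-summary consistency scores from a detector model.
scores = [0.91, 0.12, 0.77, 0.45, 0.98, 0.88, 0.63, 0.30]
print(f"hallucination rate: {hallucination_rate(scores):.1%}")  # hallucination rate: 37.5%
```

Because the rate depends on both the detector and the threshold, figures from different leaderboards are comparable only when those choices match.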

Conclusion

The inevitability and multifaceted nature of hallucinations in LLMs, as rigorously established in this work, necessitate a paradigm shift from elimination to robust detection, mitigation, and human-centered oversight. The formal proofs of inevitability, combined with the detailed empirical taxonomy and causal analysis, provide a foundation for future research on reliable LLM deployment. Progress will depend on the development of unified taxonomies, context-aware evaluation frameworks, hybrid mitigation architectures, and user-centric interface designs. In high-stakes domains, continuous human-in-the-loop validation and external safeguards are essential. The paper's synthesis of theoretical, empirical, and practical perspectives offers a comprehensive roadmap for advancing the safety and trustworthiness of LLMs in real-world applications.
