Introduction

Hallucination in LLMs is a recognized problem that occurs when these models generate text containing inaccurate or unfounded information. This presents substantial challenges in applications like summarizing medical records or providing financial advice, where accuracy is vital. The survey explored in this discussion tackles over thirty-two different techniques developed to mitigate hallucinations in LLMs.

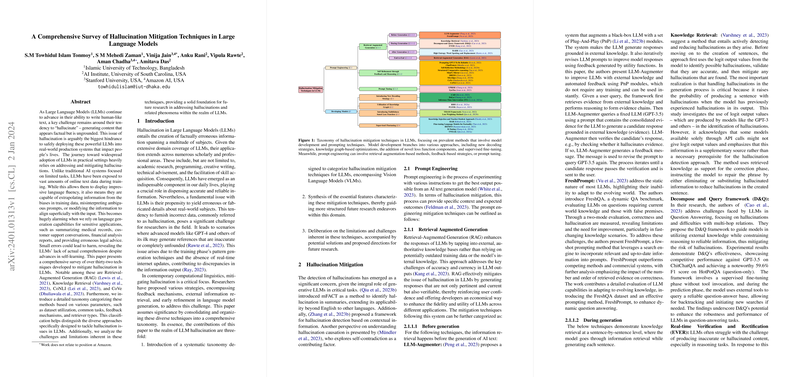

Hallucination Mitigation Techniques

The survey categorizes these techniques into several groups. Prompting methods focus on optimizing instructions to generate more accurate responses. For instance, Retrieval-Augmented Generation (RAG) incorporates external knowledge to update and enrich model responses. Techniques that unfold through self-refinement leverage feedback to improve subsequent outputs, such as the Self-Reflection Methodology that iteratively refines medical QA responses.

Furthermore, studies have also proposed novel model architectures specifically designed to tackle hallucinations, including decoding strategies like Context-Aware Decoding (CAD), which emphasizes context-relevant information, and the utilization of Knowledge Graphs (KGs) that enable models to ground responses in verified information.

Supervised Fine-Tuning

Supervised fine-tuning refines the model on task-specific data, which can significantly improve the relevance and reliability of text produced by LLMs. For example, Knowledge Injection techniques infuse domain-specific knowledge, while others like Refusal-Aware Instruction Tuning (R-Tuning) teach the model when to avoid responding to certain prompts due to knowledge limitations.

Challenges and Future Directions

The survey addresses the challenges and limitations associated with current hallucination mitigation techniques. These include the varying reliability of tagged datasets and the complexity of implementing solutions that could work across different language domains and tasks. Looking forward, potential directions include hybrid models that integrate multiple mitigation approaches, unsupervised learning methods to reduce reliance on labeled data, and the development of models with inherent safety features to tackle hallucinations.

Conclusion

The thorough survey presented in this discussion offers a structured categorization of hallucination mitigation techniques, providing a basis for future research. It underscores the need for continued advancement in this area, as the reliability and accuracy of LLMs are critical for their practical application. With ongoing review and development of mitigation strategies, we move closer to the goal of creating LLMs that can consistently produce coherent and contextually relevant information, while minimizing the risk and impact of hallucination.