- The paper introduces Mechanistic Topic Models (MTMs), which use sparse autoencoders to extract interpretable feature directions for topic modeling and to steer text generation.

- The study presents three variants (mLDA, mETM, and mBERTopic) that define topics over a semantically rich feature space instead of a word vocabulary.

- Empirical results show that MTMs outperform traditional baselines in coherence metrics and enable effective control of language model outputs.

Leveraging Sparse Autoencoders for Mechanistic Topic Models

The paper introduces Mechanistic Topic Models (MTMs), a novel approach to topic modeling that uses interpretable features learned by sparse autoencoders (SAEs) instead of traditional bag-of-words representations. These models define topics over a semantically rich space, enabling the discovery of deeper conceptual themes with expressive feature descriptions and controllable text generation through topic-based steering vectors. The paper presents three instantiations of MTMs: mechanistic LDA (mLDA), mechanistic ETM (mETM), and mechanistic BERTopic (mBERTopic).

Figure 1: Sample MTM topic outputs on the PoemSum dataset, demonstrating the model's ability to capture complex semantic content through interpretable, high-level features.

Key Concepts and Implementation

The core idea behind MTMs is the linear representation hypothesis, which holds that high-level concepts in LLMs are encoded as linear directions in their internal activations. SAEs are used to extract these interpretable features from LLM activations. Under this hypothesis, an activation vector $\mathbf{a} \in \mathbb{R}^{H}$ produced by a transformer model can be decomposed as:

$\mathbf{a} = \sum_{i=1}^{W} \alpha_i w_i + b$

where $b$ is an input-independent constant vector; $\{w_1, w_2, \ldots, w_W\}$ is a set of nearly orthogonal unit vectors, each corresponding to a human-interpretable feature; each scalar $\alpha_i$ is the strength of feature $i$ in $\mathbf{a}$, with most $\alpha_i$ equal to zero for any given input (sparse activation); and the number of features $W$ is typically much larger than the dimension $H$.
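
To make the decomposition concrete, here is a minimal NumPy sketch of a ReLU sparse autoencoder. The architecture (ReLU encoder, unit-norm decoder columns playing the role of the $w_i$, a shared offset playing the role of $b$) is a common SAE design assumed here for illustration; the paper's exact SAE variant is not described in this summary, and the weights below are random and tied rather than trained. The sketch builds an activation from two known features and checks that decoding the true strengths recovers it and that the encoder ranks those two features highest.

```python
import numpy as np

# Hypothetical toy SAE: random, tied weights (NOT trained), ReLU encoder.
rng = np.random.default_rng(0)
H, W = 256, 1024  # activation dimension H, dictionary size W >> H

# Decoder columns are the feature directions w_i, scaled to unit length.
W_dec = rng.normal(size=(H, W))
W_dec /= np.linalg.norm(W_dec, axis=0, keepdims=True)
b = rng.normal(scale=0.1, size=H)  # input-independent offset b
W_enc = W_dec.T.copy()             # tied encoder, for illustration only
b_enc = np.full(W, -0.5)           # negative bias suppresses weak matches


def encode(a: np.ndarray) -> np.ndarray:
    """Estimate non-negative feature strengths alpha_i for activation a."""
    return np.maximum(0.0, W_enc @ (a - b) + b_enc)


def decode(alpha: np.ndarray) -> np.ndarray:
    """Reconstruct a = sum_i alpha_i w_i + b."""
    return W_dec @ alpha + b


# Build an activation that truly contains features 3 and 10.
alpha_true = np.zeros(W)
alpha_true[3], alpha_true[10] = 2.0, 1.5
a = decode(alpha_true)

alpha = encode(a)
print(np.argsort(alpha)[-2:])  # indices of the two strongest features
print(int((alpha > 0).sum()), "of", W, "features active")
```

In a trained SAE the encoder and decoder are learned jointly with a sparsity penalty, so sparsity and interpretability come from training rather than from the random construction used here.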

The implementation involves several key steps:

- Corpus Transformation: The corpus is transformed into SAE feature counts by counting how often each feature activates strongly within each document using a thresholding approach.

$\tilde{c}_{d,i} = \sum_{j=1}^{N_{\text{tok}}} \mathbb{1}\{\alpha_i(\mathbf{a}_{d,j}) > q_i\}$, where $N_{\text{tok}}$ is the number of tokens in document $d$, $\alpha_i(\mathbf{a}_{d,j})$ is feature $i$'s activation on token $j$, and $q_i$ is the 80th percentile of feature $i$'s activation distribution on the original SAE training data.

- Topic-Feature Weight Learning: Topic-feature weights $\beta_k \in \mathbb{R}_{+}^{W}$ and document-topic distributions $\theta_d \in \Delta^{K-1}$, where $K$ is the number of topics, are learned using adaptations of existing topic modeling algorithms.

- Topic Description Generation: An interpretable description $t_k$ is generated for each topic from its learned features, either by directly concatenating the descriptions of its top features (TopFeatures) or by having an LLM summarize them (Summarization).

- Steering Vector Construction: Steering vectors $s_k$ are constructed for controllable generation by weighting SAE feature directions according to their importance in topic $k$:

$s_k = \frac{\sum_{i=1}^{W} \beta_{k,i} w_i}{\left\| \sum_{i=1}^{W} \beta_{k,i} w_i \right\|_2}$

where $s_k$ is a unit vector pointing in the direction most characteristic of topic $k$ in the LLM's activation space.
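
Three of the steps above (thresholded feature counts, TopFeatures descriptions, and steering vectors) can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the paper's code: per-token SAE activations are assumed to be precomputed as arrays of shape (tokens, W); the function names, the feature descriptions, and the "; " joining format are hypothetical; and the percentile is taken over whatever training activations the caller passes in. The weights $\beta_k$ are taken as given, since learning them depends on the MTM variant.

```python
import numpy as np


def percentile_thresholds(train_acts: np.ndarray, pct: float = 80.0) -> np.ndarray:
    """q_i: the pct-th percentile of each feature's activations.

    train_acts has shape (num_tokens, W). Which tokens to include (e.g. whether
    zero activations count) is an assumption left to the caller.
    """
    return np.percentile(train_acts, pct, axis=0)


def feature_counts(doc_acts: list[np.ndarray], q: np.ndarray) -> np.ndarray:
    """c~[d, i]: number of tokens j in document d with alpha_i(a_{d,j}) > q_i."""
    return np.stack([(acts > q).sum(axis=0) for acts in doc_acts])


def top_features_description(beta_k, feature_descriptions, n=3):
    """TopFeatures-style t_k: join descriptions of the n highest-weighted features."""
    top = np.argsort(beta_k)[::-1][:n]
    return "; ".join(feature_descriptions[i] for i in top)


def steering_vector(beta_k: np.ndarray, W_dec: np.ndarray) -> np.ndarray:
    """s_k = sum_i beta_{k,i} w_i / ||sum_i beta_{k,i} w_i||_2 (w_i = columns of W_dec)."""
    v = W_dec @ beta_k
    return v / np.linalg.norm(v)


# Toy example: W = 3 features, two documents with 2 and 1 tokens.
q = np.array([0.5, 0.5, 0.5])
doc_acts = [
    np.array([[0.9, 0.0, 0.6],
              [0.7, 0.1, 0.0]]),
    np.array([[0.0, 0.8, 0.0]]),
]
counts = feature_counts(doc_acts, q)  # [[2, 0, 1], [0, 1, 0]]

beta_k = np.array([3.0, 0.0, 4.0])
descriptions = ["rain and storms", "legal language", "grief and loss"]  # hypothetical
t_k = top_features_description(beta_k, descriptions, n=2)  # "grief and loss; rain and storms"
s_k = steering_vector(beta_k, np.eye(3))  # [0.6, 0.0, 0.8]
```

With a real SAE, `W_dec` would be the decoder matrix whose columns are the feature directions, so $s_k$ lies in the same $H$-dimensional space as the model's activations.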

MTM Variants

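The paper explores three MTM variants, each an adaptation of the existing topic model it is named after: mechanistic LDA (mLDA), mechanistic ETM (mETM), and mechanistic BERTopic (mBERTopic).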

Evaluation Methodology

The paper introduces topic judge, an LLM-based pairwise comparison evaluation framework. This framework addresses limitations of existing metrics by using pairwise comparisons to assess how well topics describe documents, enabling fair cross-vocabulary evaluation while capturing semantic nuance. The method involves performing pairwise comparisons between all model pairs, prompting an LLM judge to select which representation better captures the document. A Bradley-Terry model is then used to compute final scores.
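
The Bradley-Terry step turns the judge's pairwise verdicts into one score per model. Under Bradley-Terry, model $i$ beats model $j$ with probability $p_i / (p_i + p_j)$. Below is a minimal pure-Python sketch that fits these strengths with the classic minorization-maximization (MM) update; the fitting procedure, the function name, and the toy verdicts are assumptions for illustration, since this summary does not say how the paper fits the model.

```python
from collections import defaultdict


def bradley_terry(comparisons, n_iter=200):
    """Fit Bradley-Terry strengths from (winner, loser) pairs via MM updates.

    Assumes every model wins at least once and the comparison graph is
    connected; otherwise the maximum-likelihood strengths are not finite.
    """
    items = sorted({m for pair in comparisons for m in pair})
    wins = defaultdict(int)   # total wins per model
    games = defaultdict(int)  # number of comparisons per unordered pair
    for winner, loser in comparisons:
        wins[winner] += 1
        games[frozenset((winner, loser))] += 1

    p = {m: 1.0 for m in items}
    for _ in range(n_iter):
        new_p = {}
        for i in items:
            denom = sum(
                games[frozenset((i, j))] / (p[i] + p[j])
                for j in items
                if j != i and games[frozenset((i, j))]
            )
            new_p[i] = wins[i] / denom
        scale = len(items) / sum(new_p.values())  # fix the arbitrary overall scale
        p = {m: v * scale for m, v in new_p.items()}
    return p


# Hypothetical toy verdicts: model_a preferred over model_b in 3 of 4 comparisons.
judgments = [("model_a", "model_b")] * 3 + [("model_b", "model_a")]
scores = bradley_terry(judgments)
prob = scores["model_a"] / (scores["model_a"] + scores["model_b"])
print(scores, prob)  # strengths 1.5 and 0.5 (ratio 3:1), so prob == 0.75
```

Only ratios of strengths matter in Bradley-Terry, which is why the sketch rescales the strengths to average 1 after every update.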

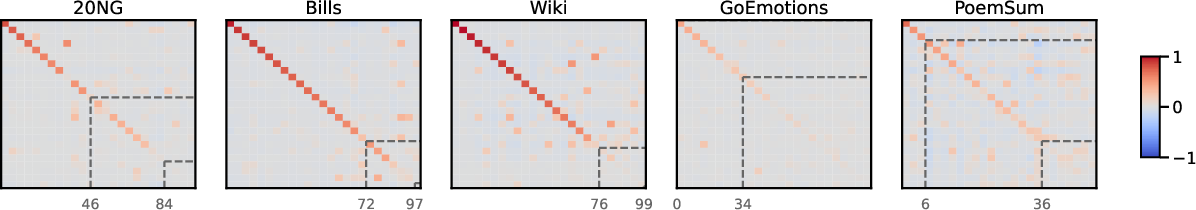

Figure 2: Heatmap representations showing the similarity between mLDA and LDA topics across different datasets, highlighting novel topics found by mLDA on certain datasets.

Empirical Results

The paper evaluates MTMs on five datasets: 20NG, Bills, Wiki, GoEmotions, and PoemSum.

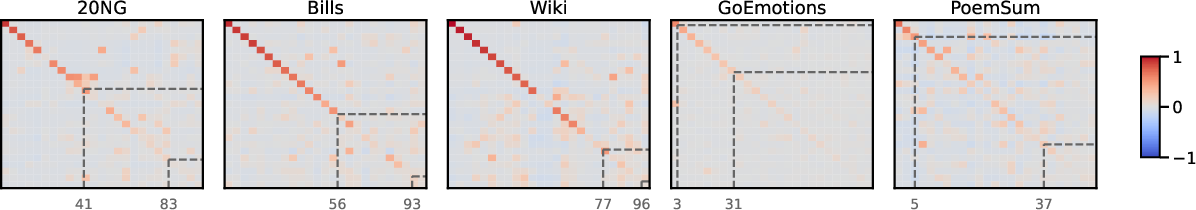

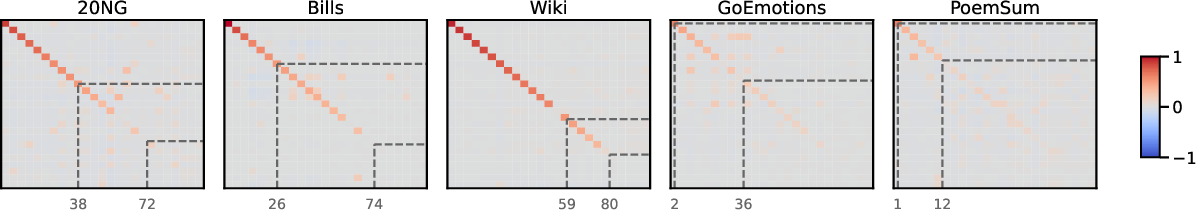

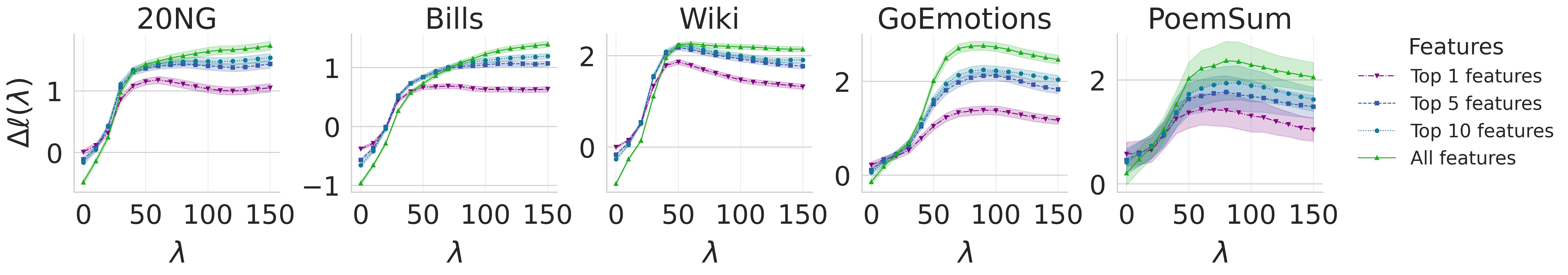

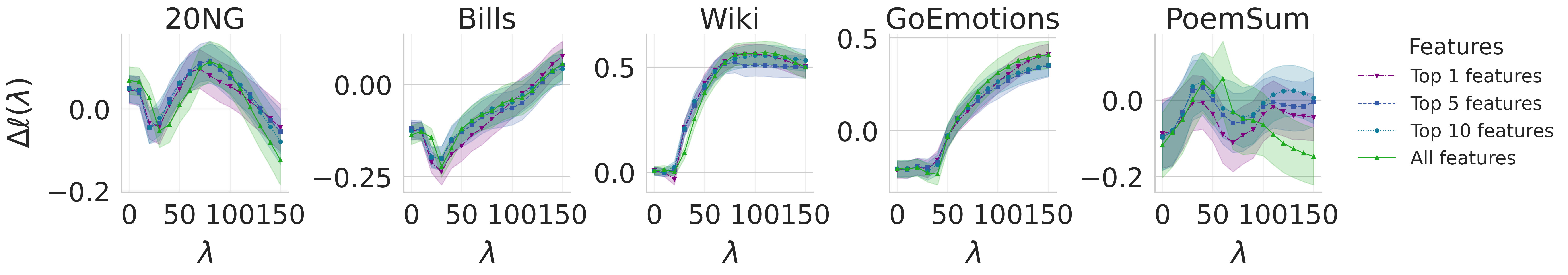

The evaluation uses standard topic modeling metrics such as coherence and topic diversity, along with the newly introduced topic judge metric. The paper also analyzes topic novelty by computing correlations between the document-topic distributions of different models; the results show that MTMs discover new topics, particularly on datasets where semantic nuance is critical. For steering, the topic relevance win rate (TWR) exceeds 85% on all datasets and reaches 99% on Bills, indicating that steering shifts text generation toward the intended topics.
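
One way to build the kind of topic-similarity matrix shown in the heatmaps is to correlate each topic of one model with each topic of another across documents, then flag topics whose best match is weak as candidate novel topics. The NumPy sketch below uses Pearson correlation for this; the choice of Pearson, the function names, and the 0.5 cutoff are assumptions for illustration rather than details reported by the paper, and the random Dirichlet proportions stand in for real model outputs.

```python
import numpy as np


def topic_correlation(theta_a: np.ndarray, theta_b: np.ndarray) -> np.ndarray:
    """Pearson correlation between topic k of model A and topic l of model B.

    theta_a: (D, K_a) and theta_b: (D, K_b) document-topic proportions for the
    same D documents. Returns a (K_a, K_b) matrix suitable for a heatmap.
    """
    k_a = theta_a.shape[1]
    full = np.corrcoef(theta_a.T, theta_b.T)  # (K_a + K_b, K_a + K_b)
    return full[:k_a, k_a:]


def novel_topics(corr: np.ndarray, cutoff: float = 0.5) -> np.ndarray:
    """Indices of model-A topics whose best correlation with model B is below cutoff."""
    return np.flatnonzero(corr.max(axis=1) < cutoff)


# Placeholder proportions for 100 documents (random, not real model output).
rng = np.random.default_rng(0)
theta_mtm = rng.dirichlet(np.ones(5), size=100)
theta_baseline = rng.dirichlet(np.ones(4), size=100)
corr = topic_correlation(theta_mtm, theta_baseline)
print(corr.shape, novel_topics(corr))
```

Correlating proportions over shared documents, rather than comparing top words, lets topics defined over SAE features be compared with topics defined over a word vocabulary.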

Figure 4: Heatmap representations comparing mETM and ETM topics, and mBERTopic and BERTopic topics, illustrating topic similarity based on document proportions.

Discussion and Implications

The paper demonstrates that MTMs offer a practical improvement to topic modeling by leveraging SAE features to capture context and semantic nuance. The results suggest that interpretability tools like SAEs can be successfully repurposed for downstream tasks, provided that appropriate filtering steps are applied and the downstream task is robust to some degree of noise and mislabeling.

Figure 5: Document log-likelihood difference for mETM and mBERTopic, showing how steering affects the likelihood of on-topic versus off-topic documents.

The ability of MTMs to enable controllable text generation opens up new avenues for research in areas such as content creation and personalized language modeling. The strong performance of MTMs on abstract datasets like PoemSum highlights their potential for uncovering hidden themes and semantic relationships in complex text collections.