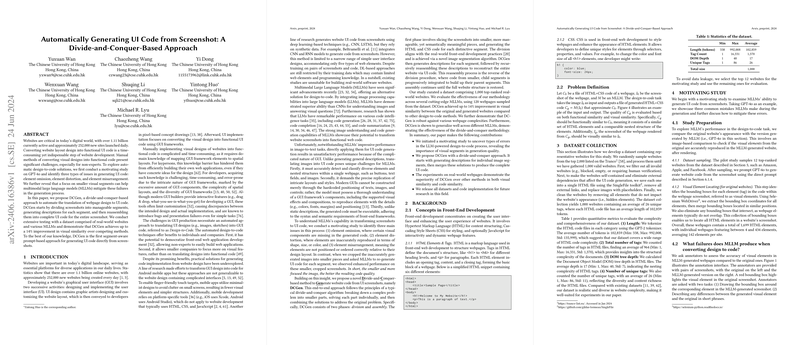

The paper "Automatically Generating UI Code from Screenshot: A Divide-and-Conquer-Based Approach" presents DCGen, a framework that automates the translation of webpage designs into UI code using a divide-and-conquer strategy. The authors argue for automating design-to-code translation because writing UI code by hand from graphical designs is increasingly complex and time-consuming.

Motivation and Challenges

The authors begin by identifying the challenges of manually converting visual designs into structured code. The process is labor-intensive, error-prone, and largely inaccessible to non-experts. To address these issues, the authors conduct a preliminary study using GPT-4o, a multimodal LLM (MLLM). The study surfaces three primary types of failure in generated UI code: element omission, element distortion, and element misarrangement. It also finds that having the model focus on smaller visual segments helps mitigate these failures.

Proposed Framework: DCGen

- Divide-and-Conquer Strategy: DCGen employs a novel strategy whereby screenshots of web pages are divided into smaller, manageable segments. This division is recursive and hierarchical, enabling the localization of visual elements that can be effectively processed by MLLMs.

- Segment Analysis and Code Generation: Each segment is analyzed separately, with the MLLM generating a description or code fragment for that segment alone. This mirrors the classic divide-and-conquer pattern: solve small subproblems independently, then combine their results.

- Assembly Process: After segment-level code snippets are generated, DCGen assembles these snippets into a coherent codebase that represents the entire webpage layout.
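The three steps above can be sketched on a toy example. This is a minimal illustration under stated assumptions, not the authors' implementation: the "screenshot" is a small grid of integers (0 for background), segments are found by cutting along fully blank rows or columns, and `generate_leaf` is a hypothetical stand-in for an MLLM call. The names `Segment`, `divide`, and `conquer` are invented for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Grid = List[List[int]]  # toy "screenshot": 0 = background, nonzero = content


@dataclass
class Segment:
    top: int
    left: int
    bottom: int  # exclusive
    right: int   # exclusive
    children: List["Segment"] = field(default_factory=list)


def _content_runs(blank: List[bool]) -> List[Tuple[int, int]]:
    """Return [start, end) ranges of consecutive non-blank lines."""
    runs, start = [], None
    for i, is_blank in enumerate(blank):
        if not is_blank and start is None:
            start = i
        elif is_blank and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(blank)))
    return runs


def _split_runs(grid: Grid, seg: Segment, horizontal: bool) -> List[Tuple[int, int]]:
    rows = range(seg.top, seg.bottom)
    cols = range(seg.left, seg.right)
    if horizontal:  # cut along blank rows
        blank = [all(grid[r][c] == 0 for c in cols) for r in rows]
    else:           # cut along blank columns
        blank = [all(grid[r][c] == 0 for r in rows) for c in cols]
    return _content_runs(blank)


def divide(grid: Grid, seg: Segment, depth: int = 0, max_depth: int = 4) -> Segment:
    """Divide step: recursively split a segment along blank rows, then blank columns."""
    if depth >= max_depth:
        return seg
    for horizontal in (True, False):
        runs = _split_runs(grid, seg, horizontal)
        if len(runs) > 1:
            for start, end in runs:
                if horizontal:
                    child = Segment(seg.top + start, seg.left, seg.top + end, seg.right)
                else:
                    child = Segment(seg.top, seg.left + start, seg.bottom, seg.left + end)
                seg.children.append(divide(grid, child, depth + 1, max_depth))
            return seg
    return seg  # no separator found in either direction: this is a leaf


def conquer(seg: Segment, generate_leaf: Callable[[Segment], str]) -> str:
    """Conquer and assemble: generate code per leaf, wrap children in a parent container."""
    if not seg.children:
        return generate_leaf(seg)
    inner = "".join(conquer(child, generate_leaf) for child in seg.children)
    return f"<div>{inner}</div>"


def stub_generate(seg: Segment) -> str:
    # Hypothetical placeholder for an MLLM call on the cropped segment.
    return f"<section data-box='{seg.top},{seg.left},{seg.bottom},{seg.right}'></section>"


if __name__ == "__main__":
    page = [
        [1, 1, 1, 1, 1],  # header row
        [0, 0, 0, 0, 0],  # blank separator
        [1, 1, 0, 1, 1],  # two columns split by a blank column
        [1, 1, 0, 1, 1],
    ]
    root = divide(page, Segment(0, 0, len(page), len(page[0])))
    print(conquer(root, stub_generate))
```

In this toy page, the first cut separates the header from the body, and the second cut separates the body's two columns, so the assembled output nests three leaf fragments inside two containers. A real system would crop image regions and prompt a model per segment; the recursion and bottom-up assembly are the only parts this sketch is meant to convey.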

Empirical Evaluation

The authors validate their approach on a dataset of real-world websites, testing it with several MLLMs, including GPT-4o, Claude-3, and Gemini-1.5. DCGen achieves up to a 14% improvement in visual similarity over competing methods. These improvements are assessed with high-level metrics: CLIP scores for visual likeness and BLEU scores for code similarity. The authors also measure fine-grained properties such as text similarity, color matching, and position alignment to benchmark specific capabilities in handling UI elements.
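To make the code-similarity metric concrete, here is a simplified sentence-level BLEU over token lists: clipped n-gram precision, a geometric mean, and a brevity penalty. It is a sketch only. Whitespace tokenization, a single reference, and the absence of smoothing are assumptions, and the summary does not say which BLEU variant or tokenizer the authors use.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    """Unsmoothed single-reference BLEU; returns 0.0 if any n-gram order has no overlap."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages candidates shorter than the reference.
    if len(candidate) >= len(reference):
        bp = 1.0
    else:
        bp = math.exp(1 - len(reference) / len(candidate))
    return bp * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "<div> <p> hi </p> </div>".split()
    generated = "<div> <p> hello </p> </div>".split()
    print(bleu(generated, reference, max_n=2))
```

Because the sketch is unsmoothed, a single changed token in a short snippet drives the default 4-gram score to zero, which is why the usage example restricts itself to bigrams.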

Generalization and Model Adaptation

DCGen was also tested for generalizability with other models. The framework improved the performance of these models in generating UI code from images, indicating that the divide-and-conquer methodology adapts across different MLLMs rather than depending on a single one.

Conclusion

The paper concludes by highlighting the effectiveness and efficiency of DCGen in automating design-to-code translation. By reducing a complex image to a set of simpler tasks, the framework shows significant potential for improving the productivity of both novice and experienced developers. Future research directions may include extending the framework to dynamic websites and addressing context-length limitations in current MLLMs. The authors release their datasets and source code to facilitate further research in this domain.