On The Role of Pretrained Language Models in General-Purpose Text Embeddings: A Survey (2507.20783v1)

Abstract: Text embeddings have attracted growing interest due to their effectiveness across a wide range of NLP tasks, such as retrieval, classification, clustering, bitext mining, and summarization. With the emergence of pretrained language models (PLMs), general-purpose text embeddings (GPTE) have gained significant traction for their ability to produce rich, transferable representations. The general architecture of GPTE typically leverages PLMs to derive dense text representations, which are then optimized through contrastive learning on large-scale pairwise datasets. In this survey, we provide a comprehensive overview of GPTE in the era of PLMs, focusing on the roles PLMs play in driving its development. We first examine the fundamental architecture and describe the basic roles of PLMs in GPTE, i.e., embedding extraction, expressivity enhancement, training strategies, learning objectives, and data construction. Then, we describe advanced roles enabled by PLMs, such as multilingual support, multimodal integration, code understanding, and scenario-specific adaptation. Finally, we highlight potential future research directions that move beyond traditional improvement goals, including ranking integration, safety considerations, bias mitigation, structural information incorporation, and the cognitive extension of embeddings. This survey aims to serve as a valuable reference for both newcomers and established researchers seeking to understand the current state and future potential of GPTE.

Summary

- The paper systematically analyzes how pretrained language models drive GPTE through advanced architectures, pooling strategies, and contrastive learning.

- It examines multi-stage training, auxiliary objectives, and synthetic data generation to enhance semantic understanding across modalities.

- The survey discusses adaptation methods, safety and bias challenges, and future directions for robust and interpretable embeddings.

The Role of Pretrained Language Models in General-Purpose Text Embeddings

Introduction

This survey analyzes the evolution, architecture, and future directions of general-purpose text embeddings (GPTE) in the context of pretrained language models (PLMs). It dissects the foundational and advanced roles of PLMs in GPTE, covering architectural paradigms, training objectives, data curation, evaluation, and the extension of embeddings to multilingual, multimodal, and code domains. It also addresses emerging challenges such as safety, bias, and the integration of structural and reasoning capabilities.

GPTE: Concepts, Applications, and Architecture

Text embeddings are dense, fixed-size vector representations of variable-length texts, enabling efficient computation of semantic similarity, relevance, and encoding for downstream tasks such as retrieval, classification, clustering, and summarization. The survey delineates three primary application categories: semantic similarity (e.g., STS, NLI, clustering), semantic relevance (e.g., IR, QA, RAG), and semantic encoding (e.g., as features for classifiers or generative models). Hybrid applications, such as direct embedding injection into LLMs for RAG, are also discussed.
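As a minimal sketch of the semantic similarity and relevance use cases described above, the snippet below ranks documents by cosine similarity to a query. The vectors are made-up 3-dimensional toy values rather than outputs of a real embedding model, and the function names are illustrative.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rank_by_similarity(query, docs):
    """Return document indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, d) for d in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)

# Toy embeddings (assumed values, not produced by any model).
query = [1.0, 0.0, 0.0]
docs = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.0]]
ranking = rank_by_similarity(query, docs)
```

In a retrieval setting the same scoring would typically run over a precomputed index of document embeddings, so only the query needs to be encoded at request time.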

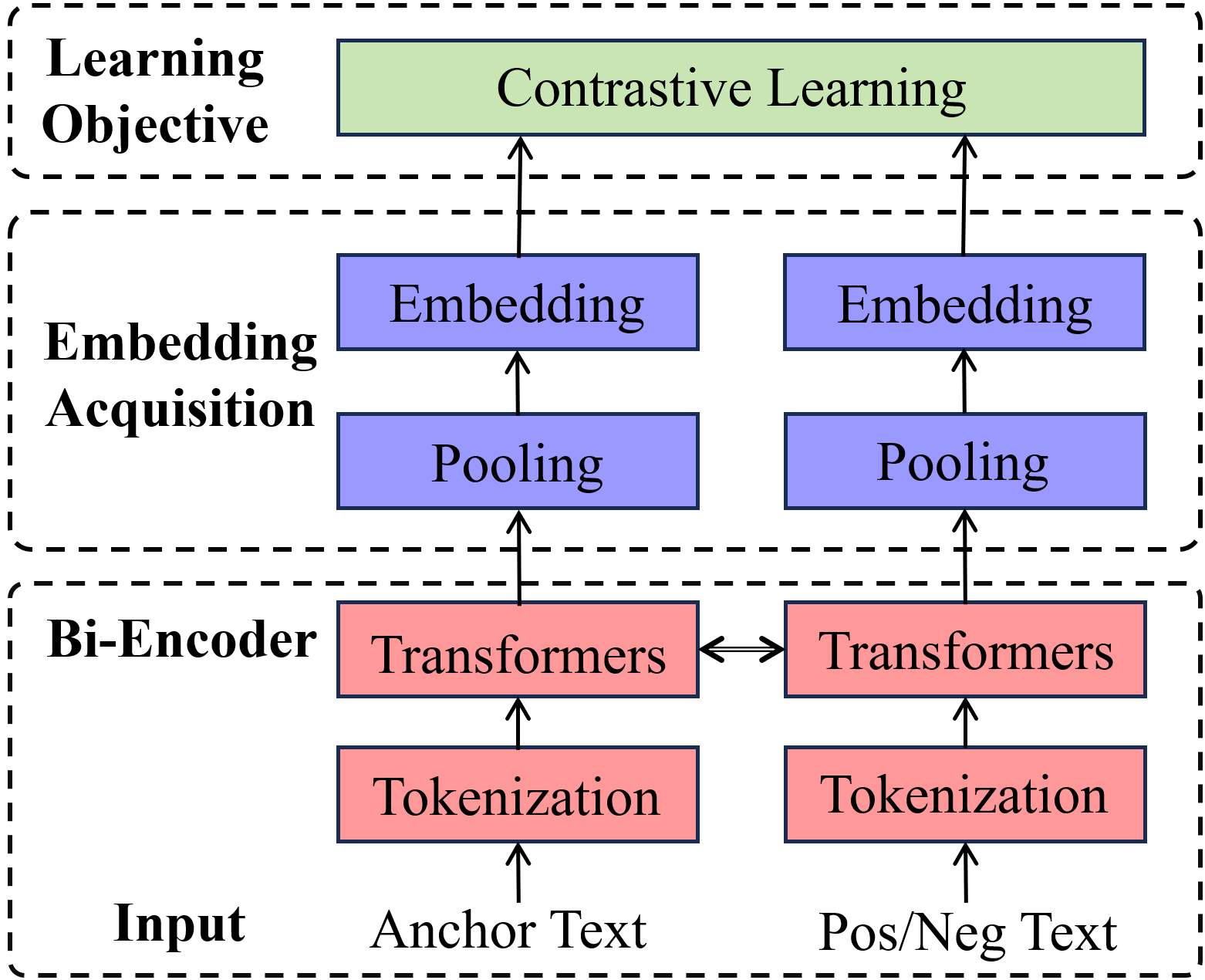

The canonical GPTE architecture is a multi-stage pipeline: a PLM backbone encodes input text, a pooling operation aggregates token-level representations, and contrastive learning (CL) on large-scale text pairs optimizes the embedding space. This architecture is illustrated in the following figure.

Figure 1: The typical architecture and training manner of GPTE models.

Contrastive learning, typically via InfoNCE loss, is the dominant paradigm, with positive and negative pairs constructed from labeled or synthetic data. The survey emphasizes the universality of this approach across tasks and the importance of large, diverse training corpora.
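The InfoNCE objective mentioned above can be sketched in a few lines. This is an illustrative pure-Python version that assumes L2-normalized embeddings, uses the other positives in the batch as negatives, and picks a temperature of 0.05 as an arbitrary example value; it is not the training code of any particular model.

```python
import math

def info_nce(queries, positives, temperature=0.05):
    """Mean InfoNCE loss with in-batch negatives.

    queries[i] and positives[i] form a positive pair; every positives[j]
    with j != i is treated as a negative for queries[i].
    Vectors are assumed to be L2-normalized, so the dot product is the cosine.
    """
    losses = []
    for i, q in enumerate(queries):
        logits = [sum(a * b for a, b in zip(q, p)) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates in the batch.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the true positive.
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)

# Toy batch: pairs that line up give a low loss, mismatched pairs a high one.
queries = [[1.0, 0.0], [0.0, 1.0]]
loss_aligned = info_nce(queries, [[1.0, 0.0], [0.0, 1.0]])
loss_swapped = info_nce(queries, [[0.0, 1.0], [1.0, 0.0]])
```

Because every other example in the batch serves as a negative, larger batches supply more negatives per query, which is one reason batch size matters for this objective.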

Fundamental Roles of PLMs in GPTE

Embedding Extraction Strategies

PLMs, whether encoder-based (BERT, RoBERTa), encoder-decoder (T5), or decoder-only (GPT, LLaMA, Qwen), require careful pooling strategies for embedding extraction. Encoder-based models often use the [CLS] token or mean pooling; decoder-only models rely on last-token pooling due to causal attention, with partial pooling as a refinement for long texts. Multi-layer aggregation and attentive pooling further enhance representational robustness, especially for long-context or complex inputs.
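The three basic pooling choices named above can be sketched as follows. The sketch assumes token-level hidden states are given as a list of vectors, that the first position holds the [CLS] token, and that padding is on the right; the function names are illustrative and not taken from any library.

```python
def cls_pool(hidden, mask):
    """Use the hidden state of the first token (assumed to be [CLS])."""
    return list(hidden[0])

def mean_pool(hidden, mask):
    """Average the hidden states of all non-padding tokens."""
    kept = [h for h, m in zip(hidden, mask) if m]
    return [sum(column) / len(kept) for column in zip(*kept)]

def last_token_pool(hidden, mask):
    """Use the hidden state of the last non-padding token.

    Under causal attention only this position has attended to the whole input.
    """
    last = max(i for i, m in enumerate(mask) if m)
    return list(hidden[last])

# Four token positions with 2-dimensional states; the last position is padding.
hidden = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [0.0, 0.0]]
mask = [1, 1, 1, 0]
cls_vec = cls_pool(hidden, mask)
mean_vec = mean_pool(hidden, mask)
last_vec = last_token_pool(hidden, mask)
```

The attention mask matters for mean and last-token pooling: without it, padding positions would leak into the embedding.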

Enhancing Expressivity

Long-context modeling is addressed via both plug-and-play augmentation (e.g., extending position embeddings, integrating RoPE) and pretraining from scratch with architectural modifications (e.g., ALiBi, RoPE, or non-transformer encoders). Prompt-informed embeddings, leveraging both hard and soft prompts, enable extractive summarization and instruction-following capabilities, with recent work unifying prompt-based and instruction-based paradigms for both encoder and decoder architectures.
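To make one of the positional schemes above concrete, here is a rough sketch of an ALiBi-style attention bias. It assumes the geometric slope schedule commonly used when the number of heads is a power of two, and it uses a symmetric distance penalty as one plausible choice for bidirectional encoders; the original ALiBi formulation targets causal attention, so treat the symmetric form as an assumption.

```python
def alibi_slopes(num_heads):
    """Per-head slopes 2^(-8k/n) for k = 1..n (n assumed to be a power of two)."""
    return [2.0 ** (-8.0 * k / num_heads) for k in range(1, num_heads + 1)]

def alibi_bias(seq_len, slope):
    """Bias added to attention logits: a linear penalty on token distance.

    Symmetric variant (assumption): the penalty depends on |i - j|.
    """
    return [[-slope * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]

slopes = alibi_slopes(8)
bias = alibi_bias(3, slopes[0])
```

Since the penalty is a function of distance rather than a learned per-position vector, the same rule applies unchanged to sequences longer than those seen in training.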

Optimization Objectives and Training Paradigms

Multi-stage training, combining weakly supervised pre-finetuning on massive noisy corpora with high-quality supervised fine-tuning, is shown to be critical for both generalization and task-specific performance. Beyond standard CL, the survey reviews auxiliary objectives such as masked language modeling (MLM), replaced token detection (RTD), next-token prediction (NTP), masked next-token prediction (MNTP), Matryoshka Representation Learning (MRL), cosine/angle-based losses, lexicon-based sparse objectives, and knowledge distillation from cross-encoders. The survey highlights the importance of large batch sizes, in-batch negative mining, and distributed training for effective CL.
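The idea behind Matryoshka Representation Learning can be illustrated with a short sketch: a pairwise loss is evaluated on several nested prefixes of the embedding so that truncated vectors remain usable. The averaging over prefix lengths, the toy loss, and all names below are assumptions made for illustration, not the formulation of any specific paper or model.

```python
import math

def truncate_and_normalize(embedding, dim):
    """Keep the first `dim` coordinates and rescale to unit length.

    Assumes the kept prefix is not all zeros.
    """
    prefix = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in prefix))
    return [x / norm for x in prefix]

def matryoshka_loss(loss_fn, queries, positives, dims):
    """Average a pairwise loss over several nested prefix lengths."""
    total = 0.0
    for d in dims:
        q = [truncate_and_normalize(v, d) for v in queries]
        p = [truncate_and_normalize(v, d) for v in positives]
        total += loss_fn(q, p)
    return total / len(dims)

def one_minus_cosine(queries, positives):
    """Toy stand-in loss: mean (1 - cosine) over aligned unit-norm pairs."""
    sims = [sum(a * b for a, b in zip(q, p)) for q, p in zip(queries, positives)]
    return sum(1.0 - s for s in sims) / len(sims)

short = truncate_and_normalize([3.0, 4.0, 12.0], 2)
batch = [[3.0, 4.0, 12.0], [1.0, 2.0, 2.0]]
loss = matryoshka_loss(one_minus_cosine, batch, batch, dims=[1, 2, 3])
```

In practice `loss_fn` would be the contrastive objective itself; at inference time only `truncate_and_normalize` is needed to trade embedding size against quality.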

Data Synthesis and Benchmarking

LLMs are increasingly used to synthesize high-quality training data, including anchor, positive, and hard negative pairs, enabling scalable and diverse data generation across languages, domains, and tasks. Synthetic data is now central to state-of-the-art GPTE training. The survey also discusses the evolution of evaluation benchmarks, with MTEB and MMTEB providing unified, multilingual, and multimodal evaluation frameworks.

PLM Choice: Architecture and Scale

Encoder-only models (BERT, XLM-R) have dominated GPTE, but decoder-only LLMs (Qwen, Mistral, LLaMA) now achieve competitive or superior performance at larger scales. The survey notes that decoder-based models require significantly more parameters to match encoder-based performance, motivating research into efficient scaling (e.g., MoE architectures). Model scale remains a primary determinant of embedding quality, but inference cost and memory constraints are nontrivial for deployment.

Advanced Roles: Multilingual, Multimodal, and Code Embeddings

Multilingual Embeddings

Multilingual PLMs (mBERT, XLM-R, LaBSE, mE5, mGTE) and synthetic data generation enable robust cross-lingual embeddings. The survey details strategies for leveraging monolingual, parallel, and synthetic corpora, and discusses the transferability of CL objectives across languages and the impact of data and model coverage on low-resource scenarios.

Multimodal Embeddings

The integration of PLMs into multimodal embedding models (CLIP, BLIP, ALIGN, E5-V, VLM2Vec, mmE5, UniME, jina-v4) has enabled unified text, image, and video representations. The survey reviews architectural paradigms (separate vs. unified encoders), training objectives (cross-modal CL, instruction tuning, hard negative mining), and data synthesis techniques (e.g., MegaPairs, GME). The shift toward instruction-aware, task-adaptive, and multi-vector representations is emphasized.

Code Embeddings

PLM-based code embeddings leverage both text-code and code-code CL, with models such as CodeBERT, GraphCodeBERT, UniXcoder, CodeT5+, and Codex. The survey highlights the importance of structural information (ASTs, data flow), multi-modal CL, and large-scale, high-quality code-text corpora (CodeSearchNet, CoSQA, APPS, CoRNStack) for effective code representation learning.

Adaptation, Safety, Bias, and Future Directions

Scenario-Specific Adaptation

Supervised fine-tuning (SFT), low-rank adaptation (LoRA), and neural adapters enable efficient adaptation of GPTE models to specific tasks, languages, domains, or modalities. Instruction-following embeddings, inspired by LLM instruction tuning, are shown to improve task generalization and zero-shot performance. The survey notes that generalist models can outperform domain-specific models in some settings, challenging the necessity of specialization.
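To show why LoRA counts as an efficient adaptation method, the sketch below forms the effective weight of one linear layer as the frozen matrix plus a scaled low-rank update. The shapes, the `alpha / r` scaling convention, and the function names are assumptions for illustration; real implementations usually keep the two factors separate instead of materializing the sum.

```python
def matmul(a, b):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A.

    W: frozen weight, shape (d_out, d_in)
    A: trainable factor, shape (r, d_in)
    B: trainable factor, shape (d_out, r)
    """
    r = len(A)
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 2.0]]  # rank r = 1

# With B initialized to zero, the adapted layer starts identical to the frozen one.
unchanged = lora_effective_weight(W, A, [[0.0], [0.0]], alpha=2.0)
adapted = lora_effective_weight(W, A, [[1.0], [2.0]], alpha=2.0)
```

Only `A` and `B` are trained, which amounts to `r * (d_in + d_out)` parameters per layer instead of `d_in * d_out`.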

Safety and Privacy

The survey provides a detailed account of security risks, including data poisoning, backdoor attacks (BadNL, BadCSE), and privacy leakage via embedding inversion (GEIA, ALGEN, Text Revealer). The increasing deployment of GPTE models in sensitive applications necessitates robust defenses against both training-time and inference-time attacks.

Bias and Fairness

Task, domain, language, modal, and social biases are identified as critical challenges. The survey advocates for task-diverse pretraining, domain-adaptive CL, multilingual alignment, balanced modality fusion, and fairness-aware objectives to mitigate these biases and improve robustness, inclusivity, and ethical alignment.

Structural and Reasoning Extensions

The exploitation of structural information (discourse, tables, graphs, code) is highlighted as a key avenue for improving long-range and global semantic understanding. The survey proposes extending CL beyond pairwise optimization to chain and graph structures, and integrating GPTE with reasoning-capable LLMs for explainability, privacy, and robustness. The analogy between embeddings and cognitive memory is invoked to motivate continual, context-aware, and memory-augmented representations.

Conclusion

This survey delivers a comprehensive and technically detailed synthesis of the state of GPTE in the PLM era. It elucidates the architectural, algorithmic, and data-centric advances that have shaped the field, and provides a critical perspective on emerging challenges and future research directions. The integration of PLMs into GPTE has enabled unprecedented generalization, multilinguality, and multimodality, but also introduces new complexities in safety, bias, and interpretability. The survey's roadmap will inform both foundational research and practical deployment of embedding models in increasingly diverse and demanding real-world scenarios.


