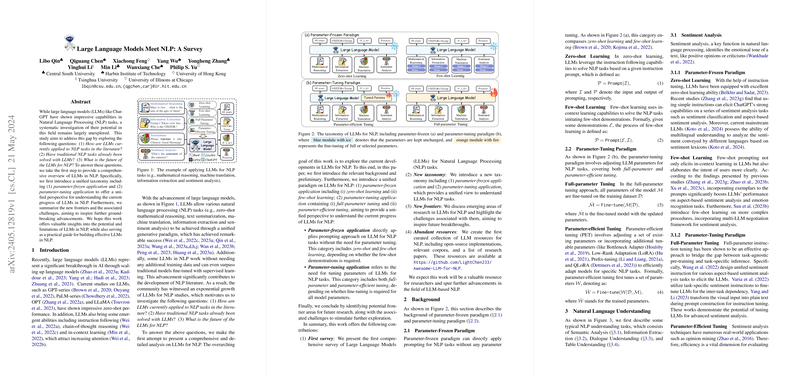

This paper, "LLMs Meet NLP: A Survey" (Qin et al., 21 May 2024), gives a comprehensive overview of how large language models (LLMs) are being applied to a wide range of NLP tasks. The authors propose a unified taxonomy for understanding the current landscape, dividing applications into two main paradigms: Parameter-Frozen and Parameter-Tuning. The survey also examines how far LLMs have gone in addressing traditional NLP challenges and identifies future research frontiers.

The core of the paper's framework lies in its taxonomy:

- Parameter-Frozen Application: This paradigm uses LLMs directly for NLP tasks without updating their parameters.

  - Zero-shot Learning: LLMs perform tasks based solely on an instruction prompt, relying on their instruction-following capabilities.

  - Few-shot Learning: The model is given a few examples (demonstrations) within the prompt to guide its behavior, leveraging in-context learning.

- Parameter-Tuning Application: This paradigm adjusts the LLM's parameters to adapt it to specific NLP tasks.

  - Full-parameter Tuning: All model parameters are fine-tuned on a task-specific dataset.

  - Parameter-efficient Tuning (PET): Only a small subset of existing parameters, or a small set of additional lightweight parameters, is tuned. Techniques such as Adapter, Low-Rank Adaptation (LoRA), Prefix-tuning, and QLoRA fall into this category and substantially reduce compute and memory costs relative to full fine-tuning.
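To make the PET idea concrete, here is a minimal NumPy sketch of a LoRA-style linear layer. It assumes the common formulation in which the frozen weight `W0` is augmented by a trainable low-rank update `(alpha / r) * B @ A`; the class name, shapes, and hyperparameter values are illustrative and are not taken from the survey.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, d_in: int, d_out: int, r: int = 4, alpha: float = 8.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Pretrained weight: kept frozen during adaptation.
        self.W0 = rng.standard_normal((d_out, d_in)) / np.sqrt(d_in)
        # Trainable low-rank factors. B starts at zero, so the adapted layer
        # initially computes exactly the same output as the frozen layer.
        self.A = rng.standard_normal((r, d_in)) * 0.01
        self.B = np.zeros((d_out, r))
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.W0.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size

    def frozen_params(self) -> int:
        return self.W0.size

    def merged_weight(self) -> np.ndarray:
        # After training, the update can be folded into W0 for inference.
        return self.W0 + self.scale * self.B @ self.A


layer = LoRALinear(d_in=1024, d_out=1024, r=8)
print(layer.trainable_params(), layer.frozen_params())  # 16384 1048576
```

Only `A` and `B` would receive gradient updates, about 1.6% of this layer's parameters; because the update is a plain matrix product, it can be merged into `W0` after training so inference cost is unchanged.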

The paper then details the application of these paradigms across various NLP understanding and generation tasks:

Natural Language Understanding (NLU)

- Sentiment Analysis:

  - Parameter-Frozen: Simple instructions enable zero-shot sentiment classification and aspect-based sentiment analysis [zhang2023sentiment, koto2024zero]. Few-shot prompting with exemplars improves performance, especially for aspect-based tasks and emotion recognition [zhang2023sentiment, zhao2023chatgpt]. Multi-LLM negotiation frameworks are also explored [sun2023sentiment].

  - Parameter-Tuning: Full instruction tuning with task-specific or unified instructions can enhance performance on advanced sentiment analysis tasks [wang2022unifiedabsa, varia2022instruction]. PET methods like LoRA are used for efficient adaptation to specific datasets, such as developing emotional support systems on conversational data [qiu2023smile].
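In the Parameter-Frozen setting, the only difference between zero-shot and few-shot sentiment analysis is how the prompt is assembled. A minimal sketch, assuming a completion-style model and a three-way label set; the template wording, `build_prompt`, `parse_label`, and the "fall back to neutral" rule are all hypothetical choices, not the templates used in the cited works.

```python
from typing import Sequence, Tuple

LABELS = ("positive", "negative", "neutral")


def build_prompt(text: str, demos: Sequence[Tuple[str, str]] = ()) -> str:
    """Zero-shot when `demos` is empty; few-shot (in-context) otherwise."""
    lines = [
        "Classify the sentiment of the review as one of: " + ", ".join(LABELS) + ".",
        "",
    ]
    # Demonstrations use the same format as the query, so the model can
    # infer the input-to-label mapping from context alone.
    for demo_text, demo_label in demos:
        lines += [f"Review: {demo_text}", f"Sentiment: {demo_label}", ""]
    lines += [f"Review: {text}", "Sentiment:"]
    return "\n".join(lines)


def parse_label(completion: str) -> str:
    """Map a free-form completion onto the label set (hypothetical fallback: neutral)."""
    words = completion.strip().lower().split()
    first = words[0].strip(".,!") if words else ""
    return first if first in LABELS else "neutral"


zero_shot = build_prompt("The battery died after two days.")
few_shot = build_prompt(
    "The battery died after two days.",
    demos=[("Great screen, fast shipping.", "positive"),
           ("It stopped working in a week.", "negative")],
)
print(few_shot)
```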

- Information Extraction (IE):

  - Parameter-Frozen: Zero-shot prompting leverages LLMs' knowledge for tasks such as named entity recognition (NER) and relation extraction (RE), often by decomposing them into simpler question-answering (QA) subproblems [zhang2023aligning, wei2023zero]. Syntactic prompting and tool augmentation can further improve zero-shot performance [xie-etal-2023-empirical]. Few-shot learning retrieves relevant examples to serve as demonstrations [li2023far]. Some approaches reformulate IE tasks as code generation and solve them with code-centric LLMs [li2023codeie, bi2023codekgc].

  - Parameter-Tuning: Full fine-tuning can be applied to a single dataset, to a standardized format shared across IE subtasks, or to mixed datasets for better generalization [lu2023event, gan2023gieLLM, sainz2023gollie]. PET methods such as dynamic sparse fine-tuning and Lottery Prompt Tuning address efficiency challenges, especially with limited data or in lifelong learning scenarios [das-etal-2023-unified, liang-etal-2023-prompts].
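The QA-decomposition idea for zero-shot NER can be sketched as one short question per entity type, followed by merging the answers. This is a minimal illustration only: the question templates, the helper names, and the rule that discards answer spans not found verbatim in the input (a simple guard against hallucinated entities) are assumptions, not the procedure of the cited papers. Model answers are hard-coded stand-ins.

```python
from typing import Dict, List, Tuple

# Hypothetical question templates, one per entity type (not from the cited papers).
QUESTIONS: Dict[str, str] = {
    "PERSON": "Which people are mentioned in the text?",
    "ORG": "Which organizations are mentioned in the text?",
    "LOC": "Which locations are mentioned in the text?",
}


def build_qa_prompts(text: str) -> Dict[str, str]:
    """Decompose zero-shot NER into one short QA prompt per entity type."""
    return {
        etype: (f"Text: {text}\nQuestion: {question}\n"
                "Answer with a comma-separated list, or 'None'.")
        for etype, question in QUESTIONS.items()
    }


def parse_answer(answer: str, text: str) -> List[str]:
    """Split a model answer into spans, keeping only spans that occur in the text."""
    if answer.strip().lower() in {"", "none"}:
        return []
    spans = [s.strip() for s in answer.split(",")]
    return [s for s in spans if s and s in text]


def merge_answers(text: str, answers: Dict[str, str]) -> List[Tuple[str, str]]:
    """Combine the per-type answers into (span, entity_type) pairs."""
    return [(span, etype)
            for etype, answer in answers.items()
            for span in parse_answer(answer, text)]


text = "Tim Cook announced that Apple will open an office in Berlin."
prompts = build_qa_prompts(text)  # each prompt would be sent to an LLM separately
# Hard-coded stand-ins for model answers; "Paris" is a hallucinated span.
answers = {"PERSON": "Tim Cook", "ORG": "Apple", "LOC": "Berlin, Paris"}
print(merge_answers(text, answers))
# [('Tim Cook', 'PERSON'), ('Apple', 'ORG'), ('Berlin', 'LOC')]
```

Relation extraction can be decomposed the same way, for example by asking one question per candidate relation type between a pair of already-extracted entities.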

- Dialogue Understanding (DU):

  - Parameter-Frozen: Zero-shot prompting is effective for spoken language understanding (SLU) and dialogue state tracking (DST) [pan2023preliminary, he2023can]. Chain-of-thought prompting enables step-by-step reasoning [gao2023self]. Framing SLU/DST as agent systems or as code generation tasks can improve performance [zhangseagull, wu2023semantic]. Zero-shot methods have also been applied to real-world scenarios such as dialogue management [chung2023instructtods]. Few-shot learning uses demonstrations, often retrieved with diversity in mind to avoid overfitting [hu2022context, king2023diverse]. Integrating DST with agents via in-context learning is also explored [lin2023toward].

  - Parameter-Tuning: Full fine-tuning unifies structured dialogue tasks into a text-to-text format for improved generalization [xie2022unifiedskg]. Including demonstrations in the input format during tuning also yields good results [gupta2022show]. PET via LoRA is used for efficient DST adaptation [feng2023towards]. A soft-prompt pool based on conversation history is another PET strategy [liu2023prompt].
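Diversity-aware demonstration retrieval for few-shot DST can be illustrated with maximal marginal relevance (MMR), a standard heuristic that trades relevance to the query against redundancy with examples already chosen. MMR is swapped in here for illustration and is not necessarily the selection method of the cited papers; word-overlap (Jaccard) similarity stands in for a learned retriever, and the example utterances are hypothetical.

```python
from typing import List, Set


def tokens(s: str) -> Set[str]:
    return set(s.lower().split())


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity; a stand-in for an embedding-based retriever."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def select_demos(query: str, pool: List[str], k: int = 2, lam: float = 0.5) -> List[str]:
    """Greedy MMR: lam weights relevance to the query, (1 - lam) penalizes
    similarity to demonstrations that were already selected."""
    selected: List[str] = []
    candidates = list(pool)
    while candidates and len(selected) < k:
        def mmr(c: str) -> float:
            redundancy = max((jaccard(c, s) for s in selected), default=0.0)
            return lam * jaccard(query, c) - (1 - lam) * redundancy
        best = max(candidates, key=mmr)
        selected.append(best)
        candidates.remove(best)
    return selected


query = "book a cheap hotel in the north"
pool = [
    "book a cheap hotel in the centre",
    "book a cheap hotel in the east",
    "find a train to cambridge on monday",
    "reserve a table at a cheap restaurant in the north",
]
print(select_demos(query, pool))           # relevance balanced with diversity
print(select_demos(query, pool, lam=1.0))  # pure relevance: near-duplicates
```

With `lam=1.0` the two near-identical hotel requests are both chosen; with `lam=0.5` the second slot goes to the restaurant request, which adds a different domain to the prompt.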

- Table Understanding (TU):

  - Parameter-Frozen: Zero-shot approaches handle tabular data by breaking down large tables or generating parsing statements that focus on the relevant data [ye2023large, sui2023gpt4table, patnaik2024cabinet]. Incorporating external knowledge enhances performance [sui2023tap4LLM]. Generating Pandas queries without exposing the underlying table data to the model addresses data-leakage concerns [ye2024dataframe]. Few-shot learning employs hybrid prompts and retrieval-of-thought to improve example quality [luo2023hrot]. Redefining the task as code generation (e.g., producing Python or SQL) or as an agent task with external tools is also common [cheng2022binding, li2023sheetcopilot, zhang-etal-2023-crt].

  - Parameter-Tuning: Full fine-tuning on table-related data through instruction tuning improves generalization [li2023table, xie2022unifiedskg]. Integrating RAG components with fine-tuned LLMs also supports table understanding [xue2023db]. PET, particularly LoRA, is used for efficient instruction tuning, and variants such as LongLoRA help handle long table inputs [zhang2023jellyfish, zhang2023tablellama].
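When table QA is redefined as code generation, the model writes a query and an interpreter computes the answer. The sketch below uses Python's built-in `sqlite3`; in the same spirit as schema-only prompting, the prompt shows the model only the table schema, and the query runs locally. The table, the prompt template, and the hard-coded "generated" SQL (standing in for a model output) are all hypothetical.

```python
import sqlite3
from typing import List, Sequence, Tuple

COLUMNS = ("region", "quarter", "revenue")


def schema_prompt(table: str, columns: Sequence[str], question: str) -> str:
    """Build a prompt that shows the model only the schema, never the rows."""
    return (f"Table {table}({', '.join(columns)})\n"
            f"Write one SQLite query that answers: {question}\nSQL:")


def run_generated_sql(rows: List[Tuple], sql: str) -> List[Tuple]:
    """Execute a model-generated query locally, on an in-memory copy of the table."""
    # Minimal check, not a security boundary: accept read-only SELECT queries only.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT queries are allowed")
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, revenue REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


rows = [("north", "Q1", 120.0), ("south", "Q1", 90.0), ("north", "Q2", 150.0)]
prompt = schema_prompt("sales", COLUMNS, "What is the total revenue of the north region?")
# Hard-coded stand-in for the SQL an LLM might return for `prompt`.
generated_sql = "SELECT SUM(revenue) FROM sales WHERE region = 'north'"
print(run_generated_sql(rows, generated_sql))  # [(270.0,)]
```

Delegating aggregation to the database avoids asking the model to add numbers itself, and the rows never have to appear in the prompt.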

Natural Language Generation (NLG)

- Summarization:

  - Parameter-Frozen: Zero-shot summarization achieves impressive performance with simple prompts, though studies note a bias towards the initial segments of the input text [Goyal2022NewsSA, Ravaut2023OnCU]. Benchmarking indicates that instruction tuning, rather than model size, is key to zero-shot summarization quality [Zhang2023BenchmarkingLL]. Few-shot learning with in-context examples and dialog-like approaches improves faithfulness [Zhang2023ExtractiveSV]. Techniques such as "Chain of Density" prompting iteratively produce increasingly entity-dense summaries [Adams2023FromST].

  - Parameter-Tuning: Full fine-tuning adapts models to specific tasks such as dialogue summarization, or uses question-driven approaches for more controllable output [Li2022DIONYSUSAP, Pagnoni2022SocraticPQ]. PET strategies such as prefix-tuning enhance domain adaptation and generalization [zhao-etal-2022-domain, Yuan2022FewshotQS]. Combining prompt tuning with discrete prompts enables controllable abstractive summarization [Ravaut2023PromptSumPC].
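Prefix-tuning keeps the model frozen and learns a short sequence of continuous vectors that the attention layers can attend to. A minimal single-head NumPy sketch, assuming one attention layer, no causal mask, and directly parameterized prefixes (the original method reparameterizes them through an MLP during training); all names and sizes are illustrative.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def attention_with_prefix(x, Wq, Wk, Wv, prefix_k, prefix_v):
    """Single-head self-attention in which trainable prefix keys/values are
    prepended to the frozen projections of the real tokens.

    x: (seq, d); Wq, Wk, Wv: (d, d), frozen; prefix_k, prefix_v: (p, d), trainable.
    """
    q = x @ Wq
    k = np.concatenate([prefix_k, x @ Wk], axis=0)  # (p + seq, d)
    v = np.concatenate([prefix_v, x @ Wv], axis=0)  # (p + seq, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])         # (seq, p + seq)
    return softmax(scores) @ v                      # (seq, d)


rng = np.random.default_rng(0)
d, seq, p = 16, 5, 3
x = rng.standard_normal((seq, d))
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
prefix_k = rng.standard_normal((p, d)) * 0.1  # the only trainable parameters
prefix_v = rng.standard_normal((p, d)) * 0.1
out = attention_with_prefix(x, Wq, Wk, Wv, prefix_k, prefix_v)
print(out.shape)  # (5, 16)
```

Because only `prefix_k` and `prefix_v` change during training, a separate small prefix can be stored per domain while the same frozen model is shared, which is what makes the approach attractive for domain adaptation.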

- Code Generation (CG):

  - Parameter-Frozen: Code LLMs trained on both code and natural language show strong zero-shot capabilities [nijkamp2022codegen, roziere2023code]. CodeT5+ improves performance with a flexible encoder-decoder architecture whose components can be combined for different code tasks [wang2023codet5+]. Few-shot learning allows accurate code to be generated from minimal examples [chen2021evaluating, allal2023santacoder]. Smaller yet capable models trained on high-quality, textbook-style data are also emerging [li2023textbooks].

  - Parameter-Tuning: Full fine-tuning incorporates code-specific pre-training (e.g., CodeT5) or reinforcement learning driven by compiler feedback or execution results (e.g., CodeRL, StepCoder) to improve performance [wang2021codet5, le2022coderl]. PET with adapters and LoRA has been explored for efficiency, though generative performance can lag behind full fine-tuning [Ayupov2022ParameterEfficientFO].
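RL from execution feedback needs a reward that distinguishes how badly a generated program failed. A minimal sketch of such a graded reward: the four outcome levels mirror the kind of scheme used by execution-feedback methods such as CodeRL, but the specific values, `execution_reward`, and the test format are illustrative assumptions. It runs code with `exec` and no sandbox, so it is only suitable for trusted toy inputs.

```python
from typing import List, Tuple

# Illustrative reward levels (assumed values): worse failures get lower rewards.
REWARDS = {"compile_error": -1.0, "runtime_error": -0.6, "failed_test": -0.3, "passed": 1.0}


def execution_reward(code: str, func_name: str, tests: List[Tuple[tuple, object]]) -> float:
    """Score a generated program by compiling it and running unit tests."""
    try:
        compiled = compile(code, "<generated>", "exec")
    except SyntaxError:
        return REWARDS["compile_error"]
    namespace: dict = {}
    try:
        exec(compiled, namespace)  # a real system must sandbox untrusted code
        fn = namespace[func_name]  # a missing function counts as a runtime error
        for args, expected in tests:
            if fn(*args) != expected:
                return REWARDS["failed_test"]
    except Exception:
        return REWARDS["runtime_error"]
    return REWARDS["passed"]


tests = [((2, 3), 5), ((-1, 1), 0)]
good = "def add(a, b):\n    return a + b\n"
wrong = "def add(a, b):\n    return a - b\n"
broken = "def add(a, b) return a + b"
crash = "def add(a, b):\n    return a + c\n"
for name, code in [("good", good), ("wrong", wrong), ("broken", broken), ("crash", crash)]:
    print(name, execution_reward(code, "add", tests))
```

A graded signal like this gives the policy more information than a binary pass/fail, since a program that compiles but fails a test is rewarded more than one that does not compile at all.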

- Machine Translation (MT):

  - Parameter-Frozen: Zero-shot MT is enhanced through cross-lingual or multilingual instruction tuning [Zhu2023ExtrapolatingLL, Wei2023PolyLMAO]. Bilingual models such as OpenBA target specific language pairs efficiently [Li2023OpenBAAO]. Few-shot learning uses techniques such as Chain-of-Dictionary prompting to handle rare words in low-resource languages [Lu2023ChainofDictionaryPE]. The choice of demonstration examples significantly affects in-context translation quality [Raunak2023DissectingIL].

  - Parameter-Tuning: Full fine-tuning is used to improve accuracy, disambiguate polysemous words, and support real-time and context-aware translation [Iyer2023TowardsED, Moslem2023FinetuningLL]. Contrastive Preference Optimization (CPO) further refines translation quality [Xu2024ContrastivePO]. PET has been validated empirically across languages and model sizes, with adapters proving effective for efficient adaptation [Ustun2022WhenDP, Alves2023SteeringLL].
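Chain-of-Dictionary prompting supplies the model with chained multilingual dictionary entries for rare source words before asking for the translation. A minimal prompt-builder sketch; the hint wording, the lexicon format, and `chain_of_dictionary_prompt` are assumptions for illustration, not the exact template from the cited paper.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical lexicon: rare source word -> [(language, translation), ...].
LEXICON: Dict[str, List[Tuple[str, str]]] = {
    "glacier": [("Spanish", "glaciar"), ("German", "Gletscher")],
}


def chain_of_dictionary_prompt(source: str, src_lang: str, tgt_lang: str,
                               lexicon: Dict[str, List[Tuple[str, str]]]) -> str:
    """Prefix a translation request with chained multilingual dictionary hints
    for every lexicon word that appears in the source sentence."""
    source_words = set(re.findall(r"\w+", source.lower()))
    hints = []
    for word, translations in lexicon.items():
        if word in source_words:
            chain = [f'"{word}"'] + [f'"{t}" ({lang})' for lang, t in translations]
            hints.append(" means ".join(chain) + ".")
    request = f"Translate the following {src_lang} text into {tgt_lang}:\n{source}"
    return "\n".join(hints) + "\n\n" + request if hints else request


print(chain_of_dictionary_prompt("The glacier is retreating.", "English", "Spanish", LEXICON))
```

Sentences without any lexicon word fall back to a plain translation request, so the hints only add prompt length where they are likely to help.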

- Mathematical Reasoning (MR):

  - Parameter-Frozen: Vanilla zero-shot prompting struggles with multi-step mathematical problems. Zero-shot Chain-of-Thought, which adds "Let's think step by step" to the prompt, elicits intermediate reasoning [kojima2022large]. Self-consistency decoding improves results by sampling multiple reasoning paths and taking a majority vote over their final answers [wang2023selfconsistency]. Few-shot CoT uses demonstrations with explicit reasoning chains [wei2022chain]. Automatic demonstration construction reduces the manual effort of writing these chains [zhang2022automatic]. Program-aided approaches such as PAL generate programs as intermediate steps and delegate the calculation to an interpreter for precise results [gao2023pal].

  - Parameter-Tuning: Full fine-tuning leverages methods such as Reinforcement Learning from Evol-Instruct Feedback (RLEIF) or instruction tuning on mathematical datasets [luo2023wizardmath, yue2023mammoth]. Distilling rationales from large models into smaller ones is also explored [ho-etal-2023-large]. Tool usage (e.g., calling a calculator, as in Toolformer) can be integrated [schick2023toolformer]. PET techniques such as LoRA and general adapter frameworks make fine-tuning more accessible [hu2022lora, hu2023LLMadapters].
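The aggregation step of self-consistency decoding is a majority vote over the final answers of independently sampled reasoning paths. A minimal sketch, assuming the paths have already been sampled (hard-coded strings stand in for model outputs) and that the last number in each path is its answer, a simple heuristic rather than the cited paper's exact answer parser.

```python
import re
from collections import Counter
from typing import List, Optional


def extract_answer(reasoning: str) -> Optional[str]:
    """Heuristic: take the last number in a reasoning path as its final answer."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", reasoning)
    return numbers[-1] if numbers else None


def self_consistency(paths: List[str]) -> Optional[str]:
    """Majority vote over the final answers of independently sampled paths."""
    answers = [a for a in map(extract_answer, paths) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Stand-ins for paths sampled from a model at non-zero temperature.
sampled_paths = [
    "She has 3 boxes of 4 apples, so 3 * 4 = 12 apples.",
    "3 boxes with 4 apples each gives 12.",
    "3 + 4 = 7 apples.",
]
print(self_consistency(sampled_paths))  # 12
```

One faulty path ("3 + 4 = 7") is outvoted by two consistent ones, which is the intuition behind the method: correct reasoning paths tend to agree on the answer more often than incorrect ones do.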

The paper concludes by outlining critical future directions and challenges:

- Multilingual LLMs: Expanding LLMs' success beyond English, focusing on improving performance in low-resource languages and enhancing cross-lingual alignment.

- Multi-modal LLMs: Integrating other modalities (like vision) to enable complex multi-modal reasoning and developing effective multi-modal interaction mechanisms for NLP tasks.

- Tool-usage: Utilizing LLMs as controllers for external tools and agents, addressing challenges in appropriate tool selection and efficient planning/orchestration of multiple tools.

- X-of-thought: Refining reasoning processes (like Chain-of-Thought) for complex problems, focusing on universal step decomposition and integrating knowledge from different prompting strategies.

- Hallucination: Mitigating the generation of factually incorrect or unwanted content, developing efficient evaluation benchmarks, and exploring how controlled "hallucination" might stimulate creativity.

- Safety: Addressing safety concerns like copyright, toxicity, bias, and psychological safety by building effective benchmarks and tackling multilingual safety risks.

In summary, the paper serves as a valuable resource by systematically categorizing and detailing the practical applications of LLMs in NLP across different tasks and implementation paradigms, while also charting the course for future research in critical areas.