Yume: An Interactive World Generation Model (2507.17744v1)

Abstract: Yume aims to use images, text, or videos to create an interactive, realistic, and dynamic world, which allows exploration and control using peripheral devices or neural signals. In this report, we present a preview version of Yume, which creates a dynamic world from an input image and allows exploration of the world using keyboard actions. To achieve this high-fidelity and interactive video world generation, we introduce a well-designed framework consisting of four main components: camera motion quantization, video generation architecture, advanced sampler, and model acceleration. First, we quantize camera motions for stable training and user-friendly interaction using keyboard inputs. Then, we introduce the Masked Video Diffusion Transformer (MVDT) with a memory module for infinite video generation in an autoregressive manner. After that, the training-free Anti-Artifact Mechanism (AAM) and Time Travel Sampling based on Stochastic Differential Equations (TTS-SDE) are introduced to the sampler for better visual quality and more precise control. Moreover, we investigate model acceleration by synergistic optimization of adversarial distillation and caching mechanisms. We use the high-quality world exploration dataset Sekai to train Yume, and it achieves remarkable results in diverse scenes and applications. All data, codebase, and model weights are available at https://github.com/stdstu12/YUME. Yume will be updated monthly to achieve its original goal. Project page: https://stdstu12.github.io/YUME-Project/.

Summary

- The paper presents a novel framework that enables infinite-length video generation from a single image using discretized camera motion control and autoregressive techniques.

- It introduces a Masked Video Diffusion Transformer (MVDT) that improves structural consistency and reduces memory overhead, together with training-free sampling strategies (AAM and TTS-SDE) that mitigate artifacts and sharpen control.

- The model outperforms baselines in instruction-following and visual quality while leveraging adversarial distillation and caching to accelerate inference.

Introduction and Motivation

Yume presents a comprehensive framework for interactive, high-fidelity world generation, enabling users to explore dynamic environments synthesized from a single input image via continuous keyboard control. The model addresses the limitations of prior video diffusion approaches in terms of controllability, visual realism, and scalability to complex real-world scenes. Yume’s architecture is designed to support infinite-length, autoregressive video generation, with a focus on robust camera motion control, artifact mitigation, and efficient inference.

Figure 1: Yume enables streaming, interactive world generation from an input image, supporting continuous keyboard-driven exploration.

Core Components and Methodology

Yume’s system is structured around four principal innovations: quantized camera motion control, a masked video diffusion transformer (MVDT) architecture, advanced sampling strategies, and model acceleration via adversarial distillation and caching.

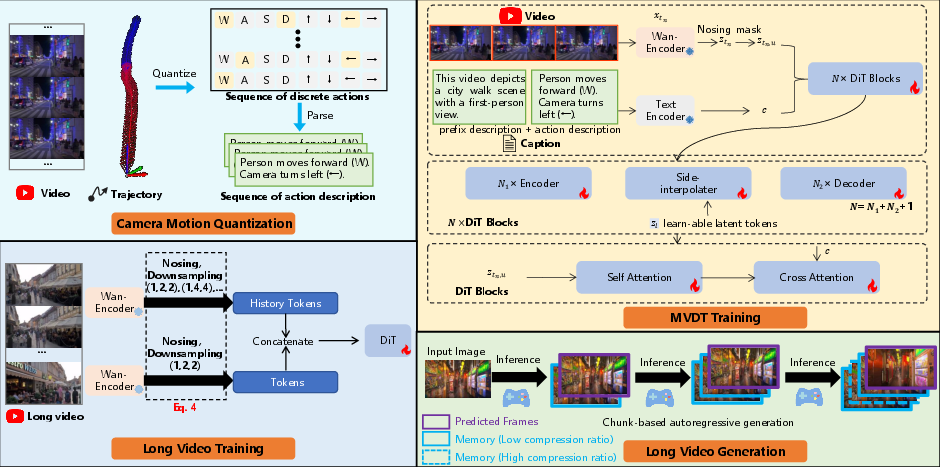

Figure 2: The four core components of Yume: camera motion quantization, model architecture, long video training, and generation.

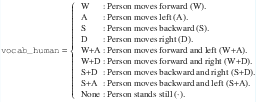

Quantized Camera Motion (QCM)

Traditional video diffusion models rely on dense, per-frame camera pose matrices, which are difficult to annotate and often result in unstable or unintuitive control. Yume introduces a quantization scheme that discretizes camera trajectories into a finite set of canonical actions (e.g., move forward, turn left, tilt up), each mapped to a relative transformation. This quantization is performed by matching the actual relative transformation between frames to the closest canonical action, as formalized in Algorithm 1 of the paper. The resulting action sequence is then injected as a textual condition, enabling precise, low-latency keyboard-based control without additional learnable modules.
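The matching step can be sketched as a nearest-neighbour search over relative poses. This is a minimal sketch, assuming 4x4 camera-to-world matrices in a camera frame with x right, y down and z forward; the action set, the step sizes (`STEP`, `ANGLE`) and the distance that mixes translation and rotation are hypothetical choices, not the values used in Algorithm 1.

```python
import numpy as np

STEP = 0.1               # hypothetical translation per quantized action (scene units)
ANGLE = np.deg2rad(5.0)  # hypothetical rotation per quantized action


def rot_y(a):
    """Yaw about the camera's y axis (x right, y down, z forward): positive turns right."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_x(a):
    """Pitch about the camera's x axis: positive tilts the view up (y points down)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def make_pose(R=None, t=(0.0, 0.0, 0.0)):
    """4x4 rigid transform from a rotation matrix and a translation vector."""
    T = np.eye(4)
    T[:3, :3] = np.eye(3) if R is None else R
    T[:3, 3] = t
    return T


# Hypothetical canonical action vocabulary: each action is a relative transform.
CANONICAL = {
    "stay": make_pose(),
    "forward": make_pose(t=(0.0, 0.0, STEP)),
    "backward": make_pose(t=(0.0, 0.0, -STEP)),
    "left": make_pose(t=(-STEP, 0.0, 0.0)),
    "right": make_pose(t=(STEP, 0.0, 0.0)),
    "turn_left": make_pose(R=rot_y(-ANGLE)),
    "turn_right": make_pose(R=rot_y(ANGLE)),
    "tilt_up": make_pose(R=rot_x(ANGLE)),
    "tilt_down": make_pose(R=rot_x(-ANGLE)),
}


def pose_distance(A, B):
    """Translation gap plus geodesic rotation angle, each normalised by its step size."""
    dt = np.linalg.norm(A[:3, 3] - B[:3, 3])
    R = A[:3, :3].T @ B[:3, :3]
    cos_angle = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    return dt / STEP + np.arccos(cos_angle) / ANGLE


def quantize_trajectory(poses):
    """Map each pair of consecutive camera-to-world poses to the nearest canonical action."""
    actions = []
    for T0, T1 in zip(poses[:-1], poses[1:]):
        rel = np.linalg.inv(T0) @ T1  # motion of the next frame, in the current camera frame
        actions.append(min(CANONICAL, key=lambda name: pose_distance(rel, CANONICAL[name])))
    return actions


# Usage: two clean forward steps, then a noisy left turn with slight forward drift.
noisy_turn = make_pose(R=rot_y(-0.8 * ANGLE), t=(0.0, 0.0, 0.02))
poses = [make_pose()]
for step in (CANONICAL["forward"], CANONICAL["forward"], noisy_turn):
    poses.append(poses[-1] @ step)
print(quantize_trajectory(poses))  # ['forward', 'forward', 'turn_left']
```

Normalising each term by its step size keeps translation and rotation errors on a comparable scale, so a slightly drifting turn still snaps to `turn_left`.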

Figure 3: Example vocabulary for translational motion, mapping discrete actions to natural language descriptions.

Figure 4: Example vocabulary for rotational motion, supporting intuitive camera orientation control.
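Because the quantized actions are injected as text, keyboard input only needs a lookup from keys to phrases. The sketch below assumes WASD for translation and arrow keys for rotation; the key names and the phrasings in `ACTION_TO_TEXT` are hypothetical placeholders rather than the vocabulary shown in Figures 3 and 4.

```python
from itertools import groupby

# Hypothetical key bindings and phrasings (not the paper's exact vocabulary).
KEY_TO_ACTION = {
    "W": "forward", "S": "backward", "A": "left", "D": "right",
    "Up": "tilt_up", "Down": "tilt_down", "Left": "turn_left", "Right": "turn_right",
}
ACTION_TO_TEXT = {
    "forward": "Camera moves forward.",
    "backward": "Camera moves backward.",
    "left": "Camera moves to the left.",
    "right": "Camera moves to the right.",
    "turn_left": "Camera turns left.",
    "turn_right": "Camera turns right.",
    "tilt_up": "Camera tilts up.",
    "tilt_down": "Camera tilts down.",
    "stay": "Camera remains still.",
}


def keys_to_prompt(keys, scene_caption):
    """Build a text condition: the scene caption followed by one phrase per run of actions."""
    actions = [KEY_TO_ACTION.get(k, "stay") for k in keys]      # unknown keys mean no motion
    phrases = [ACTION_TO_TEXT[a] for a, _ in groupby(actions)]  # a held key yields one phrase
    return " ".join([scene_caption] + phrases)


print(keys_to_prompt(["W", "W", "Left"], "A rainy street at night."))
# A rainy street at night. Camera moves forward. Camera turns left.
```

Collapsing repeated presses with `itertools.groupby` keeps the prompt short when a key is held down.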

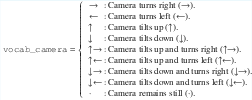

Masked Video Diffusion Transformer (MVDT)

Yume’s backbone is a DiT-based video diffusion model augmented with masked representation learning. The MVDT architecture applies stochastic masking to input video tokens, focusing computation on the visible tokens and filling in the masked content with a side-interpolator. This design improves structural consistency across frames and reduces memory and computation overhead. The model is trained end-to-end, with masking applied only during training to encourage robust feature learning.
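The masking idea can be illustrated with MAE-style token dropping. In this simplified sketch the DiT encoder is a single random linear layer, and the learned side-interpolator is replaced by a hypothetical softmax average over visible tokens weighted by positional similarity; only the mechanics (drop tokens, compute on the visible ones, refill the masked positions) are meant to mirror the description above.

```python
import numpy as np


def random_token_mask(num_tokens, mask_ratio, rng):
    """MAE-style masking: return sorted indices of visible and masked tokens."""
    num_keep = int(round(num_tokens * (1.0 - mask_ratio)))
    order = rng.permutation(num_tokens)
    return np.sort(order[:num_keep]), np.sort(order[num_keep:])


def side_interpolate(visible_feats, visible_idx, masked_idx, pos_emb):
    """Toy stand-in for the side-interpolator: each masked position takes a
    softmax-weighted average of visible features, weighted by positional similarity."""
    num_tokens, dim = pos_emb.shape[0], visible_feats.shape[1]
    out = np.zeros((num_tokens, dim))
    out[visible_idx] = visible_feats
    scores = pos_emb[masked_idx] @ pos_emb[visible_idx].T  # (num_masked, num_visible)
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    out[masked_idx] = weights @ visible_feats
    return out


rng = np.random.default_rng(0)
N, D = 64, 16                                     # hypothetical: 64 video tokens of width 16
tokens = rng.standard_normal((N, D))
pos_emb = rng.standard_normal((N, D))
W_enc = rng.standard_normal((D, D)) / np.sqrt(D)  # stand-in for the DiT encoder blocks

vis, msk = random_token_mask(N, mask_ratio=0.5, rng=rng)
encoded = np.tanh(tokens[vis] @ W_enc)            # the heavy computation touches visible tokens only
full = side_interpolate(encoded, vis, msk, pos_emb)
print(len(vis), len(msk), full.shape)             # 32 32 (64, 16)
```

At inference, where masking is disabled, calling `random_token_mask` with `mask_ratio=0.0` keeps every token visible and leaves the interpolator nothing to fill.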

Long-Form Video Generation

To support infinite-length, temporally coherent video, Yume employs a chunk-based autoregressive generation strategy. Historical frames are compressed using a hierarchical Patchify module (with increasing spatiotemporal compression for older frames), and concatenated with newly generated segments. This approach, inspired by FramePack, preserves high-resolution context for recent frames while maintaining long-term consistency.

Figure 5: Long-form video generation method, leveraging hierarchical compression and autoregressive chunking.
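A rough way to see why hierarchical compression keeps long histories affordable is to count tokens per history frame. The sketch assumes a 32x32 latent and a hypothetical patch-size schedule that coarsens with frame age; it covers only the spatial part, whereas the module described above also compresses along time.

```python
import math


def patch_size(age):
    """Hypothetical spatial patch size (h, w) for a history frame `age` steps back."""
    if age == 0:
        return (2, 2)    # most recent frame: finest patches, most tokens
    if age <= 2:
        return (4, 4)
    if age <= 6:
        return (8, 8)
    return (16, 16)      # distant history: coarsest patches, fewest tokens


def history_tokens(num_frames, lat_h=32, lat_w=32):
    """Token count contributed by each history frame (age 0 = most recent)."""
    counts = []
    for age in range(num_frames):
        ph, pw = patch_size(age)
        counts.append(math.ceil(lat_h / ph) * math.ceil(lat_w / pw))
    return counts


counts = history_tokens(20)
print(counts[:8], sum(counts))  # [256, 64, 64, 16, 16, 16, 16, 4] 500
print(20 * counts[0])           # 5120 tokens if every frame kept the finest patches
```

Under this schedule, every frame older than six steps adds only 4 tokens, so the context grows slowly while the newest frame keeps full detail.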

Advanced Sampler: AAM and TTS-SDE

Yume introduces two key sampling innovations:

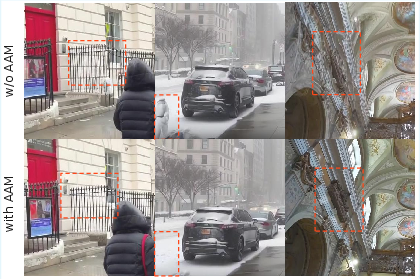

- Anti-Artifact Mechanism (AAM): A training-free, two-stage denoising process that refines high-frequency details by recombining low-frequency components from an initial generation with high-frequency components from a refinement pass. This approach, inspired by DDNM and DLSS, significantly reduces visual artifacts in complex scenes without additional training.

- Time Travel Sampling with SDE (TTS-SDE): An SDE-based sampling method that leverages information from future denoising steps to guide earlier ones, introducing controlled stochasticity to improve both sharpness and instruction-following. TTS-SDE outperforms standard ODE and SDE samplers in instruction adherence, as shown in ablation studies.
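The low/high-frequency recombination behind AAM can be sketched with a 2D FFT. This is a minimal sketch under stated assumptions: the radial cutoff, the hard mask and applying the split directly to two latents are illustrative choices, while the source describes the recombination as part of a two-stage denoising process rather than a single post-hoc blend.

```python
import numpy as np


def lowpass_mask(h, w, cutoff):
    """Radial low-pass mask on the centred 2D frequency grid (frequencies in cycles/sample)."""
    fy = np.fft.fftshift(np.fft.fftfreq(h))
    fx = np.fft.fftshift(np.fft.fftfreq(w))
    radius = np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)
    return (radius <= cutoff).astype(float)


def recombine(initial, refined, cutoff=0.1):
    """Keep low frequencies of `initial` and high frequencies of `refined`.

    Works on arrays whose last two axes are spatial (e.g. channels x H x W latents)."""
    h, w = initial.shape[-2:]
    m = lowpass_mask(h, w, cutoff)
    f_init = np.fft.fftshift(np.fft.fft2(initial), axes=(-2, -1))
    f_ref = np.fft.fftshift(np.fft.fft2(refined), axes=(-2, -1))
    mixed = m * f_init + (1.0 - m) * f_ref
    return np.real(np.fft.ifft2(np.fft.ifftshift(mixed, axes=(-2, -1))))


rng = np.random.default_rng(0)
initial = rng.standard_normal((4, 32, 32))  # e.g. a 4-channel latent from the first pass
refined = rng.standard_normal((4, 32, 32))  # latent from the refinement pass
out = recombine(initial, refined)
print(out.shape)  # (4, 32, 32)
```

A hard radial mask is the simplest choice; a smooth transition (for example a Gaussian roll-off) would reduce ringing around the cutoff frequency.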

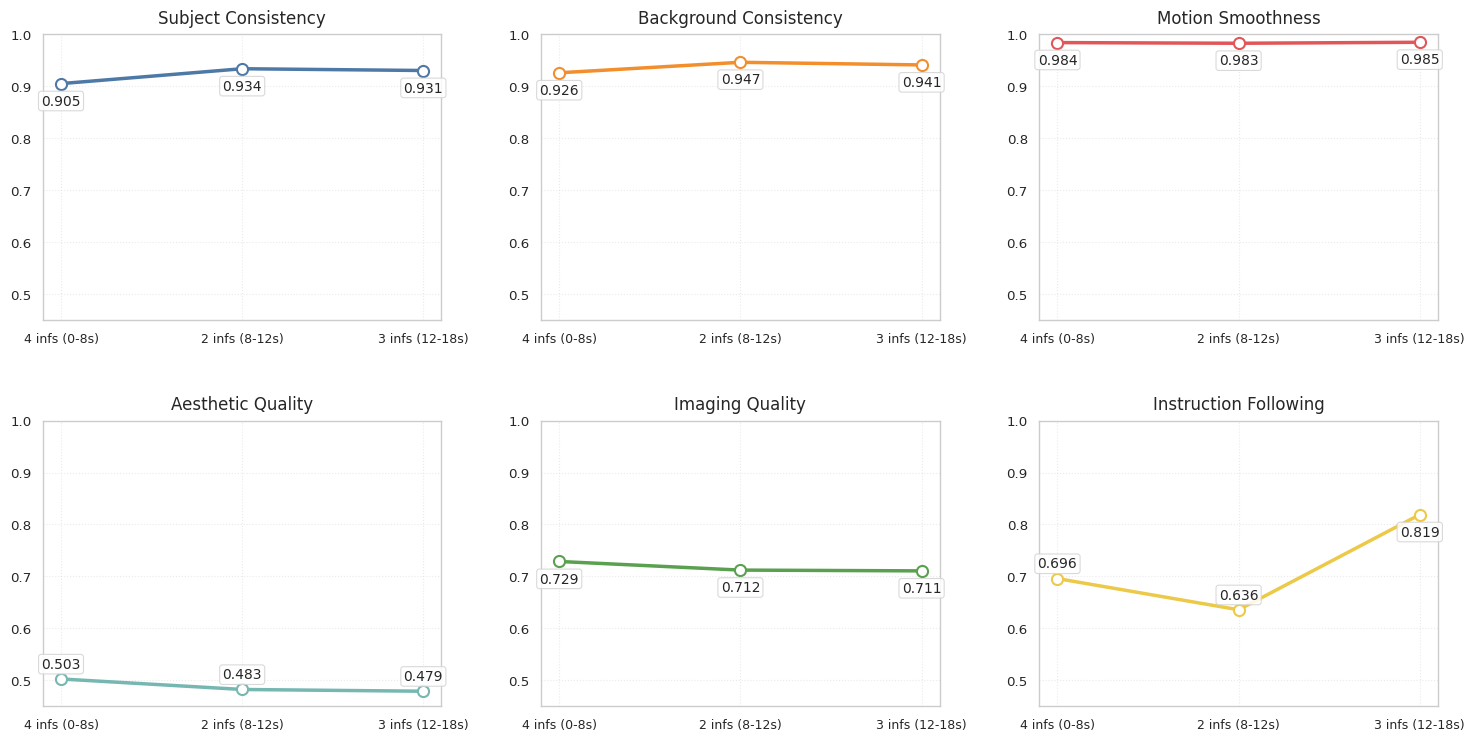

Figure 6: Metric dynamics in long-video generation, demonstrating stability and instruction-following over multiple extrapolations with TTS-SDE.
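The general idea of borrowing information from a later denoising step and then re-injecting controlled noise can be illustrated on a toy problem. The sketch below is not Yume's TTS-SDE update rule, which this summary does not spell out: it assumes a rectified-flow parameterisation, replaces the network with the exact velocity for a single-point data distribution (`MU`), and uses a hypothetical `lookahead` depth and noise-mixing weight `eta`.

```python
import numpy as np

MU = np.array([2.0, -1.0])  # toy "data distribution": a single point


def velocity(x, t):
    """Exact rectified-flow velocity for data concentrated at MU (stand-in for the DiT).

    Convention: x_t = (1 - t) * x0 + t * eps, so v = eps - x0, with t = 1 pure noise."""
    return (x - MU) / t


def predict_x0(x, t):
    return x - t * velocity(x, t)


def time_travel_sample(x, steps=20, lookahead=3, eta=0.5, rng=None):
    """Lookahead-and-renoise sampler in the spirit of time-travel sampling (toy)."""
    rng = np.random.default_rng(0) if rng is None else rng
    ts = np.linspace(1.0, 0.0, steps + 1)
    for i in range(steps):
        t, t_next = ts[i], ts[i + 1]
        # 1) Travel ahead with deterministic Euler steps, stopping before t = 0.
        j_end = min(i + lookahead, steps - 1)
        y = x
        for j in range(i, j_end):
            y = y + (ts[j + 1] - ts[j]) * velocity(y, ts[j])
        # 2) Use the clean-sample estimate from that "future" time to guide this step.
        x0_future = predict_x0(y, ts[j_end])
        # 3) Re-noise to t_next, mixing the noise implied by x with fresh noise (SDE part).
        eps_hat = (x - (1.0 - t) * x0_future) / t
        noise = np.sqrt(1.0 - eta**2) * eps_hat + eta * rng.standard_normal(x.shape)
        x = (1.0 - t_next) * x0_future + t_next * noise
    return x


x_T = np.random.default_rng(1).standard_normal(2)
print(time_travel_sample(x_T))  # lands on MU in this toy
```

Setting `eta=0` removes the fresh noise and makes each step deterministic; a larger `eta` adds the stochasticity that an SDE sampler relies on.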

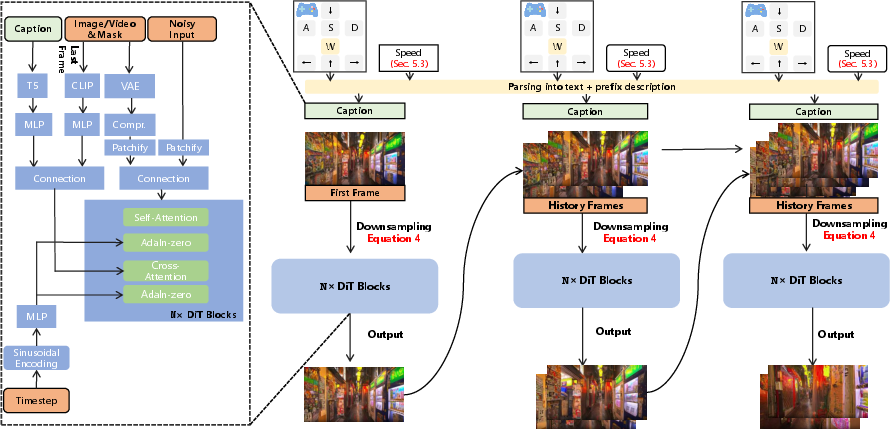

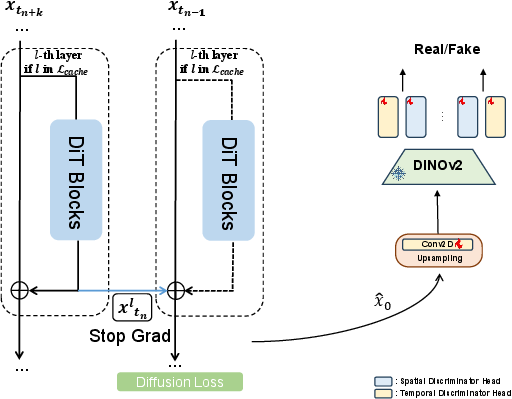

Model Acceleration: Adversarial Distillation and Caching

Yume addresses the high computational cost of diffusion-based video generation by jointly optimizing adversarial distillation and cache acceleration:

- Adversarial Distillation: The denoising process is distilled into fewer steps using a GAN-based loss, preserving visual quality while reducing inference time. The discriminator is adapted from OSV for memory efficiency.

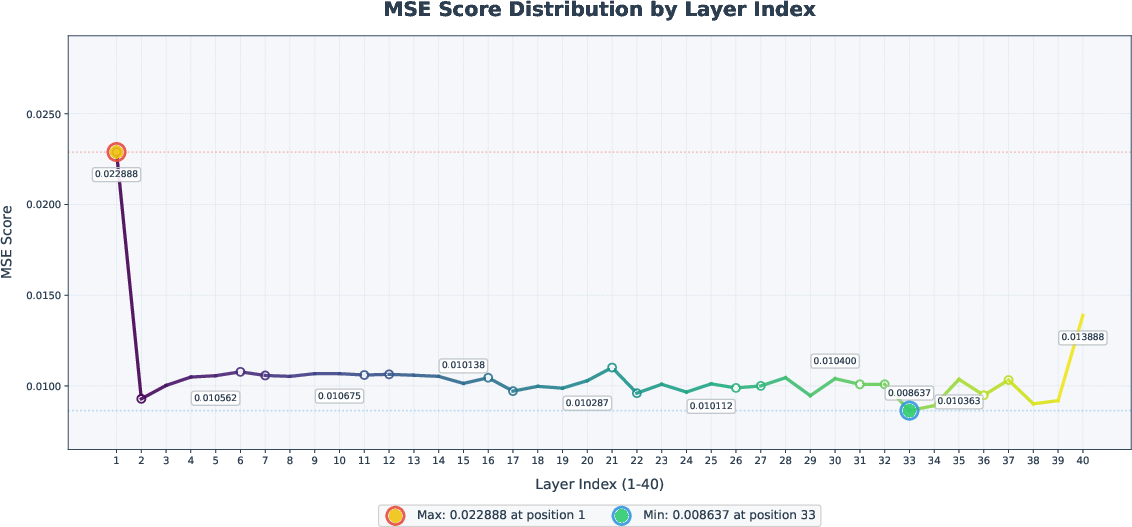

- Cache Acceleration: Intermediate residuals from selected DiT blocks are cached and reused across denoising steps, with block importance determined via MSE-based ablation. Central blocks are identified as least critical and targeted for caching, yielding significant speedups with minimal quality loss.
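Block selection by MSE ablation and residual reuse across steps can be combined in a few lines. In this toy, six residual blocks stand in for the DiT, their scales are hypothetical and chosen so the central blocks matter least, and the sampler update is a placeholder; the refresh interval `refresh_every` is likewise an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
D, NUM_BLOCKS = 8, 6
# Toy residual "DiT blocks": block k adds SCALES[k] * tanh(x @ W_k).
# The scales are hypothetical, chosen so the central blocks contribute least.
SCALES = [1.0, 0.8, 0.1, 0.05, 0.7, 1.0]
WEIGHTS = [rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(NUM_BLOCKS)]


def residual(k, x):
    return SCALES[k] * np.tanh(x @ WEIGHTS[k])


def forward(x, skip=None, cache=None, cached_blocks=(), refresh=True):
    """Run every block; optionally skip one, or reuse cached residuals when not refreshing."""
    for k in range(NUM_BLOCKS):
        if k == skip:
            continue
        if cache is not None and k in cached_blocks and not refresh and k in cache:
            r = cache[k]                 # reuse the residual computed at an earlier step
        else:
            r = residual(k, x)
            if cache is not None and k in cached_blocks:
                cache[k] = r             # remember it for the following steps
        x = x + r
    return x


def block_importance(x):
    """MSE between the full output and the output with block k removed."""
    ref = forward(x)
    return [float(np.mean((forward(x, skip=k) - ref) ** 2)) for k in range(NUM_BLOCKS)]


def denoise(x, steps=10, cached_blocks=(), refresh_every=3):
    cache = {}
    for s in range(steps):
        out = forward(x, cache=cache, cached_blocks=cached_blocks,
                      refresh=(s % refresh_every == 0))
        x = 0.9 * x + 0.1 * out          # placeholder update standing in for a sampler step
    return x


x = rng.standard_normal((16, D))
scores = block_importance(x)
cached = set(np.argsort(scores)[:2].tolist())  # the two least important blocks
full, fast = denoise(x), denoise(x, cached_blocks=cached)
print(sorted(cached), float(np.mean((fast - full) ** 2)))
```

With `refresh_every=3`, the cached blocks are recomputed on 4 of the 10 steps and reuse their stored residuals on the rest; setting `refresh_every=1` reproduces the uncached output exactly.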

Figure 7: Significance of individual DiT blocks, guiding the selection of cacheable layers for acceleration.

Figure 8: Acceleration method design, illustrating the integration of adversarial distillation and caching.
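The adversarial part of distillation typically pairs a discriminator loss with a generator loss on the few-step student's samples. The sketch uses the standard hinge formulation as an illustrative stand-in; the exact objective used with the OSV-derived discriminator may differ, and the logits below are made-up numbers.

```python
import numpy as np


def d_hinge_loss(real_logits, fake_logits):
    """Discriminator hinge loss: push real logits above +1 and fake logits below -1."""
    return (np.mean(np.maximum(0.0, 1.0 - real_logits))
            + np.mean(np.maximum(0.0, 1.0 + fake_logits)))


def g_hinge_loss(fake_logits):
    """Generator (few-step student) loss: raise the discriminator's score on its samples."""
    return -np.mean(fake_logits)


real = np.array([1.5, 0.5])   # hypothetical scores on real or teacher samples
fake = np.array([-2.0, 0.0])  # hypothetical scores on few-step student samples
print(d_hinge_loss(real, fake), g_hinge_loss(fake))  # 0.75 1.0
```

Only samples inside the margin contribute to the discriminator loss: the real logit 1.5 and the fake logit -2.0 already satisfy their margins and add nothing.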

Experimental Results

Visual Quality and Controllability

Yume is evaluated on Yume-Bench, a custom benchmark for interactive video generation with complex camera motions. Compared with state-of-the-art baselines (Wan-2.1 and Matrix-Game), Yume substantially improves instruction-following (0.657 vs. 0.271 for Matrix-Game) while matching or exceeding them in subject and background consistency, motion smoothness, and aesthetic quality.

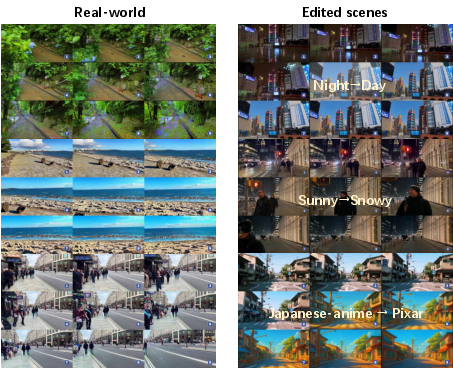

Figure 9: Yume demonstrates superior visual quality and precise adherence to keyboard control in both real-world and synthetic scenarios.

Long-Video Stability

Yume maintains high subject and background consistency over 18-second sequences, with only minor degradation observed during abrupt motion transitions. Instruction-following recovers rapidly after transitions, indicating robust temporal modeling.

Figure 10: AAM improves structural details in urban and architectural scenes, reducing artifacts and enhancing realism.

Ablation and Acceleration

- TTS-SDE yields the highest instruction-following (0.743), with a slight trade-off in background consistency and imaging quality.

- The distilled model (14 sampling steps instead of 50) achieves a 3.7x speedup with minimal loss in visual metrics, although instruction-following drops somewhat.

- Cache acceleration further reduces inference time by targeting non-critical DiT blocks.

Practical Implications and Future Directions

Yume’s architecture enables a range of applications, including interactive world exploration, world editing (via integration with image editing models such as GPT-4o), and adaptation to both real and synthetic domains. The quantized camera motion paradigm offers a scalable approach to interactive control, while MVDT and the advanced sampling strategies reduce artifacts and improve temporal coherence in the generated video.

The release of code, models, and data facilitates reproducibility and further research. However, challenges remain in scaling to higher resolutions, improving runtime efficiency, and extending control to object-level interactions and neural signal inputs.

Conclusion

Yume establishes a robust foundation for interactive, infinite-length world generation from static images, combining discrete camera motion control, masked video diffusion, advanced artifact mitigation, and efficient inference. The model demonstrates strong numerical results in both visual quality and controllability, outperforming prior baselines in complex, real-world scenarios. Future work will focus on enhancing visual fidelity, expanding interaction modalities, and further optimizing inference for real-time deployment.

Related Papers

- PEEKABOO: Interactive Video Generation via Masked-Diffusion (2023)

- DreamForge: Motion-Aware Autoregressive Video Generation for Multi-View Driving Scenes (2024)

- GameGen-X: Interactive Open-world Game Video Generation (2024)

- Wan: Open and Advanced Large-Scale Video Generative Models (2025)

- SkyReels-V2: Infinite-length Film Generative Model (2025)

- MAGI-1: Autoregressive Video Generation at Scale (2025)

- Sekai: A Video Dataset towards World Exploration (2025)

- Matrix-Game: Interactive World Foundation Model (2025)

- From Virtual Games to Real-World Play (2025)

- Hunyuan-GameCraft: High-dynamic Interactive Game Video Generation with Hybrid History Condition (2025)
