- The paper presents a fuzzing framework that uses LLMs to generate high-quality seeds and improve smart contract vulnerability detection.

- It employs a multi-feedback optimization strategy and a hybrid engine that combines evolutionary algorithms with symbolic execution to maximize coverage.

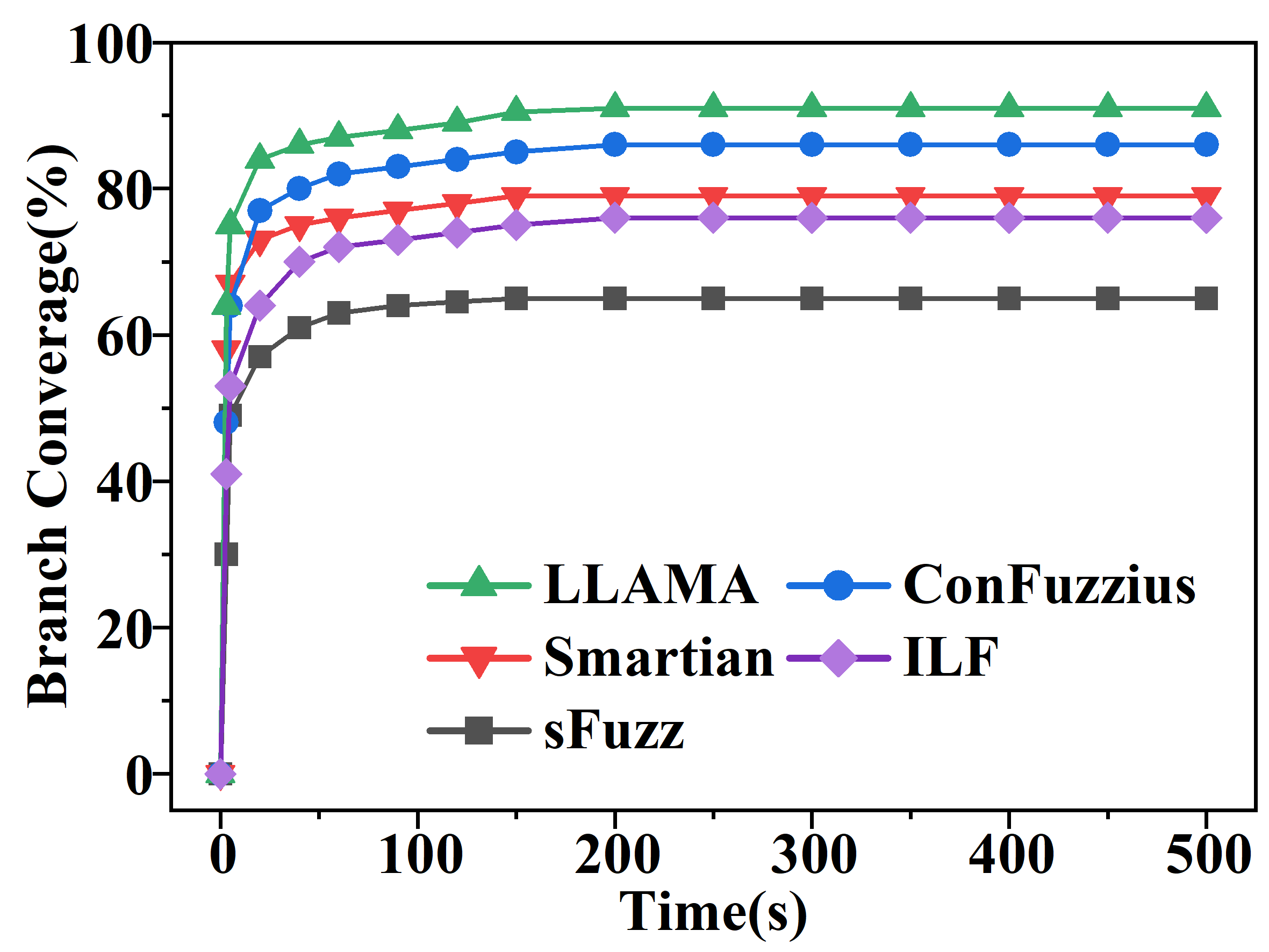

- Experimental results show 91% instruction coverage and detection of 132 of 148 vulnerabilities, indicating that LLAMA is more effective than traditional fuzzers.

LLAMA: Multi-Feedback Smart Contract Fuzzing Framework with LLM-Guided Seed Generation

Introduction

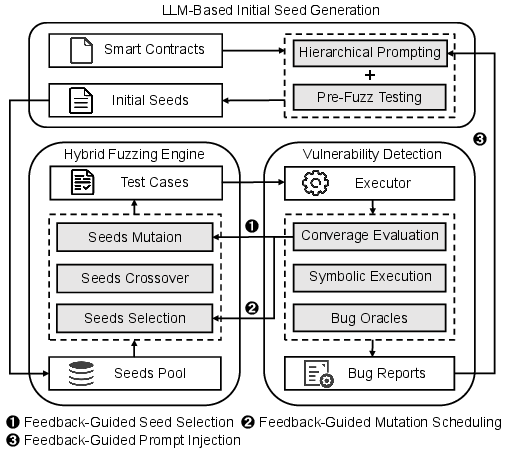

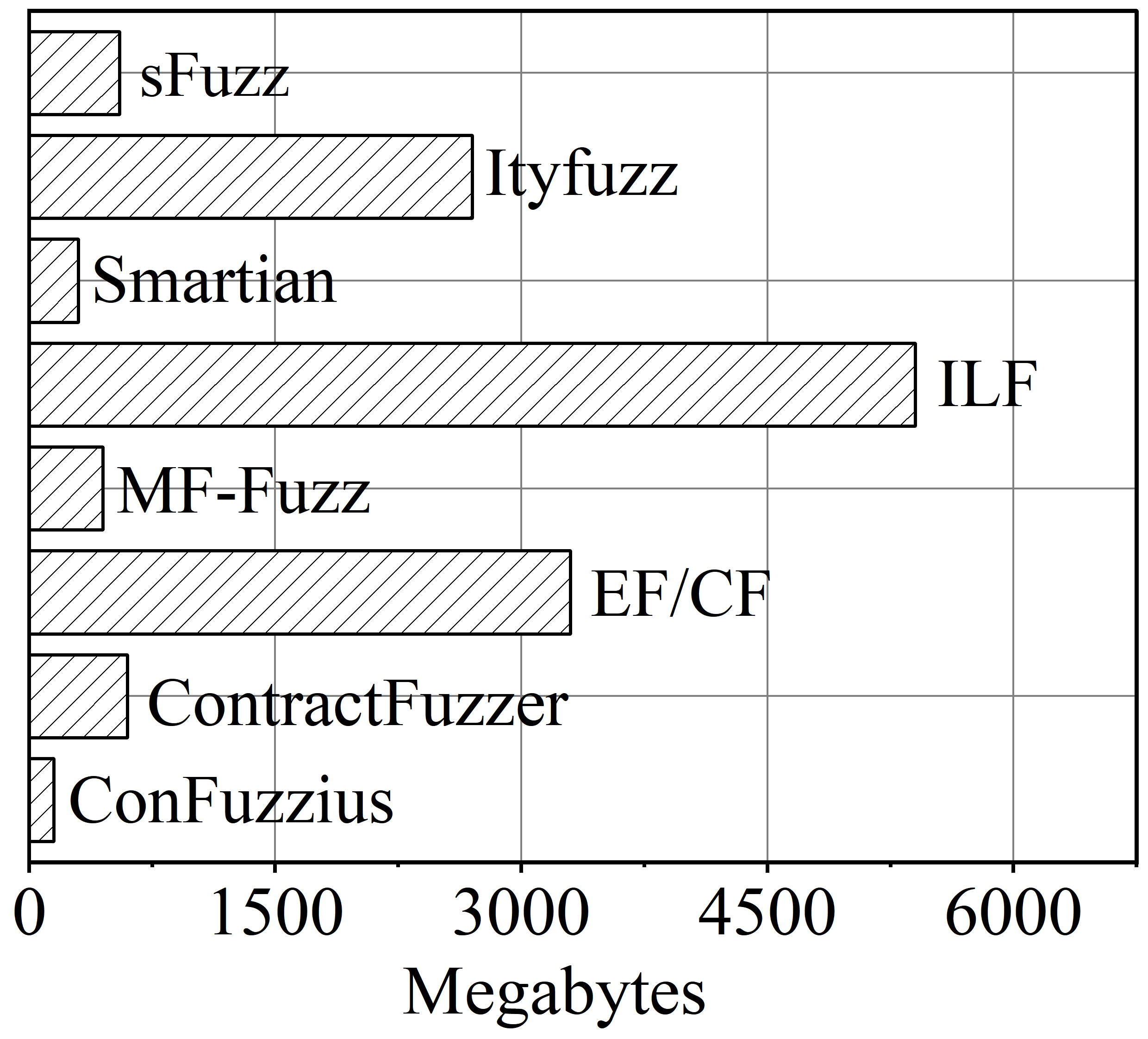

LLAMA is a smart contract fuzzing framework that combines LLM-based seed generation, evolutionary mutation strategies, and hybrid testing techniques to improve smart contract security in blockchain systems. Its core contribution is to strengthen fuzz testing through three mechanisms: high-quality seed generation, adaptive feedback integrated throughout the fuzzing process, and efficient hybrid execution.

Framework Architecture

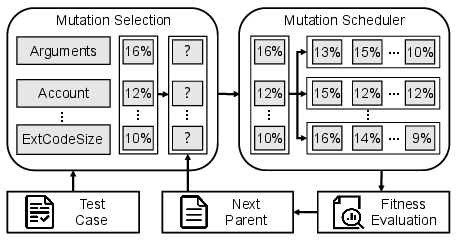

Figure 1: Architecture of the proposed LLAMA.

1. LLM-Based Initial Seed Generation

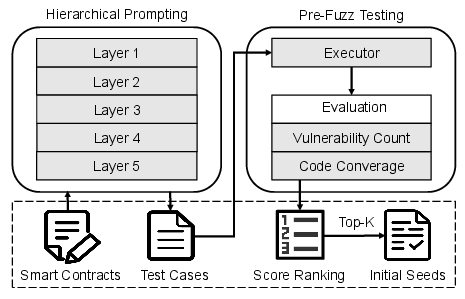

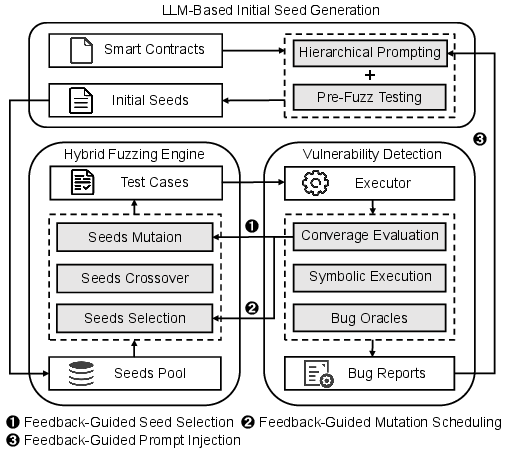

The LLM-Based Initial Seed Generation module of LLAMA uses a five-layer hierarchical prompting strategy to exploit the semantic understanding of LLMs and generate initial seeds that are both structurally and semantically valid. The layers run in sequence: function abstraction, transaction sequence inference, format verification, semantic optimization, and behavior-guided prompt injection. This multistage design produces high-quality inputs that can exercise deeper contract logic.

Figure 2: LLM-based seed generation in LLAMA.
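To make the format-verification layer concrete, here is a minimal sketch of the kind of check it could perform. It assumes, hypothetically, that the LLM emits a JSON list of calls shaped like `{"function": name, "args": [...]}` and that the contract ABI is reduced to a map from function name to Solidity parameter types. The names `ABI`, `valid_arg`, and `verify_sequence` are illustrative and not taken from the paper.

```python
import json

HEX_DIGITS = set("0123456789abcdefABCDEF")

# Hypothetical, simplified ABI: function name -> list of Solidity parameter types.
ABI = {
    "deposit": [],
    "withdraw": ["uint256"],
    "transfer": ["address", "uint256"],
}


def valid_arg(sol_type: str, value) -> bool:
    """Check one argument against a small subset of Solidity types."""
    if sol_type == "uint256":
        # bool is a subclass of int in Python, so exclude it explicitly
        return isinstance(value, int) and not isinstance(value, bool) and 0 <= value < 2**256
    if sol_type == "address":
        return (isinstance(value, str) and len(value) == 42 and value.startswith("0x")
                and all(c in HEX_DIGITS for c in value[2:]))
    return False  # types outside this sketch are rejected


def verify_sequence(llm_output: str, abi: dict) -> list:
    """Parse an LLM-proposed transaction sequence and keep only well-formed calls."""
    try:
        txs = json.loads(llm_output)
    except json.JSONDecodeError:
        return []  # unparseable output: the caller could re-prompt the LLM
    if not isinstance(txs, list):
        return []
    valid = []
    for tx in txs:
        if not isinstance(tx, dict) or not isinstance(tx.get("function"), str):
            continue
        name, args = tx["function"], tx.get("args", [])
        params = abi.get(name)
        if params is None or not isinstance(args, list) or len(args) != len(params):
            continue  # unknown function or wrong arity
        if all(valid_arg(t, v) for t, v in zip(params, args)):
            valid.append({"function": name, "args": args})
    return valid
```

Filtering calls individually, instead of rejecting the whole sequence, keeps the usable part of an otherwise good LLM answer. A stricter design could instead re-prompt whenever any call fails.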

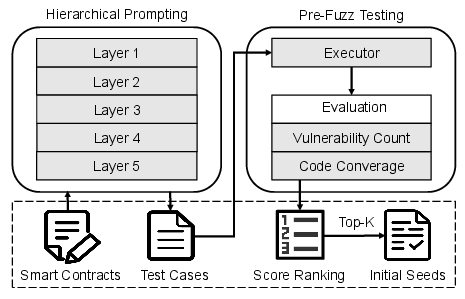

2. Multi-Feedback Optimization Strategy

LLAMA's multi-feedback optimization dynamically uses several runtime feedback signals to guide seed generation, seed selection, and mutation scheduling. The feedback combines control-flow information with semantic insights, both of which are needed to drive exploration in smart contract fuzzing and to maximize coverage and vulnerability detection.
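One way to picture several feedback signals feeding seed selection is an energy schedule, where each seed receives a mutation budget derived from a weighted mix of its control-flow and semantic feedback. This is a sketch under stated assumptions: the weights, the `sensitive_ops` semantic signal, and all names here are hypothetical, since the summary does not list LLAMA's exact signals or how they are combined.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical weights for illustration only; not taken from the paper.
WEIGHTS = {"new_branches": 3.0, "new_instructions": 0.25, "sensitive_ops": 5.0}


@dataclass
class SeedFeedback:
    seed_id: str
    new_branches: int      # control-flow signal: branches first covered by this seed
    new_instructions: int  # control-flow signal: instructions first covered by this seed
    sensitive_ops: int     # assumed semantic signal, e.g. times CALL or SELFDESTRUCT was reached


def energy(fb: SeedFeedback, base: int = 4, cap: int = 64) -> int:
    """Mutation budget for a seed: a base amount plus its weighted feedback score, capped."""
    score = (WEIGHTS["new_branches"] * fb.new_branches
             + WEIGHTS["new_instructions"] * fb.new_instructions
             + WEIGHTS["sensitive_ops"] * fb.sensitive_ops)
    return min(cap, base + int(score))


def schedule(feedback: List[SeedFeedback]) -> List[Tuple[str, int]]:
    """Order seeds for the next round, highest energy first."""
    ranked = sorted(feedback, key=energy, reverse=True)
    return [(fb.seed_id, energy(fb)) for fb in ranked]
```

For example, `schedule([SeedFeedback("a", 0, 10, 0), SeedFeedback("b", 2, 40, 1), SeedFeedback("c", 0, 0, 0)])` returns `[("b", 25), ("a", 6), ("c", 4)]`. Every seed keeps a nonzero base budget, so seeds with no new feedback are deprioritized rather than discarded.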

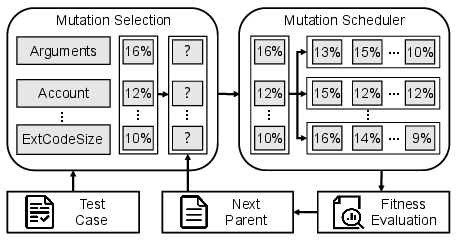

Figure 3: Illustration of the evolutionary scheduling process for mutation operator selection in LLAMA.
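The scheduling in Figure 3 adapts how often each mutation operator is chosen, based on how well it has performed. The sketch below swaps in a simple stand-in for the paper's evolutionary scheduler, whose details are not given here: roulette-wheel selection with reward-tracking probability updates and a probability floor that preserves the randomness needed for exploration. The operator names, `lr`, and `floor` are hypothetical.

```python
import random
from typing import Dict, List, Optional

# Hypothetical operator names for transaction-sequence mutation.
OPERATORS = ["flip_bit", "replace_arg", "insert_tx", "swap_tx", "delete_tx"]


class OperatorScheduler:
    """Roulette-wheel operator selection whose probabilities track observed rewards."""

    def __init__(self, operators: List[str], lr: float = 0.2, floor: float = 0.05,
                 seed: Optional[int] = None):
        if floor * len(operators) > 1:
            raise ValueError("floor too large for the number of operators")
        self.ops = list(operators)
        self.probs: Dict[str, float] = {op: 1.0 / len(self.ops) for op in self.ops}
        self.lr = lr        # how quickly probabilities follow rewards
        self.floor = floor  # minimum probability, so every operator stays explorable
        self.rng = random.Random(seed)

    def select(self) -> str:
        """Sample an operator in proportion to its current probability."""
        weights = [self.probs[op] for op in self.ops]
        return self.rng.choices(self.ops, weights=weights, k=1)[0]

    def update(self, op: str, reward: float) -> None:
        """Move `op` toward its reward (clipped to [0, 1]), renormalize, re-apply the floor."""
        reward = min(max(reward, 0.0), 1.0)
        self.probs[op] = (1 - self.lr) * self.probs[op] + self.lr * reward
        total = sum(self.probs.values())
        n = len(self.ops)
        for k in self.ops:
            # Mixing the normalized value with a uniform floor keeps the sum at 1
            # and guarantees every probability is at least `floor`.
            self.probs[k] = (1 - n * self.floor) * (self.probs[k] / total) + self.floor
```

In use, the fuzzer would call `select()` before each mutation and `update()` with a reward such as the fraction of newly covered branches. Operators that keep paying off gain probability, but none ever drops below the floor.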

3. Hybrid Fuzzing Engine

The Hybrid Fuzzing Engine in LLAMA addresses path stagnation by combining evolutionary algorithms with selectively invoked symbolic execution. Genetic algorithms drive efficient exploration by default. When coverage growth shows diminishing returns, the engine triggers symbolic execution to push exploration beyond shallow paths.
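The trigger condition, "coverage growth shows diminishing returns", can be expressed as a plateau detector over a sliding window of fuzzing rounds. The sketch below is a hypothetical rule: `window`, `min_gain`, and the class name are illustrative, and the summary does not give LLAMA's actual criterion. The symbolic-execution call itself is left out.

```python
from collections import deque


class StagnationDetector:
    """Flags a plateau when cumulative coverage grows by less than `min_gain` over `window` rounds."""

    def __init__(self, window: int = 5, min_gain: int = 1):
        self.window = window
        self.min_gain = min_gain
        self.history = deque(maxlen=window + 1)  # coverage at the last window+1 rounds

    def observe(self, covered: int) -> bool:
        """Record cumulative coverage after one fuzzing round.

        Returns True when the engine should hand the current frontier to
        symbolic execution (the solver call itself is out of scope here).
        """
        self.history.append(covered)
        if len(self.history) <= self.window:
            return False  # not enough rounds observed yet
        stalled = self.history[-1] - self.history[0] < self.min_gain
        if stalled:
            self.history.clear()  # give symbolic execution a full window to pay off
        return stalled
```

With the defaults, feeding the cumulative coverage trace `[10, 20, 25, 26, 26, 26, 26, 26, 26]` flags stagnation only at the last round, once five consecutive rounds add nothing. Clearing the history after a trigger prevents the engine from re-invoking the expensive solver on every following round.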

Implementation Details

Hierarchical Prompting Strategy

Within LLM-based seed generation, hierarchical prompting decomposes the task into a chain of actionable prompts, one per layer:

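```python
def hierarchical_prompting(contract_code):
    # The five layers of Figure 2, applied in order. Each helper stands for
    # one LLM-backed stage; their implementations are not given here.
    function_abstract = extract_function_behavior(contract_code)           # 1. function abstraction
    transaction_sequence = infer_transaction_sequences(function_abstract)  # 2. transaction sequence inference
    verified_sequence = verify_format(transaction_sequence)                # 3. format verification
    optimized_sequence = optimize_semantics(verified_sequence)             # 4. semantic optimization
    final_prompt = inject_behavior_guidance(optimized_sequence)            # 5. behavior-guided prompt injection
    return generate_seeds(final_prompt)
```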

Feedback-Guided Mutation Strategy

The mutation strategy uses fitness functions built on runtime feedback, such as instruction coverage and branch coverage, to iteratively refine the selection probabilities of mutation operators, while retaining some randomness to encourage exploration of novel paths:

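```python
def feedback_guided_mutation(strategy, population):
    # One scheduling round: run every seed, score its runtime feedback,
    # and adjust the mutation-operator probabilities held by `strategy`.
    for seed in population:
        branches, instructions = execute_and_collect(seed)  # branch / instruction coverage
        fitness_score = compute_fitness(branches, instructions)
        update_operator_probabilities(strategy, fitness_score)
```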
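The pseudocode above calls `compute_fitness(branches, instructions)` without defining it. One plausible sketch, assuming fitness should reward novelty, scores an execution by the branches and instructions it covers for the first time and then merges them into a global coverage map. The weights, the `(pc, taken)` branch encoding, and the `CoverageMap` name are hypothetical.

```python
from typing import Iterable, Set, Tuple

Branch = Tuple[int, bool]  # (program counter of a JUMPI, whether the jump was taken)


class CoverageMap:
    """Global coverage seen so far; fitness rewards only newly covered items."""

    def __init__(self, w_branch: float = 1.0, w_instr: float = 0.5):
        self.branches: Set[Branch] = set()
        self.instructions: Set[int] = set()  # program counters executed at least once
        self.w_branch = w_branch  # hypothetical weights, not from the paper
        self.w_instr = w_instr

    def fitness(self, branches: Iterable[Branch], instructions: Iterable[int]) -> float:
        """Score one execution by the coverage it adds, then merge it into the map."""
        new_b = set(branches) - self.branches
        new_i = set(instructions) - self.instructions
        self.branches |= new_b
        self.instructions |= new_i
        return self.w_branch * len(new_b) + self.w_instr * len(new_i)
```

Weighting branches above instructions reflects the usual intuition that a new branch outcome opens more unexplored behavior than a few extra instructions on an already-known path. Because the map is updated on each call, a repeated execution scores zero.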

Experimental Results

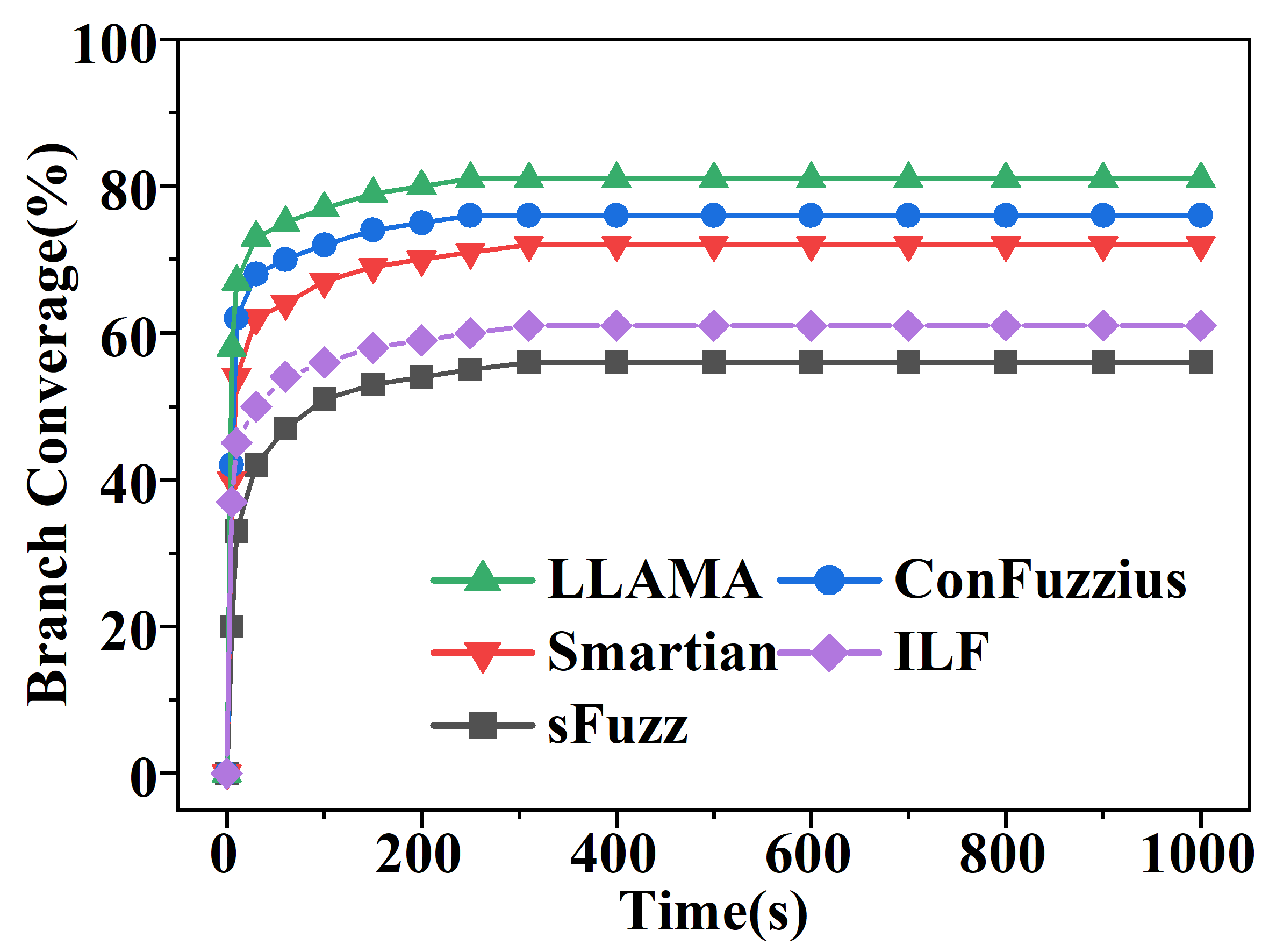

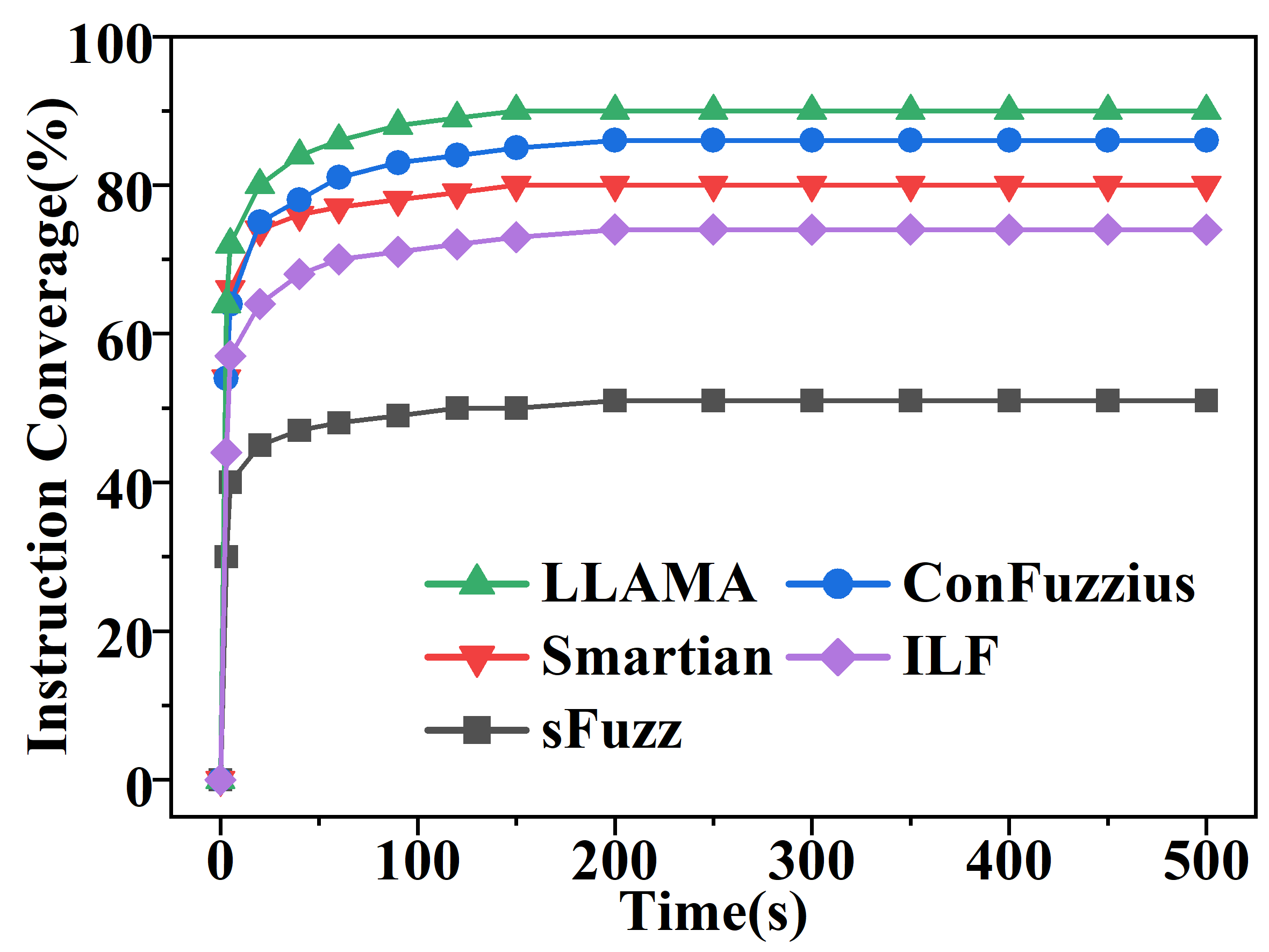

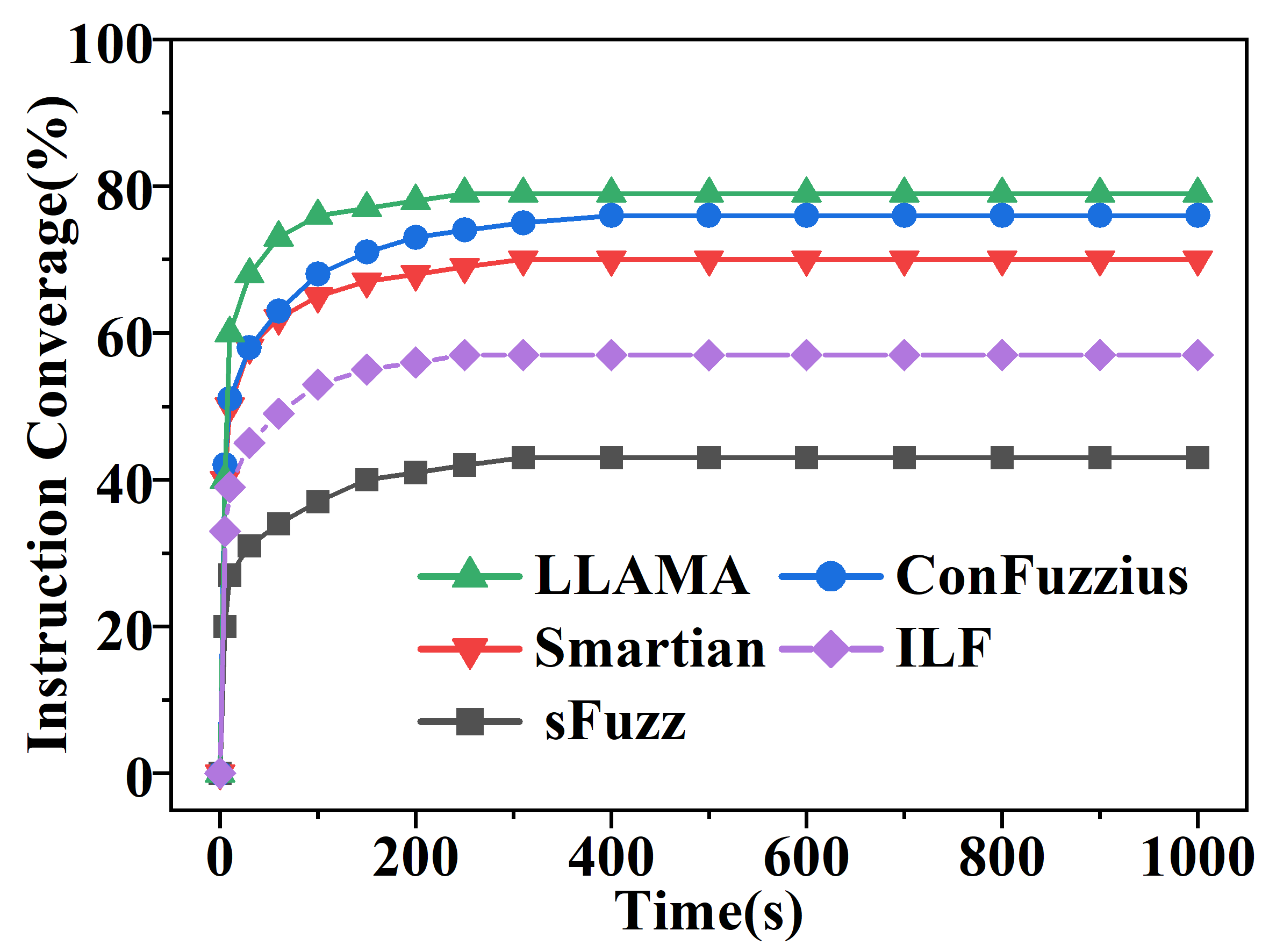

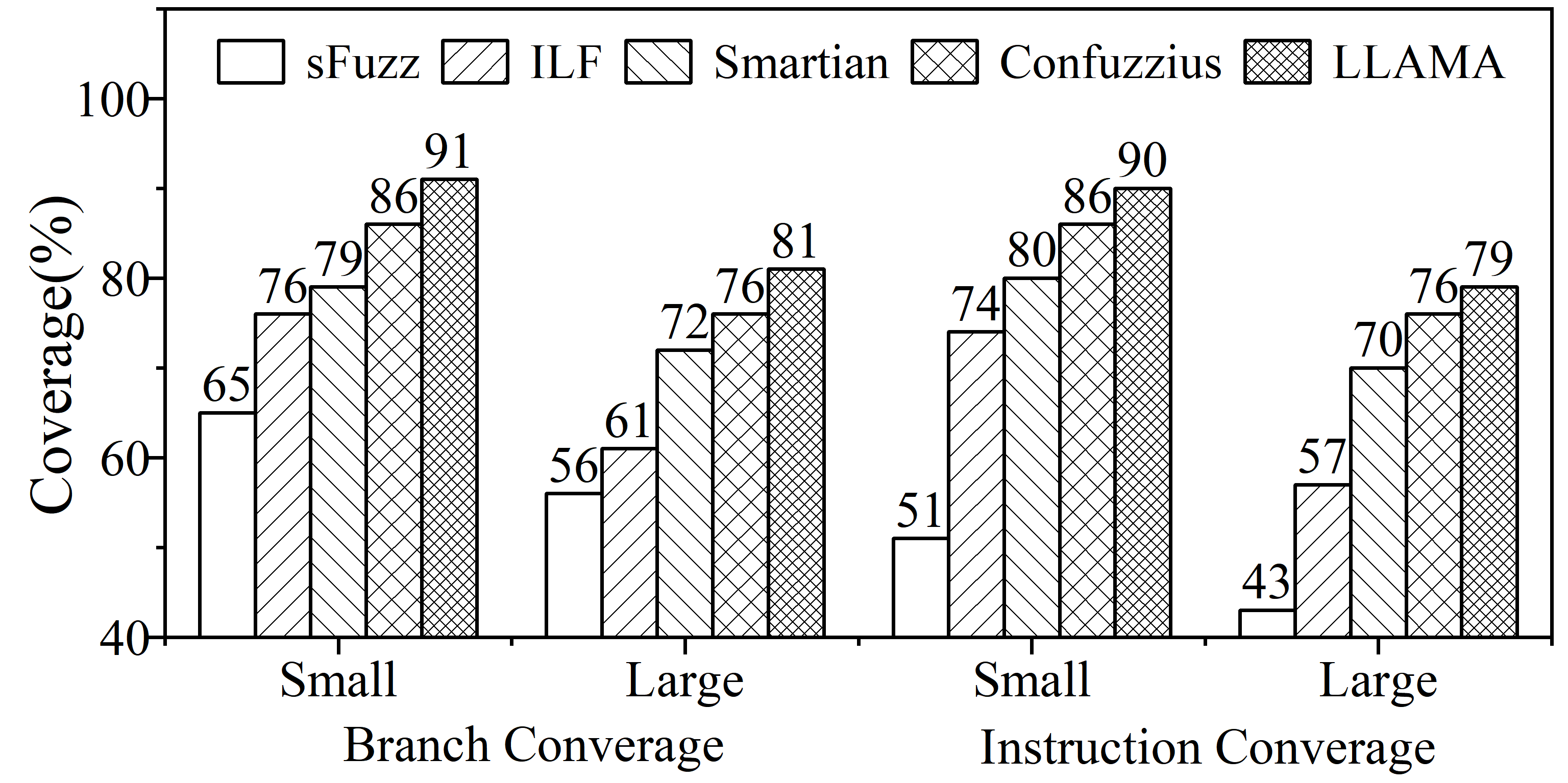

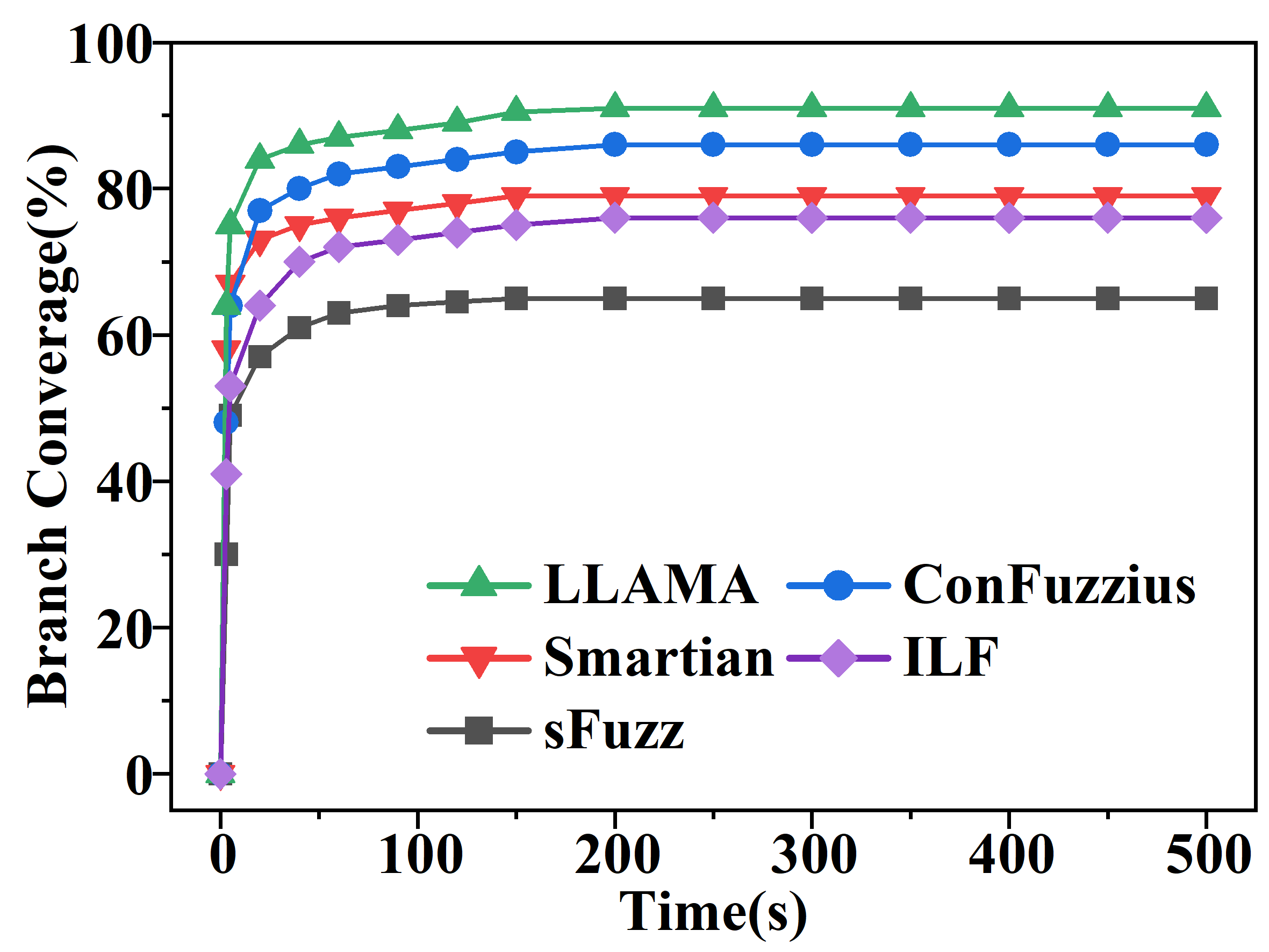

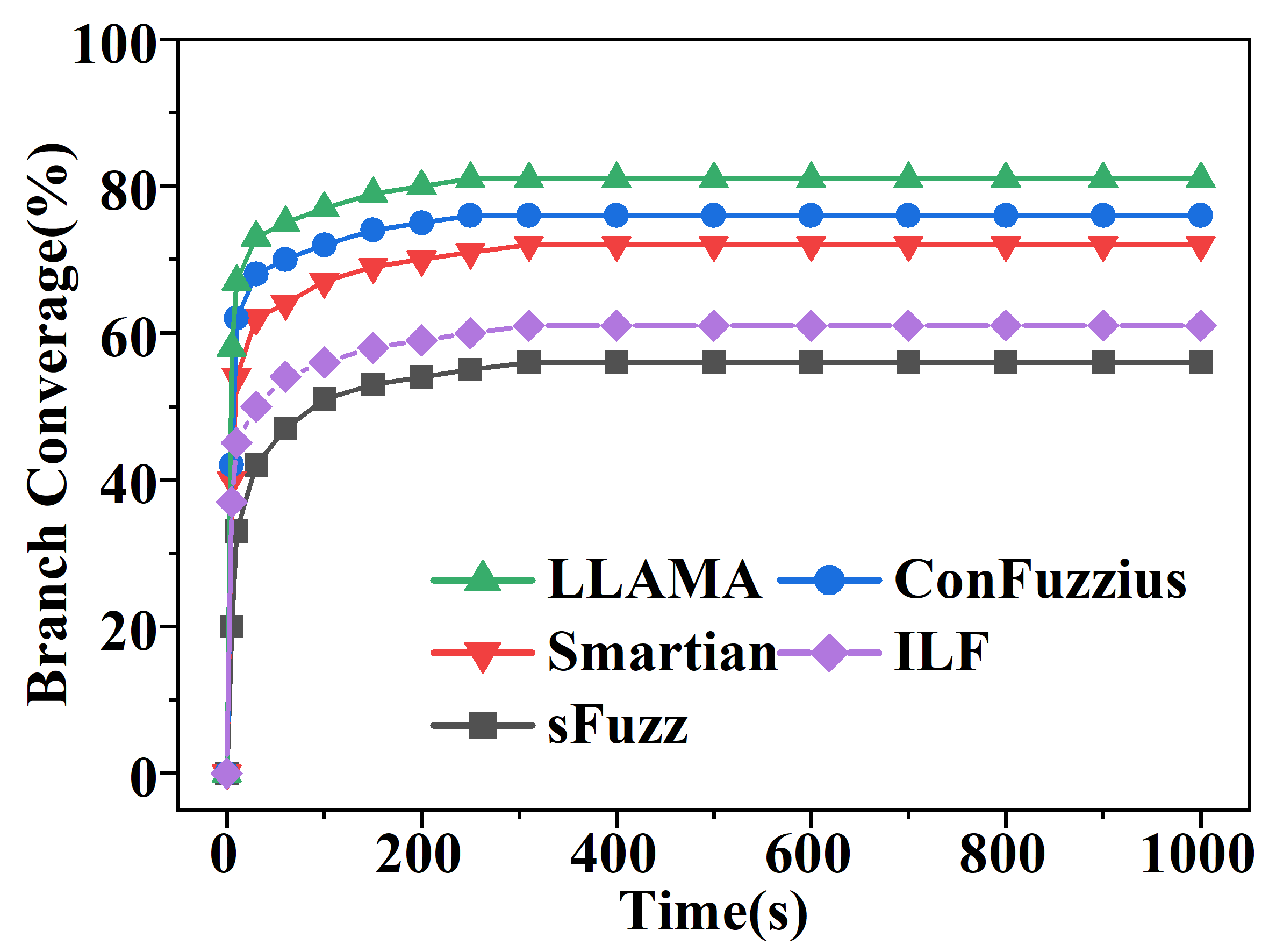

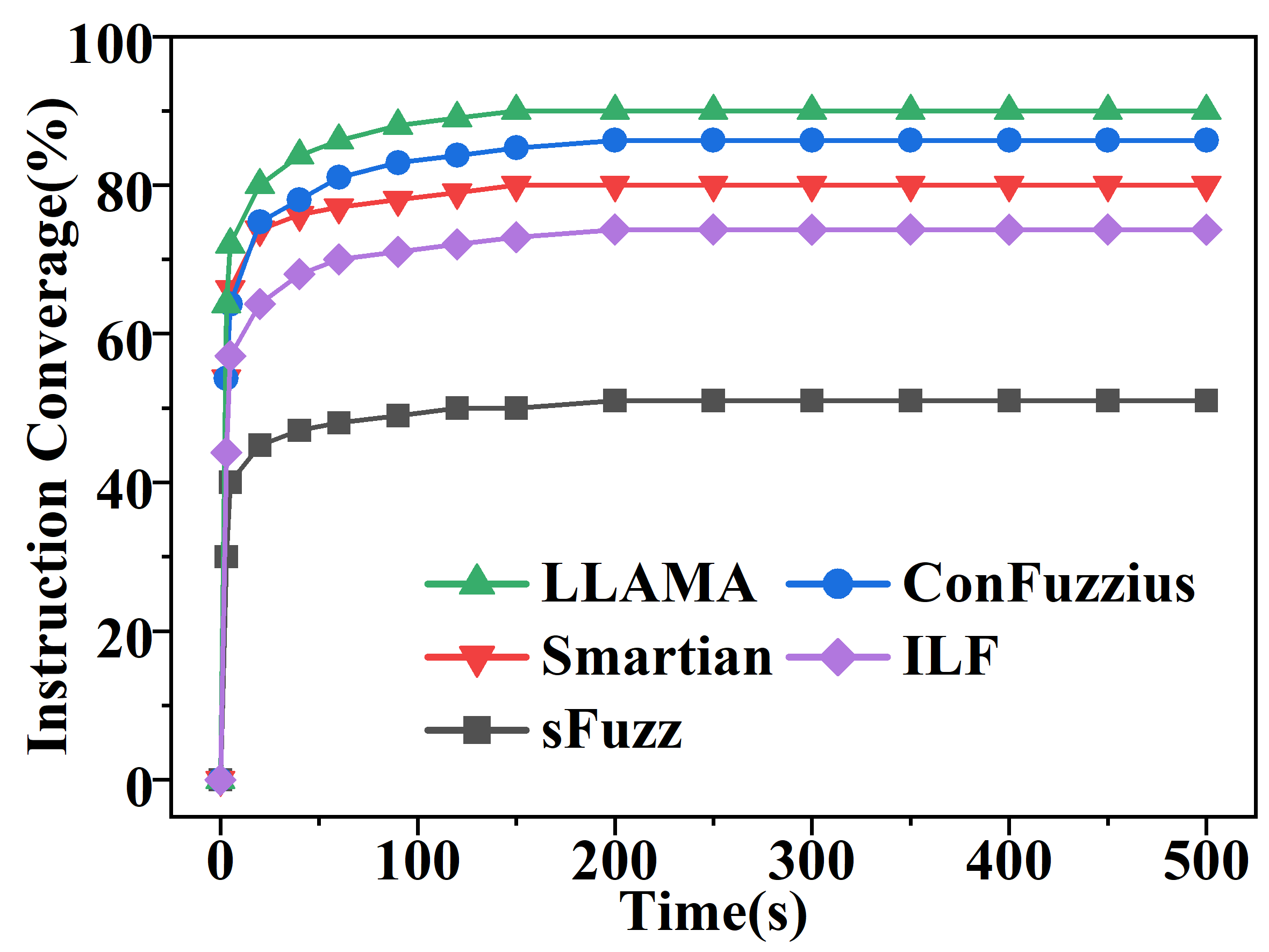

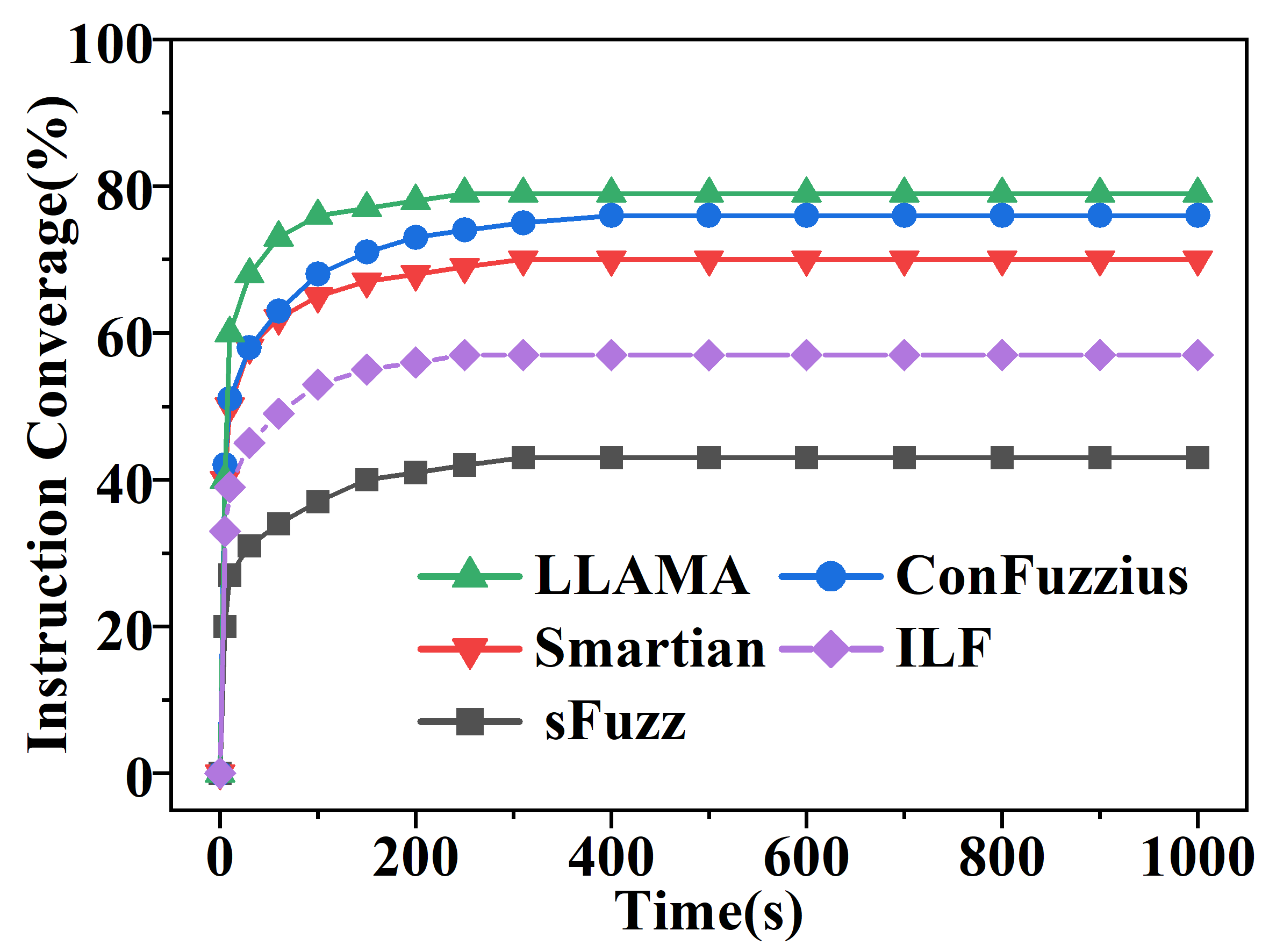

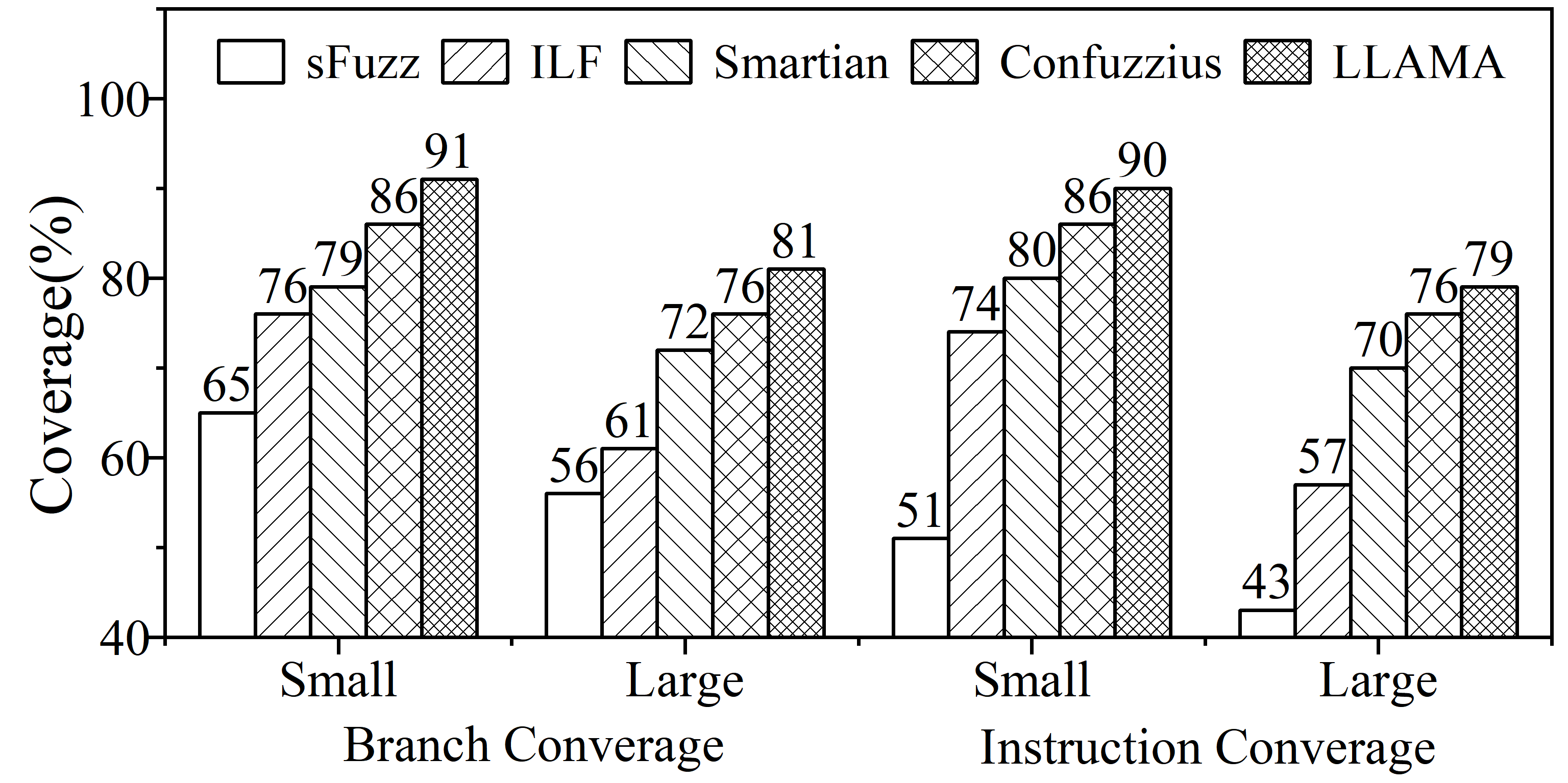

In the experiments, LLAMA outperforms existing fuzzing tools, achieving 91% instruction coverage and detecting 132 of 148 vulnerabilities across the smart contract benchmarks.
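Expressed as a fraction of the 148 benchmark vulnerabilities, the detection result works out as follows:

$$
\text{detection rate} = \frac{132}{148} \approx 0.892 = 89.2\%, \qquad 148 - 132 = 16 \ \text{vulnerabilities missed}
$$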

Figure 4: Branch and instruction coverage comparison on small and large contracts.

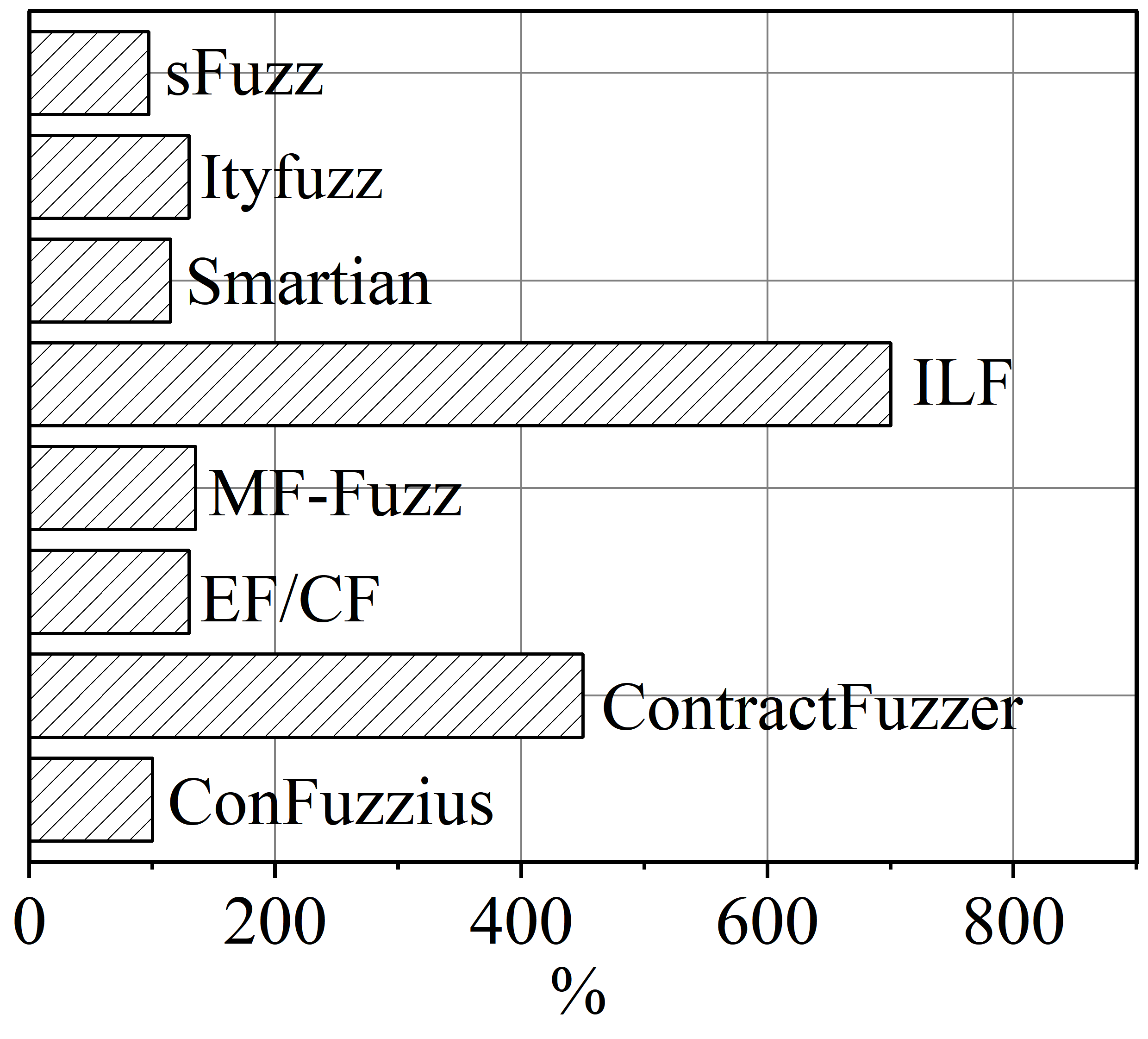

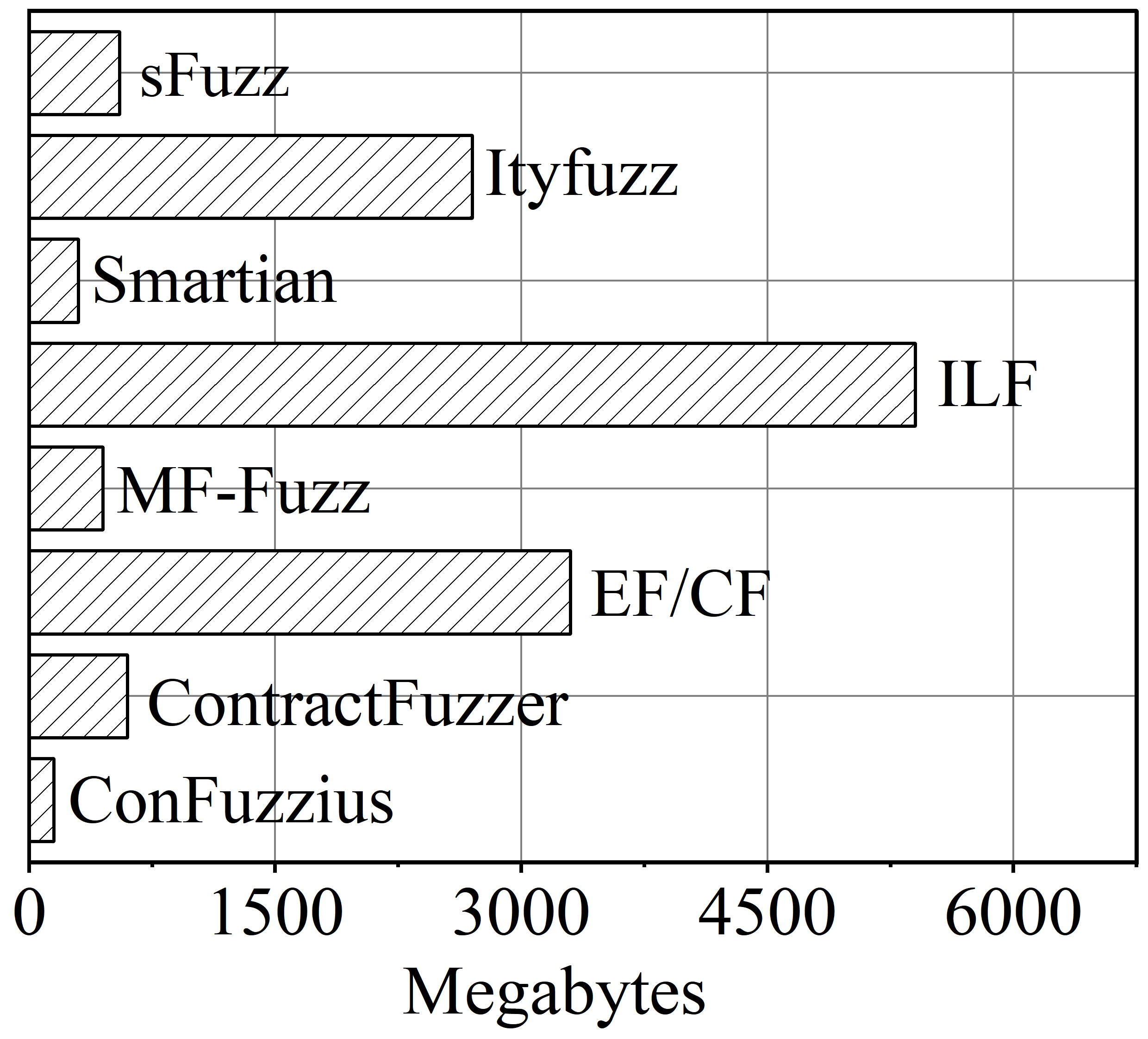

LLAMA proves adaptable and effective: it invokes symbolic execution only selectively, which lets it explore deep logical paths while remaining resource-efficient compared with traditional fuzzing and hybrid fuzzing strategies.

Figure 5: Overall coverage comparison.

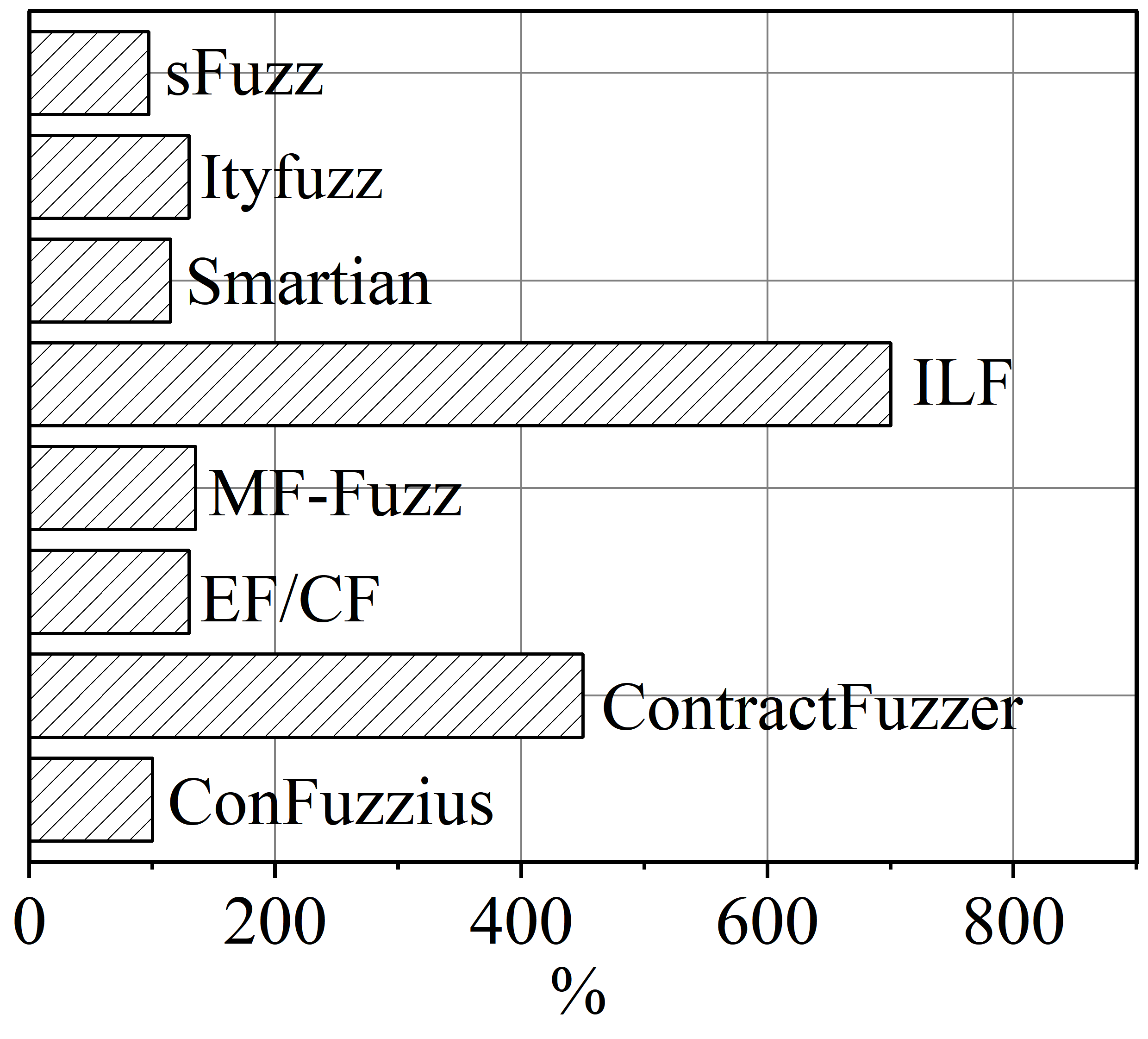

Figure 6: Resource consumption comparison.

Conclusion

LLAMA sets a new benchmark for smart contract fuzzing, offering a balanced and highly effective approach that handles complex execution paths and reveals vulnerabilities with high accuracy. Its use of LLMs for semantically aware seed generation, combined with a robust feedback-driven process, marks a significant advance in applying AI-driven strategies to blockchain security testing. Future work will further refine LLAMA, focusing on improved mutation strategies and deeper integration of LLM capabilities for more sophisticated contract analysis.