- The paper introduces PRIME, a framework that integrates episodic and semantic memory to personalize LLM outputs based on user history and evolving beliefs.

- It adds personalized thinking, which generates chain-of-thought reasoning conditioned on the user's memory, and improves response relevance in experiments on the CMV dataset.

- Experimental results confirm that authentic user profiles are crucial, as performance sharply drops with mismatched histories, underscoring PRIME’s user-centric design.

PRIME: LLM Personalization with Cognitive Memory and Thought Processes

Introduction to PRIME

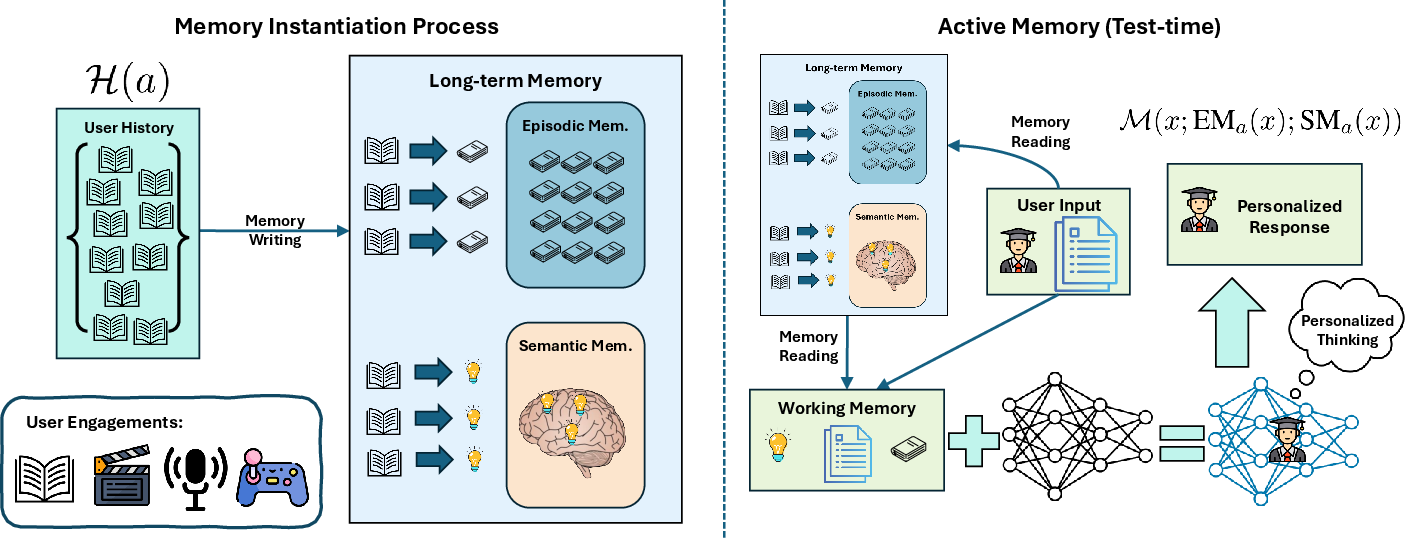

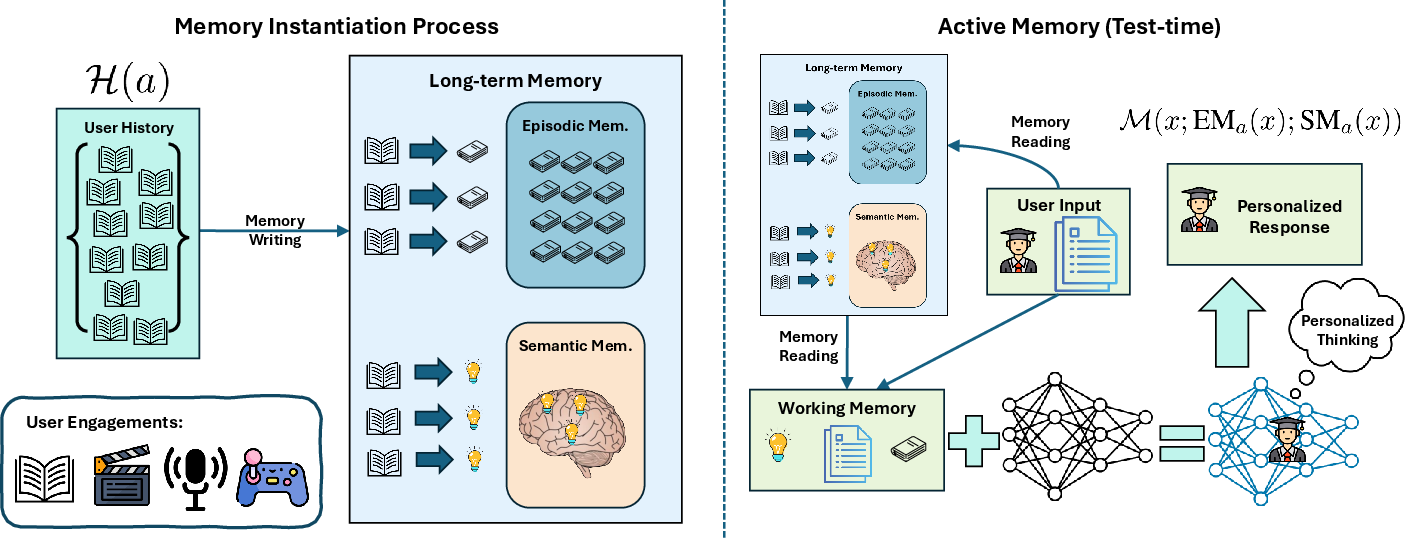

The paper "PRIME: LLM Personalization with Cognitive Memory and Thought Processes" introduces PRIME, a framework for personalizing LLMs. PRIME builds on the dual-memory model from cognitive science, which comprises episodic and semantic memory, so that an LLM can better align its outputs with each user's preferences and opinions. Unlike existing personalization methods, it offers a single theoretical framework that covers both a user's historical engagements and their long-term, evolving beliefs.

Framework Components

PRIME leverages a dual-memory model to systematically capture and utilize user-specific historical and semantic information. The framework is inspired by well-established cognitive theories that differentiate between episodic memory—specific personal experiences—and semantic memory—abstract knowledge and beliefs.

Figure 1: Overview of our unified framework, PRIME, inspired by the dual-memory model.

- Episodic Memory (EM): Stores interaction-specific data, capturing recent user engagements. Memory is read through recall strategies such as retrieving the most recent history or the history most relevant to the current query.

- Semantic Memory (SM): Encodes generalized user preferences abstracted from historical data. This can be instantiated via parametric training approaches such as fine-tuning and input-only training, with semantic abstractions realized through model parameters.

- Integration and Personalization: The paper introduces personalized thinking, a capability of PRIME that draws on slow-thinking strategies to generate chains of thought reflecting a user's personalized reasoning path.

Benchmarking and Dataset

Recognizing the lack of suitable benchmarks for evaluating long-context personalization, the authors introduce a dataset derived from the Change My View (CMV) Reddit forum. This dataset is specifically tailored to test the long- and short-context personalization capabilities of LLMs. CMV data enables a ranking-based recommendation task, where the objective is identifying responses that effectively alter users' points of view.
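A ranking-based task of this kind is typically scored by checking where the view-changing responses land in the model's ranking. The sketch below assumes Hit@k and reciprocal rank as metrics; this summary does not state which metrics the paper reports, and the function names are hypothetical.

```python
def rank_candidates(scores: list[float]) -> list[int]:
    """Return candidate indices ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def hit_at_k(ranking: list[int], positives: set[int], k: int) -> float:
    """1.0 if any view-changing response appears in the top k, else 0.0."""
    return 1.0 if any(i in positives for i in ranking[:k]) else 0.0


def reciprocal_rank(ranking: list[int], positives: set[int]) -> float:
    """1 / rank of the first view-changing response, or 0.0 if none is ranked."""
    for position, index in enumerate(ranking, start=1):
        if index in positives:
            return 1.0 / position
    return 0.0
```

Here `scores` holds the model's score for each candidate reply to a post, and `positives` holds the indices of the replies that actually changed the author's view. Averaging either metric over all posts gives a dataset-level number.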

Experimental Results

Empirical studies on CMV data and existing benchmarks demonstrate that:

- Semantic Memory Dominance: Semantic memory usually yields more robust personalization than episodic memory alone.

- PRIME Effectiveness: By integrating both memory types with personalized thinking, PRIME consistently delivers responses that are both contextually relevant and aligned with user-specific long-term beliefs.

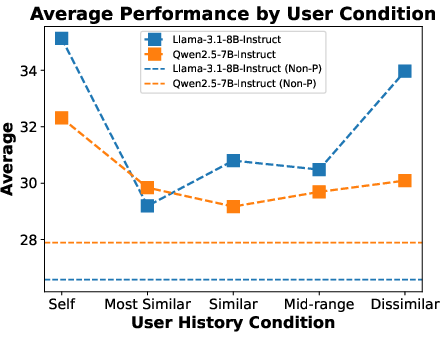

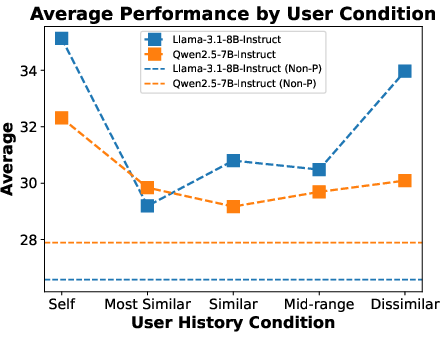

- Profile Replacement Sensitivity: Experiments confirm that PRIME depends on authentic user history: performance drops notably when a user's personalized memory is replaced with another user's history. This indicates that PRIME personalizes to the individual user rather than relying on bandwagon biases.

Figure 2: Average performance under five user-profile replacement conditions. Performance drops sharply when a target user's profile is replaced, confirming the faithfulness of PRIME to user history.
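The logic of a profile replacement test can be sketched as below. This covers only one condition, swapping each user's profile for a different user's, and is not a reconstruction of the paper's five conditions. The function names and the `score_fn` callback (any evaluation that scores a target user under a given profile) are hypothetical.

```python
import random
from typing import Callable


def replace_profiles(user_ids: list[str], seed: int = 0) -> dict[str, str]:
    """Assign every user a different user's profile.

    Shuffles the users and shifts by one position, so no user keeps
    their own profile (assumes unique ids and at least two users).
    """
    if len(user_ids) < 2:
        raise ValueError("need at least two users to swap profiles")
    rng = random.Random(seed)
    shuffled = user_ids[:]
    rng.shuffle(shuffled)
    return {
        user: shuffled[(i + 1) % len(shuffled)]
        for i, user in enumerate(shuffled)
    }


def mean_score(
    user_ids: list[str],
    profile_of: dict[str, str],
    score_fn: Callable[[str, str], float],
) -> float:
    """Average score when each user is evaluated with the assigned profile."""
    return sum(score_fn(u, profile_of[u]) for u in user_ids) / len(user_ids)
```

Comparing `mean_score` under the identity mapping (each user with their own profile) against the swapped mapping gives the size of the drop. A large gap suggests the model is actually using the profile.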

Scalability and Implementation

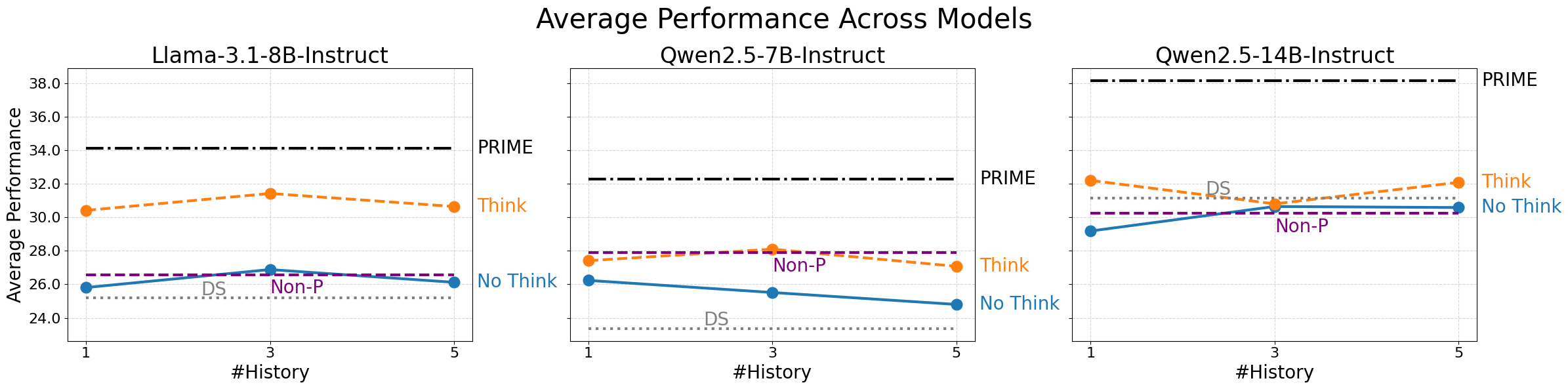

PRIME is compatible with different model families and sizes, which supports its scalability. The framework uses parameter-efficient methods such as LoRA to instantiate memory, keeping per-user adaptation flexible even when little historical data is available, a situation often termed the "cold-start" challenge.
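For context, the standard LoRA formulation freezes a pretrained weight matrix and learns a low-rank update. The equation below is the general LoRA definition; the rank, scaling, and target layers PRIME uses are not given in this summary, so the symbols are generic.

```latex
W = W_0 + \frac{\alpha}{r}\, B A,
\qquad
B \in \mathbb{R}^{d \times r},\quad
A \in \mathbb{R}^{r \times k},\quad
r \ll \min(d, k)
```

Only $A$ and $B$ are trained, so the trainable parameter count per adapted matrix is $r(d + k)$ rather than $dk$. As an illustration with assumed values $d = k = 4096$ and $r = 8$, that is $65{,}536$ parameters instead of $16{,}777{,}216$, or about 0.39%. This is what makes storing a separate adapter per user practical.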

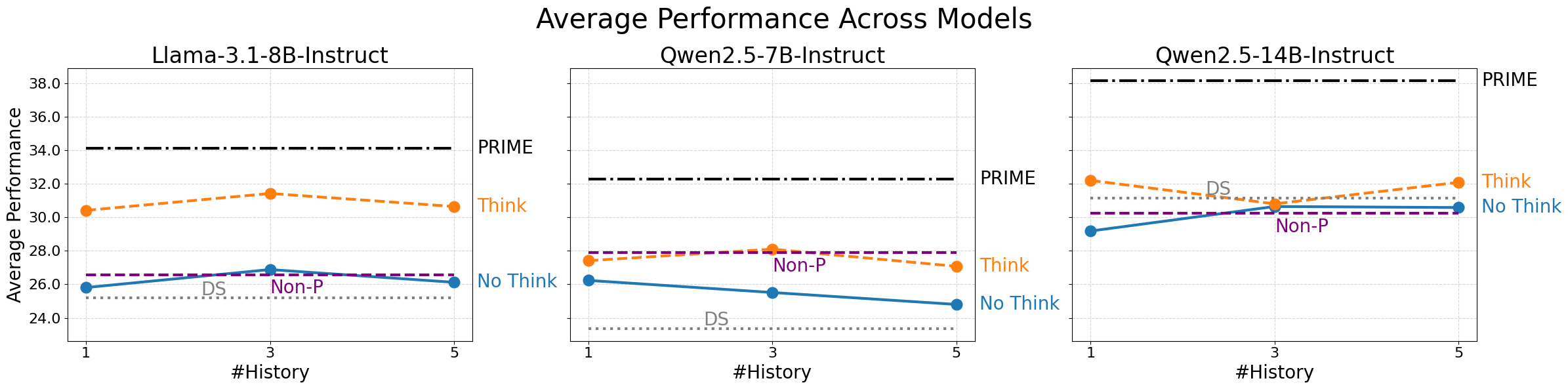

Figure 3: Average performance for train-free personalized thinking. "Think" refers to our train-free thinking approach.

Conclusions and Future Work

PRIME offers a unified framework for LLM personalization that uses cognitive memory models to align outputs more closely with individual users. Natural directions for future work include applying PRIME to broader domains and further exploring how memory integration and personalized reasoning can be combined. The adaptability and robustness shown in the experiments suggest promising directions for research on scalable, user-centric AI systems.