- The paper introduces a hierarchical Gaussian world model to differentiate acting and stabilizing arms in bimanual robotic manipulation.

- It employs task-oriented Gaussian Splatting and a leader-follower architecture to predict multi-body spatiotemporal dynamics.

- Experiments show a 20.2% improvement in success rate across 10 simulated tasks and a 60% average success rate on 9 real-world tasks.

Hierarchical Gaussian World Model for Robotic Bimanual Manipulation

The paper "ManiGaussian++: General Robotic Bimanual Manipulation with Hierarchical Gaussian World Model" (arXiv:2506.19842) addresses multi-task robotic bimanual manipulation by explicitly modeling multi-body spatiotemporal dynamics. The proposed method, ManiGaussian++, extends the prior ManiGaussian framework with a hierarchical Gaussian world model that supports dual-arm collaboration. The core idea is to differentiate the acting arm from the stabilizing arm through task-oriented Gaussian Splatting, and to predict future scenes with a leader-follower architecture inside the hierarchical Gaussian world model. This allows the agent to learn the collaboration patterns that diverse bimanual tasks require.

Methodological Innovations

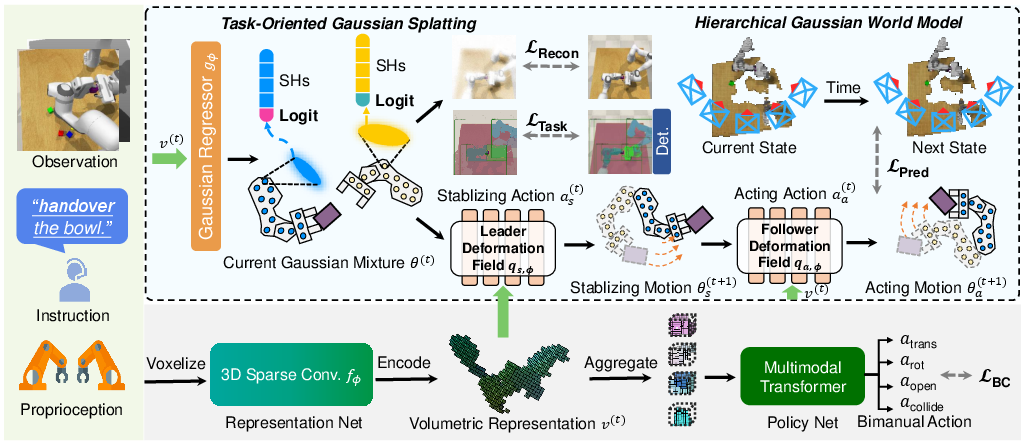

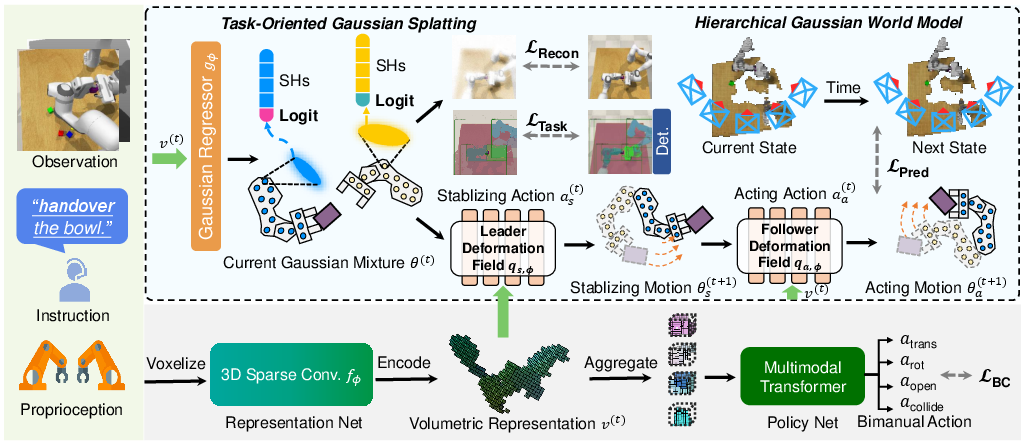

The ManiGaussian++ pipeline (Figure 1) begins by transforming visual input from RGB-D cameras into a volumetric format using a sparse convolutional network. To distinguish between the acting and stabilizing arms, the method then generates a task-oriented Gaussian radiance field. This field assigns unique labels to task-relevant agents and objects, guided by instance logits distilled from pretrained vision-language models (VLMs). This differentiation is crucial for modeling multi-body spatiotemporal dynamics effectively.

Figure 1: The ManiGaussian++ pipeline transforms visual inputs into a volumetric format, generates task-oriented Gaussian Splatting with instance logits, and employs a hierarchical Gaussian world model for future scene prediction.
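The first two pipeline stages can be illustrated with a minimal sketch. It is not the paper's implementation: the learned sparse convolutional encoder is replaced by simple per-voxel colour averaging, and the function names (`voxelize`, `instance_label`), the workspace bounds, and the example class ordering are all assumptions made for illustration.

```python
from collections import defaultdict

def voxelize(points, bounds_min, voxel_size, grid_dim):
    """Map coloured 3D points to voxel indices and average RGB per voxel.

    points: iterable of (x, y, z, r, g, b) tuples in the workspace frame.
    Returns {(i, j, k): (mean_r, mean_g, mean_b)} for occupied voxels only.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z, r, g, b in points:
        idx = tuple(int((c - lo) // voxel_size)
                    for c, lo in zip((x, y, z), bounds_min))
        if all(0 <= i < grid_dim for i in idx):  # drop points outside the grid
            acc = sums[idx]
            acc[0] += r
            acc[1] += g
            acc[2] += b
            acc[3] += 1
    return {idx: (s[0] / s[3], s[1] / s[3], s[2] / s[3])
            for idx, s in sums.items()}

def instance_label(logits):
    """Pick the instance class with the largest logit (argmax)."""
    return max(range(len(logits)), key=lambda i: logits[i])

# Hypothetical class order: 0 = background, 1 = stabilizing arm, 2 = acting arm.
points = [
    (0.05, 0.05, 0.05, 1.0, 0.0, 0.0),
    (0.06, 0.04, 0.05, 0.0, 1.0, 0.0),
    (0.95, 0.95, 0.95, 0.0, 0.0, 1.0),
    (2.00, 0.00, 0.00, 1.0, 1.0, 1.0),  # outside the workspace, ignored
]
grid = voxelize(points, bounds_min=(0.0, 0.0, 0.0), voxel_size=0.1, grid_dim=10)
label = instance_label([0.1, 2.0, -1.0])
```

Here the two nearby points fall into the same voxel and their colours are averaged, while the argmax turns a vector of instance logits into a single label per Gaussian.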

The hierarchical Gaussian world model, structured with a leader-follower architecture, predicts future scenes to mine multi-body spatiotemporal dynamics. The leader predicts Gaussian Splatting deformation caused by the stabilizing arm's motion, while the follower generates the physical consequences resulting from the acting arm's movement. This architecture allows the model to capture complex interactions between the two manipulators and target objects.
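The two-stage ordering described above can be sketched as a composition of functions. This is a toy illustration under strong assumptions: hand-written translations stand in for the learned deformation predictors, and the label names and the choice of which labels each stage moves are hypothetical rather than taken from the paper.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Gaussian:
    position: tuple  # (x, y, z) center
    label: str       # hypothetical instance label

def shift(g, delta):
    """Return a copy of g translated by delta."""
    return replace(g, position=tuple(p + d for p, d in zip(g.position, delta)))

def leader(scene, stabilizing_delta):
    """Stage 1: deform Gaussians affected by the stabilizing arm's motion."""
    return [shift(g, stabilizing_delta) if g.label == "stabilizing_arm" else g
            for g in scene]

def follower(intermediate, acting_delta):
    """Stage 2: starting from the leader's output, apply the acting arm's motion."""
    return [shift(g, acting_delta) if g.label == "acting_arm" else g
            for g in intermediate]

def predict_next_scene(scene, stabilizing_delta, acting_delta):
    """The follower consumes the leader's prediction, not the raw scene."""
    return follower(leader(scene, stabilizing_delta), acting_delta)

scene = [
    Gaussian((0.0, 0.0, 0.0), "stabilizing_arm"),
    Gaussian((1.0, 0.0, 0.0), "acting_arm"),
    Gaussian((5.0, 5.0, 5.0), "background"),
]
next_scene = predict_next_scene(scene, (0.0, 0.0, 1.0), (0.0, 2.0, 0.0))
```

The point of the sketch is the data flow: the follower is conditioned on the intermediate scene produced by the leader, which is what makes the model hierarchical rather than two independent predictors.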

The movement of the explicit Gaussian points is modeled as a function of the robot's actions: the model predicts the SE(3) motion of Gaussian particles following the Newton-Euler equations. Inherent properties such as color, scaling, opacity, and instance logits are held fixed across each Markovian transition.
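A rigid update of this kind can be written as p' = R p + t applied to each Gaussian's center. The sketch below assumes a hypothetical `GaussianParticle` container and a rotation about the z-axis; in the paper the motion is predicted by the world model rather than supplied by hand, and a full SE(3) update would also rotate each Gaussian's orientation, which is omitted here.

```python
import math
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class GaussianParticle:
    position: tuple         # (x, y, z) center
    color: tuple            # RGB
    scale: tuple            # per-axis extent
    opacity: float
    instance_logits: tuple  # per-class scores

def rot_z(theta):
    """3x3 rotation matrix about the z-axis, as nested tuples."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def apply_se3(particle, rotation, translation):
    """Return a copy with position R @ p + t; all other fields are unchanged."""
    p = particle.position
    moved = tuple(
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return replace(particle, position=moved)

g = GaussianParticle(
    position=(1.0, 0.0, 0.0),
    color=(0.8, 0.2, 0.2),
    scale=(0.01, 0.01, 0.01),
    opacity=0.9,
    instance_logits=(0.1, 2.3),
)
# Rotate 90 degrees about z, then lift by 1 along z.
g_next = apply_se3(g, rot_z(math.pi / 2), (0.0, 0.0, 1.0))
```

Only `position` changes between `g` and `g_next`; color, scale, opacity, and instance logits are carried over unchanged, mirroring the invariance described above.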

Experimental Validation and Results

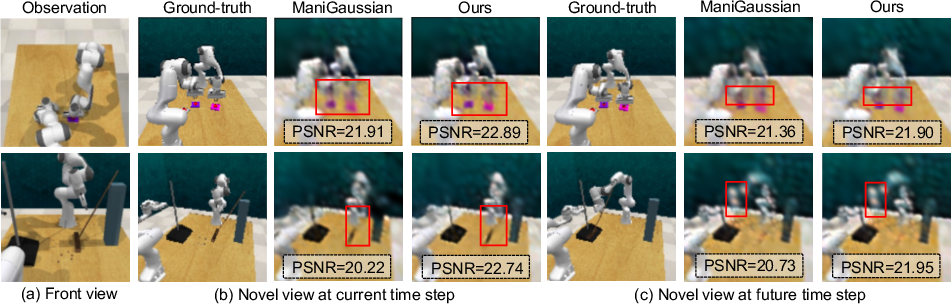

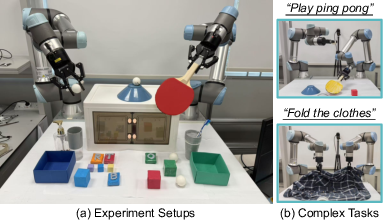

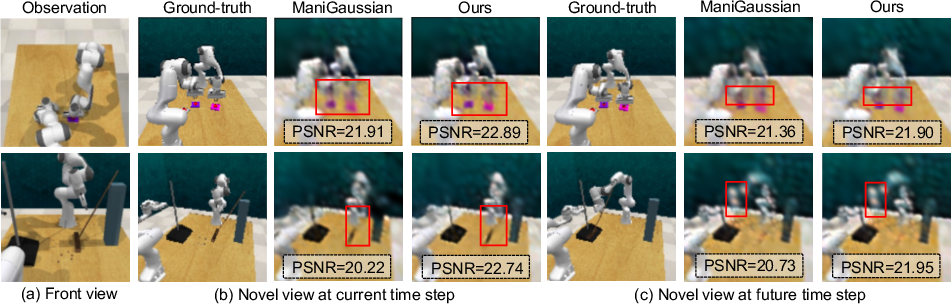

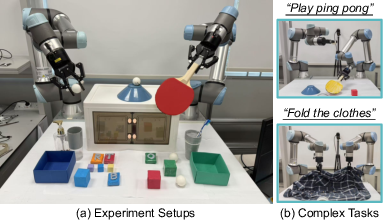

The paper reports experiments on the RLBench2 benchmark, a bimanual extension of RLBench, and in real-world settings with two UR5e manipulators (Figure 2). ManiGaussian++ outperforms state-of-the-art bimanual manipulation methods, achieving a 20.2% improvement in success rate across 10 simulated tasks and a 60% average success rate on 9 real-world tasks. Ablation studies validate the contributions of the task-oriented Gaussian radiance field and the hierarchical Gaussian world model. Novel view synthesis results (Figure 3) further show that the method captures multi-body spatiotemporal dynamics accurately, whereas ManiGaussian fails to model them. Qualitative results from the real-world experiments show ManiGaussian++ completing complex tasks that involve intricate collaboration patterns.

Figure 2: Training and evaluation of PerAct2, ManiGaussian, and ManiGaussian++ on nine real-world tasks.

Figure 3: Comparison of novel view synthesis, demonstrating that ManiGaussian++ accurately captures multi-body spatiotemporal dynamics, while ManiGaussian fails to model them effectively.

Implications and Future Directions

The results suggest that explicitly modeling multi-body spatiotemporal dynamics through a hierarchical Gaussian world model can significantly enhance the performance of bimanual manipulation systems. The task-oriented Gaussian Splatting and leader-follower architecture are key components that enable the model to learn complex collaboration patterns.

The paper identifies limitations, including its reliance on calibrated multi-view cameras for supervision, which can increase the cost of real-robot deployment. Future research could address this by reducing the number of required cameras or by developing self-calibration techniques. Integrating reinforcement learning to refine the learned policies and improve generalization is another promising direction. The method could also be extended to more complex manipulation scenarios involving multiple objects and agents, as well as to tasks requiring long-horizon planning and reasoning.

Conclusion

ManiGaussian++ advances robotic bimanual manipulation by explicitly modeling multi-body spatiotemporal dynamics through a hierarchical Gaussian world model. The framework enables robots to learn complex collaboration patterns, and in the reported experiments it improves success rate by 20.2% over prior methods in simulation and reaches a 60% average success rate on real-world tasks. These results and analyses offer useful guidance for future work on general robotic manipulation.