- The paper introduces a hybrid network leveraging DenseNet121 and advanced attention mechanisms to accurately segment breast tumors in ultrasound images.

- The methodology combines global, local, and self-aware attention modules with a spatial features enhancement block to boost feature extraction.

- Experimental evaluations on BUSI and UDIAT datasets demonstrate superior Dice and Jaccard performance compared to state-of-the-art methods.

Hybrid Attention Network for Accurate Breast Tumor Segmentation in Ultrasound Images

Introduction

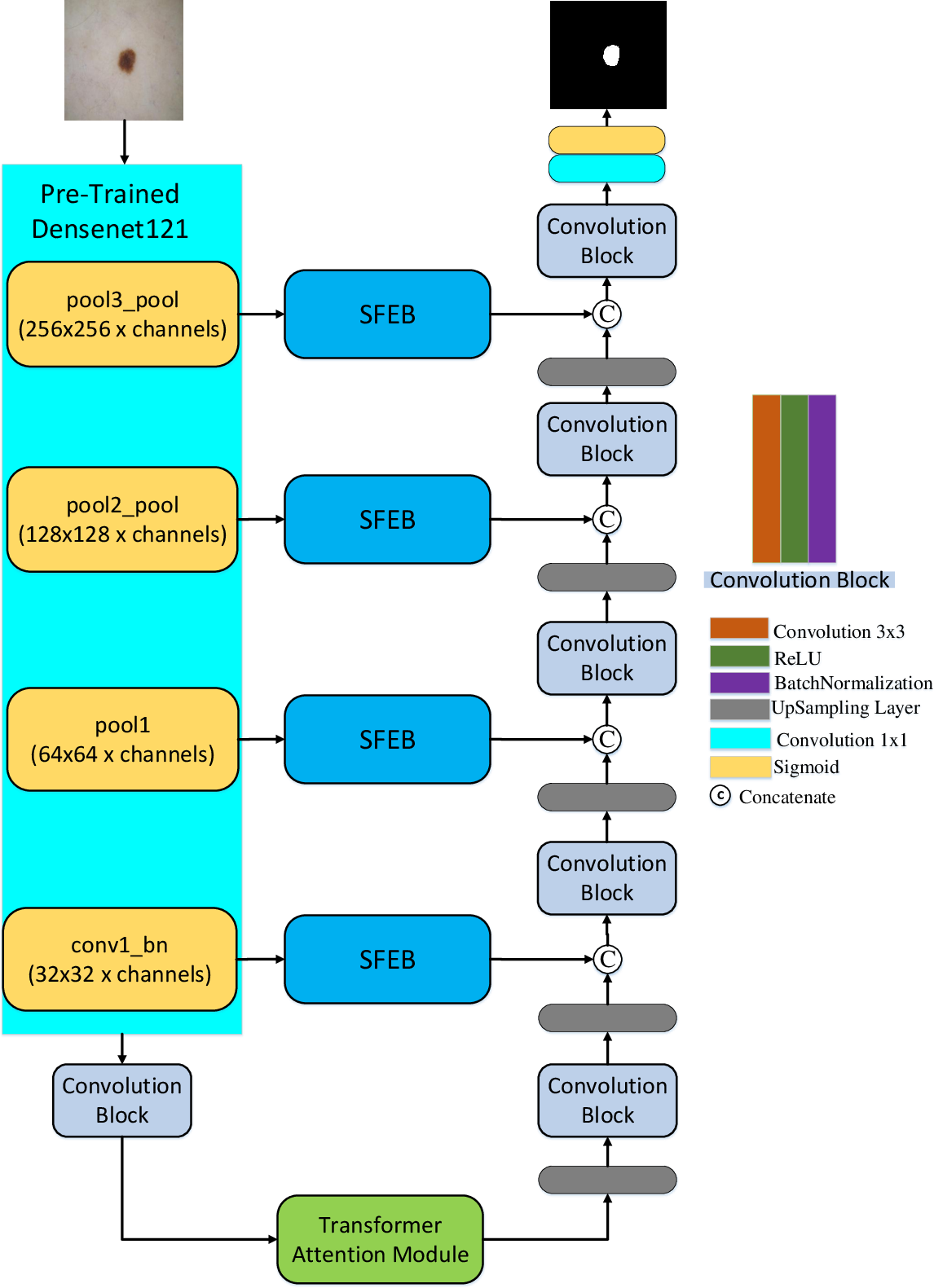

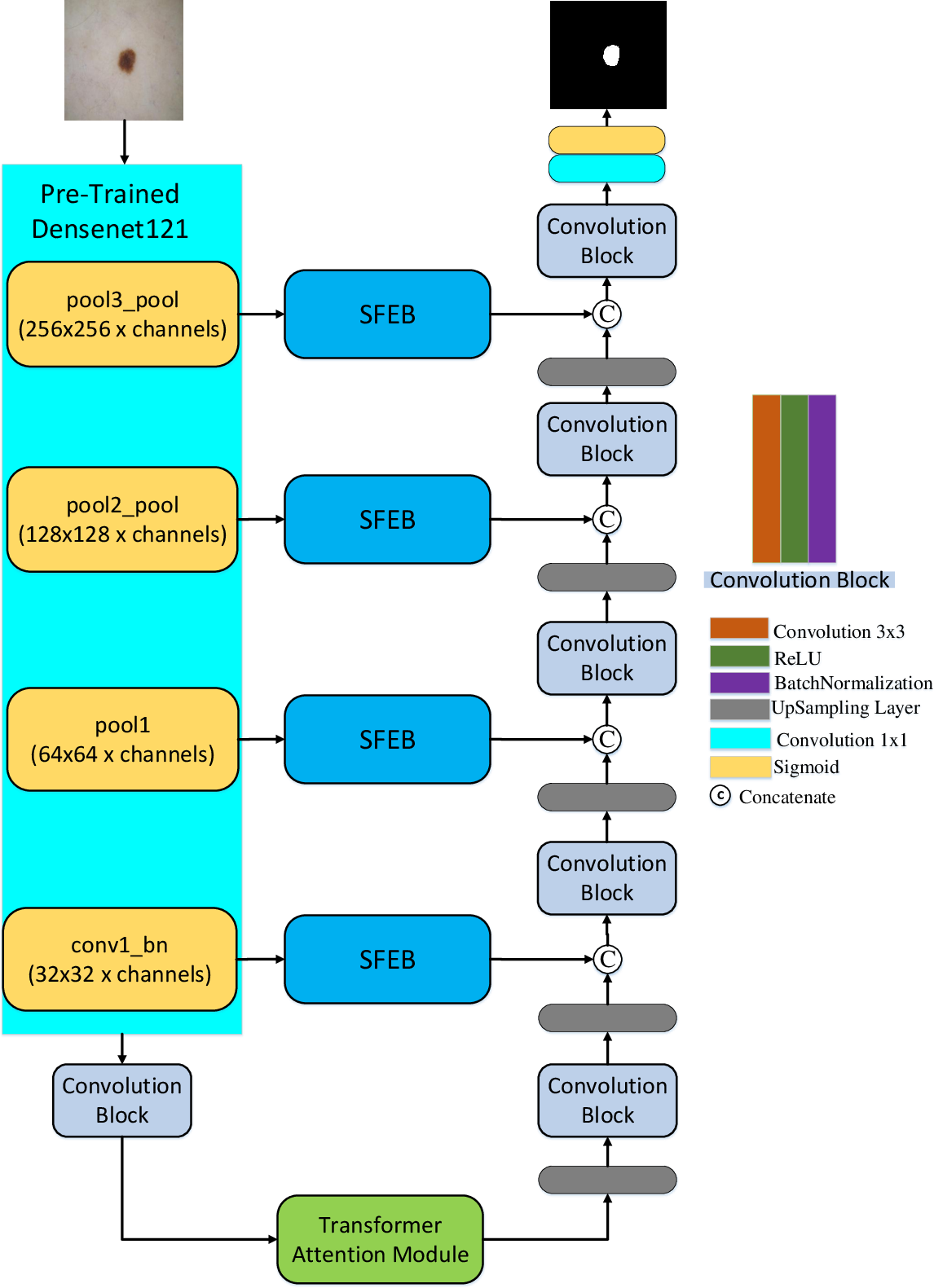

This paper introduces a hybrid attention-based neural network designed to accurately segment breast tumors in ultrasound images. The primary focus is addressing challenges such as noise, lesion scale variation, and unclear boundaries inherent in ultrasound imaging. The authors propose a sophisticated network architecture that integrates a DenseNet121-based encoder, advanced attention mechanisms, and a multi-branch decoder.

Proposed Method

The network architecture is built around several key components:

- Pre-trained DenseNet121 Encoder: DenseNet121 is chosen as the feature extractor for its ability to capture the complex hierarchical features needed in medical imaging tasks.

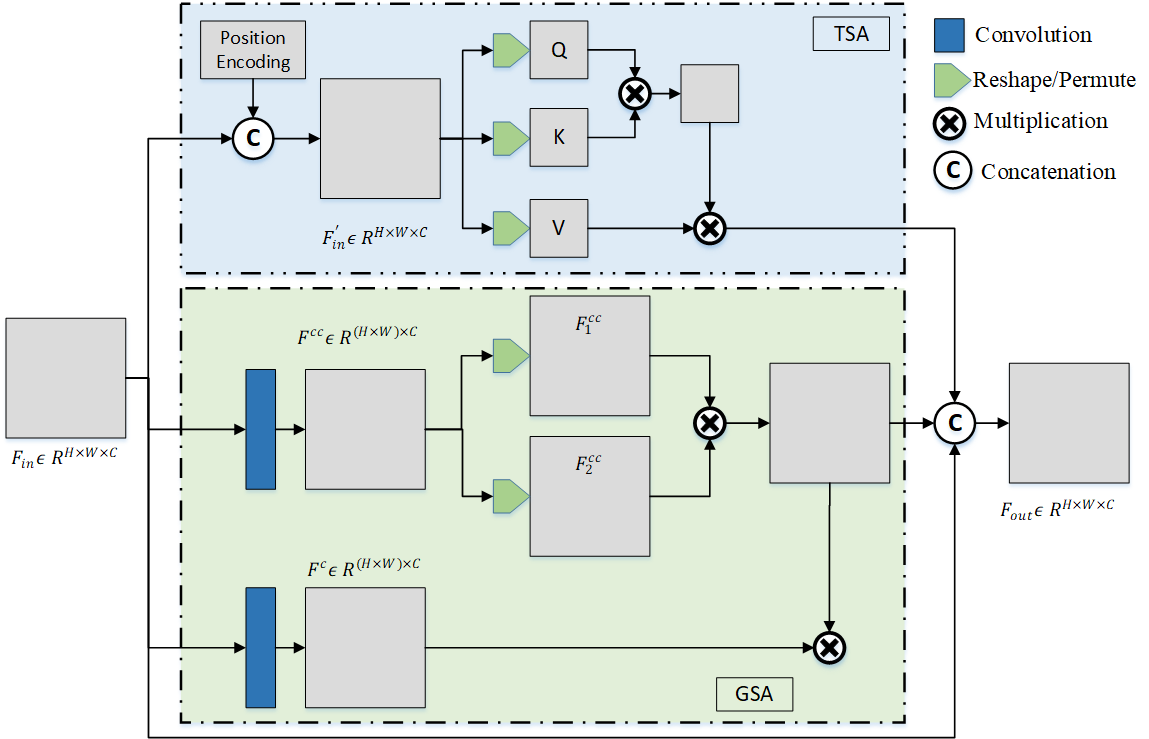

- Attention-Enhanced Decoder: To address the difficulty traditional U-Net architectures have with long-range dependencies, the decoder integrates three attention mechanisms: global, local, and self-aware attention.

These mechanisms empower the network to capture and integrate diverse contextual and spatial relationships critical for precise segmentation without introducing computational overhead.

- Spatial Features Enhancement Block (SFEB): Embedded within the skip connections, SFEB refines spatial features and sharpens the focus on tumor areas, which is essential when handling fuzzy and noisy ultrasound data.

Figure 1: The details of the proposed method. The proposed method consists of a pre-trained encoder and a specific decoder, a spatial features enhancement block (SFEB), and a Transformer attention module.

Self-Aware Attention Module

The Self-Aware Attention Module (SAM) incorporates two key components:

Loss Functions and Optimization Strategy

A hybrid loss function combines Binary Cross-Entropy (BCE) and Jaccard Index loss, addressing the class imbalance often present in medical datasets. This hybrid approach ensures both pixel-wise accuracy and region-level overlap, accommodating the morphological variability of tumors.
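The combination described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the function names, the `smooth` term, the soft (probability-based) form of the Jaccard loss, and the `jaccard_weight` parameter are all assumptions, since the summary does not state how the two terms are weighted.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over a flattened list of pixel probabilities."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(probs)


def soft_jaccard_loss(probs, targets, smooth=1.0):
    """1 - soft IoU; `smooth` (an assumed constant) avoids division by zero."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    union = sum(probs) + sum(targets) - intersection
    return 1.0 - (intersection + smooth) / (union + smooth)


def hybrid_loss(probs, targets, jaccard_weight=1.0):
    """BCE plus weighted Jaccard loss; the weighting scheme is an assumption."""
    return bce_loss(probs, targets) + jaccard_weight * soft_jaccard_loss(probs, targets)


# Usage: a perfect prediction scores near zero, an inverted one scores high.
mask = [1, 0, 1, 0]
good = hybrid_loss([1.0, 0.0, 1.0, 0.0], mask)
bad = hybrid_loss([0.0, 1.0, 0.0, 1.0], mask)
```

The BCE term penalizes each pixel independently, while the Jaccard term depends only on the overlap between the predicted and true regions, so small tumors are not swamped by the much larger background.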

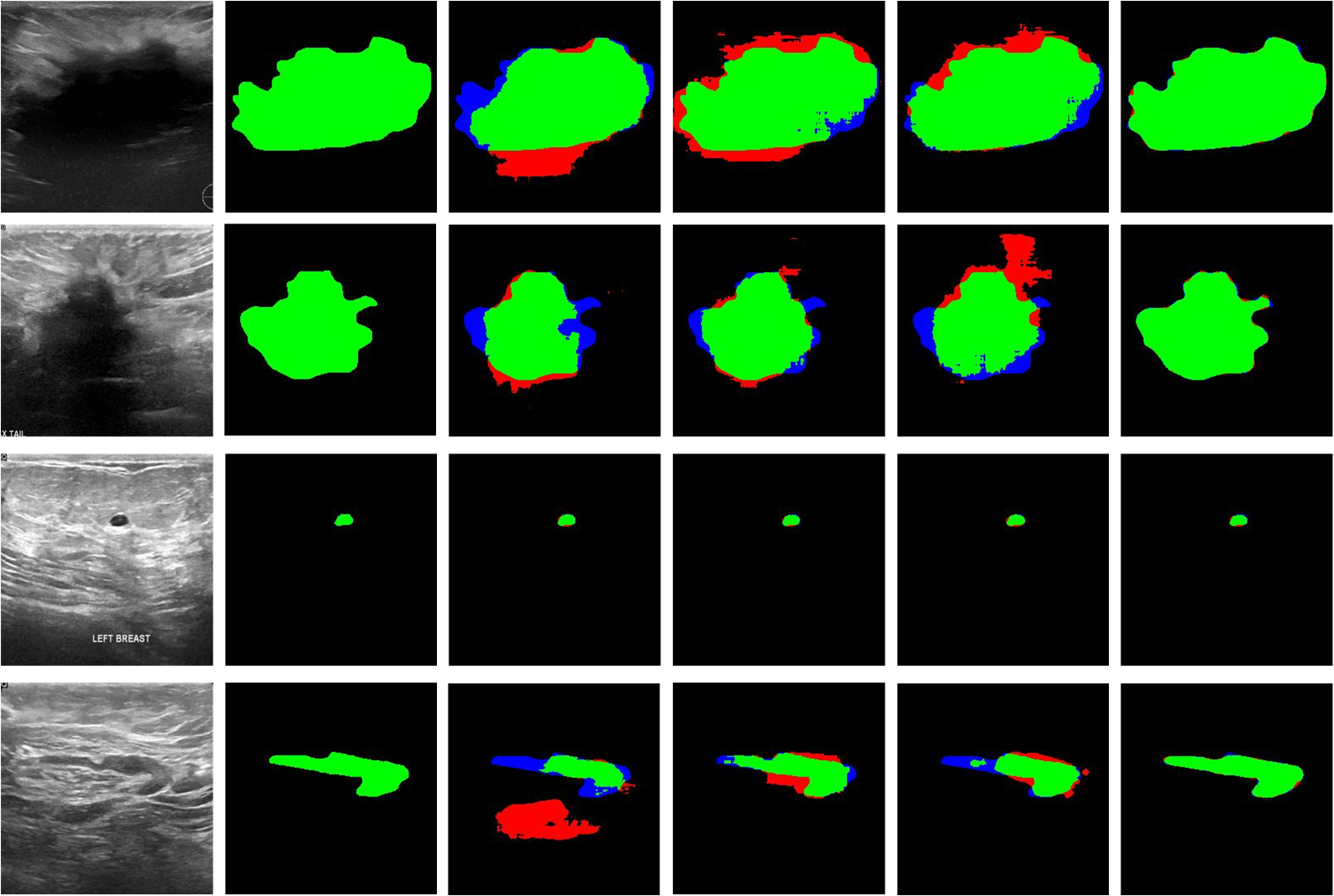

Experimental Evaluation

The efficacy of the proposed method was validated across the BUSI and UDIAT datasets, achieving superior performance metrics compared to state-of-the-art segmentation algorithms:

- Dice Coefficient: Significantly higher than existing models, indicating improved overlap of predicted and actual tumor regions.

- Jaccard Index: Higher than existing models, indicating closer agreement between predicted and ground-truth tumor masks.

- Specificity and Sensitivity: Balanced improvement, showing both robust identification of tumor areas and minimal false positives.
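For reference, the four metrics listed above can all be derived from the pixel-wise confusion counts of a binary prediction against its ground-truth mask. The sketch below uses the standard definitions; the function names and the convention of returning 0.0 for an empty denominator are illustrative choices, not taken from the paper.

```python
def confusion_counts(pred, truth):
    """Count true/false positives and negatives over flattened binary masks."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(numerator, denominator):
    # Assumed convention: report 0.0 when the denominator is empty.
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(pred, truth):
    """Return Dice, Jaccard, sensitivity, and specificity for binary masks."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return {
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": _ratio(tp, tp + fp + fn),
        "sensitivity": _ratio(tp, tp + fn),  # share of tumor pixels found
        "specificity": _ratio(tn, tn + fp),  # share of background kept clean
    }


# Usage on a toy 6-pixel example: 2 TP, 1 FP, 1 FN, 2 TN.
metrics = segmentation_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 0, 1, 1])
```

Dice and Jaccard are monotonically related (Dice = 2J / (1 + J)), so they rank methods identically on a single image; reporting both mainly eases comparison with prior work.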

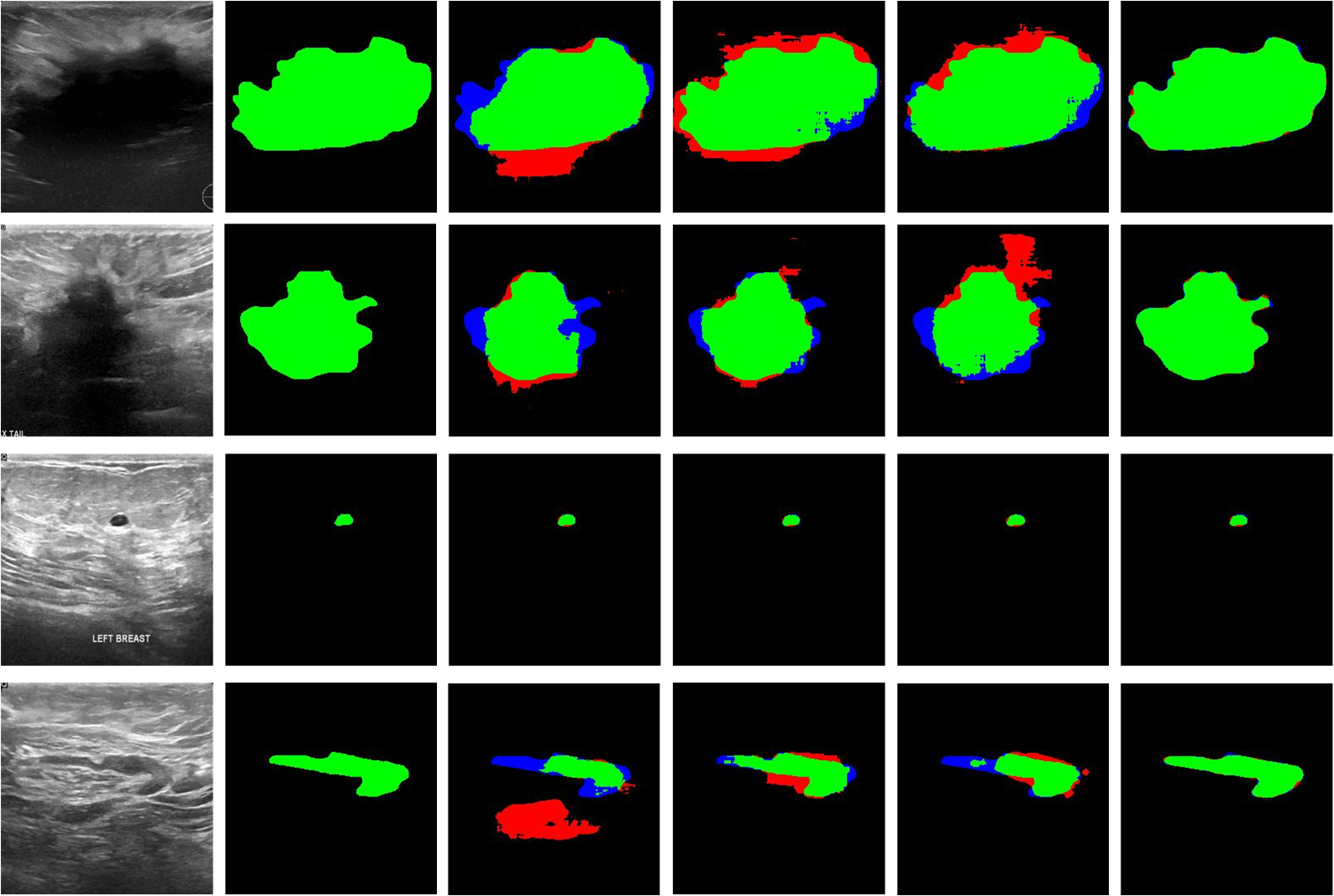

Figure 3: Qualitative comparison of segmentation results on the BUSI dataset. From left to right: original image, ground truth, U-Net, UNet++, and the proposed method.

A series of ablation studies illustrated the impact of each architectural component, affirming the contribution of hybrid attention mechanisms and SFEB in boosting segmentation accuracy, especially in challenging ultrasound imagery scenarios.

Conclusion

The proposed Hybrid Attention Network improves breast ultrasound image segmentation by integrating attention modules into a decoder built on a DenseNet121 encoder. The approach offers radiologists a stronger diagnostic aid and lays the groundwork for further improvements in real-time clinical applications, supporting both diagnostic accuracy and operational efficiency.

Overall, the paper presents a practical approach to a critical challenge in medical imaging, combining several deep learning techniques to support more accurate diagnosis. Future research directions may include exploring data augmentation strategies and domain adaptation to broaden the clinical utility of the framework across diverse imaging environments.