- The paper introduces AniMaker, a framework that uses multiple agents and MCTS-driven clip generation to transform text into coherent animated narratives.

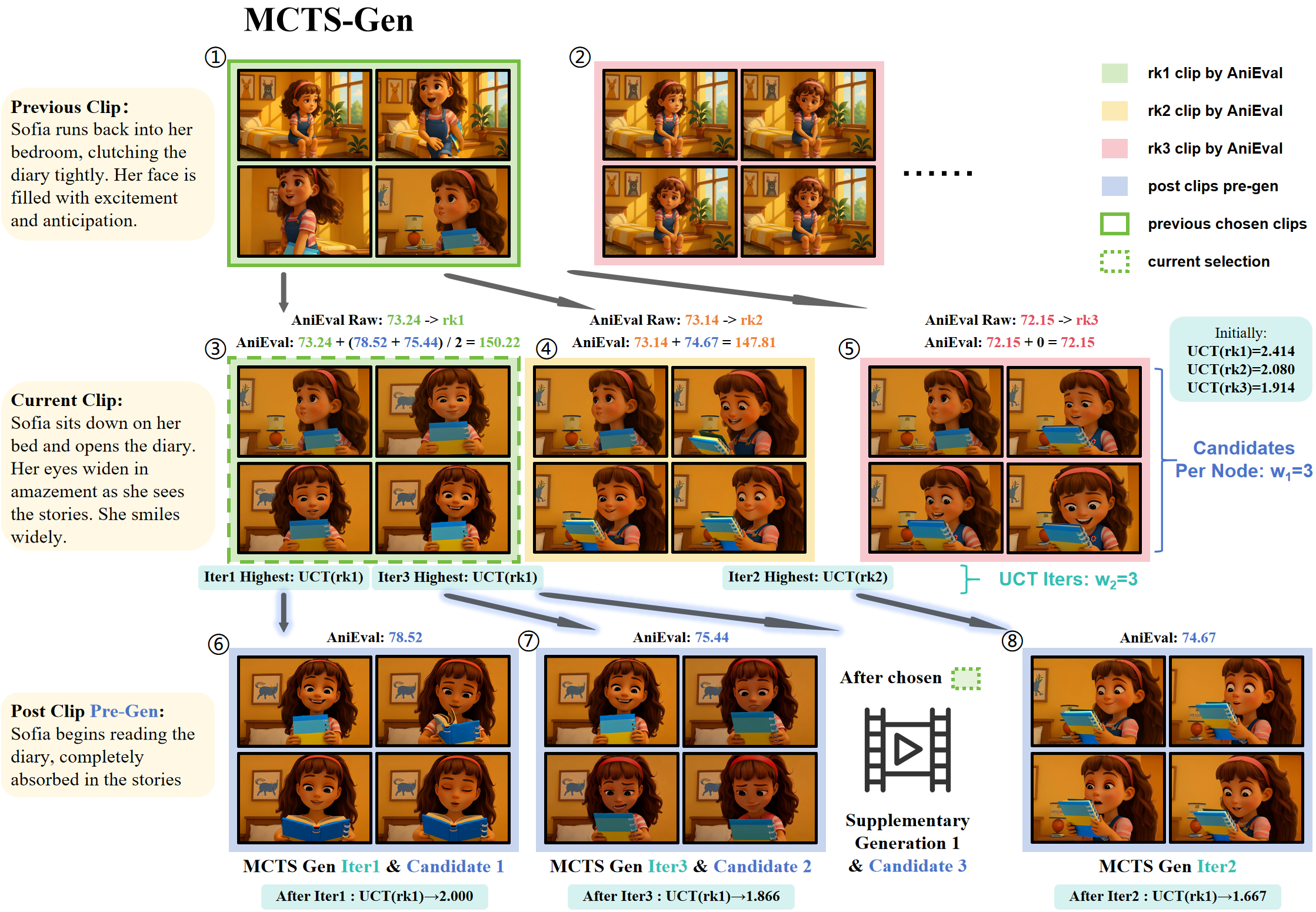

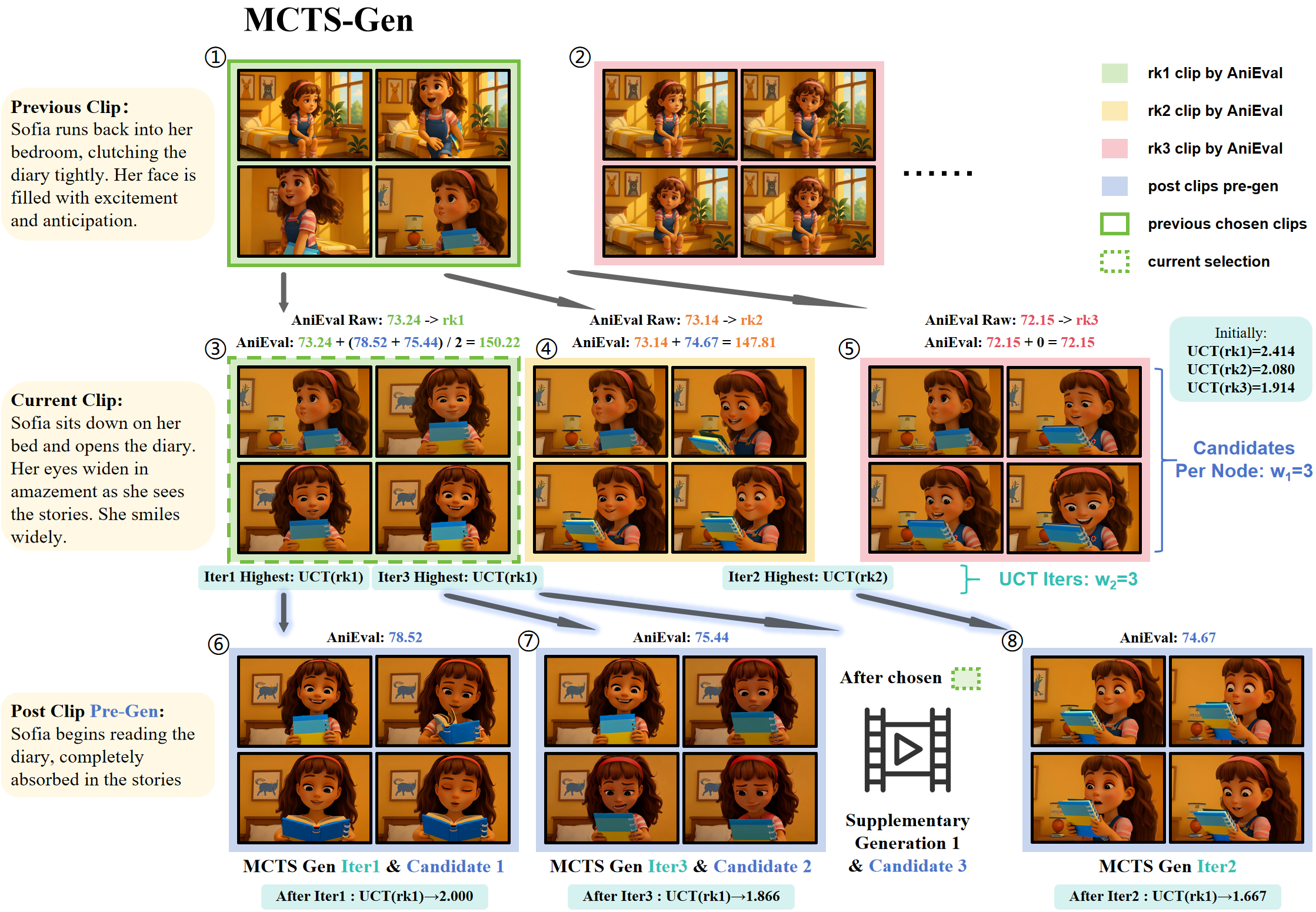

- The paper details a novel MCTS-Gen strategy that efficiently balances exploration and exploitation to generate high-quality video clips.

- The paper demonstrates improved narrative and visual coherence through the AniEval evaluation framework and superior performance on standard metrics.

AniMaker: Automated Multi-Agent Animated Storytelling with MCTS-Driven Clip Generation

Introduction

AniMaker is a framework for generating storytelling animations directly from text. It targets the difficulty of producing coherent, long-form animated videos that span multiple scenes and characters. Earlier methods struggle to maintain narrative coherence and visual continuity, often because they rely on rigid keyframe conversion, which yields disjointed narratives. AniMaker instead uses a multi-agent system to automate high-quality animated storytelling.

Architecture Overview

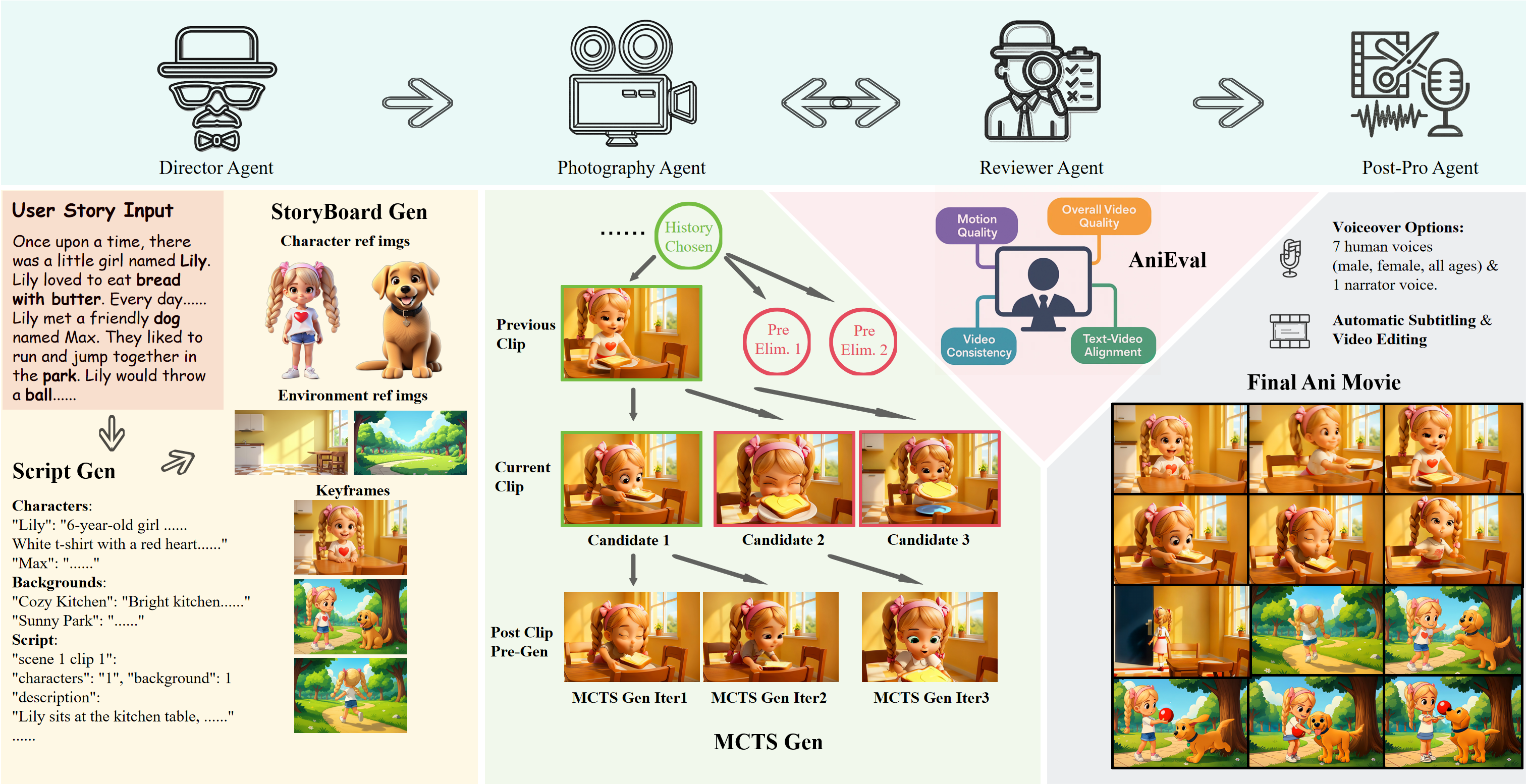

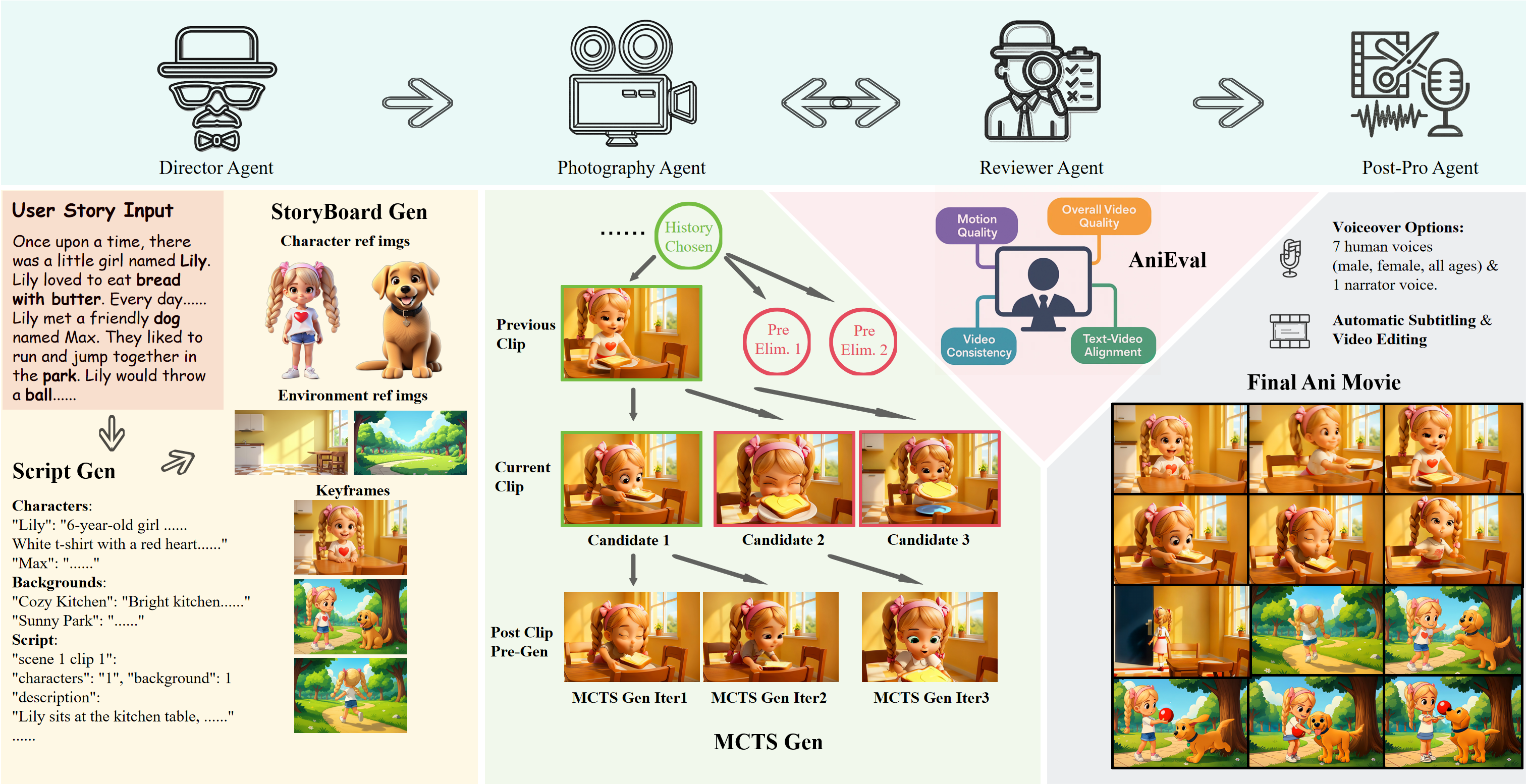

AniMaker’s architecture consists of four specialized agents: the Director Agent, Photography Agent, Reviewer Agent, and Post-Production Agent. Together they turn text into a finished animation, with each agent handling one stage of the production pipeline.

- Director Agent: Responsible for converting text prompts into detailed scripts and storyboards, incorporating character and background management.

- Photography Agent: Uses the MCTS-Gen strategy to explore and generate candidate video clips, balancing exploration and exploitation while limiting resource usage.

- Reviewer Agent: Evaluates candidate clips using the AniEval system to ensure story and visual consistency.

- Post-Production Agent: Compiles selected clips into the final animation, adding voiceovers and synchronizing audio.

Figure 1: The overall architecture of our AniMaker framework. Given a story input, Director Agent creates detailed scripts and storyboards with reference images. Photography Agent generates candidate video clips using MCTS-Gen, which optimizes exploration-exploitation balance. Reviewer Agent evaluates clips with our AniEval assessment system. Post-Production Agent assembles selected clips, adds voiceovers, and synchronizes audio with subtitles. This multi-agent system enables fully automated, high-quality animated storytelling.
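The hand-off between the four agents can be pictured with a minimal Python sketch. It is an illustration of the pipeline shape described above, not the authors' implementation: the `Shot` class, the function names, the string placeholders standing in for clips, and the one-shot-per-sentence storyboard are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Shot:
    """One storyboard entry (hypothetical structure, not from the paper)."""
    description: str
    candidates: List[str] = field(default_factory=list)
    selected: str = ""


def director(story: str) -> List[Shot]:
    # Stand-in for script and storyboard creation: one shot per sentence.
    return [Shot(s.strip()) for s in story.split(".") if s.strip()]


def photography(shot: Shot, n: int = 3) -> None:
    # Stand-in for clip generation: placeholder strings instead of videos.
    shot.candidates = [f"clip[{shot.description}]#{i}" for i in range(n)]


def reviewer(shot: Shot, score: Callable[[str], float]) -> None:
    # Keep the candidate with the highest score from the supplied scorer.
    shot.selected = max(shot.candidates, key=score)


def post_production(shots: List[Shot]) -> List[str]:
    # Stand-in for assembly: return the selected clips in story order.
    return [s.selected for s in shots]


def run_pipeline(story: str, score: Callable[[str], float]) -> List[str]:
    shots = director(story)
    for shot in shots:
        photography(shot)
        reviewer(shot, score)
    return post_production(shots)
```

In this sketch each stage only reads or writes the `Shot` records, so any one stage could be replaced without touching the others.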

MCTS-Driven Clip Generation

The core of AniMaker is the MCTS-Gen strategy used by the Photography Agent. Inspired by Monte Carlo Tree Search (MCTS), it navigates the large space of candidate clips by concentrating generation on promising paths, which significantly reduces computational overhead. MCTS-Gen balances exploring diverse clips against exploiting high-potential sequences so that the selected clips form a coherent video.

Figure 2: Illustration of our MCTS-Gen strategy for efficient Best-of-N Sampling.
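To make the exploration-exploitation trade-off concrete, here is a generic tree search over shot sequences that uses the standard UCB1 rule. It is a sketch under stated assumptions rather than the MCTS-Gen algorithm itself, whose details are not given here: the `generate` and `score` callables, the `width` and `budget` parameters, and the use of UCB1 are all choices made for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(eq=False)
class Node:
    clip: Optional[str]              # None for the root
    depth: int                       # number of shots fixed on this path
    reward: float = 0.0              # score of the path ending at this clip
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    total: float = 0.0

    def mean(self) -> float:
        return self.total / self.visits if self.visits else 0.0


def path_to(node: Node) -> List[str]:
    # Collect the clips from the root down to this node.
    clips = []
    while node.parent is not None:
        clips.append(node.clip)
        node = node.parent
    return clips[::-1]


def ucb1(child: Node, parent_visits: int, c: float) -> float:
    # Mean reward (exploitation) plus a bonus for rarely visited children.
    return child.mean() + c * math.sqrt(math.log(parent_visits) / child.visits)


def mcts_select_clips(
    num_shots: int,
    generate: Callable[[int, List[str]], str],   # hypothetical clip generator
    score: Callable[[List[str]], float],         # hypothetical path scorer
    budget: int = 30,
    width: int = 3,                              # max candidates per node, >= 1
    c: float = 1.0,
) -> List[str]:
    root = Node(clip=None, depth=0)
    for _ in range(budget):
        node = root
        # Selection: descend through fully expanded nodes using UCB1.
        while node.depth < num_shots and len(node.children) >= width:
            parent = node
            node = max(parent.children, key=lambda ch: ucb1(ch, parent.visits, c))
        # Expansion: generate one new candidate for the next shot.
        if node.depth < num_shots:
            prefix = path_to(node)
            clip = generate(node.depth, prefix)
            child = Node(clip, node.depth + 1, score(prefix + [clip]), parent=node)
            node.children.append(child)
            node = child
        # Backpropagation: credit the reward to every ancestor.
        reward = node.reward
        current: Optional[Node] = node
        while current is not None:
            current.visits += 1
            current.total += reward
            current = current.parent
    # Read out the path with the best mean reward at each level.
    best, node = [], root
    while node.children:
        node = max(node.children, key=Node.mean)
        best.append(node.clip)
    return best
```

With a small `budget`, the returned path may cover fewer than `num_shots` shots. A real system would also have to decide how to spend generation calls across shots, which this sketch leaves to the UCB1 rule alone.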

AniEval Framework

AniEval extends existing evaluation metrics to multi-shot storytelling animation. It provides a context-aware assessment along dimensions such as story consistency, action completion, and animation-specific features. Because each clip is scored in the context of its neighboring clips, selection favors candidates that fit a coherent narrative flow.
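The idea of scoring a clip in the context of its neighbors can be sketched as follows. This is not AniEval's scoring function: the feature vectors, the cosine-similarity measure of consistency, and the `w_quality` weight are assumptions chosen only to show how a standalone quality score and a neighbor-consistency term might be combined.

```python
import math
from typing import Optional, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    # Cosine similarity, returning 0.0 if either vector has zero length.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def context_score(
    candidate: Sequence[float],              # hypothetical clip feature vector
    quality: float,                          # hypothetical standalone score
    prev: Optional[Sequence[float]] = None,  # features of the previous clip
    nxt: Optional[Sequence[float]] = None,   # features of the next clip
    w_quality: float = 0.5,                  # assumed weighting, not from the paper
) -> float:
    neighbors = [n for n in (prev, nxt) if n is not None]
    if not neighbors:
        # No context available: fall back to the standalone score.
        return quality
    consistency = sum(cosine(candidate, n) for n in neighbors) / len(neighbors)
    return w_quality * quality + (1.0 - w_quality) * consistency
```

Under these assumptions, a candidate whose features match its neighbors can outrank one with higher standalone quality but poor continuity, which is the behavior a context-aware metric is meant to reward.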

Experimental Results

AniMaker outperforms existing models specialized in visual narration and video generation on both standard benchmarks such as VBench and the newly proposed AniEval framework, with consistently top-tier results in visual and narrative coherence.

Scene Image and Video Generation

In scene image generation, AniMaker outperforms other models, with a notable improvement in text-to-image similarity. In video generation, it achieves higher scores on visual appeal and character consistency, which indicates that it maintains narrative fidelity effectively.

Conclusion

AniMaker provides a framework for fully automated storytelling animation, from text input to final video production. By combining MCTS-based generation with a comprehensive evaluation system, it significantly improves narrative and visual coherence in animated storytelling. Its modular design lets newer models be incorporated as they become available, steadily narrowing the gap between AI-generated content and production-quality animation.