- The paper introduces IC-Fusion, a multispectral object detector that prioritizes IR features through an IR-centric cross-modal fusion strategy.

- It employs a dual-stream architecture with specialized modules (MSFD, CCSG, CLKG, CSP) to efficiently fuse multi-scale features from IR and RGB images.

- Experimental results indicate that the IR-centric approach achieves robust detection in adverse lighting, outperforming traditional RGB-only methods.

This paper introduces IC-Fusion, a multispectral object detection framework designed to effectively fuse visible (RGB) and infrared (IR) data by prioritizing infrared features. The core insight is that IR images inherently contain structurally rich, high-frequency information crucial for object detection, especially in adverse lighting conditions. The proposed method leverages this observation through an infrared-centric fusion strategy, incorporating a novel and efficient cross-modal fusion module.

Motivation and Key Insights

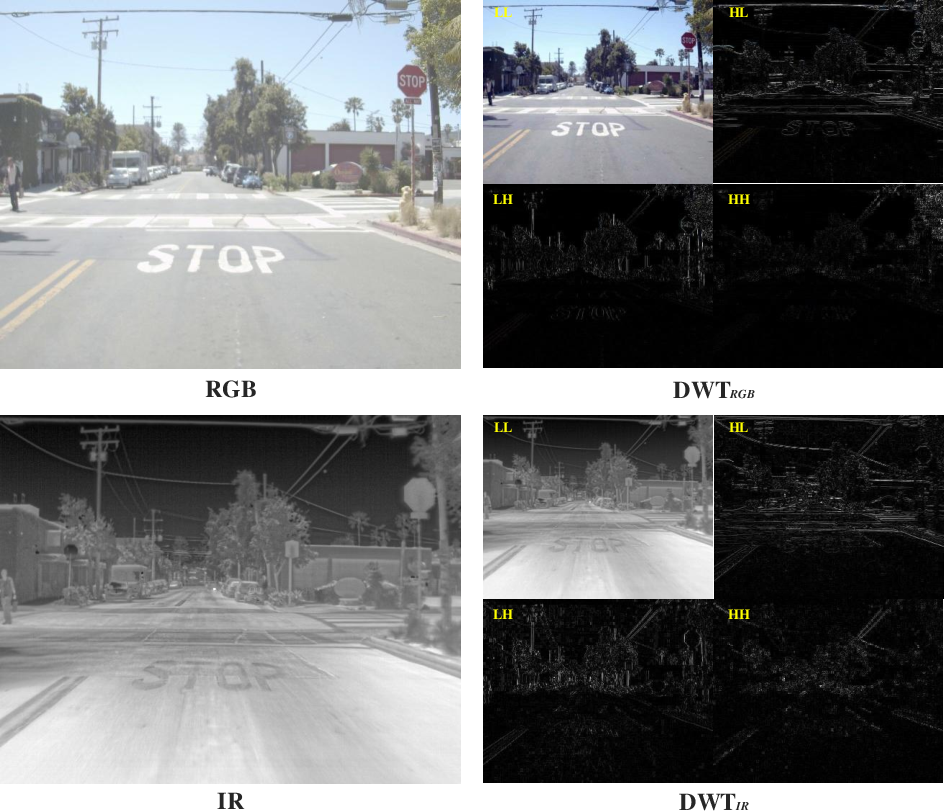

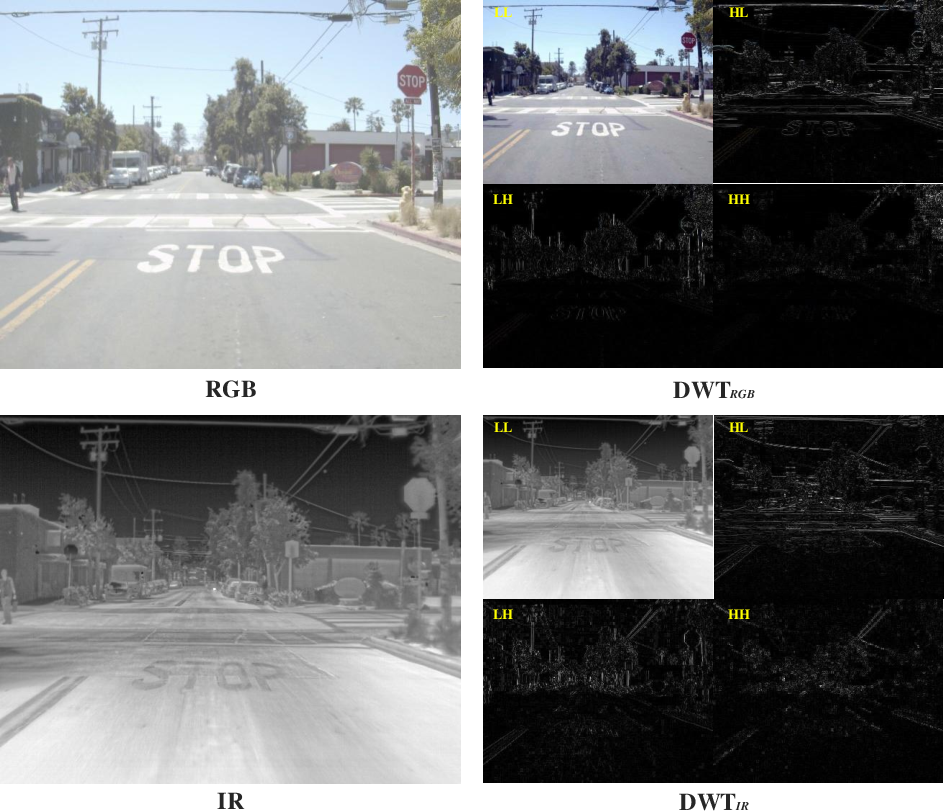

The paper highlights the challenges of object detection in low-light and adverse conditions, where RGB image quality often degrades. While IR imagery provides complementary thermal radiation information, effectively integrating the RGB and IR modalities remains difficult due to their spectral differences. Wavelet analysis reveals that IR images contain sharper structural cues beneficial for localization, while RGB images primarily offer low-frequency semantic information (Figure 1). This motivates the design of IC-Fusion, which emphasizes IR features while integrating complementary RGB context. The paper also reports a quantitative comparison in which IR-only detectors outperform RGB-only detectors, suggesting that heavy feature extraction on the RGB stream may be redundant.

Figure 1: Wavelet decomposition reveals that IR images contain distinct object boundaries and contours, especially in the LH and HL sub-bands, while RGB images primarily contain low-frequency semantic structures in the LL band.
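
To make the sub-band terminology concrete, the following is a minimal sketch of a one-level 2D Haar decomposition in plain Python. It is not the paper's analysis pipeline: the Haar wavelet, the function names `haar_dwt2` and `band_energy`, and the toy image are all assumptions chosen for illustration. Sub-band naming also varies between libraries; here HL is assumed to mean high-pass along the horizontal direction, so it responds to vertical edges.

```python
def haar_dwt2(img):
    """One-level orthonormal 2D Haar transform of a 2D list.

    Returns (LL, LH, HL, HH), each half the input size.
    Naming is an assumption: LH responds to horizontal edges,
    HL to vertical edges, HH to diagonal structure.
    """
    h, w = len(img), len(img[0])
    assert h % 2 == 0 and w % 2 == 0, "height and width must be even"
    LL, LH, HL, HH = [], [], [], []
    for i in range(0, h, 2):
        ll, lh, hl, hh = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            ll.append((a + b + c + d) / 2)  # local average (low frequency)
            lh.append((a + b - c - d) / 2)  # top row minus bottom row
            hl.append((a - b + c - d) / 2)  # left column minus right column
            hh.append((a - b - c + d) / 2)  # diagonal difference
        LL.append(ll)
        LH.append(lh)
        HL.append(hl)
        HH.append(hh)
    return LL, LH, HL, HH


def band_energy(band):
    """Sum of squared coefficients in one sub-band."""
    return sum(v * v for row in band for v in row)


# Toy image with a vertical edge between column 0 and column 1.
edge = [[0.0, 1.0, 1.0, 1.0] for _ in range(4)]
LL, LH, HL, HH = haar_dwt2(edge)
print(band_energy(LL), band_energy(LH), band_energy(HL), band_energy(HH))
# 10.0 0.0 2.0 0.0
```

In this toy case all of the detail energy lands in a single high-frequency band, which is the kind of per-band comparison the wavelet analysis above relies on. Real IR and RGB images would of course need a proper image loader and usually several decomposition levels.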

IC-Fusion Framework

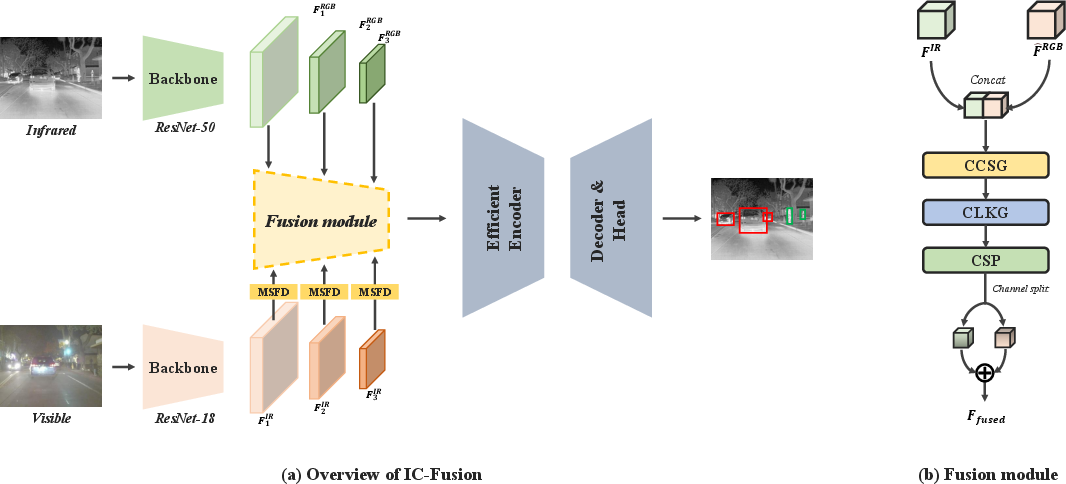

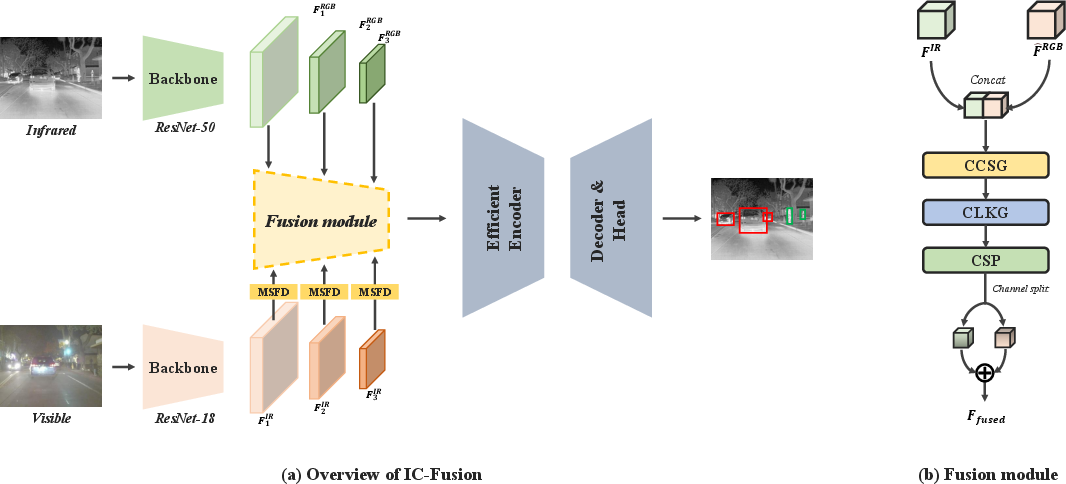

IC-Fusion employs a dual-stream architecture with a lightweight ResNet-18 backbone for RGB and a ResNet-50 for IR, connected via a novel cross-modal fusion module (Figure 2). The framework integrates multi-scale features from both modalities using a three-stage fusion block consisting of a Cross-Modal Channel Shuffle Gate (CCSG), a Cross-Modal Large Kernel Gate (CLKG), and a Channel Shuffle Projection (CSP) module. This fusion block is designed to extract and fuse complementary cross-modal representations, with the fused output then fed into a DETR-based transformer for final object prediction.

Figure 2: IC-Fusion integrates RGB and IR features using dual-stream backbones and a fusion module composed of CCSG, CLKG, and CSP modules, followed by a DETR-based transformer encoder-decoder.

Key Components

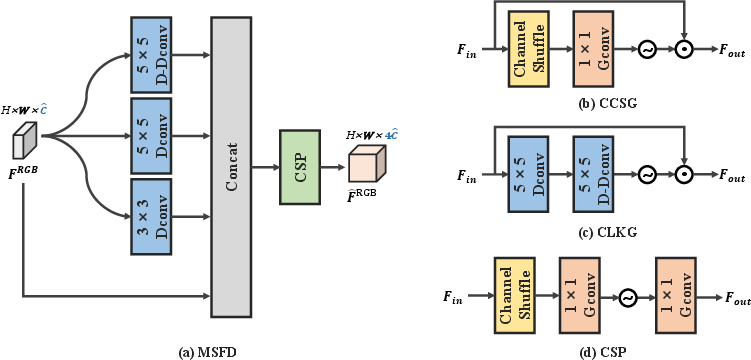

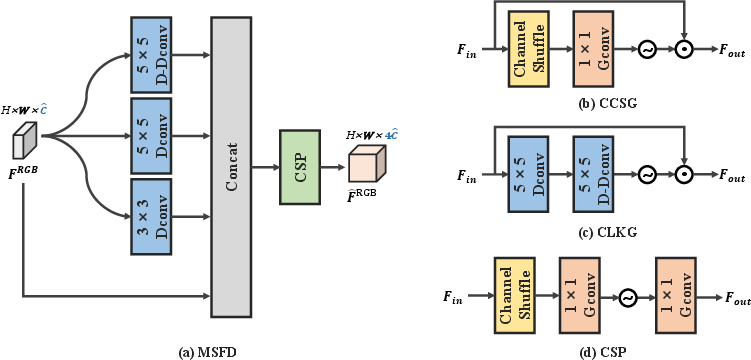

Multi-Scale Feature Distillation (MSFD)

The MSFD module enhances the representational capacity of RGB features while aligning their dimensions with the IR stream (Figure 3a). It extracts spatially diverse cues from multiple receptive fields using multi-branch depthwise convolutions and a CSP module. This design encodes both fine-grained and large-scale context efficiently.

Cross-Modal Channel Shuffle Gate (CCSG)

The CCSG module enables feature interaction between the RGB and IR modalities (Figure 3b). It performs lightweight cross-modal refinement in three steps: reorganizing channels across groups with a channel shuffle, applying grouped convolutions, and gating the result with a GELU-based mechanism.
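
The two ingredients named above, channel shuffle and GELU gating, can be illustrated on toy data. This is a sketch under assumptions, not the paper's CCSG: the summary does not specify how the gate is wired, so the element-wise form `feature * gelu(signal)` and the helper names `channel_shuffle`, `gelu`, and `gelu_gate` are hypothetical.

```python
import math


def channel_shuffle(channels, groups):
    """Interleave channels across groups (ShuffleNet-style reordering).

    Equivalent to reshaping to (groups, per_group), transposing, and flattening.
    """
    assert len(channels) % groups == 0, "channel count must divide by groups"
    per_group = len(channels) // groups
    return [channels[g * per_group + i]
            for i in range(per_group) for g in range(groups)]


def gelu(x):
    """Exact GELU: x times the standard normal CDF at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_gate(features, signal):
    """Hypothetical gate: scale each feature by the GELU of a paired signal."""
    return [f * gelu(s) for f, s in zip(features, signal)]


# Concatenated IR and RGB channels, shuffled with two groups.
mixed = channel_shuffle(["ir0", "ir1", "rgb0", "rgb1"], groups=2)
print(mixed)  # ['ir0', 'rgb0', 'ir1', 'rgb1']
```

After the shuffle, each consecutive pair of channels contains one IR and one RGB channel, so a subsequent grouped convolution sees both modalities inside every group instead of processing them in isolation.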

Cross-Modal Large Kernel Gate (CLKG)

The CLKG module captures long-range contextual dependencies and enhances structural consistency across modalities (Figure 3c). It reinforces semantically aligned regions while suppressing modality-specific noise through spatial gating with large receptive fields, implemented via a two-layer large kernel depthwise convolution block.
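
A short calculation shows why a two-layer large-kernel depthwise block gives a wide receptive field at low cost. The kernel size of 7 and the channel count of 256 below are hypothetical, since this summary does not state the values used in the paper, and the formula assumes stride-1 convolutions.

```python
def receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions.

    layers is a list of (kernel_size, dilation) pairs.
    """
    rf = 1
    for kernel, dilation in layers:
        rf += (kernel - 1) * dilation
    return rf


def depthwise_params(channels, kernel):
    """Weights in a depthwise k x k convolution (one filter per channel, no bias)."""
    return channels * kernel * kernel


def dense_params(channels, kernel):
    """Weights in a standard k x k convolution with equal in/out channels, no bias."""
    return channels * channels * kernel * kernel


# Hypothetical configuration: two 7x7 depthwise layers on 256 channels.
print(receptive_field([(7, 1), (7, 1)]))  # 13
print(depthwise_params(256, 7))           # 12544
print(dense_params(256, 7))               # 3211264
```

Under these assumed values, each depthwise layer needs a factor of 256 (the channel count) fewer weights than a dense convolution with the same kernel, while the stacked pair still covers a 13×13 neighborhood.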

Channel Shuffle Projection (CSP)

The CSP module efficiently fuses features across channels by applying a channel shuffle operation followed by grouped 1×1 convolutions and GELU activation (Figure 3d). This module is utilized within both the MSFD and fusion modules to ensure efficient feature distillation and projection.
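
The sequence described above (shuffle, grouped 1×1 convolution, GELU) can be sketched for a single pixel's channel vector. This is an illustrative toy rather than the paper's implementation: the function names, the weights, and the group count are assumptions, and a 1×1 convolution is modeled as a small matrix product per group.

```python
import math


def channel_shuffle(x, groups):
    """Interleave channels across groups."""
    per_group = len(x) // groups
    return [x[g * per_group + i] for i in range(per_group) for g in range(groups)]


def grouped_pointwise(x, weights):
    """Grouped 1x1 convolution at one pixel.

    weights[g] is an (out_per_group x in_per_group) matrix for group g,
    so each output channel only reads the channels of its own group.
    """
    groups = len(weights)
    in_per_group = len(x) // groups
    out = []
    for g, matrix in enumerate(weights):
        chunk = x[g * in_per_group:(g + 1) * in_per_group]
        for row in matrix:
            out.append(sum(w * v for w, v in zip(row, chunk)))
    return out


def gelu(v):
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def csp_pixel(x, weights):
    """Shuffle, then grouped 1x1 projection, then GELU (hypothetical helper)."""
    shuffled = channel_shuffle(x, groups=len(weights))
    return [gelu(v) for v in grouped_pointwise(shuffled, weights)]


x = [1.0, 2.0, 3.0, 4.0]
weights = [[[1.0, 1.0]], [[1.0, -1.0]]]  # two groups, one output channel each
print(grouped_pointwise(x, weights))                      # [3.0, -1.0]
print(grouped_pointwise(channel_shuffle(x, 2), weights))  # [4.0, -2.0]
```

Without the shuffle, each group only combines channels that were already neighbors; with it, every group mixes channels drawn from both halves of the input. A grouped 1×1 convolution with G groups uses 1/G of the weights of a full 1×1 convolution, which is where the efficiency comes from.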

Figure 3: The framework incorporates MSFD to enhance RGB features, CCSG for gated channel-wise interaction, CLKG for capturing long-range structural features, and CSP for efficient feature projection and distillation.

Experimental Results

The method was evaluated on the FLIR and LLVIP datasets. It achieves competitive performance on FLIR-aligned and state-of-the-art performance on LLVIP (Table 1). Ablation studies support the IR-centric design: pairing a stronger IR backbone with a lightweight RGB backbone yields the best performance-to-complexity trade-off. Further ablations confirm the individual contributions of the MSFD, CCSG, and CLKG modules.

Qualitative results on the FLIR dataset showcase IC-Fusion's ability to produce more accurate and stable predictions in low-light scenes, especially for small or occluded objects (Figure 4).

Figure 4: IC-Fusion demonstrates more accurate and stable predictions compared to unimodal RGB and IR detectors, especially in low-light scenes and for small or occluded objects.

Conclusion

The paper presents IC-Fusion, a multispectral object detection framework built on an IR-centric feature fusion strategy. The design pairs an MSFD module, which enhances RGB features, with a lightweight fusion block composed of CCSG, CLKG, and CSP components. Experiments on FLIR and LLVIP support this design, showing improved performance and efficiency on multispectral object detection benchmarks.