- The paper demonstrates that dynamic voltage and frequency scaling (DVFS) can achieve up to a 10% improvement in energy-delay product (EDP) compared to baseline settings.

- It employs a benchmark-driven approach using diverse computing kernels across Intel, AMD, and Nvidia platforms to assess power capping, DVFS, and ACPI/P-State modes.

- The study reveals that JAX and TensorFlow exhibit distinct energy efficiencies, emphasizing the need for platform-specific tuning and workload characterization in energy-aware computing.

This paper (2505.03398) presents an empirical study of the impact of power management techniques on modern CPU and GPU architectures using the JAX and TensorFlow frameworks. The study focuses on three prevalent power management techniques: power limitation (capping), frequency limitation (DVFS), and ACPI/P-State governor modes. Through a benchmark-driven approach, it analyzes the power/performance trade-offs across different hardware units and software frameworks, providing insights into energy-aware computing.

Experimental Setup and Benchmarks

The experimental setup includes Intel Xeon Platinum 8358, AMD EPYC 7513, and NVIDIA A100 SXM4 platforms. The evaluation employs six computing kernels (GEMM, Stencil, SpMV, Triad, Dist, and Monte Carlo) that represent a mix of compute-bound, memory-bound, and mixed workloads. The authors measured energy consumption with the EA2P tool. JAX and TensorFlow were configured with specific memory-allocation settings. The JAX benchmarks set three XLA environment variables (XLA_PYTHON_CLIENT_PREALLOCATE=false, XLA_PYTHON_CLIENT_MEM_FRACTION=.10, XLA_PYTHON_CLIENT_ALLOCATOR=platform) to mitigate out-of-memory (OOM) errors. Similarly, TensorFlow set TF_GPU_ALLOCATOR=cuda_malloc_async to accelerate memory operations.
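As an illustration of the configuration described above, the following minimal sketch exports the quoted variables from Python. The variable names and values come from the text; the helper `configure_framework_env` and the dictionary names are hypothetical, and the paper may well have set these variables in a shell or job script instead. The safest approach is to set them before the framework is imported, so that they are in place when the GPU backend initializes.

```python
import os

# Allocator settings quoted in the text.
JAX_ENV = {
    "XLA_PYTHON_CLIENT_PREALLOCATE": "false",
    "XLA_PYTHON_CLIENT_MEM_FRACTION": ".10",
    "XLA_PYTHON_CLIENT_ALLOCATOR": "platform",
}
TF_ENV = {"TF_GPU_ALLOCATOR": "cuda_malloc_async"}


def configure_framework_env(framework: str) -> dict:
    """Hypothetical helper: export the settings for 'jax' or 'tensorflow'."""
    settings = {"jax": JAX_ENV, "tensorflow": TF_ENV}[framework]
    os.environ.update(settings)
    return dict(settings)


configure_framework_env("jax")
# import jax  # import the framework only after the environment is configured
```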

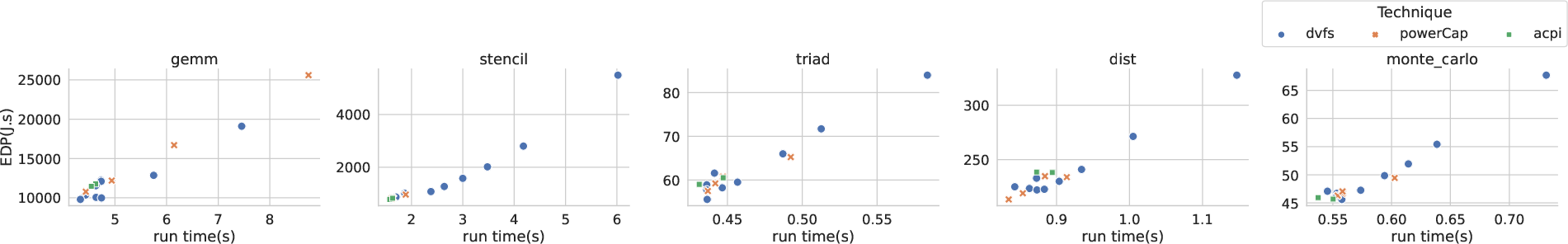

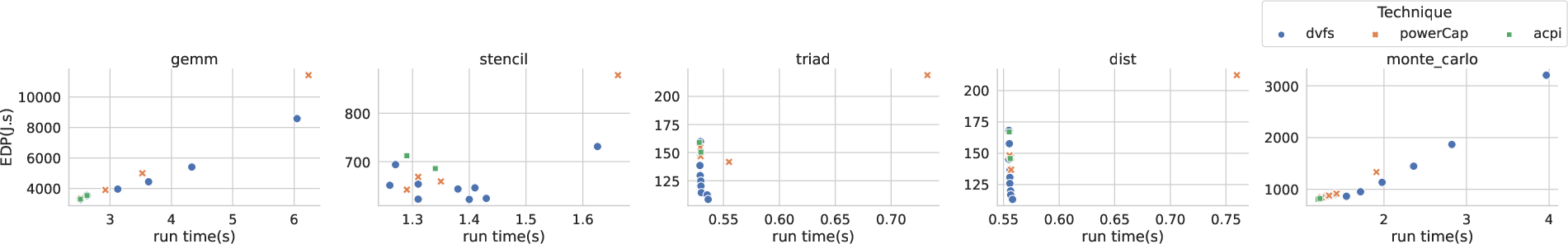

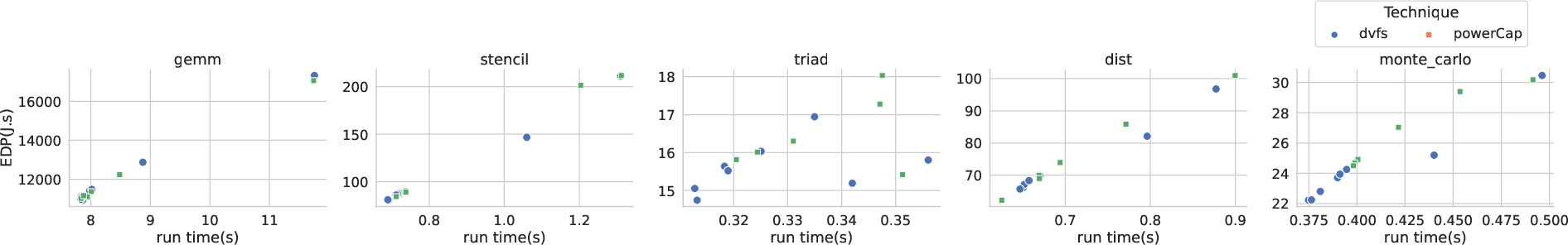

Impact of Power Management Techniques on EDP

The paper evaluates the impact of DVFS, ACPI governors, and power capping on execution time, power consumption, energy consumption, and EDP. The results indicate that frequency limitation is often the most effective technique for improving EDP: running at a reduced frequency instead of the highest one can lower EDP by up to 10%. Furthermore, the paper observes that frequency management behaves consistently across different CPUs, while TensorFlow and JAX can exhibit opposite effects under the same power management settings.
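For readers less familiar with the metric, EDP is the product of energy consumed and execution time, so a setting that saves energy only improves EDP if it does not slow the run down too much. The sketch below shows one way to derive both quantities from a sampled power trace, using trapezoidal integration. It is not the EA2P API; the function names and the trace values are made up for illustration.

```python
def energy_joules(timestamps_s, power_w):
    """Integrate sampled power (W) over time (s) with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need two or more samples, with matching lengths")
    return sum(
        0.5 * (p0 + p1) * (t1 - t0)
        for t0, t1, p0, p1 in zip(
            timestamps_s, timestamps_s[1:], power_w, power_w[1:]
        )
    )


def edp(energy_j, runtime_s):
    """Energy-delay product in joule-seconds."""
    return energy_j * runtime_s


# Made-up trace: one power sample per second over a 4-second run.
t = [0.0, 1.0, 2.0, 3.0, 4.0]
p = [100.0, 200.0, 200.0, 200.0, 100.0]

run_energy = energy_joules(t, p)
run_edp = edp(run_energy, t[-1] - t[0])
print(run_energy, run_edp)  # 700.0 2800.0
```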

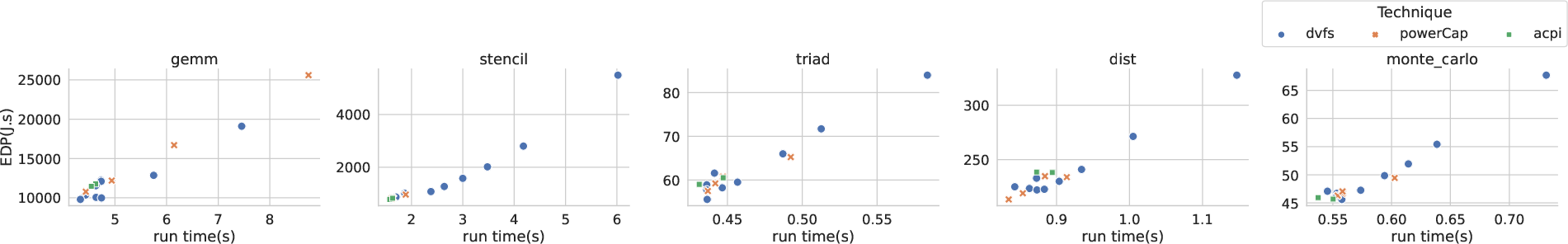

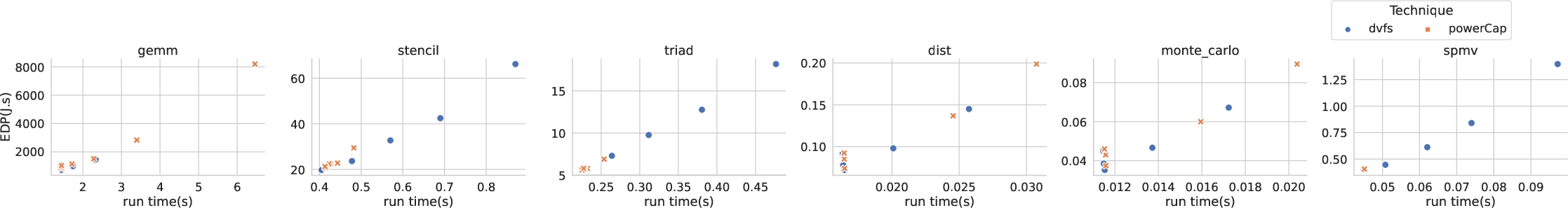

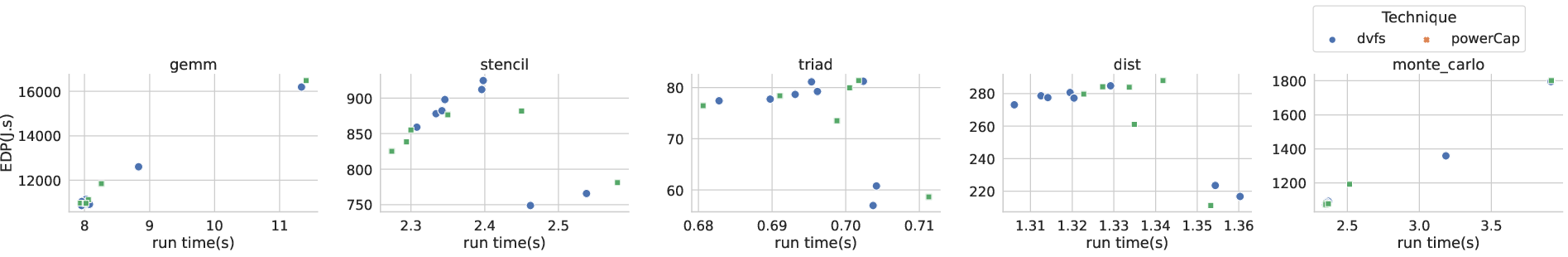

Figure 1: EDP vs Time on Intel for JAX.

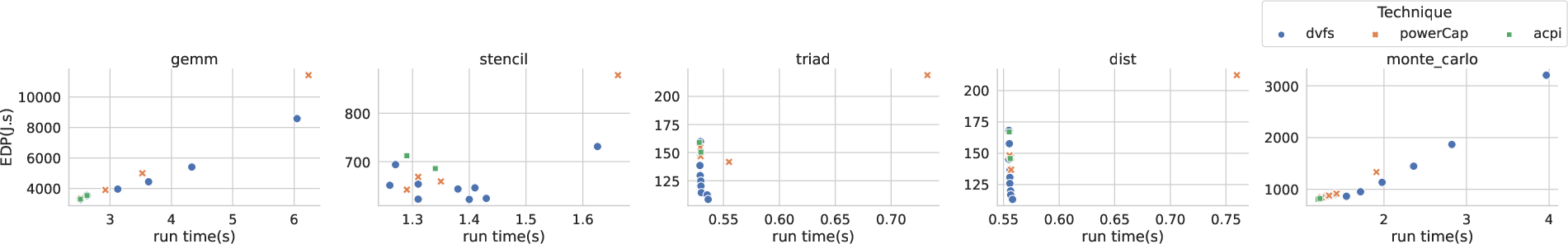

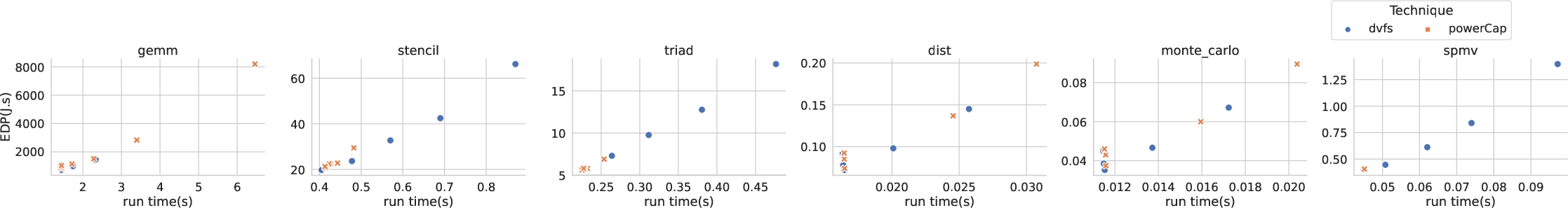

Figure 2: EDP vs Time on Intel for TF.

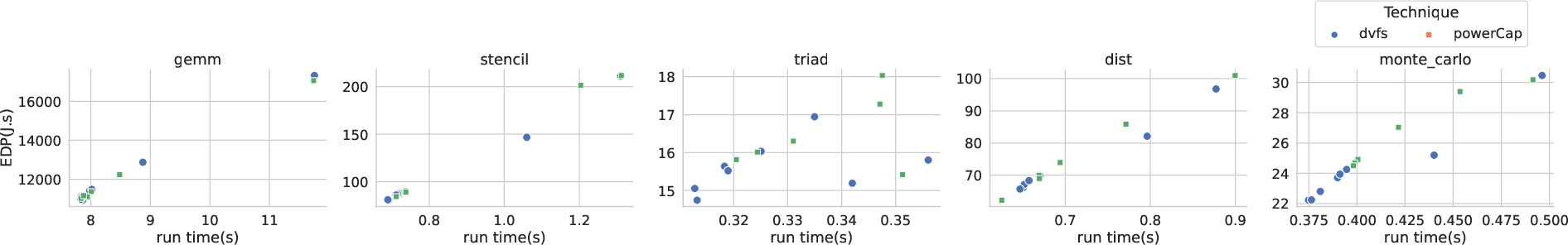

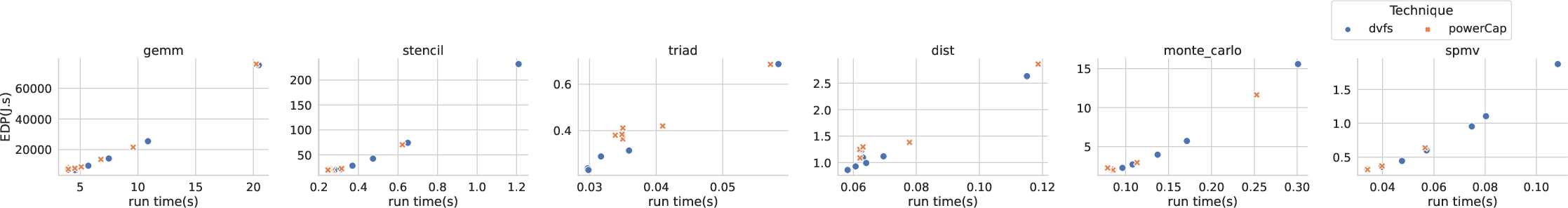

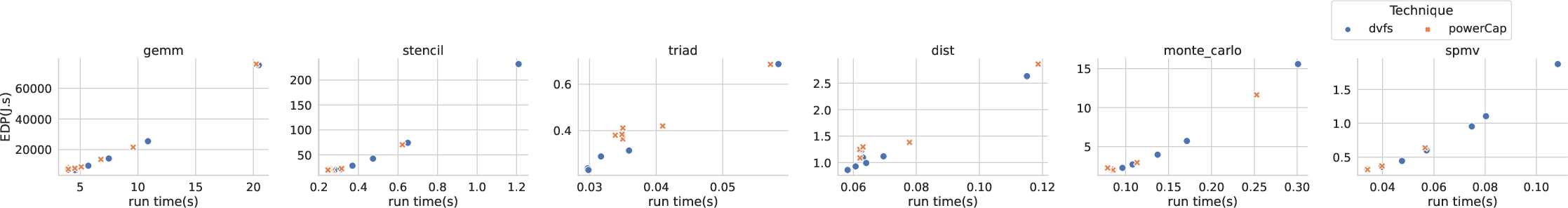

Figure 3: EDP vs Time on AMD for JAX.

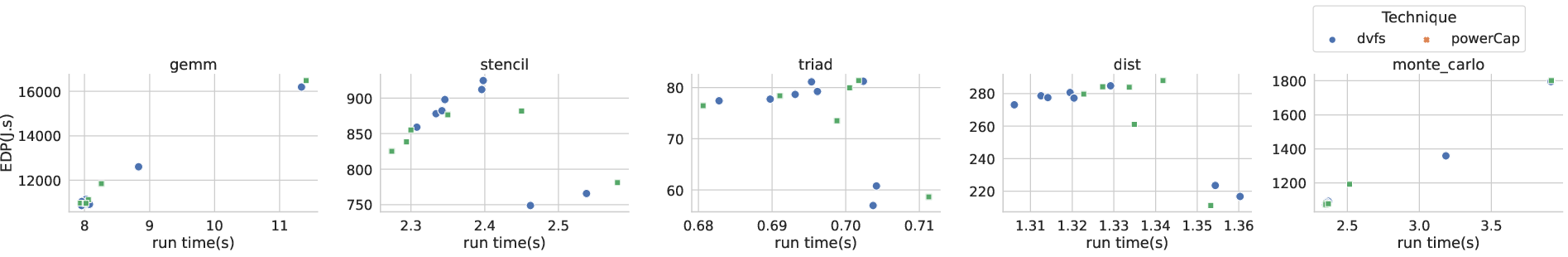

Figure 4: EDP vs Time on AMD for TF.

Figure 5: EDP vs Time on Nvidia for JAX.

Figure 6: EDP vs Time on Nvidia for TF.

The plots of EDP vs time (Figures 1 through 6) quantify the efficiency trade-offs achievable with different energy management techniques. Tables 2 through 7 summarize the optimal EDP observed for each (Platform, Framework, Benchmark, ManagementMethod) instance, reporting the EDP reduction and the corresponding running time gap relative to the baseline. The plots confirm that some non-baseline configurations yield a significant reduction in EDP, with each management technique having its own specific impact.
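The per-instance summary described above can be sketched as follows: given a baseline run and a sweep of settings for one management method, pick the setting with the lowest EDP and report its EDP reduction and running time gap relative to the baseline. The `Run` class, the function name, the setting labels, and all numbers are hypothetical; they are not taken from the paper's tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    setting: str
    energy_j: float
    runtime_s: float

    @property
    def edp(self) -> float:
        return self.energy_j * self.runtime_s


def best_vs_baseline(baseline, candidates):
    """Return (best run, EDP reduction in %, running time gap in %)."""
    best = min(candidates, key=lambda r: r.edp)
    edp_reduction_pct = 100.0 * (baseline.edp - best.edp) / baseline.edp
    time_gap_pct = 100.0 * (best.runtime_s - baseline.runtime_s) / baseline.runtime_s
    return best, edp_reduction_pct, time_gap_pct


# Made-up power-capping sweep for a single (platform, framework, benchmark).
baseline = Run("default", energy_j=1000.0, runtime_s=10.0)
sweep = [
    Run("cap_200W", energy_j=800.0, runtime_s=11.0),
    Run("cap_150W", energy_j=700.0, runtime_s=12.5),
    Run("cap_100W", energy_j=650.0, runtime_s=16.0),
]

best, reduction, gap = best_vs_baseline(baseline, sweep)
print(best.setting, reduction, gap)
```

In this made-up sweep the tightest cap uses the least energy but runs so much longer that its EDP ends up above the baseline, which is the kind of trade-off the EDP vs time plots expose.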

The paper reveals platform-specific trends in power management:

- Intel: Power capping frequently yields EDP reductions with slightly lower running times. DVFS also lowers EDP, but the optimal EDP often comes with performance degradation.

- AMD: DVFS consistently yields the lowest EDP values, often at the expense of increased running time. ACPI governors, particularly 'powersave', reduce EDP but increase running time.

- Nvidia: Power capping is highly effective for EDP reduction on A100. DVFS reduces EDP but seems limited by JAX's inability to run at the lowest frequencies.
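The summary does not say which tools the authors used to apply each technique. As an assumption, the sketch below maps GPU power capping, GPU frequency limitation, and CPU governor and frequency selection onto the standard `nvidia-smi` and `cpupower` command lines commonly used on Linux. The helpers only build argument lists and run nothing; the wattage and frequency values are hypothetical, and the commands normally require root privileges.

```python
def gpu_power_cap_cmd(watts, gpu_index=0):
    """nvidia-smi: set the board power limit in watts."""
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def gpu_lock_clocks_cmd(min_mhz, max_mhz, gpu_index=0):
    """nvidia-smi: lock the GPU core clock to a [min, max] range in MHz."""
    return ["nvidia-smi", "-i", str(gpu_index), "-lgc", f"{min_mhz},{max_mhz}"]


def cpu_governor_cmd(governor):
    """cpupower: select a cpufreq governor such as 'powersave'."""
    return ["cpupower", "frequency-set", "-g", governor]


def cpu_max_freq_cmd(mhz):
    """cpupower: cap the maximum CPU frequency."""
    return ["cpupower", "frequency-set", "-u", f"{mhz}MHz"]


# Hypothetical settings, printed rather than executed.
plan = [
    gpu_power_cap_cmd(250),
    gpu_lock_clocks_cmd(1005, 1005),
    cpu_governor_cmd("powersave"),
    cpu_max_freq_cmd(2000),
]
for argv in plan:
    print(" ".join(argv))
```

To apply a setting, each list could be passed to `subprocess.run(argv, check=True)` before launching a benchmark.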

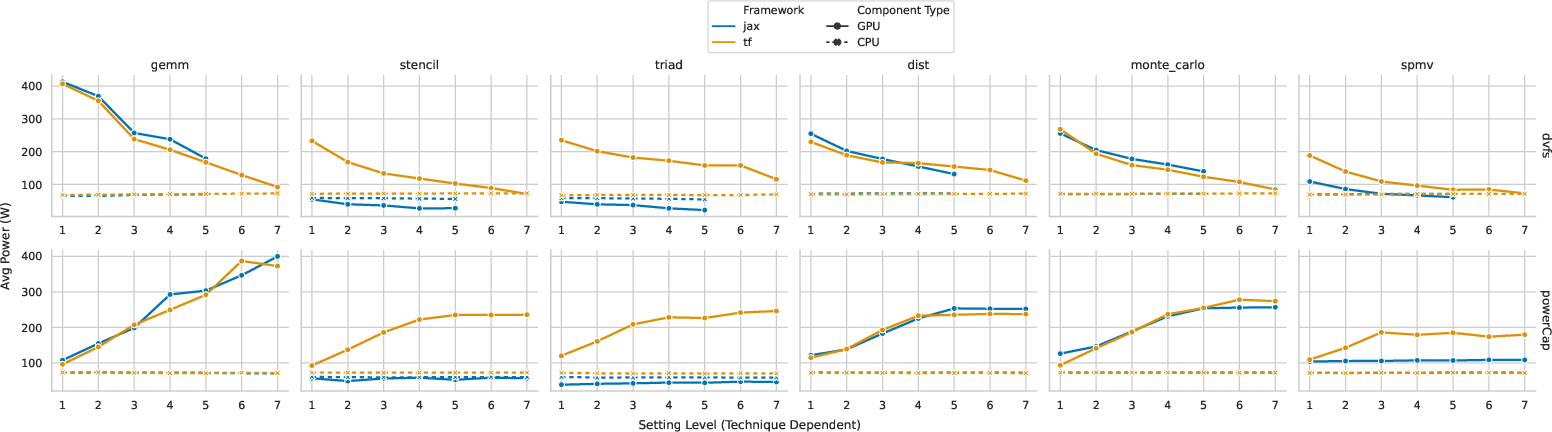

Power Consumption Analysis

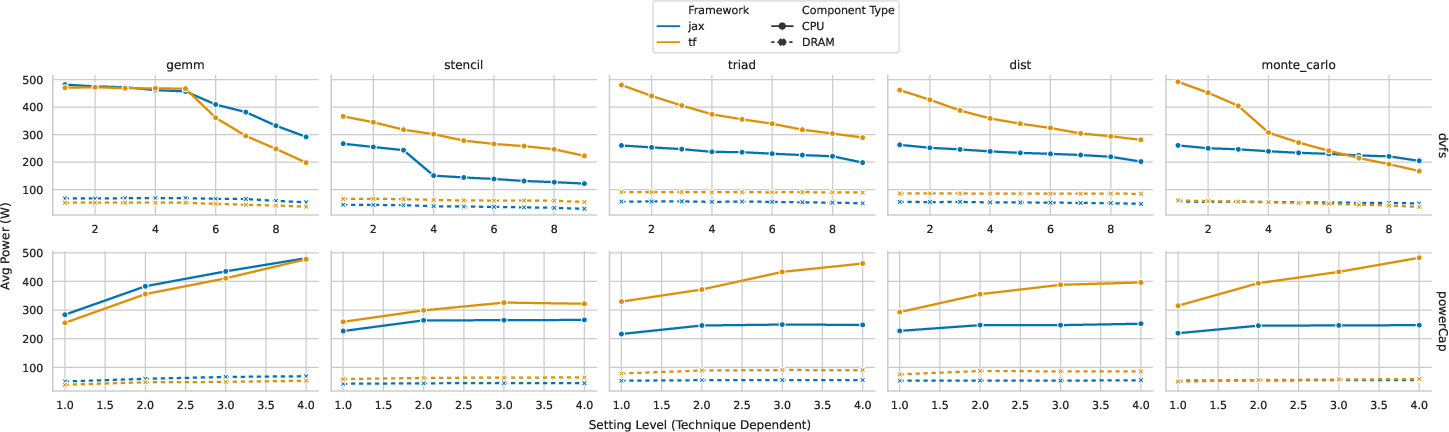

The power analysis at the component level reveals that DVFS and power capping primarily modulate the overall CPU/GPU power consumption. JAX and TF show different energy efficiencies with similar power draw values and different running times.

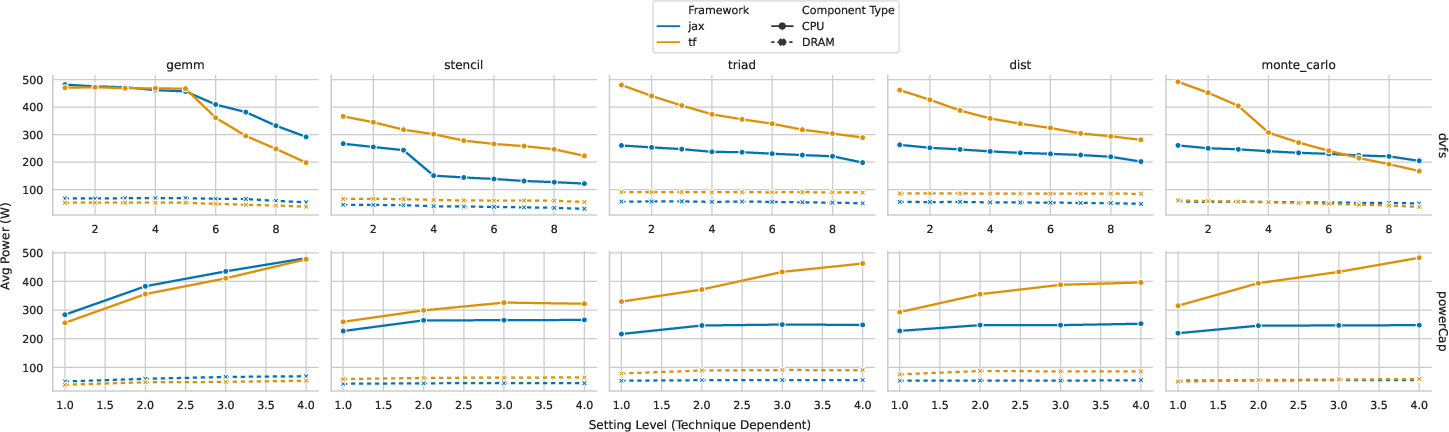

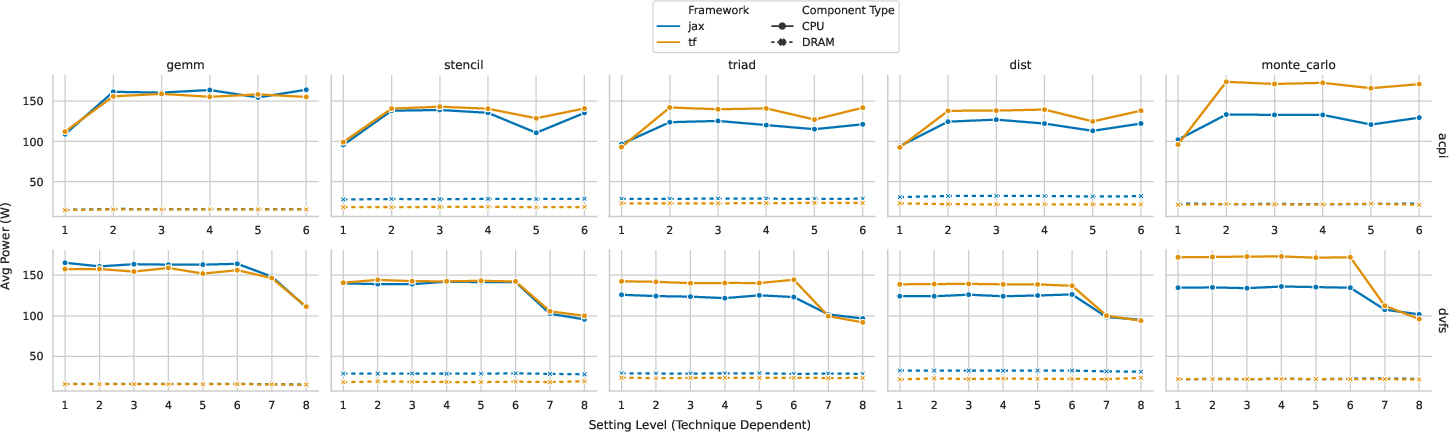

Figure 7: Power trends on Intel.

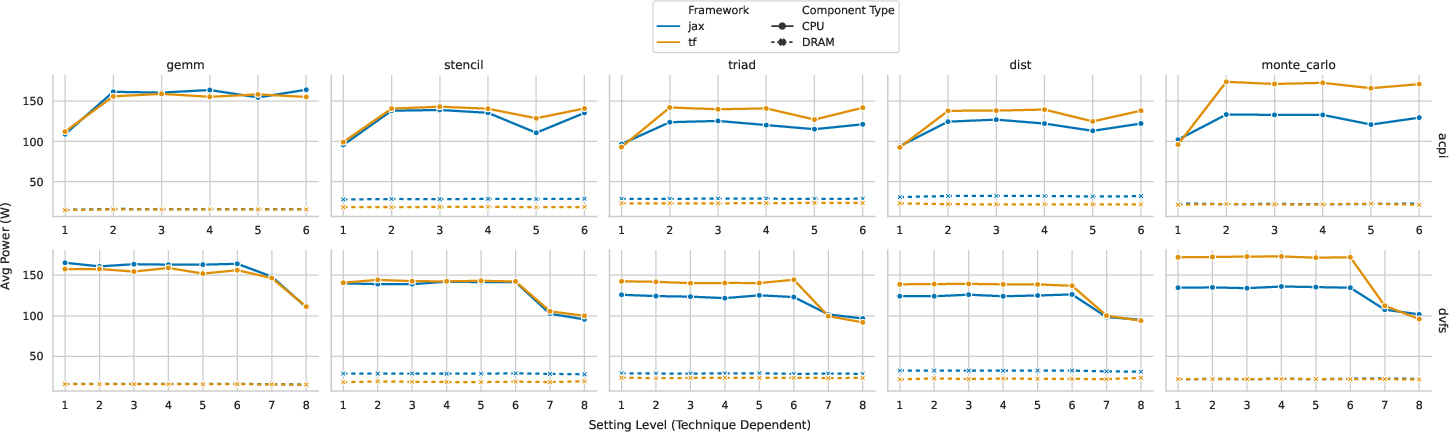

Figure 8: Power trends on AMD.

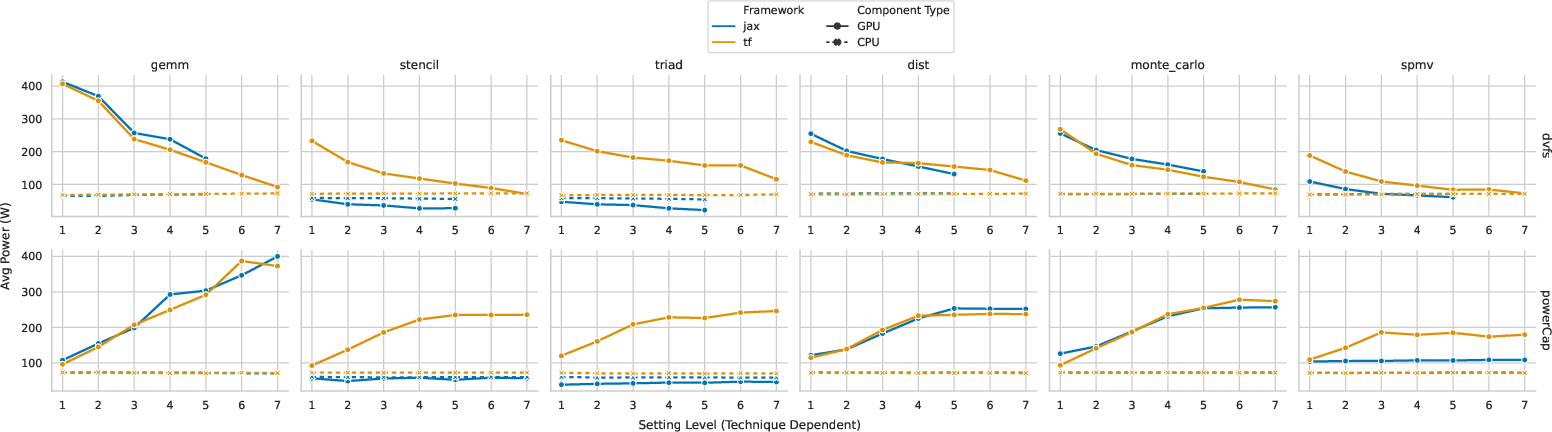

Figure 9: Power trends on Nvidia.

Figures 7, 8, and 9 display the average power draw of the primary component (CPU package 'Pkg' for Intel/AMD, 'GPU' for Nvidia) and of a secondary component ('DRAM' for CPUs, 'Host' CPU for Nvidia) under the management settings considered. The figures show that applying DVFS consistently reduces the power consumption of the main compute component. Similarly, power capping directly limits the power of the primary compute component. The choice of governor does not significantly influence the DRAM power draw.

Framework Efficiency Trends

The paper also explores framework efficiency trends. Considering Triad on A100, power capping yields better EDP reduction for JAX (42%) than for TF (24%), with a significant improvement over DVFS for both. The paper suggests that the frameworks differ considerably in their runtime efficiency for specific tasks, potentially due to compilation strategies, kernel implementations, runtime overheads, and memory management. Notably, JAX was significantly faster on Stencil than TF despite memory pressure. This strongly indicates that XLA's fusion for stencil computations was highly effective at reducing the total number of memory accesses and arithmetic operations.
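To make the fusion argument concrete, here is a minimal sketch of a stencil kernel under `jax.jit`. The paper's actual Stencil benchmark is not reproduced in this summary, so the 2D four-neighbour form and the name `stencil_5pt` are assumptions. Evaluated operation by operation, each shifted slice and each addition may produce a temporary array; under `jit`, XLA is free to fuse them into fewer kernels that read the input and write the output with less intermediate memory traffic. The import falls back to NumPy, without compilation, so the sketch still runs where JAX is not installed.

```python
try:
    import jax
    import jax.numpy as xp

    jit = jax.jit
except ImportError:  # fallback: plain NumPy, no compilation or fusion
    import numpy as xp

    def jit(fn):
        return fn


@jit
def stencil_5pt(u):
    """Average of the four neighbours of every interior grid point."""
    return 0.25 * (u[:-2, 1:-1] + u[2:, 1:-1] + u[1:-1, :-2] + u[1:-1, 2:])


# Small made-up grid; real benchmarks would use much larger arrays.
u = xp.arange(16.0).reshape(4, 4)
out = stencil_5pt(u)
print(out.shape)
```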

Conclusions

The paper concludes that the effectiveness of energy management techniques is highly context-dependent, requiring a platform/workload-aware approach. It emphasizes the importance of characterizing workload types and considering framework robustness when optimizing energy efficiency. For practitioners, the paper recommends platform-specific tuning, workload characterization, and benchmarking. For framework developers, it suggests ensuring robustness across operational settings and integrating energy-aware runtime/compilation strategies. For hardware designers, it reinforces the importance of accurate power monitoring features and effective control mechanisms.