The paper "Training LLMs for Social Deduction with Multi-Agent Reinforcement Learning" (Sarkar et al., 9 Feb 2025) presents a framework that enables LLM-based agents to learn effective natural language communication strategies in multi-agent settings. It focuses specifically on social deduction games and does not rely on human demonstration data. The methodology uses Multi-Agent Reinforcement Learning (MARL) and decomposes the communication problem into distinct listening and speaking components, guided by a dense reward signal derived from predicting critical game state information.

MARL Framework for Social Deduction

The environment is modeled as a Partially Observable Markov Game (POMG), augmented with a social deduction objective: identifying a specific element from a set (e.g., the imposter's identity). Each agent $i$'s policy $\pi_\theta$ is parameterized by an LLM, specifically RWKV. RWKV was chosen because its recurrent architecture suits the long action-observation histories ($\tau^i_t$) common in MARL and because it is efficient to fine-tune with RL. The agent's history is formatted as a sequence of text tokens. Action selection consists of the LLM predicting the next token, constrained to the set of valid actions provided by the environment simulator.
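The constrained action selection described above can be sketched as masking the model's next-token logits to the simulator's valid actions and renormalizing. This is a minimal illustration under stated assumptions: each action is treated as a single token, and the `logits` dictionary stands in for the RWKV output. `masked_action_distribution` and `sample_action` are hypothetical helpers, not the paper's code.

```python
import math
import random


def masked_action_distribution(logits: dict[str, float], valid_actions: list[str]) -> dict[str, float]:
    """Softmax over next-token logits, restricted to the simulator's valid action tokens.

    Assumption (hypothetical): each action corresponds to a single token in `logits`.
    """
    allowed = {tok: logits[tok] for tok in valid_actions if tok in logits}
    if not allowed:
        raise ValueError("none of the valid actions has a logit")
    m = max(allowed.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in allowed.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


def sample_action(logits: dict[str, float], valid_actions: list[str], rng: random.Random) -> str:
    """Sample one valid action from the masked distribution."""
    dist = masked_action_distribution(logits, valid_actions)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


# Toy example: "hello" has the highest raw logit but is not a valid action right now.
logits = {"north": 1.0, "south": 0.5, "report": 2.0, "hello": 4.0}
dist = masked_action_distribution(logits, ["north", "south", "report"])
action = sample_action(logits, ["north", "south", "report"], random.Random(0))
```

Masking before the softmax guarantees that probability mass never leaks onto free-form text when the simulator expects a game action.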

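A baseline MARL approach uses Proximal Policy Optimization (PPO) to optimize the agent policies ($\pi_\theta$). The objective is to maximize the expected discounted sum of sparse environmental rewards, $J(\theta) = \mathbb{E}\left[\sum_t \gamma^t r_t\right]$ (e.g., +1 for winning, -1 for losing, small rewards for task completion). Written as a loss to be minimized, the clipped PPO surrogate is:

$$\mathcal{L}_{\text{RL}}(\theta) = -\mathbb{E}_t\left[\min\left(\rho_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(\rho_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right], \qquad \rho_t(\theta) = \frac{\pi_\theta(a_t \mid \tau_t)}{\pi_{\theta_{\text{old}}}(a_t \mid \tau_t)}$$

where $\hat{A}_t$ is the advantage estimate, $\rho_t(\theta)$ is the probability ratio between the current and pre-update policies, and $\epsilon$ is the clipping range.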
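A minimal pure-Python sketch of the PPO baseline: discounted returns computed from a sparse terminal reward, and PPO's standard clipped surrogate written as a loss to minimize. The function names and the default `eps=0.2` are illustrative assumptions. A real implementation would operate on batched tensors and use a learned value baseline (e.g., GAE) to form $\hat{A}_t$.

```python
import math


def discounted_returns(rewards: list[float], gamma: float = 0.99) -> list[float]:
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one episode."""
    returns = []
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]


def ppo_clip_loss(logp_new: list[float], logp_old: list[float],
                  advantages: list[float], eps: float = 0.2) -> float:
    """Negative mean of min(rho * A, clip(rho, 1-eps, 1+eps) * A) over sampled actions."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        rho = math.exp(ln - lo)  # probability ratio pi_new / pi_old
        rho_clipped = min(max(rho, 1.0 - eps), 1.0 + eps)
        total += min(rho * adv, rho_clipped * adv)
    return -total / len(advantages)


# A sparse win signal (+1 only at the final step) reaches earlier steps only through discounting.
returns = discounted_returns([0.0, 0.0, 0.0, 1.0], gamma=0.9)
```

With a ratio of 2 and a positive advantage, the clip caps the contribution at `1 + eps`, which is what keeps each PPO update close to the previous policy.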

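To mitigate catastrophic forgetting of language capabilities and to keep the policy from diverging into non-linguistic action sequences during RL optimization, a KL-divergence regularization term is added to the PPO loss. This term penalizes deviations from the base pre-trained LLM ($\pi_{\text{base}}$):

$$\mathcal{L}_{\text{KL}}(\theta) = \mathbb{E}_t\left[D_{\text{KL}}\left(\pi_\theta(\cdot \mid \tau_t)\,\big\|\,\pi_{\text{base}}(\cdot \mid \tau_t)\right)\right]$$

and is added to the PPO loss with weight $\lambda_{\text{KL}}$.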
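The KL penalty can be illustrated on explicit categorical next-token distributions. This sketch assumes both distributions are given as dictionaries over the same vocabulary and that the base model assigns nonzero probability wherever the policy does. `kl_categorical`, `kl_regularized_loss`, and the weight `lam_kl` are hypothetical names.

```python
import math


def kl_categorical(p: dict[str, float], q: dict[str, float]) -> float:
    """KL(p || q) = sum_x p(x) log(p(x) / q(x)); terms with p(x) = 0 contribute nothing."""
    return sum(px * math.log(px / q[x]) for x, px in p.items() if px > 0.0)


def kl_regularized_loss(ppo_loss: float,
                        policy_dists: list[dict[str, float]],
                        base_dists: list[dict[str, float]],
                        lam_kl: float = 0.1) -> float:
    """PPO loss plus lam_kl times the mean per-step KL from the frozen base model."""
    kls = [kl_categorical(p, q) for p, q in zip(policy_dists, base_dists)]
    return ppo_loss + lam_kl * sum(kls) / len(kls)


policy = {"vote": 0.5, "skip": 0.5}
base = {"vote": 0.25, "skip": 0.75}
```

The penalty is zero when the fine-tuned policy matches the base model, and it grows as RL pushes token probabilities away from the pre-trained distribution.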

Training employs iterated self-play, in which agents are trained against frozen policies from previous iterations. Specifically, crewmates train against past imposter policies, while imposters train adversarially (using an inverted speaking reward, discussed later) against crewmate policies. To improve robustness and prevent convergence to exploitable conventions (e.g., all agents remaining silent), heterogeneous team training is used: one crewmate agent is kept frozen as the listening policy ($\pi_{\text{RL+L}}$, detailed below), simulating ad-hoc teamwork scenarios.
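The iterated self-play schedule with a heterogeneous crewmate team can be sketched as bookkeeping over policy snapshots. This illustration rests on several hypothetical details that the paper may handle differently: the snapshot labels, the rule that imposters face the crewmate snapshot from the previous iteration, and the assumption that the remaining crewmate seats share the learner's parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Matchup:
    iteration: int
    crew_team: tuple[str, ...]           # seats on the crewmate team while training crewmates
    crew_opponent: str                   # frozen imposter the crewmates train against
    imposter_opponents: tuple[str, ...]  # frozen crewmates the imposter trains against


def self_play_schedule(num_iterations: int, team_size: int = 4) -> list[Matchup]:
    """Hypothetical schedule: each iteration trains against snapshots from the previous one."""
    schedule = []
    for k in range(1, num_iterations + 1):
        # Heterogeneous team: one seat is always the frozen listening policy.
        crew_team = ("crewmate_learner",) * (team_size - 1) + ("listener_frozen",)
        schedule.append(Matchup(
            iteration=k,
            crew_team=crew_team,
            crew_opponent=f"imposter_snapshot_{k - 1}",
            imposter_opponents=(f"crewmate_snapshot_{k - 1}",) * team_size,
        ))
    return schedule


plan = self_play_schedule(3)
```

Keeping one frozen teammate means the learning crewmates cannot rely on a private convention that only copies of themselves would understand.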

Furthermore, a world modeling loss ($\mathcal{L}_{\text{WM}}$) is incorporated. This auxiliary loss trains the LLM policy to predict the next observation tokens $o_{t+1}$ given the current history $\tau_t$ and action $a_t$. The objective helps stabilize training of the recurrent RWKV architecture, preserves the model's understanding of the environmental dynamics encoded in observations, and discourages the policy from overusing action tokens during discussion phases.
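A minimal sketch of the world modeling loss, assuming the model's per-token log-probabilities over the interleaved history are already available and that a boolean mask marks which tokens came from the environment as observations. Only observation tokens contribute, so the loss teaches the model to predict what the simulator returns after each action. `world_model_loss` is a hypothetical helper.

```python
import math


def world_model_loss(token_logprobs: list[float], is_observation: list[bool]) -> float:
    """Mean negative log-likelihood of observation tokens; action tokens are masked out."""
    if len(token_logprobs) != len(is_observation):
        raise ValueError("log-probabilities and mask must have the same length")
    nll = [-lp for lp, obs in zip(token_logprobs, is_observation) if obs]
    return sum(nll) / len(nll) if nll else 0.0


# Interleaved history [obs, action, obs, obs]; log-probs are what the LLM assigned to each token.
logps = [math.log(0.5), math.log(0.9), math.log(0.25), math.log(1.0)]
mask = [True, False, True, True]
```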

Decomposed Communication Learning: Listening and Speaking

The core idea is to decouple the complex communication learning problem into two sub-problems: understanding incoming information (listening) and generating useful outgoing information (speaking).

Listening via Imposter Prediction

The listening skill is framed as a supervised learning task focused on the core deduction objective: predicting the imposter's identity $z$. At specific points in the game (e.g., between discussion messages), the environment queries each crewmate agent $i$ for its belief about the imposter. The agent is trained to maximize the probability it assigns to the true imposter given its current history $\tau^i_t$, i.e., to minimize the negative log-likelihood:

$$\mathcal{L}_{\text{L}}(\theta) = -\mathbb{E}_{i,t}\left[\log \pi_\theta\left(z \mid \tau^i_t\right)\right]$$

This loss is computed only at query steps and uses the ground-truth imposter identity provided by the simulator. It provides a direct, dense signal for interpreting observations and messages in the context of the primary game goal, effectively grounding the communication. The resulting policy, optimized with RL plus this listening loss, is denoted $\pi_{\text{RL+L}}$.
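The listening objective is a cross-entropy over candidate players at each query step. This sketch assumes the agent's belief is exposed as a dictionary of logits over player names (for example, the scores the LLM assigns to each player's name token when queried). `listening_loss`, `log_softmax_at`, and the player names are hypothetical.

```python
import math


def log_softmax_at(logits: dict[str, float], target: str) -> float:
    """log p(target) under a softmax over the candidate logits (log-sum-exp for stability)."""
    m = max(logits.values())
    log_z = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return logits[target] - log_z


def listening_loss(query_logits: list[dict[str, float]], true_imposter: str) -> float:
    """Average NLL of the ground-truth imposter over the query steps of an episode."""
    return -sum(log_softmax_at(l, true_imposter) for l in query_logits) / len(query_logits)


uniform = {"red": 0.0, "blue": 0.0, "green": 0.0, "yellow": 0.0}
confident = {"red": 3.0, "blue": 0.0, "green": 0.0, "yellow": 0.0}
```

A uniform belief over four players costs $\log 4$; a confident correct belief costs less, and a confident wrong one costs more, so the gradient pushes the agent toward the evidence that identifies the imposter.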

Speaking via Reinforced Discussion Learning

The speaking skill is trained with RL, guided by a novel dense reward signal that measures the immediate causal impact of an agent's message on its teammates' beliefs. When agent $i$ sends a message at time $t$, producing a new history $\tau^j_{t+1}$ for each other agent $j$ at time $t+1$, the speaking reward $r^{\text{s}}_{i,t}$ is the change in the sum of probabilities assigned to the true imposter $z$ by all other living crewmates $j \in \mathcal{C}_t \setminus \{i\}$:

$$r^{\text{s}}_{i,t} = P_{t+1} - P_t$$

where $P_{t+1} = \sum_{j \in \mathcal{C}_t \setminus \{i\}} \pi_\theta\left(z \mid \tau^j_{t+1}\right)$ and $P_t$ is the corresponding sum before the message was processed (at time $t$).

This reward directly incentivizes messages that increase the team's collective certainty about the true imposter. For imposter training, the sign of the reward is inverted, encouraging messages that confuse or mislead crewmates. The reward is incorporated into the PPO objective for message-generation actions.
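The speaking reward only needs each listener's belief in the true imposter before and after a message. In this sketch, beliefs are nested dictionaries (listener, then candidate, then probability), a hypothetical format. The speaker is excluded from the sum, and `adversarial=True` negates the reward, as in imposter training.

```python
def speaking_reward(beliefs_before: dict[str, dict[str, float]],
                    beliefs_after: dict[str, dict[str, float]],
                    true_imposter: str,
                    speaker: str,
                    alive_crewmates: list[str],
                    adversarial: bool = False) -> float:
    """Change in the summed probability that living crewmates (other than the speaker)
    assign to the true imposter; negated when `adversarial` is set."""
    listeners = [j for j in alive_crewmates if j != speaker]
    before = sum(beliefs_before[j][true_imposter] for j in listeners)
    after = sum(beliefs_after[j][true_imposter] for j in listeners)
    reward = after - before
    return -reward if adversarial else reward


# Crewmate "A" speaks; its own belief change is ignored. "red" is the true imposter.
before = {"A": {"red": 0.9}, "B": {"red": 0.25}, "C": {"red": 0.25}}
after = {"A": {"red": 0.1}, "B": {"red": 0.5}, "C": {"red": 0.4}}
```

Here listeners B and C together move from 0.5 to 0.9 total belief in the imposter, so A's message earns a reward of about 0.4. If the imposter had produced the same shift, its adversarial reward would be about -0.4.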

Integrated Objective

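The final policy, $\pi_{\text{RL+L+S}}$, is trained by combining the standard PPO loss for environmental rewards $\mathcal{L}_{\text{RL}}$, the supervised listening loss $\mathcal{L}_{\text{L}}$, and the PPO loss incorporating the speaking reward $r^{\text{s}}$, along with the KL regularization and world modeling loss:

$$\mathcal{L}(\theta) = \mathcal{L}_{\text{RL}} + \lambda_{\text{L}}\,\mathcal{L}_{\text{L}} + \lambda_{\text{S}}\,\mathcal{L}_{\text{S}} + \lambda_{\text{KL}}\,\mathcal{L}_{\text{KL}} + \lambda_{\text{WM}}\,\mathcal{L}_{\text{WM}}$$

where $\mathcal{L}_{\text{S}}$ is the PPO loss component derived from the speaking reward $r^{\text{s}}$, and the $\lambda$ terms are hyperparameters balancing the different objectives.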
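The combined objective is a weighted sum, and the policy variants compared in the results differ mainly in which auxiliary weights are nonzero. The weight values below are hypothetical placeholders, not the paper's settings. The assumption that the RL-only baseline also keeps the KL and world modeling terms is likewise hypothetical.

```python
AUX_TERMS = ("L", "S", "KL", "WM")

# Hypothetical weights; a missing entry means that term is switched off.
VARIANT_WEIGHTS: dict[str, dict[str, float]] = {
    "RL":     {"KL": 0.1, "WM": 1.0},
    "RL+L":   {"KL": 0.1, "WM": 1.0, "L": 1.0},
    "RL+L+S": {"KL": 0.1, "WM": 1.0, "L": 1.0, "S": 1.0},
}


def combined_loss(losses: dict[str, float], weights: dict[str, float]) -> float:
    """L = L_RL + sum_k lambda_k * L_k over the auxiliary terms; absent weights count as 0."""
    return losses["RL"] + sum(weights.get(k, 0.0) * losses[k] for k in AUX_TERMS)


step_losses = {"RL": 1.0, "L": 2.0, "S": 3.0, "KL": 0.5, "WM": 0.25}
```

Expressing the variants as weight settings over a single loss function makes the ablations a matter of configuration rather than separate training code.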

Role of Dense Reward Signal

The prediction of the imposter's identity serves as a crucial dense reward signal. Standard MARL in such settings often suffers from sparse rewards (win/loss occurs only at the end of an episode), making credit assignment difficult, especially for communicative actions whose benefits are indirect and delayed.

By contrast, the belief-based signals provide immediate feedback:

- Listening: The loss $\mathcal{L}_{\text{L}}$ provides frequent supervised signals during the game, guiding the agent to extract relevant information from observations and dialogue.

- Speaking: The reward $r^{\text{s}}$ provides immediate reinforcement after each message, directly linking the message content to its effect on teammates' beliefs about the imposter.

This grounds the natural language communication in the underlying game objective, pushing agents to produce strategically relevant utterances rather than just syntactically correct text.

Experimental Validation and Results

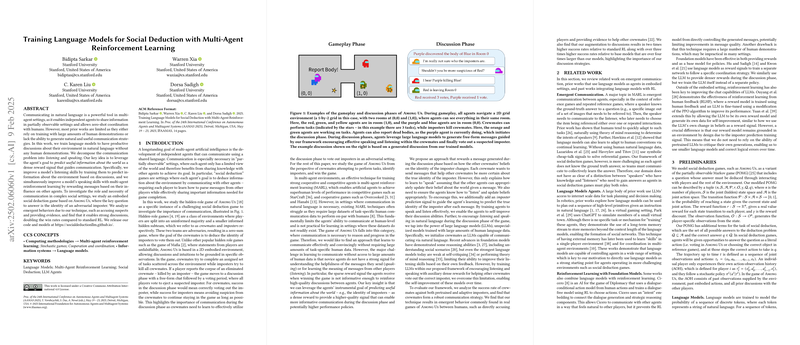

The framework was evaluated in a custom 2D grid-world environment inspired by Among Us. Agents navigate, perform tasks, report bodies, engage in free-form text discussions, and vote. The environment simulates partial observability, task dependencies, kill mechanics, and voting dynamics.

Performance Metrics

- Base LLMs (RWKV 1.5B, 7B) without MARL training achieved low win rates (<20%).

- Standard PPO ($\pi_{\text{RL}}$) improved performance but struggled with the deduction aspect.

- Adding the listening loss ($\pi_{\text{RL+L}}$) significantly boosted the crewmate win rate.

- The full model incorporating the speaking reward ($\pi_{\text{RL+L+S}}$) achieved the highest crewmate win rate, approximately doubling the win rate of the standard RL baseline ($\pi_{\text{RL}}$) and substantially outperforming even larger base LLMs.

Emergent Behaviors

Qualitative analysis revealed the emergence of sophisticated, human-like communication strategies:

- Accusations: Agents directly accuse others based on observed behavior or inconsistencies.

- Evidence Provision: Agents support accusations with justifications derived from their observations (e.g., "Player Green was seen leaving the room where the body was found"). Agents also learned to sometimes fabricate evidence convincingly.

- Imposter Counter-Play: Imposters learned to deflect suspicion, make counter-accusations, and mimic crewmate communication patterns to blend in.

Robustness and Ablations

- Iterated self-play with adversarial imposter training resulted in robust crewmate policies, evidenced by a rapidly narrowing exploitability gap against evolving imposter strategies.

- Ablation studies confirmed the significant contributions of both the listening loss ($\mathcal{L}_{\text{L}}$) and the speaking reward ($r^{\text{s}}$) to final performance. The listening component ($\mathcal{L}_{\text{L}}$) appeared particularly critical for grounding the agents' understanding and enabling effective deduction.

Conclusion

The research demonstrates a viable method for training LLM agents to use natural language communication effectively in complex MARL environments like social deduction games, bypassing the need for human communication data. By decomposing communication into listening (trained via supervised prediction of game state) and speaking (trained via RL with belief-based rewards), and leveraging the imposter prediction task as a dense reward signal, the framework enables agents to develop robust and strategically relevant communication behaviors, leading to significant performance improvements over standard MARL approaches.