The paper presents a comprehensive framework for training LLMs using 4-bit floating-point (FP4) quantization. The primary motivation is to significantly reduce the computational cost and memory footprint associated with training state-of-the-art LLMs while maintaining competitive accuracy compared to higher precision schemes (e.g., BF16 and FP8). The framework addresses two critical challenges intrinsic to using FP4: the substantial quantization errors induced by the limited representational capacity and the instability caused by the dynamic range of activation tensors.

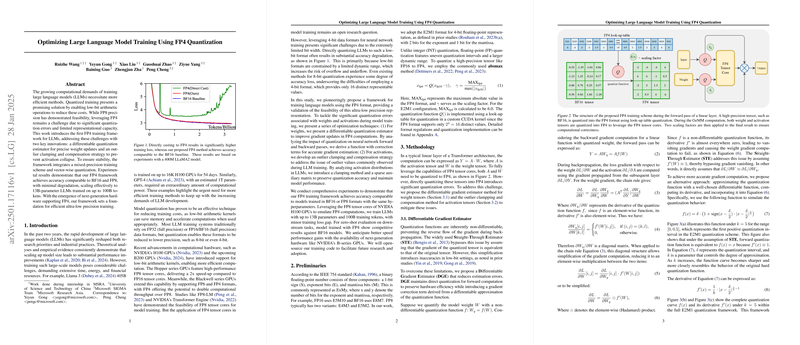

A key contribution of the work is the Differentiable Gradient Estimator (DGE), which improves the accuracy of gradient propagation through the non-differentiable FP4 quantization operation. The standard Straight-Through Estimator (STE) assumes that the derivative of the quantizer is identically 1, so it ignores quantization error in the backward pass. DGE instead approximates the forward quantization function with a smooth, parameterized function. Within a single quantization interval, the paper's approximation is

$$
f(x) = \frac{\delta}{2}\left(1 + \operatorname{sign}\!\left(\frac{2x}{\delta} - 1\right)\left|\frac{2x}{\delta} - 1\right|^{1/k}\right),
$$

where

- $\delta$ is the quantization interval,

- $k$ is a parameter controlling the degree of smoothing ($k = 1$ gives the identity, and larger $k$ brings $f$ closer to a hard rounding step).

Its derivative,

$$
f'(x) = \frac{1}{k}\left|\frac{2x}{\delta} - 1\right|^{\frac{1}{k} - 1},
$$

is used during backpropagation to correct the weight gradients. This yields a more accurate gradient signal while preserving the hardware-efficiency benefits of low-precision arithmetic in the forward pass.
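To make the contrast with STE concrete, here is a minimal Python sketch of the DGE surrogate and its derivative on one interval $[0, \delta]$, assuming the form $f(x) = \tfrac{\delta}{2}\bigl(1 + \operatorname{sign}(u)\,|u|^{1/k}\bigr)$ with $u = 2x/\delta - 1$. It is not the authors' implementation: the function names are hypothetical, and the gradient cap `max_grad` is our own assumption, added because $f'$ diverges at the interval midpoint when $k > 1$. A full quantizer would first shift $x$ into its own interval.

```python
import math


def dge_forward(x: float, delta: float, k: float) -> float:
    """Smooth surrogate for quantization on one interval [0, delta].

    f(0) = 0, f(delta / 2) = delta / 2, f(delta) = delta. With k = 1 it is the
    identity; as k grows it approaches a hard rounding step at delta / 2.
    """
    u = 2.0 * x / delta - 1.0  # map [0, delta] onto [-1, 1]
    return delta * (1.0 + math.copysign(abs(u) ** (1.0 / k), u)) / 2.0


def dge_derivative(x: float, delta: float, k: float, max_grad: float = 3.0) -> float:
    """f'(x) = (1/k) * |2x/delta - 1|^(1/k - 1), capped at max_grad.

    For k > 1 the exponent is negative, so the derivative is unbounded at the
    midpoint; the cap is an assumption of this sketch, not taken from the paper.
    """
    u = 2.0 * x / delta - 1.0
    exponent = 1.0 / k - 1.0
    if u == 0.0 and exponent < 0.0:
        return max_grad
    return min((1.0 / k) * abs(u) ** exponent, max_grad)


def backward_scale(grad_out: float, x: float, delta: float, k: float) -> tuple[float, float]:
    """Gradient passed back to x under STE (derivative 1) versus DGE."""
    ste = grad_out * 1.0
    dge = grad_out * dge_derivative(x, delta, k)
    return ste, dge


# STE passes the upstream gradient through unchanged; DGE rescales it by f'(x).
ste, dge = backward_scale(1.0, x=0.3, delta=1.0, k=5.0)
print(ste, dge)
```

Setting `k = 1.0` makes both estimators agree, which is a quick sanity check that the surrogate reduces to STE in the fully smooth limit.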

The second innovation is an Outlier Clamping and Compensation (OCC) strategy for quantizing activations. Activation tensors in LLMs often have broad distributions with a few large outliers; when the quantization scale is stretched to cover those outliers, most of the remaining values underflow to zero, because FP4 offers only 16 discrete levels. The proposed method identifies extreme values via a quantile search and clamps them to the threshold given by that quantile. The residual introduced by clamping is nonzero only at the clamped elements, so it forms a sparse matrix, and its contribution is compensated by a sparse matrix multiplication carried out in higher precision. This two-step process keeps the precision lost to clamping from propagating into the layer output and degrading model quality. Quantitative analyses of cosine similarity, mean squared error (MSE), and signal-to-noise ratio (SNR) between the original and quantized activations are provided to validate the approach.
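The effect of clamping and compensation can be illustrated on a single activation vector. The sketch below assumes the E2M1 FP4 format (representable magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6), absmax scaling, and a symmetric quantile on $|x|$; these choices, the 0.99 quantile, and all function names are illustrative assumptions rather than the paper's exact settings. Adding the sparse residual back to the dequantized vector is, by linearity, equivalent to adding the residual's product with the weights to the GeMM output.

```python
import math

# Magnitudes of the FP4 E2M1 format (assumed); the sign is a separate bit,
# so 8 magnitudes x 2 signs = 16 codes.
FP4_E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_fp4(xs):
    """Absmax-scale xs onto the E2M1 grid, round to nearest, and dequantize."""
    amax = max(abs(v) for v in xs)
    if amax == 0.0:
        return [0.0] * len(xs)
    scale = amax / FP4_E2M1_GRID[-1]  # the largest |x| maps to 6.0
    out = []
    for v in xs:
        mag = min(FP4_E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
        out.append(math.copysign(mag * scale, v))
    return out


def occ_quantize(xs, quantile=0.99):
    """Clamp outliers at a |x| quantile, quantize, then add back a sparse residual."""
    mags = sorted(abs(v) for v in xs)
    threshold = mags[int(quantile * (len(mags) - 1))]
    clamped = [max(-threshold, min(threshold, v)) for v in xs]
    # Nonzero only where clamping happened, so it is stored sparsely (index -> value).
    residual = {i: v - c for i, (v, c) in enumerate(zip(xs, clamped)) if v != c}
    approx = quantize_fp4(clamped)
    for i, r in residual.items():
        approx[i] += r  # compensation kept in full precision
    return approx, residual


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# 99 small activations plus one large outlier.
acts = [0.1 * math.sin(i) for i in range(99)] + [50.0]
plain = quantize_fp4(acts)
occ, res = occ_quantize(acts)
print(f"plain MSE={mse(acts, plain):.2e}  OCC MSE={mse(acts, occ):.2e}  outliers={len(res)}")
```

With one outlier of 50 among values no larger than 0.1, plain absmax scaling rounds every small value to zero, while the clamped version keeps them on the FP4 grid and restores the outlier through a one-entry residual.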

The framework employs mixed-precision training: general matrix multiplications (GeMM) are performed in FP4 to exploit emerging FP4 tensor cores, while non-GeMM operations retain higher precision for numerical stability. The paper reports results for model sizes from 1.3B to 13B parameters trained on up to 100 billion tokens. The training loss curves of the FP4 models closely track the BF16 baselines; for the 13B model, the final training loss is 1.97 with FP4 versus 1.88 with BF16. In zero-shot evaluation on a diverse set of downstream tasks (including PiQA, HellaSwag, BoolQ, and SciQ), the FP4 models are competitive with, and on some tasks marginally exceed, their BF16 counterparts. These results support the claim that FP4 training can deliver computational efficiency while keeping model quality competitive.
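The split between FP4 GeMMs and higher-precision non-GeMM operations can be simulated in plain Python by fake-quantizing the GeMM inputs and leaving everything else in float. This is a hedged sketch, not the paper's kernel: it assumes E2M1 FP4, one absmax scale per activation row (token) and per weight column (output channel) as a stand-in for vector-wise scaling, and exact float accumulation in place of the tensor core's accumulator. All function names are hypothetical.

```python
import math

# Assumed FP4 E2M1 magnitudes (sign stored separately).
FP4_E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def fake_quant_fp4(vec):
    """Quantize one vector with its own absmax scale; return dequantized floats."""
    amax = max(abs(v) for v in vec)
    if amax == 0.0:
        return [0.0] * len(vec)
    scale = amax / FP4_E2M1_GRID[-1]
    return [
        math.copysign(min(FP4_E2M1_GRID, key=lambda g: abs(abs(v) / scale - g)) * scale, v)
        for v in vec
    ]


def fp4_gemm(x, w):
    """Simulated FP4 GeMM. x is m x k (rows = tokens), w is k x n (columns = channels)."""
    xq = [fake_quant_fp4(row) for row in x]  # one scale per token
    wq_cols = [fake_quant_fp4(list(col)) for col in zip(*w)]  # one scale per output channel
    # FP4-valued inputs; products accumulated in ordinary double-precision floats.
    return [[sum(a * b for a, b in zip(row, col)) for col in wq_cols] for row in xq]


def layer_norm(row, eps=1e-5):
    """A non-GeMM operation, kept in high precision."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]


x = [[1.0, -2.0, 3.0], [0.5, 0.25, -1.0]]
w = [[1.0, 0.0], [0.0, 1.0], [2.0, -1.0]]
y = fp4_gemm(x, w)                    # GeMM on FP4-quantized inputs
out = [layer_norm(row) for row in y]  # non-GeMM op in full precision
print(y)
```

The example inputs happen to be exactly representable after per-vector scaling, so `y` matches the full-precision product; a vector such as `[1.0, 0.1]` is not (0.1 lands on the grid point 0.5 / 6 ≈ 0.083). Because each row and column gets its own scale, an outlier in one token only coarsens the grid for that token rather than for the whole tensor.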

In summary, the paper makes the following contributions:

- Differentiable Gradient Estimator (DGE): Provides a mathematically principled approach to approximate the non-differentiable quantization function, thus enhancing gradient propagation over traditional STE-based methods.

- Outlier Clamping and Compensation (OCC): Effectively handles the problematic dynamic range of activation tensors by clamping extreme values and compensating for the induced error via a sparse auxiliary matrix.

- Empirical Demonstration: Extensive experiments and ablation studies that validate the approach across multiple LLM scales, showing minimal degradation in training loss and downstream performance, thereby establishing a robust foundation for ultra-low-precision training.

The detailed derivations, implementation considerations (including vector-wise quantization and scaling schemes), and ablation studies together indicate that the proposed FP4 framework is both theoretically grounded and practically viable for the next generation of efficient LLM training.