Quantization of Transformers with LLM-FP4

The paper "LLM-FP4: 4-Bit Floating-Point Quantized Transformers" addresses a central challenge in deploying large transformer architectures: compute and memory costs that escalate as models grow. The authors propose LLM-FP4, a post-training quantization (PTQ) method that compresses both the weights and the activations of LLMs to 4-bit floating-point precision without retraining the network. Integer-based PTQ methods tend to falter below 8 bits; the floating-point alternative is more flexible in representing diverse data distributions.

Key Concepts and Approach

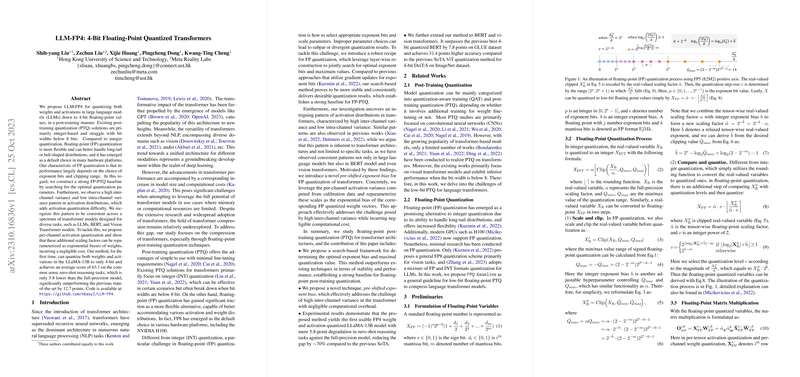

Compared with integer quantization, floating-point (FP) quantization handles the long-tail or bell-shaped distributions typical of transformer activations more gracefully. The advantage comes from FP's nonuniform grid of representable values, whose shape is set by the number of exponent bits and by the clipping range. Because existing FP quantization methods leave these choices underdetermined, the authors first build a strong FP-PTQ baseline: a search-based procedure that selects the quantization parameters, namely the exponent bit width and the maximum representable value.
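To make the role of exponent bits and clipping range concrete, here is a minimal sketch of a simulated 4-bit FP quantizer with a grid search over formats. It rests on several assumptions that are not taken from the paper: the function names (`fp_grid`, `quantize`, `search_format`) are hypothetical, the format reserves no codes for infinity or NaN, the clipping range is set by rescaling the grid (equivalent to a real-valued exponent bias), and the search objective is plain mean squared error on the tensor itself rather than whatever objective the authors use.

```python
import math

def fp_grid(exp_bits, man_bits, max_val):
    """Non-negative magnitudes of a toy FP format, rescaled so the largest is max_val."""
    levels = []
    for p in range(2 ** exp_bits):          # exponent field
        for d in range(2 ** man_bits):      # mantissa field
            frac = d / 2 ** man_bits
            if p == 0:
                levels.append(2.0 * frac)   # subnormal: no implicit leading 1
            else:
                levels.append(2.0 ** p * (1.0 + frac))
    top = max(levels)
    return sorted({v * max_val / top for v in levels})

def quantize(x, grid):
    """Clip |x| to the grid maximum, then round to the nearest representable value."""
    mag = min(abs(x), grid[-1])
    nearest = min(grid, key=lambda g: abs(g - mag))
    return math.copysign(nearest, x)

def search_format(values, total_bits=4, num_candidates=20):
    """Grid-search exponent bits and clipping maximum; returns (mse, exp_bits, man_bits, max_val)."""
    abs_max = max(abs(v) for v in values)
    best = None
    for exp_bits in range(1, total_bits):           # one bit is the sign
        man_bits = total_bits - 1 - exp_bits
        for k in range(1, num_candidates + 1):
            max_val = abs_max * k / num_candidates  # candidate clipping range
            grid = fp_grid(exp_bits, man_bits, max_val)
            mse = sum((v - quantize(v, grid)) ** 2 for v in values) / len(values)
            if best is None or mse < best[0]:
                best = (mse, exp_bits, man_bits, max_val)
    return best
```

For instance, `fp_grid(2, 1, 6.0)` yields `[0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]`: the levels are dense near zero and sparse near the maximum, which is what suits bell-shaped data. Shrinking `max_val` trades clipping error on outliers for finer resolution on the bulk of the values.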

A key observation in the paper is that transformer activations show high inter-channel variance but low intra-channel variance: magnitudes differ widely from one channel to the next, yet stay fairly consistent within a channel. This pattern, found in LLaMA, BERT, and Vision Transformer models, makes a single per-tensor quantization range a poor fit for activations. The authors therefore quantize activations per channel. The additional per-channel scaling factors are reparameterized as exponent biases of the weights, so both weights and activations can be quantized to 4 bits with negligible computational overhead.
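The algebra behind folding per-channel activation scales into the weights can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the helper names (`channel_shifts`, `fold_shifts`, `matmul`) are hypothetical, and the per-channel factors are assumed to be powers of two, so that multiplying a weight row by 2^k corresponds to shifting that row's exponent bias. The quantization step itself is omitted to keep the identity visible.

```python
import math

def channel_shifts(acts):
    """Per-input-channel integer k_j such that 2**k_j covers that channel's max |x|."""
    shifts = []
    for j in range(len(acts[0])):
        col_max = max(abs(row[j]) for row in acts)
        shifts.append(math.ceil(math.log2(col_max)) if col_max > 0 else 0)
    return shifts

def fold_shifts(acts, weights, shifts):
    """Divide activation channel j by 2**k_j and multiply weight row j by 2**k_j."""
    scaled_acts = [[x / 2.0 ** k for x, k in zip(row, shifts)] for row in acts]
    folded_weights = [[w * 2.0 ** k for w in row] for row, k in zip(weights, shifts)]
    return scaled_acts, folded_weights

def matmul(a, b):
    """Plain list-of-lists matrix product (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Toy data: channel 1 is far larger than the others (high inter-channel variance).
acts = [[0.5, 40.0, -0.1], [-0.3, -55.0, 0.2]]   # tokens x channels
weights = [[1.0, -2.0], [0.5, 0.25], [3.0, 1.0]]  # channels x outputs

shifts = channel_shifts(acts)
scaled_acts, folded_weights = fold_shifts(acts, weights, shifts)
# Every scaled activation now lies in [-1, 1], and the product is unchanged.
same = matmul(scaled_acts, folded_weights)
```

After the fold, all activation channels share one range, so a single quantization grid fits them, while the matrix product `matmul(scaled_acts, folded_weights)` matches `matmul(acts, weights)`. Because the scale lives in the weights, no per-channel rescaling is needed at inference time in this sketch.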

Strong Quantization Results

Empirically, the method performs well. With weights and activations both quantized to 4 bits, LLaMA-13B attains an average score of 63.1 on common sense zero-shot reasoning tasks, only 5.8 points below the full-precision model and 12.7 points above the previous state-of-the-art quantization method. The paper also reports improvements over prior methods on other transformer architectures, including BERT and Vision Transformers.

Implications and Future Directions

In practice, LLM-FP4 is relevant to anyone deploying large language and transformer models on resource-constrained hardware. Its applicability is helped by native support for low-precision floating-point arithmetic on modern hardware such as the NVIDIA H100. On the theoretical side, the findings add to the understanding of how compression techniques transfer across domains and encourage further research into quantization methods tailored to the distribution patterns of individual networks.

Looking ahead, future work may extend this quantization technique to other complex models and domains, including audio and other sensory modalities. Generative tasks and other demanding applications would also be a natural testing ground for the findings of LLM-FP4.

Ultimately, LLM-FP4 does not resolve every challenge of quantization, and it still incurs a measurable accuracy gap relative to full precision. It does, however, mark a substantial advance toward efficient low-precision computation, which should help bring large models to increasingly constrained environments.