Overview of LLMs and Their Potential for AGI

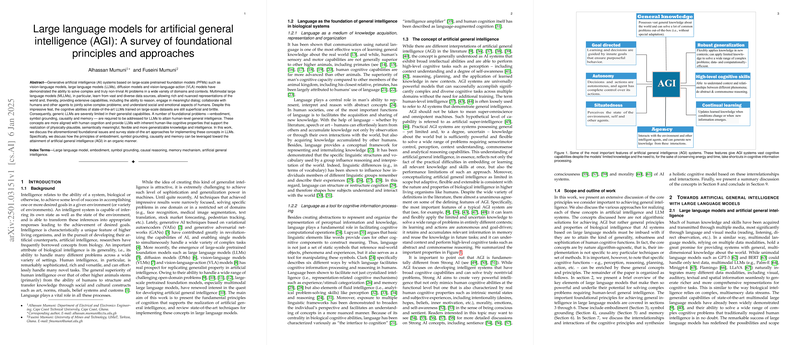

The research paper titled "LLMs for AGI: A Survey of Foundational Principles and Approaches" by Alhassan Mumuni and Fuseini Mumuni surveys the foundational principles and methods behind the potential of large language models (LLMs) to achieve artificial general intelligence (AGI). The paper examines how generative AI systems built on large-scale pretrained foundation models solve complex AI problems across many domains. These models include vision-language models, LLMs, diffusion models, and vision-language-action models. It acknowledges, however, that despite these impressive capabilities, the cognitive abilities of LLMs remain superficial and brittle.

Limitations and Foundational Challenges

The paper identifies several foundational problems that must be addressed for LLMs to evolve into AGI systems. In particular, it highlights embodiment, symbol grounding, causality, and memory. It treats these as crucial for endowing LLMs with human-like cognitive properties, so that their knowledge and behavior become more flexible, generalizable, and semantically meaningful.

- Embodiment: The paper argues that, to reach human-level intelligence, LLM-based systems need some degree of physical interaction with their environment. This interaction is crucial for robust perception and for intentional actions that align with high-level goals.

- Symbol Grounding: LLMs must connect their internal symbols to real-world entities and concepts. Such grounding lets AI systems understand and manipulate abstract representations meaningfully.

- Causal Reasoning: Understanding cause-and-effect relationships is critical for LLMs to make sense of the dynamic world, allowing for robust decision-making and adaptation to new contexts or environments.

- Memory: Effective memory mechanisms are necessary to retain and access accumulated knowledge, facilitating continual learning and improved problem-solving capabilities.

Practical and Theoretical Implications

The paper suggests that addressing these foundational issues could significantly extend the cognitive and operational capabilities of LLMs. By incorporating concepts more aligned with human cognition, LLMs could achieve a level of intelligence that supports high-level reasoning, complex problem-solving, and deep understanding of social and emotional contexts.

Integrating these principles also has implications for the future development of AI:

- Practical Implications: AI systems that overcome these limitations could substantially advance fields such as autonomous systems, natural language processing, and human-computer interaction. For instance, AI with robust cognitive abilities could collaborate with humans in complex, unstructured settings such as healthcare, legal systems, and creative industries.

- Theoretical Implications: The research underscores the need for interdisciplinary approaches combining insights from cognitive science, neuroscience, and AI to enable the transition from current AI capabilities to AGI. It points to the importance of developing new algorithms that emulate human cognitive functions, which could pave the way for more sophisticated and adaptable AI systems.

Speculation on Future Developments

The paper cautiously speculates that while significant progress has been made in LLM research, achieving AGI akin to human cognitive capabilities remains a formidable challenge. Future developments will likely rely on breakthroughs in understanding and implementing human-like cognitive processes such as causal reasoning, abstract symbol manipulation, and dynamic memory systems. Moreover, collaborative research across disciplines, leveraging advancements in neuroscience and psychology, could accelerate the development of more capable and reliable AGI systems.

In conclusion, the survey reflects both optimism and realism about the potential of LLMs to evolve into AGI systems. It holds that addressing the core foundational challenges and drawing on interdisciplinary insights offers a promising pathway toward human-level AGI, although that goal remains a distant horizon for now.