An Analysis of MAmmoTH-VL: Advancing Multimodal Reasoning with Instruction Tuning

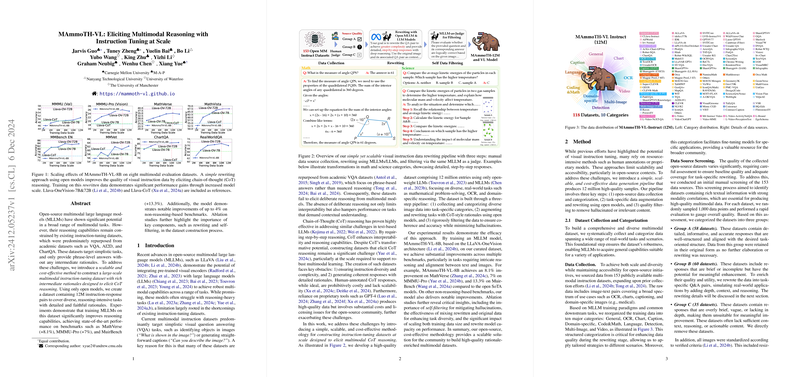

The academic paper "MAmmoTH-VL: Eliciting Multimodal Reasoning with Instruction Tuning at Scale" explores an innovative approach to enhance the reasoning capabilities of Multimodal LLMs (MLLMs) by means of instruction tuning. This method utilizes an extensive multimodal instruction-tuning dataset designed to facilitate chain-of-thought (CoT) reasoning. The researchers introduce a large-scale dataset comprising 12 million instruction-response pairs that cover a wide range of reasoning-intensive tasks. This work seeks to address the current limitations of MLLMs in reasoning-heavy tasks, which often stem from the inadequacies of existing instruction-tuning datasets.

Methodology and Dataset Construction

The authors propose a scalable and cost-effective methodology for constructing a high-quality multimodal dataset that elicits CoT reasoning. The process has three stages: data collection and categorization, rewriting tasks with detailed rationales, and self-filtering for quality assurance. Because the pipeline relies only on open-source models, the resulting collection of task-specific Q&A pairs can be built without proprietary tools, and its rationale-rich responses are intended to improve the interpretability and reasoning capabilities of MLLMs.

The data collection phase aggregates 153 publicly available multimodal datasets, which are then grouped into ten major categories, including General, OCR, and Domain-specific. This categorization allows the rewriting phase to be tailored to each task type, which improves the coherence of responses. Model-as-judge filtering then discards responses flagged as hallucinated or incoherent, reducing (though not necessarily eliminating) hallucinations in the final dataset.
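The rewrite-then-filter flow described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the `Example` record, `toy_rewrite`, `toy_judge`, and `build_dataset` are hypothetical names, and the two toy functions are simple stand-ins for the open models that would do the rewriting and judging in practice.

```python
from dataclasses import dataclass, replace
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Example:
    source: str    # name of the originating dataset (hypothetical field)
    category: str  # task category, e.g. "General" or "OCR"
    question: str
    answer: str


def toy_rewrite(ex: Example) -> Example:
    """Stand-in for a model that rewrites an answer with a rationale.

    An empty answer is returned unchanged so the judge can reject it.
    """
    if not ex.answer.strip():
        return ex
    rationale = f"[rationale for {ex.category} task]"
    return replace(ex, answer=f"{rationale} Final answer: {ex.answer}")


def toy_judge(ex: Example) -> bool:
    """Stand-in for model-as-judge filtering: keep only rewritten answers."""
    return "Final answer:" in ex.answer


def build_dataset(
    examples: Iterable[Example],
    rewrite: Callable[[Example], Example],
    judge: Callable[[Example], bool],
) -> List[Example]:
    """Rewrite each collected example, then keep those the judge accepts."""
    kept = []
    for ex in examples:
        candidate = rewrite(ex)
        if judge(candidate):
            kept.append(candidate)
    return kept


raw = [
    Example("dataset_a", "OCR", "What does the sign say?", "STOP"),
    Example("dataset_b", "General", "What is shown?", ""),
]
curated = build_dataset(raw, toy_rewrite, toy_judge)
print(len(curated), "of", len(raw), "examples kept")
```

Passing the rewriter and judge as callables keeps the pipeline independent of any particular model, which mirrors the summary's point that the method depends on open models and not on a specific proprietary tool.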

Evaluation and Results

The MAmmoTH-VL project culminates in MAmmoTH-VL-8B, a large multimodal model built on the LLaVA-OneVision architecture. Evaluations were conducted across 23 benchmarks covering single-image, multi-image, and video tasks. The results show a marked improvement in the model's reasoning capabilities, with state-of-the-art performance on several benchmarks. Notable gains over open state-of-the-art models include 8.1% on MathVerse, 7% on MMMU-Pro, and 13.3% on MuirBench.

The effectiveness of the data filtering and rewriting strategies is evidenced by the significant gains in model performance across a variety of reasoning benchmarks. The combination of rewritten and original datasets in a 3:7 ratio further optimized model training, supporting the premise that carefully curated, rationale-rich data enhances multimodal reasoning.
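As a rough illustration of the mixing step, the sketch below samples from two pools so that the result follows a 3:7 split. It assumes the ratio is rewritten to original, following the order in the sentence above; the function name `mix_by_ratio`, the `total` and `seed` parameters, and the toy data are all hypothetical and not taken from the paper.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_by_ratio(
    rewritten: Sequence[T],
    original: Sequence[T],
    total: int,
    ratio: Tuple[int, int] = (3, 7),  # assumed order: rewritten : original
    seed: int = 0,
) -> List[T]:
    """Sample `total` items so the two pools follow the given ratio."""
    r, o = ratio
    n_rewritten = (total * r) // (r + o)
    n_original = total - n_rewritten
    if n_rewritten > len(rewritten) or n_original > len(original):
        raise ValueError("not enough examples to satisfy the requested mix")
    rng = random.Random(seed)
    mixed = rng.sample(list(rewritten), n_rewritten)
    mixed += rng.sample(list(original), n_original)
    rng.shuffle(mixed)  # interleave the two pools
    return mixed


# Toy pools tagged by origin so the split can be checked.
rewritten_pool = [("rewritten", i) for i in range(50)]
original_pool = [("original", i) for i in range(100)]
mixed = mix_by_ratio(rewritten_pool, original_pool, total=100)
n_rewritten = sum(1 for tag, _ in mixed if tag == "rewritten")
print(n_rewritten, len(mixed) - n_rewritten)
```

Sampling without replacement and raising an error when a pool is too small are design choices of this sketch; a real training setup might instead upsample the smaller pool.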

Implications and Future Directions

MAmmoTH-VL's approach presents a cost-effective and scalable pathway to expand the reasoning competencies of MLLMs, leveraging open models without dependence on proprietary tools. The research underscores the criticality of detailed instruction-tuning datasets in eliciting complex reasoning and provides a replicable framework for future multimodal research.

The implications for the field are substantial, with potential applications in AI-driven domains where interpretability and complex decision-making are essential. Future work could expand the dataset to cover more diverse visual content or explore more advanced filtering methods to further reduce hallucinations. As research progresses, integrating such models into practical applications will be the real test of MAmmoTH-VL's impact on the broader AI and machine learning landscape.

The advancement realized through the MAmmoTH-VL initiative represents a significant step toward addressing the reasoning gaps in multimodal LLMs, marking an important development in the field of artificial intelligence and its application across diverse, real-world scenarios.