A Critical Analysis of "T-SciQ: Teaching Multimodal Chain-of-Thought Reasoning via Mixed LLM Signals for Science Question Answering"

The paper "T-SciQ: Teaching Multimodal Chain-of-Thought Reasoning via Mixed LLM Signals for Science Question Answering" proposes a way to improve the performance of smaller models on complex multimodal science question answering tasks. Rather than relying on human-annotated rationales, which the authors regard as inefficient to produce and prone to inaccuracies, the method uses large language models (LLMs) to generate high-quality chain-of-thought (CoT) rationales that serve as teaching signals. This essay examines the key methods, results, and implications of this research.

Introduction and Context

The work is motivated by two observations: existing datasets are limited, and LLMs show promise in generating CoT reasoning. Previous datasets for scientific problem-solving are small in scale, and although LLMs have demonstrated strong CoT reasoning abilities in NLP tasks, extending these capabilities to multimodal scenarios remains challenging. The ScienceQA dataset, which covers diverse topics and skills, serves as the benchmark for evaluating the proposed method.

Methodology

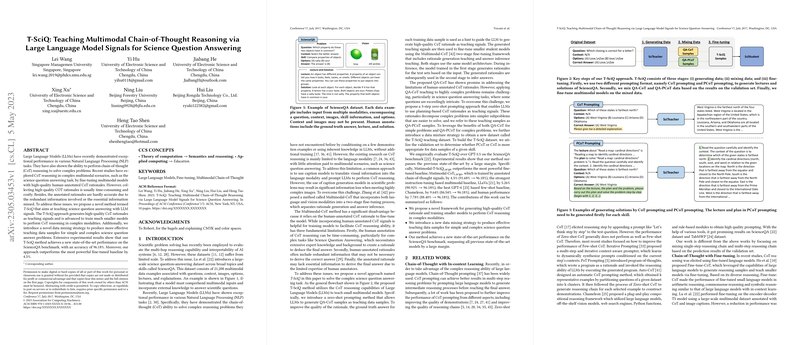

The T-SciQ approach is structured into three main components: generating teaching data, mixing teaching data, and fine-tuning smaller models.

- Generating Teaching Data: The authors employ zero-shot prompting with LLMs to generate two types of teaching data: QA-CoT samples and QA-PCoT samples. While QA-CoT samples involve traditional CoT rationales, the QA-PCoT samples are derived via a three-step prompting process that decomposes complex problems into simpler subproblems, enhancing the LLM's reasoning output.

- Mixing Teaching Data: A data mixing strategy combines the strengths of the QA-CoT and QA-PCoT datasets. Using the validation set, the method selects the more suitable teaching signal for each data example according to problem complexity.

- Fine-Tuning: The student models follow a two-stage fine-tuning framework: they are trained first to generate rationales and then to infer answers from them. This structure is intended to let the models make effective use of the high-quality rationales generated by LLMs.
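
The mixing and two-stage steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `group` key (for instance a skill or difficulty bucket), the record layout, the tie-break rule, and all function names are hypothetical and chosen only to make the idea concrete.

```python
from collections import defaultdict


def choose_signal_per_group(val_records):
    """Pick the teaching signal with more validation hits in each group.

    val_records: iterable of (group, cot_correct, pcot_correct) tuples, where
    each boolean says whether a student trained on that signal answered the
    validation example correctly. The grouping key is an assumption.
    """
    hits = defaultdict(lambda: {"cot": 0, "pcot": 0})
    for group, cot_ok, pcot_ok in val_records:
        hits[group]["cot"] += int(cot_ok)
        hits[group]["pcot"] += int(pcot_ok)
    # Ties fall back to plain CoT; this tie-break is an arbitrary choice.
    return {g: "pcot" if h["pcot"] > h["cot"] else "cot" for g, h in hits.items()}


def mix_teaching_data(train_examples, choice, default="cot"):
    """Attach to each training example the rationale of its group's chosen signal."""
    mixed = []
    for ex in train_examples:
        # Groups never seen in validation use the default signal.
        signal = choice.get(ex["group"], default)
        mixed.append({
            "question": ex["question"],
            "answer": ex["answer"],
            "rationale": ex[signal],
            "signal": signal,
        })
    return mixed


def build_two_stage_pairs(mixed):
    """Format (input, target) text pairs for the two fine-tuning stages."""
    # Stage 1: question -> rationale.
    stage1 = [(ex["question"], ex["rationale"]) for ex in mixed]
    # Stage 2: question plus rationale -> answer.
    stage2 = [(ex["question"] + "\n" + ex["rationale"], ex["answer"]) for ex in mixed]
    return stage1, stage2
```

In use, `choose_signal_per_group` would be run once on validation results, and its output passed to `mix_teaching_data` to produce the mixed training set. A real multimodal pipeline would also carry image features through both stages, which this text-only sketch omits.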

Experimental Results

The empirical evaluation shows substantial improvements over existing state-of-the-art models. Notably, the model trained using T-SciQ signals achieves an accuracy of 96.18% on the ScienceQA benchmark, outperforming the strongest fine-tuned baseline by 4.5 percentage points and exceeding human performance by 7.78 percentage points. These results indicate that the proposed method is highly effective for multimodal science question answering tasks.
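
The gaps quoted above are differences in accuracy, that is, percentage points rather than relative percentages. The short calculation below shows what they imply. The baseline and human scores are derived here by subtraction from the figures in this essay rather than quoted independently, and the function names are illustrative.

```python
def implied_scores(t_sciq_acc, gain_over_baseline, gain_over_human):
    """Recover the comparison scores implied by the stated gaps (all in % accuracy)."""
    baseline = round(t_sciq_acc - gain_over_baseline, 2)
    human = round(t_sciq_acc - gain_over_human, 2)
    return baseline, human


def relative_error_reduction(new_acc, old_acc):
    """Fraction of the old error rate that the new model removes."""
    old_err = 100.0 - old_acc
    new_err = 100.0 - new_acc
    return (old_err - new_err) / old_err


baseline, human = implied_scores(96.18, 4.5, 7.78)
print(f"implied baseline accuracy: {baseline:.2f}%")
print(f"implied human accuracy:    {human:.2f}%")
print(f"error reduction vs. baseline: {relative_error_reduction(96.18, baseline):.1%}")
```

Expressed against the error rate, a 4.5-point gain near the top of the accuracy scale removes roughly half of the baseline's remaining errors, which is why the absolute gap alone understates the change.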

Implications and Future Developments

The implications of this research are both practical and theoretical. Practically, the ability to train smaller models with high-quality CoT rationales generated by LLMs provides a cost-effective and scalable solution for complex AI tasks. Theoretically, the success of the data mixing strategy and the decomposition approach highlights the untapped potential of LLMs in improving multi-step reasoning processes.

Future research could extend this work by exploring the integration of additional LLM architectures and parameter-efficient fine-tuning techniques. Moreover, applying T-SciQ to other domains and reasoning tasks could further validate its versatility and robustness.

Conclusion

In summary, the "T-SciQ" approach is a significant advance in teaching multimodal CoT reasoning with LLM-generated signals. The rigorous methodology, combined with compelling experimental results, shows the potential of this approach to enhance the capabilities of smaller models in science question answering tasks. The reported accuracy surpasses the prior state of the art on ScienceQA, and the work opens avenues for future exploration in AI-driven education and multimodal reasoning.

The publicly available code fosters transparency and reproducibility, encouraging further research to build upon these foundational findings. The results indicate that high-quality CoT signals generated from LLMs can substantially improve AI models' performance in multimodal and complex problem-solving scenarios.