An Analysis of "Divot: Diffusion Powers Video Tokenizer for Comprehension and Generation"

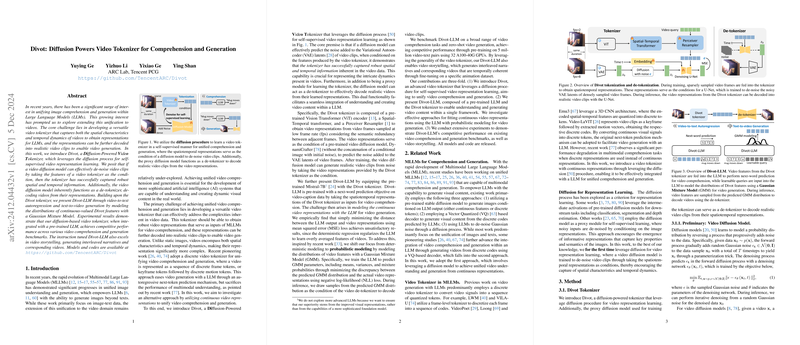

The paper presents an approach to unifying video comprehension and generation, building on recent progress in applying large language models (LLMs) to image data. The authors introduce Divot, a Diffusion-Powered Video Tokenizer, whose representations allow a single LLM both to understand and to generate video. The central difficulty is that video features must capture spatial and temporal structure at once, and unified models for video remain less explored than their image counterparts in multimodal research.

Key Contributions

- Diffusion-Powered Tokenization: The paper uses the diffusion process for self-supervised learning of video representations. A video diffusion model is trained to de-noise video clips conditioned on the features produced by a video tokenizer. If the clips can be de-noised well from those features, the tokenizer must have captured the relevant spatial and temporal information.

- Dual Functionality of the Diffusion Model: Beyond acting as a proxy for learning robust video representations, the diffusion model functions as a video de-tokenizer. It can decode representations back into realistic video clips, demonstrating a dual utility in both understanding video inputs and generating outputs.

- Integration with LLMs: Divot-LLM extends a pre-trained LLM to video comprehension, through video-to-text autoregression, and to text-to-video generation. The distribution of the continuous Divot features is modeled with a Gaussian Mixture Model (GMM), which supports both video generation and video storytelling.
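To make the GMM idea concrete, the sketch below scores one continuous feature vector under a diagonal-covariance Gaussian mixture and draws a sample from it. It is only an illustration under stated assumptions: the function names (`gmm_nll`, `gmm_sample`), the diagonal covariance, and the parameterization by logits, means, and log-variances are hypothetical choices and are not taken from the paper.

```python
import math
import random


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def gmm_nll(target, logits, means, log_vars):
    """Negative log-likelihood of one feature vector under a diagonal GMM.

    target:   list of d floats (the continuous feature to model)
    logits:   list of k unnormalized mixture weights
    means:    k lists of d component means
    log_vars: k lists of d component log-variances
    All parameter names are assumptions made for this sketch.
    """
    log_norm = log_sum_exp(logits)
    per_component = []
    for logit, mean, log_var in zip(logits, means, log_vars):
        # Log-density of a diagonal Gaussian, summed over dimensions.
        log_prob = sum(
            -0.5 * (math.log(2 * math.pi) + lv + (x - mu) ** 2 / math.exp(lv))
            for x, mu, lv in zip(target, mean, log_var)
        )
        # Add the log mixture weight (log-softmax of the logits).
        per_component.append(logit - log_norm + log_prob)
    return -log_sum_exp(per_component)


def gmm_sample(rng, logits, means, log_vars):
    """Pick a component by its mixture weight, then sample from its Gaussian."""
    log_norm = log_sum_exp(logits)
    weights = [math.exp(l - log_norm) for l in logits]
    k = rng.choices(range(len(weights)), weights=weights)[0]
    return [rng.gauss(mu, math.exp(0.5 * lv)) for mu, lv in zip(means[k], log_vars[k])]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [0.0, 0.0]
    means = [[0.0, 0.0], [5.0, 5.0]]
    log_vars = [[0.0, 0.0], [0.0, 0.0]]
    print("NLL at origin:", gmm_nll([0.0, 0.0], logits, means, log_vars))
    print("sample:", gmm_sample(rng, logits, means, log_vars))
```

In a setup of this kind, a model would predict the mixture parameters, training would minimize the negative log-likelihood of the target features, and generation would sample a feature vector to hand to the de-tokenizer. Whether Divot-LLM follows exactly this recipe should be checked against the paper.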

Experimental Evaluation

The reported experiments show competitive performance on benchmarks for both video comprehension and video generation when Divot is integrated with a pre-trained LLM. The instruction-tuned Divot-LLM also supports video storytelling, generating interleaved narratives and the corresponding video clips. These results suggest that representations learned through diffusion are a viable way to bring video into an LLM alongside text.

Theoretical and Practical Implications

Theoretically, the work shows that a diffusion model can serve as the training signal for learning representations of complex multimodal data, not only as a generator. Using de-noising quality as the learning objective offers a new route to capturing spatiotemporal dynamics in video, and it may inspire further refinements in video and multimodal representation learning.
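The role of de-noising as a representation-learning signal can be written as a conditional de-noising objective. The form below is a sketch in the standard noise-prediction parameterization, not the paper's exact loss. All symbols are assumptions introduced here: $x_0$ is a clean video clip, $f_\phi$ the tokenizer, $\epsilon_\theta$ the de-noising network, and $\bar{\alpha}_t$ the cumulative noise schedule.

$$
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)
$$

$$
\mathcal{L}(\phi, \theta) = \mathbb{E}_{x_0,\, \epsilon,\, t} \left[ \left\lVert \epsilon - \epsilon_\theta\!\left(x_t,\, t,\, f_\phi(x_0)\right) \right\rVert_2^2 \right]
$$

Because the de-noiser sees the clean clip only through $f_\phi(x_0)$, lowering this loss pushes the tokenizer to encode whatever spatial and temporal content helps reconstruction. Whether $\theta$ is updated jointly with $\phi$ or kept partly frozen is a design choice that this summary does not specify.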

Practically, tools like Divot may inform applications that need both video analysis and video generation, such as automated video editing, content creation, and immersive communication. Combining comprehension and generation in one model is a step toward systems that can both interpret and produce video content.

Future Directions

The work suggests several directions for further research and application:

- Long-form Video Generation: Extending the capabilities of Divot to support longer and more complex video sequences could be a promising direction, particularly in applications demanding comprehensive storytelling capabilities.

- Optimizing Training Efficiency: Further work could explore the reduction of computational resources required for training diffusion-based models, possibly through advanced neural architectures or more refined training methodologies.

- Cross-modal Applications: The technique could be extended beyond video to other complex data types, such as 3D representations for virtual or augmented reality.

In conclusion, the paper presents a dual-purpose approach in which a single diffusion model both supervises the video tokenizer and decodes its features back into video, and it integrates that tokenizer with an LLM in one framework for comprehension and generation. The work gives researchers a concrete starting point for connecting video processing with LLMs.