Align Your Latents: High-Resolution Video Synthesis with Latent Diffusion Models

This paper presents advancements in high-resolution video synthesis through the application of Latent Diffusion Models (LDMs). The authors aim to extend the existing capabilities of diffusion models, traditionally focused on image synthesis, to the more computationally demanding task of video generation.

Summary of Contributions

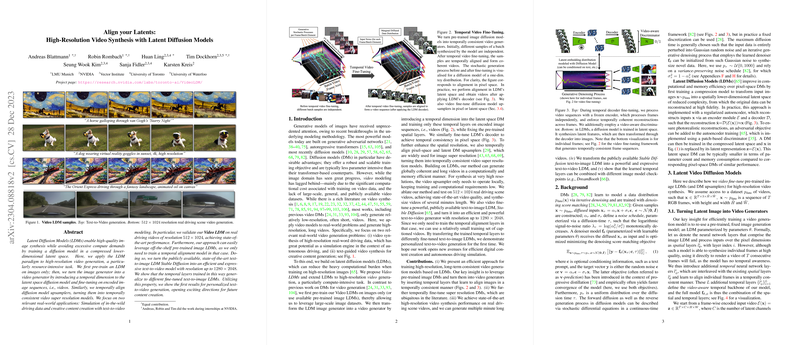

- Video Latent Diffusion Models (Video LDMs): The paper introduces Video LDMs, an adaptation of LDMs aimed at synthesizing high-quality videos. The key innovation involves pre-training a diffusion model on image data and then fine-tuning it to operate over video sequences by incorporating a temporal dimension.

- Temporal Alignment and Fine-Tuning: The paper proposes temporal alignment for the diffusion models, ensuring temporally consistent video generation. The temporal layers are trained to turn independent image frames into coherent video sequences, extending the image model's capabilities without retraining its core spatial layers.

- Applications: The authors validate their approach on real-world driving video scenarios and illustrate applications in creative content generation via text-to-video modeling. These applications span multimedia content creation and autonomous vehicle simulation.

- Resolution and Efficiency: By building on existing pre-trained image LDMs such as Stable Diffusion, the Video LDMs generate high-resolution video efficiently, at up to 1280 × 2048 pixels. Upscalers raise the spatial resolution of the generated frames, so the diffusion model itself can run at a lower, cheaper resolution.
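The temporal-alignment idea in the list above can be sketched in a few lines. This is a toy illustration in plain Python, not the authors' implementation: `spatial_layer` stands in for a frozen pre-trained image layer, `temporal_layer` is a hypothetical 1D mixing kernel over the frame axis, and the blend weight `alpha` is an illustrative assumption, since this summary does not describe how the two outputs are combined.

```python
from typing import List

Frame = List[float]  # one flattened latent frame (toy stand-in for a C x H x W tensor)
Video = List[Frame]  # T frames


def spatial_layer(frame: Frame, scale: float = 0.5) -> Frame:
    """Stand-in for a frozen, pre-trained image layer: sees one frame at a time."""
    return [scale * v for v in frame]


def temporal_layer(video: Video, kernel: List[float]) -> Video:
    """Hypothetical trainable layer: mixes each position across neighbouring frames."""
    num_frames = len(video)
    half = len(kernel) // 2
    out = []
    for t in range(num_frames):
        mixed = [0.0] * len(video[t])
        for k, weight in enumerate(kernel):
            src = min(max(t + k - half, 0), num_frames - 1)  # clamp at clip boundaries
            for i, v in enumerate(video[src]):
                mixed[i] += weight * v
        out.append(mixed)
    return out


def video_block(video: Video, kernel: List[float], alpha: float) -> Video:
    """Per-frame spatial pass, then temporal mixing, blended by alpha in [0, 1]."""
    z = [spatial_layer(f) for f in video]  # frames processed independently
    z_t = temporal_layer(z, kernel)        # frames now exchange information
    return [
        [alpha * a + (1.0 - alpha) * b for a, b in zip(frame_a, frame_b)]
        for frame_a, frame_b in zip(z, z_t)
    ]
```

With `alpha = 1.0` the block reduces to the per-frame image model, which is one way to see why the spatial layers can stay untouched: the temporal path only adds cross-frame mixing on top of what the image model already computes.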

Key Results and Implications

The paper reports state-of-the-art performance in high-resolution video synthesis, notably on in-the-wild driving data at 512 × 1024 resolution. The approach outperforms existing baselines both qualitatively and quantitatively, with improved video quality and temporal coherence.

A critical finding is that pre-trained image LDMs, when supplemented with learned temporal layers, generalize well to video data. This has profound implications for both academic research and industrial applications, suggesting a path to scalable video synthesis without the burdensome data requirements often associated with video pre-training.
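A minimal sketch of what "supplementing" a frozen image model with learned temporal layers means during training: only the temporal parameters receive updates. The parameter names and the `temporal.` prefix convention below are hypothetical and chosen purely for illustration, and the plain SGD rule is a stand-in for whatever optimizer is actually used.

```python
from typing import Dict


def sgd_step(
    params: Dict[str, float],
    grads: Dict[str, float],
    lr: float,
    trainable_prefix: str = "temporal.",
) -> Dict[str, float]:
    """Apply one SGD update, touching only parameters whose name starts with the prefix."""
    return {
        name: value - lr * grads[name] if name.startswith(trainable_prefix) else value
        for name, value in params.items()
    }


# Hypothetical parameter names: one pre-trained spatial weight, one new temporal weight.
params = {"spatial.conv.weight": 2.0, "temporal.mix.weight": 1.0}
grads = {"spatial.conv.weight": 0.5, "temporal.mix.weight": 0.5}
updated = sgd_step(params, grads, lr=0.5)
```

Here the spatial weight keeps its pre-trained value even though it has a nonzero gradient, while the temporal weight moves. In a real framework the same effect is usually achieved by disabling gradients on the pre-trained layers.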

Future Directions and Implications

The work opens avenues for future research, particularly on improving efficiency and expanding video datasets. Because the authors build on a model as large as Stable Diffusion, follow-up work could refine the training schemes and explore architectures that better balance computational cost against synthesis quality.

The practical implications are substantial. For autonomous driving, the ability to simulate various driving scenarios with high fidelity can significantly impact safety and development testing. In creative industries, more accessible high-resolution video generation could democratize content creation, offering new tools for artists and media professionals.

Conclusion

The paper successfully adapts LDMs for video synthesis, providing a route toward efficient high-resolution video generation. Its methodological contributions, temporal alignment and video fine-tuning, establish a framework that reuses existing image models to synthesize realistic video. Challenges remain, particularly around data diversity and model scalability, but the work is a significant step in bringing diffusion models to video applications.